How to Fine-Tune a Riva ASR Acoustic Model (Conformer-CTC) with TAO Toolkit

Contents

How to Fine-Tune a Riva ASR Acoustic Model (Conformer-CTC) with TAO Toolkit#

This tutorial walks you through how to fine-tune an NVIDIA Riva ASR acoustic model (Conformer-CTC) with NVIDIA TAO Toolkit.

NVIDIA Riva Overview#

NVIDIA Riva is a GPU-accelerated SDK for building speech AI applications that are customized for your use case and deliver real-time performance.

Riva offers a rich set of speech and natural language understanding services such as:

Automated speech recognition (ASR).

Text-to-Speech synthesis (TTS).

A collection of natural language processing (NLP) services, such as named entity recognition (NER), punctuation, and intent classification.

In this tutorial, we will fine-tune a Riva ASR acoustic model (Conformer-CTC) with TAO Toolkit.

To understand the basics of Riva ASR APIs, refer to Getting started with Riva ASR in Python.

For more information about Riva, refer to the Riva developer documentation.

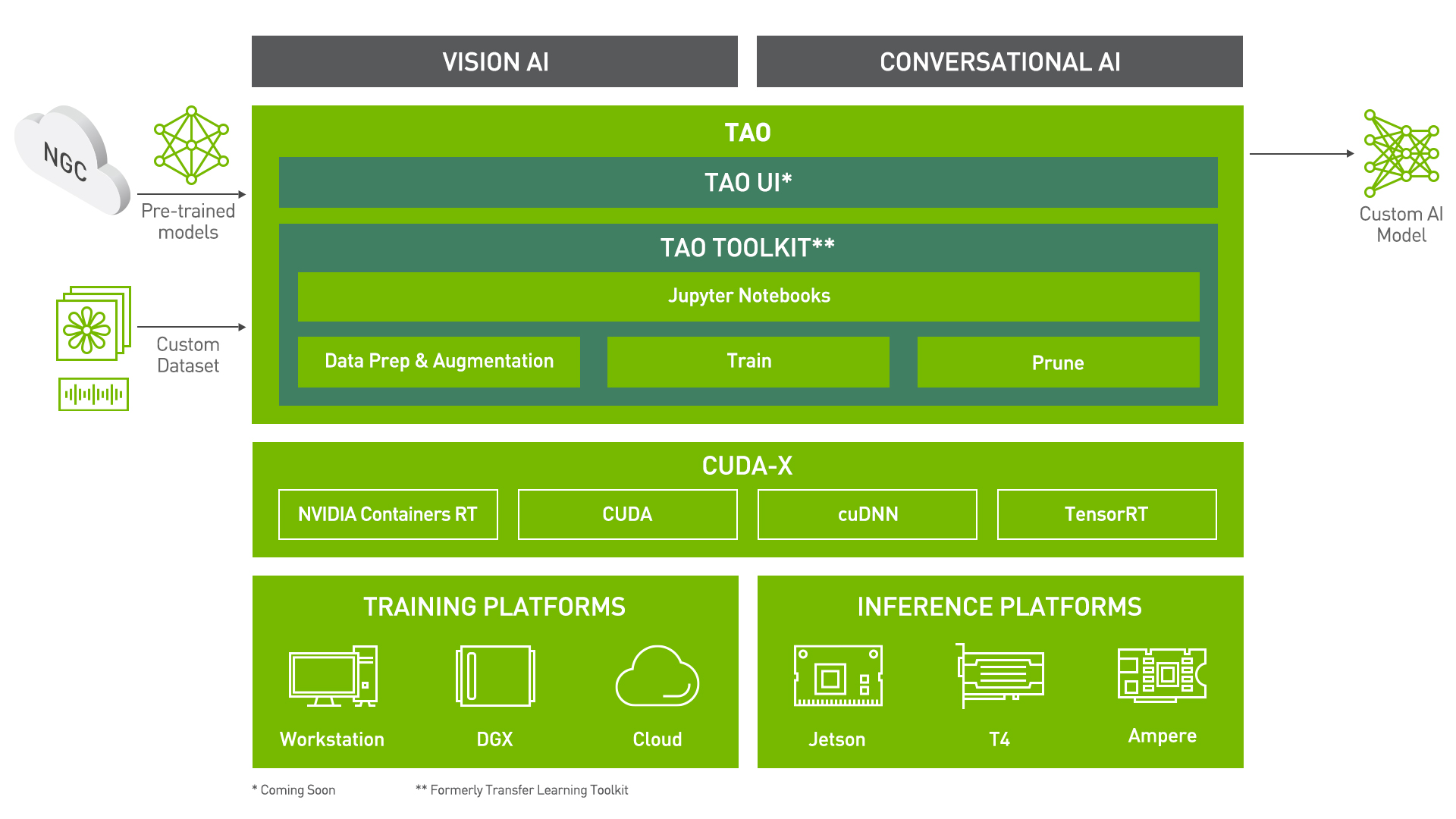

Train Adapt Optimize (TAO) Toolkit#

Train Adapt Optimize (TAO) Toolkit is a Python-based AI toolkit for taking purpose-built pre-trained AI models and customizing them with your own data. Developers, researchers, and software partners building intelligent vision AI applications and services, can bring their own data to fine-tune pre-trained models instead of going through the hassle of training the models from scratch.

Transfer learning extracts learned features from an existing neural network into a new one. Transfer learning is often used when creating a large training dataset is not feasible. The goal of this toolkit is to reduce that 80 hour workload to an 8 hour workload, which can enable data scientists to have considerably more train-test iterations in the same time frame.

Let’s see this in action with a use case for the ASR acoustic model.

Automatic Speech Recognition (ASR)#

Automatic Speech Recognition (ASR) is often the first step in building a conversational AI model. An ASR model converts audible speech into text. The main metric for these models is to reduce Word Error Rate (WER) while transcribing the text. Simply put, the goal is to take an audio file and transcribe it.

In this tutorial, we are going to discuss the Conformer-CTC model, which is an end-to-end ASR model that takes in audio and produces text.

Conformer-CTC supports both character-level and sub-word-level encodings. It employs a combination of self-attention and convolution modules to achieve the best of the two approaches. The self-attention layers support both absolute and relative positional encodings and can learn the global interaction, while the convolutions efficiently capture the local correlations.

ASR using TAO#

Installing and Setting up TAO#

Install TAO inside a Python virtual environment. We recommend performing this step first and then launching the tutorial from the virtual environment.

In addition to installing the TAO Python package, ensure you meet the following software requirements:

python3.6.9docker-ce> 19.03.5docker-API1.40nvidia-container-toolkit> 1.3.0nvidia-container-runtime> 3.4.0nvidia-docker2> 2.5.0nvidia-driver>= 455.23

Installing TAO is a simple pip install.

! pip install nvidia-pyindex

! pip install nvidia-tao

After installing TAO, the next step is to setup the mounts for TAO. The TAO launcher uses Docker containers under the hood, and for our data and results directory to be visible to Docker, they need to be mapped. The launcher can be configured using the config file ~/.tao_mounts.json. Apart from the mounts, you can also configure additional options like the environment variables and the amount of shared memory available to the TAO launcher.

Important: The following code creates a sample ~/.tao_mounts.json file. Here, we can map directories in which we save the data, specs, results, and cache. You should configure it for your specific use case so these directories are correctly visible to the Docker container.

# please define these paths on your local host machine

HOST_DATA_DIR = '/path/to/your/host/data'

HOST_SPECS_DIR = '/path/to/your/host/specs'

HOST_RESULTS_DIR = '/path/to/your/host/results'

%env HOST_DATA_DIR=$HOST_DATA_DIR

%env HOST_SPECS_DIR=$HOST_SPECS_DIR

%env HOST_RESULTS_DIR=$HOST_RESULTS_DIR

! mkdir -p $HOST_DATA_DIR

! mkdir -p $HOST_SPECS_DIR

! mkdir -p $HOST_RESULTS_DIR

# Mapping the Local Directories to the TAO Docker.

import json

import os

mounts_file = os.path.expanduser("~/.tao_mounts.json")

tlt_configs = {

"Mounts":[

{

"source": os.environ["HOST_DATA_DIR"],

"destination": "/data"

},

{

"source": os.environ["HOST_SPECS_DIR"],

"destination": "/specs"

},

{

"source": os.environ["HOST_RESULTS_DIR"],

"destination": "/results"

},

{

"source": os.path.expanduser("~/.cache"),

"destination": "/root/.cache"

}

],

"DockerOptions": {

"shm_size": "16G",

"ulimits": {

"memlock": -1,

"stack": 67108864

}

}

}

# Writing the mounts file.

with open(mounts_file, "w") as mfile:

json.dump(tlt_configs, mfile, indent=4)

!cat ~/.tao_mounts.json

You can check the Docker image versions and the tasks that it performs. You can also check by issuing tao --help or:

! tao info --verbose

Set Relevant Paths#

# NOTE: The following paths are set from the perspective of the TAO Docker.

# The data is saved here:

DATA_DIR = "/data"

SPECS_DIR = "/specs"

RESULTS_DIR = "/results"

# Set your encryption key and use the same key for all commands.

KEY = 'tlt_encode'

The command structure for the TAO interface can be broken down as follows: tao <task name> <subcommand>

Let’s see this in further detail.

Downloading Specs#

TAO’s conversational AI toolkit works off of spec files which make it easy to edit hyperparameters on the fly. We can proceed to downloading the spec files. You may choose to modify/rewrite these specs or even individually override them through the launcher. You can download the default spec files by using the download_specs command.

The -o argument indicates the folder where the default specification files will be downloaded. The -r argument instructs the script on where to save the logs. Ensure the -o points to an empty folder.

# delete the specs directory if it is already there to avoid errors

! tao speech_to_text_conformer download_specs \

-r $RESULTS_DIR/conformer \

-o $SPECS_DIR/conformer

Preparing the Dataset#

Downloading and Preprocessing the Datasets#

LibriSpeech ASR Dataset#

The train-clean-100 split of the LibriSpeech ASR dataset, which we’ll use as the training set, is publicly available here and can be downloaded directly. The dev-clean split of the LibriSpeech ASR dataset, which we’ll use as the validation set, is publicly available here and can also be downloaded directly. We’ve provided a script that downloads the splits for you. The preprocessing step entails converting the audio files from their native .flac format to .wav and generating a manifest file containing metadata for each audio file, both of which TAO Toolkit needs to train the model.

Install modules that the downloading and preprocessing script requires which aren’t part of the Python standard library.

!sudo apt install -y sox

!pip install sox

!pip install tqdm

!python ./get_librispeech_data.py --data_root=$HOST_DATA_DIR --data_sets='train_clean_100,dev_clean'

The filepaths in the manifest files are currently set with respect to the system on which we’re running this tutorial. They need to be set with respect to the tao container. The sed command will help us fix that.

Note the use of double quotes in calling sed. Those are necessary for sed to evaluate $HOST_DATA_DIR and $DATA_DIR. If you attempt to use single quotes, sed will replace the literal string $HOST_DATA_DIR with the literal string $DATA_DIR instead of replacing the value assigned to HOST_DATA_DIR with the value assigned to DATA_DIR.

!sed -i "s|$HOST_DATA_DIR|$DATA_DIR|g" $HOST_DATA_DIR/LibriSpeech/train_clean_100_manifest.json

!sed -i "s|$HOST_DATA_DIR|$DATA_DIR|g" $HOST_DATA_DIR/LibriSpeech/dev_clean_manifest.json

Remove the .tar.gz archive files to save space.

!rm $HOST_DATA_DIR/train_clean_100.tar.gz

!rm $HOST_DATA_DIR/dev_clean.tar.gz

Let’s listen to a sample audio file from the preprocessed training dataset.

# change path of the file here

import os

import IPython.display as ipd

path = os.environ["HOST_DATA_DIR"] + '/LibriSpeech/train-clean-100-processed/163-121908-0000.wav'

ipd.Audio(path)

Crowdsourced High-Quality Nigerian English Speech Dataset#

The evaluation/fine-tuning data is publicly available in several files here.

# Download the audio data

!wget 'https://www.openslr.org/resources/70/en_ng_female.zip' -P $HOST_DATA_DIR

!wget 'https://www.openslr.org/resources/70/en_ng_male.zip' -P $HOST_DATA_DIR

# Extract the evaluation/finetuning data

# Ensure that the unzip utility is available. If not, install it.

!unzip -nq $HOST_DATA_DIR/en_ng_female.zip -d $HOST_DATA_DIR/en_ng_female

!mv $HOST_DATA_DIR/en_ng_female/line_index.tsv $HOST_DATA_DIR/en_ng_female/line_index_female.tsv

!unzip -nq $HOST_DATA_DIR/en_ng_male.zip -d $HOST_DATA_DIR/en_ng_male

!mv $HOST_DATA_DIR/en_ng_male/line_index.tsv $HOST_DATA_DIR/en_ng_male/line_index_male.tsv

Define a function to extract the relevant information from the .tsv metadata files included with this dataset.

import os

import subprocess

def process_en_ng_tsvs(host_data_dir, data_dir):

genders = ['female','male']

entries = []

# Extract the relevant information from the tsv files

for gender in genders:

dataset = f'en_ng_{gender}'

tsv_name = f'line_index_{gender}.tsv'

tsv_file = os.path.join(host_data_dir, dataset, tsv_name)

with open(tsv_file, encoding='utf-8') as fin:

for line in fin:

label, text = line[: line.index("\t")], line[line.index("\t") + 1 :]

speaker_id = label.split('_')[1]

host_wav_file = os.path.join(host_data_dir, dataset, label + '.wav')

wav_file = os.path.join(data_dir, dataset, label + '.wav')

transcript_text = text.lower().strip()

# check duration

duration = subprocess.check_output("soxi -D {0}".format(host_wav_file), shell=True)

entry = {}

entry['audio_filepath'] = wav_file

entry['duration'] = float(duration)

entry['text'] = transcript_text

entry['gender'] = gender

entry['speaker_id'] = speaker_id

entries.append(entry)

return entries

Define a function to generate *manifest.json metadata files from the .tsv metadata files included with this dataset.

import json

import random

def generate_en_ng_manifest(host_data_dir, data_dir, random_seed=0, val_split=0.1):

# Extract the relevant information from the tsv files

entries = process_en_ng_tsvs(host_data_dir, data_dir)

# Generate the manifest files

# Set the random seed for reproducibility

random.seed(random_seed)

random.shuffle(entries)

num_val_entries = int(val_split * len(entries))

ft_manifest_file = os.path.join(host_data_dir, 'en_ng_ft_manifest.json')

val_manifest_file = os.path.join(host_data_dir, 'en_ng_val_manifest.json')

with open(ft_manifest_file, 'w') as fout:

for m in entries[:-num_val_entries]:

fout.write(json.dumps(m) + '\n')

with open(val_manifest_file, 'w') as fout:

for m in entries[-num_val_entries:]:

fout.write(json.dumps(m) + '\n')

Generate the manifest files for the Nigerian English Speech dataset.

generate_en_ng_manifest(HOST_DATA_DIR, DATA_DIR)

Let’s listen to an audio file from the Nigerian English dataset.

# change path of the file here

import os

import IPython.display as ipd

path = os.environ["HOST_DATA_DIR"] + '/en_ng_male/ngm_02436_00539200207.wav'

ipd.Audio(path)

Training commands for Conformer-CTC are similar to those of QuartzNet.

Training#

Create Tokenizer#

Before we can do the actual training, we need to pre-process the text. This step is called subword tokenization that creates a subword vocabulary for the text. This is different from Jasper/QuartzNet because only single characters are regarded as elements in the vocabulary in their cases, while in Conformer-CTC, the subword can be one or multiple characters. We can use the create_tokenizer command to create the tokenizer that generates the subword vocabulary for us for use in training.

! tao speech_to_text_conformer create_tokenizer \

-e $SPECS_DIR/conformer/create_tokenizer.yaml \

-r $RESULTS_DIR/conformer/create_tokenizer \

manifests=$DATA_DIR/LibriSpeech/train_clean_100_manifest.json \

output_root=$DATA_DIR/LibriSpeech/train-clean-100 \

vocab_size=1024

The TAO interface enables you to configure the training parameters from the command-line interface.

The process of opening the training script, finding the parameters of interest (which might be spread across multiple files), and making the changes needed, is being replaced by a simple command-line interface.

For example, if the number of epochs are needed to be modified along with a change in the learning rate, you can add trainer.max_epochs=10 and optim.lr=0.02 and train the model. Sample commands are given below.

A list of some of the customizable parameters along with their default values is as follows:

trainer:

gpus: 1num_nodes: 1max_epochs: 5max_steps: nullcheckpoint_callback: false

training_ds:

sample_rate: 16000batch_size: 32trim_silence: truemax_duration: 16.7shuffle: trueis_tarred: falsetarred_audio_filepaths: null

validation_ds:

sample_rate: 16000batch_size: 32shuffle: false

optim:

name: adamlr: 0.1betas: [0.9, 0.999]weight_decay: 0.0001

The following steps may take a considerable amount of time depending on the GPU being used. For the best experience, we recommend using an A100 GPU.

For training a Conformer-CTC ASR model in TAO, we use the tao speech_to_text_conformer train command with the following arguments:

-e: Path to the spec file-g: Number of GPUs to use-r: Path to the results folder-m: Path to the model-k: User specified encryption key to use while saving/loading the modelAny overrides to the spec file. For example,

trainer.max_epochs.

Training Conformer-CTC#

Training for even a single epoch on the train-clean-100 split and validating on the dev-clean split of the LibriSpeech ASR dataset will take a considerable amount of time. For good model performance, hundreds of training epochs may be required.

! tao speech_to_text_conformer train \

-e $SPECS_DIR/conformer/train_conformer_bpe_large.yaml \

-g 1 \

-k $KEY \

-r $RESULTS_DIR/conformer/train \

training_ds.manifest_filepath=$DATA_DIR/LibriSpeech/train_clean_100_manifest.json \

validation_ds.manifest_filepath=$DATA_DIR/LibriSpeech/dev_clean_manifest.json \

trainer.max_epochs=1 \

training_ds.num_workers=4 \

validation_ds.num_workers=4 \

training_ds.batch_size=4 \

validation_ds.batch_size=4 \

model.tokenizer.dir=$DATA_DIR/LibriSpeech/train-clean-100/tokenizer_spe_unigram_v1024

ASR Evaluation#

Now that we have a model trained, we need to check how well it performs.

!tao speech_to_text_conformer evaluate \

-e $SPECS_DIR/conformer/evaluate.yaml \

-g 1 \

-k $KEY \

-m $RESULTS_DIR/conformer/train/checkpoints/trained-model.tlt \

-r $RESULTS_DIR/conformer/evaluate \

test_ds.manifest_filepath=$DATA_DIR/LibriSpeech/dev_clean_manifest.json

ASR Fine-Tuning#

After the model is trained, evaluated, and there is a need for fine-tuning, the following command can be used to fine-tune the ASR model. This step can also be used for transfer learning by making changes in the train.json and dev.json files to add new data.

The list for customizations is the same as the training parameters with the exception for parameters which affect the model architecture. Also, instead of training_ds we have finetuning_ds.

Note: If you want to proceed with a trained dataset for better inference results, you can find a .nemo model here.

Simply rename the .nemo file to .tlt and pass it through the fine-tune pipeline.

Note: The fine-tune spec files contain specifics to fine-tune the English model we just trained to Russian. If you want to proceed with English, ensure the changes are in the spec file finetune.yaml which you can find in the SPEC_DIR folder you mapped. Ensure to delete older fine-tuning checkpoints if you choose to change the language after fine-tuning it as-is.

Write a Fine-Tuning Spec File for the Nigerian English Speech Dataset#

%%bash

tee $HOST_SPECS_DIR/conformer/finetune_en_ng.yaml <<'EOF'

# Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

# TLT spec file for fine-tuning a previously trained ASR models based on CTC over the MCV Russian dataset.

trainer:

max_epochs: 3 # This is low for demo purposes

tlt_checkpoint_interval: 1

# Whether or not to change the decoder vocabulary.

# Note that this MUST be set if the labels change, e.g. to a different language's character set

# or if additional punctuation characters are added.

change_vocabulary: false

tokenizer:

dir: ???

type: "bpe" # Can be either bpe or wpe

# Fine-tuning settings: training dataset

finetuning_ds:

manifest_filepath: ???

batch_size: 4

trim_silence: true

shuffle: true

is_tarred: false

tarred_audio_filepaths: null

# Fine-tuning settings: validation dataset

validation_ds:

manifest_filepath: ???

batch_size: 4

shuffle: false

# Fine-tuning settings: optimizer

optim:

name: novograd

lr: 0.001

EOF

Finetune Conformer-CTC#

Training and validating for even a single epoch on the Nigerian English ASR dataset will take a considerable amount of time. For good model performance, hundreds of training epochs may be required.

!tao speech_to_text_conformer finetune \

-e $SPECS_DIR/conformer/finetune_en_ng.yaml \

-g 1 \

-k $KEY \

-m $RESULTS_DIR/conformer/train/checkpoints/trained-model.tlt \

-r $RESULTS_DIR/conformer/finetune \

finetuning_ds.manifest_filepath=$DATA_DIR/en_ng_ft_manifest.json \

validation_ds.manifest_filepath=$DATA_DIR/en_ng_val_manifest.json \

trainer.max_epochs=1 \

finetuning_ds.num_workers=20 \

validation_ds.num_workers=20 \

trainer.gpus=1 \

tokenizer.dir=$DATA_DIR/LibriSpeech/train-clean-100/tokenizer_spe_unigram_v1024

ASR Model Export#

With TAO, you can also export your model in a format that can be deployed using NVIDIA Riva; a highly performant application framework for multi-modal conversational AI services using GPUs. The same command for exporting to ONNX can be used here. The only small variation is the configuration for export_format in the spec file.

Export to Riva#

!tao speech_to_text_conformer export \

-e $SPECS_DIR/conformer/export.yaml \

-g 1 \

-k $KEY \

-m $RESULTS_DIR/conformer/train/checkpoints/trained-model.tlt \

-r $RESULTS_DIR/conformer/riva \

export_format=RIVA \

export_to=asr-model.riva

Export to ONNX#

Note: Export to ONNX is not needed for Riva.

!tao speech_to_text_conformer export \

-e $SPECS_DIR/conformer/export.yaml \

-g 1 \

-k $KEY \

-m $RESULTS_DIR/conformer/train/checkpoints/trained-model.tlt \

-r $RESULTS_DIR/conformer/export \

export_format=ONNX

ASR Inference using TLT Checkpoint#

ASR Inference with TAO Toolkit#

In this section, we are going to run inference on the TLT checkpoint with TAO Toolkit.

For real-time inference and best latency, we need to deploy this model on Riva. Refer to the How to Deploy a Custom Acoustic Model (Conformer-CTC) Trained with TAO Toolkit on Riva tutorial.

You might have to work with the infer.yaml file to select the files you want for inference.

!tao speech_to_text_conformer infer \

-e $SPECS_DIR/conformer/infer.yaml \

-g 1 \

-k $KEY \

-m $RESULTS_DIR/conformer/train/checkpoints/trained-model.tlt \

-r $RESULTS_DIR/conformer/infer \

file_paths=[$DATA_DIR/LibriSpeech/dev-clean-processed/1272-128104-0000.wav]

ASR Inference using ONNX#

TAO provides the capability to use the exported .eonnx model for inference. The command tao speech_to_text infer_onnx is very similar to the inference command for .tlt models. Again, the inputs in the spec file used is just for demo purposes, you may choose to try out your custom input.

!tao speech_to_text_conformer infer_onnx \

-e $SPECS_DIR/conformer/infer_onnx_conformer.yaml \

-g 1 \

-k $KEY \

-m $RESULTS_DIR/conformer/export/exported-model.eonnx \

-r $RESULTS_DIR/conformer/infer_onnx \

file_paths=[$DATA_DIR/LibriSpeech/dev-clean-processed/1272-128104-0000.wav]

What’s Next?#

You can use TAO to build custom models for your own applications, or you could deploy the custom model to NVIDIA Riva.