Supported Job Templates

The following subsections describe the currently supported job templates.

Create, update, or destroy one or more hosts on a specific AWX inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "AWX Inventory Host Update".

Make sure that all required variables described below are defined before running this job. You can define these variables either as inventory variables or as job template variables.

The following variables are required to update inventory:

| Variable | Description | Type |
| --- | --- | --- |
| controller_host | URL to the AWX controller instance | String |
| controller_oauthtoken | OAuth token for the AWX controller instance | String |
| hostname | Hostname or a hostname expression of the host(s) to update | String |

Alternatively, you can specify the following variables to update inventory:

| Variable | Description | Type |
| --- | --- | --- |
| controller_host | URL to the AWX controller instance | String |
| controller_username | Username for the AWX controller instance | String |
| controller_password | Password for the AWX controller instance | String |
| hostname | Hostname or a hostname expression of the host(s) to update | String |

The following variables are available to update inventory:

| Variable | Description |
| --- | --- |
| api_url | URL to your cluster bring-up REST API. This variable is required when hostname_regex_enabled is set to true. |
| description | Description to use for the host(s) |
| host_enabled | Determine whether the host(s) should be enabled |
| hostname_regex_enabled | Determine whether to use a hostname expression to create the hostnames |
| host_state | State of the host resources. Options: present or absent. |
| inventory | Name of the inventory the host(s) should be made a member of |

The following are variable definitions and default values to update inventory:

| Variable | Default | Type |
| --- | --- | --- |
| api_url | '' | String |
| description | '' | String |
| host_enabled | true | Boolean |
| hostname_regex_enabled | true | Boolean |
| host_state | 'present' | String |
| inventory | 'IB Cluster Inventory' | String |
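The two alternative sets of required variables can be checked before launching the job. The following is a minimal sketch, not part of the product: `missing_required_vars` is a hypothetical helper, and the example values are placeholders.

```python
# Two alternative sets of required variables, as listed in the tables above.
TOKEN_AUTH = {"controller_host", "controller_oauthtoken", "hostname"}
PASSWORD_AUTH = {
    "controller_host",
    "controller_username",
    "controller_password",
    "hostname",
}


def missing_required_vars(extra_vars):
    """Return the variables still missing for the closest alternative.

    An empty set means one of the two alternatives is fully defined.
    (Hypothetical helper for illustration only.)
    """
    defined = {name for name, value in extra_vars.items() if value not in (None, "")}
    candidates = [TOKEN_AUTH - defined, PASSWORD_AUTH - defined]
    return min(candidates, key=len)


example = {
    "controller_host": "https://awx.example.com",  # placeholder URL
    "controller_username": "admin",                # placeholder credentials
    "controller_password": "secret",
}
print(sorted(missing_required_vars(example)))
```

Here the username/password alternative is the closest match, so only `hostname` is reported as missing.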

Perform cable validation according to a given topology file.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "Cable Validation".

Warning: Make sure that the filenames you provide in the ip_files and topo_files parameters are the names of files located at /opt/nvidia/cot/cable_validation_files.

The following variables are required to run cable validation:

| Variable | Description |
| --- | --- |
| api_url | URL to your cluster bring-up REST API. |
| ip_files | List of IP filenames to use for cable validation. |
| topo_files | List of topology filenames to use for cable validation. |

The following variables are available for cable validation:

| Variable | Description |
| --- | --- |
| remove_agents | Specify to remove the agents from the switches once validation is complete. |
| delay_time | Time (in seconds) to wait between queries of async requests. |

The following are variable definitions and default values to run cable validation:

| Variable | Default | Type |
| --- | --- | --- |
| remove_agents | true | Boolean |
| delay_time | 10 | Integer |

The following example shows how to provide the ip_files and topo_files parameters:

ip_files: ['test-ip-file.ip']

topo_files: ['test-topo-file.topo']

In this example, the cable validation tool would expect to find the test-ip-file.ip and test-topo-file.topo files at /opt/nvidia/cot/cable_validation_files.
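To make the filename-to-path relationship concrete, the sketch below maps the ip_files and topo_files values to the locations where the files are expected. `expected_paths` is a hypothetical helper, and rejecting values that contain a path separator is an assumption made for illustration, not documented behavior.

```python
from pathlib import PurePosixPath

# Directory named in the warning above.
CABLE_VALIDATION_DIR = PurePosixPath("/opt/nvidia/cot/cable_validation_files")


def expected_paths(ip_files, topo_files):
    """Map each provided filename to the path where it is expected to exist."""
    names = list(ip_files) + list(topo_files)
    for name in names:
        # Assumption: values are bare filenames, so a separator is treated as an error.
        if "/" in name:
            raise ValueError(f"expected a bare filename, got {name!r}")
    return [str(CABLE_VALIDATION_DIR / name) for name in names]


for path in expected_paths(["test-ip-file.ip"], ["test-topo-file.topo"]):
    print(path)
```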

This job ensures that the Python environment for the COT client is installed on one or more hosts.

By default, this job template is configured to run against the ib_host_manager group of IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "COT Python Alignment".

The following variables are available for cluster orchestration Python environment installation:

| Variable | Description |
| --- | --- |
| cot_dir | Target path to installation root folder |
| force | Install the package even if it is already up to date |
| working_dir | Path to the working directory on the host |

The following are variable definitions and default values for cluster bring-up client installation:

| Variable | Default | Type |
| --- | --- | --- |
| cot_dir | '/opt/nvidia/cot' | String |
| force | false | Boolean |
| working_dir | '/tmp' | String |

This job runs high performance tests on the hosts of the inventory.

By default, this job template is configured to run against the ib_host_manager group of IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "ClusterKit".

ClusterKit relies on the HPC-X package. Make sure that the HPC-X package is installed.

The following variables are available for running ClusterKit:

| Variable | Description |
| --- | --- |
| clusterkit_hostname | Hostname expressions that represent the hostnames to run tests on |
| clusterkit_options | List of optional arguments for the tests |
| clusterkit_path | Path to the clusterkit executable script |
| ib_device | Name of the RDMA device of the port used to connect to the fabric |
| inventory_group | Name of the inventory group for the hostnames to run tests on. This variable is not available when use_hostfile is set to false or clusterkit_hostname is set. |
| max_hosts | Limit the number of hostnames. This variable is not available when use_hostfile is set to false. |
| use_hostfile | Determine whether to use a file for hostnames to run tests on |
| working_dir | Path to the working directory on the host |

The following are variable definitions and default values for running ClusterKit:

| Variable | Default | Type |
| --- | --- | --- |
| clusterkit_hostname | null | String |
| clusterkit_options | [] | List[String] |
| clusterkit_path | '/opt/nvidia/hpcx/clusterkit/bin/clusterkit.sh' | String |
| ib_device | 'mlx5_0' | String |
| inventory_group | 'all' | String |
| max_hosts | -1 | Integer |
| use_hostfile | true | Boolean |
| working_dir | '/tmp' | String |

The ClusterKit results are uploaded to the database after each run and can be accessed via the API.

The following are REST requests to retrieve ClusterKit results:

| URL | Response | Method Type |
| --- | --- | --- |
| /api/performance/clusterkit/results | Get a list of all the ClusterKit run IDs stored in the database | GET |
| /api/performance/clusterkit/results/<run_id> | Get a ClusterKit run's results based on its run ID | GET |
| /api/performance/clusterkit/results/<run_id>?raw_data=true | Get a ClusterKit run's test results as they are stored in the ClusterKit JSON output file, based on its run ID, using the "raw_data" query parameter | GET |
| /api/performance/clusterkit/results/<run_id>?test=<test name> | Get a specific test result of the ClusterKit run, based on its run ID, using the "test" query parameter | GET |

| Query Param | Description |
| --- | --- |
| test | Returns a specific test result of the ClusterKit run |
| raw_data | Returns the data as it is stored in the ClusterKit output JSON files |

Examples:

$ curl 'http://cluster-bringup:5000/api/performance/clusterkit/results'

["20220721_152951", "20220721_151736", "20220721_152900", "20220721_152702"]

$ curl 'http://cluster-bringup:5000/api/performance/clusterkit/results/20220721_152951?raw_data=true&test=latency'

{

"Cluster": "Unknown",

"User": "root",

"Testname": "latency",

"Date_and_Time": "2022/07/21 15:29:51",

"JOBID": 0,

"PPN": 28,

"Bidirectional": "True",

"Skip_Intra_Node": "True",

"HCA_Tag": "Unknown",

"Technology": "Unknown",

"Units": "usec",

"Nodes": {"ib-node-01": 0, "ib-node-02": 1},

"Links": [[0, 41.885]]

}
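The same requests can be issued from a script. The sketch below builds the URLs from the table above using only the Python standard library. `results_url` and `fetch` are hypothetical helper names, and the base URL is taken from the curl examples, so adjust it to your deployment.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://cluster-bringup:5000"  # host and port from the examples above
RESULTS_PATH = "/api/performance/clusterkit/results"


def results_url(run_id=None, test=None, raw_data=False, base_url=BASE_URL):
    """Build the URL for the run ID list or for a single run's results."""
    url = base_url + RESULTS_PATH
    if run_id is None:
        return url
    url += "/" + run_id
    params = {}
    if raw_data:
        params["raw_data"] = "true"
    if test is not None:
        params["test"] = test
    return url + ("?" + urlencode(params) if params else "")


def fetch(url):
    """Send a GET request and decode the JSON response body."""
    with urlopen(url) as response:
        return json.load(response)


print(results_url("20220721_152951", test="latency", raw_data=True))
```

Calling `fetch(results_url())` against a reachable cluster bring-up host would return the list of run IDs.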

This job collects fabric counters with and without traffic based on CollectX and ClusterKit tools.

By default, this job template is configured to run with the ib_host_manager group specified in the IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "Fabric Health Counters Collection".

The following variables are available for running Fabric Health Counters Collection:

| Variable | Description |
| --- | --- |
| clusterkit_path | Path to the ClusterKit executable script |
| collection_interval | Interval (in minutes) between counter samples |
| cot_executable | Path to the installed cotclient tool |
| counters_output_dir | Directory path to save counters data |
| ib_device | Name of the RDMA device of the port used to connect to the fabric |
| idle_test_time | Time (in minutes) to monitor counters without traffic |
| format_generate | Formats the collected counters data with the specified type |
| hpcx_dir | Path to the HPC-X directory |
| reset_counters | Specify to reset counters before starting the counters collection |
| stress_test_time | Time (in minutes) to monitor counters with traffic |
| ufm_telemetry_path | Path for the UFM Telemetry directory located in the ib_host_manager_server |
| working_dir | Path to the working directory on the host |

The following are variable definitions and default values for the fabric health counters collection:

| Variable | Default | Type |
| --- | --- | --- |
| clusterkit_path | '{hpcx_dir}/clusterkit/bin/clusterkit.sh' | String |
| collection_interval | 5 | Integer |
| cot_executable | '/opt/nvidia/cot/client/bin/cotclient' | String |
| counters_output_dir | '/tmp/collectx_counters_{date}_{time}/' | String |
| ib_device | 'mlx5_0' | String |
| idle_test_time | 30 | Integer |
| format_generate | 'basic' | String |
| hpcx_dir | '/opt/nvidia/hpcx' | String |
| reset_counters | true | Boolean |
| stress_test_time | 30 | Integer |
| ufm_telemetry_path | '{working_dir}/ufm_telemetry' | String |
| working_dir | '/tmp' | String |

This job performs diagnostics on the fabric's state based on ibdiagnet checks, SM files, and switch commands.

By default, this job template is configured to run against the ib_host_manager group of IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "IB Fabric Health Checks".

The following variables are available for running IB Fabric Health Checks:

| Variable | Description |
| --- | --- |
| check_max_failure_percentage | Max failure percentage for fabric health checks |
| cot_executable | Path to the installed cotclient tool |
| exclude_scope | List of node GUIDs and their ports to be excluded |
| ib_device | Name of the RDMA device of the port used to connect to the fabric |
| routing_check | Specify for routing check |
| sm_configuration_file | Path for SM configuration file; supported only when the SM is running on the ib_host_manager |
| sm_unhealthy_ports_check | Specify for SM unhealthy ports check; supported only when the SM is running on the ib_host_manager |
| topology_type | Type of topology to discover |
| mlnxos_switch_hostname | Hostname expression that represents switches running MLNX-OS |
| mlnxos_switch_username | Username to authenticate against the target switches |
| mlnxos_switch_password | Password to authenticate against the target switches |

The following are variable definitions and default values for the health check:

| Variable | Default | Type |
| --- | --- | --- |
| check_max_failure_percentage | 1 | Float |
| cot_executable | '/opt/nvidia/cot/client/bin/cotclient' | String |
| exclude_scope | NULL | List[String] |
| ib_device | 'mlx5_0' | String |
| routing_check | true | Boolean |
| sm_configuration_file | '/etc/opensm/opensm.conf' | String |
| sm_unhealthy_ports_check | false | Boolean |
| topology_type | 'infiniband' | String |
| mlnxos_switch_hostname | NULL | String |
| mlnxos_switch_username | NULL | String |
| mlnxos_switch_password | NULL | String |

The following example shows how to exclude ports using the exclude_scope variable:

exclude_scope: ['0x1234@1/3', '0x1235']

In this example, IB Fabric Health Check runs over the fabric except on ports 1 and 3 of node GUID 0x1234 and all ports of node GUID 0x1235.
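The structure of an exclude_scope entry can be illustrated with a small parser. This is a sketch based only on the example above: it assumes that "@" separates the node GUID from its ports and that "/" separates port numbers. `parse_exclude_scope` is a hypothetical helper, not the tool's own parser.

```python
def parse_exclude_scope(entries):
    """Split entries such as '0x1234@1/3' into (guid, ports) pairs.

    ports is None when the entry names no ports, meaning all ports of the node.
    """
    parsed = []
    for entry in entries:
        guid, separator, ports = entry.partition("@")
        # Assumption: port numbers are separated by "/" as in the example above.
        port_list = [int(port) for port in ports.split("/")] if separator else None
        parsed.append((guid, port_list))
    return parsed


print(parse_exclude_scope(["0x1234@1/3", "0x1235"]))
# prints [('0x1234', [1, 3]), ('0x1235', None)]
```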

The following example shows how to configure switch variables:

mlnxos_switch_hostname: 'ib-switch-t[1-2],ib-switch-s1'

mlnxos_switch_username: 'admin'

mlnxos_switch_password: 'my_admin_password'

In this example, IB Fabric Health Check performs a check that requires switch connection over ib-switch-t1, ib-switch-t2, and ib-switch-s1 using the username admin and password my_admin_password for the connection.
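The expansion of a hostname expression described above can be sketched as follows. The sketch handles only comma-separated items with at most one numeric range in square brackets each, which is all the example requires. The real expression syntax may support more, and `expand_hostnames` is a hypothetical helper.

```python
import re

# Matches a single numeric range such as [1-2].
RANGE = re.compile(r"\[(\d+)-(\d+)\]")


def expand_hostnames(expression):
    """Expand 'ib-switch-t[1-2],ib-switch-s1' into individual hostnames."""
    hostnames = []
    for item in expression.split(","):
        match = RANGE.search(item)
        if match is None:
            hostnames.append(item)
            continue
        start, end = match.group(1), match.group(2)
        width = len(start)  # assumption: keep zero padding such as [01-10]
        for number in range(int(start), int(end) + 1):
            name = item[:match.start()] + str(number).zfill(width) + item[match.end():]
            hostnames.append(name)
    return hostnames


print(expand_hostnames("ib-switch-t[1-2],ib-switch-s1"))
# prints ['ib-switch-t1', 'ib-switch-t2', 'ib-switch-s1']
```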

This job discovers network topology and updates the database.

By default, this job template is configured to run against the ib_host_manager group of IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "IB Network Discovery".

The following variables are required for network discovery:

| Variable | Description | Type |
| --- | --- | --- |
| api_url | URL to your cluster bring-up REST API | String |

For the network discovery to find the IPs of MLNX-OS switches, the ufm_telemetry_path variable is required. This feature is supported for UFM Telemetry version 1.11.0 and above.

The following variables are available for network discovery:

| Variable | Description |
| --- | --- |
| clear_topology | Use to clear previous topology data. |
| ufm_telemetry_path | Path for the UFM Telemetry folder located on the ib_host_manager_server. Specify for using UFM Telemetry's ibdiagnet tool for the network discovery (e.g., '/tmp/ufm_telemetry'). |
| switch_username | Username to authenticate against MLNX-OS switches |
| switch_password | Password to authenticate against MLNX-OS switches |
| cot_python_interpreter | Path to cluster orchestration Python interpreter |
| ib_device | Name of the in-band HCA device to use (e.g., 'mlx5_0') |
| subnet | Name of the subnet that the topology nodes are members of |

The following are variable definitions and default values for network discovery:

| Variable | Default | Type |
| --- | --- | --- |
| clear_topology | false | Boolean |
| ufm_telemetry_path | NULL | String |
| cot_python_interpreter | '/opt/nvidia/cot/client/bin/python/' | String |
| ib_device | 'mlx5_0' | String |
| subnet | 'infiniband-default' | String |

This job installs NVIDIA® UFM® Telemetry on one or more hosts.

By default, this job template is configured to run against the ib_host_manager group of IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "UFM Telemetry Upgrade".

The following variables are required for UFM Telemetry installation:

| Variable | Description |
| --- | --- |
| ufm_telemetry_package_url | URL for UFM Telemetry to download |

The following variables are available for UFM Telemetry installation:

| Variable | Description |
| --- | --- |
| working_dir | Destination path for installing UFM Telemetry. The package will be placed in a subdirectory called ufm_telemetry. Default: /tmp. |
| ufm_telemetry_checksum | Checksum of the UFM Telemetry package to download |

This job installs NVIDIA® MLNX_OFED driver on one or more hosts.

Refer to the official NVIDIA Linux Drivers documentation for further information.

By default, this job template is configured to run against the hosts of IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "MLNX_OFED Upgrade".

By default, the MLNX_OFED package is downloaded from the MLNX_OFED download center. You must specify the ofed_version (or use its default value) and the ofed_package_url variables when the download center is not available.

The following variables are available for MLNX_OFED installation:

| Variable | Description |
| --- | --- |
| force | Install MLNX_OFED package even if it is already up to date |
| ofed_checksum | Checksum of the MLNX_OFED package to download |
| ofed_dependencies | List of all package dependencies for the MLNX_OFED package |
| ofed_install_options | List of optional arguments for the installation command |
| ofed_package_url | URL of the MLNX_OFED package to download (default: auto-detection). In addition, you must specify the ofed_version parameter or use its default value. |
| ofed_version | Version number of the MLNX_OFED package to install |
| working_dir | Path to the working directory on the host |

The following are variable definitions and default values for MLNX_OFED installation:

| Variable | Default | Type |
| --- | --- | --- |
| force | false | Boolean |
| ofed_checksum | '' | String |
| ofed_dependencies | [] | List |
| ofed_install_options | [] | List |
| ofed_package_url | '' | String |
| ofed_version | '23.04-0.5.3.3' | String |
| working_dir | '/tmp' | String |

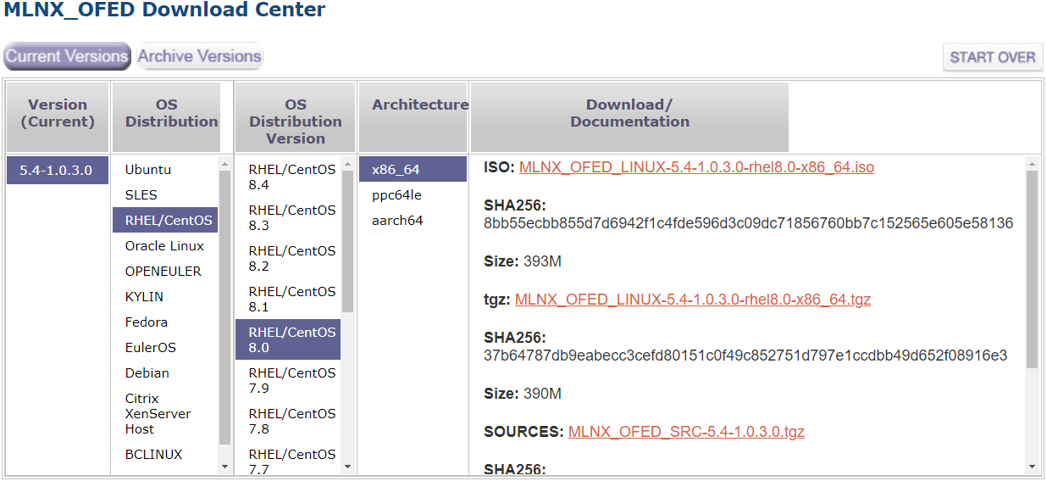

The following example shows MLNX_OFED for RHEL/CentOS 8.0 on the MLNX_OFED Download Center:

ofed_checksum: 'SHA256: 37b64787db9eabecc3cefd80151c0f49c852751d797e1ccdbb49d652f08916e3'

ofed_version: '5.4-1.0.3.0'
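A checksum value in this "algorithm: digest" form can be checked against a downloaded package before it is served from a local mirror. The following is a minimal sketch using Python's hashlib. `parse_checksum` and `file_matches` are hypothetical helpers, and how the job template itself verifies the checksum is not described here.

```python
import hashlib


def parse_checksum(value):
    """Split 'SHA256: <hex digest>' into a lowercase algorithm name and digest."""
    algorithm, _, digest = value.partition(":")
    return algorithm.strip().lower(), digest.strip().lower()


def file_matches(path, checksum):
    """Return True when the file's digest equals the expected checksum."""
    algorithm, expected = parse_checksum(checksum)
    hasher = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        # Read in 1 MiB chunks so large packages are not loaded into memory at once.
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            hasher.update(chunk)
    return hasher.hexdigest() == expected


print(parse_checksum("SHA256: 37b64787db9eabecc3cefd80151c0f49c852751d797e1ccdbb49d652f08916e3"))
```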

This job updates the system firmware/OS software on one or more MLNX-OS switches.

By default, this job template is configured to run against the ib_host_manager group of IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "MLNX-OS Upgrade".

Make sure all required variables described below are defined before running this job. You can define these variables either as inventory variables or as job template variables.

The following variables are required to update the MLNX-OS system:

| Variable | Description | Type |
| --- | --- | --- |
| mlnxos_image_url | URL of the MLNX-OS image to download | String |
| switch_username | Username to authenticate against target switches | String |
| switch_password | Password to authenticate against target switches | String |
| switches | List of IP addresses/hostnames of the switches to upgrade | List[String] |

The following variables are available to update the MLNX-OS system:

| Variable | Description |
| --- | --- |
| command_timeout | Time (in seconds) to wait for the command to complete |
| force | Update MLNX-OS system even if it is already up to date |
| image_url | Alias for mlnxos_image_url. This variable is not available when mlnxos_image_url is set. |
| reload_command | Specify an alternative command to reload the switch system |
| reload_timeout | Time (in seconds) to wait for the switch system to reload |
| remove_images | Determine whether to remove all images on disk before system upgrade starts |

The following are variable definitions and default values to update the MLNX-OS system:

| Variable | Default | Type |
| --- | --- | --- |
| command_timeout | 240 | Integer |
| force | false | Boolean |
| reload_command | '"reload noconfirm"' | String |
| reload_timeout | 200 | Integer |
| remove_images | false | Boolean |

This job executes configuration commands on one or more MLNX-OS switches.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "MLNX-OS Configure".

The following variables are required to configure the MLNX-OS system:

| Variable | Description | Type |
| --- | --- | --- |
| switch_config_commands | List of configuration commands to execute | List[String] |
| switch_username | Username to authenticate against target switches | String |
| switch_password | Password to authenticate against target switches | String |
| switches | List of IP addresses/hostnames of the switches to configure | List[String] |

The following variables are available to configure the MLNX-OS system:

| Variable | Description |
| --- | --- |
| save_config | Determine whether to save the system configuration after the execution completes |

The following are variable definitions and default values to configure the MLNX-OS system:

| Variable | Default | Type |
| --- | --- | --- |
| save_config | true | Boolean |

This job installs NVIDIA® MFT package on one or more hosts.

Refer to the official Mellanox Firmware Tools documentation for further information.

By default, this job template is configured to run against the hosts of IB Cluster Inventory.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "MFT Upgrade".

By default, the MFT package is downloaded from the MFT download center. You must specify the mft_version (or use its default value) and the mft_package_url variables when the download center is not available.

The following variables are available for MFT installation:

| Variable | Description |
| --- | --- |
| force | Install MFT package even if it is already up to date |
| mft_checksum | Checksum of MFT package to download |
| mft_dependencies | List of all package dependencies for the MFT package |
| mft_install_options | List of optional arguments for the installation command |
| mft_package_url | URL of the MFT package to download (default: auto-detection). In addition, you must specify the mft_version parameter or use its default value. |
| mft_version | Version number of the MFT package to install |
| working_dir | Path to the working directory on the host |

The following are variable definitions and default values for MFT installation:

| Variable | Default | Type |
| --- | --- | --- |
| force | false | Boolean |
| mft_checksum | '' | String |
| mft_dependencies | [] | List |
| mft_install_options | [] | List |
| mft_package_url | '' | String |
| mft_version | '4.24.0-72' | String |
| working_dir | '/tmp' | String |

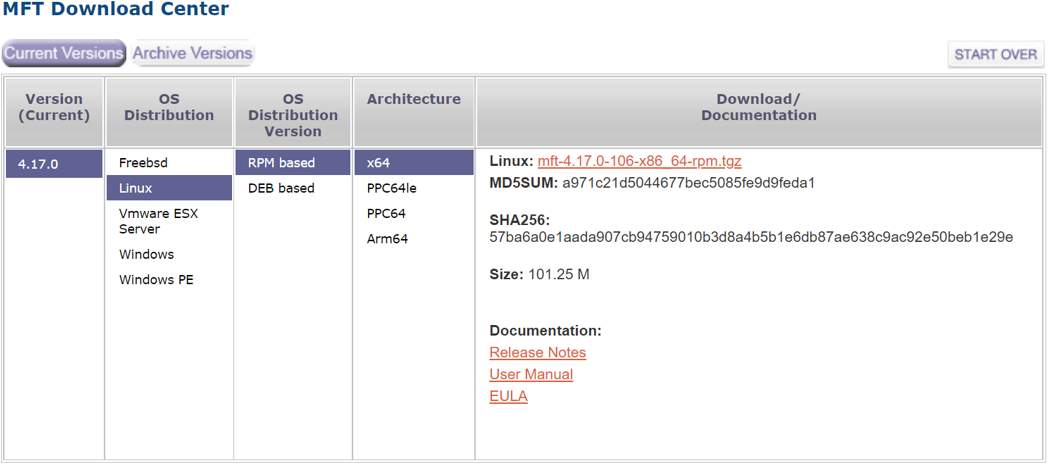

The following example shows MFT for RedHat on the MFT Download Center:

mft_checksum: 'sha256: 57ba6a0e1aada907cb94759010b3d8a4b5b1e6db87ae638c9ac92e50beb1e29e'

mft_version: '4.17.0-106'

This job installs NVIDIA® HPC-X® package on one or more hosts.

Refer to the official NVIDIA HPC-X documentation for further information.

By default, this job template is configured to run against the hosts of IB Cluster Inventory. You must set the hpcx_install_once variable to true when installing the HPC-X package to a shared location.

To run this job template:

Go to Resources > Templates.

Click the "Launch Template" button on "HPC-X Upgrade".

By default, the HPC-X package is downloaded from the HPC-X download center. You need to specify the hpcx_version (or use its default value) and the hpcx_package_url variables when the download center is not available.

The following variables are available for HPC-X installation:

| Variable | Description |
| --- | --- |
| force | Install HPC-X package even if it is already up to date |
| hpcx_checksum | Checksum of the HPC-X package to download |
| hpcx_dir | Target path for HPC-X installation folder |
| hpcx_install_once | Specify whether to install the HPC-X package via a single host. May be used to install the package on a shared directory. |
| hpcx_package_url | URL of the HPC-X package to download (default: auto-detection). In addition, you must specify the hpcx_version parameter or use its default value. |
| hpcx_version | Version number of the HPC-X package to install |
| ofed_version | Version number of the OFED package compatible with the HPC-X package. This variable is required when MLNX_OFED is not installed on the host. |
| working_dir | Path to the working directory on the host |

The following are variable definitions and default values for HPC-X installation:

| Variable | Default | Type |
| --- | --- | --- |
| force | false | Boolean |
| hpcx_checksum | '' | String |
| hpcx_dir | '/opt/nvidia/hpcx' | String |
| hpcx_install_once | false | Boolean |
| hpcx_package_url | '' | String |
| hpcx_version | '2.15.0' | String |
| ofed_version | '' | String |
| working_dir | '/tmp' | String |

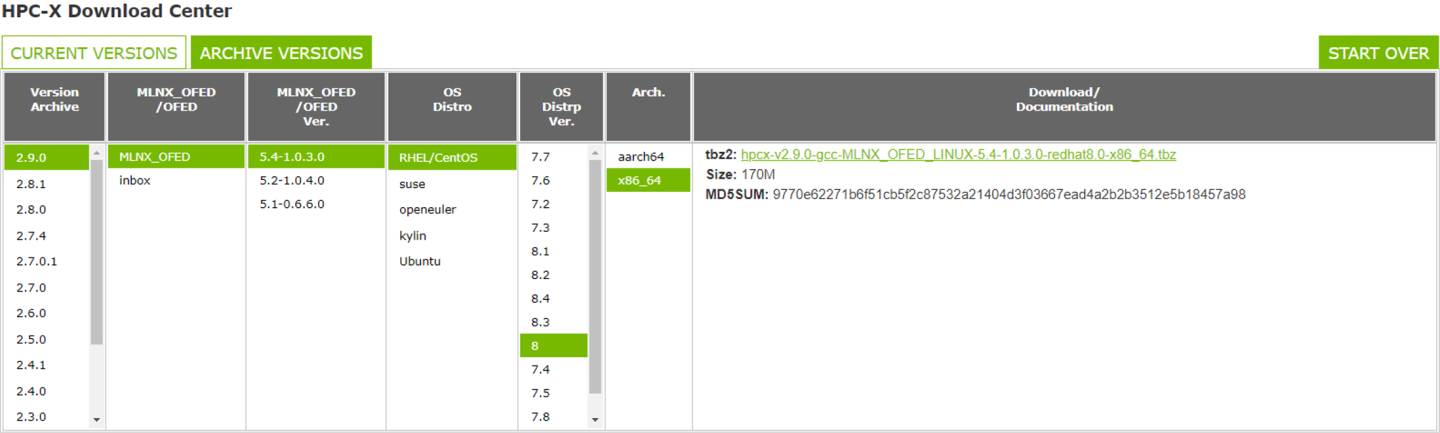

The following example shows HPC-X for RedHat 8.0 on the HPC-X Download Center:

hpcx_checksum: 'sha256: 57ba6a0e1aada907cb94759010b3d8a4b5b1e6db87ae638c9ac92e50beb1e29e'

hpcx_version: '2.9.0'

ofed_version: ''

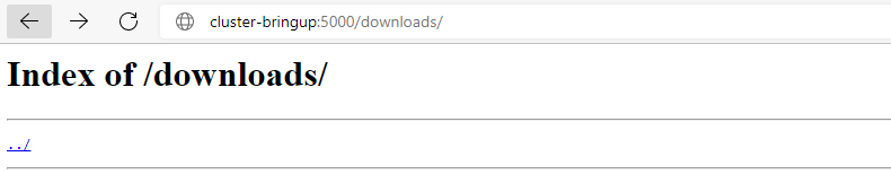

A file server is useful when you must access files (e.g., packages and images) that are not available on the web.

The files can be accessed over the following URL: http://<host>:<port>/downloads/ where host (IP address/hostname) and port are the address of your cluster bring-up host.

For example, if cluster-bringup is the hostname of your cluster bring-up host and the TCP port is 5000 as defined in the suggested configuration, then files can be accessed over the URL http://cluster-bringup:5000/downloads/.

To see all available files, open your browser and navigate to http://cluster-bringup:5000/downloads/.

Create a directory for a specific cable firmware image and copy a binary image file into it. Run:

[root@cluster-bringup ~]# mkdir -p /opt/nvidia/cot/files/linkx/rel-38_100_121/iffu

[root@cluster-bringup ~]# cp /tmp/hercules2.bin /opt/nvidia/cot/files/linkx/rel-38_100_121/iffu

The file can be accessed over the URL http://cluster-bringup:5000/downloads/linkx/rel-38_100_121/iffu/hercules2.bin.

To see all available files, open a browser and navigate to http://cluster-bringup:5000/downloads/.

To see the image file, navigate to http://cluster-bringup:5000/downloads/linkx/rel-38_100_121/iffu/.
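The relationship between a file's location on the cluster bring-up host and its download URL can be sketched as follows. The mapping from /opt/nvidia/cot/files to /downloads/ is inferred from the example above, and `download_url` is a hypothetical helper. The host and port defaults are those of the suggested configuration.

```python
from pathlib import PurePosixPath

# Directory used in the example above. Files below it appear under /downloads/.
FILES_ROOT = PurePosixPath("/opt/nvidia/cot/files")


def download_url(file_path, host="cluster-bringup", port=5000):
    """Translate a path under the files directory into its download URL."""
    # relative_to raises ValueError when file_path is outside FILES_ROOT.
    relative = PurePosixPath(file_path).relative_to(FILES_ROOT)
    return f"http://{host}:{port}/downloads/{relative}"


print(download_url("/opt/nvidia/cot/files/linkx/rel-38_100_121/iffu/hercules2.bin"))
# prints http://cluster-bringup:5000/downloads/linkx/rel-38_100_121/iffu/hercules2.bin
```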