RDG for VMware vSphere 8.0 with NSX 4.x Accelerated by NVIDIA Networking

Created on Dec 12 2022

Scope

This document provides details on the Enhanced Data Path (EDP) mode configuration on a VDS switch, using an NVIDIA network fabric to achieve a high performance data plane.

VMware ESXi, vSphere Cluster, vCenter and NSX installation and configuration are out of the scope of this document.

Abbreviations and Acronyms

| Term | Definition | Term | Definition |
|------|------------|------|------------|
| DPDK | Data Plane Development Kit | PMD | Poll Mode Driver |
| DAC | Direct Attach Copper | SR-IOV | Single Root I/O Virtualization |
| EDP | Enhanced Data Path | RSS | Receive Side Scaling |
| ENS | Enhanced Networking Stack | TEP | Tunnel Endpoint |
| FPO | Flow Processing Offload | VDS | vSphere Distributed Switch |
| MPPS | Million Packets per Second | VM | Virtual Machine |
| NFV | Network Function Virtualization | VF | Virtual Function |
| NIC | Network Interface Card | VNF-C | Virtual Network Function Component |
| NUMA | Non-Uniform Memory Access | vNIC | Virtual Network Interface |

References

How-to: Install NVIDIA Firmware Tools (MFT) on VMware ESXi 6.7/7.0.

How-to: Firmware Update for NVIDIA ConnectX-5/6 Adapter on VMware ESXi 6.5 and Above.

NVIDIA® Data Plane Development Kit (DPDK) | Poll Mode Driver (PMD)

Introduction

This document describes how to configure a VMware vSphere 8.0 cluster and VMware NSX 4.0.1.1 with the NVIDIA ConnectX-6 Dx network adapter. It covers ENS Model 1 and Model 0 for a DPDK application running in an Ubuntu 20.04 virtual machine on top of ESXi 8.0 GA with the native driver.

This guide assumes the following software and drivers are installed:

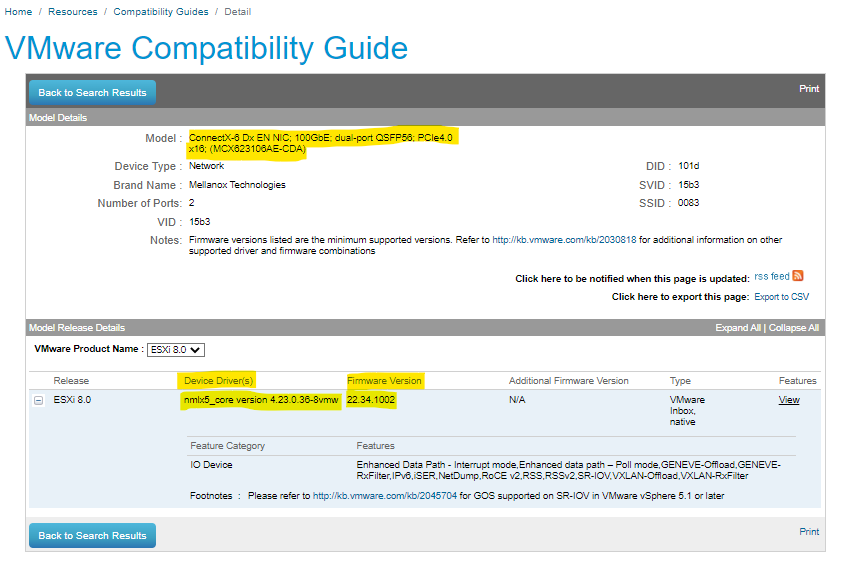

NVIDIA® ConnectX®-6 Dx with ESXi driver version 4.23.0.36 and firmware version 22.34.1002

TRex v2.87 as a packet generator

ENS Model 0

In Model 0, there is no hardware offload. All processing is performed in software (ENS).

Model 0 is supported on NVIDIA ConnectX-4 and newer adapter cards with ESXi version 6.7 and above. It supports the following features:

RSS (from ESXi Version 7.0u2).

Offload of inner and/or outer checksum validation to NVIDIA ConnectX hardware.

ENS Model 1

In ENS Model 1, NIC offloads support partial flow processing, such as packet classification and decapsulation on Rx. Therefore, even when the flow processing is offloaded to the hardware, the VMkernel networking stack must still see the packets and finish the processing on the Rx path, or preprocess them for encapsulation offload.

ENS Model 1 works with GENEVE but not with VXLAN.

EDP Modes

Interrupt mode: In this mode, the network interface card (NIC) driver receives packets through interrupt signals. Although this mode has relatively high latency, it does not consume significant CPU resources.

Poll mode: In this mode, the NIC driver constantly checks the NIC hardware for incoming packets. This mode offers low latency reception of packets but requires dedicated CPU cores to continuously poll for incoming packets.

Model 1 supports both Poll mode and Interrupt mode.

Solution Architecture

Key Components and Technologies

NVIDIA® Cumulus® Linux is the industry's most innovative open network operating system that allows you to automate, customize, and scale your data center network like no other.

NVIDIA Spectrum Ethernet Switches

Flexible form-factors with 16 to 128 physical ports, supporting 1GbE through 400GbE speeds.

Based on a ground-breaking silicon technology optimized for performance and scalability, NVIDIA Spectrum switches are ideal for building high-performance, cost-effective, and efficient Cloud Data Center Networks, Ethernet Storage Fabric, and Deep Learning Interconnects.

NVIDIA combines the benefits of NVIDIA Spectrum™ switches, based on an industry-leading application-specific integrated circuit (ASIC) technology, with a wide variety of modern network operating system choices, including NVIDIA Cumulus® Linux, SONiC and NVIDIA Onyx®.

NVIDIA ConnectX SmartNICs

10/25/40/50/100/200 and 400G Ethernet Network Adapters

The industry-leading NVIDIA® ConnectX® family of smart network interface cards (SmartNICs) offer advanced hardware offloads and accelerations.

NVIDIA Ethernet adapters enable the highest ROI and lowest Total Cost of Ownership for hyperscale, public and private clouds, storage, machine learning, AI, big data, and telco platforms.

The NVIDIA® LinkX® product family of cables and transceivers provides the industry’s most complete line of 10, 25, 40, 50, 100, 200, and 400GbE Ethernet products and 100, 200 and 400Gb/s InfiniBand products for Cloud, HPC, hyperscale, Enterprise, telco, storage, and artificial intelligence data center applications.

NSX is a multi-hypervisor capable networking and security platform. It is a highly extensible platform that can address endpoint heterogeneity including containers, Kubernetes support, public clouds and cross cloud services with AWS, and other hypervisors.

DPDK is a set of libraries and drivers that support accelerated software processing for greater throughput and scalability.

DPDK enables higher levels of packet processing throughput than what is achievable using the standard Linux kernel network stack.

This optimized library gives application developers the ability to address challenging data plane processing needs that are typically found in Telecom workloads.

Enhanced Networking Stack (ENS, also referred to as Enhanced Data Path) is a networking stack mode which provides superior network performance when configured and enabled. It is primarily utilized in NFV workloads, which require the performance benefits this mode provides.

ENS utilizes the DPDK Poll Mode driver model to significantly improve packet rate and latency for small message sizes.

Enhanced Datapath - Standard: This mode is a variant of the Enhanced Data Path mode. It is available only on ESXi hypervisor 7.0 and later versions. Please consult your account representative for applicability.

Enhanced Datapath - Performance: This is the Enhanced Data Path switch mode for ESXi host transport node. This mode provides accelerated networking performance. It requires nodes to use VMXNET3 vNIC enabled network cards. It is not supported on NSX Edge nodes and Public Gateways. The supported hypervisor is ESXi. It is recommended to run ESXi v6.7 U2 and later versions.

TRex - Realistic Traffic Generator

TRex is an open-source, low-cost, stateful, and stateless traffic generator fueled by DPDK. It generates L4-7 traffic based on pre-processing and smart replay of L7 traffic templates. TRex amplifies both client and server side traffic and can scale up to 200Gb/sec with one UCS.

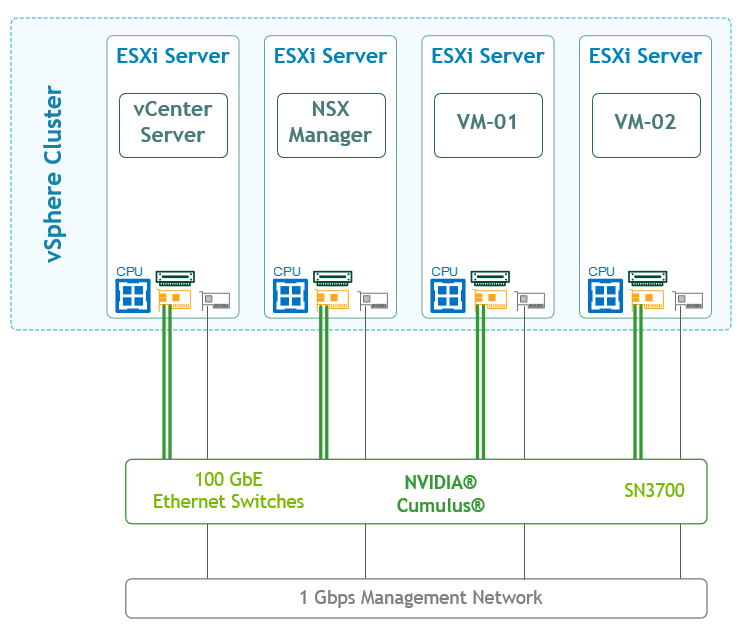

Logical Design

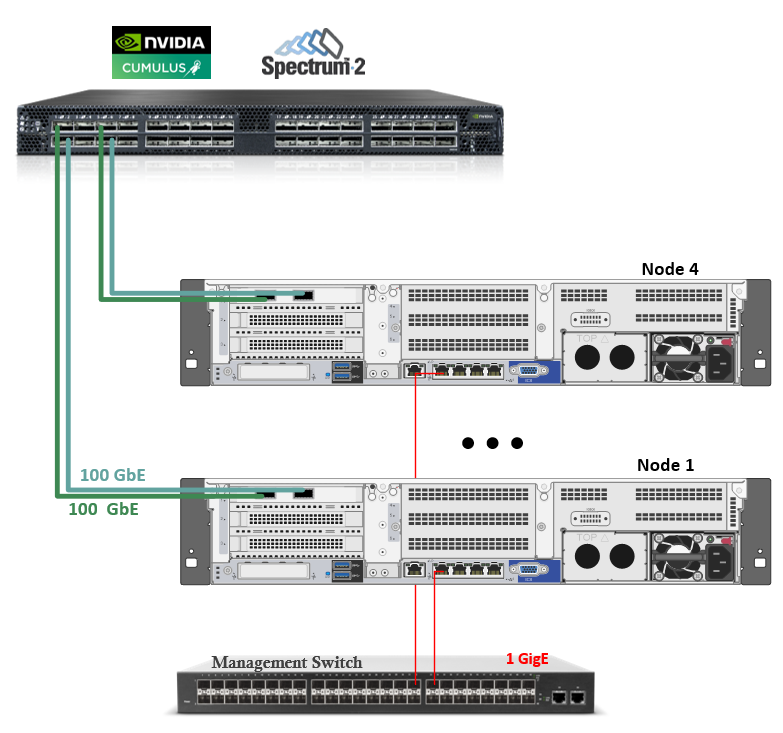

The setup used here includes 4 ESXi servers connected to one NVIDIA® Spectrum®-2 SN3000 Ethernet switch.

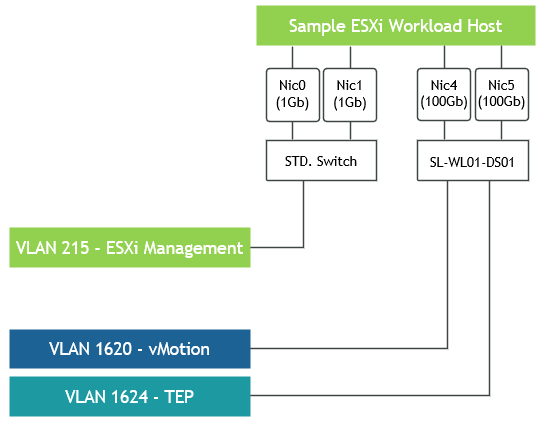

Host Network Design

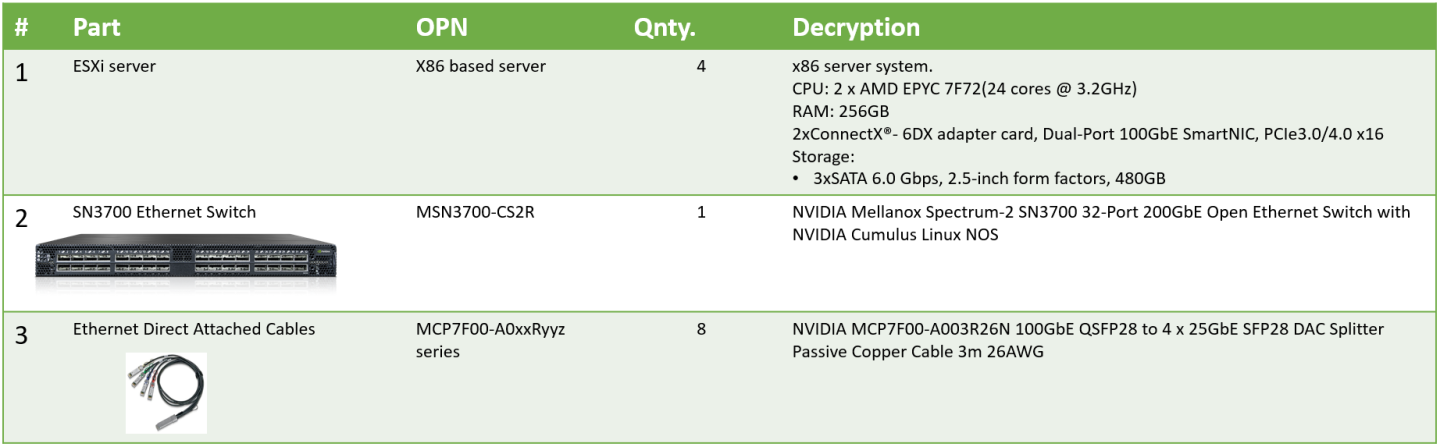

Bill of Materials

vSphere Cluster

Deployment and Configuration

Wiring

Setup Configuration

This document does not cover highly available VMware vSphere cluster deployment.

Before starting the configuration process, make sure you are familiar with VMware vSphere, vCenter and NSX deployment and management procedures.

This guide does not cover the required vSphere and vCenter installation and configuration, as these are often based on customer's requirements.

The installation process requires administrator privileges on the target machine.

In addition, the following components are required:

1. ESXi server platform with NVIDIA® ConnectX®-6 Dx network adapter with inbox ESXi driver and firmware version 22.34.1002.

2. NVIDIA® Spectrum®-2 SN3000 Ethernet switch.

3. VMware vSphere 8.0 Cluster installed and configured.

4. VMware vCenter 8.0a installed and configured.

5. VMware NSX 4.0.1.1.

Network

Prerequisites

Switch OS

NVIDIA Cumulus Linux 5.1.

Management Network

DHCP and DNS services are required.

The components installation and configuration are not covered in this guide.

Network Switch Configuration

Switch Configuration

Run the following commands on both Leaf switches in the vSphere Cluster to configure the VLANs.

Switch console

nv set interface swp1-32 bridge domain br_default

nv set bridge domain br_default vlan 215,1620,1624

nv set bridge domain br_default untagged 215

nv config apply

nv config save
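The command sequence above follows a fixed pattern, so it can be generated from the port range and VLAN list when several switches need the same settings. The following is a minimal Python sketch under that assumption; the function name `nvue_vlan_commands` and its parameters are illustrative and not part of NVUE. It only builds the command strings shown above and does not connect to a switch.

```python
def nvue_vlan_commands(ports, vlans, untagged, bridge="br_default"):
    """Build the NVUE command sequence for one bridge domain.

    ports    -- interface range string, e.g. "swp1-32"
    vlans    -- iterable of VLAN IDs carried by the bridge
    untagged -- VLAN ID used for untagged traffic
    """
    vlans = list(vlans)
    # Assumption of this sketch: the untagged VLAN is expected to be
    # one of the VLANs carried by the bridge, as in the commands above.
    if untagged not in vlans:
        raise ValueError(f"untagged VLAN {untagged} is not in {vlans}")
    vlan_list = ",".join(str(v) for v in vlans)
    return [
        f"nv set interface {ports} bridge domain {bridge}",
        f"nv set bridge domain {bridge} vlan {vlan_list}",
        f"nv set bridge domain {bridge} untagged {untagged}",
        "nv config apply",
        "nv config save",
    ]


# Values taken from the switch configuration in this guide.
commands = nvue_vlan_commands("swp1-32", [215, 1620, 1624], untagged=215)
print("\n".join(commands))
```

The printed lines can be pasted into the switch console or fed to whatever automation tool is already in use.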

Hosts Preparation

Hosts in the vSphere Cluster must be configured before a data plane intensive workload can be attached to the VDS Enhanced switch.

To prepare the host for setup:

Physical server configuration

All ESXi servers must have the same PCIe placement for the NIC and expose the same interface name.

vSphere cluster with minimum 3 VMware vSphere ESXi 8.0 hosts

vCenter 8.0 server

Warning: Installation of vCenter and ESXi hosts, and configuration of the vSphere cluster, are beyond the scope of this document.

Installer privileges: The installation requires administrator privileges on the target machine

Connection to ESXi host management interface

High speed network connectivity

Verify that NTP is configured in your environment, and that it works properly.

Make sure to:

Disable Virtualization (SR-IOV) in the BIOS (prerequisites).

Disable SR-IOV in the firmware and in the MLNX_OFED Driver. See the following document for further information.

To ensure optimal performance:

Configure BIOS for optimal performance.

Enable CPU hyperthreading.

Enable Turbo Boost.

Disable NUMA node interleaving.

Power Management: Set this setting to "High" or "Maximum Performance" (the wording depends on the vendor) to ensure that the CPUs always run at least at the base frequency and use the shallowest idle states. The VMworld 2019 “Extreme Performance Series: Performance Best Practices (HBI2526BE)” presentation is an excellent source of information about the Power Management technology. Its major conclusion, however, does not apply to this workload, which may not benefit from higher maximum Turbo Boost frequencies and could be at greater risk of jitter.

Enable Hyperthreading on the ESXi server:

Enable this setting on systems that support it. Hyperthreading allows a single processor core to run two independent threads simultaneously. On processors with hyperthreading, each core can have two logical threads that share the core's resources, such as memory caches and functional units. BIOS providers might refer to the hyperthreaded core as a ‘Logical Processor’.

Turbo Boost: Enable this setting in the BIOS. It allows the processor to operate faster than the rated frequency for peak loads. For more information about Turbo Boost, see Frequently Asked Questions on the Intel Turbo Boost Technology page on Intel's website.

NUMA Node Interleaving: Ensure that this setting is disabled. With the NUMA node interleaving setting enabled, the hypervisor sees the available memory as one contiguous area. Therefore, the ability to place memory pages local to the CPU is lost, and the hypervisor sees all resources on the host as local.
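The BIOS recommendations above can be kept as a small checklist and compared against what is actually set on each server. The sketch below is one possible way to do that in Python; the setting keys and the `host` record are hypothetical names chosen for illustration, and the values have to be collected manually or with a vendor tool, since this guide does not describe an API for reading them.

```python
# Recommended values, taken from the checklist above.
RECOMMENDED_BIOS = {
    "sriov": "disabled",
    "hyperthreading": "enabled",
    "turbo_boost": "enabled",
    "numa_node_interleaving": "disabled",
    "power_management": "maximum performance",
}


def bios_deviations(actual):
    """Return {setting: (actual, recommended)} for every mismatch."""
    return {
        key: (actual.get(key), wanted)
        for key, wanted in RECOMMENDED_BIOS.items()
        if actual.get(key) != wanted
    }


# Hypothetical record for one server, filled in by hand.
host = {
    "sriov": "disabled",
    "hyperthreading": "enabled",
    "turbo_boost": "enabled",
    "numa_node_interleaving": "enabled",
    "power_management": "maximum performance",
}
print(bios_deviations(host))
```

An empty result means the server matches the recommendations listed in this section.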

Network Adapter Compatibility

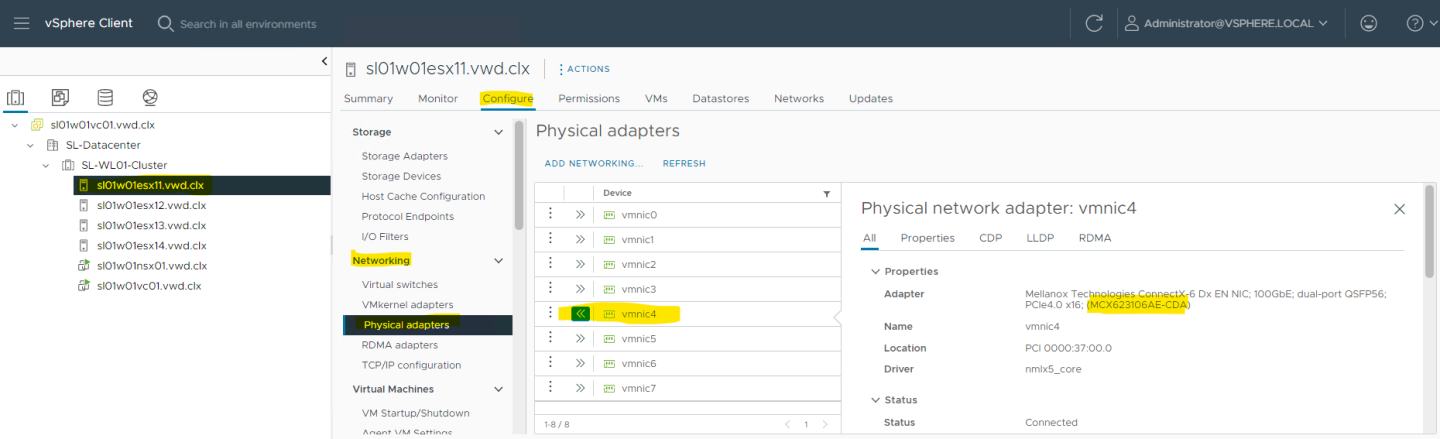

To check the NIC's model, go to the vSphere Client, and navigate to:

ESXi server > "Configure" > "Networking" > "Physical Adapters".

Look for the relevant device on the right pane.

For example, the image below shows the MCX623106AE-CDA adapter card OPN.

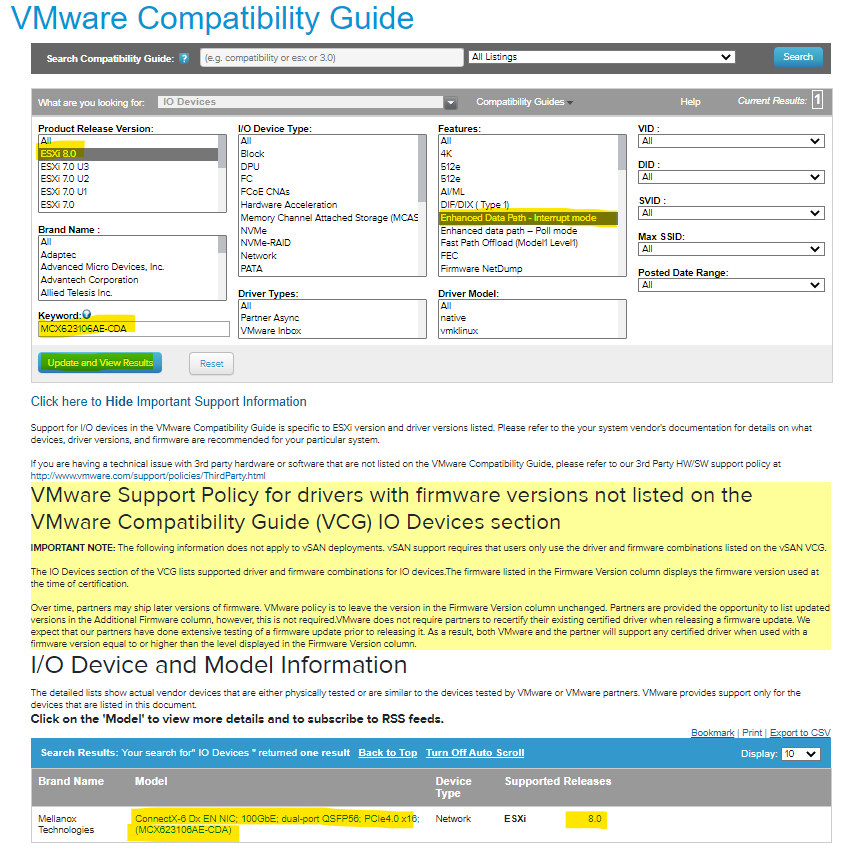

To check if the NIC supports Enhanced Data Path, go to the VMware Compatibility Guide, and follow the below steps:

Go to "Product Release Version", and select the ESXi version.

Go to "Features", and select "Enhanced Data Path - Interrupt Mode" and "Enhanced Data Path – Poll Mode".

Under Keyword, insert the NIC OPN.

Look for your NIC OPN in the list under "I/O Device and Model Information".

Click the model link to check the driver and firmware versions.

Network Adapter Firmware update

To update the firmware to version 22.34.1002, please refer to the following link: HowTo Install NVIDIA Firmware Tools (MFT) on VMware ESXi 6.7 and 7.0 - Solutions - NVIDIA Networking Docs.

Hosts Network Configuration

Prerequisites

vSphere cluster (SL-WL01-Cluster) with hosts running VMware vSphere ESXi 8.0 or above.

vCenter 8.0a or above.

The installation requires administrator privileges on the target machine.

Connection to ESXi host management interface.

High speed network connectivity.

This table provides details on the ESXi servers and switches in the SL-WL01-Cluster cluster, system names and their network configurations.

| Server | Server Name | High-Speed Ethernet Network | Management Network 192.168.1.0/24 |
|--------|-------------|-----------------------------|-----------------------------------|
| ESXi-01 | sl01w01esx11 | vmk1: 192.168.22.111 (vMotion) | vmk0: 192.168.1.111 From DHCP (reserved) |
| ESXi-02 | sl01w01esx12 | vmk1: 192.168.22.112 (vMotion) | vmk0: 192.168.1.112 From DHCP (reserved) |
| ESXi-03 | sl01w01esx13 | vmk1: 192.168.22.113 (vMotion) | vmk0: 192.168.1.113 From DHCP (reserved) |
| ESXi-04 | sl01w01esx14 | vmk1: 192.168.22.114 (vMotion) | vmk0: 192.168.1.114 From DHCP (reserved) |
| Leaf-01 | clx-swx-035 | - | 10.7.215.37 |
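Before moving on, it can help to confirm that the addresses listed above are consistent with the stated management network. The following Python sketch uses only the standard `ipaddress` module and the values from the table; the helper names `outside_network` and `duplicates` are illustrative.

```python
import ipaddress

MGMT_NETWORK = ipaddress.ip_network("192.168.1.0/24")

# vmk0 addresses of the ESXi hosts, as listed in the table above.
ESXI_MGMT = {
    "sl01w01esx11": "192.168.1.111",
    "sl01w01esx12": "192.168.1.112",
    "sl01w01esx13": "192.168.1.113",
    "sl01w01esx14": "192.168.1.114",
}
# Management address of the leaf switch, as listed in the table above.
SWITCH_MGMT = {"clx-swx-035": "10.7.215.37"}


def outside_network(addresses, network):
    """Return the sorted names whose address is not inside the network."""
    return sorted(
        name for name, ip in addresses.items()
        if ipaddress.ip_address(ip) not in network
    )


def duplicates(addresses):
    """Return the sorted IP addresses assigned to more than one name."""
    seen, repeated = set(), set()
    for ip in addresses.values():
        (repeated if ip in seen else seen).add(ip)
    return sorted(repeated)


print("ESXi hosts outside the management network:", outside_network(ESXI_MGMT, MGMT_NETWORK))
print("Switches outside the management network:", outside_network(SWITCH_MGMT, MGMT_NETWORK))
print("Duplicate ESXi management addresses:", duplicates(ESXI_MGMT))
```

Note that the leaf switch address is reported as outside 192.168.1.0/24, which matches the table: the switch is managed on a different network than the ESXi hosts.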

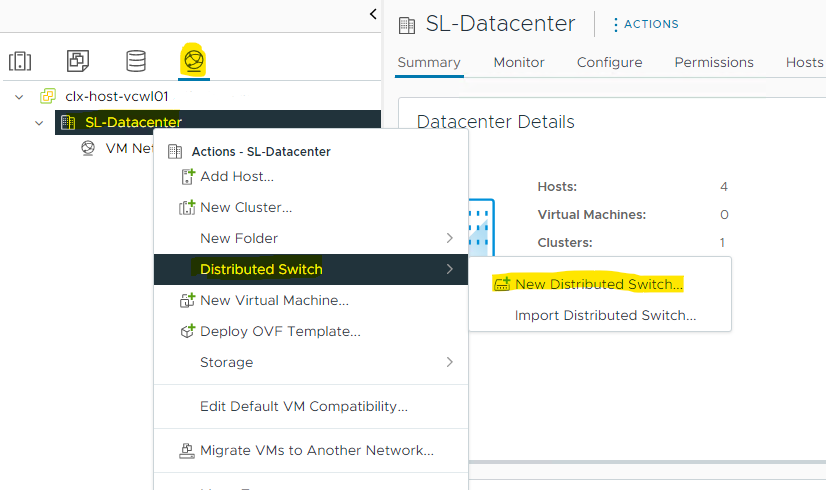

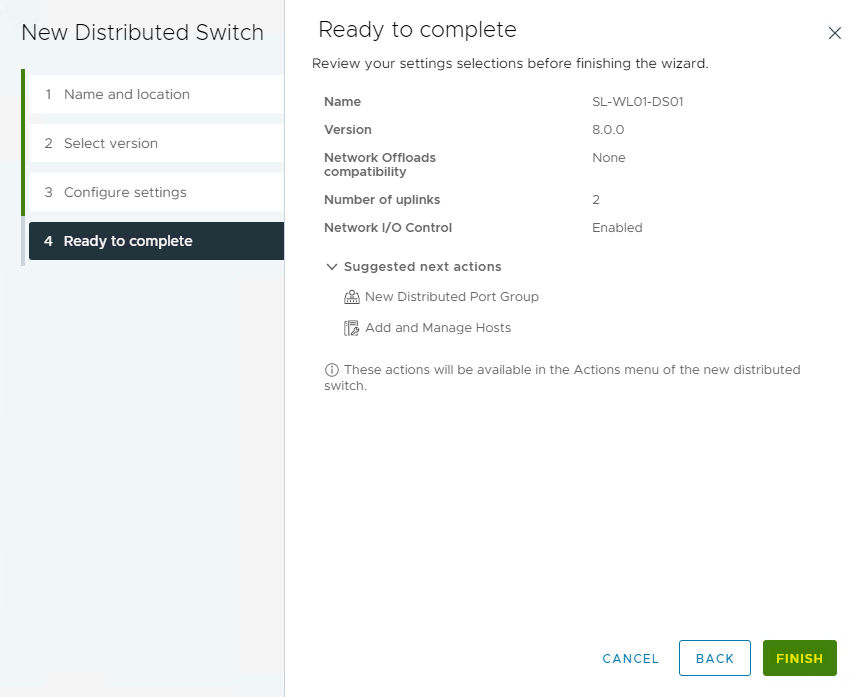

Creating a Distributed Switch for DPDK Traffic

To create a new vDS:

Launch the vSphere Web Client, and connect to a vCenter Server instance.

Create a distributed switch for DPDK traffic.

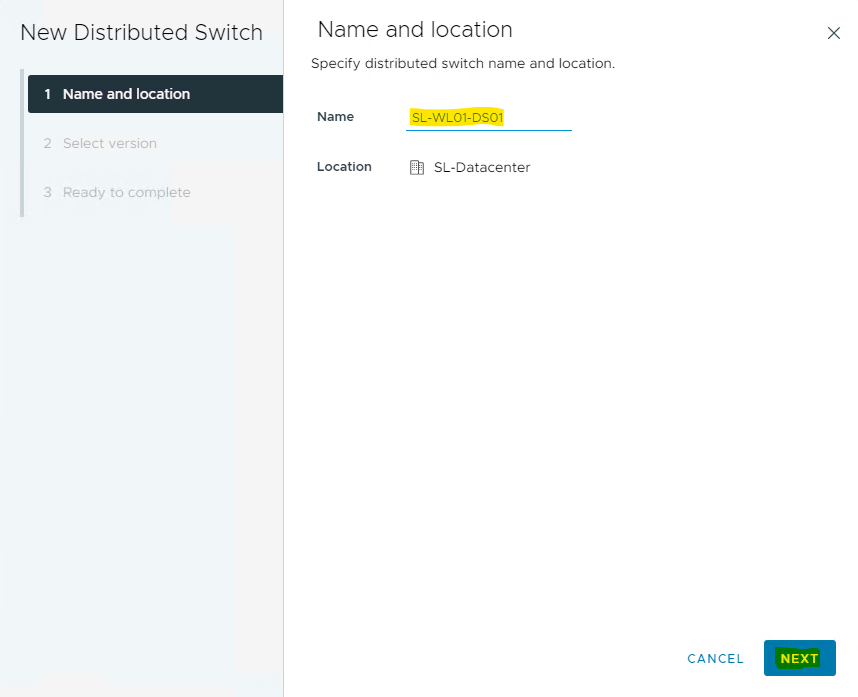

Provide a name for the new distributed switch, and select the location in which you would like to store the new vDS within the vCenter inventory (a data center object or a folder).

Click NEXT.

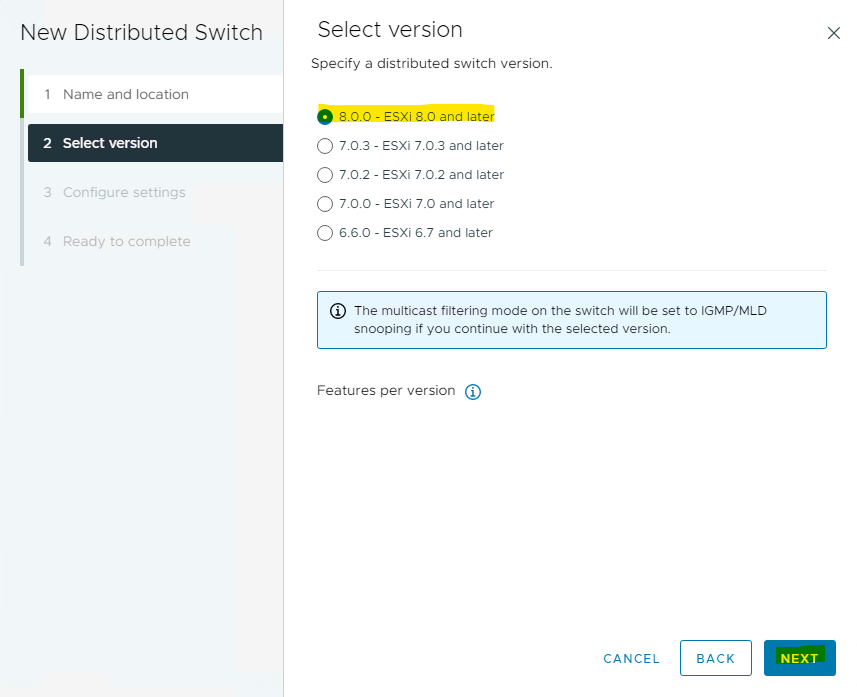

Select the version of the vDS to create.

Click NEXT.

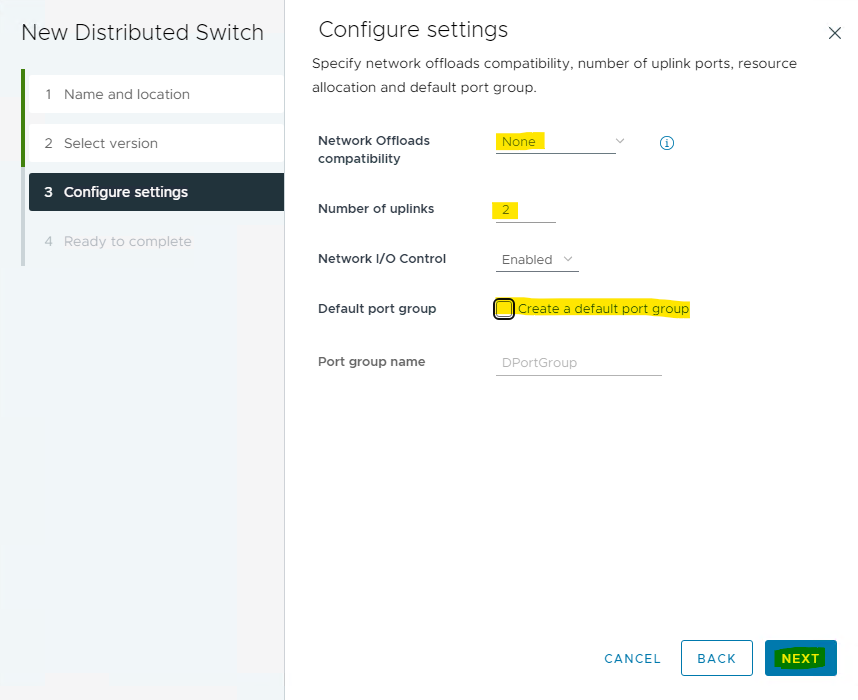

Specify the Network Offloads compatibility as None, and the number of uplink ports as 2. Uncheck the Create a default port group box, and enter a name for that group.

Click NEXT.

Click Finish.

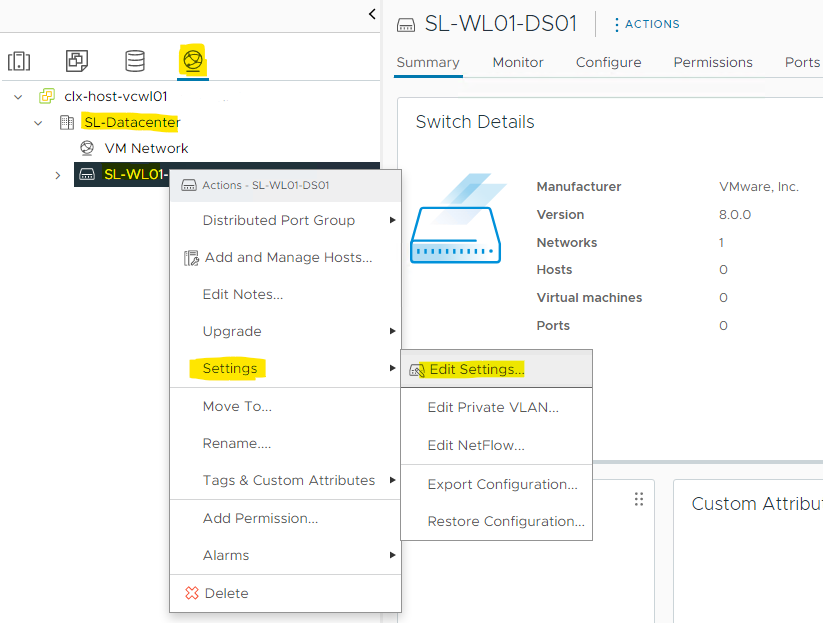

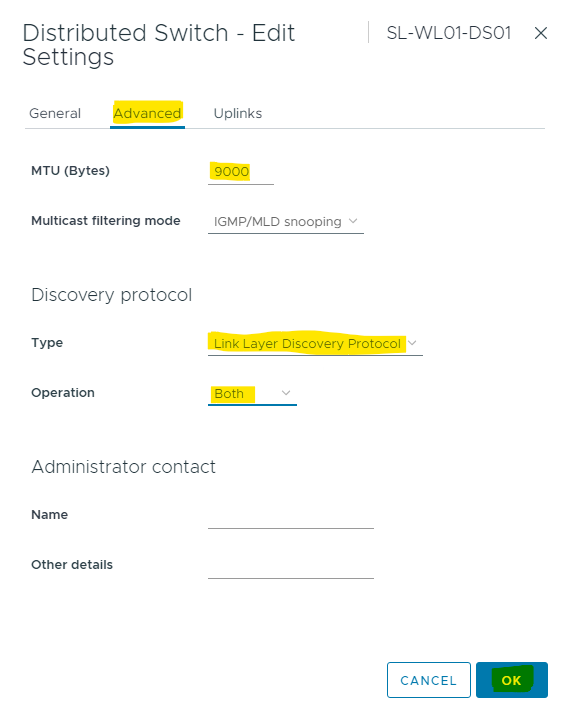

Set the MTU for the newly created distributed switch.

Right-click the new distributed switch in the list of objects, and select Settings → Edit Settings... from the Actions menu.

In the Edit Settings dialog box of the distributed switch, set the MTU to 9000, Discovery Protocol to Link Layer Discovery Protocol, and Operation to Both.

Click OK.

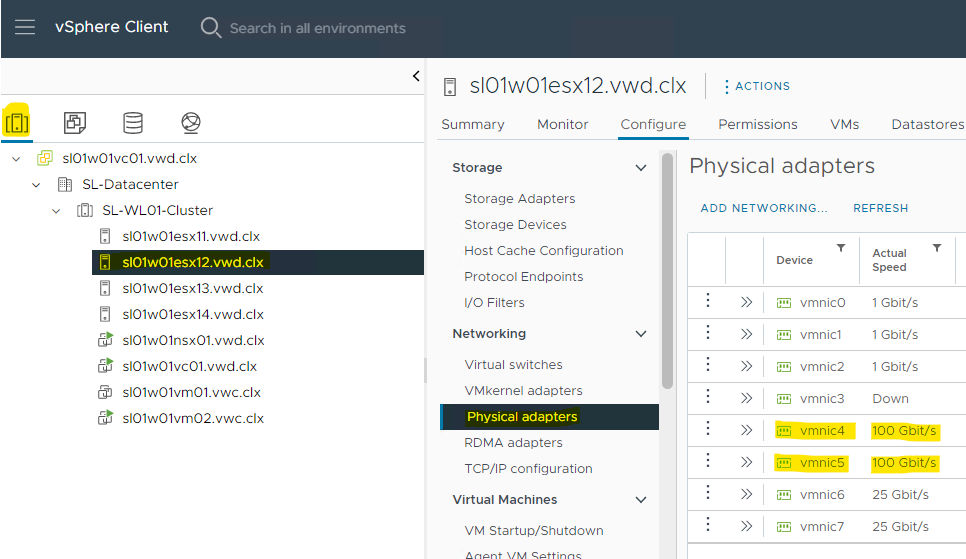

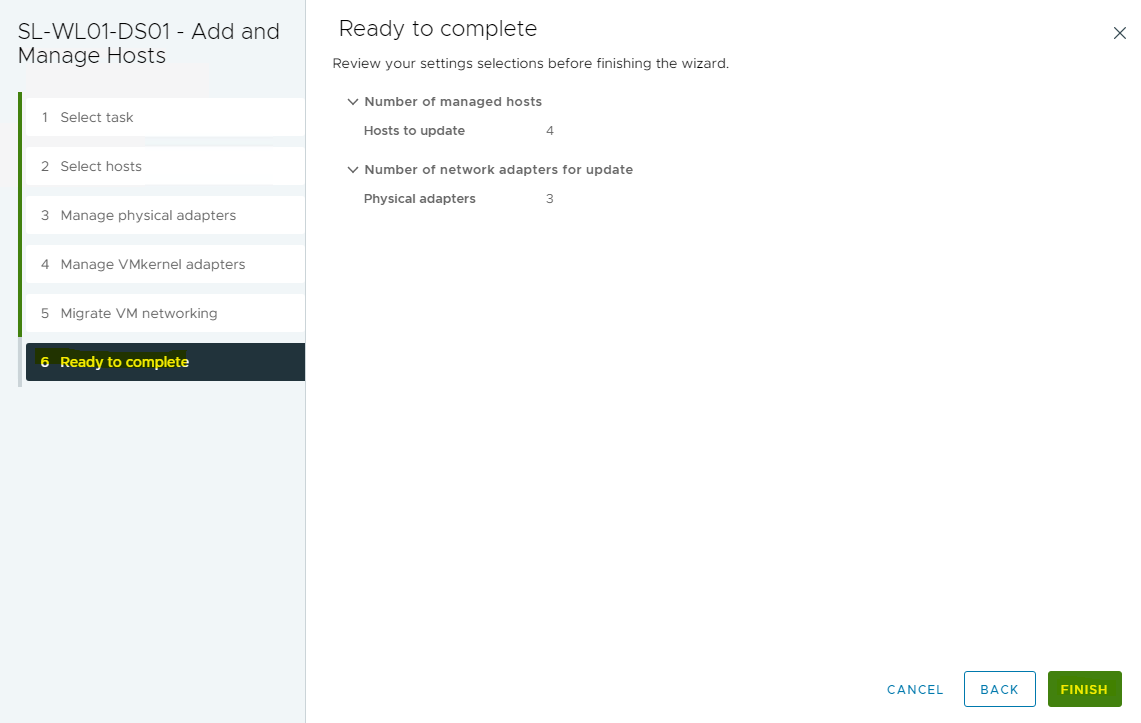

Adding Hosts to a vDS

To add an ESXi host to an existing vDS:

Launch the vSphere Web Client, and connect to a vCenter Server instance.

Navigate to the list of Hosts in the SL MGMT cluster, and select the ESXi host.

Select Configure → Networking → Physical adapters.

Check the network ports that you are going to use. In this case, vmnic4 and vmnic5 are used.

Navigate to the list of distributed switches.

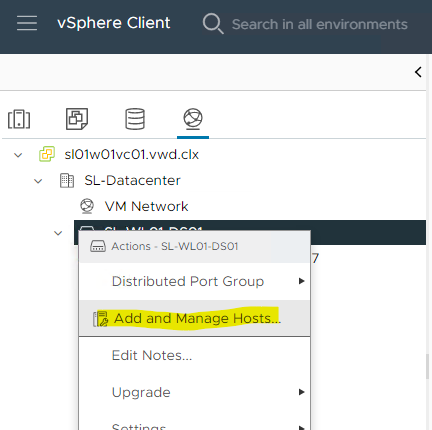

Right-click on the new distributed switch in the list of objects, and select Add and Manage Hosts from the Actions menu.

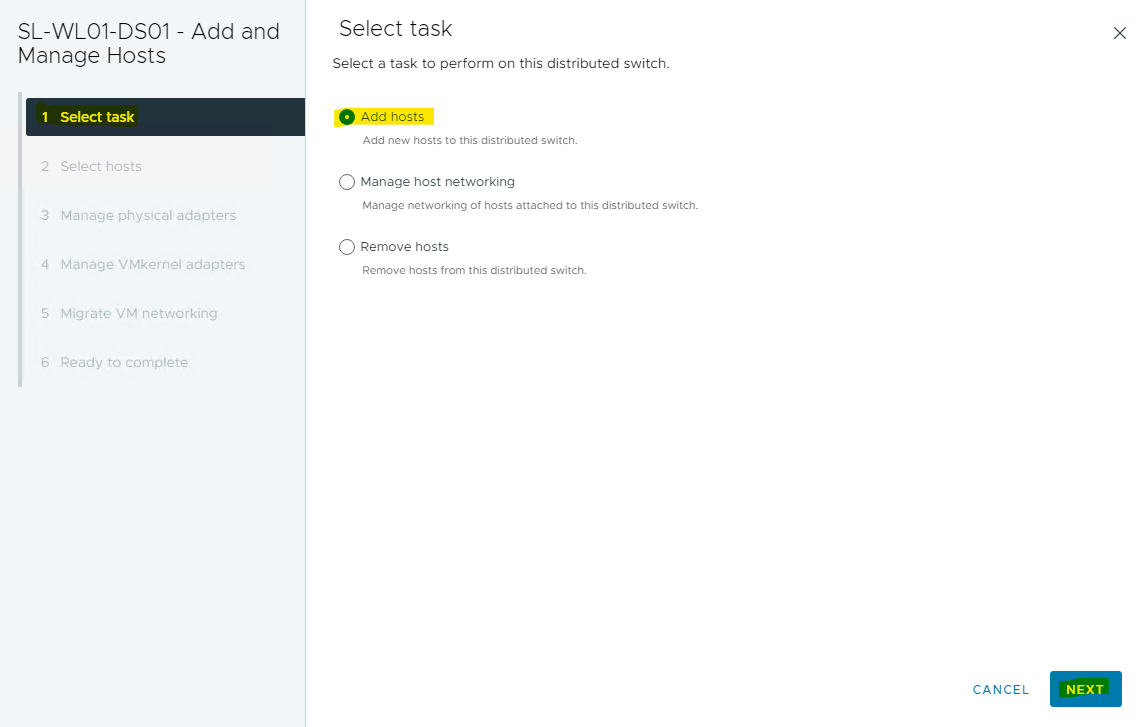

Select the Add hosts button, and click NEXT.

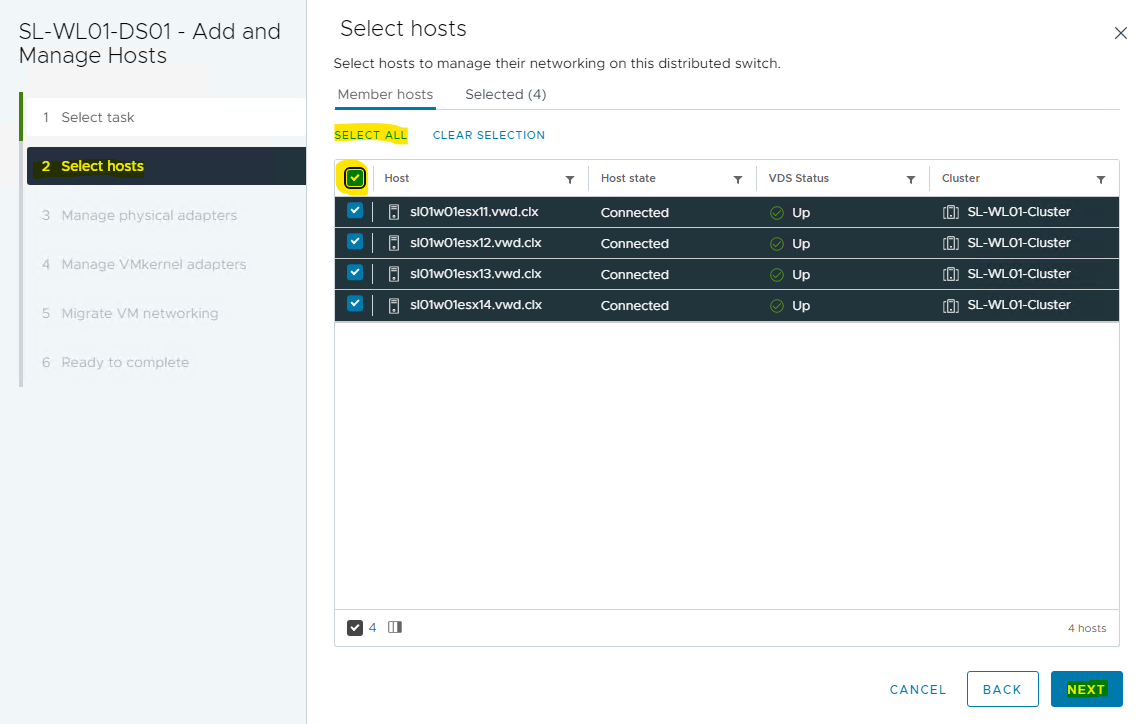

From the list of the new hosts, check the boxes with the names of each ESXi host you would like to add to the VDS.

Click NEXT.

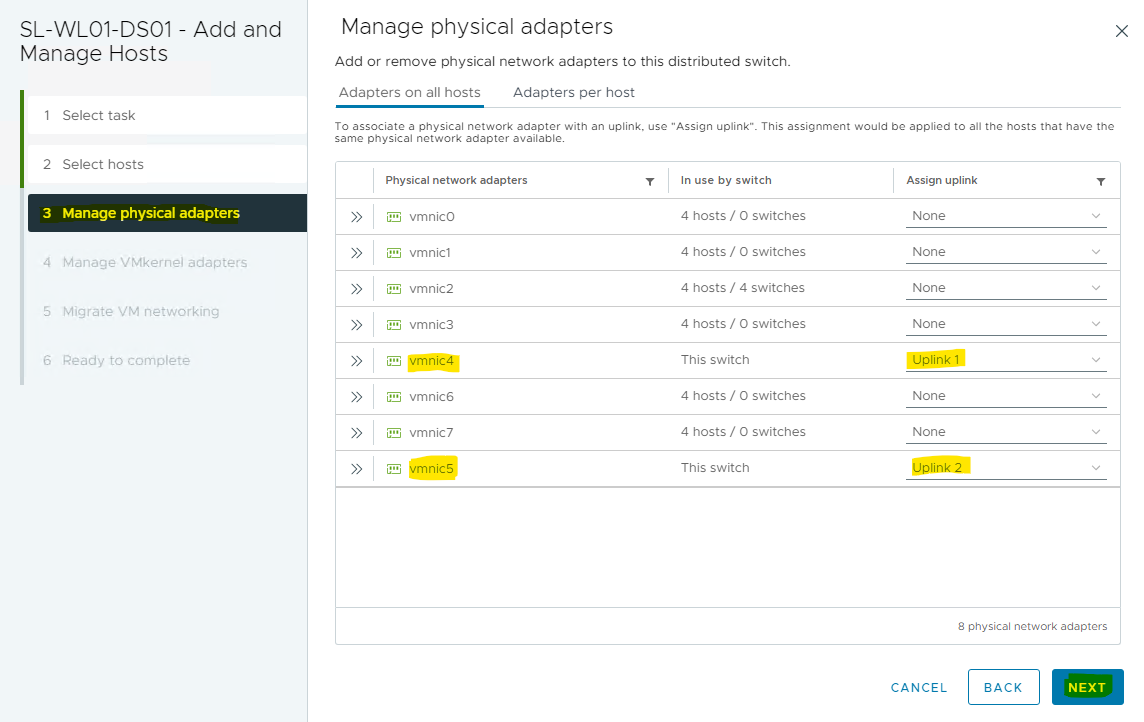

In the next Manage physical adapters menu, click on Adapters on all hosts and configure the adapters (in this case - vmnic4 and vmnic5) in an ESXi host as Uplink 1 and Uplink 2 for the VDS.

In the next Manage VMkernel adapters and Migrate VM networking menus, click NEXT to continue.

Click FINISH.

Adding a Port Group and a VMkernel Network for vMotion traffic is out of the scope of this document.

VMware NSX Manager Installation and Configuration

Prerequisites

Below are the prerequisites for deploying an NSX Manager.

Necessary resources to deploy NSX-Manager appliance on vCenter.

Port group for Management network. It is preferable to keep on the same network as vCenter.

Allocate an IP address for the NSX Manager. Allocate 4 IP addresses if you plan to grow to a 3-node cluster.

In this guide we use only 1 NSX Manager.

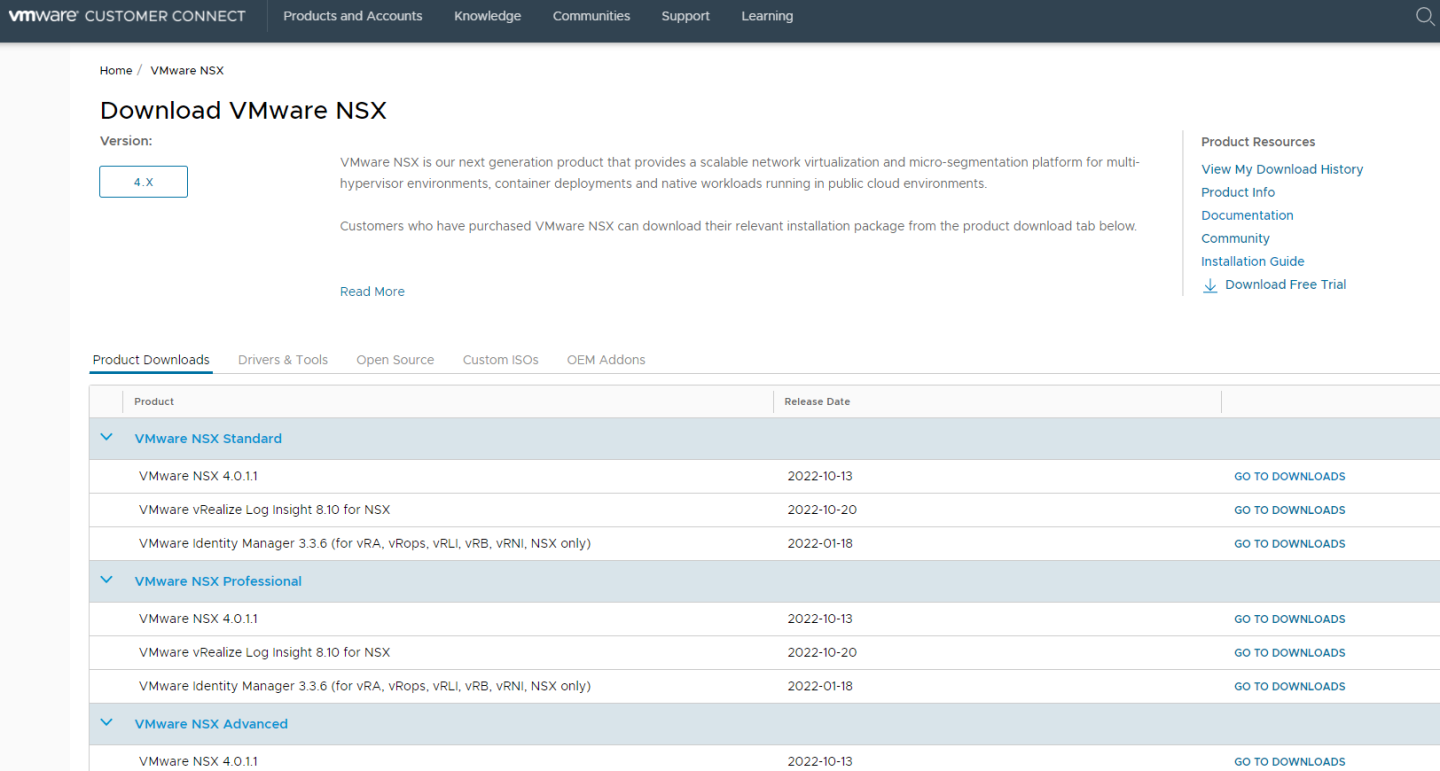

NSX licenses and media OVA file downloaded from the VMware website.

Create a DNS A record for the NSX-Manager Hostnames.

Network Latency Requirements

The maximum network latency between NSX Managers in an NSX Manager cluster is 10ms.

The maximum network latency between NSX Managers and Transport Nodes is 150ms.

Storage Requirements

The disk access latency must be under 10ms.

It is recommended that NSX Managers are placed in a shared storage.

Storage should be highly available to avoid an outage causing all NSX Manager file systems to be set into a read-only mode upon a storage failure event.

Please consult relevant documentation for your storage technology on how to optimally design a highly available storage solution.
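The latency requirements above can be written down as thresholds and checked against measured values. The sketch below is a minimal Python illustration; the measurement names and the `sample` values are hypothetical, and how the latencies are measured is left to the tooling available in your environment.

```python
# (limit in milliseconds, inclusive?) for each requirement stated above.
LIMITS = {
    "manager_to_manager": (10.0, True),          # maximum is 10 ms
    "manager_to_transport_node": (150.0, True),  # maximum is 150 ms
    "disk_access": (10.0, False),                # must stay under 10 ms
}


def latency_violations(measured_ms):
    """Return the names of the measurements that break their limit."""
    failed = []
    for name, (limit, inclusive) in LIMITS.items():
        value = measured_ms[name]
        within = value <= limit if inclusive else value < limit
        if not within:
            failed.append(name)
    return failed


# Hypothetical measurements in milliseconds.
sample = {
    "manager_to_manager": 2.5,
    "manager_to_transport_node": 40.0,
    "disk_access": 10.0,
}
print(latency_violations(sample))
```

In this sample only the disk access latency is flagged, because it reaches 10 ms instead of staying below it.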

Deployment

Download the NSX manager OVA from my.vmware.com.

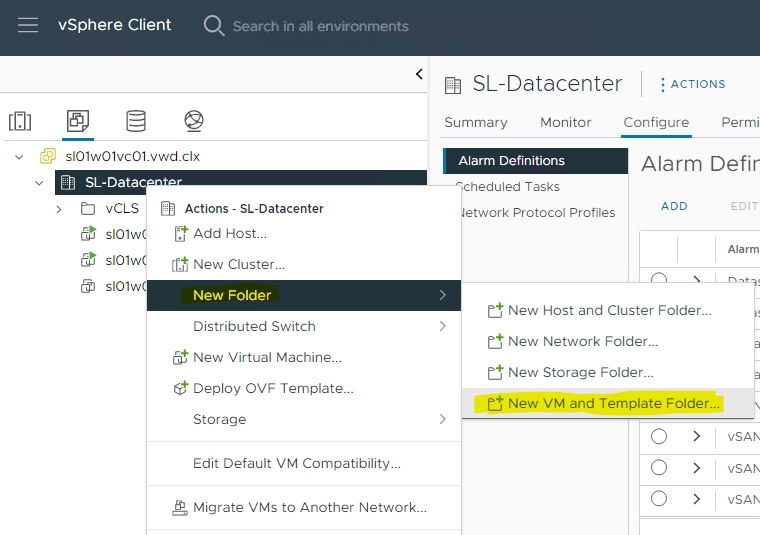

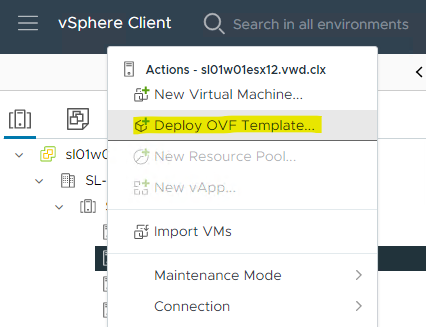

Log in to the vCenter.

Create a new VM Folder.

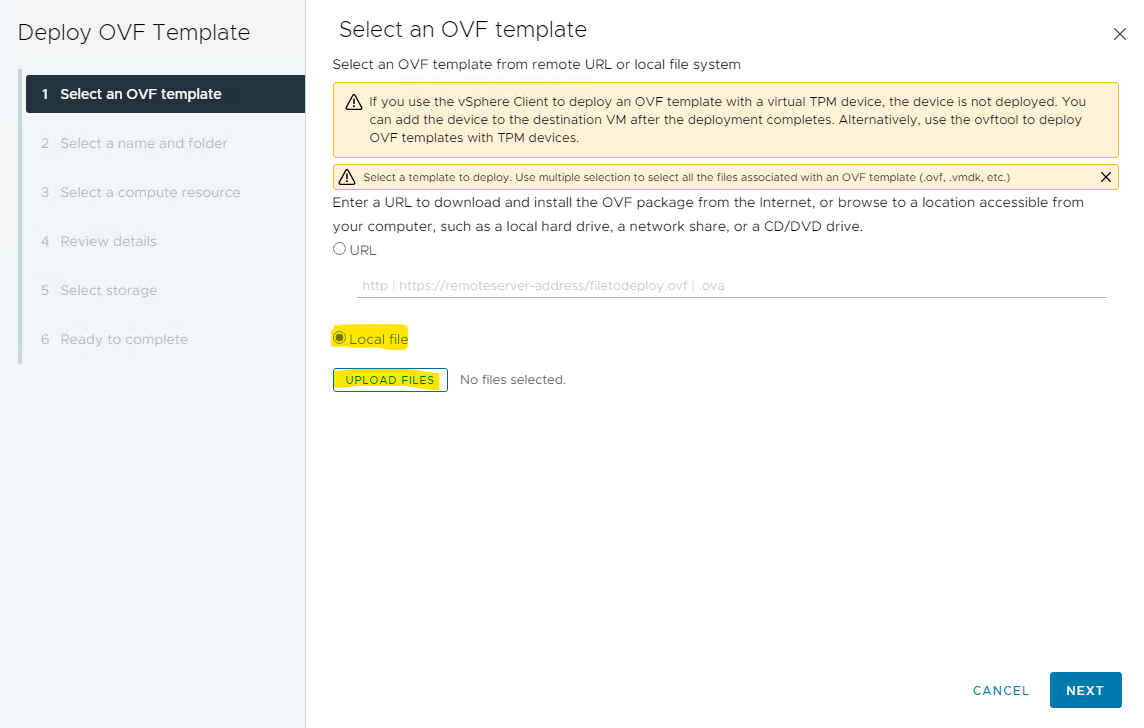

Click VMs and Templates → right-click the appropriate folder (where you would like to deploy the NSX Manager) → click Deploy OVF Template.

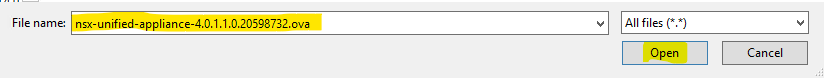

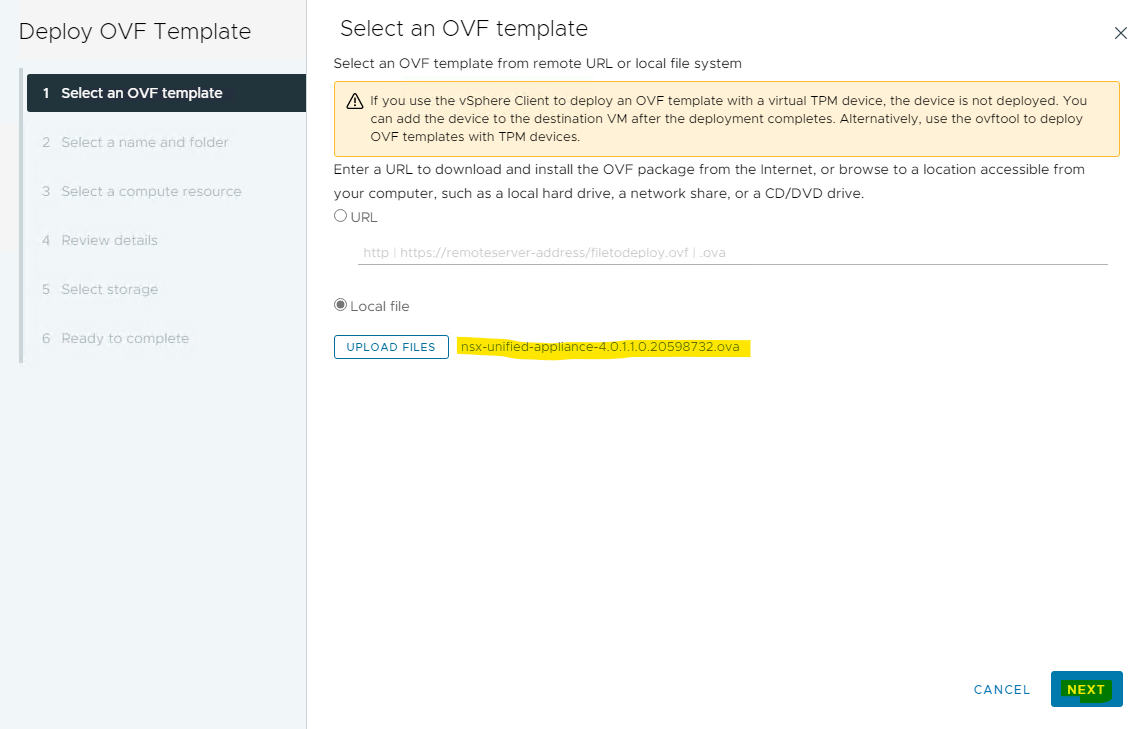

Select Local file, and click on UPLOAD FILES.

Browse to the OVA file for the NSX unified appliance. Click on Open and NEXT.

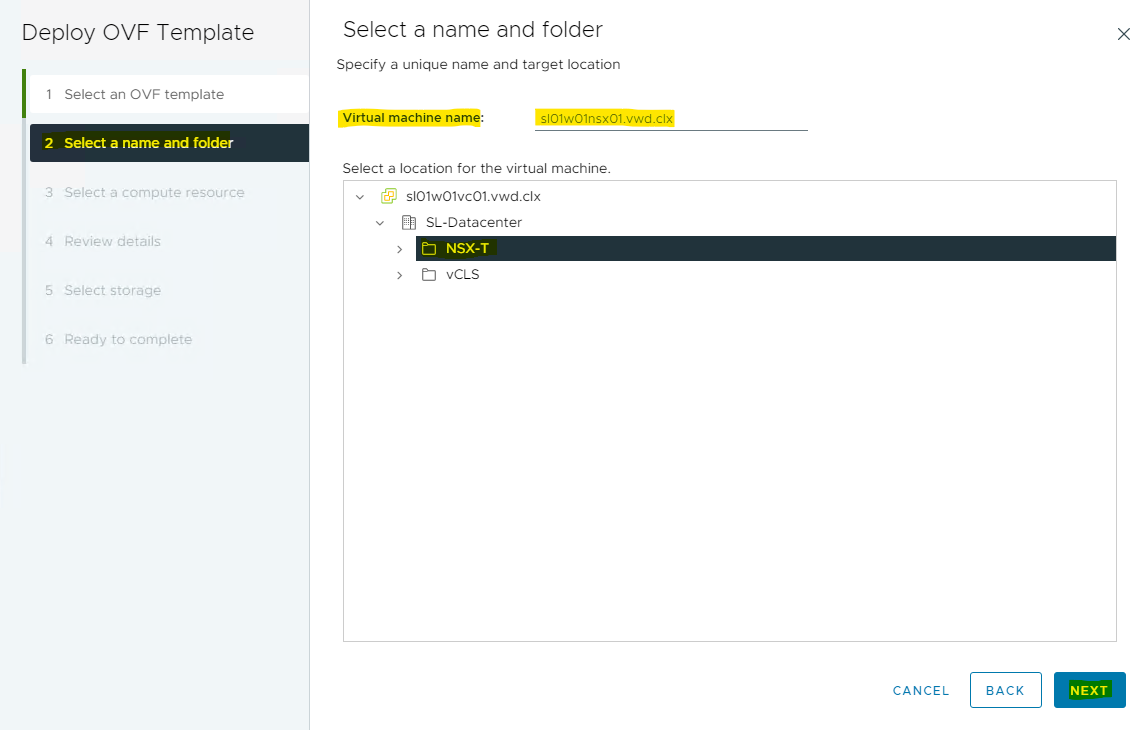

Assign a VM name, and select the folder in which you wish to place the NSX manager. Click NEXT.

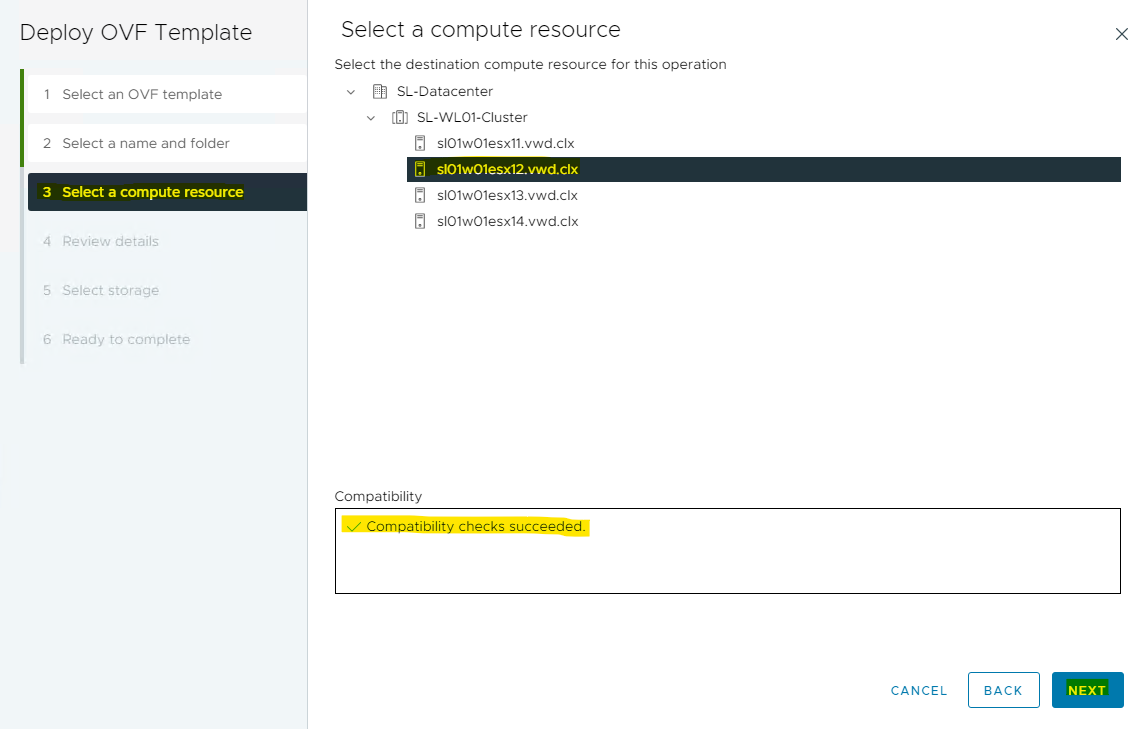

Select the compute resource where you wish to place your NSX manager, i.e. the cluster, resource pool or the host. Verify that the compatibility check succeeds, and click NEXT.

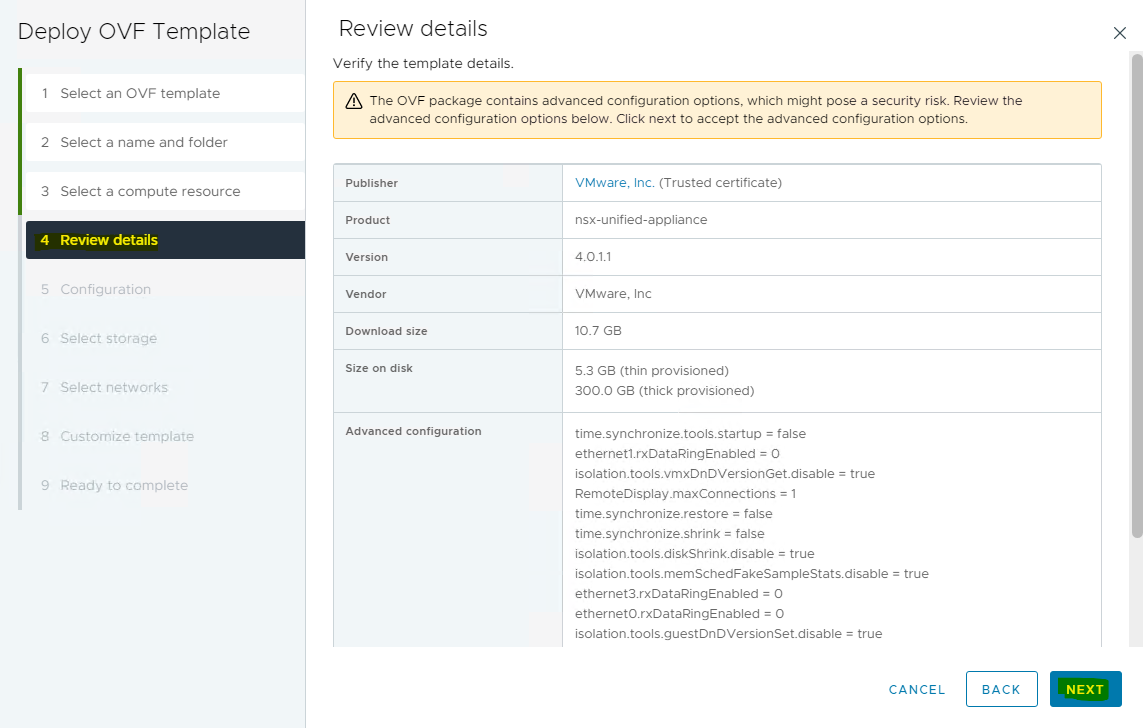

Review the details, and click NEXT.

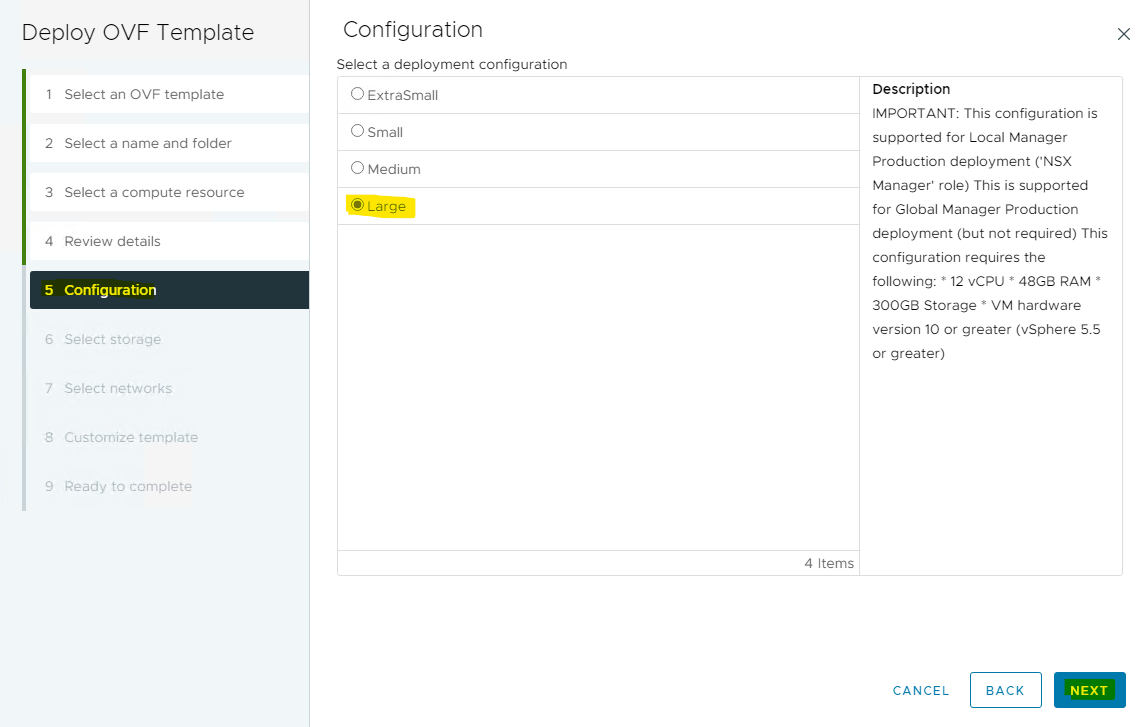

Select deployment size (Medium), and click NEXT.

Warning: When selecting "Small" as the deployment configuration, some of the services do not work. Always use "Medium" or "Large".

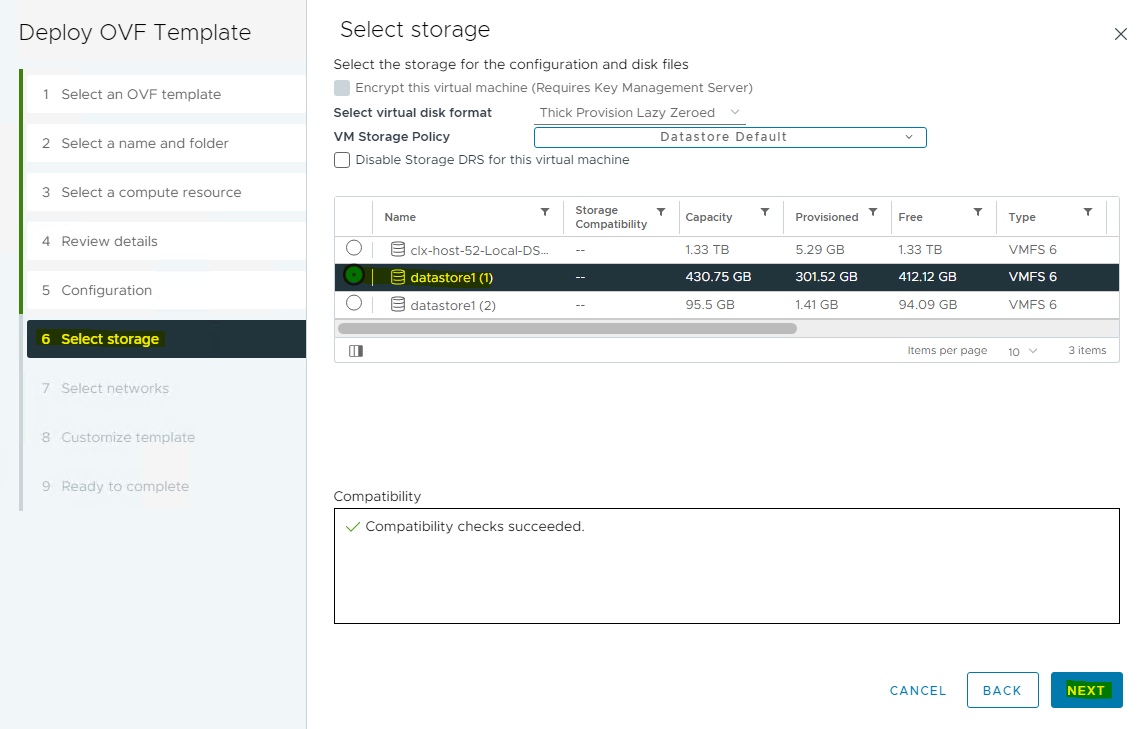

Select the Storage/datastore where the NSX manager should be placed. Click NEXT.

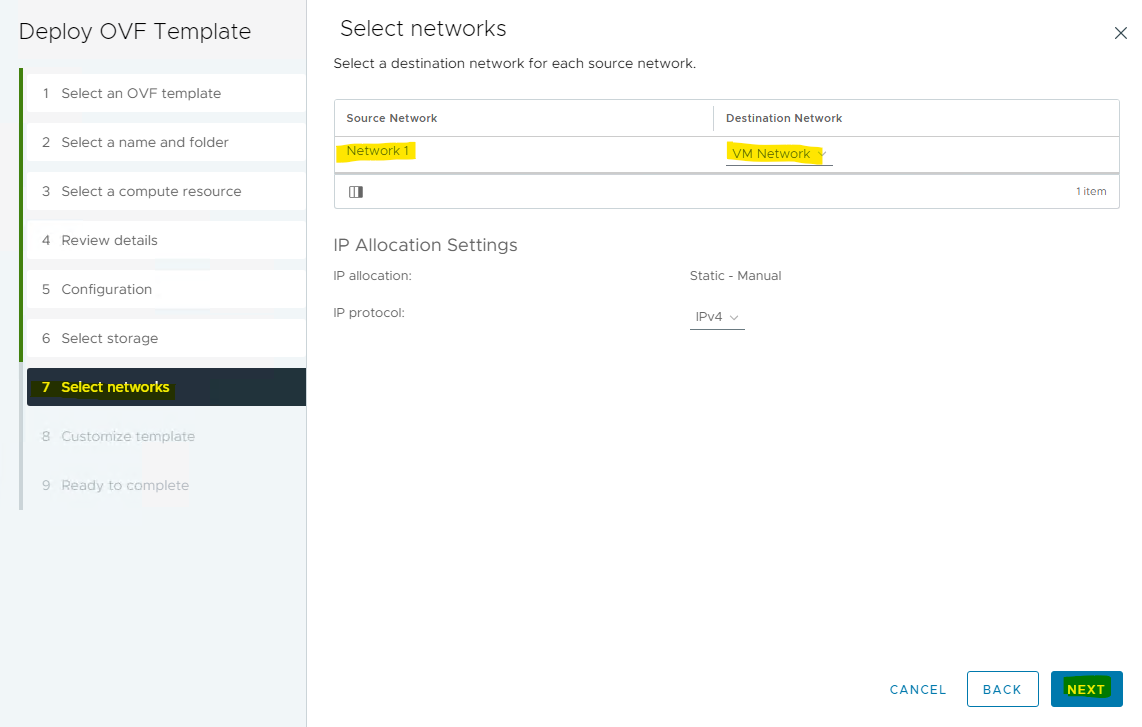

Select the Management network, and click NEXT to move to the "Customize Template" screen.

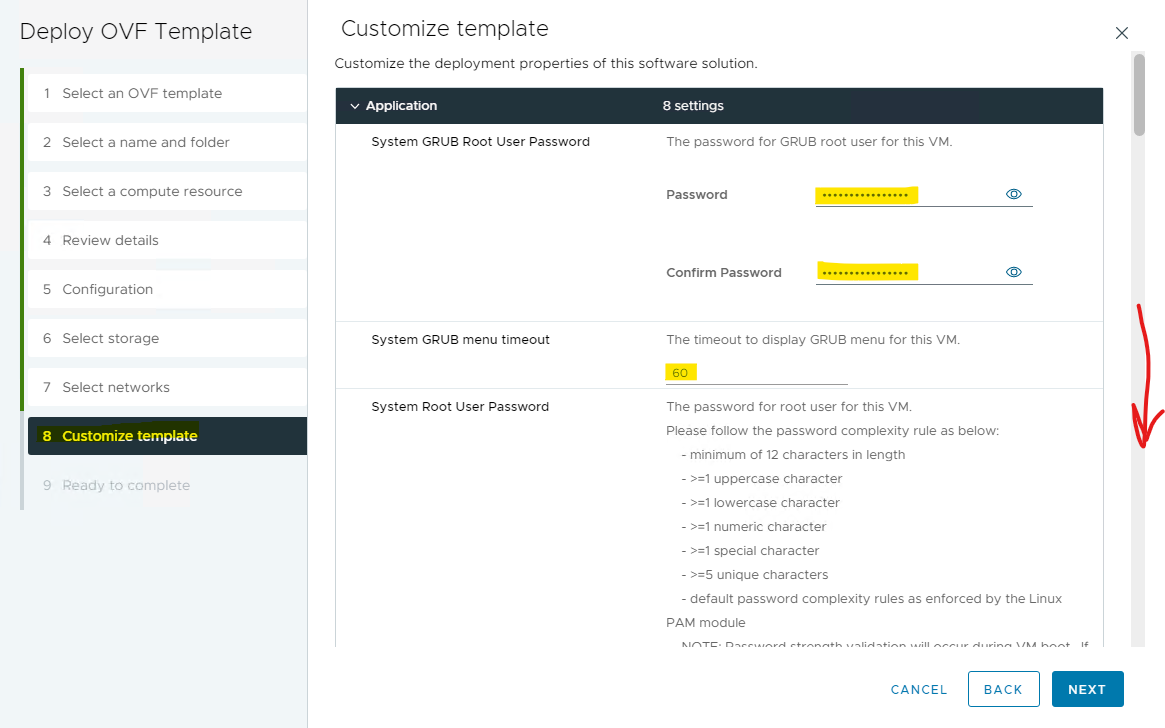

Specify the GRUB root password, and scroll down.

Important: Password complexity (minimum 12 characters) is required.

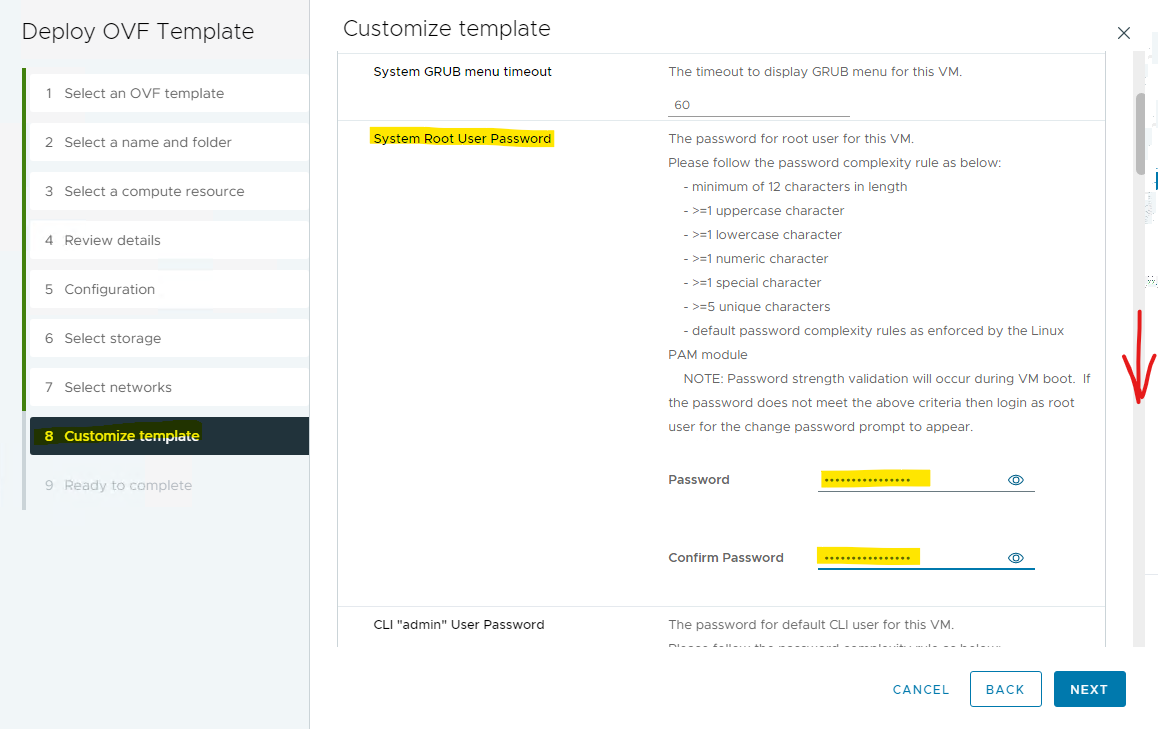

Specify the root password, and scroll down.

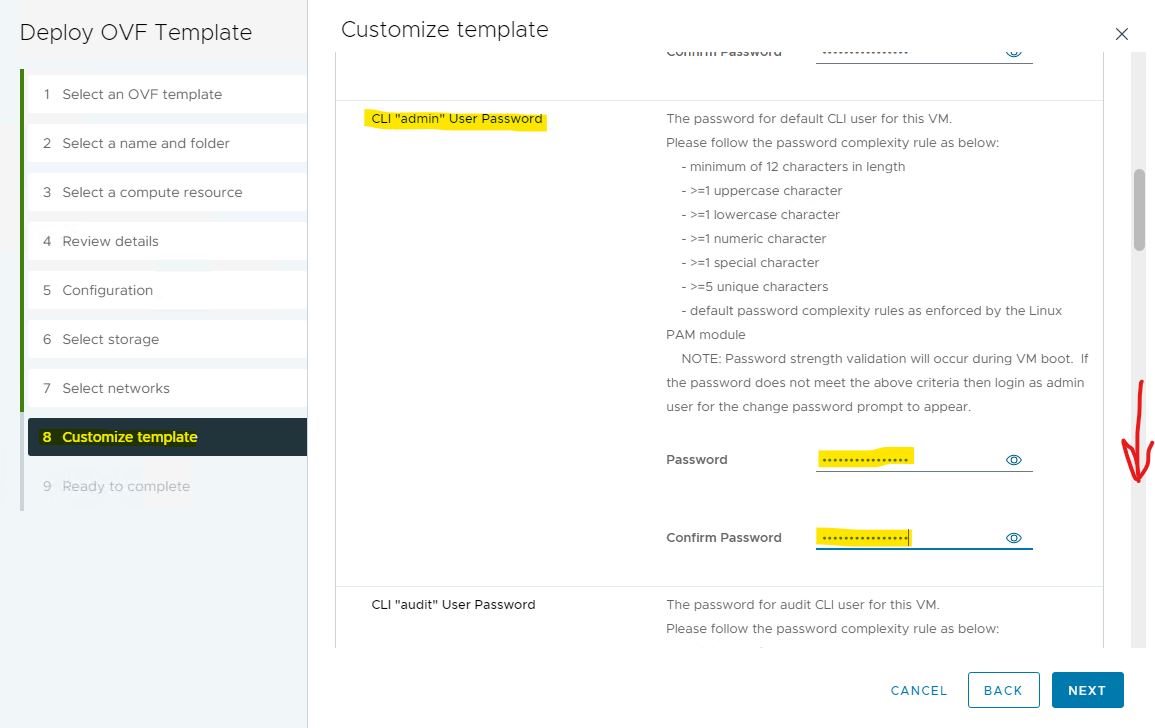

Specify the admin account password, and scroll down.

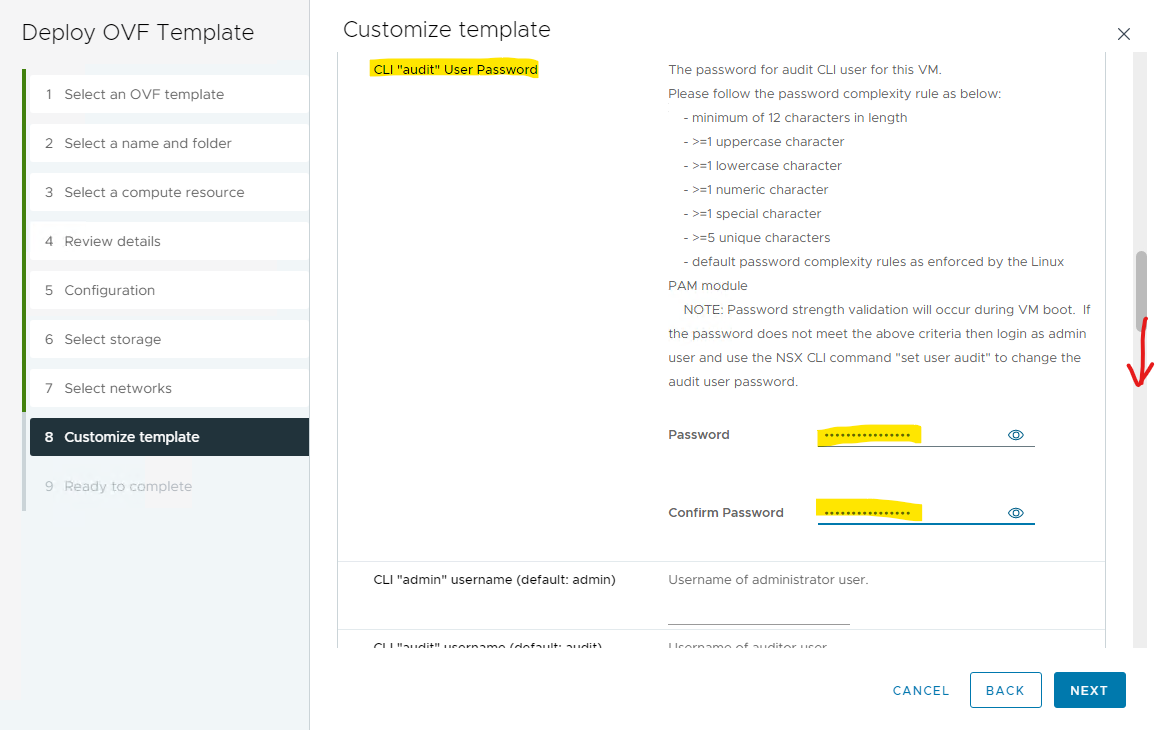

Specify the audit account password, and scroll down.

Provide the Hostname, Rolename (the NSX Manager has 3 roles, as seen below) and networking details. Then, scroll down.

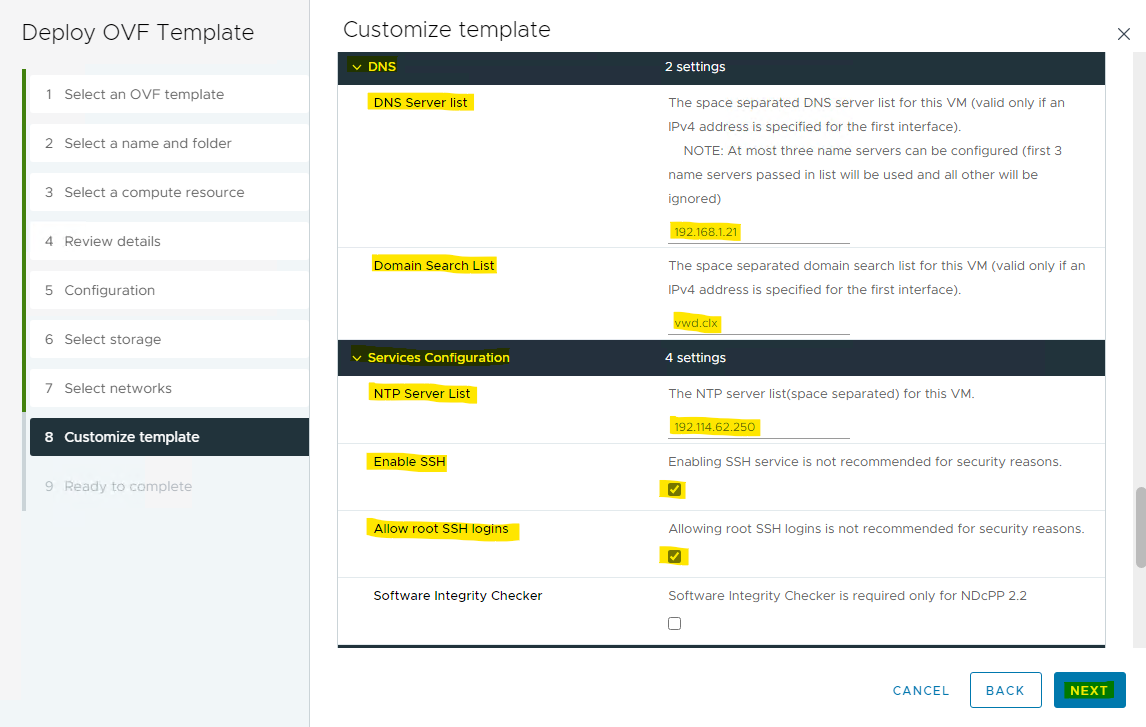

Assign the DNS and NTP details, and choose whether you need SSH to be enabled on the NSX Manager. Click NEXT.

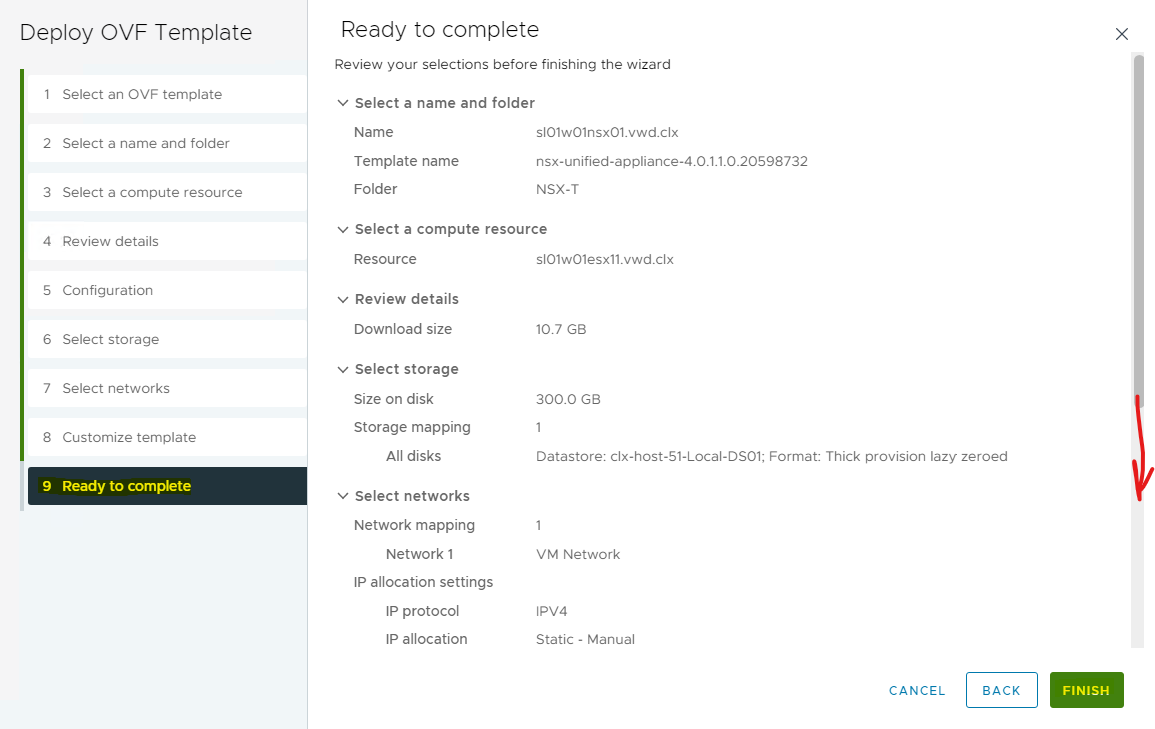

Review the details, and click FINISH to complete the wizard. Wait for the NSX manager appliance to be successfully deployed.

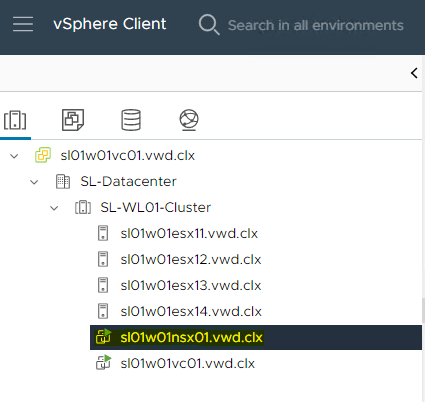

As seen below, the NSX Manager deployment takes approximately 5-10 minutes.

Once the appliance is successfully deployed, power it on and wait at least 15 minutes for all the services to come up.

Once the services are up, a login prompt is shown. At this point, wait for 10 more minutes as backend services must start the web portal related services.

Post Deployment Health Checks

Once done, open the console and log in with the admin credentials by using PuTTY. The version number and the role are displayed.

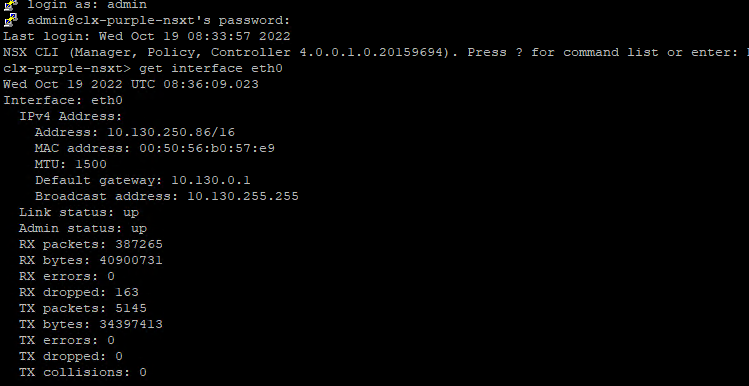

Next, verify the network configuration by using the below command.

NSX-T Manager Console

get interface eth0

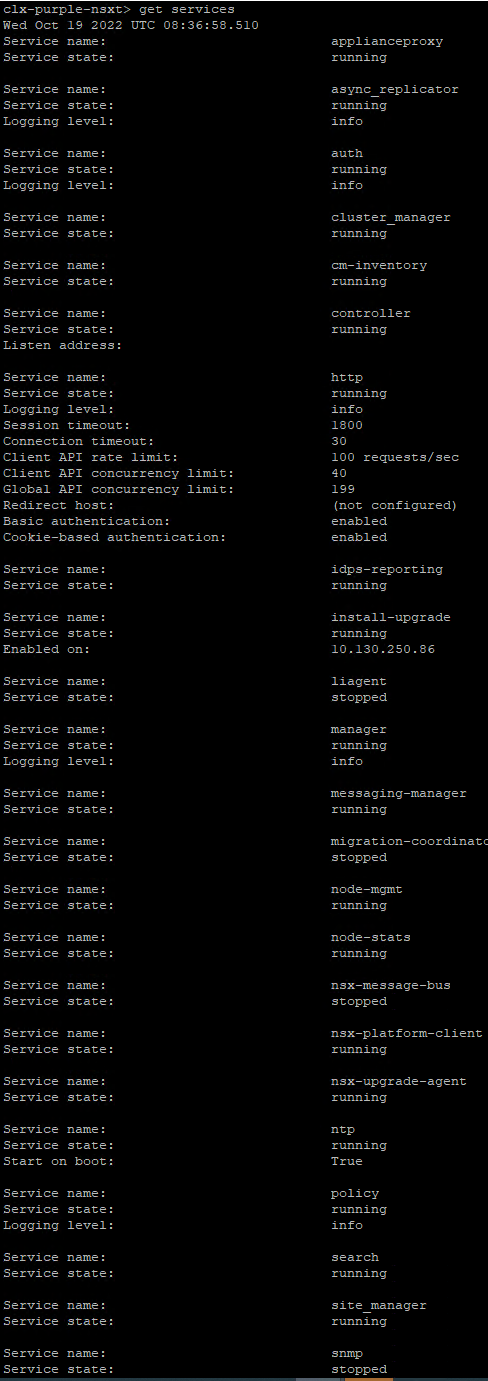

Check the services status by running the following command.

NSX-T Manager Console

get services

Liagent, migration-coordinator, and SNMP services are not started by default.

Configuration

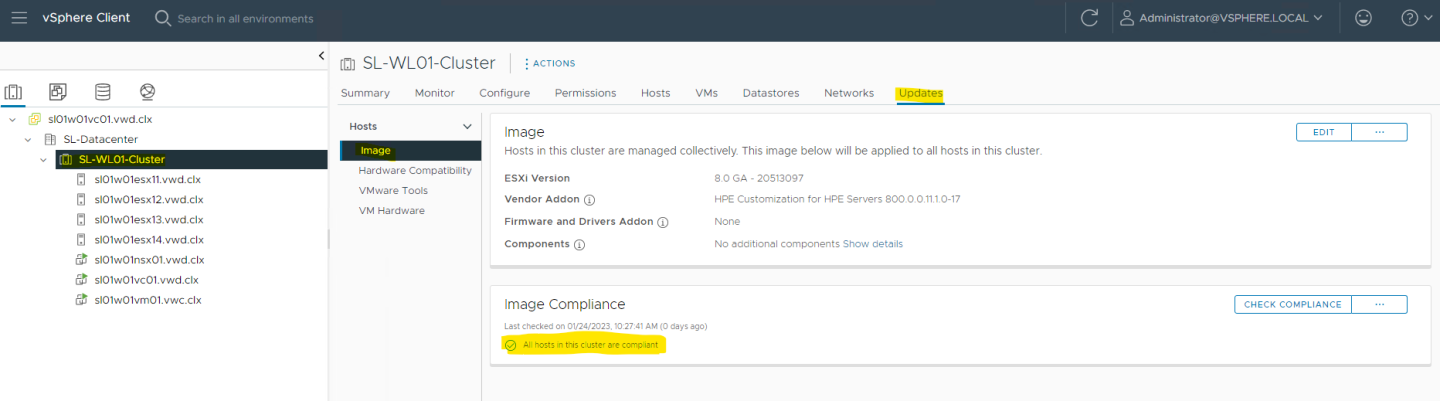

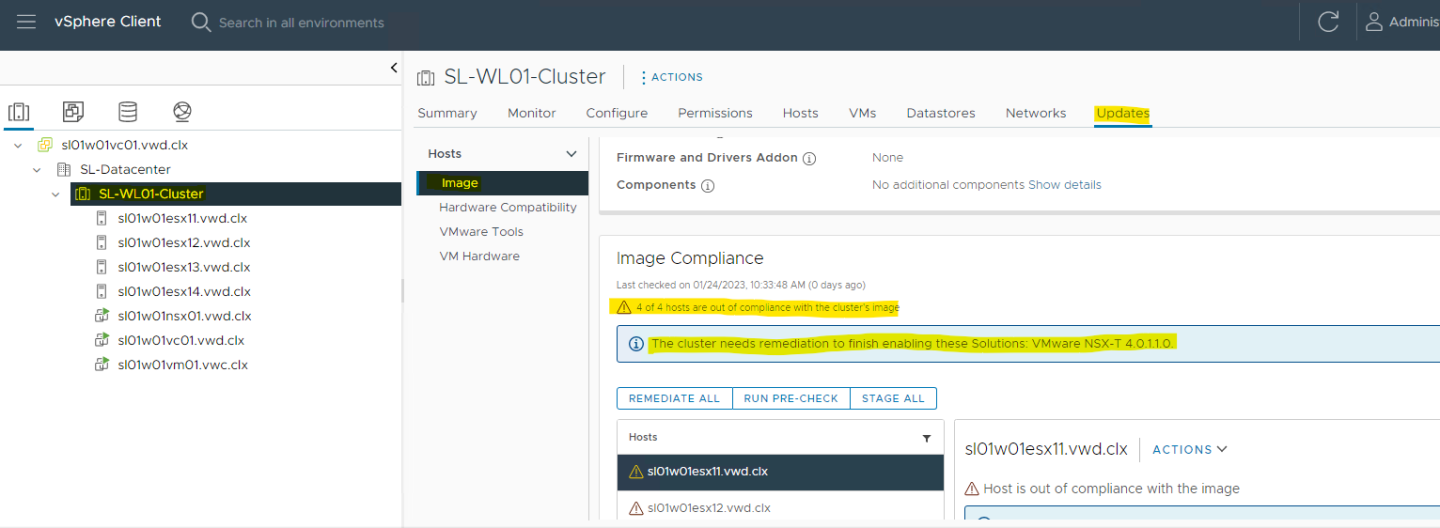

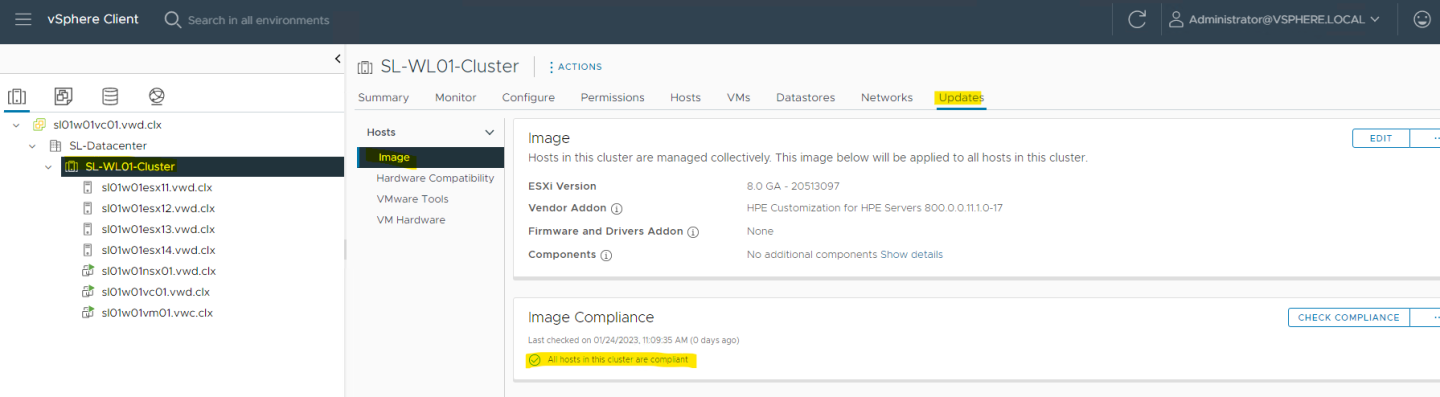

Before you start configuring NSX, make sure that all ESXi hosts in this cluster are compliant.

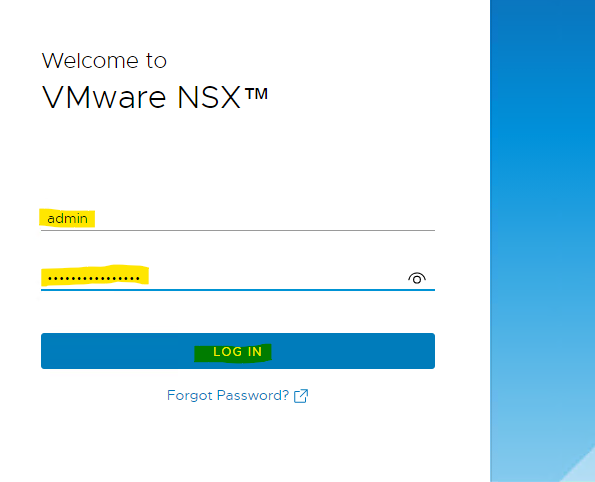

Log in from the NSX manager UI login page by using the following URL: "https://<fqdn or IP>".

Accept the EULA to log into your first NSX manager.

Here you can decide whether you want to join the Customer Experience Improvement Program.

You may take a look at the "What's new" page, or skip it if you wish to move on.

Finally, the NSX manager interface should open up.

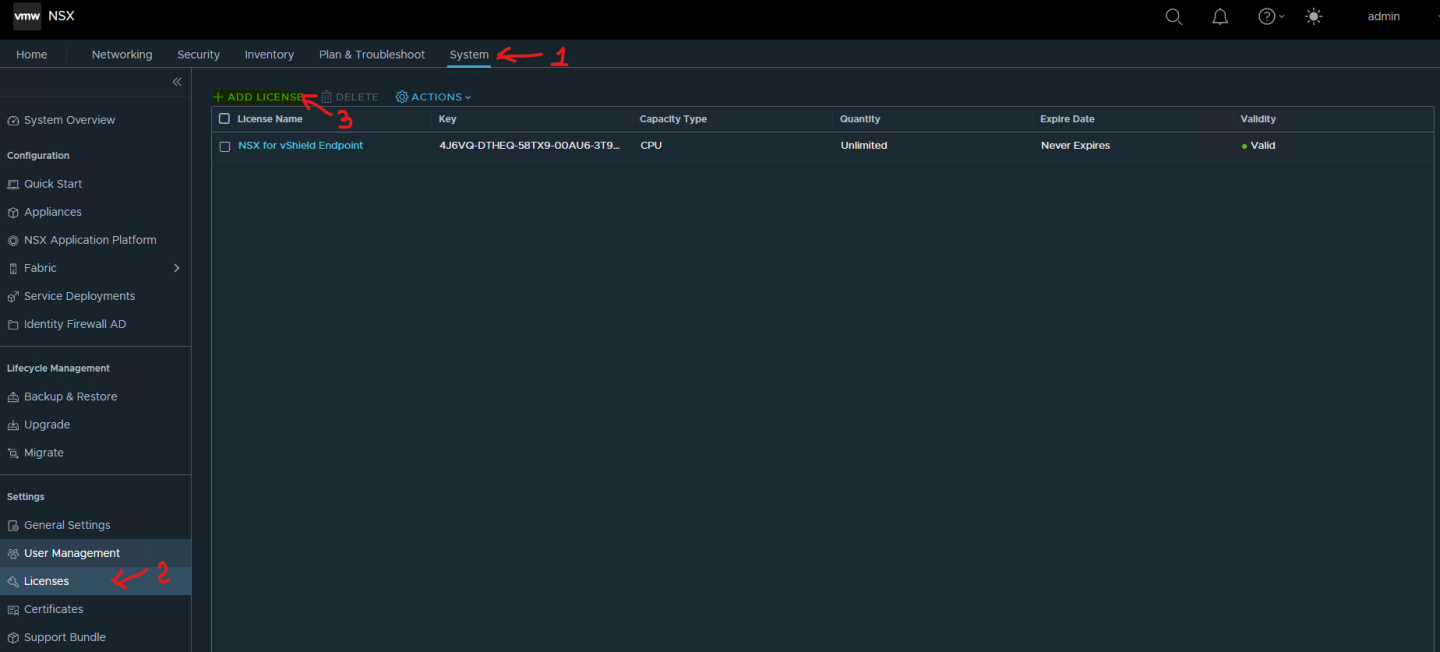

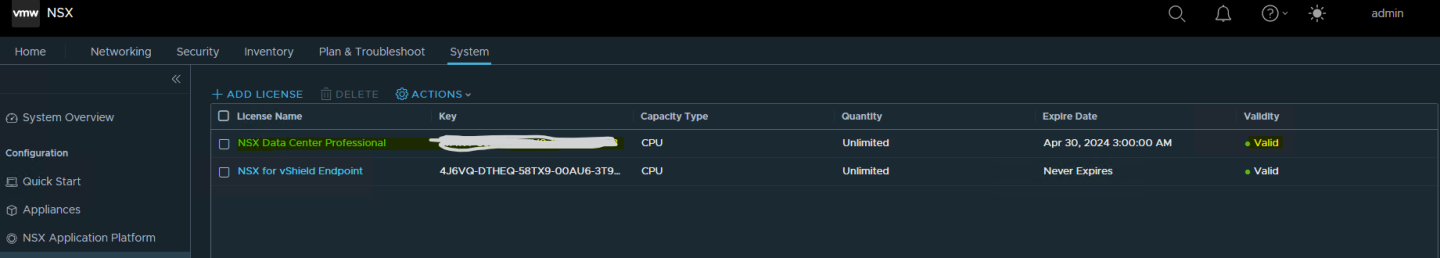

To add your NSX license, navigate to System → Licenses, and click on + ADD LICENSE.

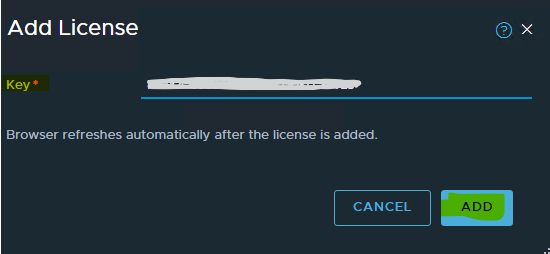

Add a license, and click on ADD.

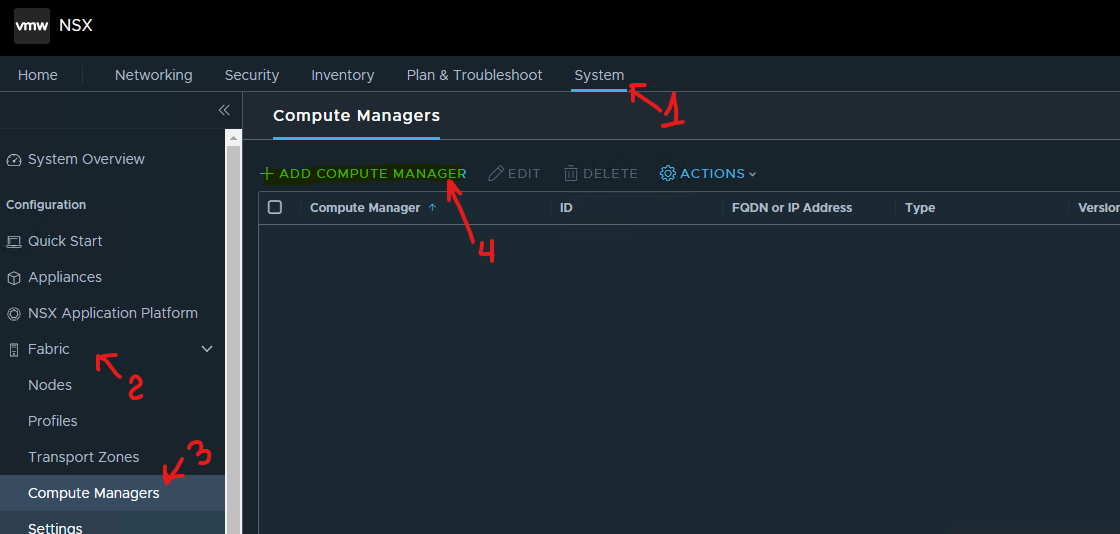

Add a Compute Manager

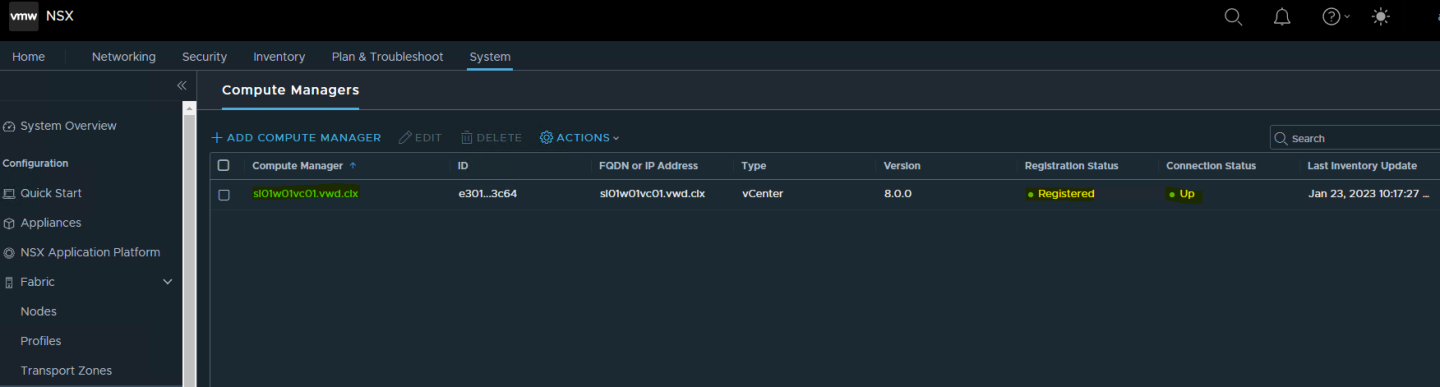

To add a vCenter as a compute manager, navigate to System → Fabric → Compute Managers, and click on ADD COMPUTE MANAGER.

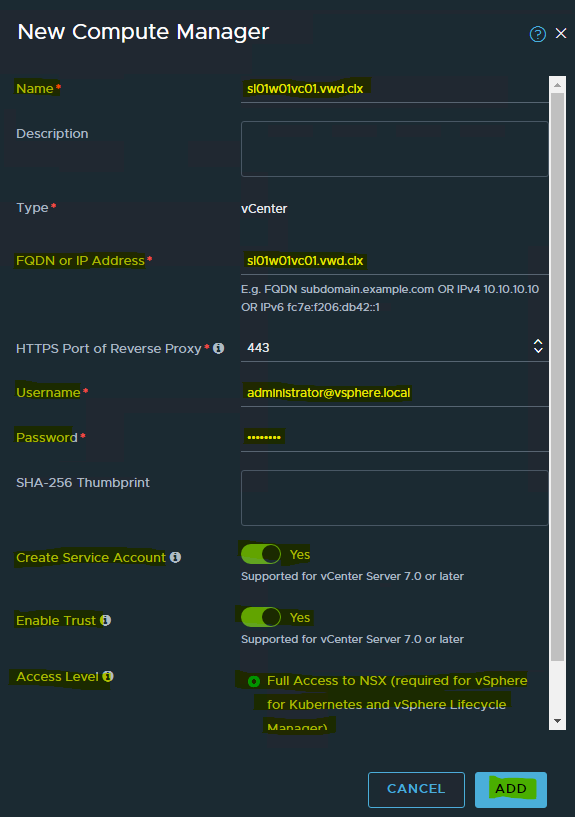

Fill in the vCenter details, and click ADD.

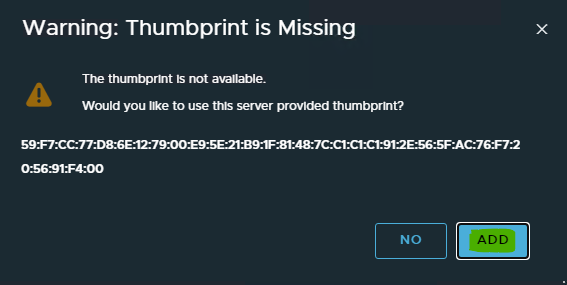

In the vCenter server thumbprint window, click on ADD.

Wait for the registration to complete. You will see the status change to registered, and the connection status set to "Up".

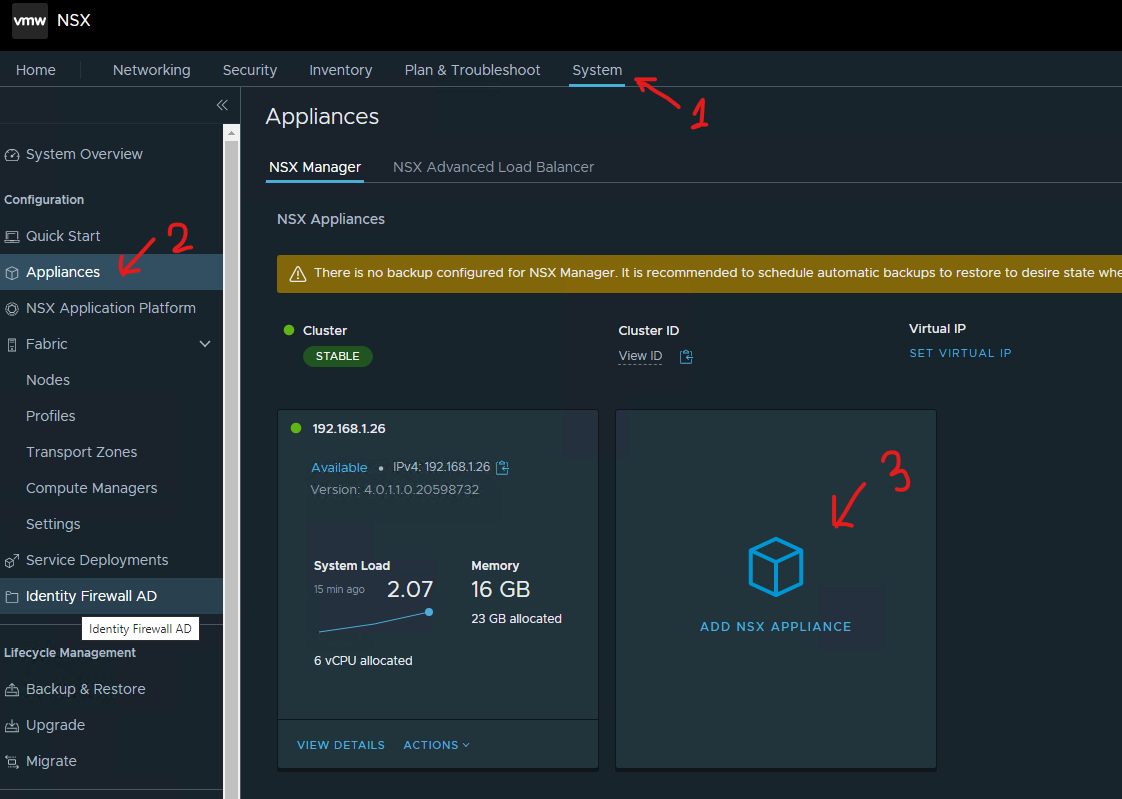

Optional

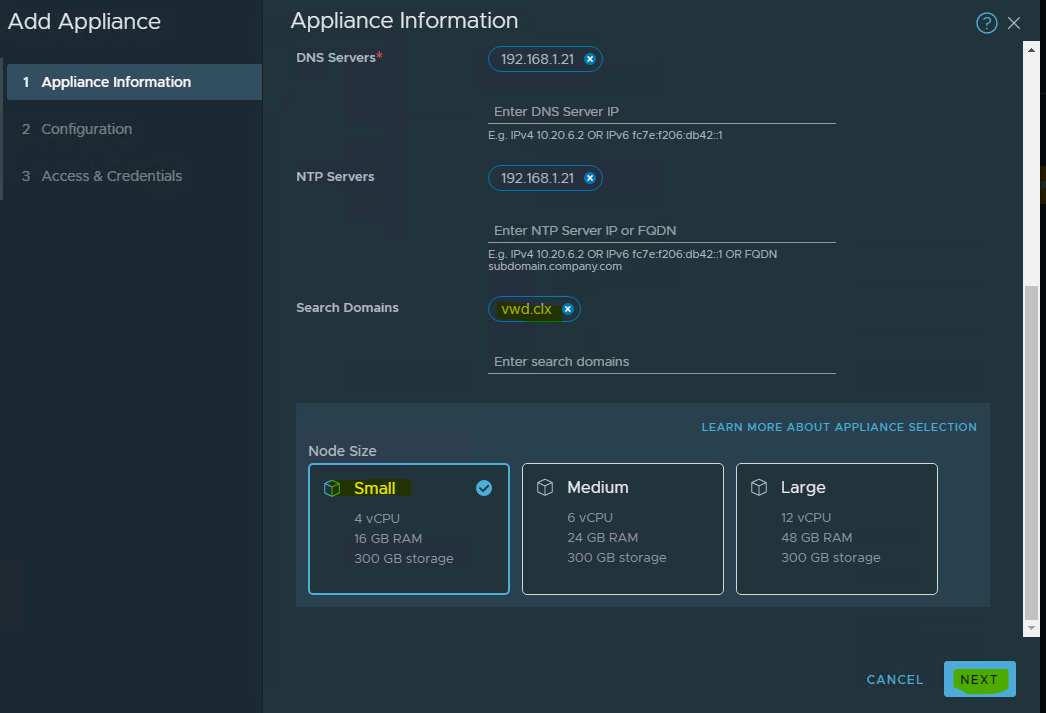

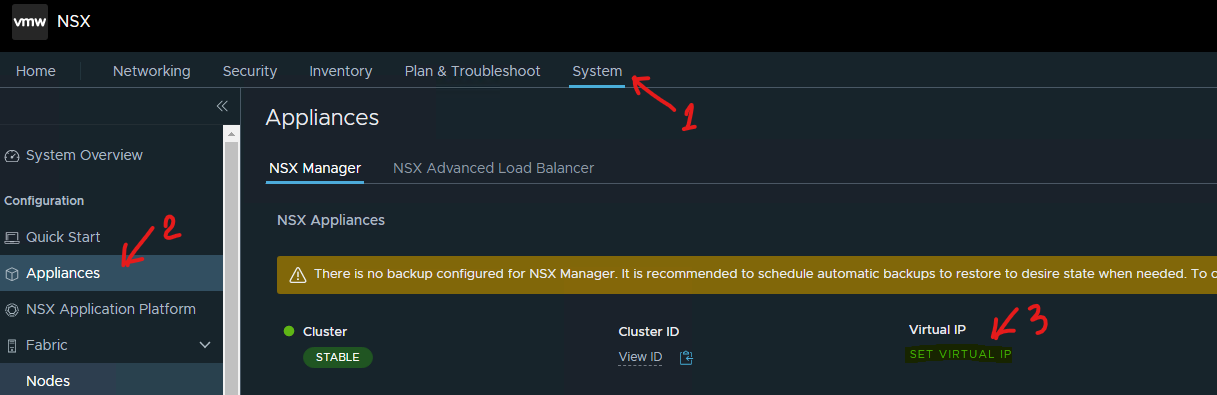

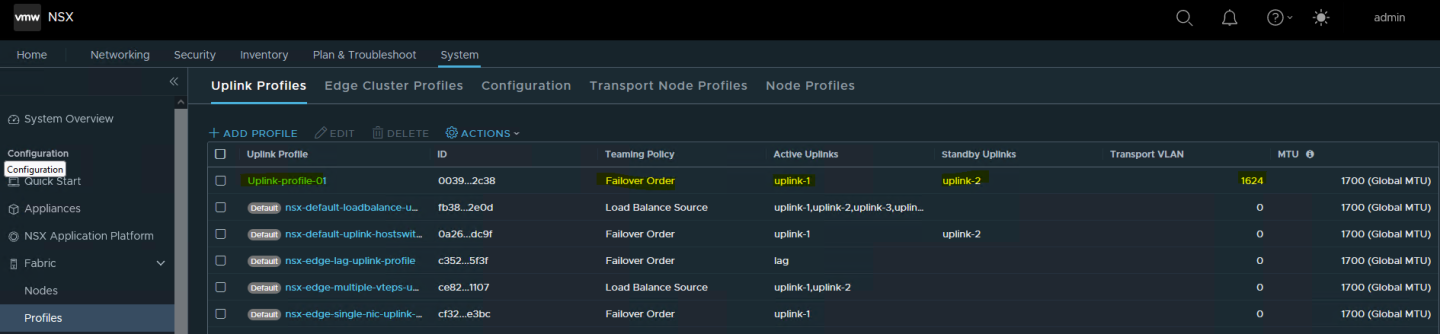

To deploy additional NSX manager nodes, navigate to System → Appliances, and click on Add NSX appliance.

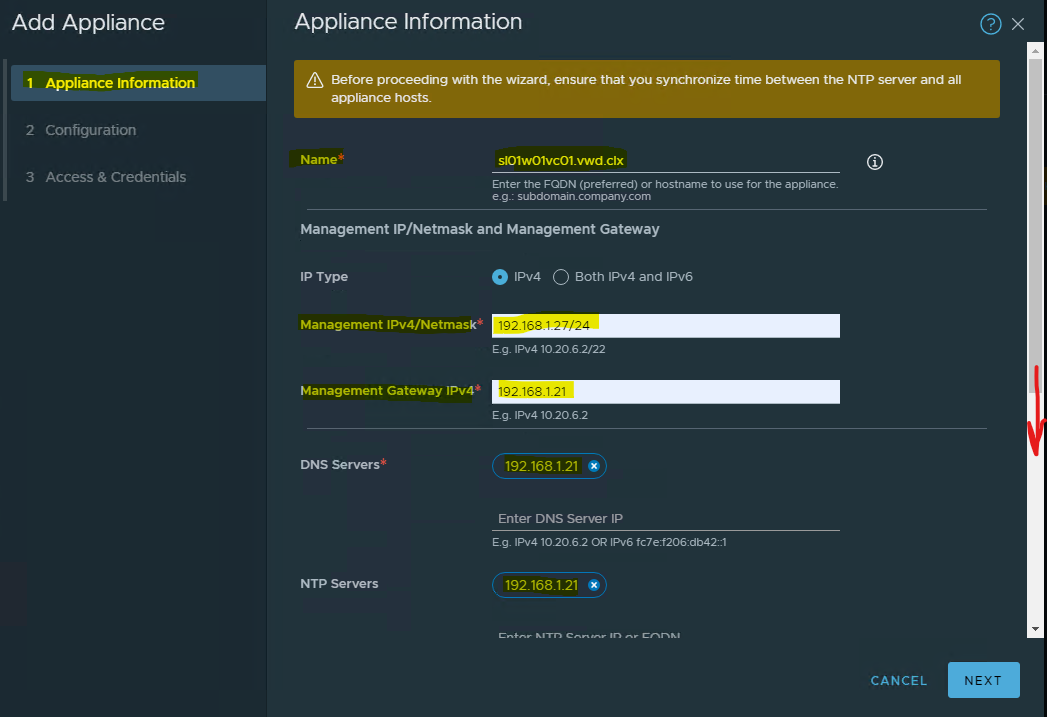

Fill in the additional manager details, such as Hostname, Management IP/Netmask, Gateway, DNS and NTP, and select the Node Size of the manager (the size should be identical to the size of the first manager). Click NEXT.

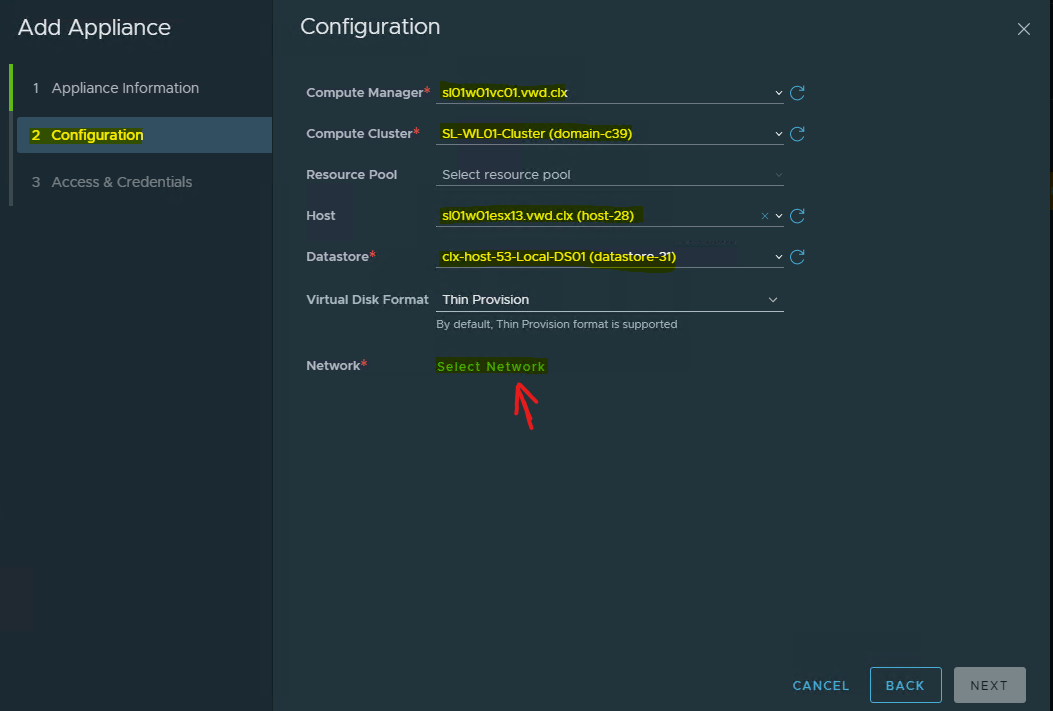

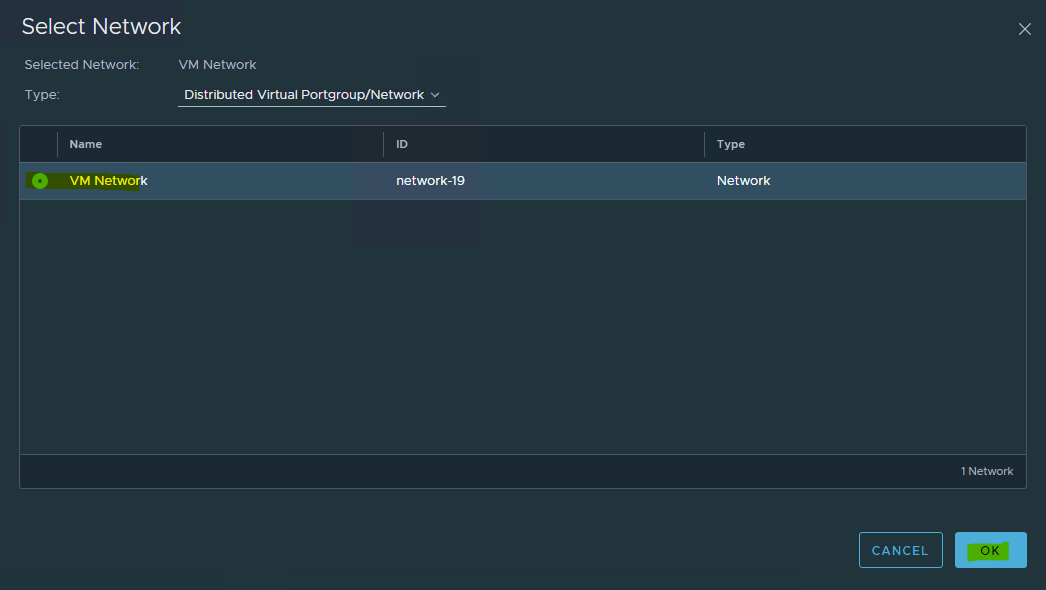

Select the appliance placement and network configurations, and click NEXT.

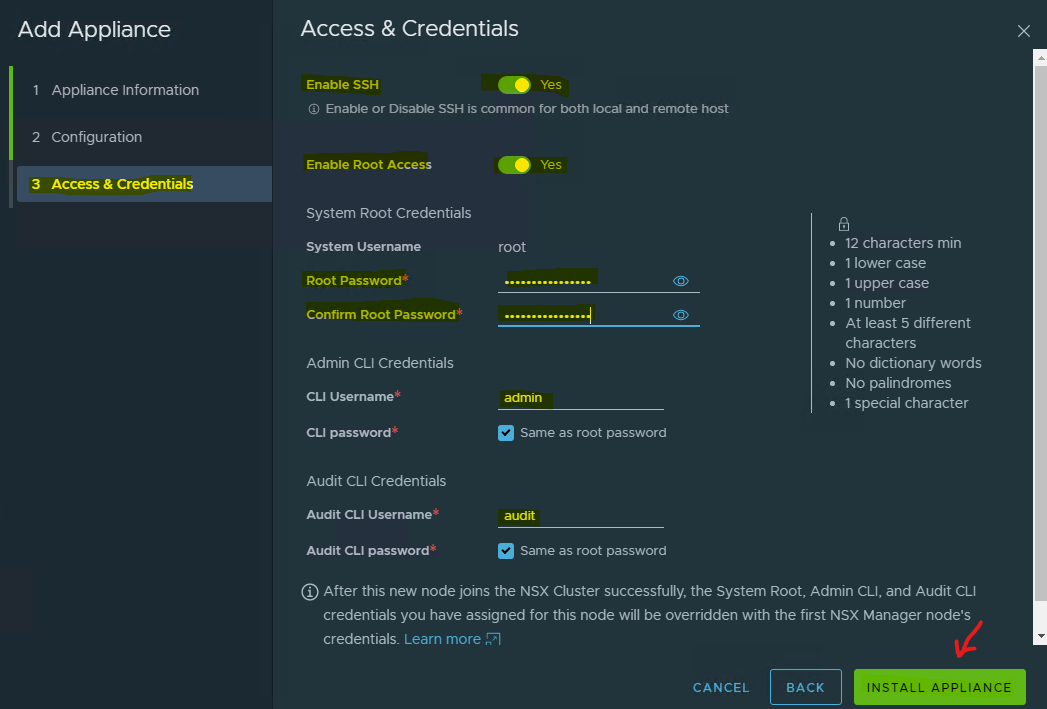

Complete the NSX manager passwords for access (use the same password used in the first manager), and enable SSH access.

Click on INSTALL APPLIANCE.

Repeat the same steps for deploying a third NSX manager node. Wait for both nodes to be deployed, and for the joined cluster to be created.

Assign the cluster VIP for ease of management. Note that the cluster VIP does not load-balance traffic across the manager nodes.

The deployment of the NSX manager and controller cluster is now complete.

Enhanced Data Path Configuration

Network administrators must prepare the network with the supported NICs and drivers before creating VDS enabled transport zones in the enhanced data path mode.

Uplink Profiles

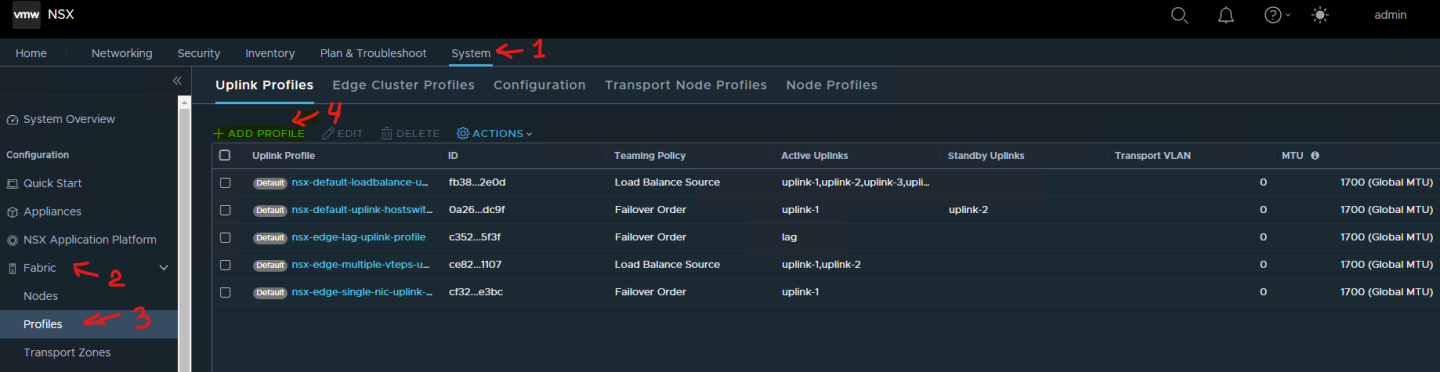

An uplink profile defines the policies for links from hypervisor hosts to the NSX logical switches.

The settings defined by these profiles may include teaming policies, active/standby links, transport VLAN ID and MTU settings.

Uplink profiles enable consistent configuration of identical capabilities for network adapters across multiple hosts and nodes. By default, two uplink profiles are provided with NSX. Since they cannot be edited, new profiles should be created for the Edge uplinks and for the hosts’ uplinks.

See Create an Uplink Profile for further details.

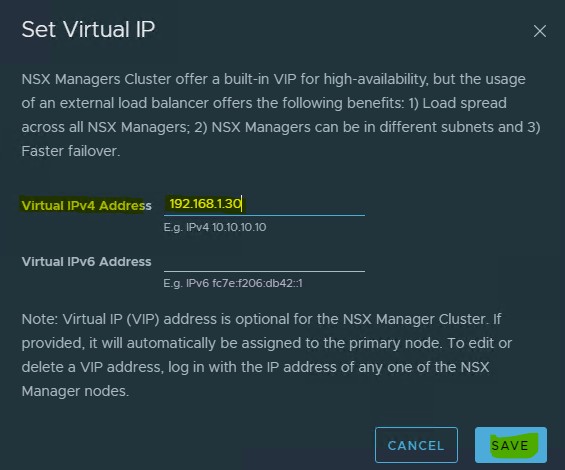

Create a Host Uplink Profile

To create a Host uplink profile in the NSX Manager, navigate to System → Fabric → Profiles → Uplink Profiles → +ADD.

Assign a name to the profile, and fill the description (optional).

Under "Teamings", set the Teaming Policy to "Failover Order".

Set the Active Uplinks to uplink-1 and the Standby Uplinks to uplink-2.

The Transport VLAN is the overlay VLAN ID (1624 in our example). Because these uplinks are connected directly to the hosts, the overlay traffic must be tagged accordingly.
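The uplink profile settings described in this section can be captured as a small record and cross-checked against the VLANs configured on the switch earlier in this guide. The Python sketch below is an illustration only: the dictionary keys are hypothetical names chosen for readability and are not the NSX API schema, and the checks are basic sanity rules assumed for this example.

```python
# VLANs configured on the switch bridge earlier in this guide.
SWITCH_VLANS = {215, 1620, 1624}

# Values from this section; the key names are illustrative only.
host_uplink_profile = {
    "name": "Uplink-profile-01",
    "teaming_policy": "Failover Order",
    "active_uplinks": ["uplink-1"],
    "standby_uplinks": ["uplink-2"],
    "transport_vlan": 1624,
}


def check_uplink_profile(profile, switch_vlans):
    """Return a list of human-readable problems (empty if none found)."""
    problems = []
    if not profile["active_uplinks"]:
        problems.append("no active uplink defined")
    overlap = set(profile["active_uplinks"]) & set(profile["standby_uplinks"])
    if overlap:
        problems.append(f"uplinks listed as both active and standby: {sorted(overlap)}")
    if profile["transport_vlan"] not in switch_vlans:
        problems.append(f"transport VLAN {profile['transport_vlan']} is not configured on the switch")
    return problems


print(check_uplink_profile(host_uplink_profile, SWITCH_VLANS))
```

An empty list indicates that the transport VLAN used by the profile is also carried by the switch, so tagged overlay traffic can pass between hosts.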

Transport Zones

Transport Zones dictate which hosts (and consequently, which VMs) can participate in the use of a particular network. There are two types of transport zones - an Overlay and a VLAN.

The overlay transport zone is used by both host transport nodes and NSX Edges, and is responsible for communication over the overlay network.

The VLAN transport zone is used by the NSX Edge for its VLAN uplinks.

Both types create a VDS on the host or Edge to allow virtual-to-physical packet flow by binding logical router uplinks and downlinks to physical NICs. For more information, please see Transport Zones and Profiles.

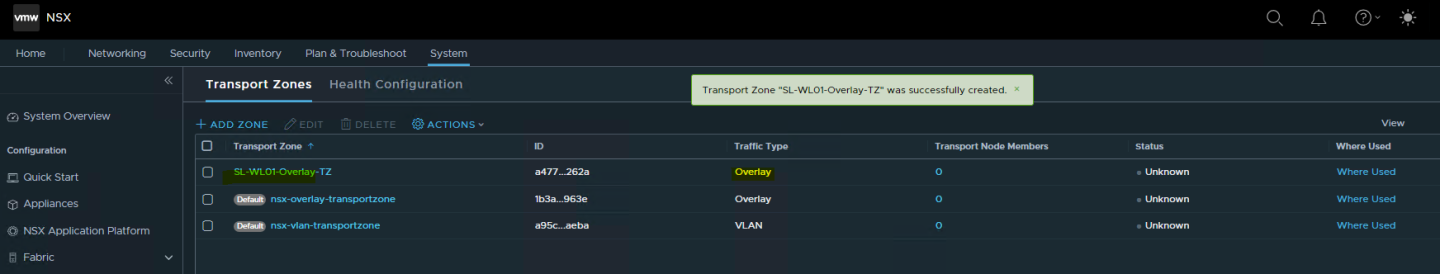

Creating a Transport Zone

To create a Transport Zone in the NSX Manager, navigate to System → Fabric → Transport Zones → +ADD.

Transport Zone with Overlay Traffic Type

Provide a Name, and select the Traffic Type as Overlay (Geneve). Click ADD.

Traffic Type as VLAN is out of the scope of this document.

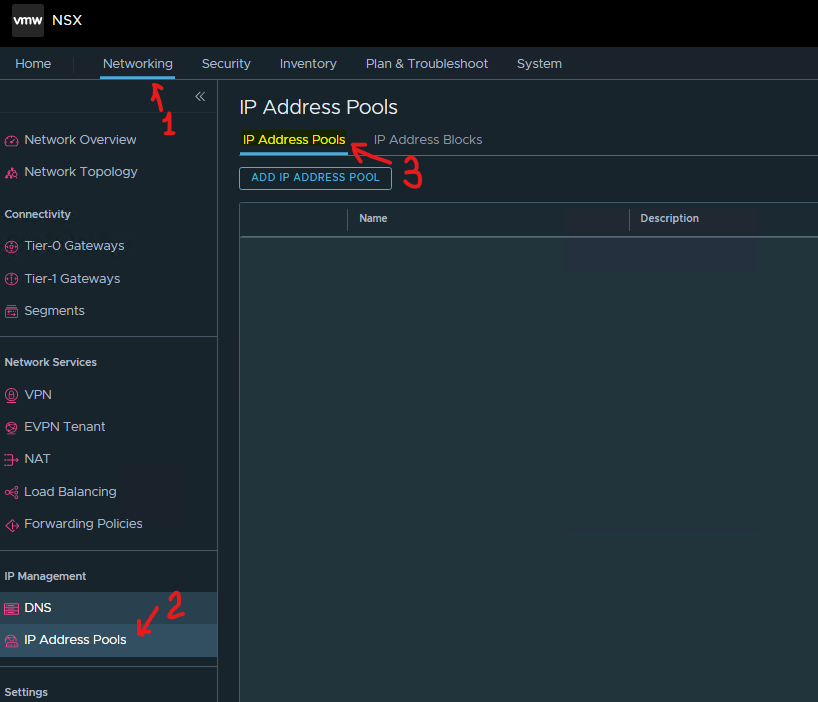

Creating an NSX IP Pool

Each transport node (i.e., hypervisor) is assigned an IP address for its TEP interface.

You can use DHCP, a static IP list or an IP address pool to assign IP addresses to the TEP (Tunnel Endpoint) interfaces.

To configure the ESXi hosts as transport nodes, create an NSX IP Pool to assign IP addresses for the TEP interfaces.

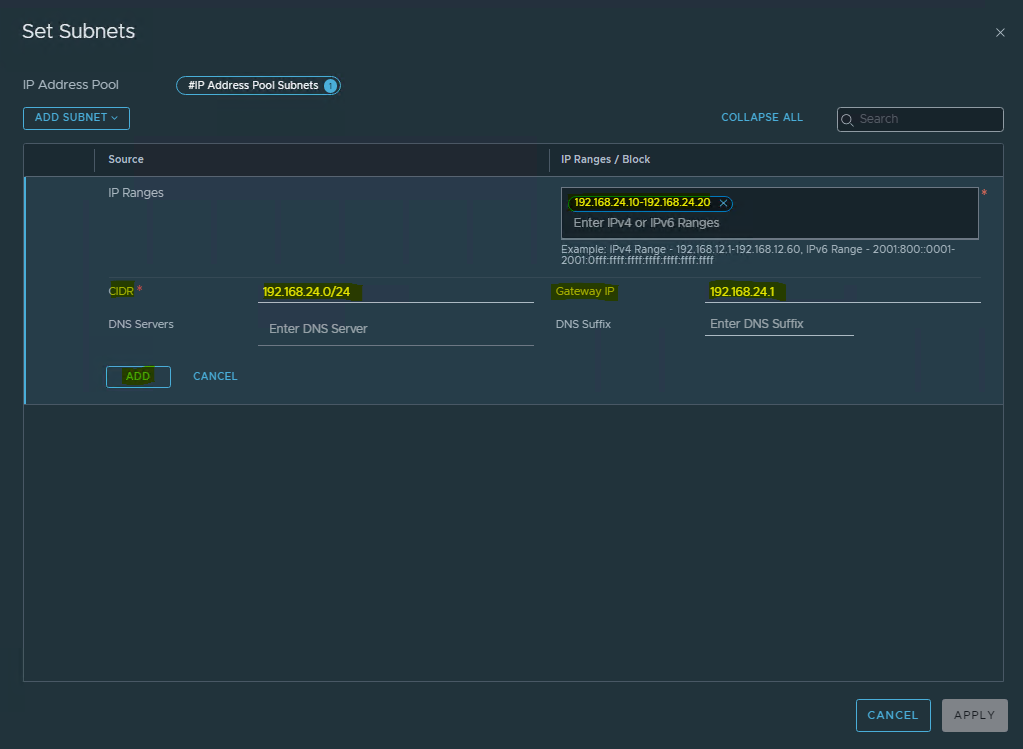

To create an IP Pool in the NSX Manager:

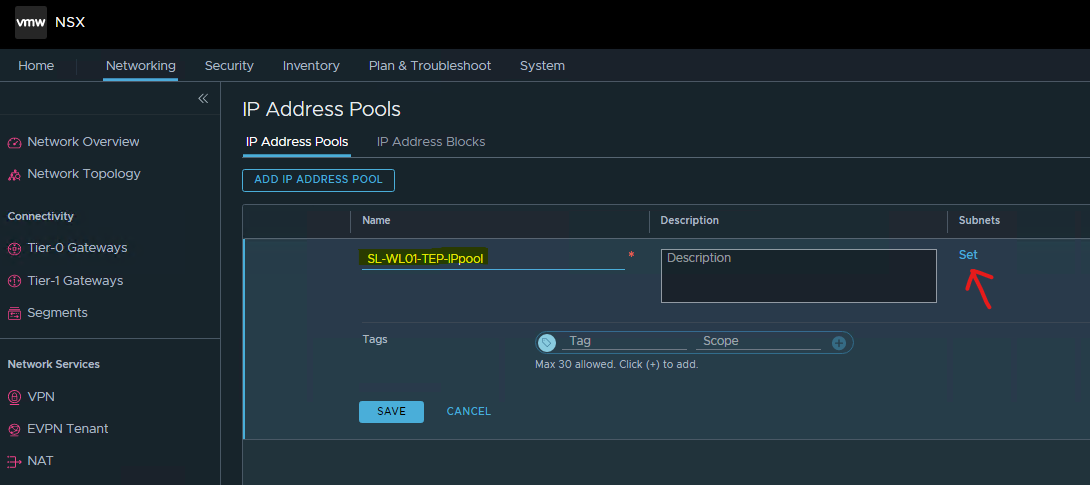

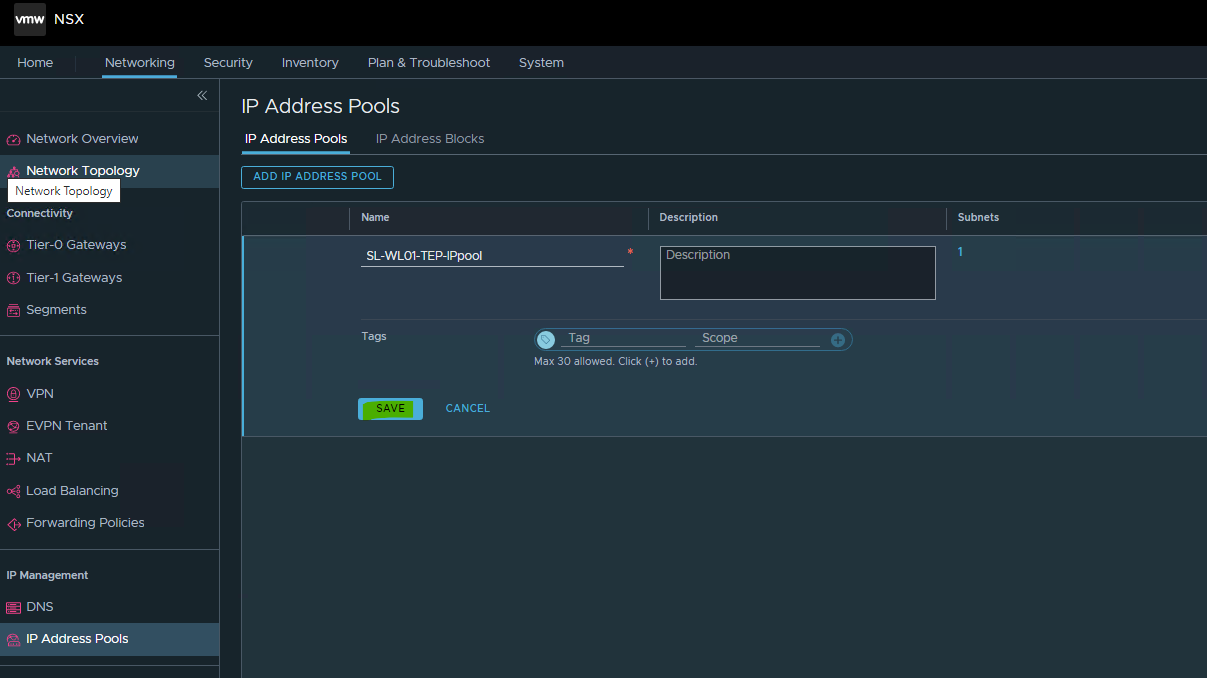

Navigate to Networking → IP Address Pools → ADD IP ADDRESS POOL.

Specify the name and description of the IP address pool, and click “Set”.

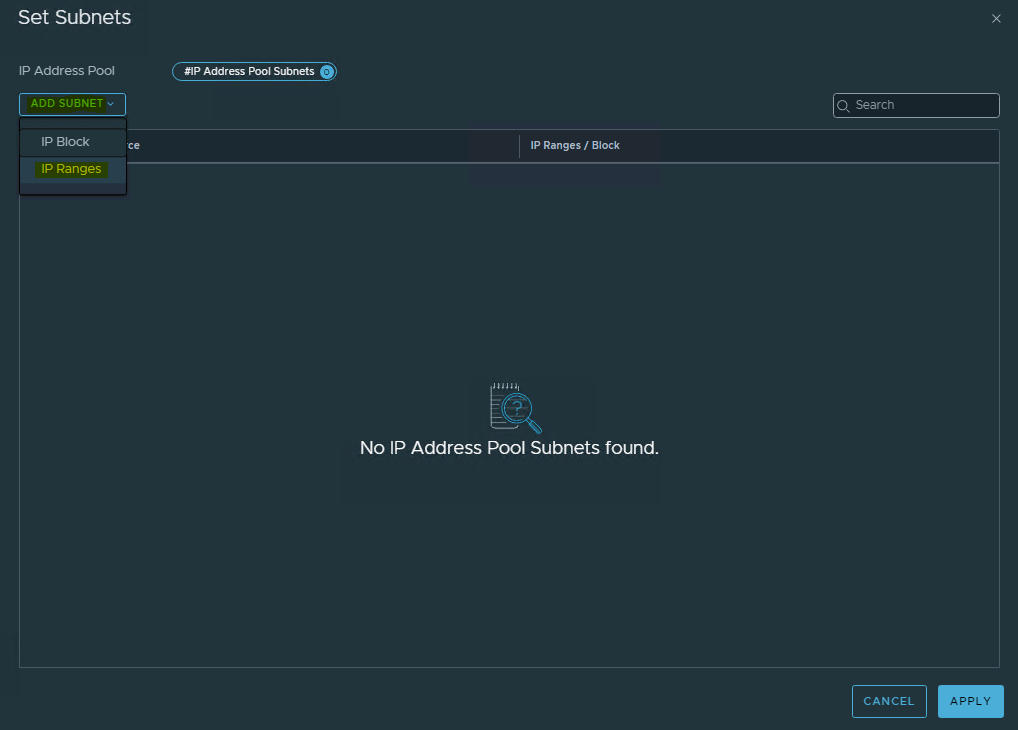

Click ADD SUBNET → Select “IP Ranges”.

Specify the IP address ranges along with the CIDR and the Gateway IP address for the specified IP ranges. Click “ADD”.

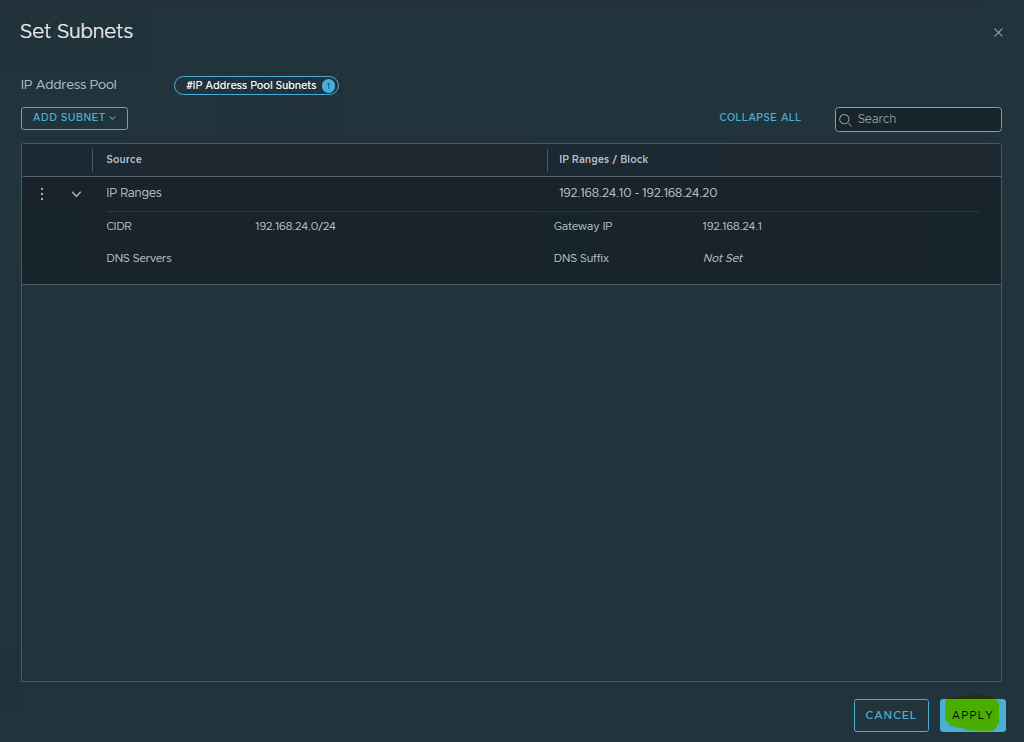

Click Apply.

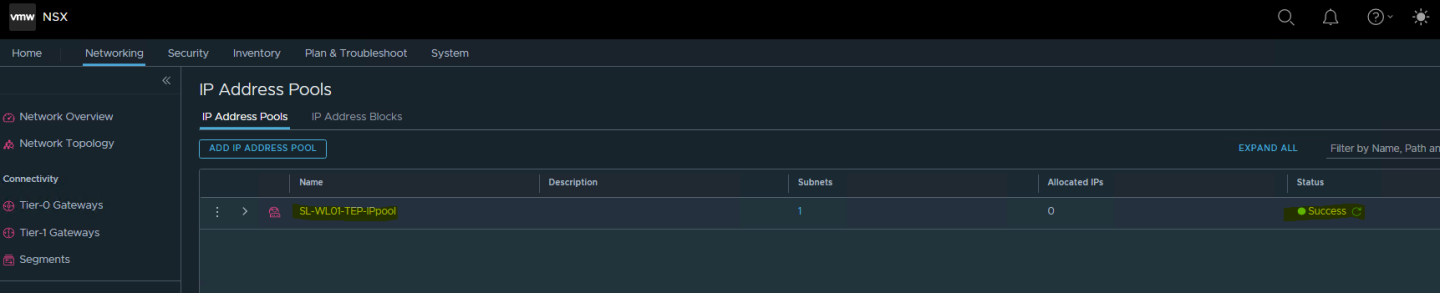

Click “Save” to create the IP Pool.

Once the IP Pool is created, the status is changed to “Success”.
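The range, CIDR and gateway entered for the pool must agree with each other, and the pool needs at least one TEP address per transport node. The Python sketch below shows one way to sanity-check these values before typing them into the UI. The addresses are hypothetical placeholders from the 192.0.2.0/24 documentation range, since this guide does not list the TEP subnet, and the rule that the gateway must not fall inside the range is an assumption of this example.

```python
import ipaddress


def check_ip_pool(cidr, start, end, gateway):
    """Return a list of problems found in an IP range definition."""
    network = ipaddress.ip_network(cidr)
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    gw = ipaddress.ip_address(gateway)
    problems = []
    if first > last:
        problems.append("range start is above range end")
    if first not in network or last not in network:
        problems.append("range is not fully inside the CIDR")
    if gw not in network:
        problems.append("gateway is outside the CIDR")
    # Assumption of this sketch: the gateway should not be handed out as a TEP address.
    if first <= gw <= last:
        problems.append("gateway falls inside the range")
    return problems


def pool_size(start, end):
    """Number of addresses in the inclusive range start..end."""
    return int(ipaddress.ip_address(end)) - int(ipaddress.ip_address(start)) + 1


# Hypothetical values; replace them with the TEP subnet of your environment.
print(check_ip_pool("192.0.2.0/24", "192.0.2.10", "192.0.2.50", "192.0.2.1"))
print(pool_size("192.0.2.10", "192.0.2.50"))
```

With the four ESXi hosts used in this setup, the pool should contain at least four addresses.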

Host Transport Node

A host transport node is a node that participates in NSX overlay or VLAN networking.

VDS Modes:

Standard applies to all the supported hosts.

Enhanced Datapath - Standard is an interrupt driven variant of the Enhanced Data Path mode.

Enhanced Datapath - Performance is the Enhanced Data Path switch mode. This mode provides accelerated networking performance, but also introduces additional prerequisites. In order to benefit from this mode, workloads must be compiled with DPDK and use VMXNET3 for their vNIC.

Installing NSX components on a Host Transport Node

To add a Host Transport Node:

1. Ensure that at least one Physical NIC (pNIC) is available for NSX. In the below example, the host is configured with a ConnectX-6 Dx pNIC, vmnic0 on SL-WL01-Cluster.

2. Make sure the ConnectX-6 Dx card is running firmware version 22.34.1002:

Enable SSH Access to ESXi server.

Log into ESXi vSphere Command-Line Interface with root permissions.

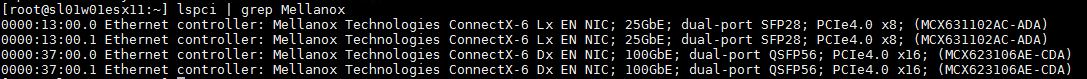

Verify that the host is equipped with an NVIDIA adapter card.

ESXi CLI

lspci | grep Mellanox

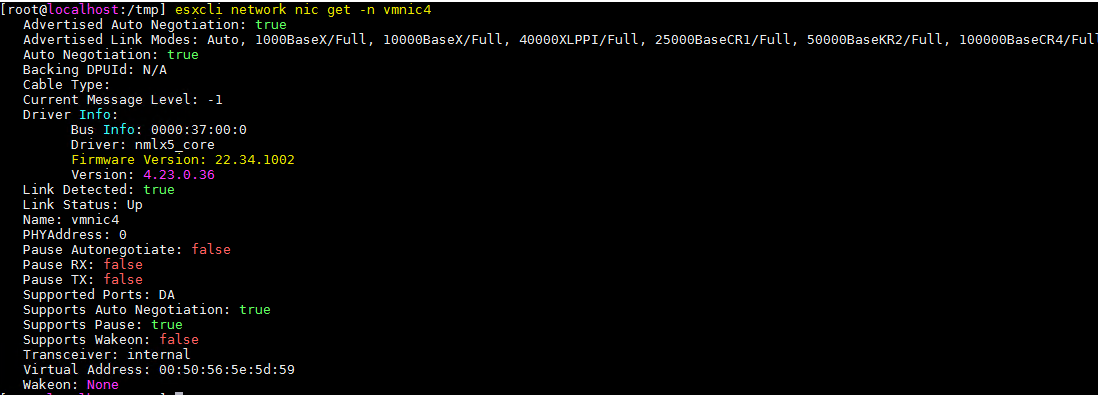

3. Verify that firmware version 22.34.1002 is installed.

ESXi CLI

esxcli network nic get -n vmnic4

If the required driver and firmware versions are not installed, refer to the following guide to learn how to upgrade them: HowTo Install NVIDIA Firmware Tools (MFT) on VMware ESXi 6.7 and 7.0 - Solutions - NVIDIA Networking Docs

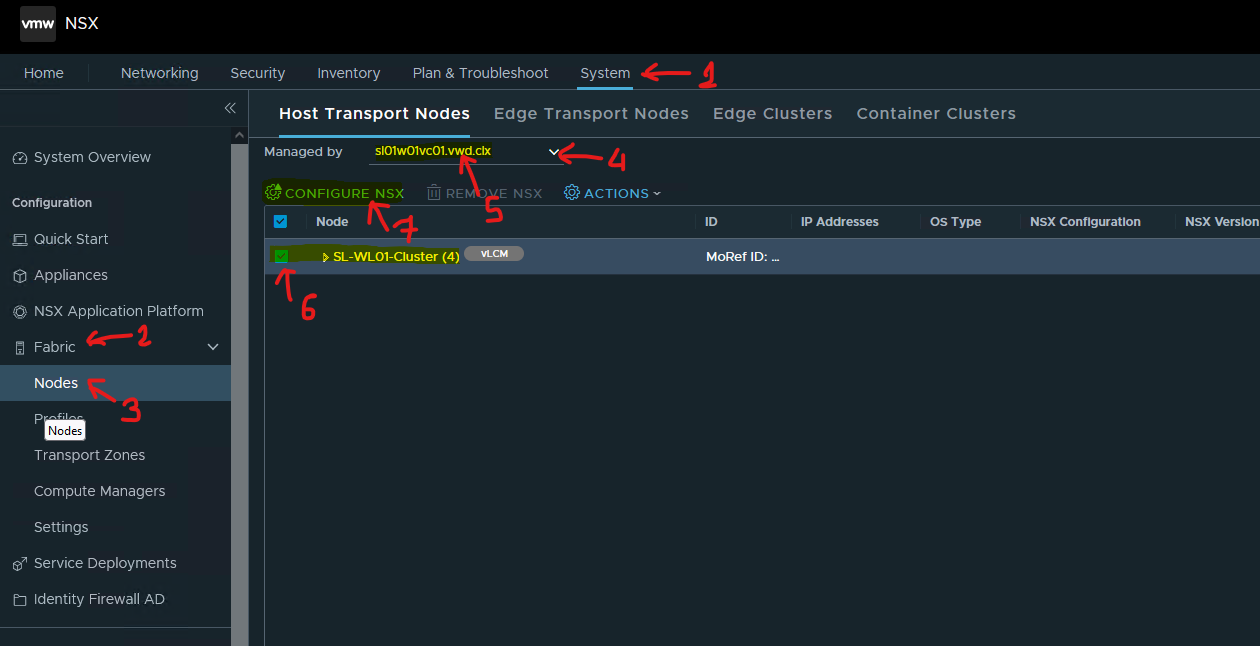

4. Push out the necessary NSX components to the hosts.

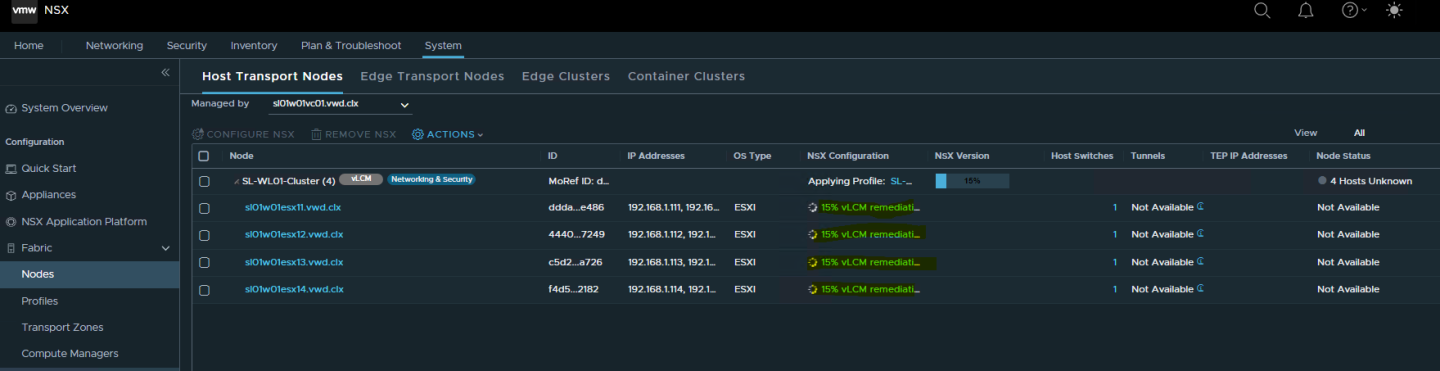

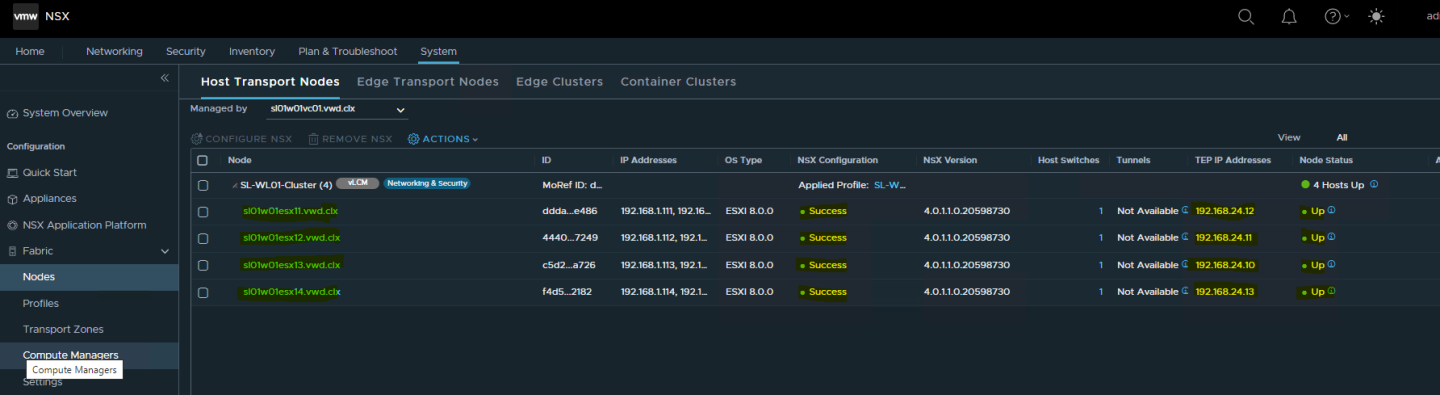

In the NSX Manager, navigate to System → Fabric → Nodes → Host Transport Nodes, and from the “Managed By” dropdown menu, change the selection from Standalone Hosts to the vCenter server you just added.

By expanding the name of the cluster, you will be able to see that your hosts are not in a prepared state and are not connected to the NSX Controller.

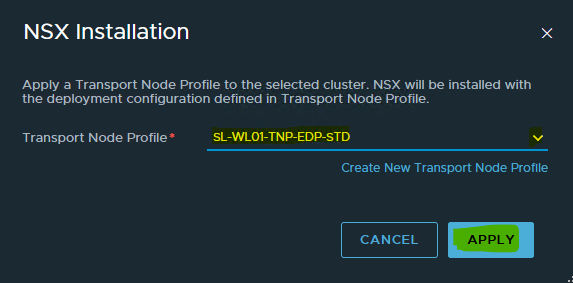

Select the Cluster and click Configure NSX.

5. Click on Create New Transport Node Profile.

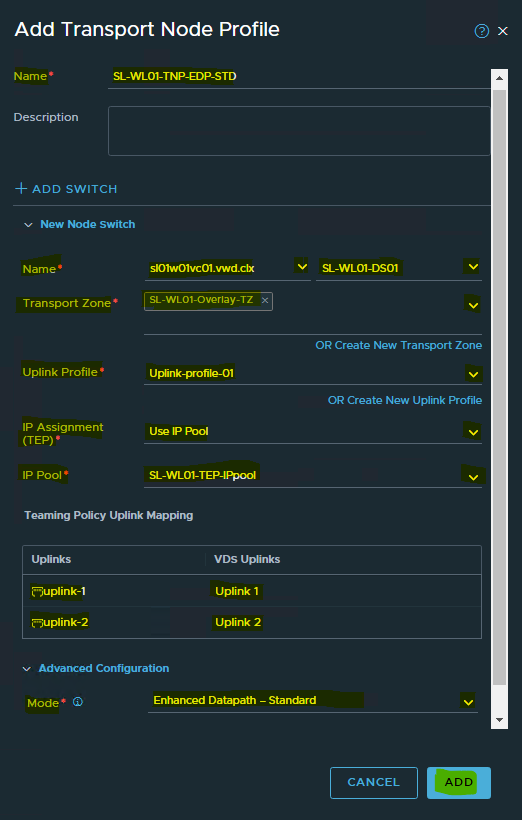

6. Provide the below details in the Add Transport Node Profile window:

Provide a profile Name (SL-WL01-TNP-EDP-STD).

Select vCenter and VDS in the Name.

From the Transport Zone dropdown menu, select the SL-WL01-Overlay-TZ - Overlay transport zone(s) created earlier.

From the Uplink Profile dropdown menu, select the host uplink profile created earlier (Uplink-profile-01).

From the IP Assignment dropdown menu, select Use IP Pool.

From the IP Pool dropdown menu, select the SL-WL01-TEP-IPpool Tunnel EndPoint (TEP) IP Pool created earlier.

Select uplink-1 and uplink-2 in the Teaming Policy Uplink Mapping.

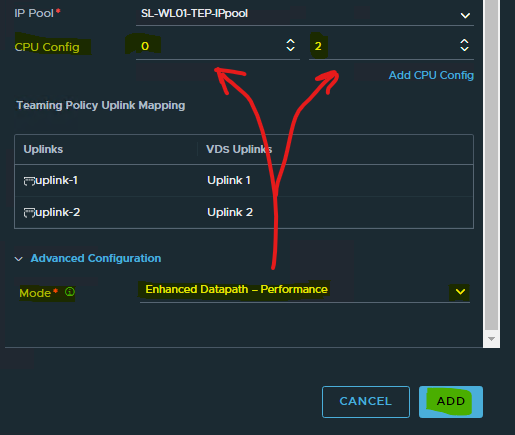

From the Mode dropdown menu, select either Enhanced Datapath - Standard or Enhanced Datapath - Performance.

If you selected the Enhanced Datapath - Performance mode, you must provide the CPU Config.

7. To provide the CPU Config, check to which NUMA node the NIC is connected:

Enable the SSH Access to ESXi server.

Log into ESXi vSphere Command-Line Interface with root permissions.

Run the following commands in ESXi CLI:

To find out the number of NUMA nodes on your host, run the “esxcli hardware memory get” command.

ESXi Host Console

~ esxcli hardware memory get

Physical Memory: 274731274240 Bytes

Reliable Memory: 0 Bytes

NUMA Node Count: 4

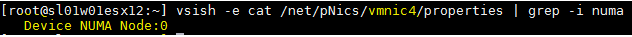

8. To find out the NIC’s affinity, run the following command:

vsish -e cat /net/pNics/<vmnicX>/properties | grep -i numa

Sample:

ESXi CLI

vsish -e cat /net/pNics/vmnic4/properties | grep -i numa

9. In the CPU Config, select the NUMA node and the number of CPU Lcores.

10. Click ADD.

11. On the NSX Installation window, select the Transport Node Profile and click Apply.

This starts the host preparation process, so allow a few minutes for the NSX VIBs to be installed on the hosts and for the transport nodes to be configured.

Click the refresh button as needed, until you see that the status is “Success” and that the status indicators are “Up”.

In the vSphere Cluster:

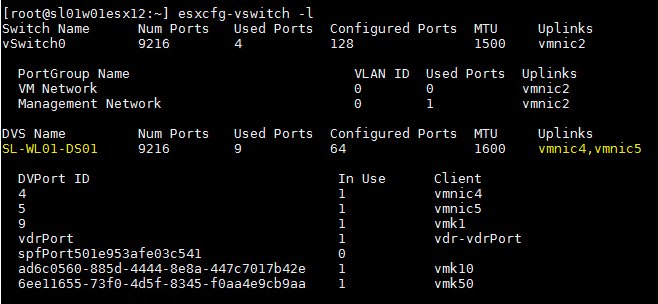

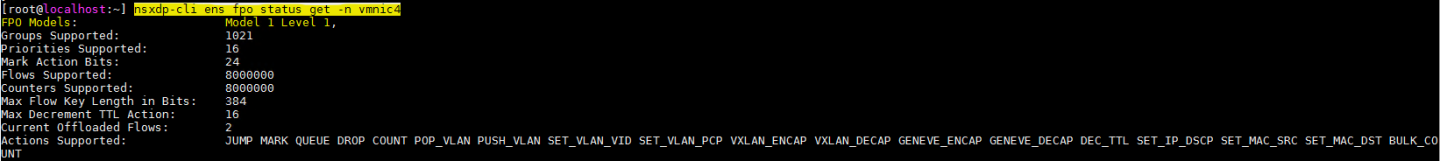
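The NUMA-related steps of the procedure above can be partly automated: the NUMA node count is read from the "esxcli hardware memory get" output, and the CPU Config is then checked against it. The Python sketch below parses the sample output shown earlier and validates a CPU Config entry. The `(numa_node, lcores)` pair structure and the function names are assumptions made for this illustration and do not reflect how NSX stores the setting; NUMA nodes are assumed to be numbered from 0.

```python
import re

# Sample output of "esxcli hardware memory get", as shown in this guide.
SAMPLE_OUTPUT = """\
Physical Memory: 274731274240 Bytes
Reliable Memory: 0 Bytes
NUMA Node Count: 4
"""

PERFORMANCE_MODE = "Enhanced Datapath - Performance"


def numa_node_count(text):
    """Extract the NUMA node count from the command output."""
    match = re.search(r"^\s*NUMA Node Count:\s*(\d+)\s*$", text, re.MULTILINE)
    if match is None:
        raise ValueError("NUMA Node Count not found in the output")
    return int(match.group(1))


def check_cpu_config(mode, cpu_config, node_count):
    """Validate a list of (numa_node, lcores) pairs for the selected mode."""
    problems = []
    if mode != PERFORMANCE_MODE:
        return problems  # CPU Config is only required in Performance mode
    if not cpu_config:
        problems.append("CPU Config is required in Performance mode")
    for node, lcores in cpu_config:
        if not 0 <= node < node_count:
            problems.append(f"NUMA node {node} does not exist on this host")
        if lcores < 1:
            problems.append(f"NUMA node {node} needs at least one Lcore")
    return problems


nodes = numa_node_count(SAMPLE_OUTPUT)
print(nodes)
print(check_cpu_config(PERFORMANCE_MODE, [(0, 2)], nodes))
```

The NUMA node passed in the CPU Config should be the one reported for the NIC by the vsish command in the procedure above.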

Verifying FPO Model 1 Level 1 is Enabled

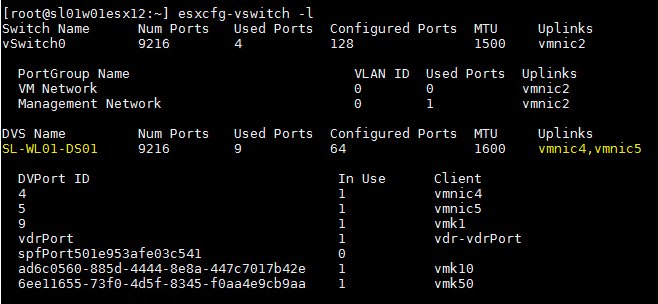

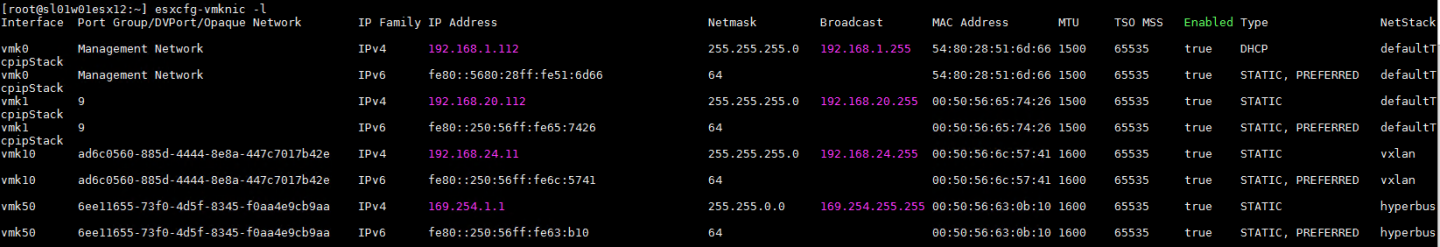

Log into the ESXi vSphere Command-Line Interface with root permissions, and run the following commands in the ESXi CLI to get details on the new VDS switch and VMK interface(s):

ESXi CLI

esxcfg-vswitch -l

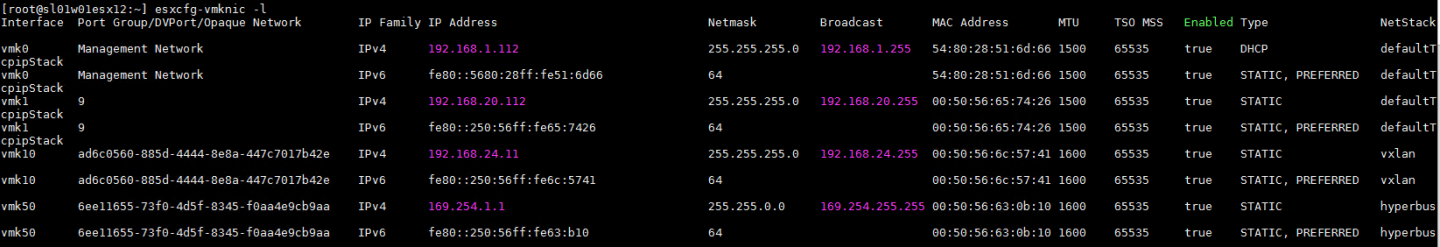

ESXi CLI

esxcfg-vmknic -l

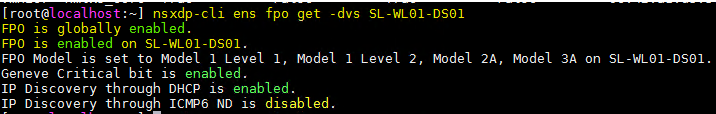

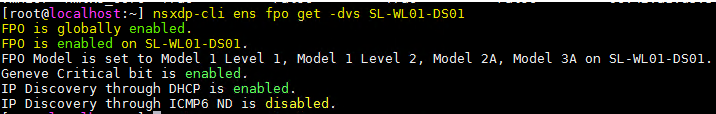

Check the FPO model on the VDS (SL-WL01-DS01).

ESXi CLI

nsxdp-cli ens fpo get -dvs SL-WL01-DS01

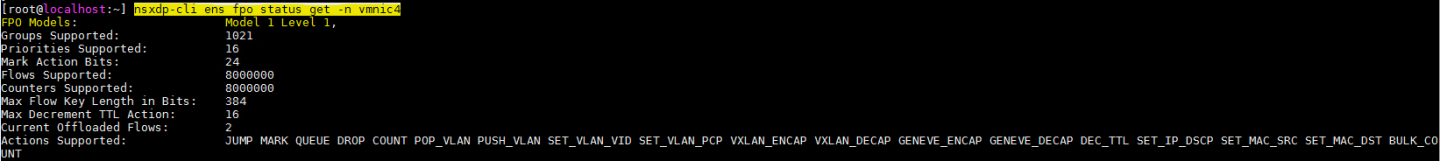

Check the FPO Model on the vmnic4 (in our environment).

ESXi CLI

nsxdp-cli ens fpo status get -n vmnic4

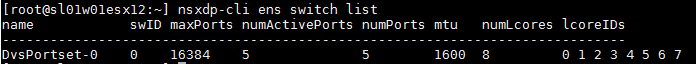

Run the nsxdp-cli command to see the ENS switch.

ESXi CLI

nsxdp-cli ens switch list

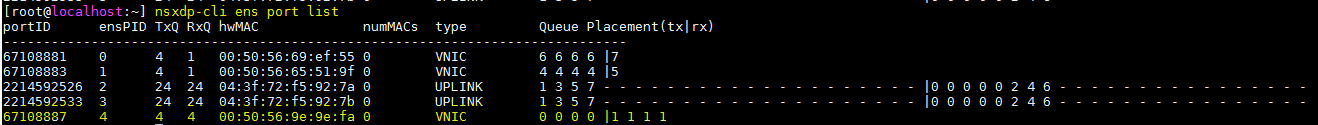

Check the ENS ports.

To check ENS ports in Enhanced Datapath - Performance mode (formerly Poll mode), run the following command:

ESXi CLI

nsxdp-cli ens port list

ESXi CLI

~ nsxdp-cli ens port list

portID ensPID TxQ RxQ hwMAC numMACs type Queue Placement(tx|rx)

------------------------------------------------------------------------------

67108883 0 1 1 00:50:56:6e:bc:a4 0 GENERIC 0 |0

67108884 1 1 1 00:50:56:62:ed:f9 0 GENERIC 0 |0

2214592526 2 1 2 00:00:00:00:00:00 0 UPLINK 0 |0

2214592533 2 1 2 00:00:00:00:00:00 0 UPLINK 0 |0
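When many hosts need to be checked, the port list output can be parsed instead of read by eye. The Python sketch below parses the sample output above and counts the ports per type. It assumes whitespace-separated columns in the order shown in the header line, which may differ between NSX versions; the field names in the resulting dictionaries are illustrative.

```python
from collections import Counter

# Sample output of "nsxdp-cli ens port list", as shown above.
SAMPLE_OUTPUT = """\
portID ensPID TxQ RxQ hwMAC numMACs type Queue Placement(tx|rx)
------------------------------------------------------------------------------
67108883 0 1 1 00:50:56:6e:bc:a4 0 GENERIC 0 |0
67108884 1 1 1 00:50:56:62:ed:f9 0 GENERIC 0 |0
2214592526 2 1 2 00:00:00:00:00:00 0 UPLINK 0 |0
2214592533 2 1 2 00:00:00:00:00:00 0 UPLINK 0 |0
"""


def parse_ens_ports(text):
    """Return one dictionary per port row; header and separator lines are skipped."""
    ports = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows start with a numeric port ID and have at least 7 columns.
        if len(fields) < 7 or not fields[0].isdigit():
            continue
        ports.append({
            "port_id": int(fields[0]),
            "ens_pid": int(fields[1]),
            "tx_queues": int(fields[2]),
            "rx_queues": int(fields[3]),
            "mac": fields[4],
            "type": fields[6],
        })
    return ports


ports = parse_ens_ports(SAMPLE_OUTPUT)
ports_by_type = Counter(port["type"] for port in ports)
print(dict(ports_by_type))
```

In this setup two UPLINK ports are expected, one for each of the two uplinks assigned to the VDS.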

To check ENS ports in Enhanced Datapath - Standard mode (formerly Interrupt mode), run the following command:

ESXi CLI

nsxdp-cli ens port list

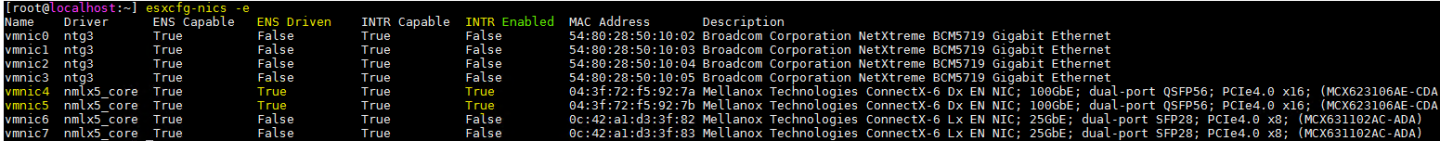

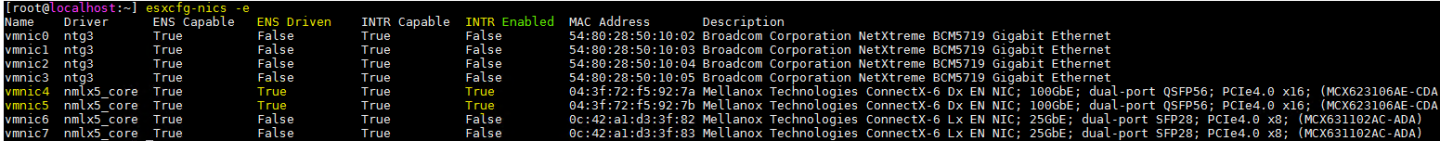

Show the ENS status of the interfaces: make sure that ENS Driven is TRUE, and check whether the Enhanced Datapath - Standard mode is Enabled/Disabled on vmnic4 and vmnic5.

ESXi CLI

esxcfg-nics -e

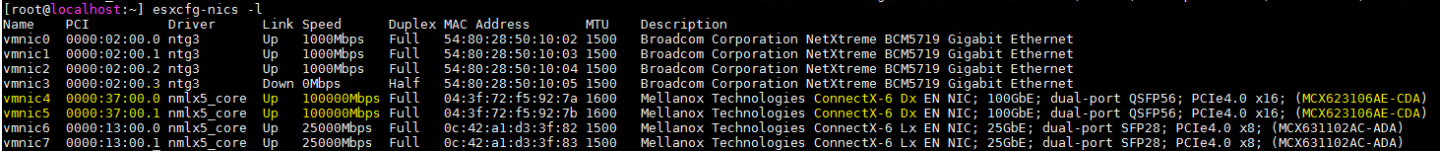

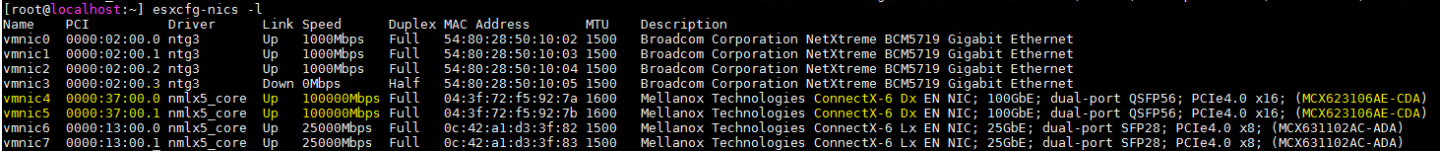

Make sure that vmnic4 and vmnic5 are UP.

ESXi CLI

esxcfg-nics -l

Creating a Segment

An NSX Segment (formerly a Logical Switch) is a representation of layer-2 connectivity across transport nodes (ESXi Hosts), with layer-3 IP reachability between the segments. Each segment is assigned a VNI (virtual network identifier) which is similar to a VLAN ID.

Virtual machines attached to the same segment can communicate with each other, even across separate physical hosts via Geneve tunnels.
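To put the VNI and VLAN ID comparison in numbers: the Geneve header carries a 24-bit VNI field, while an 802.1Q VLAN tag carries a 12-bit VLAN ID. The short Python sketch below works out the resulting identifier spaces. These are protocol field sizes only; the range of VNIs that NSX actually allocates from is narrower and is not covered here.

```python
VLAN_ID_BITS = 12      # 802.1Q VLAN ID field width
GENEVE_VNI_BITS = 24   # Geneve VNI field width

vlan_id_values = 2 ** VLAN_ID_BITS      # all possible VLAN ID values
geneve_vni_values = 2 ** GENEVE_VNI_BITS  # all possible VNI values

print("VLAN ID values:", vlan_id_values)
print("Geneve VNI values:", geneve_vni_values)
print("Ratio:", geneve_vni_values // vlan_id_values)
```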

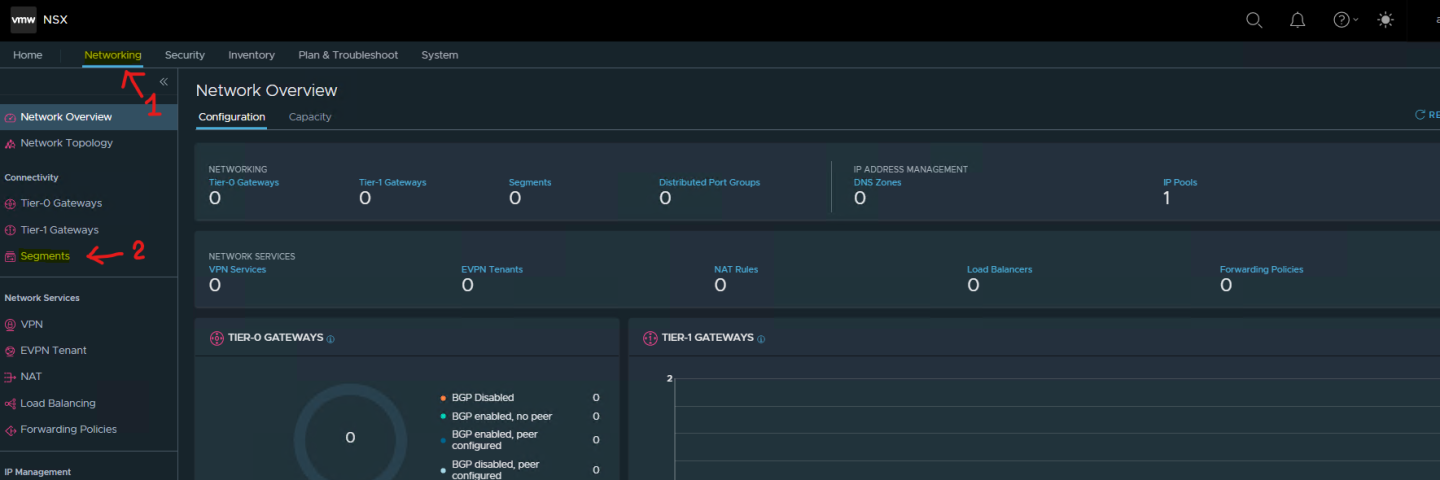

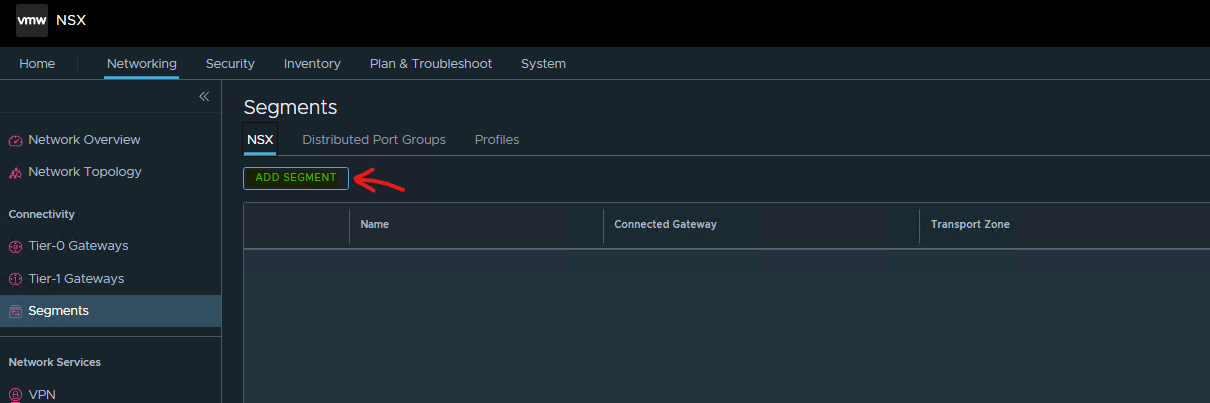

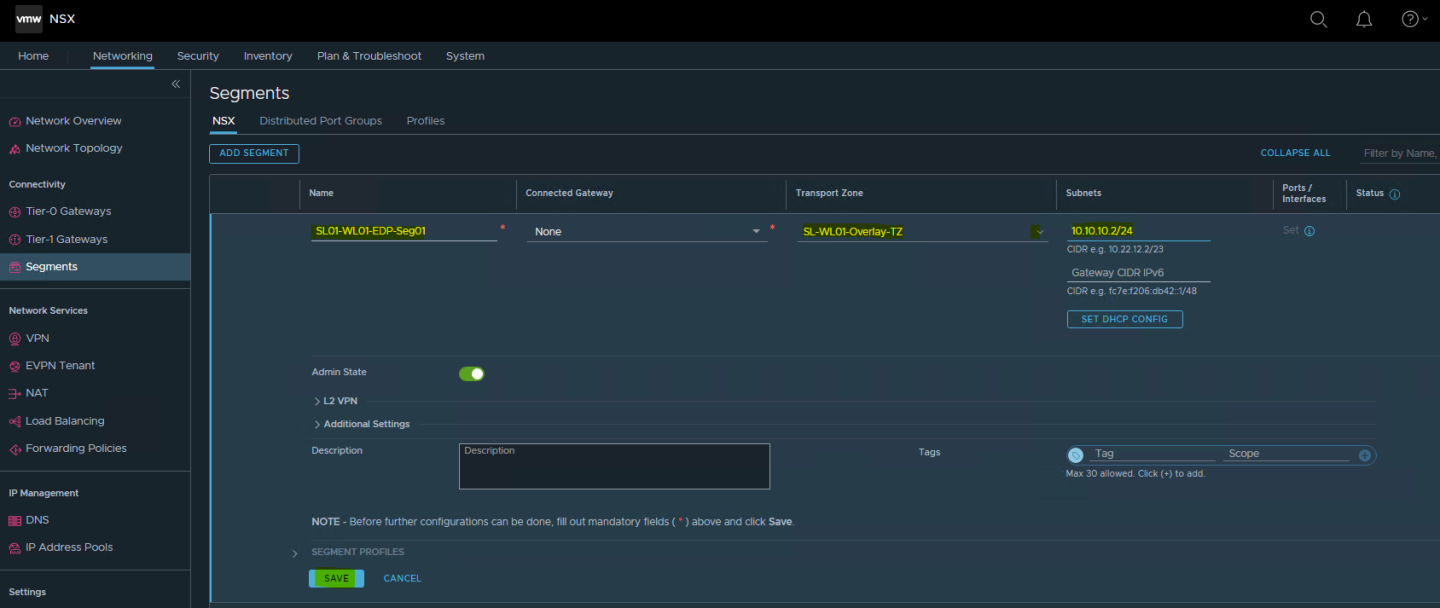

To create the Segment:

Log in to the NSX Manager UI by using the URL "https://<fqdn or IP>".

Navigate to Networking → Segments.

Click ADD SEGMENT.

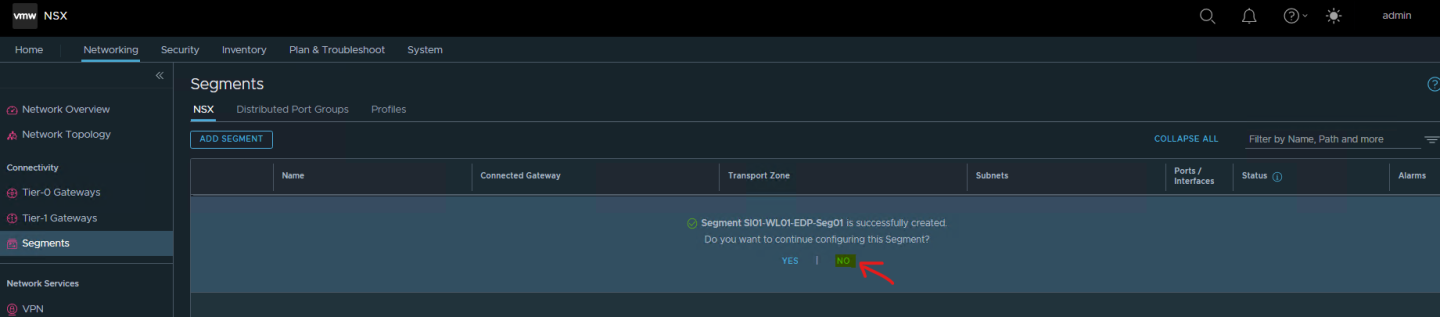

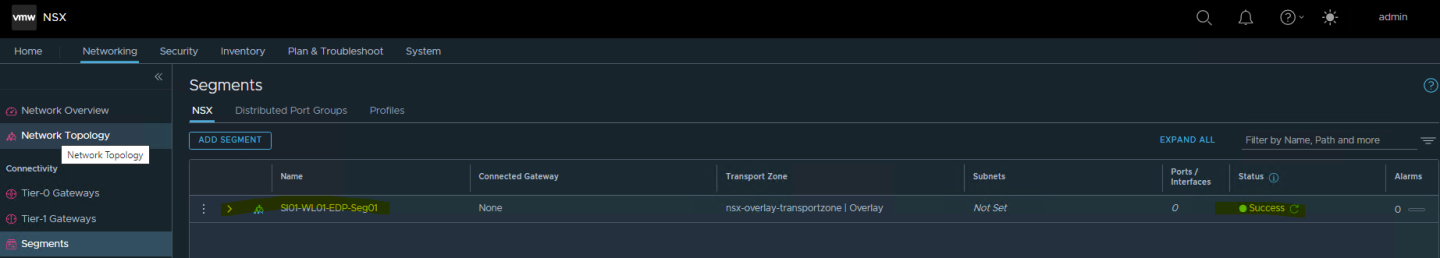

Fill in the Segment Name, Transport Zone, Subnets and VLAN. Click SAVE.

Click NO.

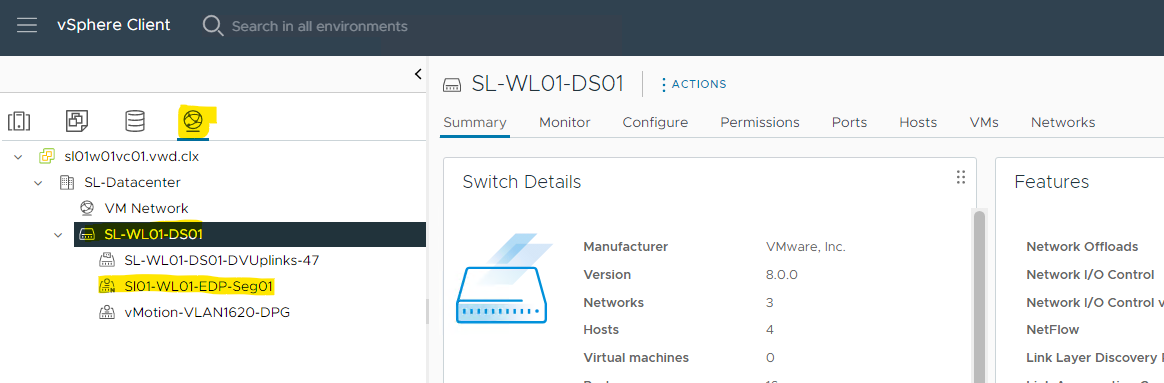

If you log in to vCenter and navigate to Networking → VDS, you will now see the new Segment (Distributed Port Group), to which you can attach a VM.
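The same segment can also be described as a request to the NSX Policy REST API instead of being created in the UI. The sketch below only builds the URL and JSON body and sends nothing. The manager host name, the gateway address and the transport zone path are placeholders assumed for illustration, and the field names should be checked against the NSX API reference for your release.

```python
import json


def build_segment_request(manager, segment_id, transport_zone_path,
                          gateway_cidr, vlan_ids=None):
    """Return (url, body) for a PATCH request that creates or updates a segment."""
    url = f"https://{manager}/policy/api/v1/infra/segments/{segment_id}"
    body = {
        "display_name": segment_id,
        "transport_zone_path": transport_zone_path,
        "subnets": [{"gateway_address": gateway_cidr}],
    }
    if vlan_ids:
        # VLAN IDs are sent as strings; omit the field when no VLAN is used.
        body["vlan_ids"] = [str(v) for v in vlan_ids]
    return url, body


# All values below except the segment name are hypothetical placeholders.
url, body = build_segment_request(
    manager="nsx.example.com",
    segment_id="SL-WL01-EDP-Seg01",
    transport_zone_path="/infra/sites/default/enforcement-points/default/transport-zones/<tz-id>",
    gateway_cidr="192.168.121.1/24",
)
print(url)
print(json.dumps(body, indent=2))
```

Keeping the request construction separate from the HTTP call makes it easy to review the payload before anything is sent to the manager.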

Configuring a Virtual Machine

This section explains how to run a DPDK TestPMD workload in the ENS Flow Processing Offload (FPO) Model 1 Level 1.

To configure the ENS Flow Processing Offload (FPO) Model 0, please see Appendix A.

Adding a Network Adapter to a VM

Locate the Virtual Machine in the vSphere Web Client.

Select a data center, folder, cluster, resource pool, or a host, and click the Related Objects tab.

Click Virtual Machines, and select the virtual machine from the list.

Shut down the guest OS on the virtual machine. The VM runs the Ubuntu 20.04 operating system.

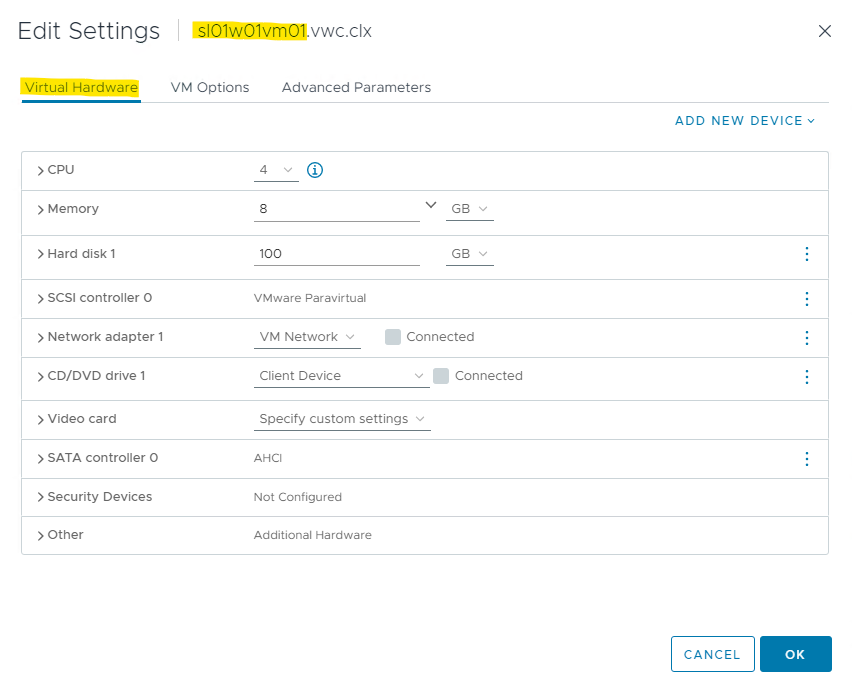

For optimal performance, and to attach the VM to the Segment created earlier, select the VM and go to "Edit Settings" → Virtual Hardware.

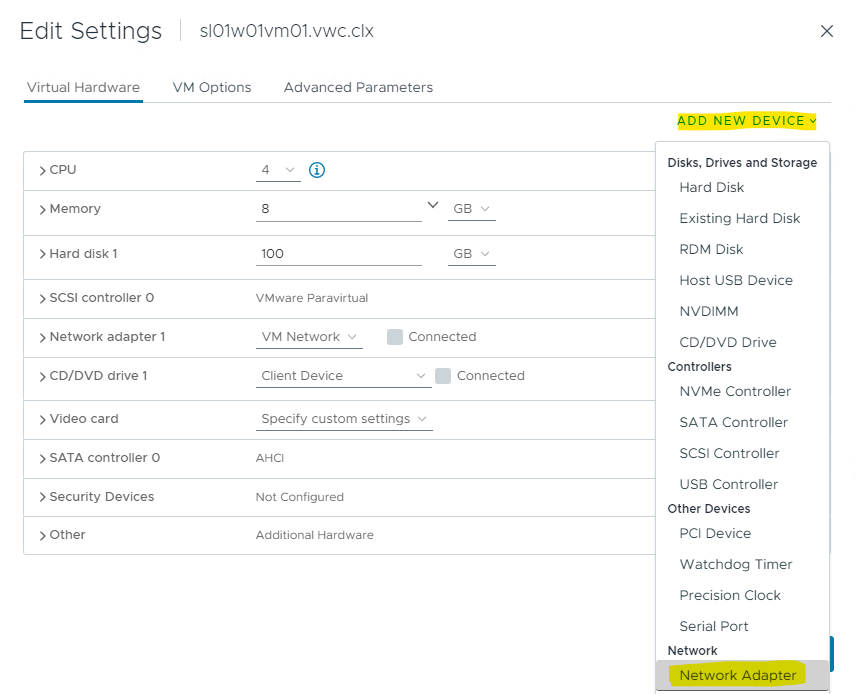

Click ADD NEW DEVICE → Network adapter.

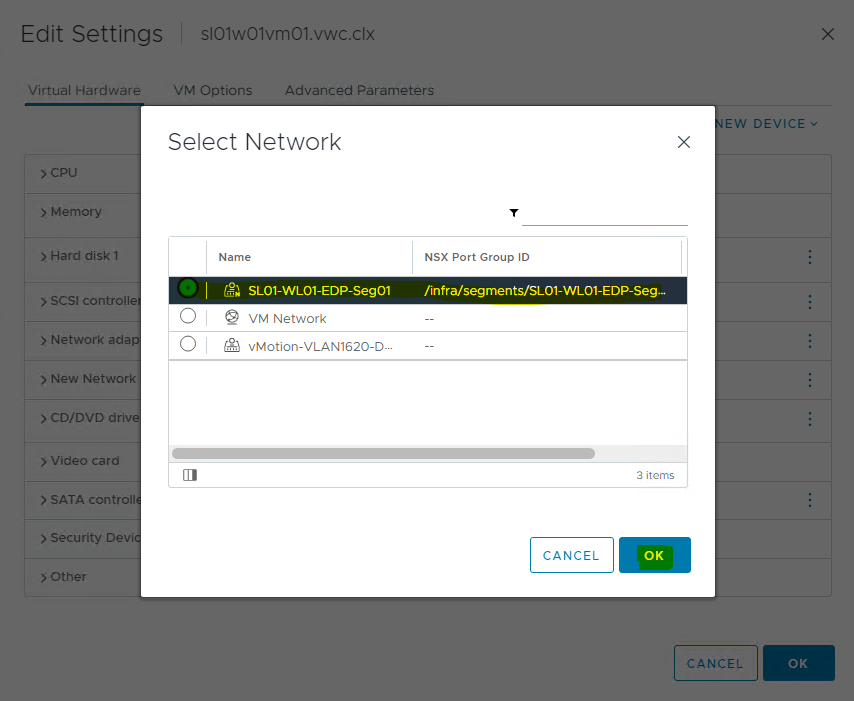

Click on the NEW Network drop-down menu, and select Browse...

Select the relevant Segment. In this case, the group is SL-WL01-EDP-Seg01. Click OK.

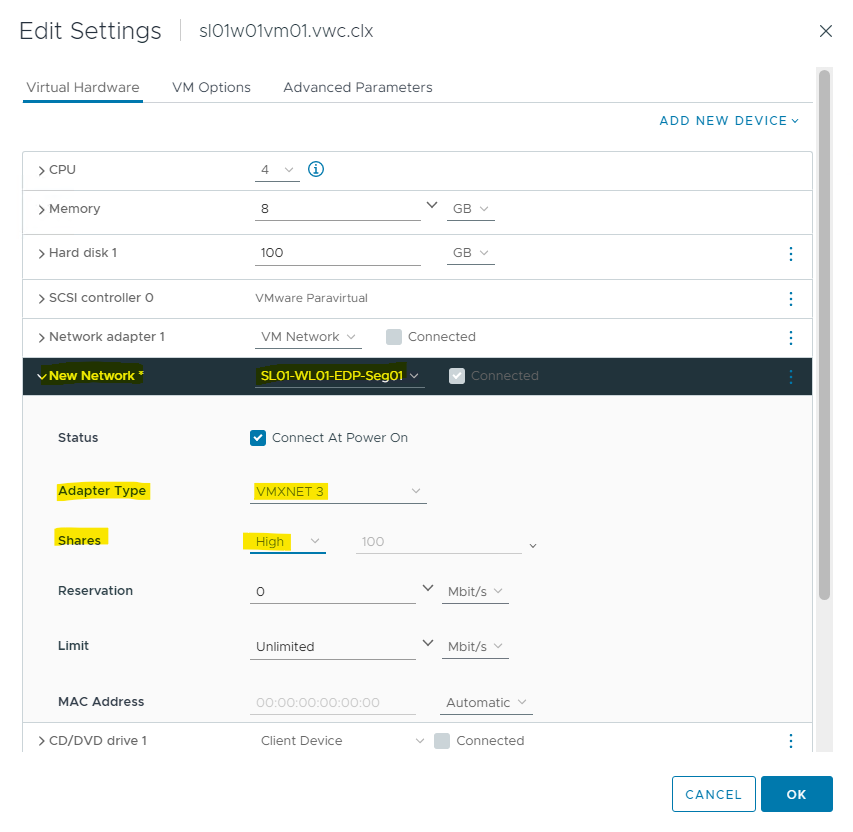

Expand the New Network section.

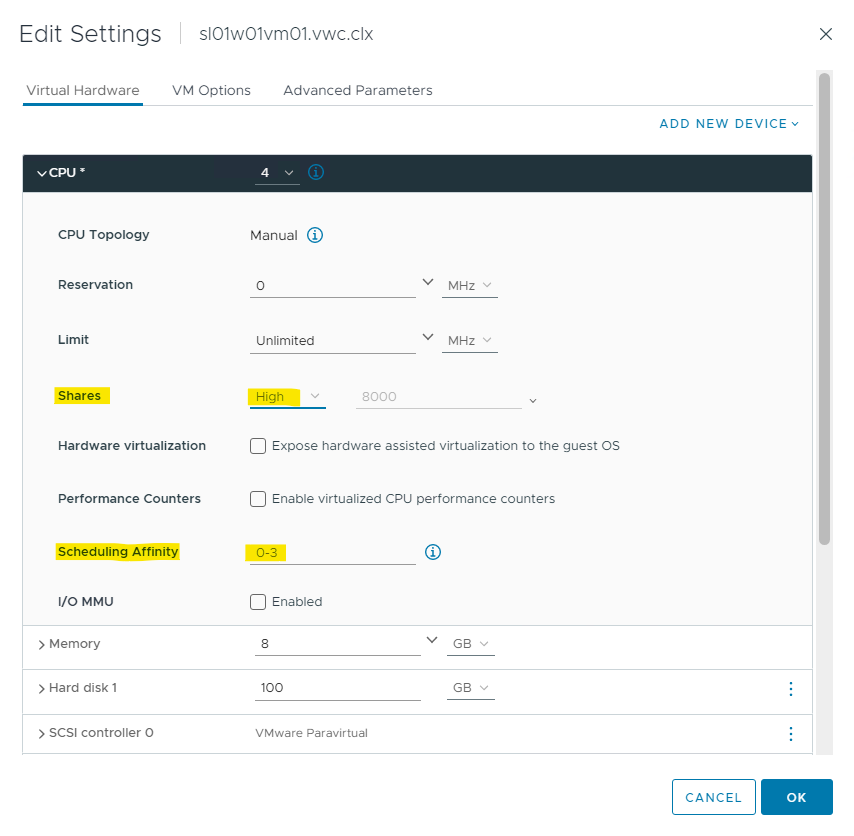

Verify that the Adapter Type is VMXNET3, and change Shares to High.

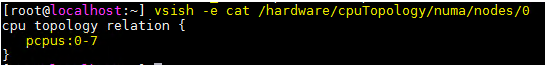

For optimal performance, select logical processor affinity for this VM. To check the range of logical processors, run the following command.

ESXi CLI

vsish -e cat /hardware/cpuTopology/numa/nodes/0

Select the VM, right-click it, and select Edit Settings.

Set Shares and Scheduling Affinity.

Sample:

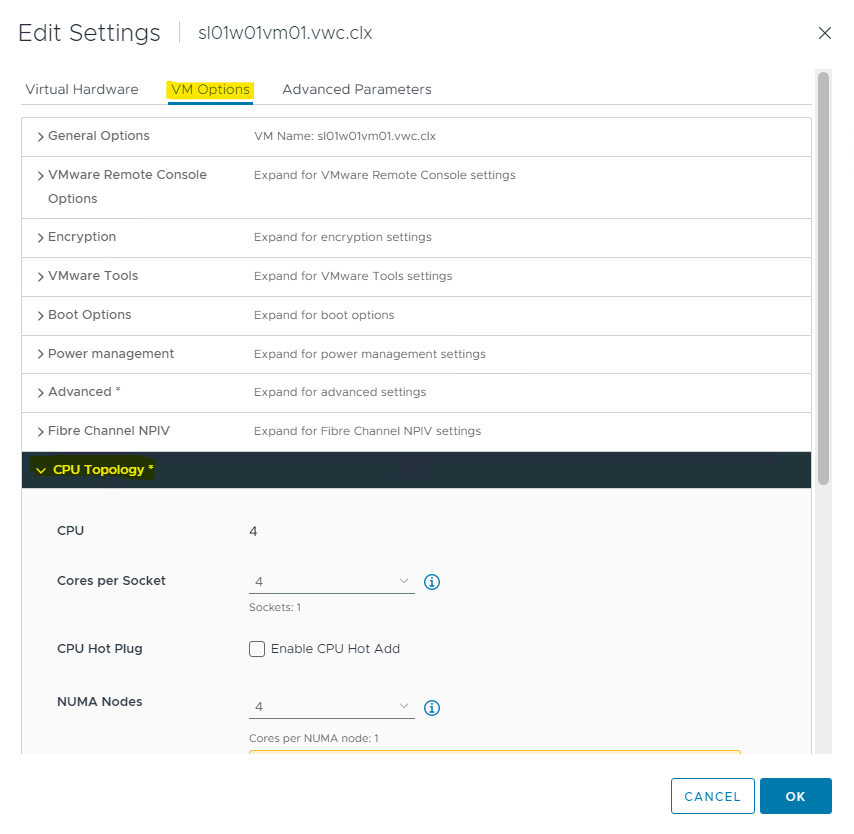

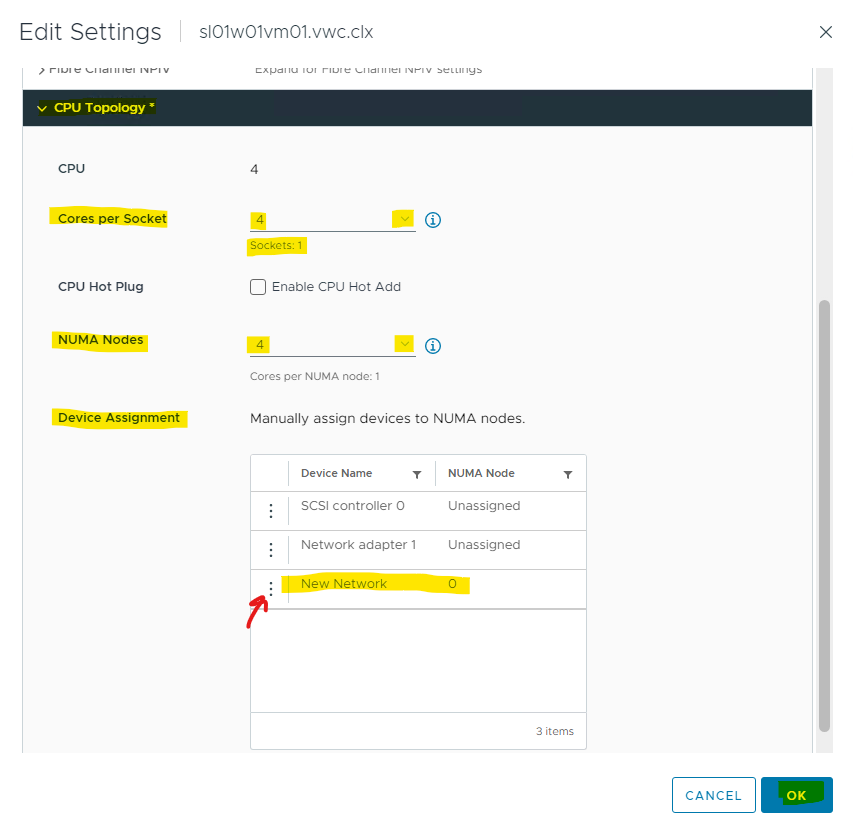
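The Scheduling Affinity field takes a string of logical processor IDs in which ranges are written with a hyphen and values are separated by commas. As a small convenience, the sketch below converts a list of IDs into that form. The function name and the sample IDs are hypothetical; use the IDs that vsish reports for the NUMA node local to the NIC.

```python
def to_affinity_ranges(cpu_ids):
    """Collapse logical processor IDs into a string such as '0-3,8-9,12'."""
    ids = sorted(set(cpu_ids))
    ranges = []
    start = prev = None
    for cpu in ids:
        if start is None:
            start = prev = cpu          # first ID opens a range
        elif cpu == prev + 1:
            prev = cpu                  # contiguous ID extends the current range
        else:
            ranges.append((start, prev))
            start = prev = cpu          # gap found: close the range, open a new one
    if start is not None:
        ranges.append((start, prev))
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


# Hypothetical list of logical processors on one NUMA node.
affinity = to_affinity_ranges([0, 1, 2, 3, 8, 9, 12])
print(affinity)
```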

Select the VM Options tab. Expand the CPU Topology section.

Select Cores per Socket, NUMA Node and Device Assignment for New Network.

Example:

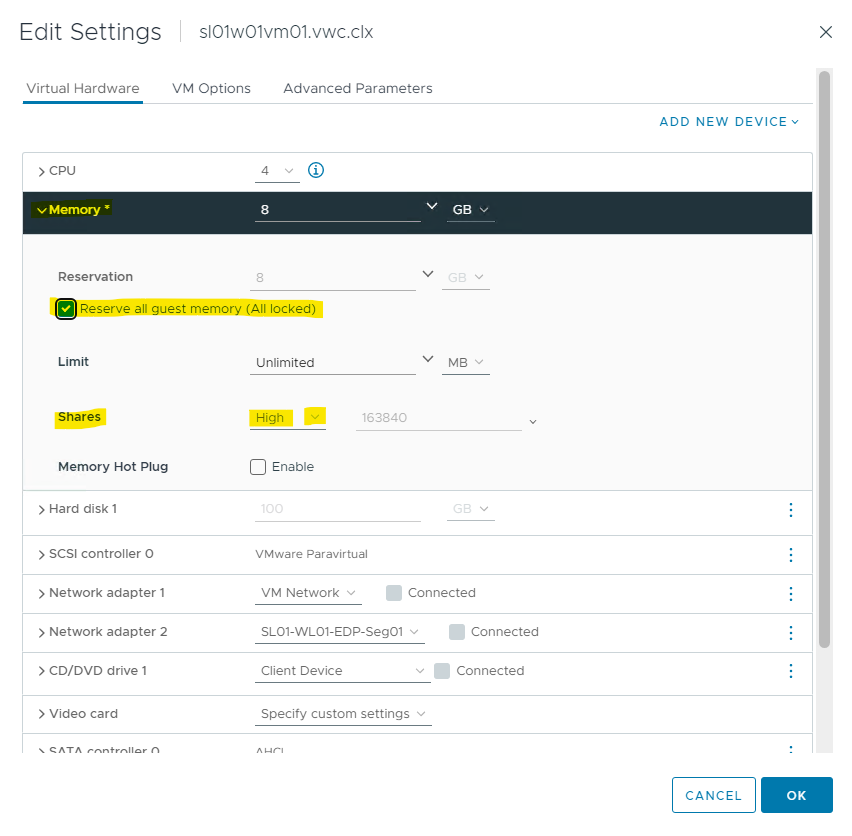

Select Reserve all guest memory and set Memory Shares to High.

Enable Huge Page Support.

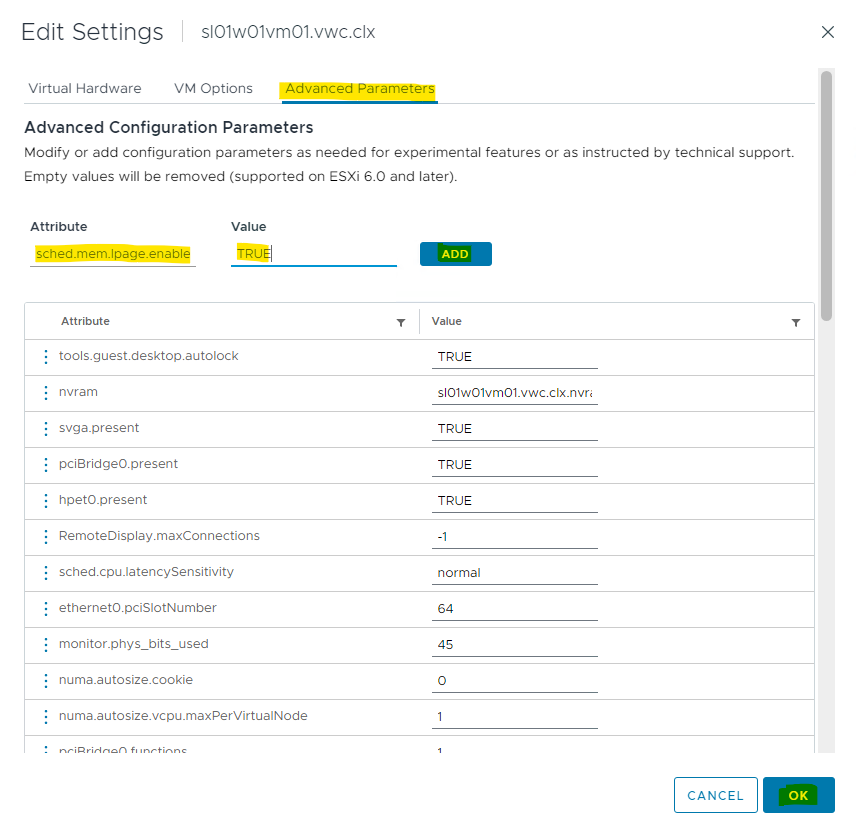

The virtual infrastructure supports backing Guest OS memory with 1GB Huge Pages for memory intensive and large memory DPDK applications.

To use 1GB Huge Pages to back the DPDK application's guest operating system memory, set sched.mem.lpage.enable1GPage = TRUE on the VM.

Important: Before you enable Huge Pages, consider the resource availability for other workloads in the cluster and for cluster operations such as HA and DRS.

To set Huge Pages, perform the following steps:

Click VM Options, and select Advanced.

Scroll down to Configuration Parameters, and select Edit Settings.

Click Add Configuration Params, and enter sched.mem.lpage.enable1GPage = TRUE. Click OK.

Power on the VM.

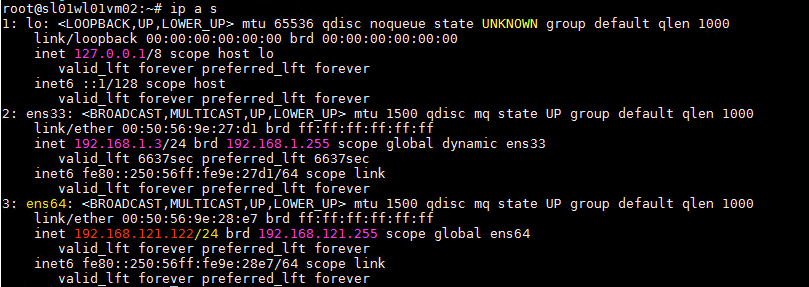

Set up a Static IP on the second VM's interface.

Check the ENS ports on the ESXi server.

ESXi CLI

nsxdp-cli ens port list

Important: Create a VM on the second ESXi host, and repeat the steps in Adding a Network Adapter to a VM.

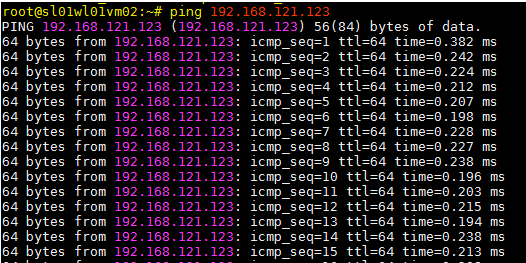

Run Ping.

Check the Offload traffic running on the ESXi host:

ESXi CLI

[root@sl01w01esx12:~] nsxdp-cli ens flow-table dump -l 0

FT dstMAC srcMAC VLAN srcPort srcIP dstIP proto VNI srcPort/type dstPort/code Actions hwHits hwBytes

------------------------------------------------------------------------------------------------------------------------------------------------------

L4 00:50:56:9e:64:1a 00:50:56:9e:28:e7 0 3 192.168.121.122 192.168.121.123 1 66560 8 0 bmap:0x1400080 inval(s):131 cg:1091 dp:0x4 len:704; GENEVE DECAP; DFW on dstPort; 0 0

[root@sl01w01esx12:~] nsxdp-cli ens flow-table dump -l 1

FT dstMAC srcMAC VLAN srcPort srcIP dstIP proto VNI srcPort/type dstPort/code Actions hwHits hwBytes

------------------------------------------------------------------------------------------------------------------------------------------------------

L4 00:50:56:9e:28:e7 00:50:56:9e:64:1a 0 4 192.168.121.123 192.168.121.122 1 0 0 0 bmap:0x20000c0 inval(s):119 cg:1091 dp:0x3 len:814; DFW on srcPort; VNI: 66560; GENEVE ENCAP VNI: 66560; 0 0
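A flow-table row can be split into named fields to make it easier to read. In the first entry above, proto 1 is ICMP and type 8 is an echo request, which matches the ping started in the previous step. The sketch below assumes that the first eleven columns are single tokens and that the last two tokens are hwHits and hwBytes, as in the sample; the key names are illustrative.

```python
# First data row of "nsxdp-cli ens flow-table dump -l 0" from the example above.
FLOW_ROW = (
    "L4 00:50:56:9e:64:1a 00:50:56:9e:28:e7 0 3 192.168.121.122 192.168.121.123 "
    "1 66560 8 0 bmap:0x1400080 inval(s):131 cg:1091 dp:0x4 len:704; "
    "GENEVE DECAP; DFW on dstPort; 0 0"
)

COLUMNS = ["ft", "dst_mac", "src_mac", "vlan", "src_port", "src_ip", "dst_ip",
           "proto", "vni", "sport_or_type", "dport_or_code"]


def parse_flow_row(row):
    """Split one flow-table row into fixed columns, actions and hardware counters."""
    tokens = row.split()
    entry = dict(zip(COLUMNS, tokens[:len(COLUMNS)]))
    # The two trailing tokens are the hardware hit and byte counters.
    entry["hw_hits"], entry["hw_bytes"] = int(tokens[-2]), int(tokens[-1])
    # Everything in between is the free-form, semicolon-separated action list.
    actions = " ".join(tokens[len(COLUMNS):-2])
    entry["actions"] = [a.strip() for a in actions.split(";") if a.strip()]
    return entry


entry = parse_flow_row(FLOW_ROW)
print(entry["proto"], entry["vni"], entry["actions"])
```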

Preparing the Driver for the DPDK Application

To prepare the driver for the DPDK, run the following steps in VM OS:

Build DPDK v20.05

Clone DPDK:

VM console

cd /root

git clone git://dpdk.org/dpdk dpdk_repo

cd dpdk_repo

git checkout v20.05

Install meson:

VM console

apt install python3-pip

sudo update-alternatives --install /usr/bin/python python /usr/bin/python3 1

pip3 install meson

Install ninja:

VM console

cd /tmp

wget https://github.com/ninja-build/ninja/releases/download/v1.10.2/ninja-linux.zip

apt install unzip

unzip ninja-linux.zip

cp ninja /usr/local/bin/

Build DPDK:

VM console

cd /root/dpdk_repo

meson -Dtests=false -Ddisable_drivers=net/ark,net/atlantic,net/avp,net/axgbe,net/bond,net/i*,net/p*,net/netvsc --prefix=`pwd`/dpdk-install build

ninja -C build

Prepare the Driver

Before the driver preparation, retrieve the second network interface name and ID.

To check the interface names and identify the interface that does not carry the management IP, follow this example:

VM console

~# ip a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:bc:e3:6c brd ff:ff:ff:ff:ff:ff

inet 10.7.215.62/24 brd 10.7.215.255 scope global dynamic ens160

valid_lft 4602sec preferred_lft 4602sec

inet6 fe80::250:56ff:febc:e36c/64 scope link

valid_lft forever preferred_lft forever

3: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:bc:5b:10 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.62/24 brd 192.168.1.255 scope global ens192

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:febc:5b10/64 scope link

valid_lft forever preferred_lft forever

In our case, ens192 is the second adapter.

Check the PCI ID of the adapter.

Example:

VM console

~# lspci | grep VMXNET3

03:00.0 Ethernet controller: VMware VMXNET3 Ethernet Controller (rev 01)

0b:00.0 Ethernet controller: VMware VMXNET3 Ethernet Controller (rev 01)

In our case, it is 0b:00.0.
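With two VMXNET3 adapters in the VM, lspci alone does not say which PCI address belongs to ens192. One way to confirm the mapping, sketched below, is to follow the interface's device link in sysfs. The function names are illustrative, and the sysfs lookup only works while the interface is still bound to the kernel driver.

```python
import os

# Output of "lspci | grep VMXNET3" captured as text (copied from the example above).
LSPCI_SAMPLE = """\
03:00.0 Ethernet controller: VMware VMXNET3 Ethernet Controller (rev 01)
0b:00.0 Ethernet controller: VMware VMXNET3 Ethernet Controller (rev 01)
"""


def vmxnet3_addresses(lspci_text):
    """Return the PCI addresses of all VMXNET3 devices listed by lspci."""
    return [line.split()[0] for line in lspci_text.splitlines() if "VMXNET3" in line]


def pci_address_of(ifname, sysfs_root="/sys/class/net"):
    """Resolve an interface name to its PCI address via sysfs; None if not found."""
    link = os.path.join(sysfs_root, ifname, "device")
    if not os.path.exists(link):
        return None
    # The device link points at the PCI device directory, named after its address.
    return os.path.basename(os.path.realpath(link))


addresses = vmxnet3_addresses(LSPCI_SAMPLE)
print(addresses)
print(pci_address_of("ens192"))  # e.g. 0000:0b:00.0 inside the VM, None elsewhere
```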

To prepare the driver, refer to the following example:

VM console

~# ifconfig ens192 0

~# /root/dpdk_repo/usertools/dpdk-devbind.py -u 0b:00.0

~# modprobe uio

~# insmod /root/dpdk-kmods/linux/igb_uio/igb_uio.ko

~# /root/dpdk_repo/usertools/dpdk-devbind.py -b igb_uio 0b:00.0

Verification

For verification, examine the network devices using the DPDK-compatible driver:

VM console

~# /root/dpdk_repo/usertools/dpdk-devbind.py --status-dev net

Network devices using DPDK-compatible driver

============================================

0000:0b:00.0 'VMXNET3 Ethernet Controller 07b0' drv=igb_uio unused=vmxnet3,vfio-pci

Network devices using kernel driver

===================================

0000:03:00.0 'VMXNET3 Ethernet Controller 07b0' if=ens160 drv=vmxnet3 unused=igb_uio,vfio-pci *Active*
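The same verification can be scripted by extracting the drv= field for each device from the status output. This is a minimal sketch that assumes the line layout shown above; the function name is hypothetical and is not part of dpdk-devbind.py.

```python
import re

# Output of "dpdk-devbind.py --status-dev net" captured as text (from the example above).
STATUS_SAMPLE = """\
Network devices using DPDK-compatible driver
============================================
0000:0b:00.0 'VMXNET3 Ethernet Controller 07b0' drv=igb_uio unused=vmxnet3,vfio-pci

Network devices using kernel driver
===================================
0000:03:00.0 'VMXNET3 Ethernet Controller 07b0' if=ens160 drv=vmxnet3 unused=igb_uio,vfio-pci *Active*
"""

# A device line starts with a full PCI address followed by a quoted description.
DEVICE_RE = re.compile(
    r"^(?P<addr>[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]) '.*?'(?P<rest>.*)$"
)


def bound_drivers(status_text):
    """Map each PCI address to the driver it is currently bound to."""
    result = {}
    for line in status_text.splitlines():
        match = DEVICE_RE.match(line.strip())
        if not match:
            continue  # section titles, separators and blank lines
        drv = re.search(r"\bdrv=(\S+)", match.group("rest"))
        result[match.group("addr")] = drv.group(1) if drv else None
    return result


drivers = bound_drivers(STATUS_SAMPLE)
print(drivers)
```

In this setup the check passes when 0000:0b:00.0 maps to igb_uio while the management adapter stays on vmxnet3.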

Appendix A - Enable FPO Model 0

To Enable FPO Model 0, perform the following steps:

Check the FPO model.

Log into the ESXi vSphere Command-Line Interface with root permissions, and run the following commands in the ESXi CLI to view information about the new N-VDS switch and VMK interface(s).

ESXi CLI

esxcfg-vswitch -l

ESXi Host Console

esxcfg-vmknic -l

Check the FPO model on the VDS (SL-WL01-DS01) switch.

ESXi CLI

~ nsxdp-cli ens fpo get -dvs SL-WL01-DS01

Check the running FPO Model on the vmnic4 (in our environment).

ESXi Host Console

nsxdp-cli ens fpo status get -n vmnic4

Enable FPO Model 0.

Enable Model 0.

ESXi CLI

~ nsxdp-cli ens fpo set -dvs SL-WL01-DS01 --disable

The new setting will take effect after reactivating ENS.

Run the nsxdp-cli command to see the ENS switch.

ESXi CLI

~ nsxdp-cli ens switch list

name swID maxPorts numActivePorts numPorts mtu numLcores lcoreIDs

------------------------------------------------------------------------------

DvsPortset-0 0 16384 3 3 9000 1 0

Disable ENS by running the following command, and wait 5-10 seconds.

ESXi CLI

~ esxcfg-vswitch -Y DvsPortset-0

Enable the ENS.

To enable ENS in Enhanced Datapath - Performance mode (formerly Poll mode), run the following command.

ESXi Host Console

~ esxcfg-vswitch -y DvsPortset-0

To enable ENS in Enhanced Datapath - Standard mode (formerly Interrupt mode), run the following command.

ESXi Host Console

~ esxcfg-vswitch -y -i DvsPortset-0

Check the FPO model.

ESXi CLI

~ nsxdp-cli ens fpo get -dvs SL-WL01-DS01

FPO is globally enabled.

FPO is disabled on SL-WL01-DS01.

FPO Model is set to Model 1 Level 1 but disabled on SL-WL01-DS01.

Geneve Critical bit is enabled.

IP Discovery through DHCP is enabled.

IP Discovery through ICMP6 ND is disabled.

Check the ENS ports.

To check the ENS ports in Enhanced Datapath - Standard mode (formerly Interrupt mode), run the following command.

ESXi CLI

~ nsxdp-cli ens port list

portID ensPID TxQ RxQ hwMAC numMACs type Queue Placement(tx|rx)

------------------------------------------------------------------------------

67108884 0 4 1 00:50:56:6c:57:41 0 VNIC 4 4 4 4 |5

67108885 1 4 1 00:50:56:63:0b:10 0 VNIC 2 2 2 2 |3

2214592528 2 24 24 04:3f:72:f5:92:6b 0 UPLINK 1 3 5 7 - - - - - - - - - - - - - - - - - - - - |0 0 0 0 0 2 4 6 - - - - - - - - - - - - - - - -

2214592526 3 24 24 04:3f:72:f5:92:6a 0 UPLINK 1 3 5 7 - - - - - - - - - - - - - - - - - - - - |0 0 0 0 0 2 4 6 - - - - - - - - - - - - - - - -

Show the ENS status of the interfaces. Make sure that ENS Driven is TRUE on vmnic4 and vmnic5.

ESXi CLI

esxcfg-nics -e

Check that vmnic4 and vmnic5 are UP.

ESXi CLI

esxcfg-nics -l

Proceed to the steps in the Configuring a Virtual Machine section.

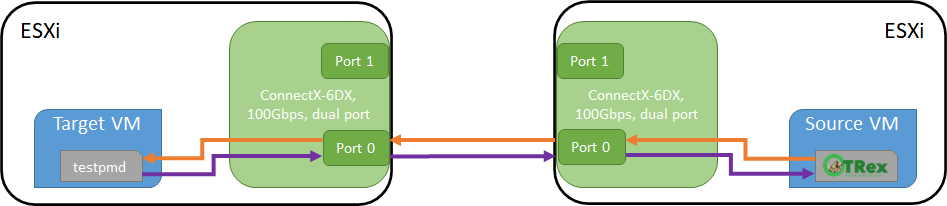

Appendix B - Performance Testing


Disclaimer: The performance results listed in this document are indicative, and should not be considered formal performance targets for NVIDIA products.

Setup

Setup Specs:

Intel(R) Xeon(R) Gold 6130 CPU @ 2.10GHz, 16 cores, 2 NUMA nodes

RAM 96 GB

NIC ConnectX-6 Dx dual-port (yet the traffic goes only to a single port with 100 Gb/s)

Driver v4.23.0.36

Firmware v22.34.1002

VMware ESXi 8.0.0 build-20513097

VMware vCenter 8.0.0 build-20920323

VMware NSX 4.0.1.1.0.20598726

DPDK & testpmd v21.05 with 5tswap forward-mode

TRex v3.02 as a packet generator

The environment was optimized according to this document.

Commands

On the testpmd side:

In the test lab, testpmd uses 4 forwarding cores and 4 RX/TX queues.

VM console

# cd dpdk/build/app/

# ./dpdk-testpmd -l 0-5 --master-lcore=0 -w 0000:0b:00.0,rxq_cqe_comp_en=1,mprq_en=1,rxqs_min_mprq=1 --socket-mem=1024 -- --burst=64 --txd=2048 --rxd=2048 --mbcache=512 --rxq=4 --txq=4 --nb-cores=4 --forward-mode=5tswap -i -a --rss-udp --port-topology=loop
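The core and queue options in this command have to agree with each other: testpmd reserves the master lcore, so --nb-cores cannot exceed the number of remaining lcores in the -l list. The sketch below checks that relationship for the values used above. The helper names are hypothetical and are not part of DPDK.

```python
def expand_lcores(spec):
    """Expand an lcore list such as '0-5' or '0,2-3' into a list of core IDs."""
    cores = []
    for part in spec.split(","):
        if "-" in part:
            low, high = part.split("-")
            cores.extend(range(int(low), int(high) + 1))
        else:
            cores.append(int(part))
    return cores


def check_testpmd_cores(lcore_spec, master, nb_cores, rxq):
    """Report whether nb_cores fits into the lcores left after the master core."""
    workers = [c for c in expand_lcores(lcore_spec) if c != master]
    return {
        "workers_available": len(workers),
        "fits": nb_cores <= len(workers),
        "queues_per_core": rxq / nb_cores,
    }


# Values from the command above: -l 0-5 --master-lcore=0 --nb-cores=4 --rxq=4
report = check_testpmd_cores("0-5", master=0, nb_cores=4, rxq=4)
print(report)
```

With these values, five worker lcores are available, four are used for forwarding, and each forwarding core polls one RX queue.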

On the TRex side:

VM console

# cd v3.2/

# nohup ./t-rex-64 --no-scapy-server --no-ofed-check -i -c 8 &

# ./trex-console

> start -f udp_64_simple.py -m 2mpps -p 0

> tui

udp_64_simple.py file:

VM console

from trex_stl_lib.api import *

class STLS1(object):

    def create_stream(self):
        pkt = Ether()/IP(src="16.0.0.1",dst="48.0.0.1")/UDP(dport=12)/(22*'x')

        vm = STLScVmRaw([
            STLVmFlowVar(name="v_port",
                         min_value=4337,
                         max_value=5337,
                         size=2, op="inc"),
            STLVmWrFlowVar(fv_name="v_port",
                           pkt_offset="UDP.sport"),
            STLVmFixChecksumHw(l3_offset="IP", l4_offset="UDP", l4_type=CTRexVmInsFixHwCs.L4_TYPE_UDP),
        ])

        return STLStream(packet=STLPktBuilder(pkt=pkt, vm=vm),
                         mode=STLTXCont(pps=8000000))

    def get_streams(self, direction=0, **kwargs):
        # create 1 stream
        return [self.create_stream()]

# dynamic load - used for trex console or simulator
def register():
    return STLS1()
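Two properties of this stream are worth working out: how many distinct flows it generates, which determines how well RSS can spread the load across queues, and how the frame size relates to the theoretical packet rate of a 100 Gb/s link. The sketch below is a back-of-the-envelope calculation, not a measurement. Whether the 4-byte FCS counts toward the 64 bytes depends on how the generator builds the frame, so both cases are computed.

```python
ETH_HEADER, IP_HEADER, UDP_HEADER, FCS = 14, 20, 8, 4
PREAMBLE_AND_IFG = 20  # 8-byte preamble/SFD plus 12-byte inter-frame gap


def frame_bytes(payload_len):
    """Length of the Ether/IP/UDP frame built in the script, without FCS."""
    return ETH_HEADER + IP_HEADER + UDP_HEADER + payload_len


def max_pps(frame_with_fcs, link_bps):
    """Theoretical packets per second for a frame size on an Ethernet link."""
    return link_bps / ((frame_with_fcs + PREAMBLE_AND_IFG) * 8)


# The flow variable increments the UDP source port from 4337 to 5337 inclusive.
flows = 5337 - 4337 + 1

built = frame_bytes(22)  # 22 bytes of 'x' payload, as in the script
link = 100_000_000_000   # 100 Gb/s

print(flows, built)
print(int(max_pps(built, link)))        # if the 64 bytes already include the FCS
print(int(max_pps(built + FCS, link)))  # if the FCS is added on top
```

Every packet has the same IP addresses and destination port, so the source port is the only field that varies between flows for RSS hashing.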

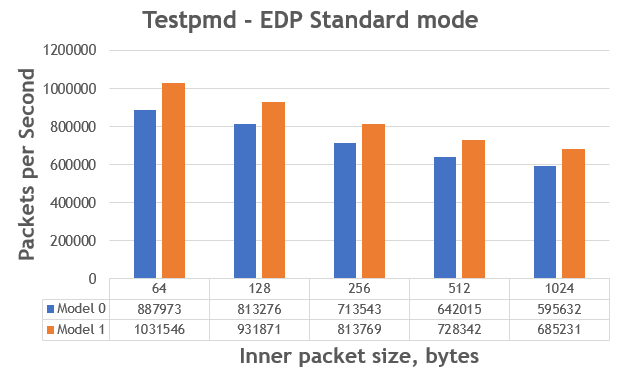

Overlay Results

Conclusion

The benchmark results in this performance study show the advantage of the Model 1 configuration over Model 0 in terms of MPPS.

Model 1 provides up to 16% performance improvement compared to Model 0.

The ConnectX-6 Dx network adapter makes it possible to offload the entire data path to the ConnectX hardware. This relieves the CPU of data communication tasks and yields a significant performance boost, which is critical in the new era of accelerated computing with its massive data transfers.

Authors

|

Boris Kovalev

Boris Kovalev has worked for the past several years as a Solutions Architect, focusing on NVIDIA Networking/Mellanox technology, and is responsible for complex machine learning, Big Data and advanced VMware-based cloud research and design. Boris previously spent more than 20 years as a senior consultant and solutions architect at multiple companies, most recently at VMware. He has written multiple reference designs covering VMware, machine learning, Kubernetes, and container solutions, which are available at the Mellanox Documents website. |