DOCA Virtio-net Service Guide

This guide provides instructions on how to use the DOCA virtio-net service container on top of the NVIDIA® BlueField®-3 networking platform.

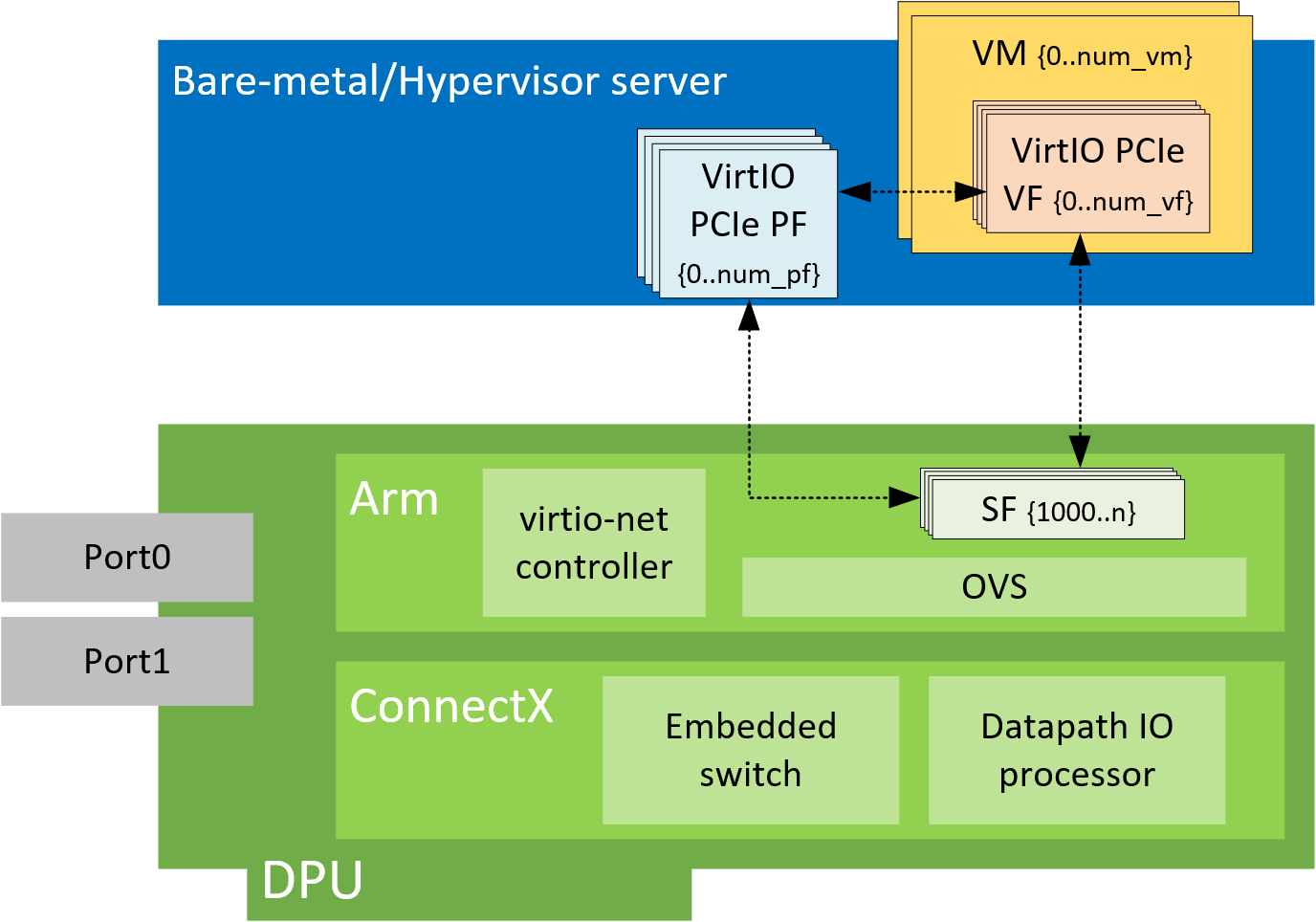

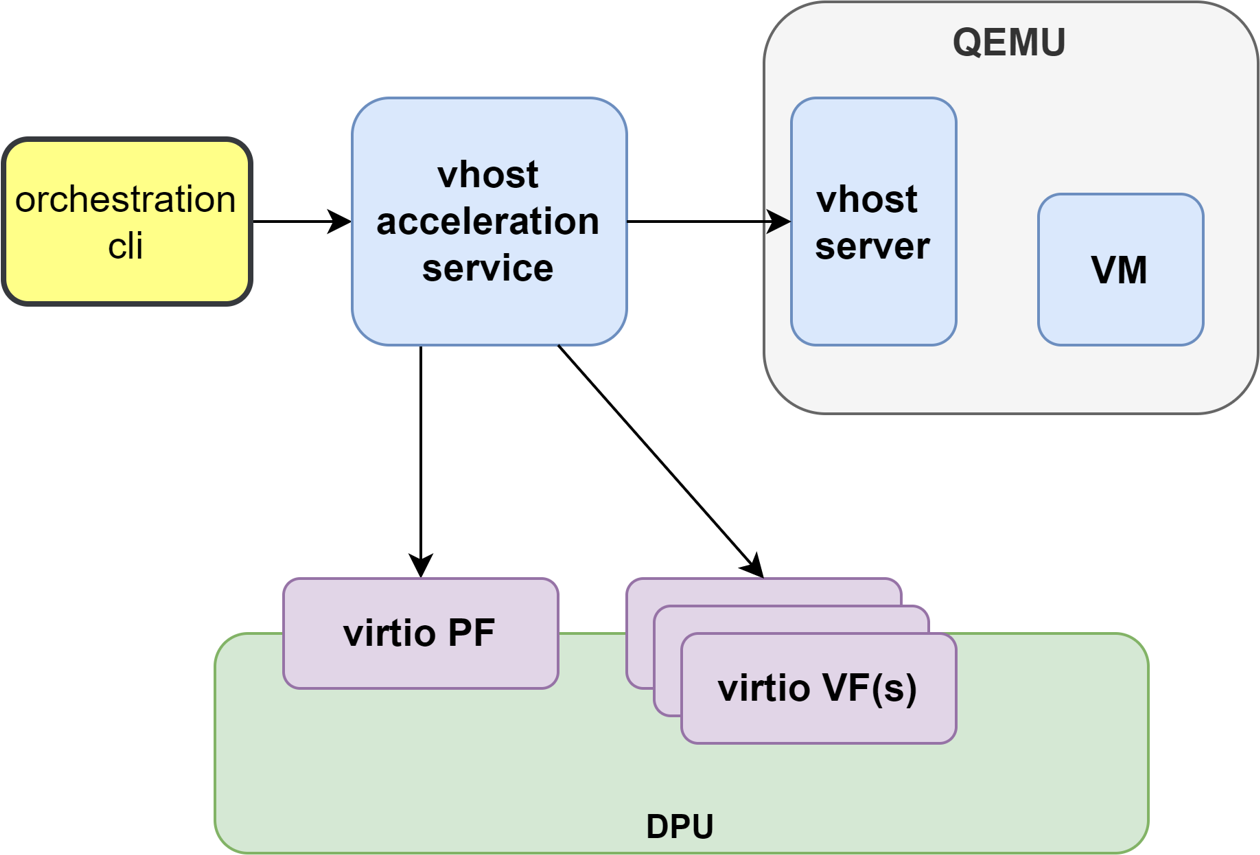

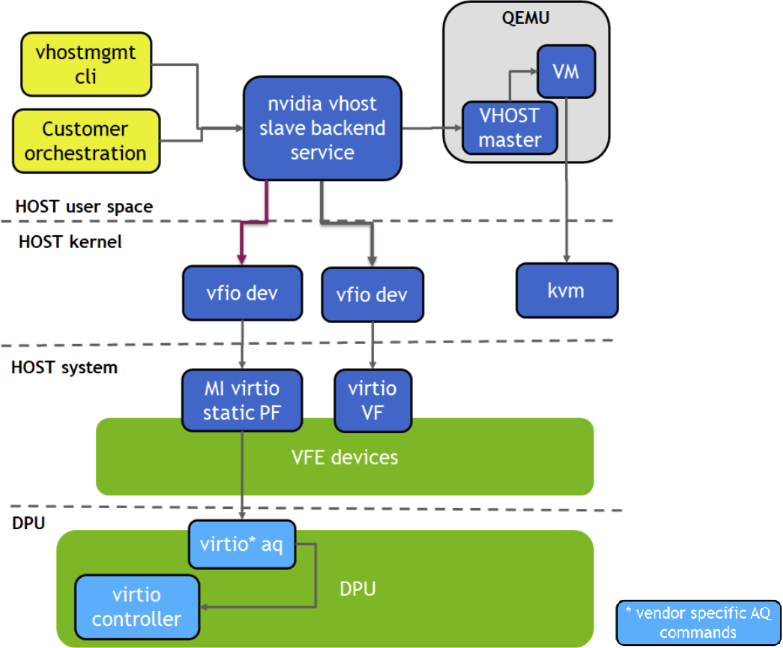

NVIDIA® BlueField® virtio-net enables users to create virtio-net PCIe devices in the system where the BlueField is connected. In a traditional virtualization environment, virtio-net devices can be emulated by QEMU in the hypervisor, or part of the work (e.g., the data plane) can be offloaded to the NIC (e.g., vDPA). Compared to those solutions, BlueField virtio-net PCIe devices offload both the data plane and the control plane to the BlueField networking device. The PCIe virtio-net devices exposed to the hypervisor do not depend on QEMU or other software emulators, nor on vendor drivers in the guest OS.

The solution is based on BlueField family technology on top of the virtual switch and OVS, so that virtio-net devices benefit from the full SDN and hardware offload methodologies.

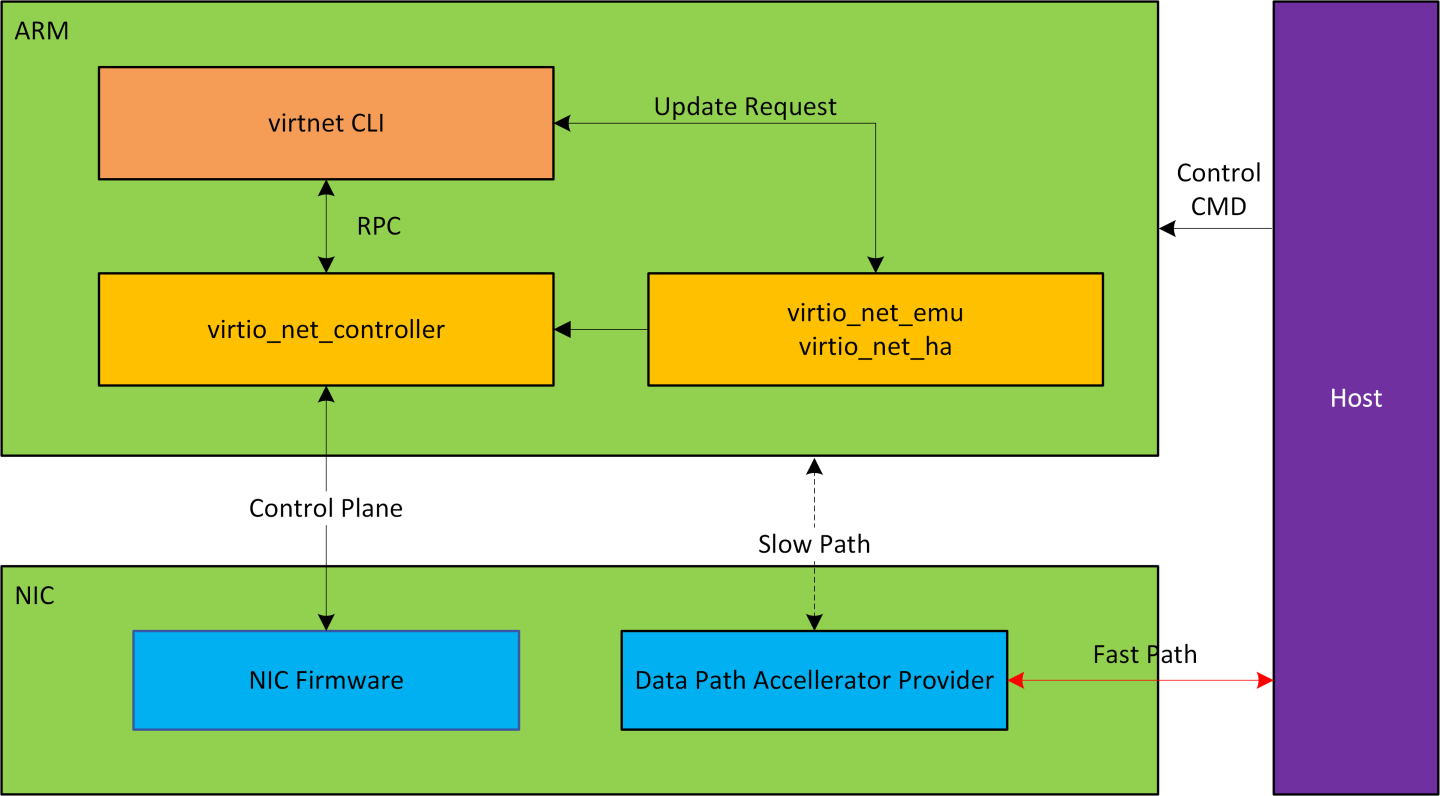

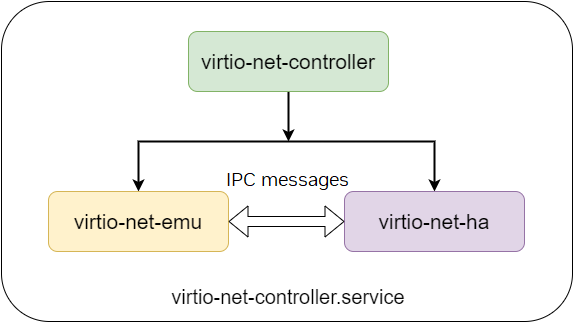

Virtio-net-controller is a systemd service that runs on the BlueField, with a command-line interface (CLI) frontend that communicates with the service running in the background. The controller systemd service is enabled by default and starts automatically once the required firmware configuration is deployed.

Refer to "Virtio-net Deployment" for more information.

The processes virtio_net_emu and virtio_net_ha are created to manage live update and high availability.

Updating OS Image on BlueField

To install the BFB bundle on the NVIDIA® BlueField®, run the following command from the Linux hypervisor:

[host]# sudo bfb-install --rshim <rshimN> --bfb <image_path.bfb>

For more information, refer to section "Deploying BlueField Software Using BFB from Host" in the NVIDIA BlueField DPU BSP documentation.

Updating NIC Firmware

From the BlueField networking platform, run:

[dpu]# sudo /opt/mellanox/mlnx-fw-updater/mlnx_fw_updater.pl --force-fw-update

For more information, refer to section "Upgrading Firmware" in the NVIDIA DOCA Installation Guide for Linux.

Configuring NIC Firmware

By default, the BlueField should be configured in DPU mode. A simple way to confirm that it is running in DPU mode is to log into the BlueField Arm system and check that both p0 and pf0hpf exist by running the command below:

[dpu]# ip link show
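The check above can be scripted. The helper below is a minimal sketch (the name is_dpu_mode is hypothetical): it reads `ip link show` output on stdin and succeeds only if both p0 and pf0hpf appear as link names. It assumes the standard iproute2 line format `<index>: <name>: <flags> ...`.

```shell
# Sketch: check whether `ip link show` output lists both the uplink
# representor (p0) and the host PF representor (pf0hpf), which the text
# above uses as the sign that BlueField is running in DPU mode.
# Reads the `ip link show` output on stdin; returns 0 if both are present.
is_dpu_mode() {
    local links
    links=$(cat)
    # Each link line looks like "<index>: <name>: <flags> ...". Match the
    # name field exactly so that "p0" cannot match part of another name.
    printf '%s\n' "$links" | grep -Eq '^[0-9]+: p0[:@]' || return 1
    printf '%s\n' "$links" | grep -Eq '^[0-9]+: pf0hpf[:@]' || return 1
}
```

For example, `ip link show | is_dpu_mode && echo "DPU mode"` on the BlueField Arm system prints the message only when both representors are present.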

Virtio-net full emulation only works in DPU mode. For more information about DPU mode configuration, refer to "BlueField Modes of Operation".

Before enabling the virtio-net service, the firmware must be configured using the mlxconfig tool. Examples of typical configurations follow; the table below describes the relevant mlxconfig entries.

For mlxconfig configuration changes to take effect, perform a BlueField system-level reset.

|

Mlxconfig Entries |

Description |

|

|

Must be set to |

|

|

Total number of PCIe functions (PFs) exposed by the device for virtio-net emulation. Those functions are persistent along with host/BlueField power cycle. |

|

|

The max number of virtual functions (VFs) that can be supported for each virtio-net PF |

|

|

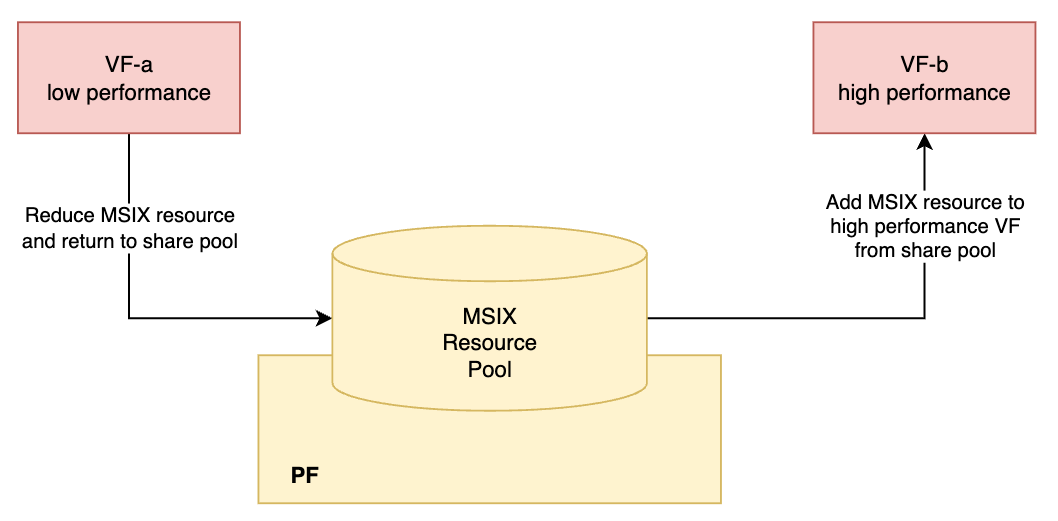

Number of MSI-X vectors assigned for each PF of the virtio-net emulation device, minimal is |

|

|

Number of MSI-X vectors assigned for each VF of the virtio-net emulation device, minimal is |

|

|

When |

|

|

The maximum number of emulated switch ports. Each port can hold a single PCIe device (emulated or not). This determines the supported maximum number of hot-plug virtio-net devices. The maximum number depends on hypervisor PCIe resource, and cannot exceed 31. Note

Check system PCIe resource. Changing this entry to a big number may results in the host not booting up, which would necessitate disabling the BlueField device and clearing the host NVRAM.

|

|

|

When |

|

|

The total number of scalable function (SF) partitions that can be supported for the current PF. Valid only when Note

This entry differs between the BlueField and host side

|

|

|

Log (base 2) of the BAR size of a single SF, given in KB. Valid only when |

|

|

When |

|

|

Enable single-root I/O virtualization (SR-IOV) for virtio-net and native PFs |

|

|

Enable expansion ROM option for PXE for virtio-net functions Note

All virtio

|

|

|

Enable expansion ROM option for UEFI for Arm based host for virtio-net functions |

|

|

Enable expansion ROM option for UEFI for x86 based host for virtio-net functions |

The maximum number of supported devices is listed below. These limits do not apply when hotplug PFs and VFs are created at the same time.

| Static PF | Hotplug PF | VF |
|---|---|---|
| 31 | 31 | 1008 |

The maximum supported number of hotplug PFs depends on the host's PCIe resources; specific systems may support fewer or none. Refer to the host BIOS specification.

Static PF

Static PFs are virtio-net PFs that persist across DPU or host power cycles. They also support creating SR-IOV VFs.

The following is an example of enabling the system with 4 static PFs (VIRTIO_NET_EMULATION_NUM_PF) only:

10 SFs (PF_TOTAL_SF) are reserved to account for other applications using SFs.

[dpu]# mlxconfig -d 03:00.0 s \
    VIRTIO_NET_EMULATION_ENABLE=1 \
    VIRTIO_NET_EMULATION_NUM_PF=4 \
    VIRTIO_NET_EMULATION_NUM_VF=0 \
    VIRTIO_NET_EMULATION_NUM_MSIX=64 \
    PCI_SWITCH_EMULATION_ENABLE=0 \
    PCI_SWITCH_EMULATION_NUM_PORT=0 \
    PER_PF_NUM_SF=1 \
    PF_TOTAL_SF=64 \
    PF_BAR2_ENABLE=0 \
    PF_SF_BAR_SIZE=8 \
    SRIOV_EN=0

Hotplug PF

Hotplug PFs are virtio-net PFs that can be hotplugged or unplugged dynamically after the system comes up.

Hotplug PF does not support creating SR-IOV VFs.

The following is an example for enabling 16 hotplug PFs (PCI_SWITCH_EMULATION_NUM_PORT):

[dpu]# mlxconfig -d 03:00.0 s \
    VIRTIO_NET_EMULATION_ENABLE=1 \
    VIRTIO_NET_EMULATION_NUM_PF=0 \
    VIRTIO_NET_EMULATION_NUM_VF=0 \
    VIRTIO_NET_EMULATION_NUM_MSIX=64 \
    PCI_SWITCH_EMULATION_ENABLE=1 \
    PCI_SWITCH_EMULATION_NUM_PORT=16 \
    PER_PF_NUM_SF=1 \
    PF_TOTAL_SF=64 \
    PF_BAR2_ENABLE=0 \
    PF_SF_BAR_SIZE=8 \
    SRIOV_EN=0

SR-IOV VF

SR-IOV VFs are virtio-net VFs created on top of PFs. Each VF gets an individual virtio-net PCIe device.

VFs cannot be created or destroyed individually; the number of VFs can only change from X to 0 or from 0 to X.

VFs are destroyed when the host reboots or when the PF is unbound from the virtio-net kernel driver.

The following is an example of enabling 126 VFs per static PF, 504 (4 PFs x 126) VFs in total:

[dpu]# mlxconfig -d 03:00.0 s \
    VIRTIO_NET_EMULATION_ENABLE=1 \
    VIRTIO_NET_EMULATION_NUM_PF=4 \
    VIRTIO_NET_EMULATION_NUM_VF=126 \
    VIRTIO_NET_EMULATION_NUM_MSIX=64 \
    VIRTIO_NET_EMULATION_NUM_VF_MSIX=64 \
    PCI_SWITCH_EMULATION_ENABLE=0 \
    PCI_SWITCH_EMULATION_NUM_PORT=0 \
    PER_PF_NUM_SF=1 \
    PF_TOTAL_SF=512 \
    PF_BAR2_ENABLE=0 \
    PF_SF_BAR_SIZE=8 \
    NUM_VF_MSIX=0 \
    SRIOV_EN=1

PF/VF Combinations

Creating static/hotplug PFs and VFs at the same time is supported.

The total number of PCIe functions exposed to the external host must not exceed 256. For example:

- If there are 2 PFs with no VFs (NUM_OF_VFS=0) and 1 RShim, then 253 (256-3) static functions remain.
  - If 1 virtio-net PF is configured (VIRTIO_NET_EMULATION_NUM_PF=1), up to 252 virtio-net VFs can be configured (VIRTIO_NET_EMULATION_NUM_VF=252).
  - If 2 virtio-net PFs are configured (VIRTIO_NET_EMULATION_NUM_PF=2), up to 125 virtio-net VFs can be configured (VIRTIO_NET_EMULATION_NUM_VF=125).
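The arithmetic in these examples can be checked with a small helper. This is a sketch under stated assumptions: the function name is hypothetical, and because the text above does not say how hotplug PFs count toward the 256-function limit, they are left out of the calculation.

```shell
# Sketch of the 256-function budget arithmetic above. Arguments are counts of
# functions exposed to the external host:
#   $1 native PFs, $2 native VFs (total), $3 RShim functions,
#   $4 static virtio-net PFs (VIRTIO_NET_EMULATION_NUM_PF)
# Prints the largest VIRTIO_NET_EMULATION_NUM_VF (VFs per virtio-net PF) that
# keeps the total at or below 256. Hotplug PFs are deliberately left out.
max_virtio_vfs_per_pf() {
    local remaining=$((256 - $1 - $2 - $3))
    if [ "$4" -le 0 ] || [ "$remaining" -lt "$4" ]; then
        echo 0
        return 1
    fi
    # Each virtio-net PF uses one function; the rest is split evenly among
    # the VFs of all virtio-net PFs (shell integer division rounds down).
    echo $(( (remaining - $4) / $4 ))
}
```

With the values from the example, `max_virtio_vfs_per_pf 2 0 1 1` prints 252 and `max_virtio_vfs_per_pf 2 0 1 2` prints 125.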

The following is an example for enabling 15 hotplug PFs, 2 static PFs, and 200 VFs (2 PFs x 100):

[dpu]# mlxconfig -d 03:00.0 s \
    VIRTIO_NET_EMULATION_ENABLE=1 \
    VIRTIO_NET_EMULATION_NUM_PF=2 \
    VIRTIO_NET_EMULATION_NUM_VF=100 \
    VIRTIO_NET_EMULATION_NUM_MSIX=10 \
    VIRTIO_NET_EMULATION_NUM_VF_MSIX=64 \
    PCI_SWITCH_EMULATION_ENABLE=1 \
    PCI_SWITCH_EMULATION_NUM_PORT=15 \
    PER_PF_NUM_SF=1 \
    PF_TOTAL_SF=256 \
    PF_BAR2_ENABLE=0 \
    PF_SF_BAR_SIZE=8 \
    NUM_VF_MSIX=0 \
    SRIOV_EN=1

In setups that combine hotplug virtio-net PFs and virtio-net SR-IOV VFs, up to 15 hotplug devices are supported.

System Configuration

Host System Configuration

For hotplug device configuration, it is recommended to modify the hypervisor OS kernel boot parameters and add the options below:

pci=realloc

For SR-IOV configuration, first enable SR-IOV from the host.

Refer to MLNX_OFED documentation under Features Overview and Configuration > Virtualization > Single Root IO Virtualization (SR-IOV) > Setting Up SR-IOV for instructions on how to do that.

Make sure to add the following options to the Linux kernel boot parameters:

intel_iommu=on iommu=pt

Add pci=assign-busses to the kernel boot command line when creating more than 127 VFs. Without this option, the host may log the following errors and the virtio driver will not probe those devices:

pci 0000:84:00.0: [1af4:1041] type 7f class 0xffffff

pci 0000:84:00.0: unknown header type 7f, ignoring device
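Which boot options are needed depends on the setup. The sketch below (the helper name host_boot_args is hypothetical) collects the options listed above: pci=realloc for hotplug devices, intel_iommu=on iommu=pt for SR-IOV, and pci=assign-busses for more than 127 VFs.

```shell
# Sketch: build the host kernel command-line options listed above.
#   $1: 1 if hotplug virtio-net PFs are used, else 0
#   $2: number of SR-IOV VFs to create (0 if SR-IOV is not used)
host_boot_args() {
    local args=""
    if [ "$1" -eq 1 ]; then
        args="pci=realloc"
    fi
    if [ "$2" -gt 0 ]; then
        args="$args intel_iommu=on iommu=pt"
    fi
    # More than 127 VFs also requires PCI bus numbers to be reassigned.
    if [ "$2" -gt 127 ]; then
        args="$args pci=assign-busses"
    fi
    # Drop the leading space left over when pci=realloc is not used.
    echo "${args# }"
}
```

On hosts that manage kernel arguments with grubby (for example, RHEL-family distributions; this is an assumption about your host), the result could be applied with `grubby --update-kernel=ALL --args="$(host_boot_args 1 200)"`, followed by a host reboot.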

Because the controller from the BlueField side provides hardware resources and acknowledges (ACKs) the request from the host's virtio-net driver, it is mandatory to reboot the host OS (or unload the virtio-net driver) first and the BlueField afterwards. This also applies to reconfiguring a controller from the BlueField platform (e.g., reconfiguring LAG). Unloading the virtio-net driver from host OS side is recommended.

BlueField System Configuration

Virtio-net full emulation is based on ASAP². For each virtio-net device created from the host side, an SF representor is created to represent the device on the BlueField side. The SF representor must be in the same OVS bridge as the uplink representor.

SF representor names follow a fixed pattern that maps to the device type:

| | Static PF | Hotplug PF | SR-IOV VF |
|---|---|---|---|
| SF Range | 1000-1999 | 2000-2999 | 3000 and above |

For example, the first static PF gets the SF representor en3f0pf0sf1000, and the second hotplug PF gets en3f0pf0sf2001. It is recommended to verify the SF representor name using the sf_rep_net_device field in the output of virtnet list:

[dpu]# virtnet list

{
    ...
    "devices": [
        {
            "pf_id": 0,
            "function_type": "static PF",
            "transitional": 0,
            "vuid": "MT2151X03152VNETS0D0F2",
            "pci_bdf": "14:00.2",
            "pci_vhca_id": "0x2",
            "pci_max_vfs": "0",
            "enabled_vfs": "0",
            "msix_num_pool_size": 0,
            "min_msix_num": 0,
            "max_msix_num": 32,
            "min_num_of_qp": 0,
            "max_num_of_qp": 15,
            "qp_pool_size": 0,
            "num_msix": "64",
            "num_queues": "8",
            "enabled_queues": "7",
            "max_queue_size": "256",
            "msix_config_vector": "0x0",
            "mac": "D6:67:E7:09:47:D5",
            "link_status": "1",
            "max_queue_pairs": "3",
            "mtu": "1500",
            "speed": "25000",
            "rss_max_key_size": "0",
            "supported_hash_types": "0x0",
            "ctrl_mac": "D6:67:E7:09:47:D5",
            "ctrl_mq": "3",
            "sf_num": 1000,
            "sf_parent_device": "mlx5_0",
            "sf_parent_device_pci_addr": "0000:03:00.0",
            "sf_rep_net_device": "en3f0pf0sf1000",
            "sf_rep_net_ifindex": 15,
            "sf_rdma_device": "mlx5_4",
            "sf_cross_mkey": "0x18A42",
            "sf_vhca_id": "0x8C",
            "sf_rqt_num": "0x0",
            "aarfs": "disabled",
            "dim": "disabled"
        }
    ]
}
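The SF range table maps directly to the sf_num field in output such as the above. A sketch of that lookup (the helper name sf_kind is hypothetical):

```shell
# Sketch: map an SF number (the "sf_num" field of `virtnet list`) to the
# virtio-net device type, following the SF range table above.
sf_kind() {
    if [ "$1" -ge 3000 ]; then
        echo "SR-IOV VF"
    elif [ "$1" -ge 2000 ]; then
        echo "hotplug PF"
    elif [ "$1" -ge 1000 ]; then
        echo "static PF"
    else
        # Below 1000: not in any virtio-net range (e.g. SF 0, which
        # belongs to another application).
        echo "other"
    fi
}
```

For example, `sf_kind 2001` prints `hotplug PF`, consistent with the en3f0pf0sf2001 example above.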

Once the SF representor name is located, add it to the same OVS bridge as the corresponding uplink representor and make sure the SF representor is up:

[dpu]# ovs-vsctl show
f2c431e5-f8df-4f37-95ce-aa0c7da738e0
    Bridge ovsbr1
        Port ovsbr1
            Interface ovsbr1
                type: internal
        Port en3f0pf0sf0
            Interface en3f0pf0sf0
        Port p0
            Interface p0

[dpu]# ovs-vsctl add-port ovsbr1 en3f0pf0sf1000

[dpu]# ovs-vsctl show
f2c431e5-f8df-4f37-95ce-aa0c7da738e0
    Bridge ovsbr1
        Port ovsbr1
            Interface ovsbr1
                type: internal
        Port en3f0pf0sf0
            Interface en3f0pf0sf0
        Port en3f0pf0sf1000
            Interface en3f0pf0sf1000
        Port p0
            Interface p0

[dpu]# ip link set dev en3f0pf0sf1000 up
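The two steps above (adding the port and bringing the link up) can be wrapped in one helper. This is a sketch: attach_sf_rep and run_cmd are hypothetical names. --may-exist is a standard ovs-vsctl option that turns add-port into a no-op when the port is already in the bridge, so the helper can be re-run safely.

```shell
# Sketch: add an SF representor to an OVS bridge and bring it up, mirroring
# the commands above. --may-exist makes re-running the step harmless.
# Set DRY_RUN=1 to print the commands instead of executing them.
run_cmd() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

attach_sf_rep() {
    # $1: OVS bridge (e.g. ovsbr1), $2: SF representor (e.g. en3f0pf0sf1000)
    run_cmd ovs-vsctl --may-exist add-port "$1" "$2" || return 1
    run_cmd ip link set dev "$2" up
}
```

For example, `attach_sf_rep ovsbr1 en3f0pf0sf1000` on the BlueField performs both steps; `DRY_RUN=1 attach_sf_rep ovsbr1 en3f0pf0sf1000` only prints them.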

Usage

After the firmware/system configuration and a system power cycle, the virtio-net devices should be ready to deploy.

First, make sure that mlxconfig options take effect correctly by issuing the following command:

The output has a list with 3 columns: default configuration, current configuration, and next-boot configuration. Verify that the values in the second (current configuration) column match the expected configuration.

[dpu]# mlxconfig -d 03:00.0 -e q | grep -i \*
* PER_PF_NUM_SF False(0) True(1) True(1)
* NUM_OF_VFS 16 0 0
* PF_BAR2_ENABLE True(1) False(0) False(0)
* PCI_SWITCH_EMULATION_NUM_PORT 0 8 8
* PCI_SWITCH_EMULATION_ENABLE False(0) True(1) True(1)
* VIRTIO_NET_EMULATION_ENABLE False(0) True(1) True(1)
* VIRTIO_NET_EMULATION_NUM_VF 0 126 126
* VIRTIO_NET_EMULATION_NUM_PF 0 1 1
* VIRTIO_NET_EMULATION_NUM_MSIX 2 64 64
* VIRTIO_NET_EMULATION_NUM_VF_MSIX 0 64 64
* PF_TOTAL_SF 0 508 508
* PF_SF_BAR_SIZE 0 8 8
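Comparing the current column by eye is error-prone when many entries change. The sketch below (hypothetical helpers mlx_current and mlx_check) reads the mlxconfig output on stdin and assumes each line has the form `* NAME <default> <current> <next-boot>`, as in the output above. Expected values must be given exactly as printed, e.g. True(1).

```shell
# Sketch: print the current-configuration column (2nd value column) for one
# entry of `mlxconfig -d <dev> -e q` output read from stdin.
mlx_current() {
    awk -v name="$1" '
        $1 == "*" && $2 == name { print $4; found = 1 }
        END { exit (found ? 0 : 1) }'
}

# Check several NAME=VALUE expectations against the same mlxconfig output.
# Prints each mismatch on stderr and returns 1 if any entry differs.
mlx_check() {
    local out pair name want got rc=0
    out=$(cat)
    for pair in "$@"; do
        name=${pair%%=*}
        want=${pair#*=}
        got=$(printf '%s\n' "$out" | mlx_current "$name")
        if [ "$got" != "$want" ]; then
            echo "$name: expected $want, current value is '${got:-missing}'" >&2
            rc=1
        fi
    done
    return $rc
}
```

For example: `mlxconfig -d 03:00.0 -e q | mlx_check 'VIRTIO_NET_EMULATION_ENABLE=True(1)' VIRTIO_NET_EMULATION_NUM_PF=1`.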

If the system is configured correctly, the virtio-net-controller service should be up and running. If the service is not shown as active, double-check the firmware/system configuration above.

[dpu]# systemctl status virtio-net-controller.service

● virtio-net-controller.service - Nvidia VirtIO Net Controller Daemon
     Loaded: loaded (/etc/systemd/system/virtio-net-controller.service; enabled; vendor preset: disabled)
     Active: active (running)
       Docs: file:/opt/mellanox/mlnx_virtnet/README.md
   Main PID: 30715 (virtio_net_cont)
      Tasks: 55
     Memory: 11.7M
     CGroup: /system.slice/virtio-net-controller.service
             ├─30715 /usr/sbin/virtio_net_controller
             ├─30859 virtio_net_emu
             └─30860 virtio_net_ha

To reload or restart the service, run:

[dpu]# systemctl restart virtio-net-controller.service

When force-killing the virtio-net-controller service (i.e., kill -9 or kill -SIGKILL), pass the negated PID of the virtio_net_controller process so that the signal reaches its whole process group, e.g., kill -9 -30715 for the example above (note the dash "-" before the PID).

Hotplug PF Devices

Creating PF Devices

To create a hotplug virtio-net device, run:

[dpu]# virtnet hotplug -i mlx5_0 -f 0x0 -m 0C:C4:7A:FF:22:93 -t 1500 -n 3 -s 1024

Refer to "Virtnet CLI Commands" for full usage.

This command creates one hotplug virtio-net device with MAC address 0C:C4:7A:FF:22:93, MTU 1500, and 3 virtio queues with a depth of 1024 entries. The device is created on the physical port of mlx5_0. The device is uniquely identified by its index, which is used to query and update device attributes. If the device is created successfully, output similar to the following appears:

{
    "bdf": "15:00.0",
    "vuid": "MT2151X03152VNETS1D0F0",
    "id": 0,
    "transitional": 0,
    "sf_rep_net_device": "en3f0pf0sf2000",
    "mac": "0C:C4:7A:FF:22:93",
    "errno": 0,
    "errstr": "Success"
}

Add the representor port of the device to the OVS bridge and bring it up. Run:

[dpu]# ovs-vsctl add-port <bridge> en3f0pf0sf2000
[dpu]# ip link set dev en3f0pf0sf2000 up

Once the device is created and its representor is added to the OVS bridge, the virtio-net PCIe device should be available from the hypervisor OS with the same PCIe BDF:

[host]# lspci | grep -i virtio
15:00.0 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)

Probe the virtio-net driver (e.g., the kernel driver):

[host]# modprobe -v virtio-pci && modprobe -v virtio-net

The virtio-net device should be created. There are two ways to locate the net device:

Check the dmesg from the host side for the corresponding PCIe BDF:

[host]# dmesg | tail -20 | grep 15:00.0 -A 10 | grep virtio_net
[3908051.494493] virtio_net virtio2 ens2f0: renamed from eth0

Check all net devices and find the corresponding MAC address:

[host]# ip link show | grep -i "0c:c4:7a:ff:22:93" -B 1
31: ens2f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 0c:c4:7a:ff:22:93 brd ff:ff:ff:ff:ff:ff

Check that the probed driver and its BDF match the output of the hotplug device:

[host]# ethtool -i ens2f0
driver: virtio_net
version: 1.0.0
firmware-version:
expansion-rom-version:
bus-info: 0000:15:00.0
supports-statistics: yes
supports-test: no
supports-eeprom-access: no
supports-register-dump: no
supports-priv-flags: no

Now the hotplug virtio-net device is ready to use as a common network device.
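As an alternative to searching dmesg or matching MAC addresses, the net device can be located from the PCIe BDF through sysfs: Linux places a virtio device's network interface under /sys/bus/pci/devices/<BDF>/virtio<N>/net/. A sketch follows; the helper name is hypothetical, and the optional second argument exists only so the function can be tested against a fake sysfs tree.

```shell
# Sketch: print the net device name(s) created by the virtio-net driver for a
# virtio PCIe BDF, by walking sysfs.
#   $1: full BDF including the PCI domain, e.g. 0000:15:00.0
#   $2: optional sysfs root (defaults to /sys)
netdev_for_bdf() {
    local root=${2:-/sys}
    local found=1
    for dir in "$root/bus/pci/devices/$1"/virtio*/net/*; do
        # If the glob matched nothing, the literal pattern is skipped here.
        [ -e "$dir" ] || continue
        basename "$dir"
        found=0
    done
    return $found
}
```

For the hotplug example above, `netdev_for_bdf 0000:15:00.0` would be expected to print ens2f0, which can then be passed to `ethtool -i`.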

Destroying PF Devices

To hot-unplug a virtio-net device, run:

[dpu]# virtnet unplug -p 0

{'id': '0x1'}
{
    "errno": 0,
    "errstr": "Success"
}

The hotplug device and its representor are destroyed.

SR-IOV VF Devices

Creating SR-IOV VF Devices

After the firmware and the BlueField/host systems are configured correctly, users can create SR-IOV VFs.

The following procedure provides an example of creating one VF on top of one static PF:

Locate the virtio-net PFs exposed to the host side:

[host]# lspci | grep -i virtio
14:00.2 Network controller: Red Hat, Inc. Virtio network device

Verify that the PCIe BDF matches the backend device from the BlueField side:

[dpu]# virtnet list
{
    ...
    "devices": [
        {
            "pf_id": 0,
            "function_type": "static PF",
            "transitional": 0,
            "vuid": "MT2151X03152VNETS0D0F2",
            "pci_bdf": "14:00.2",
            "pci_vhca_id": "0x2",
            "pci_max_vfs": "0",
            "enabled_vfs": "0",
            "msix_num_pool_size": 0,
            "min_msix_num": 0,
            "max_msix_num": 32,
            "min_num_of_qp": 0,
            "max_num_of_qp": 15,
            "qp_pool_size": 0,
            "num_msix": "64",
            "num_queues": "8",
            "enabled_queues": "7",
            "max_queue_size": "256",
            "msix_config_vector": "0x0",
            "mac": "D6:67:E7:09:47:D5",
            "link_status": "1",
            "max_queue_pairs": "3",
            "mtu": "1500",
            "speed": "25000",
            "rss_max_key_size": "0",
            "supported_hash_types": "0x0",
            "ctrl_mac": "D6:67:E7:09:47:D5",
            "ctrl_mq": "3",
            "sf_num": 1000,
            "sf_parent_device": "mlx5_0",
            "sf_parent_device_pci_addr": "0000:03:00.0",
            "sf_rep_net_device": "en3f0pf0sf1000",
            "sf_rep_net_ifindex": 15,
            "sf_rdma_device": "mlx5_4",
            "sf_cross_mkey": "0x18A42",
            "sf_vhca_id": "0x8C",
            "sf_rqt_num": "0x0",
            "aarfs": "disabled",
            "dim": "disabled"
        }
    ]
}

Probe the virtio_pci and virtio_net modules from the host:

[host]# modprobe -v virtio-pci && modprobe -v virtio-net

The PF net device should be created.

[host]# ip link show | grep -i "4A:82:E3:2E:96:AB" -B 1
21: ens2f2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 4a:82:e3:2e:96:ab brd ff:ff:ff:ff:ff:ff

The MAC address and PCIe BDF should match between the BlueField side (virtnet list) and the host side (ethtool):

[host]# ethtool -i ens2f2
driver: virtio_net
version: 1.0.0
firmware-version:
expansion-rom-version:
bus-info: 0000:14:00.2
supports-statistics: yes
supports-test: no
supports-eeprom-access: no
supports-register-dump: no
supports-priv-flags: no

To create SR-IOV VF devices on the host, run the following command with the PF PCIe BDF (0000:14:00.2 in this example):

[host]# echo 1 > /sys/bus/pci/drivers/virtio-pci/0000\:14\:00.2/sriov_numvfs

One extra virtio-net device is created on the host:

[host]# lspci | grep -i virtio
14:00.2 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)
14:00.4 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)

The BlueField side shows the VF information in virtnet list as well:

[dpu]# virtnet list
...
{
    "vf_id": 0,
    "parent_pf_id": 0,
    "function_type": "VF",
    "transitional": 0,
    "vuid": "MT2151X03152VNETS0D0F2VF1",
    "pci_bdf": "14:00.4",
    "pci_vhca_id": "0xD",
    "pci_max_vfs": "0",
    "enabled_vfs": "0",
    "num_msix": "12",
    "num_queues": "8",
    "enabled_queues": "7",
    "max_queue_size": "256",
    "msix_config_vector": "0x0",
    "mac": "16:FF:A2:6E:6D:A9",
    "link_status": "1",
    "max_queue_pairs": "3",
    "mtu": "1500",
    "speed": "25000",
    "rss_max_key_size": "0",
    "supported_hash_types": "0x0",
    "ctrl_mac": "16:FF:A2:6E:6D:A9",
    "ctrl_mq": "3",
    "sf_num": 3000,
    "sf_parent_device": "mlx5_0",
    "sf_parent_device_pci_addr": "0000:03:00.0",
    "sf_rep_net_device": "en3f0pf0sf3000",
    "sf_rep_net_ifindex": 18,
    "sf_rdma_device": "mlx5_5",
    "sf_cross_mkey": "0x58A42",
    "sf_vhca_id": "0x8D",
    "sf_rqt_num": "0x0",
    "aarfs": "disabled",
    "dim": "disabled"
}

Add the corresponding SF representor to the same OVS bridge as the virtio-net PF representor and bring it up:

[dpu]# ovs-vsctl add-port <bridge> en3f0pf0sf3000
[dpu]# ip link set dev en3f0pf0sf3000 up

Now the VF is functional.

SR-IOV enablement from the host side takes a few minutes. For example, it may take 5 minutes to create 504 VFs.

It is recommended to disable VF autoprobe before creating VFs.

[host]# echo 0 > /sys/bus/pci/drivers/virtio-pci/<virtio_pf_bdf>/sriov_drivers_autoprobe

[host]# echo <num_vfs> > /sys/bus/pci/drivers/virtio-pci/<virtio_pf_bdf>/sriov_numvfs

Once the VFs are created, users can pass them through directly to VMs. If the VFs must be used inside the hypervisor OS, bind each VF PCIe BDF to the virtio-pci driver:

[host]# echo <virtio_vf_bdf> > /sys/bus/pci/drivers/virtio-pci/bind

Remember to re-enable autoprobe afterwards for other use cases:

[host]# echo 1 > /sys/bus/pci/drivers/virtio-pci/<virtio_pf_bdf>/sriov_drivers_autoprobe

Creating VFs for the same PF on different threads may cause the hypervisor OS to hang.
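The recommended sequence (disable autoprobe, create the VFs, optionally bind selected VFs, then re-enable autoprobe) can be sketched as shell helpers. The function names and the SYSFS override are hypothetical; the sysfs files are the ones used in the commands above. Given the warning above, run it once per PF and never concurrently for the same PF.

```shell
# Sketch of the VF creation sequence above (hypothetical helper names).
# SYSFS overrides the sysfs mount point (default /sys), e.g. for testing.

# $1: virtio-net PF BDF including the domain (e.g. 0000:14:00.2)
# $2: number of VFs to create
create_virtio_vfs() {
    local pf="${SYSFS:-/sys}/bus/pci/drivers/virtio-pci/$1"
    local cur
    cur=$(cat "$pf/sriov_numvfs") || return 1
    # The VF count can only change from 0 to X or from X to 0.
    if [ "$cur" -ne 0 ]; then
        echo "$1 already has $cur VFs; write 0 to sriov_numvfs first" >&2
        return 1
    fi
    # Keep virtio-pci from probing the new VFs on the host.
    echo 0 > "$pf/sriov_drivers_autoprobe" || return 1
    echo "$2" > "$pf/sriov_numvfs"
}

# Bind one VF to virtio-pci when it must be used inside the hypervisor OS.
bind_vf_on_host() {
    echo "$1" > "${SYSFS:-/sys}/bus/pci/drivers/virtio-pci/bind"
}

# Re-enable autoprobe on the PF once VF assignment is finished.
reenable_autoprobe() {
    echo 1 > "${SYSFS:-/sys}/bus/pci/drivers/virtio-pci/$1/sriov_drivers_autoprobe"
}
```

For example, `create_virtio_vfs 0000:14:00.2 4`, then `bind_vf_on_host 0000:14:00.4` for a VF used on the host, and finally `reenable_autoprobe 0000:14:00.2`.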

Destroying SR-IOV VF Devices

To destroy SR-IOV VF devices on the host, run:

[host]# echo 0 > /sys/bus/pci/drivers/virtio-pci/<virtio_pf_bdf>/sriov_numvfs

When the echo command returns from the host OS, it does not necessarily mean the BlueField side has finished its operations. To verify that the BlueField is done, and it is safe to recreate the VFs, either:

Check the controller log on the BlueField and make sure a log entry similar to the following appears:

[dpu]# journalctl -u virtio-net-controller.service -n 3 -f
virtio-net-controller[5602]: [INFO] virtnet.c:675:virtnet_device_vfs_unload: static PF[0], Unload (1) VFs finished

Query the last VF from the BlueField side:

[dpu]# virtnet query -p 0 -v 0 -b
{'all': '0x0', 'vf': '0x0', 'pf': '0x0', 'dbg_stats': '0x0', 'brief': '0x1', 'latency_stats': '0x0', 'stats_clear': '0x0'}
{
    "Error": "Device doesn't exist"
}
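The second check can be automated on the BlueField. The sketch below polls the query shown above until it reports that the last VF no longer exists. It relies on the exact "Device doesn't exist" message from the example output, which is an assumption that may not hold across controller versions; wait_vfs_destroyed is a hypothetical name.

```shell
# Sketch: poll the BlueField until the last VF of a PF is gone.
#   $1: PF ID, $2: ID of the last VF, $3: timeout in seconds (default 300)
wait_vfs_destroyed() {
    local deadline=$(( $(date +%s) + ${3:-300} ))
    while [ "$(date +%s)" -lt "$deadline" ]; do
        # The example output above shows this error once the VF is gone.
        if virtnet query -p "$1" -v "$2" -b 2>&1 | grep -q "Device doesn't exist"; then
            return 0
        fi
        sleep 2
    done
    echo "VF $2 of PF $1 still present after ${3:-300}s" >&2
    return 1
}
```

For the single-VF example above, `wait_vfs_destroyed 0 0` returns once VF 0 of PF 0 is gone, after which it should be safe to recreate the VFs.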

Once the VFs are destroyed, the SFs created for virtio-net on the BlueField side are not destroyed; they are saved into the SF pool for later reuse.

Restarting the virtio-net-controller service while creating or destroying hotplug or VF devices is unsupported.

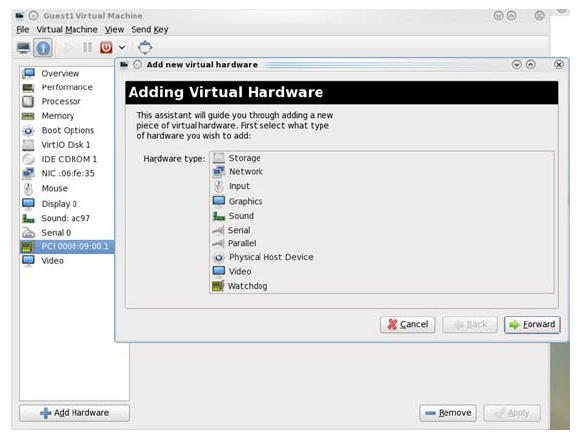

Assigning Virtio-net Device to VM

All virtio-net devices (static/hotplug PF and VF) support PCIe passthrough to a VM. PCIe passthrough allows the device to get better performance in the VM.

Assigning a virtio-net device to a VM can be done via virt-manager or virsh command.

Locating Virtio-net Devices

All virtio-net devices can be scanned by the PCIe subsystem in the hypervisor OS and are displayed as standard PCIe devices. Run the following command to locate the virtio-net devices and their PCIe BDFs:

[host]# lspci | grep 'Virtio network'

00:09.1 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)

Using virt-manager

To start virt-manager, run the following command:

[host]# virt-manager

Make sure your system has xterm enabled to show the virt-manager GUI.

Double-click the virtual machine and open its Properties. Navigate to Details → Add hardware → PCIe host device.

Choose the virtio-net device according to its PCIe BDF (e.g., 00:09.1), then reboot or start the VM.

Using virsh Command

Run the following command to get the VM list, and select the target VM by its Name field:

[host]# virsh list --all
 Id   Name                  State
----------------------------------------------
 1    host-101-CentOS-8.5   running

To edit the VM's XML file, run:

[host]# virsh edit <VM_NAME>

Assign the target virtio-net device PCIe BDF to the VM, using vfio as the driver. Replace BUS/SLOT/FUNCTION/BUS_IN_VM/SLOT_IN_VM/FUNCTION_IN_VM with the corresponding settings:

<hostdev mode='subsystem' type='pci' managed='no'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='<#BUS>' slot='<#SLOT>' function='<#FUNCTION>'/>
  </source>
  <address type='pci' domain='0x0000' bus='<#BUS_IN_VM>' slot='<#SLOT_IN_VM>' function='<#FUNCTION_IN_VM>'/>
</hostdev>

For example, to assign target device 00:09.1 to the VM with PCIe BDF 01:00.0 inside the VM:

<hostdev mode='subsystem' type='pci' managed='no'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x00' slot='0x09' function='0x1'/>
  </source>
  <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
</hostdev>

Destroy the VM if it is already started:

[host]# virsh destroy <VM_NAME>

Start the VM with new XML configuration:

[host]# virsh start <VM_NAME>
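When many devices are assigned, writing the <hostdev> element by hand is tedious. The sketch below (hypothetical helper hostdev_xml) prints the element shown above from a host BDF and a BDF inside the VM, both in BUS:SLOT.FUNCTION form with PCI domain 0000.

```shell
# Sketch: print a <hostdev> element like the one above.
#   $1: host BDF as BUS:SLOT.FUNCTION, e.g. 00:09.1
#   $2: BDF inside the VM in the same form, e.g. 01:00.0
hostdev_xml() {
    local hb=${1%%:*} hs=${1#*:}
    local vb=${2%%:*} vs=${2#*:}
    # ${hs%.*} is the slot and ${hs#*.} the function (same for vs).
    cat <<EOF
<hostdev mode='subsystem' type='pci' managed='no'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x$hb' slot='0x${hs%.*}' function='0x${hs#*.}'/>
  </source>
  <address type='pci' domain='0x0000' bus='0x$vb' slot='0x${vs%.*}' function='0x${vs#*.}'/>
</hostdev>
EOF
}
```

The output of `hostdev_xml 00:09.1 01:00.0` can be pasted into `virsh edit`, or saved to a file and applied with `virsh attach-device <VM_NAME> <file> --config`, which adds the device to the persistent VM definition.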

Configuration File Options

The controller service has an optional JSON format configuration file which allows users to customize several parameters. The configuration file should be defined on the DPU at /opt/mellanox/mlnx_virtnet/virtnet.conf. This file is read every time the controller starts.

The controller systemd service must be restarted after the configuration file changes. Dynamic changes to virtnet.conf are not supported.

| Parameter | Default Value | Type | Description |
|---|---|---|---|
| … | … | String | RDMA device (e.g., … |
| … | … | String | RDMA device (e.g., … |
| … | … | String | The RDMA device (e.g., … |
| … | … | String | RDMA LAG device (e.g., … |
| … | … | List | The following sub-parameters can be used to configure the static PF: … |
| … | … | Number | Specifies whether LAG is used. Note: If LAG is used, make sure to use the correct IB dev for the static PF. |
| … | … | Number | Specifies whether the DPU is a single-port device. It is mutually exclusive with … |
| … | … | Number | Specifies whether recovery is enabled. If unspecified, recovery is enabled by default. To disable it, set … |
| … | … | Number | Determines the initial SF pool size as the percentage of … Note: … |
| … | … | Number | Specifies whether to destroy the SF pool. When set to 1, the controller destroys the SF pool when stopped/restarted (and the SF pool is recreated if … |
| … | … | Number | Specifies whether packed VQ mode is enabled. If unspecified, packed VQ is disabled by default. To enable, set … Note: The virtio driver on the guest OS must be unloaded when restarting the controller if the … |
| … | … | Number | When enabled, the mergeable buffers feature is negotiated with the host driver. This feature allows the guest driver to use multiple RX descriptor chains to receive a single packet, hence increasing bandwidth. Note: The virtio driver on the guest OS must be unloaded when restarting the controller if the … |
| … | … | Number | Specifies the start DPA core for the virtnet application. Valid only for NVIDIA® BlueField®-3 and up. Value must be greater than 0 and less than 11. Together with … Note: This is an advanced option for when multiple DPA applications run at the same time. Regular users should keep this option at its default. |
| … | … | Number | Specifies the end DPA core for the virtnet application. Valid only for BlueField-3 and up. Value must be greater than … |
| … | … | List | The following sub-parameters can be used to configure the VF: … |

Configuration File Examples

Validate the JSON format of the configuration file before restarting the controller, especially the syntax and symbols. Otherwise, the controller may fail to start.
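One way to validate the syntax is Python's built-in json.tool module. This sketch assumes python3 is available on the BlueField; check_virtnet_conf is a hypothetical helper name. It checks JSON syntax only, not parameter names or values.

```shell
# Sketch: check that virtnet.conf parses as JSON before restarting the
# controller.
#   $1: config path (defaults to the location given above)
check_virtnet_conf() {
    local conf=${1:-/opt/mellanox/mlnx_virtnet/virtnet.conf}
    # json.tool exits non-zero and reports the error position on bad input.
    if python3 -m json.tool "$conf" > /dev/null; then
        echo "$conf: valid JSON"
    else
        echo "$conf: invalid JSON, not restarting" >&2
        return 1
    fi
}
```

For example, `check_virtnet_conf && systemctl restart virtio-net-controller.service` restarts the controller only if the file parses.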

Configuring LAG on Dual Port BlueField

Refer to "Link Aggregation" documentation for information on configuring BlueField in LAG mode.

Refer to the "Link Aggregation" page for information on configuring virtio-net in LAG mode.

Configuring Static PF on Dual Port BlueField

The following configures all static PFs to use mlx5_0 (port 0) as the data path device in a non-LAG configuration, and the default MAC and features for the PF:

{
    "ib_dev_p0": "mlx5_0",
    "ib_dev_p1": "mlx5_1",
    "ib_dev_for_static_pf": "mlx5_0",
    "is_lag": 0,
    "static_pf": {
        "mac_base": "08:11:22:33:44:55",
        "features": "0x230047082b"
    }
}

Configuring VF Specific Options

The following configures VFs with default parameters. With this configuration, each PF assigns MACs based on mac_base to up to 126 VFs. Each VF creates 4 queue pairs, each queue with a depth of 256.

If vfs_per_pf is less than VIRTIO_NET_EMULATION_NUM_VF in mlxconfig and more VFs are created, duplicate MACs are assigned to different VFs.

{
    "vf": {
        "mac_base": "06:11:22:33:44:55",
        "features": "0x230047082b",
        "vfs_per_pf": 126,
        "max_queue_pairs": 4,
        "max_queue_size": 256
    }
}

User Front End CLI

To communicate with the virtio-net-controller backend service, a user frontend program, virtnet, is installed on the BlueField. It is based on the remote procedure call (RPC) protocol and produces JSON-format output. Run the following command to check its usage:

# virtnet -h
usage: virtnet [-h] [-v] {hotplug,unplug,list,query,modify,log,version,restart,validate,update,health,debug,stats} ...

Nvidia virtio-net-controller command line interface v24.10.20

positional arguments:
  {hotplug,unplug,list,query,modify,log,version,restart,validate,update,health,debug,stats}
                        ** Use -h for sub-command usage
    hotplug             hotplug virtnet device
    unplug              unplug virtnet device
    list                list all virtnet devices
    query               query all or individual virtnet device(s)
    modify              modify virtnet device
    log                 set log level
    version             show virtio net controller version info
    restart             Do fast restart of controller without killing the service
    validate            validate configurations
    update              update controller
    health              controller health utility
    debug               For debug purpose, cmds can be changed without notice
    stats               stats of virtnet device

options:
  -h, --help            show this help message and exit
  -v, --version         show program's version number and exit

Virtnet supports command-line autocompletion: type part of a command and press Tab.

To check the currently running controller version:

# virtnet -v

Hotplug

This command hotplugs a virtio-net PCIe PF device exposed to the host side.

Syntax

virtnet hotplug -i IB_DEVICE -m MAC -t MTU -n MAX_QUEUES -s MAX_QUEUE_SIZE [-h] [-u SF_NUM] [-f FEATURES] [-l]

| Option | Abbr | Argument Type | Required | Description |
|---|---|---|---|---|
| … | … | N/A | No | Show the help message and exit |
| … | … | String | Yes | RDMA device (e.g., … Options: … |
| … | … | Hex Number | No | Feature bits to be enabled in hex format. Refer to the "Virtio-net Feature Bits" page. Note: Some features are enabled by default. Query the device to show the supported bits. |
| … | … | Number | Yes | MAC address of the virtio-net device. Note: The controller does not validate the MAC address (other than its length). The user must ensure the MAC is valid and unique. |
| … | … | Number | Yes | Maximum transmission unit (MTU) size of the virtio-net device. It must be less than the uplink representor MTU size. |
| … | … | Number | Yes | Mutually exclusive with … Maximum number of virtqueues that can be created for the virtio-net device. TX, RX, and control queues are counted separately (e.g., 3 means 1 TX VQ, 1 RX VQ, and 1 control VQ). Note: This option will be deprecated in the future. |
| … | … | Number | Yes | Mutually exclusive with … Number of data VQ pairs. One VQ pair has one TX queue and one RX queue. It does not count control or admin VQs. From the host side, it appears as … |
| … | … | Number | Yes | Maximum number of buffers in the virtqueue, between 0x4 and 0x8000. Must be a power of 2. |
| … | … | Number | No | SF number to be used for this hotplug device; must be between 2000 and 2999. |
| … | … | N/A | No | Create a legacy (transitional) hotplug device |
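Two of the constraints in the table above are easy to check before calling virtnet hotplug: the maximum queue size must be a power of 2 between 0x4 and 0x8000, and an explicit SF number must lie in 2000-2999. A sketch (hypothetical helper check_hotplug_args); it does not replace the controller's own validation.

```shell
# Sketch: pre-check two hotplug arguments against the table above.
#   $1: max queue size (-s), $2: optional SF number
check_hotplug_args() {
    local qsize=$1 sf=${2:-}
    # Power of 2 between 0x4 (4) and 0x8000 (32768): n & (n - 1) == 0.
    if [ "$qsize" -lt 4 ] || [ "$qsize" -gt 32768 ] ||
       [ $((qsize & (qsize - 1))) -ne 0 ]; then
        echo "queue size $qsize must be a power of 2 in [4, 32768]" >&2
        return 1
    fi
    # Hotplug devices use SF numbers 2000-2999.
    if [ -n "$sf" ] && { [ "$sf" -lt 2000 ] || [ "$sf" -gt 2999 ]; }; then
        echo "SF number $sf must be between 2000 and 2999" >&2
        return 1
    fi
    return 0
}
```

For example, `check_hotplug_args 1024 2000` succeeds, while `check_hotplug_args 1000` fails because 1000 is not a power of 2.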

Output

| Entry | Type | Description |
|---|---|---|
| … | String | The PCIe BDF (bus:device.function) number enumerated by the host. The user should see this PCIe device from the host side. |
| … | String | Unique device SN. It can be used as an index to query/modify/unplug this device. |
| … | Num | Unique device ID. It can be used as an index to query/modify/unplug this device. |
| … | Num | Whether the current device is a transitional hotplug device. |
| … | String | The name of the SF representor that represents the virtio-net device. It should be added to the OVS bridge. |
| … | String | The hotplug virtio-net device MAC address |
| … | Num | Error number if hotplug failed. |
| … | String | Explanation of the error number |

Example

The following example hot plugs one device with MAC address 0C:C4:7A:FF:22:93, MTU 1500, and one virtual queue pair (QP) with a depth of 1024 entries. The device is created on the physical port of mlx5_0.

# virtnet hotplug -i mlx5_0 -m 0C:C4:7A:FF:22:93 -t 1500 -qp 1 -s 1024

{

"bdf": "15:00.0",

"vuid": "MT2151X03152VNETS1D0F0",

"id": 0,

"transitional": 0,

"sf_rep_net_device": "en3f0pf0sf2000",

"mac": "0C:C4:7A:FF:22:93",

"errno": 0,

"errstr": "Success"

}
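After a hotplug, the VUID or ID is needed for later query/modify/unplug calls, and the SF representor must be added to an OVS bridge. A minimal Python sketch for extracting these from the JSON output shown above, assuming the output is exactly that JSON object. The helper names and the bridge name br0 are hypothetical; ovs-vsctl add-port is the standard Open vSwitch command for adding a port to a bridge.

```python
import json


def parse_hotplug_result(output):
    """Extract the identifiers needed after a successful `virtnet hotplug`."""
    result = json.loads(output)
    if result.get("errno", 0) != 0:
        raise RuntimeError(
            f"hotplug failed: errno={result['errno']} ({result.get('errstr')})")
    return {
        "bdf": result["bdf"],                   # PCIe BDF seen by the host
        "vuid": result["vuid"],                 # index for query/modify/unplug
        "id": result["id"],                     # numeric device ID
        "sf_rep": result["sf_rep_net_device"],  # SF representor to add to OVS
    }


def ovs_add_port_cmd(bridge, sf_rep):
    """Build (but do not run) the argv that attaches the representor to a bridge."""
    return ["ovs-vsctl", "add-port", bridge, sf_rep]
```

With the output above, ovs_add_port_cmd("br0", info["sf_rep"]) yields ["ovs-vsctl", "add-port", "br0", "en3f0pf0sf2000"], which can be passed to subprocess.run on the BlueField.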

Unplug

This command unplugs a virtio-net PCIe PF device.

Syntax

virtnet unplug [-h] [-p PF | -u VUID]

Only one of --pf and --vuid is needed to unplug the device.

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

|

|

|

Number |

Yes |

Unique device ID returned by the hotplug command. Can be retrieved by using |

|

|

|

String |

Yes |

Unique device SN returned by the hotplug command. Can be retrieved by using |

Output

|

Entry |

Type |

Description |

|

|

Num |

Error number if operation failed

|

|

|

String |

Explanation of the error number |

Example

To unplug a hotplugged device using its PF ID:

# virtnet unplug -p 0

{'id': '0x1'}

{

"errno": 0,

"errstr": "Success"

}
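As the unplug example shows, several virtnet commands print the parsed request arguments as a Python-style dictionary (here {'id': '0x1'}) before the JSON result, so passing the whole output to a JSON parser fails. A minimal Python sketch that returns the last valid JSON value in the output, assuming the echoed line never contains double-quoted text; the helper name last_json_value is hypothetical.

```python
import json


def last_json_value(output):
    """Return the last JSON value in `output`, skipping non-JSON text such as
    the echoed request line (e.g. {'id': '0x1'}) printed before the result."""
    decoder = json.JSONDecoder()
    value, found, i = None, False, 0
    while i < len(output):
        if output[i] in '{["':
            try:
                # raw_decode parses one JSON value starting at index i.
                value, end = decoder.raw_decode(output, i)
                found, i = True, end
                continue
            except json.JSONDecodeError:
                pass  # e.g. the single-quoted echo line is not valid JSON
        i += 1
    if not found:
        raise ValueError("no JSON value found in output")
    return value
```

For the unplug output above, last_json_value(...)["errno"] == 0 indicates success. The same helper also handles plain JSON string results, such as the "Success" printed by virtnet log.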

List

This command lists all existing virtio-net devices, with global information and individual information for each device.

Syntax

virtnet list [-h]

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

Output

The output has two main sections. The first section, wrapped by controller, contains global configurations and capabilities.

|

Entry |

Type |

Description |

|

|

String |

Entries under this section are global information for the controller |

|

|

String |

The RDMA device manager used to manage internal resources. Should be default |

|

String |

Maximum number of devices that can be hotplugged |

|

String |

Total number of emulated devices managed by the device emulation manager |

|

String |

Maximum number of virt queues supported per device |

|

String |

Maximum number of descriptors the device can send in a single tunnel request |

|

String |

Total list of features supported by device |

|

String |

Currently supported virt queue types: Packed and Split |

|

|

String |

Currently supported event modes: |

Each device has its own section under devices.

|

Entry |

Type |

Description |

|

|

String |

Entries under this section are per-device information |

|

|

Number |

Physical function ID |

|

|

String |

Function type: Static PF, hotplug PF, VF |

|

Number |

Whether the current device is a transitional hotplug device:

|

|

String |

Unique device SN. It can be used as an index to query/modify/unplug a device |

|

String |

Bus:device:function to describe the virtio-net PCIe device |

|

|

Number |

Virtual HCA identifier for the general virtio-net device. For debug purposes only. |

|

|

Number |

Maximum number of virtio-net VFs that can be created for this PF. Valid only for PFs. |

|

Number |

Currently enabled number of virtio-net VFs for this PF |

|

Number |

Number of free dynamic MSIX available for the VFs on this PF |

|

Number |

The minimum number of dynamic MSI-Xs that can be set for a virtio-net VF |

|

Number |

The maximum number of dynamic MSI-Xs that can be set for a virtio-net VF |

|

Number |

The minimum number of dynamic data VQ pairs (i.e., each pair has one TX and one RX queue) that can be set for a virtio-net VF |

|

Number |

The maximum number of dynamic data VQ pairs (i.e., each pair has one TX and one RX queue) that can be set for a virtio-net VF |

|

Number |

Number of free dynamic data VQ pairs (i.e., each pair has one TX and one RX queue) available for the VFs on this PF |

|

|

Number |

Maximum number of MSI-X available for this device |

|

|

Number |

Maximum number of virtual queues that can be created for this device. The driver can choose to create fewer. |

|

|

Number |

Currently enabled number of virtual queues by the driver |

|

Number |

Maximum VQ depth (number of entries) that can be created for each VQ. The driver can use a smaller depth. |

|

String |

MSI-X vector number used by the driver for the virtio config space. 0xFFFF means that no vector is requested. |

|

String |

The permanent MAC address of the virtio-net device. It can only be changed from the controller side via the modify command. |

|

|

Number |

Link status of the virtio-net device on the driver side

|

|

|

Number |

Number of data VQ pairs. One VQ pair has one TX queue and one RX queue. Control and admin VQs are not counted. From the host side, it appears as |

|

Number |

The virtio-net device MTU. Default is 1500. |

|

Number |

The virtio-net device link speed in Mb/s |

|

Number |

The maximum supported length of the RSS key. Only applicable when |

|

Number |

Supported hash types for this device in hex. Only applicable when

|

|

String |

Admin MAC address configured by driver. Not persistent with driver reload or host reboot. |

|

|

Number |

Number of queue pairs/channels configured by the driver. From the host side, it appears as |

|

|

Number |

Scalable function number used for this virtio-net device |

|

|

String |

The RDMA device to use to create the SF |

|

String |

The PCIe device address (bus:device:function) to use to create the SF |

|

String |

Represents the virtio-net device |

|

Number |

The SF representor network interface index |

|

String |

The SF RDMA device interface name |

|

|

Number |

The cross-device MKEY created for the SF. For debug purposes only. |

|

|

Number |

Virtual HCA identifier for the SF. For debug purposes only. |

|

|

Number |

The RQ table ID used for this virtio-net device. For debug purposes only. |

|

|

String |

Whether Accelerated Receive Flow Steering configuration is enabled or disabled |

|

|

String |

Whether dynamic interrupt moderation (DIM) is enabled or disabled |

Example

The following is an example of a list with 1 static PF created:

# virtnet list

{

"controller": {

"emulation_manager": "mlx5_0",

"max_hotplug_devices": "0",

"max_virt_net_devices": "1",

"max_virt_queues": "256",

"max_tunnel_descriptors": "6",

"supported_features": {

"value": "0x8b00037700ef982f",

" 0": "VIRTIO_NET_F_CSUM",

" 1": "VIRTIO_NET_F_GUEST_CSUM",

" 2": "VIRTIO_NET_F_CTRL_GUEST_OFFLOADS",

" 3": "VIRTIO_NET_F_MTU",

" 5": "VIRTIO_NET_F_MAC",

" 11": "VIRTIO_NET_F_HOST_TSO4",

" 12": "VIRTIO_NET_F_HOST_TSO6",

" 15": "VIRTIO_F_MRG_RX_BUFFER",

" 16": "VIRTIO_NET_F_STATUS",

" 17": "VIRTIO_NET_F_CTRL_VQ",

" 18": "VIRTIO_NET_F_CTRL_RX",

" 19": "VIRTIO_NET_F_CTRL_VLAN",

" 21": "VIRTIO_NET_F_GUEST_ANNOUNCE",

" 22": "VIRTIO_NET_F_MQ",

" 23": "VIRTIO_NET_F_CTRL_MAC_ADDR",

" 32": "VIRTIO_F_VERSION_1",

" 33": "VIRTIO_F_IOMMU_PLATFORM",

" 34": "VIRTIO_F_RING_PACKED",

" 36": "VIRTIO_F_ORDER_PLATFORM",

" 37": "VIRTIO_F_SR_IOV",

" 38": "VIRTIO_F_NOTIFICATION_DATA",

" 40": "VIRTIO_F_RING_RESET",

" 41": "VIRTIO_F_ADMIN_VQ",

" 56": "VIRTIO_NET_F_HOST_USO",

" 57": "VIRTIO_NET_F_HASH_REPORT",

" 59": "VIRTIO_NET_F_GUEST_HDRLEN",

" 63": "VIRTIO_NET_F_SPEED_DUPLEX"

},

"supported_virt_queue_types": {

"value": "0x1",

" 0": "SPLIT"

},

"supported_event_modes": {

"value": "0x5",

" 0": "NO_MSIX_MODE",

" 2": "MSIX_MODE"

}

},

"devices": [

{

"pf_id": 0,

"function_type": "static PF",

"transitional": 0,

"vuid": "MT2306XZ00BNVNETS0D0F2",

"pci_bdf": "e2:00.2",

"pci_vhca_id": "0x2",

"pci_max_vfs": "0",

"enabled_vfs": "0",

"msix_num_pool_size": 0,

"min_msix_num": 0,

"max_msix_num": 256,

"min_num_of_qp": 0,

"max_num_of_qp": 127,

"qp_pool_size": 0,

"num_msix": "256",

"num_queues": "255",

"enabled_queues": "0",

"max_queue_size": "256",

"msix_config_vector": "0xFFFF",

"mac": "16:B0:E0:41:B8:0D",

"link_status": "1",

"max_queue_pairs": "127",

"mtu": "1500",

"speed": "100000",

"rss_max_key_size": "0",

"supported_hash_types": "0x0",

"ctrl_mac": "00:00:00:00:00:00",

"ctrl_mq": "0",

"sf_num": 1000,

"sf_parent_device": "mlx5_0",

"sf_parent_device_pci_addr": "0000:03:00.0",

"sf_rep_net_device": "en3f0pf0sf1000",

"sf_rep_net_ifindex": 10,

"sf_rdma_device": "mlx5_3",

"sf_cross_mkey": "0x12642",

"sf_vhca_id": "0x124",

"sf_rqt_num": "0x0",

"aarfs": "disabled",

"dim": "disabled"

}

]

}
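The supported_features value is a 64-bit mask whose set bits are listed by name beneath it. A minimal Python sketch that decodes such a hex mask, using only the bit names printed by virtnet list and virtnet query in this guide; bits not in that table are reported as UNKNOWN_BIT_n. The names FEATURE_NAMES and decode_features are illustrative.

```python
# Bit names as printed by `virtnet list` / `virtnet query` in this guide.
FEATURE_NAMES = {
    0: "VIRTIO_NET_F_CSUM", 1: "VIRTIO_NET_F_GUEST_CSUM",
    2: "VIRTIO_NET_F_CTRL_GUEST_OFFLOADS", 3: "VIRTIO_NET_F_MTU",
    5: "VIRTIO_NET_F_MAC", 11: "VIRTIO_NET_F_HOST_TSO4",
    12: "VIRTIO_NET_F_HOST_TSO6", 15: "VIRTIO_F_MRG_RX_BUFFER",
    16: "VIRTIO_NET_F_STATUS", 17: "VIRTIO_NET_F_CTRL_VQ",
    18: "VIRTIO_NET_F_CTRL_RX", 19: "VIRTIO_NET_F_CTRL_VLAN",
    21: "VIRTIO_NET_F_GUEST_ANNOUNCE", 22: "VIRTIO_NET_F_MQ",
    23: "VIRTIO_NET_F_CTRL_MAC_ADDR", 32: "VIRTIO_F_VERSION_1",
    33: "VIRTIO_F_IOMMU_PLATFORM", 34: "VIRTIO_F_RING_PACKED",
    36: "VIRTIO_F_ORDER_PLATFORM", 37: "VIRTIO_F_SR_IOV",
    38: "VIRTIO_F_NOTIFICATION_DATA", 40: "VIRTIO_F_RING_RESET",
    41: "VIRTIO_F_ADMIN_VQ", 52: "VIRTIO_NET_F_VQ_NOTF_COAL",
    53: "VIRTIO_NET_F_NOTF_COAL", 56: "VIRTIO_NET_F_HOST_USO",
    57: "VIRTIO_NET_F_HASH_REPORT", 59: "VIRTIO_NET_F_GUEST_HDRLEN",
    63: "VIRTIO_NET_F_SPEED_DUPLEX",
}


def decode_features(value):
    """Map each set bit of a hex feature mask (e.g. "0x8b00...") to its name."""
    mask = int(value, 16)  # int() accepts the "0x" prefix with base 16
    return {bit: FEATURE_NAMES.get(bit, f"UNKNOWN_BIT_{bit}")
            for bit in range(64) if (mask >> bit) & 1}
```

Applied to "0x8b00037700ef982f" from the example above, the decoded bits match the 27 names printed by virtnet list.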

Query

This command queries detailed information for a given device, including all VQ information if created.

Syntax

virtnet query [-h] {[-a] | [-p PF] [-v VF] | [-u VUID]} [--dbg_stats] [-b] [--latency_stats] [-q QUEUE_ID] [--stats_clear]

The options --pf, --vf, --vuid, and --all are mutually exclusive (except --pf and --vf, which can be used together), but one of them must be applied.

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

|

|

|

N/A |

No |

Query all the detailed information for all available devices. This can be time-consuming when a large number of devices is available. |

|

|

|

Number |

No |

Unique device ID for the PF. Can be retrieved by using |

|

|

|

Number |

No |

Unique device ID for the VF. Can be retrieved by using |

|

|

|

String |

No |

Unique device SN for the device (PF/VF). Can be retrieved by using |

|

|

|

Number |

No |

Queue index of the device VQs |

|

|

|

N/A |

No |

Query brief information of the device (does not print VQ information) |

|

|

N/A |

N/A |

No |

Print debug counters and information.

Note: This option will be deprecated in the future.

|

|

|

N/A |

N/A |

No |

Clear all the debug counter stats.

Note: This option will be deprecated in the future.

|

Output

The output has two main sections.

The first section, wrapped by devices, contains device-level configuration and capabilities, most of which are the same as in the list command. Only the entries that differ between the two are covered here.

| Entry | Type | Description |
|---|---|---|
| devices | String | Entries under this section are per-device information |
| pci_dev_id | String | Virtio-net PCIe device ID. Default: 0x1041. Note: This field will be deprecated in the future. |
| pci_vendor_id | String | Virtio-net PCIe vendor ID. Default: 0x1af4. Note: This field will be deprecated in the future. |
| pci_class_code | String | Virtio-net PCIe device class code. Default: 0x20000. Note: This field will be deprecated in the future. |
| pci_subsys_id | String | Virtio-net PCIe subsystem ID. Default: 0x1041. Note: This field will be deprecated in the future. |
| pci_subsys_vendor_id | String | Virtio-net PCIe subsystem vendor ID. Default: 0x1af4. Note: This field will be deprecated in the future. |
| pci_revision_id | String | Virtio-net PCIe revision ID. Default: 1. Note: This field will be deprecated in the future. |
| device_features | String | Enabled device feature bits according to the virtio spec. Refer to section "Feature Bits". |
| driver_features | String | Enabled driver feature bits according to the virtio spec. Valid only when the driver probes the device. Refer to section "Feature Bits". |
| status | String | Device status field bit masks according to the virtio spec: ACKNOWLEDGE (bit 0), DRIVER (bit 1), DRIVER_OK (bit 2), FEATURES_OK (bit 3), DEVICE_NEEDS_RESET (bit 6), FAILED (bit 7) |
| reset | Number | Whether the virtio-net device is undergoing reset: 0 – not undergoing reset; 1 – undergoing reset |
| enabled | Number | Whether the virtio-net device is enabled: 0 – disabled (an FLR has likely occurred); 1 – enabled |
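The status entry is a bit mask, so a value such as 0xf needs decoding before it can be read. A minimal Python sketch using the bit positions listed in the table above. The readiness rule in driver_ready (FEATURES_OK and DRIVER_OK set, no DEVICE_NEEDS_RESET or FAILED) is an assumed heuristic for illustration, not a virtnet definition; note also that virtnet itself prints bit 0 as "ACK".

```python
# Status bits listed in the table above (virtio device status field).
STATUS_BITS = {0: "ACKNOWLEDGE", 1: "DRIVER", 2: "DRIVER_OK",
               3: "FEATURES_OK", 6: "DEVICE_NEEDS_RESET", 7: "FAILED"}


def decode_status(value):
    """Return the names of the status bits set in a hex string such as "0xf"."""
    status = int(value, 16)
    return [name for bit, name in sorted(STATUS_BITS.items()) if status & (1 << bit)]


def driver_ready(value):
    """Assumed heuristic: FEATURES_OK and DRIVER_OK set, and no error bit set."""
    status = int(value, 16)
    ok_bits = (1 << 2) | (1 << 3)      # DRIVER_OK | FEATURES_OK
    error_bits = (1 << 6) | (1 << 7)   # DEVICE_NEEDS_RESET | FAILED
    return (status & ok_bits) == ok_bits and not (status & error_bits)
```

For the "0xf" status in the query example, decode_status returns ACKNOWLEDGE, DRIVER, DRIVER_OK, and FEATURES_OK.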

The second section, wrapped by enabled-queues-info, provides per-VQ information:

| Entry | Type | Description |
|---|---|---|
| index | Number | VQ index, starting from 0 and bounded by enabled_queues |
| size | Number | Driver VQ depth (number of entries). It is bounded by the device max_queue_size. |
| msix_vector | Number | The MSI-X vector number used for this VQ |
| enable | Number | Whether the current VQ is enabled: 0 – disabled; 1 – enabled |
| notify_offset | Number | Read by the driver to calculate the offset from the start of the notification structure at which this virtqueue is located |
| descriptor_address | Number | The physical address of the descriptor area |
| driver_address | Number | The physical address of the driver area |
| device_address | Number | The physical address of the device area |
| received_desc | Number | Total number of descriptors received by the device on this VQ. Note: This field will be deprecated in the future. |
| completed_desc | Number | Total number of descriptors completed by the device on this VQ. Note: This field will be deprecated in the future. |
| bad_desc_errors | Number | Total number of bad descriptors received on this VQ. Note: This field will be deprecated in the future. |
| error_cqes | Number | Total number of error CQ entries on this VQ. Note: This field will be deprecated in the future. |
| exceed_max_chain | Number | Total number of chained descriptors received that exceed the maximum chain length allowed by the device. Note: This field will be deprecated in the future. |
| invalid_buffer | Number | Total number of times the device tried to read or write a buffer that is not registered to the device. Note: This field will be deprecated in the future. |
| batch_number | Number | The number of RX descriptors for the last received packet. Relevant for BlueField-3 only. Note: This field will be deprecated in the future. |
| dma_q_used_number | Number | The DMA queue index used for this VQ. Relevant for BlueField-3 only. Note: This field will be deprecated in the future. |
| handler_schd_number | Number | Scheduler number for this VQ. Relevant for BlueField-3 only. Note: This field will be deprecated in the future. |
| aux_handler_schd_number | Number | Auxiliary scheduler number for this VQ. Relevant for BlueField-3 only. Note: This field will be deprecated in the future. |
| max_post_desc_number | Number | Maximum number of posted descriptors on this VQ. Relevant for DPA. Note: This field will be deprecated in the future. |
| total_bytes | Number | Total number of bytes handled by this VQ. Relevant for BlueField-3 only. Note: This field will be deprecated in the future. |
| rq_cq_max_count | Number | Event generation moderation counter of the queue. Relevant for RQ. Note: This field will be deprecated in the future. |
| rq_cq_period | Number | Event generation moderation timer for the queue, in 1 µs granularity. Relevant for RQ. Note: This field will be deprecated in the future. |
| rq_cq_period_mode | Number | Current period mode for the RQ: 0x0 – default_mode (use the device's best defaults); 0x1 – upon_event (the queue_period timer restarts upon event generation); 0x2 – upon_cqe (the queue_period timer restarts upon completion generation). Note: This field will be deprecated in the future. |

Example

The following is an example of querying the information of the first PF:

# virtnet query -p 0

{

"devices": [

{

"pf_id": 0,

"function_type": "static PF",

"transitional": 0,

"vuid": "MT2349X00018VNETS0D0F1",

"pci_bdf": "23:00.1",

"pci_vhca_id": "0x1",

"pci_max_vfs": "0",

"enabled_vfs": "0",

"pci_dev_id": "0x1041",

"pci_vendor_id": "0x1af4",

"pci_class_code": "0x20000",

"pci_subsys_id": "0x1041",

"pci_subsys_vendor_id": "0x1af4",

"pci_revision_id": "1",

"device_feature": {

"value": "0x8930032300ef182f",

" 0": "VIRTIO_NET_F_CSUM",

" 1": "VIRTIO_NET_F_GUEST_CSUM",

" 2": "VIRTIO_NET_F_CTRL_GUEST_OFFLOADS",

" 3": "VIRTIO_NET_F_MTU",

" 5": "VIRTIO_NET_F_MAC",

" 11": "VIRTIO_NET_F_HOST_TSO4",

" 12": "VIRTIO_NET_F_HOST_TSO6",

" 16": "VIRTIO_NET_F_STATUS",

" 17": "VIRTIO_NET_F_CTRL_VQ",

" 18": "VIRTIO_NET_F_CTRL_RX",

" 19": "VIRTIO_NET_F_CTRL_VLAN",

" 21": "VIRTIO_NET_F_GUEST_ANNOUNCE",

" 22": "VIRTIO_NET_F_MQ",

" 23": "VIRTIO_NET_F_CTRL_MAC_ADDR",

" 32": "VIRTIO_F_VERSION_1",

" 33": "VIRTIO_F_IOMMU_PLATFORM",

" 37": "VIRTIO_F_SR_IOV",

" 40": "VIRTIO_F_RING_RESET",

" 41": "VIRTIO_F_ADMIN_VQ",

" 52": "VIRTIO_NET_F_VQ_NOTF_COAL",

" 53": "VIRTIO_NET_F_NOTF_COAL",

" 56": "VIRTIO_NET_F_HOST_USO",

" 59": "VIRTIO_NET_F_GUEST_HDRLEN",

" 63": "VIRTIO_NET_F_SPEED_DUPLEX"

},

"driver_feature": {

"value": "0x8000002300ef182f",

" 0": "VIRTIO_NET_F_CSUM",

" 1": "VIRTIO_NET_F_GUEST_CSUM",

" 2": "VIRTIO_NET_F_CTRL_GUEST_OFFLOADS",

" 3": "VIRTIO_NET_F_MTU",

" 5": "VIRTIO_NET_F_MAC",

" 11": "VIRTIO_NET_F_HOST_TSO4",

" 12": "VIRTIO_NET_F_HOST_TSO6",

" 16": "VIRTIO_NET_F_STATUS",

" 17": "VIRTIO_NET_F_CTRL_VQ",

" 18": "VIRTIO_NET_F_CTRL_RX",

" 19": "VIRTIO_NET_F_CTRL_VLAN",

" 21": "VIRTIO_NET_F_GUEST_ANNOUNCE",

" 22": "VIRTIO_NET_F_MQ",

" 23": "VIRTIO_NET_F_CTRL_MAC_ADDR",

" 32": "VIRTIO_F_VERSION_1",

" 33": "VIRTIO_F_IOMMU_PLATFORM",

" 37": "VIRTIO_F_SR_IOV",

" 63": "VIRTIO_NET_F_SPEED_DUPLEX"

},

"status": {

"value": "0xf",

" 0": "ACK",

" 1": "DRIVER",

" 2": "DRIVER_OK",

" 3": "FEATURES_OK"

},

"reset": "0",

"enabled": "1",

"num_msix": "64",

"num_queues": "63",

"enabled_queues": "63",

"max_queue_size": "256",

"msix_config_vector": "0x0",

"mac": "4E:6A:E1:41:D8:BE",

"link_status": "1",

"max_queue_pairs": "31",

"mtu": "1500",

"speed": "200000",

"rss_max_key_size": "0",

"supported_hash_types": "0x0",

"ctrl_mac": "4E:6A:E1:41:D8:BE",

"ctrl_mq": "31",

"sf_num": 1000,

"sf_parent_device": "mlx5_0",

"sf_parent_device_pci_addr": "0000:03:00.0",

"sf_rep_net_device": "en3f0pf0sf1000",

"sf_rep_net_ifindex": 12,

"sf_rdma_device": "mlx5_2",

"sf_cross_mkey": "0xC042",

"sf_vhca_id": "0x7E8",

"sf_rqt_num": "0x0",

"aarfs": "disabled",

"dim": "disabled",

"enabled-queues-info": [

{

"index": "0",

"size": "256",

"msix_vector": "0x1",

"enable": "1",

"notify_offset": "0",

"descriptor_address": "0x10cece000",

"driver_address": "0x10cecf000",

"device_address": "0x10cecf240",

"received_desc": "256",

"completed_desc": "0",

"bad_desc_errors": "0",

"error_cqes": "0",

"exceed_max_chain": "0",

"invalid_buffer": "0",

"batch_number": "64",

"dma_q_used_number": "6",

"handler_schd_number": "4",

"aux_handler_schd_number": "3",

"max_post_desc_number": "0",

"total_bytes": "0",

"rq_cq_max_count": "0",

"rq_cq_period": "0",

"rq_cq_period_mode": "1"

},

......

}

]

}

]

}
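Comparing device_feature with driver_feature shows which offered features the host driver actually accepted. A minimal Python sketch that splits the two masks into negotiated, offered-but-not-accepted, and driver-only bits; the function names are illustrative. A spec-compliant driver only acknowledges features the device offers, so driver_only is expected to be empty.

```python
def set_bits(value):
    """List the positions of the set bits in a 64-bit integer."""
    return [bit for bit in range(64) if (value >> bit) & 1]


def feature_negotiation(device_hex, driver_hex):
    """Compare the device_feature and driver_feature masks from `virtnet query`."""
    device, driver = int(device_hex, 16), int(driver_hex, 16)
    return {
        "negotiated": set_bits(device & driver),
        "offered_not_accepted": set_bits(device & ~driver),
        "driver_only": set_bits(driver & ~device),  # expected to be empty
    }
```

For the query example above, the bits offered but not accepted are 40, 41, 52, 53, 56, and 59 (VIRTIO_F_RING_RESET, VIRTIO_F_ADMIN_VQ, the two notification-coalescing bits, VIRTIO_NET_F_HOST_USO, and VIRTIO_NET_F_GUEST_HDRLEN).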

Stats

This command is the recommended way to obtain all packet counter information. Packet counter information is also available through the virtnet list and virtnet query commands, but those fields will be deprecated in the future.

This command retrieves the packet counters for a specified device, including detailed information for all Rx and Tx virtqueues (VQs).

To enable or disable byte-wise packet counters for each Rx queue, use the following command:

virtnet modify {[-p PF] [-v VF]} device -pkt_cnt {enable,disable}

When enabled, byte-wise packet counters are initialized to zero.

When disabled, the previous values are retained for debugging purposes. The command will still return these old, disabled counter values.

Packet counters are attached to an RQ, so the RQ must be created first. This means the virtio-net device must be probed by the driver on the host OS before running the commands above.

Syntax

virtnet stats [-h] {[-p PF] [-v VF] | [-u VUID]} [-q QUEUE_ID]

The options --pf, --vf, and --vuid are mutually exclusive (except --pf and --vf, which can be used together), but one of them must be applied.

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

|

|

|

Number |

No |

Unique device ID for the PF. Can be retrieved by using |

|

|

|

Number |

No |

Unique device ID for the VF. Can be retrieved by using |

|

|

|

String |

No |

Unique device SN for the device (PF/VF). Can be retrieved by using |

|

|

|

Number |

No |

Queue index of the device RQs or SQs |

Output

The output has two sections.

The first section, wrapped by device, contains device details along with the packet counter statistics enable state.

| Entry | Type | Description |
|---|---|---|
| device | String | Entries under this section are per-device information |
| pf_id | String | Physical function ID |
| packet_counters | String | Indicates whether the packet counters feature is enabled or disabled |

The second section, wrapped by queues-stats, contains information for each VQ.

| Entry | Type | Description |
|---|---|---|
| VQ Index | Number | The VQ index, starting at 0 (the first RQ) and continuing up to the last SQ |
| rx_64_or_less_octet_packets | Number | The number of packets received with a size of 0 to 64 bytes. Relevant for BlueField-3 RQ. |
| rx_65_to_127_octet_packets | Number | The number of packets received with a size of 65 to 127 bytes. Relevant for BlueField-3 RQ. |
| rx_128_to_255_octet_packets | Number | The number of packets received with a size of 128 to 255 bytes. Relevant for BlueField-3 RQ. |
| rx_256_to_511_octet_packets | Number | The number of packets received with a size of 256 to 511 bytes. Relevant for BlueField-3 RQ. |
| rx_512_to_1023_octet_packets | Number | The number of packets received with a size of 512 to 1023 bytes. Relevant for BlueField-3 RQ. |
| rx_1024_to_1522_octet_packets | Number | The number of packets received with a size of 1024 to 1522 bytes. Relevant for BlueField-3 RQ. |
| rx_1523_to_2047_octet_packets | Number | The number of packets received with a size of 1523 to 2047 bytes. Relevant for BlueField-3 RQ. |
| rx_2048_to_4095_octet_packets | Number | The number of packets received with a size of 2048 to 4095 bytes. Relevant for BlueField-3 RQ. |
| rx_4096_to_8191_octet_packets | Number | The number of packets received with a size of 4096 to 8191 bytes. Relevant for BlueField-3 RQ. |
| rx_8192_to_9022_octet_packets | Number | The number of packets received with a size of 8192 to 9022 bytes. Relevant for BlueField-3 RQ. |
| received_desc | Number | Total number of descriptors received by the device on this VQ |
| completed_desc | Number | Total number of descriptors completed by the device on this VQ |
| bad_desc_errors | Number | Total number of bad descriptors received on this VQ |
| error_cqes | Number | Total number of error CQ entries on this VQ |
| exceed_max_chain | Number | Total number of chained descriptors received that exceed the maximum chain length allowed by the device |
| invalid_buffer | Number | Total number of times the device tried to read or write a buffer that is not registered to the device |
| batch_number | Number | The number of RX descriptors for the last received packet. Relevant for BlueField-3. |
| dma_q_used_number | Number | The DMA queue index used for this VQ. Relevant for BlueField-3. |
| handler_schd_number | Number | Scheduler number for this VQ. Relevant for BlueField-3. |
| aux_handler_schd_number | Number | Auxiliary scheduler number for this VQ. Relevant for BlueField-3. |
| max_post_desc_number | Number | Maximum number of posted descriptors on this VQ. Relevant for DPA. |
| total_bytes | Number | Total number of bytes handled by this VQ. Relevant for BlueField-3. |
| rq_cq_max_count | Number | Event generation moderation counter of the queue. Relevant for RQ. |
| rq_cq_period | Number | Event generation moderation timer for the queue, in 1 µs granularity. Relevant for RQ. |
| rq_cq_period_mode | Number | Current period mode for the RQ: 0x0 – default_mode (use the device's best defaults); 0x1 – upon_event (the queue_period timer restarts upon event generation); 0x2 – upon_cqe (the queue_period timer restarts upon completion generation) |
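When scanning many queues, it helps to reduce each VQ entry to its period mode name and any non-zero error counters. A minimal Python sketch over one parsed entry of queues-stats, using the field names and mode values from the table above; the function name summarize_vq is hypothetical. Values are converted with str() first because the example output mixes JSON numbers and numeric strings.

```python
ERROR_COUNTERS = ("bad_desc_errors", "error_cqes",
                  "exceed_max_chain", "invalid_buffer")
PERIOD_MODES = {0x0: "default_mode", 0x1: "upon_event", 0x2: "upon_cqe"}


def summarize_vq(vq):
    """Return the RQ period mode name and any non-zero error counters for one VQ."""
    # Base 0 accepts both "1" and "0x1".
    mode = int(str(vq.get("rq_cq_period_mode", "0")), 0)
    errors = {name: int(str(vq[name])) for name in ERROR_COUNTERS
              if name in vq and int(str(vq[name])) != 0}
    return {"period_mode": PERIOD_MODES.get(mode, f"unknown ({mode})"),
            "errors": errors}
```

For the VQ 0 entry in the example that follows, summarize_vq reports the upon_event mode and no errors.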

Example

The following is an example of querying the packet statistics information of PF 0 and VQ 0 (i.e., RQ):

# virtnet stats -p 0 -q 0

{'pf': '0x0', 'queue_id': '0x0'}

{

"device": {

"pf_id": 0,

"packet_counters": "Enabled",

"queues-stats": [

{

"VQ Index": 0,

"rx_64_or_less_octet_packets": 0,

"rx_65_to_127_octet_packets": 259,

"rx_128_to_255_octet_packets": 0,

"rx_256_to_511_octet_packets": 0,

"rx_512_to_1023_octet_packets": 0,

"rx_1024_to_1522_octet_packets": 0,

"rx_1523_to_2047_octet_packets": 0,

"rx_2048_to_4095_octet_packets": 199,

"rx_4096_to_8191_octet_packets": 0,

"rx_8192_to_9022_octet_packets": 0,

"received_desc": "4096",

"completed_desc": "0",

"bad_desc_errors": "0",

"error_cqes": "0",

"exceed_max_chain": "0",

"invalid_buffer": "0",

"batch_number": "64",

"dma_q_used_number": "0",

"handler_schd_number": "44",

"aux_handler_schd_number": "43",

"max_post_desc_number": "0",

"total_bytes": "0",

"err_handler_schd_num": "0",

"rq_cq_max_count": "0",

"rq_cq_period": "0",

"rq_cq_period_mode": "1"

}

]

}

}
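The rx_*_octet_packets entries form a packet-size histogram for the RQ. A minimal Python sketch that extracts the non-zero buckets and the total from the parsed output above, assuming the layout shown (queues-stats nested under device). Because disabled counters keep their old values, the sketch also reports whether packet_counters is Enabled; the function name rx_size_histogram is hypothetical.

```python
def rx_size_histogram(stats, vq_index=0):
    """Return (non-zero size buckets, total packets, counters enabled) for one VQ."""
    device = stats["device"]
    vq = next(q for q in device["queues-stats"] if q["VQ Index"] == vq_index)
    buckets = {key: int(value) for key, value in vq.items()
               if key.startswith("rx_") and key.endswith("_octet_packets")}
    nonzero = {key: count for key, count in buckets.items() if count}
    # Disabled counters retain stale values, so report the enable state too.
    enabled = device.get("packet_counters") == "Enabled"
    return nonzero, sum(buckets.values()), enabled
```

For the example above, the result is 259 packets of 65 to 127 bytes and 199 packets of 2048 to 4095 bytes, 458 packets in total, with counters enabled.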

Modify Device

This command modifies the attributes of a given device.

Syntax

virtnet modify [-h] [-p PF] [-v VF] [-u VUID] [-a] {device,queue} ...

The options --pf, --vf, --vuid, and --all are mutually exclusive (except --pf and --vf, which can be used together), but one of them must be applied.

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

|

|

|

N/A |

No |

Modify all available device attributes depending on the selection of

|

|

|

|

Number |

No |

Unique device ID for the PF. May be retrieved using |

|

|

|

Number |

No |

Unique device ID for the VF. May be retrieved using |

|

|

|

String |

No |

Unique device SN for the device (PF/VF). May be retrieved by using |

|

|

N/A |

Number |

No |

Modify device specific options |

|

|

N/A |

N/A |

No |

Modify queue specific options |

Device Options

virtnet modify device [-h] [-m MAC] [-t MTU] [-e SPEED] [-l LINK]

[-s STATE] [-f FEATURES]

[-o SUPPORTED_HASH_TYPES] [-k RSS_MAX_KEY_SIZE]

[-r RX_MODE] [-n MSIX_NUM] [-q MAX_QUEUE_SIZE]

[-d DST_PORT] [-b RX_DMA_Q_NUM]

[-dc {enable,disable}] [-pkt_cnt {enable,disable}]

[-aarfs {enable,disable}] [-qp MAX_QUEUE_PAIRS] [-dim {enable,disable}]

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

String |

No |

Show the help message and exit |

|

|

|

Number |

No |

The virtio-net device MAC address |

|

|

|

Number |

No |

The virtio-net device MTU |

|

|

|

Number |

No |

The virtio-net device link speed in Mb/s |

|

|

|

Number |

No |

The virtio-net device link status

|

|

|

|

Number |

No |

The virtio-net device status field bit masks according to the virtio spec:

|

|

|

|

Hex Number |

No |

The virtio-net device feature bits according to the virtio spec |

|

|

|

Hex Number |

No |

Supported hash types for this device in hex. Only applicable when

|

|

|

|

Number |

No |

The maximum supported length of RSS key. Only applicable when |

|

|

|

Hex Number |

No |

The RX mode exposed to the driver:

|

|

|

|

Number |

No |

Maximum number of VQs (both data and ctrl/admin VQ). It is bound by the cap of

|

|

|

|

Number |

No |

Maximum number of buffers in the VQ. The queue size value is always a power of 2. The maximum queue size value is 32768. |

|

|

|

Number |

No |

Number of data VQ pairs. One VQ pair has one TX queue and one RX queue. Control or admin VQs are not counted. From the host side, it appears as |

|

|

|

Hex number |

No |

Modify IPv4

Note: This option will be deprecated in the future.

|

|

|

|

Number |

No |

Modify max RX DMA queue number |

|

|

|

String |

No |

Enable/disable virtio-net drop counter |

|

|

|

String |

No |

Enable/disable virtio-net device packet counter stats |

|

|

|

String |

No |

Enable/disable auto-AARFS. Only applicable for PF devices (static PF and hotplug PF). |

|

|

|

String |

No |

Enable/disable dynamic interrupt moderation (DIM) |

The following modify options require unbinding the virtio device from the virtio-net driver in the guest OS: mac, mtu, features, msix_num, max_queue_size, and max_queue_pairs.

For example:

On the guest OS:

[host]# echo "bdf of virtio-dev" > /sys/bus/pci/drivers/virtio-pci/unbind

On the DPU side:

Modify the maximum queue size of the VF device:

[dpu]# virtnet modify -p 0 -v 0 device -q 2048

Modify the MSI-X number of VF device:

[dpu]# virtnet modify -p 0 -v 0 device -n 8

Modify the MAC address of the virtio-net PF with device ID 0 (the device can also be selected by its VUID string, which can be obtained through virtnet list or virtnet query):

[dpu]# virtnet modify -p 0 device -m 0C:C4:7A:FF:22:93

Modify the maximum number of queue pairs of VF device:

[dpu]# virtnet modify -p 0 -v 0 device -qp 2

On the guest OS:

[host]# echo "bdf of virtio-dev" > /sys/bus/pci/drivers/virtio-pci/bind
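The unbind, modify, and bind sequence above can be planned programmatically so that the unbind step is never forgotten. A minimal Python sketch that only builds the command strings (host side and DPU side) without running them, using the option list above that requires unbinding and the short flags from the virtnet modify device syntax. The function name modify_plan and the example BDF 0000:15:00.0 are hypothetical, and issuing one modify call per option simply mirrors the examples above.

```python
# Options that require unbinding the device in the guest OS (see the list above).
REQUIRES_UNBIND = {"mac", "mtu", "features", "msix_num",
                   "max_queue_size", "max_queue_pairs"}
# Short flags from the `virtnet modify device` syntax.
FLAGS = {"mac": "-m", "mtu": "-t", "speed": "-e", "link": "-l",
         "features": "-f", "msix_num": "-n", "max_queue_size": "-q",
         "max_queue_pairs": "-qp"}


def modify_plan(bdf, pf, vf=None, **changes):
    """Return an ordered list of (side, command) pairs; nothing is executed."""
    target = ["virtnet", "modify", "-p", str(pf)]
    if vf is not None:
        target += ["-v", str(vf)]
    needs_unbind = bool(REQUIRES_UNBIND.intersection(changes))
    plan = []
    if needs_unbind:
        plan.append(("host", f"echo {bdf} > /sys/bus/pci/drivers/virtio-pci/unbind"))
    for name, value in changes.items():
        plan.append(("dpu", " ".join(target + ["device", FLAGS[name], str(value)])))
    if needs_unbind:
        plan.append(("host", f"echo {bdf} > /sys/bus/pci/drivers/virtio-pci/bind"))
    return plan
```

For example, modify_plan("0000:15:00.0", pf=0, vf=0, max_queue_size=2048) wraps the DPU command virtnet modify -p 0 -v 0 device -q 2048 between the host unbind and bind steps, while a link-only change produces a single DPU command.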

Queue Options

virtnet modify queue [-h] -e {event,cqe} -n PERIOD -c MAX_COUNT

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

String |

No |

Show the help message and exit |

|

|

|

String |

No |

RQ period mode: |

|

|

|

Number |

No |

The event generation moderation timer for the queue, in 1 µs granularity |

|

|

|

Number |

No |

The maximum event generation moderation counter for the queue |

Output

|

Entry |

Type |

Description |

|

|

Number |

Error number:

|

|

|

String |

Explanation of the error number |

Example

To modify the link status of the first PF to be down:

# virtnet modify -p 0 device -l 0

{'pf': '0x0', 'all': '0x0', 'subcmd': '0x0', 'link': '0x0'}

{

"errno": 0,

"errstr": "Success"

}

Log

This command manages the log level of virtio-net-controller.

Syntax

virtnet log [-h] -l {info,err,debug}

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

|

|

|

String |

Yes |

Change the log level of |

Output

|

Entry |

Type |

Description |

|

|

String |

Success or failed with message |

Example

To change the log level to info:

# virtnet log -l info

{'level': 'info'}

"Success"

To follow the log output of the controller service, starting with the latest 100 lines:

$ journalctl -u virtio-net-controller -f -n 100

Validate

This command validates configurations of virtio-net-controller.

Syntax

virtnet validate [-h] -f PATH_TO_FILE

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

|

|

|

String |

No |

Validate the JSON format of the |

Output

|

Entry |

Type |

Description |

|

|

String |

Success or failed with message |

Example

To check if virtnet.conf is a valid JSON file:

# virtnet validate -f /opt/mellanox/mlnx_virtnet/virtnet.conf

/opt/mellanox/mlnx_virtnet/virtnet.conf is valid

Version

This command prints the current version of virtio-net-controller and the version it will be updated to.

Syntax

virtnet version [-h]

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

Output

|

Entry |

Type |

Description |

|

|

String |

The original controller version |

|

|

String |

The controller version to be updated to |

Example

Check current and next available controller version:

# virtnet version

[

{

"Original Controller": "v24.10.17"

},

{

"Destination Controller": "v24.10.19"

}

]
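To decide in a script whether a live update is worthwhile, the two versions in the output above can be compared. A minimal Python sketch, assuming versions always have the purely numeric dotted form shown (for example v24.10.17); a suffix such as -1 would need extra handling. The function names are hypothetical.

```python
import json


def parse_version(tag):
    """Turn "v24.10.17" into (24, 10, 17) for numeric comparison."""
    return tuple(int(part) for part in tag.lstrip("v").split("."))


def update_available(version_output):
    """True if the destination controller is newer than the original one."""
    entries = json.loads(version_output)  # a list of single-key objects
    merged = {key: value for entry in entries for key, value in entry.items()}
    return (parse_version(merged["Destination Controller"])
            > parse_version(merged["Original Controller"]))
```

For the example above, update_available returns True, since v24.10.19 is newer than v24.10.17.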

Update

This command performs a live update to another controller version installed on the OS. Instead of a complete shutdown followed by recreating all existing devices, this procedure switches to the new version with minimal downtime.

Syntax

virtnet update [-h] [-s | -t]

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

|

|

|

N/A |

No |

Start live update virtio-net-controller |

|

|

|

N/A |

No |

Check live update status |

Output

|

Entry |

Type |

Description |

|

|

String |

Whether the update started successfully |

Example

To start the live update process, run:

# virtnet update -s

{'start': '0x1'}

"Update started, use 'virtnet update -t' or check logs for status"

To check the update status during the update process:

# virtnet update -t

{'status': '0x1'}

{

"status": "inactive",

"last live update status": "success",

"time_used (s)": 0.604152

}
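Because virtnet update -s returns immediately, a script can poll virtnet update -t until the update is no longer running. A minimal Python sketch, assuming "status": "inactive" means no update is in progress (as in the output above) and that the caller supplies query_status, a hypothetical callable that runs virtnet update -t and returns its parsed JSON object. The timeout and interval defaults are arbitrary.

```python
import time


def wait_for_live_update(query_status, timeout_s=60.0, interval_s=1.0):
    """Poll until the update is inactive; return (succeeded, last status dict)."""
    deadline = time.monotonic() + timeout_s
    while True:
        status = query_status()
        # Assumption: "inactive" means no live update is currently running.
        if status.get("status") == "inactive":
            return status.get("last live update status") == "success", status
        if time.monotonic() >= deadline:
            raise TimeoutError("live update still in progress")
        time.sleep(interval_s)
```

Passing the status query in as a callable keeps the polling logic separate from how the command is executed and makes it easy to test with canned responses.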

Restart

This command performs a fast restart of the virtio-net-controller service. Compared to a regular restart (using systemctl restart virtio-net-controller), this command has shorter downtime per device.

Syntax

virtnet restart [-h]

|

Option |

Abbr |

Argument Type |

Required |

Description |

|

|

|

N/A |

No |

Show the help message and exit |

Output

|

Entry |

Type |

Description |

|

|

String |

Whether the fast restart finished successfully

|

Example

To perform a fast restart, run:

# virtnet restart

SUCCESS

Health

This command shows health information for given devices.

The virtio-net driver must be loaded for this command to show valid information.

Syntax

virtnet health [-h] {[-a] | [-p PF] [-v VF] | [-u VUID]} [show]

The options --pf, --vf, --vuid, and --all are mutually exclusive (except --pf and --vf, which can be used together), but one of them must be applied.

| Option | Abbr | Argument Type | Required | Description |
|---|---|---|---|---|
| --help | -h | N/A | No | Show the help message and exit |
| --all | -a | N/A | No | Query detailed information for all available devices. This can be time-consuming when a large number of devices is available. |
| --pf | -p | Number | No | Unique device ID for the PF. Can be retrieved by using |
| --vf | -v | Number | No | Unique device ID for the VF. Can be retrieved by using |
| --vuid | -u | String | No | Unique device SN for the device (PF/VF). Can be retrieved by using |

| Sub-command | Required | Description |
|---|---|---|
| show | Yes | Show health information for the specified devices |
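The selector rule described above can be checked before building the command line. This is a hypothetical Python sketch that mirrors the stated rule, not the CLI's own argument parser; because the text only says --pf and --vf may be combined, it treats them as one selector group and does not forbid --vf on its own.

```python
from typing import Optional


def health_command(all_devices: bool = False, pf: Optional[int] = None,
                   vf: Optional[int] = None, vuid: Optional[str] = None) -> list[str]:
    """Build a `virtnet health ... show` argument list after checking the selectors.

    Exactly one selector group must be used: --all, --pf/--vf, or --vuid.
    """
    groups_used = sum([all_devices, pf is not None or vf is not None, vuid is not None])
    if groups_used != 1:
        raise ValueError("use exactly one of --all, --pf/--vf, or --vuid")

    args = ["virtnet", "health"]
    if all_devices:
        args.append("-a")
    if pf is not None:
        args += ["-p", str(pf)]
    if vf is not None:
        args += ["-v", str(vf)]
    if vuid is not None:
        args += ["-u", vuid]
    args.append("show")  # the required sub-command
    return args


print(health_command(pf=0))  # ['virtnet', 'health', '-p', '0', 'show']
```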

Output

| Entry | Type | Description |
|---|---|---|
| pf_id | Number | Physical function ID |
| type | String | Function type: static PF, hotplug PF, or VF |
| vuid | String | Unique device SN; it can be used as an index to query/modify/unplug a device |
| dev_status | String | Device status field bit masks according to the virtio spec |
| health_status | String | Overall device health status (e.g., Good) |
| health_recover_counter | Number | The number of recoveries that have been performed |
| dev_health_details | Dictionary | Two types of health information are included: control_plane_errors and data_plane_errors. Detailed descriptions of each error can be found in Health Statistics. |
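The dev_status value is a bit mask of the virtio device status field. A minimal Python decoder follows. Bits 0 to 3 use the names printed in the example that follows; the labels for bits 6 and 7 come from the virtio specification and are an assumption about how virtnet would name them. Using DRIVER_OK as a proxy for "driver loaded" is likewise an assumption.

```python
# Bit positions of the virtio device status field (virtio specification).
# Names for bits 0-3 match the virtnet example output; names for bits 6 and 7
# are the spec's names and are assumed, not taken from virtnet.
VIRTIO_STATUS_BITS = {
    0: "ACK",                 # ACKNOWLEDGE, value 1
    1: "DRIVER",              # value 2
    2: "DRIVER_OK",           # value 4
    3: "FEATURES_OK",         # value 8
    6: "DEVICE_NEEDS_RESET",  # value 64
    7: "FAILED",              # value 128
}


def decode_dev_status(value: str) -> dict[int, str]:
    """Map each set bit of a hex dev_status value (e.g. "0xf") to its name."""
    status = int(value, 16)
    return {bit: name for bit, name in VIRTIO_STATUS_BITS.items()
            if status & (1 << bit)}


def driver_ready(value: str) -> bool:
    """True when DRIVER_OK is set and FAILED is not."""
    status = int(value, 16)
    return bool(status & (1 << 2)) and not status & (1 << 7)


print(decode_dev_status("0xf"))  # {0: 'ACK', 1: 'DRIVER', 2: 'DRIVER_OK', 3: 'FEATURES_OK'}
```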

Example

The following example shows the health information of the first PF:

# virtnet health -p 0 show

{'pf': '0x0', 'all': '0x0', 'subcmd': '0x0'}
{
  "pf_id": 0,
  "type": "static PF",
  "vuid": "MT2306XZ00BPVNETS0D0F1",
  "dev_status": {
    "value": "0xf",
    " 0": "ACK",
    " 1": "DRIVER",
    " 2": "DRIVER_OK",
    " 3": "FEATURES_OK"
  },
  "health_status": "Good",
  "health_recover_counter": 0,
  "dev_health_details": {
    "control_plane_errors": {
      "sf_rqt_update_err": 0,
      "sf_drop_create_err": 0,
      "sf_tir_create_err": 0,
      "steer_rx_domain_err": 0,
      "steer_rx_table_err": 0,
      "sf_flows_apply_err": 0,
      "aarfs_flow_init_err": 0,
      "vlan_flow_init_err": 0,
      "drop_cnt_config_err": 0
    },
    "data_plane_errors": {
      "sq_stall": 0,
      "dma_q_stall": 0,
      "spurious_db_invoke": 0,
      "aux_not_invoked": 0,
      "dma_q_errors": 0,
      "host_read_errors": 0
    }
  }
}
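When monitoring many devices, a script can flag any device with non-zero error counters. This Python sketch assumes the health JSON object has already been parsed into a dict (after separating it from the argument echo line). Treating any health_status other than "Good", or any non-zero recovery count, as needing attention is an assumption, since the guide only shows the value Good.

```python
def nonzero_errors(health: dict) -> dict[str, int]:
    """Return every non-zero counter under dev_health_details, keyed "group.counter"."""
    found: dict[str, int] = {}
    for group, counters in health.get("dev_health_details", {}).items():
        for name, count in counters.items():
            if count:
                found[f"{group}.{name}"] = count
    return found


def needs_attention(health: dict) -> bool:
    """Flag a device that is not "Good", has been recovered, or has any error count."""
    return (health.get("health_status") != "Good"
            or health.get("health_recover_counter", 0) > 0
            or bool(nonzero_errors(health)))
```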

Error Code

CLI commands return a non-zero error code upon failure. All error numbers are negative. Error numbers may also be reported in the log when an error occurs.

If the error number is greater than -1000, it is a standard Linux error code. Refer to errno for its meaning.

If the error number is less than or equal to -1000, refer to the table below for an explanation.
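The classification rule above can be sketched in Python. The sketch assumes you already have the integer error number (for example, from the log); `describe_error` is a hypothetical helper name. Standard codes are decoded with the Python `errno` and `os` modules, while virtnet-specific codes are only flagged, since they are described in the table below.

```python
import errno
import os

# Codes greater than -1000 are negated Linux errno values;
# codes less than or equal to -1000 are virtnet-specific.
VIRTNET_ERRNO_BASE = -1000


def describe_error(code: int) -> str:
    """Return a human-readable description of a virtnet error number."""
    if code == 0:
        return "success"
    if code > 0:
        raise ValueError("virtnet error numbers are negative")
    if code > VIRTNET_ERRNO_BASE:
        name = errno.errorcode.get(-code, "UNKNOWN")
        return f"{name}: {os.strerror(-code)}"
    return f"virtnet-specific error {code} (see the error code table)"


print(describe_error(-errno.ENOENT))  # on Linux: ENOENT: No such file or directory
```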

| Errno | Error Name | Error Description |
|---|---|---|
|  |  | Failed to validate device feature |
|  |  | Failed to find device |
|  |  | Failed - Device is not hotplugged |
|  |  | Failed - Device did not start |
|  |  | Failed - Virtio driver should not be loaded |
|  |  | Failed to add epoll |
|  |  | Failed - ID input exceeds the max range |
|  |  | Failed - VUID is invalid |
|  |  | Failed - MAC is invalid |
|  |  | Failed - MSIX is invalid |
|  |  | Failed - MTU is invalid |
|  |  | Failed to find port context |
|  |  | Failed to load config from recovery file |
|  |  | Failed to save config into recovery file |
|  |  | Failed to create recovery file |
|  |  | Failed to delete MAC in recovery file |
|  |  | Failed to load MAC from recovery file |
|  |  | Failed to save MAC into recovery file |
|  |  | Failed to save MQ into recovery file |
|  |  | Failed to load PF number from recovery file |
|  |  | Failed to save RX mode into recovery file |
|  |  | Failed to save PF and SF number into recovery file |
|  |  | Failed to load SF number from recovery file |
|  |  | Failed to apply MAC flow by SF |