NVIDIA DOCA Flow Inspector Service Guide

This guide provides instructions on how to use the DOCA Flow Inspector service container on top of NVIDIA® BlueField® DPU.

DOCA Flow Inspector service enables real-time data monitoring and extraction of telemetry components. These components can be leveraged by various services, including those focused on security, big data, and other purposes.

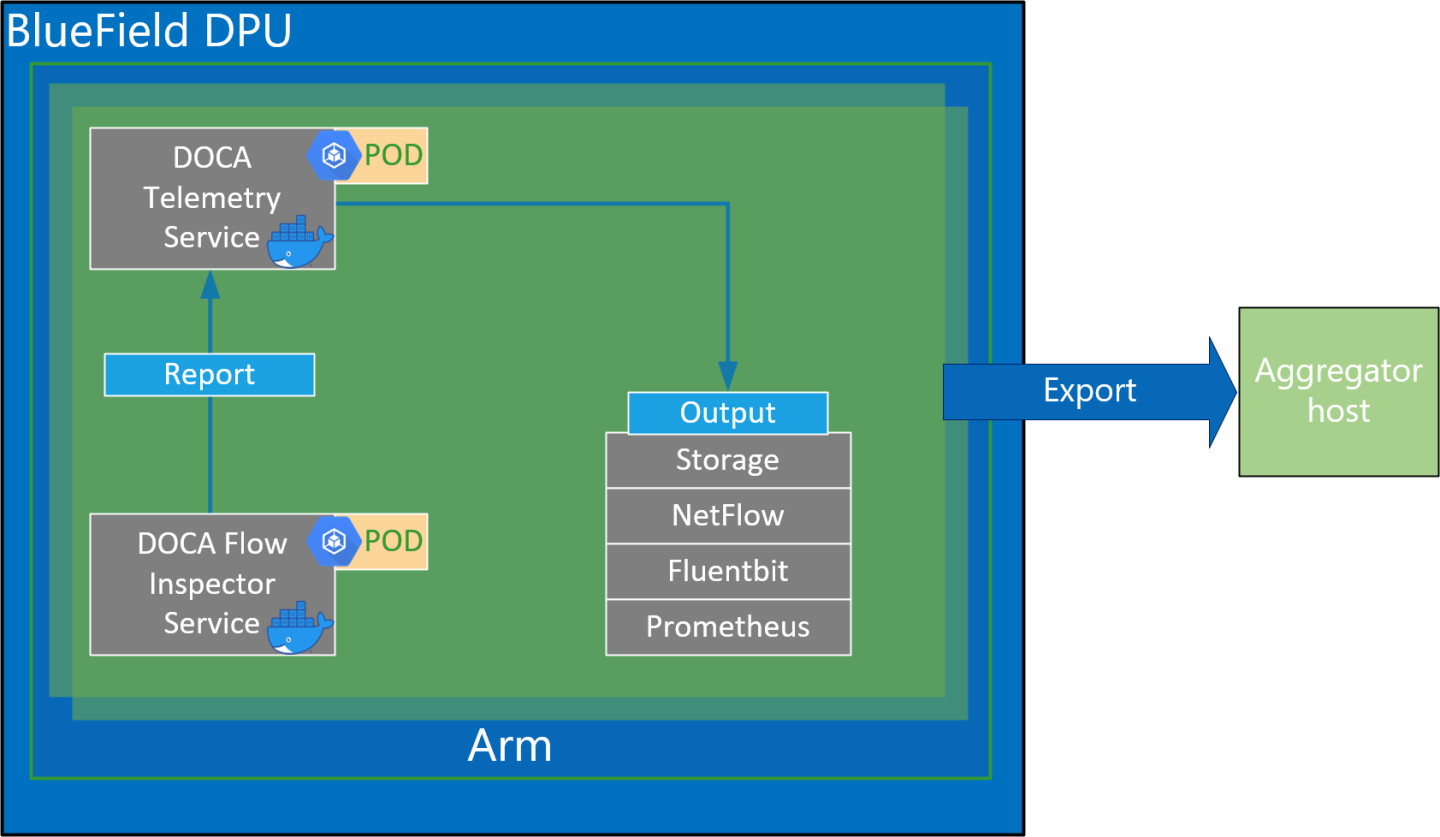

DOCA Flow Inspector service is linked to DOCA Telemetry Service (DTS). It receives mirrored packets from the user, parses the data, and forwards it to the DTS, which aggregates predefined statistics from various providers and sources. The service uses the DOCA Telemetry API to communicate with the DTS, while the DPDK infrastructure facilitates packet acquisition at the user-space layer.

DOCA Flow Inspector operates within its dedicated Kubernetes pod on BlueField, aimed at receiving mirrored packets for analysis. The received packets are parsed and transmitted, in a predefined structure, to a telemetry collector that manages the remaining telemetry aspects.

Service Flow

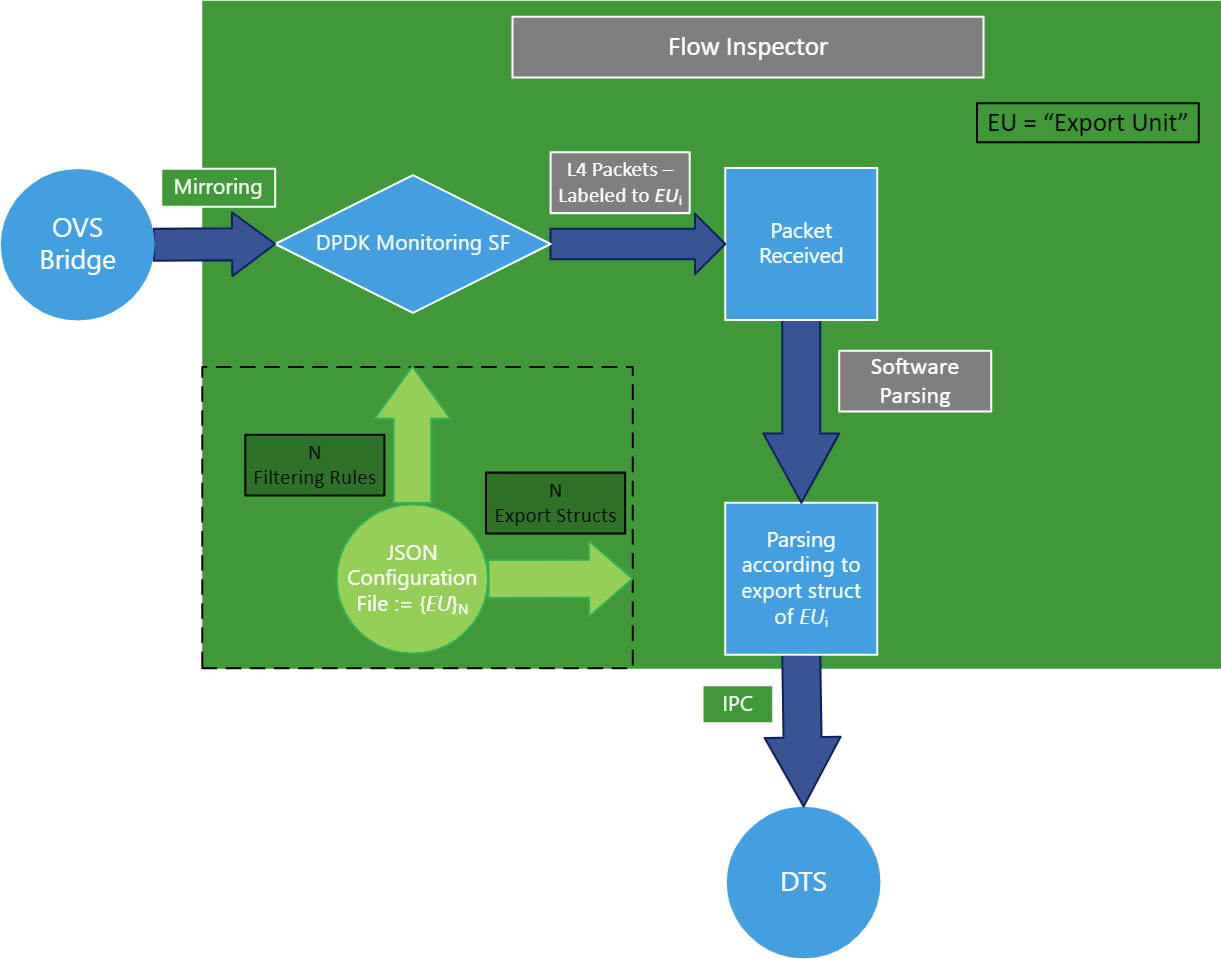

The DOCA Flow Inspector receives a configuration file in JSON format that specifies which of the mirrored packets should be filtered and which information should be sent to DTS for inspection.

The configuration file can include several export units under the "export-units" attribute. Each one comprises a "filter" and an "export". Each packet that matches a filter (based on the protocol and ports in the L4 header) is parsed into the corresponding struct defined in the export. Only that information is sent for inspection. A packet that does not match any filter is dropped.
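The matching rule described above can be modeled in software as a minimal sketch. In the service itself the filters are applied as hardware rules, so this is only an illustration of the semantics. The function names are hypothetical, and two behaviors are assumptions: returning the first matching export unit when several match, and comparing protocol names case-insensitively.

```python
from typing import Optional


def port_matches(spec: str, port: int) -> bool:
    """Return True if `port` satisfies a port spec: "*", "80", or "433-460"."""
    if spec == "*":
        return True
    if "-" in spec:
        start, end = (int(part) for part in spec.split("-", 1))
        return start <= port <= end  # range borders are inclusive
    return port == int(spec)


def match_export_unit(export_units: list, protocol: str,
                      src_port: int, dst_port: int) -> Optional[int]:
    """Return the index of the first export unit whose filter matches, else None.

    Assumption: the first matching unit wins; the guide does not state
    what happens when several filters match the same packet.
    """
    for index, unit in enumerate(export_units):
        flt = unit["filter"]
        protocols = {name.lower() for name in flt["protocols"]}
        if protocol.lower() not in protocols:
            continue
        for src_spec, dst_spec in flt["ports"]:
            if port_matches(src_spec, src_port) and port_matches(dst_spec, dst_port):
                return index
    return None  # no filter matched: the packet would be dropped


# Two illustrative export units in the documented structure.
units = [
    {"filter": {"protocols": ["tcp", "udp"],
                "ports": [["*", "433-460"], ["68", "67"]]},
     "export": {"fields": ["timestamp", "src_ip"]}},
    {"filter": {"protocols": ["tcp"], "ports": [["80", "80"]]},
     "export": {"fields": ["timestamp", "dst_ip"]}},
]
```

With these units, a TCP packet from port 12345 to port 443 maps to export unit 0, while a UDP packet from port 80 to port 80 matches nothing and would be dropped.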

In addition, the configuration file can contain optional Flow Inspector (FI) configuration flags. See the JSON format and example in the Configuration section.

The service watches the JSON configuration file for changes at runtime and reconfigures itself whenever a change is detected.
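One way to picture this periodic check is a polling loop. The following is a minimal sketch, not the service's implementation: detecting changes through the file's modification time is an assumption, and `config_changed` and `watch_config` are hypothetical names. The `sample_rate` argument plays the role of the "config-sample-rate" option.

```python
import os
import time
from typing import Callable, Optional, Tuple


def config_changed(path: str, last_mtime: Optional[float]) -> Tuple[bool, float]:
    """Compare the file's modification time with the previously seen one.

    Returns (changed, current_mtime). The first call (last_mtime=None)
    never reports a change; it only records the baseline.
    """
    current = os.stat(path).st_mtime
    return (last_mtime is not None and current != last_mtime), current


def watch_config(path: str, on_change: Callable[[str], None],
                 sample_rate: float = 60.0,
                 max_checks: Optional[int] = None) -> None:
    """Poll `path` every `sample_rate` seconds and call `on_change` on changes.

    `max_checks` bounds the loop so the sketch can be exercised in tests;
    None means poll forever.
    """
    _, last = config_changed(path, None)
    checks = 0
    while max_checks is None or checks < max_checks:
        time.sleep(sample_rate)
        changed, last = config_changed(path, last)
        if changed:
            on_change(path)  # e.g. rerun the configuration phase
        checks += 1
```

A content hash could be compared instead of the modification time if edits that leave the timestamp untouched must also be detected.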

The DOCA Flow Inspector runs on top of DPDK to acquire L4 packets. The packets are filtered and HW-marked with their export unit index. They are then parsed according to their export unit and export struct, and forwarded to the telemetry collector using IPC.

Configuration phase:

A JSON file is used as input to configure the export units (i.e., filters and corresponding export structs).

The filters are translated to HW rules on the SF (scalable function port) using the DOCA Flow library.

The connection to the telemetry collector is initialized and all export structures are registered to DTS.

Inspection phase:

Traffic is mirrored to the relevant SF.

Ingress traffic is received through the configured SF.

Non-L4 traffic and packets that do not match any filter are dropped using hardware rules.

Packets matching a filter are marked with the export unit index they match and are passed to the software layer in the Arm cores.

Packets are parsed to the desired struct by the index of export unit.

The telemetry information is forwarded to the telemetry agent using IPC.

Mirrored packets are freed.

If the JSON file is changed, the configuration phase is run again with the updated file.

Before deploying the flow inspector container, ensure that the following prerequisites are satisfied:

Create the needed files and directories. Folders should be created automatically. Make sure the .json file resides inside the folder:

$ touch /opt/mellanox/doca/services/flow_inspector/bin/flow_inspector_cfg.json

Validate that DTS's configuration folders exist. They should be created automatically when DTS is deployed.

$ sudo mkdir -p /opt/mellanox/doca/services/telemetry/config

$ sudo mkdir -p /opt/mellanox/doca/services/telemetry/ipc_sockets

$ sudo mkdir -p /opt/mellanox/doca/services/telemetry/data

Allocate huge pages as needed by DPDK. This requires root privileges.

$ sudo echo 2048 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

Because the redirection is performed by the calling shell rather than by sudo, this form works only from a root shell.

Or alternatively:

$ sudo echo '2048' | sudo tee -a /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

$ sudo mkdir /mnt/huge

$ sudo mount -t hugetlbfs nodev /mnt/huge

Deploy a scalable function according to NVIDIA BlueField DPU Scalable Function User Guide and mirror packets accordingly using the Open vSwitch command.

For example:

Mirror packets from p0 to sf4:

$ ovs-vsctl add-br ovsbr1

$ ovs-vsctl add-port ovsbr1 p0

$ ovs-vsctl add-port ovsbr1 en3f0pf0sf4

$ ovs-vsctl -- --id=@p1 get port en3f0pf0sf4 \
    -- --id=@p2 get port p0 \
    -- --id=@m create mirror name=m0 select-dst-port=@p2 select-src-port=@p2 output-port=@p1 \
    -- set bridge ovsbr1 mirrors=@m

Mirror packets from pf0hpf or p0 that pass through sf4:

$ ovs-vsctl add-br ovsbr1

$ ovs-vsctl add-port ovsbr1 pf0hpf

$ ovs-vsctl add-port ovsbr1 p0

$ ovs-vsctl add-port ovsbr1 en3f0pf0sf4

$ ovs-vsctl -- --id=@p1 get port en3f0pf0sf4 \
    -- --id=@p2 get port pf0hpf \
    -- --id=@m create mirror name=m0 select-dst-port=@p2 select-src-port=@p2 output-port=@p1 \
    -- set bridge ovsbr1 mirrors=@m

$ ovs-vsctl -- --id=@p1 get port en3f0pf0sf4 \
    -- --id=@p2 get port p0 \
    -- --id=@m create mirror name=m0 select-dst-port=@p2 select-src-port=@p2 output-port=@p1 \
    -- set bridge ovsbr1 mirrors=@m

The last command (creating the mirror) should print a sequence of letters and numbers similar to the following:

0d248ca8-66af-427c-b600-af1e286056e1

Warning: The designated SF must be created as a trusted function. Additional details can be found in the Scalable Function Setup Guide.

For information about the deployment of DOCA containers on top of the BlueField DPU, refer to NVIDIA DOCA Container Deployment Guide.

DTS is available on NGC, NVIDIA's container catalog. Service-specific configuration steps and deployment instructions can be found under the service's container page.

The order of running DTS and DOCA Flow Inspector is important. You must launch DTS, wait a few seconds, and then launch DOCA Flow Inspector.

JSON Input

The DOCA Flow Inspector configuration file should be placed under /opt/mellanox/doca/services/flow_inspector/bin/<json_file_name>.json and be built in the following format:

{
    /* Optional param, time period to check for changes in JSON config file (in seconds) and flush telemetry buffer if enabled (default is 60 seconds) */
    "config-sample-rate": <time>,
    /* Optional param, telemetry buffer size in bytes (default is 60KB) */
    "telemetry-buffer-size": <size>,
    /* Optional param, enable periodic telemetry buffer flush and defining the period time (in seconds) */
    "telemetry-flush-rate": <numeric value in seconds>,
    /* Mandatory param, Flow Inspector export units */
    "export-units":
    [
        /* Export Unit 0 */
        {
            "filter":
            {
                "protocols": [<L4 protocols separated by comma>], # What L4 protocols are allowed
                "ports":
                [
                    [<source port>, <destination port>],
                    [<source ports range>, <destination ports range>],
                    <... more pairs of source, dest ports>
                ]
            },
            "export":
            {
                "fields": [<fields to be part of export struct, separated by comma>] # the Telemetry event will contain these fields.
            }
        },
        <... More Export Units>
    ]
}

Export Unit Attributes

Allowed protocols:

"TCP"

"UDP"

Port range:

A range of ports can be specified for both source and destination

A range includes its borders and is written as start_port-end_port (for example, "433-460")

Allowed ports:

All ports in range 0-65535 as a string

Or * to indicate any ports

Allowed fields in export struct:

timestamp – timestamp indicating when it was received by the service

host_ip – the IP of the host running the service

src_mac – source MAC address

dst_mac – destination MAC address

src_ip – source IP

dst_ip – destination IP

protocol – L4 protocol

src_port – source port

dst_port – destination port

flags – additional flags (relevant to TCP only)

data_len – data payload length

data_short – short version of data (payload sliced to first 64 bytes)

data_medium – medium version of data (payload sliced to first 1500 bytes)

data_long – long version of data (payload sliced to first 9*1024 bytes)
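To illustrate how a list of export fields shapes the telemetry event, the following minimal sketch builds a record from an already-parsed packet. The slice sizes come from the field descriptions above. Everything else is an assumption made for illustration: `build_export_record` is a hypothetical helper, the packet is modeled as a plain dict with a raw `payload` entry, and `data_len` is taken to be the full payload length before slicing.

```python
# Payload slice limits, in bytes, taken from the field descriptions above.
PAYLOAD_LIMITS = {
    "data_short": 64,
    "data_medium": 1500,
    "data_long": 9 * 1024,
}


def build_export_record(packet: dict, fields: list) -> dict:
    """Keep only the requested fields, slicing the payload for data_* fields.

    `packet` is a hypothetical dict of parsed header values (keyed by the
    field names above) plus a raw `payload` of type bytes.
    """
    record = {}
    for name in fields:
        if name in PAYLOAD_LIMITS:
            # Keep only the first N bytes of the payload.
            record[name] = packet["payload"][:PAYLOAD_LIMITS[name]]
        elif name == "data_len":
            # Assumption: the length refers to the unsliced payload.
            record[name] = len(packet["payload"])
        else:
            record[name] = packet[name]
    return record
```

For example, requesting `["timestamp", "dst_ip", "data_len", "data_medium"]` for a packet carrying a 2000-byte payload yields a record whose `data_len` is 2000 and whose `data_medium` holds the first 1500 bytes.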

JSON example:

{
    /* Optional param, time period to check for changes in JSON config file (in seconds) and flush telemetry buffer if enabled (default is 60 seconds) */
    "config-sample-rate": 30,
    /* Optional param, telemetry maximum buffer size in bytes */
    "telemetry-buffer-size": 70000,
    /* Optional param, enable periodic telemetry buffer flush and defining the period time (in seconds) */
    "telemetry-flush-rate": 1.5,
    /* Mandatory param, Flow Inspector export units */
    "export-units":
    [
        /* Export Unit 0 */
        {
            "filter":
            {
                "protocols": ["tcp", "udp"],
                "ports":
                [
                    ["*","433-460"],
                    ["20480","28341"],
                    ["28341","20480"],
                    ["68", "67"],
                    ["67", "68"]
                ]
            },
            "export":
            {
                "fields": ["timestamp", "host_ip", "src_mac", "dst_mac", "src_ip", "dst_ip", "protocol", "src_port",
                           "dst_port", "flags", "data_len", "data_long"]
            }
        },
        /* Export Unit 1 */
        {
            "filter":
            {
                "protocols": ["tcp"],
                "ports":
                [
                    ["5-10","422"],
                    ["80","80"]
                ]
            },
            "export":
            {
                "fields": ["timestamp","dst_ip", "host_ip", "data_len", "flags", "data_medium"]
            }
        }
    ]
}

If a packet header contains L4 ports or an L4 protocol that are not specified in any filter, the packet is filtered out.
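A configuration file can be sanity-checked offline before it is handed to the service. The sketch below is an independent check written against the format described in this section, not the service's own parser. The helper names are hypothetical. Stripping `/* ... */` comments before parsing is an assumption made so that Python's standard `json` module can read files written like the example above, and it would also remove such sequences inside string values. Treating protocol names case-insensitively is likewise an assumption.

```python
import json
import re

ALLOWED_PROTOCOLS = {"tcp", "udp"}
ALLOWED_FIELDS = {
    "timestamp", "host_ip", "src_mac", "dst_mac", "src_ip", "dst_ip",
    "protocol", "src_port", "dst_port", "flags", "data_len",
    "data_short", "data_medium", "data_long",
}


def strip_block_comments(text: str) -> str:
    """Remove /* ... */ comments so the text can be parsed by json.loads."""
    return re.sub(r"/\*.*?\*/", "", text, flags=re.DOTALL)


def check_port_spec(spec: str) -> bool:
    """Accept "*", a single port, or an inclusive "start-end" range."""
    if spec == "*":
        return True
    parts = spec.split("-")
    if len(parts) not in (1, 2) or not all(p.isdigit() for p in parts):
        return False
    numbers = [int(p) for p in parts]
    return all(0 <= n <= 65535 for n in numbers) and numbers[0] <= numbers[-1]


def validate_config(config: dict) -> list:
    """Return a list of human-readable problems; an empty list means OK."""
    units = config.get("export-units")
    if not isinstance(units, list) or not units:
        return ['"export-units" is mandatory and must be a non-empty list']
    errors = []
    for i, unit in enumerate(units):
        flt = unit.get("filter", {})
        for proto in flt.get("protocols", []):
            if proto.lower() not in ALLOWED_PROTOCOLS:
                errors.append(f"unit {i}: unsupported protocol {proto!r}")
        for pair in flt.get("ports", []):
            ok = len(pair) == 2 and all(
                isinstance(s, str) and check_port_spec(s) for s in pair)
            if not ok:
                errors.append(f"unit {i}: invalid port pair {pair!r}")
        for field in unit.get("export", {}).get("fields", []):
            if field not in ALLOWED_FIELDS:
                errors.append(f"unit {i}: unknown export field {field!r}")
    return errors
```

A typical use is `validate_config(json.loads(strip_block_comments(text)))`, where `text` is the content of the configuration file.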

Yaml File

The .yaml file downloaded from NGC can be easily edited according to your needs.

env:
  # Set according to the local setup
  - name: SF_NUM_1
    value: "2"
  # Additional EAL flags, if needed
  - name: EAL_FLAGS
    value: ""
  # Service-Specific command line arguments
  - name: SERVICE_ARGS
    value: "--policy /flow_inspector/flow_inspector_cfg.json -l 60"

The SF_NUM_1 value can be changed according to the SF used in the OVS configuration and can be found using the command in NVIDIA BlueField DPU Scalable Function User Guide.

The EAL_FLAGS value must be changed according to the DPDK flags required when running the container.

The SERVICE_ARGS are the runtime arguments received by the service:

-l, --log-level <value> – sets the (numeric) log level for the program <10=DISABLE, 20=CRITICAL, 30=ERROR, 40=WARNING, 50=INFO, 60=DEBUG, 70=TRACE>

-p, --policy <json_path> – sets the JSON path inside the container
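Since the log level is passed as a number, a small lookup can make the SERVICE_ARGS value easier to compose. This is only a convenience sketch: `build_service_args` is a hypothetical helper, while the flags and numeric levels are the ones listed above.

```python
# Numeric log levels as listed for the -l/--log-level argument.
LOG_LEVELS = {
    "DISABLE": 10, "CRITICAL": 20, "ERROR": 30, "WARNING": 40,
    "INFO": 50, "DEBUG": 60, "TRACE": 70,
}


def build_service_args(policy_path: str, level: str = "INFO") -> str:
    """Compose the SERVICE_ARGS string from a JSON path and a level name."""
    return f"--policy {policy_path} -l {LOG_LEVELS[level.upper()]}"
```

Calling `build_service_args("/flow_inspector/flow_inspector_cfg.json", "debug")` produces the same value used in the YAML snippet above.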

Verifying Output

Enabling DTS to write data locally makes it possible to verify that the DOCA Flow Inspector output is valid.

To allow DTS to write locally, uncomment the following line in /opt/mellanox/doca/services/telemetry/config/dts_config.ini:

#output=/data

Any changes in dts_config.ini necessitate restarting the pod for the new settings to apply.

The schema folder contains JSON-formatted metadata files which allow reading the binary files containing the actual data. The binary files are written according to the naming convention shown in the following example:

Requires installing the tree runtime utility (apt install tree).

$ tree /opt/mellanox/doca/services/telemetry/data/

/opt/mellanox/doca/services/telemetry/data/
├── {year}
│   └── {mmdd}
│       └── {hash}
│           ├── {source_id}
│           │   └── {source_tag}{timestamp}.bin
│           └── {another_source_id}
│               └── {another_source_tag}{timestamp}.bin
└── schema
    └── schema_{MD5_digest}.json

New binary files appear when:

The service starts

The binary file's maximum age or size restriction is reached

The JSON file is changed and new telemetry schemas are created

An hour passes

If no schema or no data folders are present, refer to the Troubleshooting section in NVIDIA DOCA Telemetry Service Guide.

source_id is usually set to the machine hostname. source_tag is a line describing the collected counters, and it is often set as the provider's name or name of user-counters.

Reading the binary data can be done from within the DTS container using the following command:

crictl exec -it <Container-ID> /opt/mellanox/collectx/bin/clx_read -s /data/schema /data/path/to/datafile.bin
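Locating the data file to pass to clx_read can be scripted. The following is a minimal sketch with hypothetical helper names. It assumes that the host folder /opt/mellanox/doca/services/telemetry/data is what the DTS container sees as /data, which is suggested by the paths used in this section but should be confirmed against the DTS deployment.

```python
from pathlib import Path
from typing import Optional

HOST_DATA_DIR = "/opt/mellanox/doca/services/telemetry/data"


def newest_bin_file(data_dir: str = HOST_DATA_DIR) -> Optional[Path]:
    """Return the most recently modified .bin file under data_dir, if any."""
    bins = [p for p in Path(data_dir).rglob("*.bin") if p.is_file()]
    return max(bins, key=lambda p: p.stat().st_mtime, default=None)


def container_path(host_file: Path, data_dir: str = HOST_DATA_DIR,
                   mount: str = "/data") -> str:
    """Translate a host path under data_dir to the path inside the container.

    Assumption: data_dir on the host is mounted at `mount` in the container.
    """
    return f"{mount}/{host_file.relative_to(data_dir).as_posix()}"
```

The string returned by `container_path` can be used in place of /data/path/to/datafile.bin in the command above.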

The data written locally should be shown in a format similar to the following once a matching packet has arrived. The fields that appear are those listed in the "fields" attribute of the export unit that the packet matched:

{
    "timestamp": 1656427771076130,
    "host_ip": "10.237.69.238",
    "src_ip": "11.7.62.4",
    "dst_ip": "11.7.62.5",
    "data_len": 1152,
    "data_short": "Hello World"
}

When troubleshooting container deployment issues, it is highly recommended to follow the deployment steps and tips in the "Review Container Deployment" section of the NVIDIA DOCA Container Deployment Guide.

Pod is Marked as "Ready" and No Container is Listed

Error

When deploying the container, the pod's STATE is marked as Ready and an image is listed, but no container can be seen running:

$ sudo crictl pods

POD ID CREATED STATE NAME NAMESPACE ATTEMPT RUNTIME

3162b71e67677 4 seconds ago Ready doca-flow-inspector-my-dpu default 0 (default)

$ sudo crictl images

IMAGE TAG IMAGE ID SIZE

k8s.gcr.io/pause 3.2 2a060e2e7101d 487kB

nvcr.io/nvidia/doca/doca_flow_inspector 1.1.0-doca2.0.2 2af1e539eb7ab 86.8MB

$ sudo crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

Solution

In most cases, the container did start, but immediately exited. This could be checked using the following command:

$ sudo crictl ps -a

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

556bb78281e1d 2af1e539eb7ab 6 seconds ago Exited doca-flow-inspector 1 3162b71e67677 doca-flow-inspector-my-dpu

Should the container fail (i.e., state of Exited), it is recommended to examine the Flow Inspector's main log at /var/log/doca/flow_inspector/flow_inspector_fi_dev.log.

In addition, for a short period of time after termination, the container logs could also be viewed using the container's ID:

$ sudo crictl logs 556bb78281e1d

...

2023-10-04 11:42:55 - flow_inspector - FI - ERROR - JSON file was not found <config-file-path>.

Pod is Not Listed

Error

When placing the container's YAML file in the Kubelet's input folder, the service pod is not listed in the list of pods:

$ sudo crictl pods

POD ID CREATED STATE NAME NAMESPACE ATTEMPT RUNTIME

Solution

In most cases, the pod does not start due to the absence of the requested hugepages. This can be verified using the following command:

$ sudo journalctl -u kubelet -e

. . .

Oct 04 12:12:19 <my-dpu> kubelet[2442376]: I1004 12:12:19.905064 2442376 predicate.go:103] "Failed to admit pod, unexpected error while attempting to recover from admission failure" pod="default/doca-flow-inspector-<my-dpu>" err="preemption: error finding a set of pods to preempt: no set of running pods found to reclaim resources: [(res: hugepages-2Mi, q: 104563999874), ]"
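The number of 2 MB huge pages currently allocated can be read back from the same sysfs file used in the prerequisites. The sketch below uses hypothetical helper names, and the `required` count is supplied by the caller, since the amount requested by the pod depends on its YAML file.

```python
from pathlib import Path

# Same sysfs file that is written when allocating huge pages.
NR_HUGEPAGES = "/sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages"


def allocated_hugepages(path: str = NR_HUGEPAGES) -> int:
    """Read the number of allocated 2 MB huge pages."""
    return int(Path(path).read_text().strip())


def hugepages_sufficient(required: int, path: str = NR_HUGEPAGES) -> bool:
    """Return True if at least `required` huge pages are allocated."""
    return allocated_hugepages(path) >= required
```

If the check fails, repeating the huge pages allocation step from the prerequisites is a reasonable first thing to try.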