Sync Event

The DOCA Sync Event API is considered thread-unsafe.

DOCA Sync Event does not currently support GPU related features.

DOCA Sync Event (SE) is a software synchronization mechanism for parallel execution across the CPU, DPU, DPA and remote nodes. The SE holds a 64-bit counter which can be updated, read, and waited upon from any of these units to achieve synchronization between executions on them.

To achieve the best performance, DOCA SE defines a subscriber and publisher locality, where:

Publisher – the entity which updates (sets or increments) the event value

Subscriber – the entity which gets and waits upon the SE

Note: Both the publisher and the subscriber can read (get) the counter's actual value.

Based on hints, DOCA selects the memory locality of the SE counter so that it is closer to the subscriber side. Each DOCA SE is configured with a single publisher location and a single subscriber location, which can be the CPU or DPU.

The SE control path happens on the CPU (either host CPU or DPU CPU) through the DOCA SE CPU handle. It is possible to retrieve different execution-unit-specific handles (DPU/DPA/GPU/remote handles) by exporting the SE instance through the CPU handle. Each SE handle refers to the DOCA SE instance from which it is retrieved. By using the execution-unit-specific handle, the associated SE instance can be operated from that execution unit.

In a basic scenario, synchronization is achieved by updating the SE from one execution unit and waiting upon the SE from another execution unit.
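As a rough illustration of this update-and-wait pattern, the following self-contained C sketch models an SE as a single shared 64-bit counter. It does not call the DOCA API: every `model_*` name is hypothetical, and the `max_polls` bound is an assumption added so the model cannot spin forever.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for a sync event: one shared 64-bit counter. */
struct model_sync_event {
    _Atomic uint64_t value;
};

/* Reset the counter to zero before first use. */
void model_se_init(struct model_sync_event *se)
{
    atomic_init(&se->value, 0);
}

/* Publisher side: overwrite the counter with a new value. */
void model_se_set(struct model_sync_event *se, uint64_t v)
{
    atomic_store(&se->value, v);
}

/* Either side: read the current counter value. */
uint64_t model_se_get(struct model_sync_event *se)
{
    return atomic_load(&se->value);
}

/*
 * Subscriber side: poll until the counter exceeds `threshold`.
 * Gives up after `max_polls` reads and returns false in that case.
 */
bool model_se_wait_gt(struct model_sync_event *se, uint64_t threshold,
                      uint64_t max_polls)
{
    for (uint64_t i = 0; i < max_polls; i++) {
        if (model_se_get(se) > threshold)
            return true;
    }
    return false;
}
```

In this model, one thread would call `model_se_set` while another calls `model_se_wait_gt`. With a real SE the two sides may be different execution units, each using its own handle.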

DOCA SE can be used as a context that follows the architecture of a DOCA Core Context. It is recommended to read the DOCA Core page before proceeding.

DOCA SE based applications can run either on the host machine or on the NVIDIA® BlueField® DPU target and can involve DPA, GPU and other remote nodes.

Using DOCA SE with DPU requires BlueField to be configured to work in DPU mode as described in NVIDIA BlueField DPU Modes of Operation.

Asynchronous wait on a DOCA SE requires NVIDIA® BlueField-3® or newer.

DOCA SE can be converted to a DOCA Context as defined by DOCA Core. See DOCA Context for more information.

As a context, DOCA SE leverages DOCA Core architecture to expose asynchronous tasks/events offloaded to hardware.

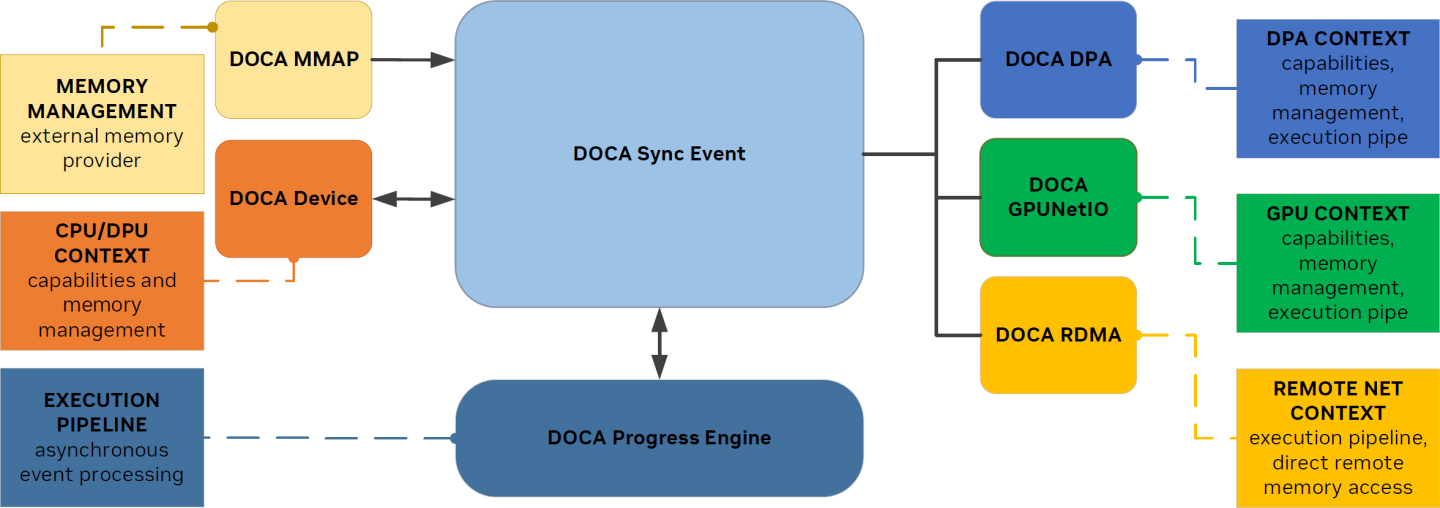

The figure that follows demonstrates components used by DOCA SE. DOCA Device provides information on the capabilities of the configured HW used by SE to control system resources.

DOCA DPA, GPUNetIO, and RDMA modules are required for cross-device synchronization (could be DPA, GPU, or remote peer respectively).

DOCA SE allows flexible memory management by its ability to specify an external buffer, where a DOCA mmap module handles memory registration for advanced synchronization scenarios.

For asynchronous operation scheduling, SE uses the DOCA Progress Engine (PE) module.

DOCA Sync Event Components Diagram

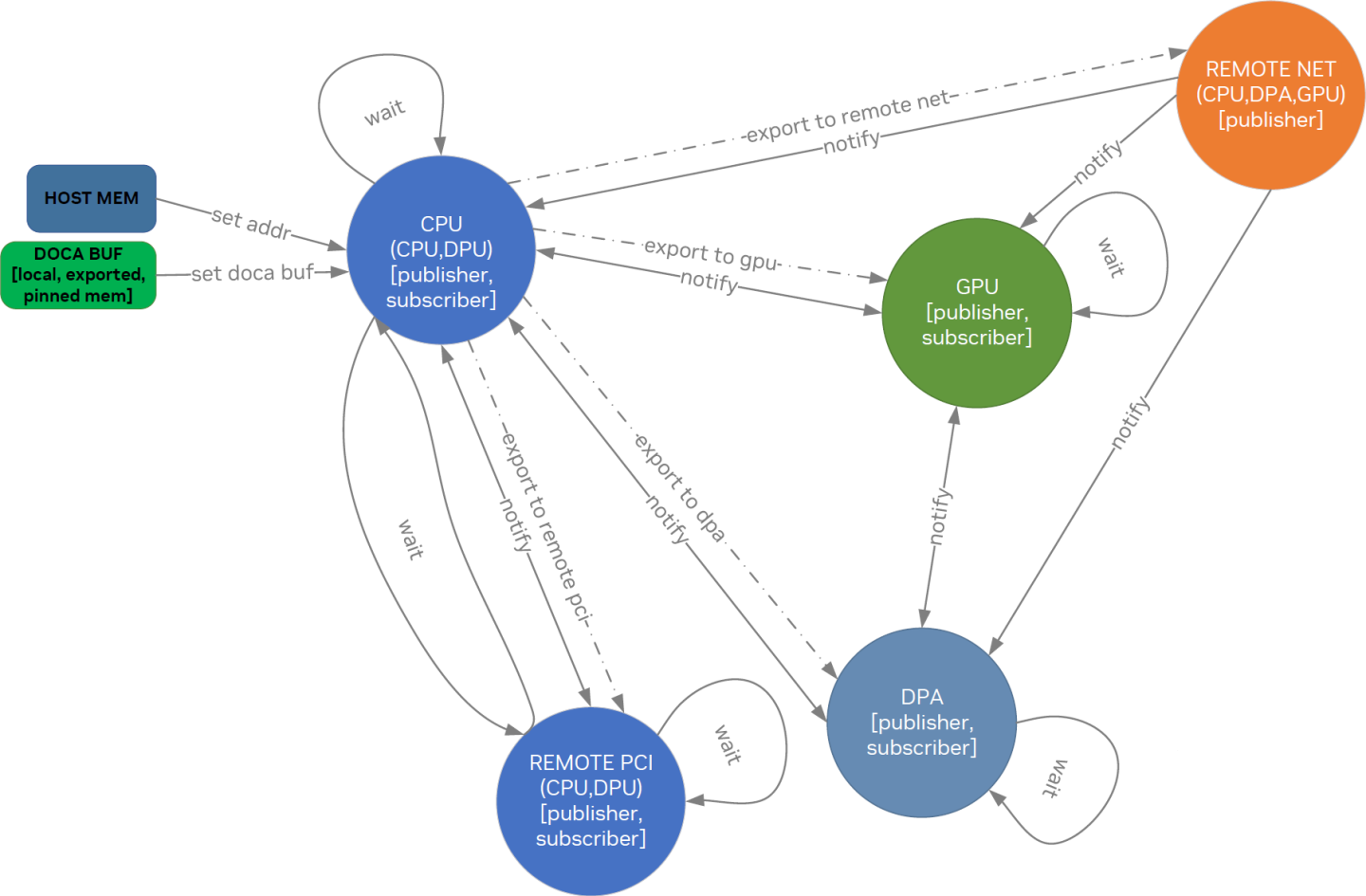

The following diagram represents DOCA SE synchronization abilities on various devices.

DOCA Sync Event Interaction Diagram

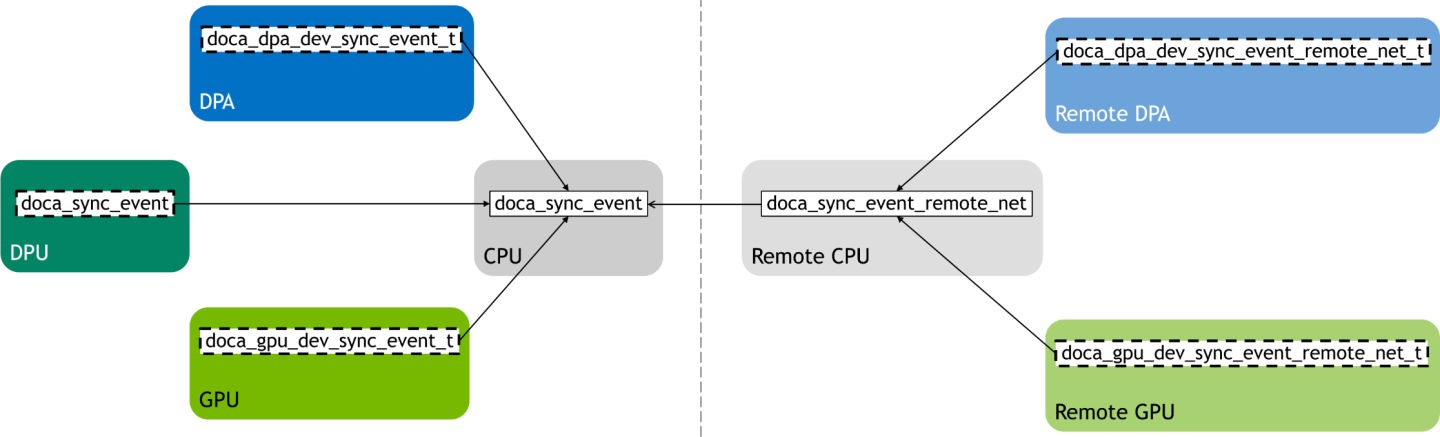

DOCA Sync Event Objects

DOCA SE exposes different types of handles per execution unit as detailed in the following table.

| Execution Unit | Type | Description |
| --- | --- | --- |
| CPU (host/DPU) | struct doca_sync_event | Handle for interacting with the SE from the CPU |
| DPU | struct doca_sync_event | Handle for interacting with the SE from the DPU |
| DPA | doca_dpa_dev_sync_event_t | Handle for interacting with the SE from the DPA |
| GPU | doca_gpu_dev_sync_event_t | Handle for interacting with the SE from the GPU |
| Remote net CPU | doca_sync_event_remote_net | Handle for interacting with the SE from a remote CPU |
| Remote net DPA | doca_dpa_dev_sync_event_remote_net_t | Handle for interacting with the SE from a remote DPA |
| Remote net GPU | doca_gpu_dev_sync_event_remote_net_t | Handle for interacting with the SE from a remote GPU |

Each one of these handle types has its own dedicated API for creating the handle and interacting with it.

Any DOCA SE creation starts with creating a CPU handle by calling the doca_sync_event_create API.

After creation, the SE entity can be shared with local PCIe, remote CPU, DPA, and GPU by creating a dedicated handle through the DOCA SE export flow, described in section "Export DOCA Sync Event to Another Execution Unit".

Operation Modes

DOCA SE exposes two different APIs for starting it depending on the desired operation mode, synchronous or asynchronous.

Once started, SE operation mode cannot be changed.

Synchronous Mode

Start the SE to operate in synchronous mode by calling doca_sync_event_start.

In synchronous operation mode, each data path operation (get, update, wait) blocks the calling thread from continuing until the operation is done.

An operation is considered done if the requested change fails and the exact error can be reported or if the requested change has taken effect.

Asynchronous Mode

To start the SE to operate in asynchronous mode, convert the SE instance to doca_ctx by calling doca_sync_event_as_ctx. Then use DOCA CTX API to start the SE and DOCA PE API to submit tasks on the SE (see section "DOCA Progress Engine" for more).

Configurations

Mandatory Configurations

These configurations must be set by the application before attempting to start the SE:

DOCA SE CPU handle must be configured by providing the runtime hints on the publisher and subscriber locations. Both the subscriber and publisher locations must be configured using the following APIs:

doca_sync_event_add_publisher_location_<cpu|dpa|gpu|remote_net>

doca_sync_event_add_subscriber_location_<cpu|dpa|gpu>

For the asynchronous use case, at least one task/event type must be configured. See configuration of tasks.
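The mandatory-configuration rules above can be summarized as a small check. This is a minimal sketch, not DOCA code: the `model_se_config` structure and `model_se_can_start` function are hypothetical and only restate the two rules from this section.

```c
#include <stdbool.h>

/* Hypothetical model of what the application has configured before start. */
struct model_se_config {
    bool publisher_set;      /* a publisher location hint was added */
    bool subscriber_set;     /* a subscriber location hint was added */
    bool async_mode;         /* the SE will be started as a context */
    unsigned num_task_types; /* task/event types configured (async only) */
};

/* Returns true when the mandatory configuration is complete. */
bool model_se_can_start(const struct model_se_config *cfg)
{
    /* Both locations are required in every operation mode. */
    if (!cfg->publisher_set || !cfg->subscriber_set)
        return false;
    /* Asynchronous mode additionally needs at least one task/event type. */
    if (cfg->async_mode && cfg->num_task_types == 0)
        return false;
    return true;
}
```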

Optional Configurations

If these configurations are not set, a default value is used.

Allowed for CPU-DPU SEs only, this configuration provides an 8-byte host buffer to be used as the backing memory of the SE. If set, it is the user's responsibility to manage the memory (i.e., keep the allocation alive for the whole DOCA SE lifecycle and free it after the DOCA SE is destroyed). If not provided, the SE backing memory is allocated by the SE.

Export DOCA Sync Event to Another Execution Unit

To use an SE from an execution unit other than the CPU, it must be exported to get a handle for the specific execution unit:

DPA – doca_sync_event_export_to_dpa returns a DOCA SE DPA handle (doca_dpa_dev_sync_event_t) which can be passed to the DPA SE data path APIs from the DPA kernel

GPU – doca_sync_event_export_to_gpu returns a DOCA SE GPU handle (doca_gpu_dev_sync_event_t) which can be passed to the GPU SE data path APIs from a CUDA kernel

DPU – doca_sync_event_export_to_dpu returns a blob which can be used from the DPU CPU to instantiate a DOCA SE DPU handle (struct doca_sync_event) using the doca_sync_event_create_from_export function

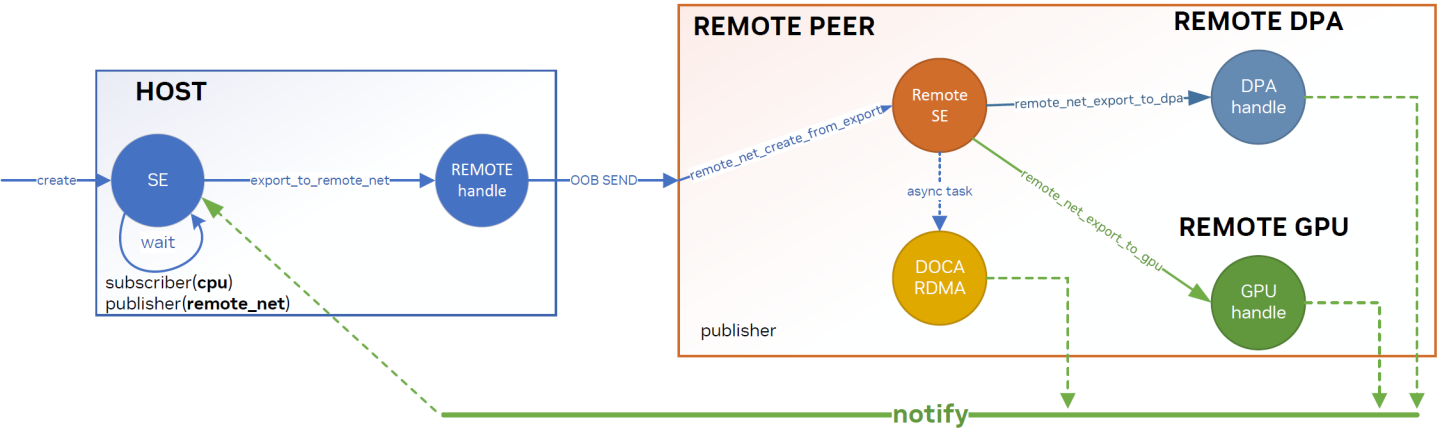

DOCA SE allows notifications from remote peers (remote net) utilizing capabilities of the DOCA RDMA library. The following figure illustrates the remote net export flow:

Remote net CPU – doca_sync_event_export_to_remote_net returns a blob which can be used from the remote net CPU to instantiate a DOCA SE remote net CPU handle (struct doca_sync_event_remote_net) using the doca_sync_event_remote_net_create_from_export function. The handle can be used directly for submitting asynchronous tasks through the doca_rdma library or exported to the remote DPA/GPU.

Remote net DPA – doca_sync_event_remote_net_export_to_dpa returns a DOCA SE remote net DPA handle (doca_dpa_dev_sync_event_remote_net_t) which can be passed to the DPA RDMA data path APIs from a DPA kernel

Remote net GPU – doca_sync_event_remote_net_export_to_gpu returns a DOCA SE remote net GPU handle (doca_gpu_dev_sync_event_remote_net_t) which can be passed to the GPU RDMA data path APIs from a CUDA kernel

The CPU handle (struct doca_sync_event) can be exported only to the location where the SE is configured.

Prior to calling any export function, users must first verify it is supported by calling the corresponding export capability getter: doca_sync_event_cap_is_export_to_dpa_supported, doca_sync_event_cap_is_export_to_gpu_supported, doca_sync_event_cap_is_export_to_dpu_supported, or doca_sync_event_cap_is_export_to_remote_net_supported.

Prior to calling any *_create_from_export function, users must first verify it is supported by calling the corresponding create-from-export capability getter: doca_sync_event_cap_is_create_from_export_supported or doca_sync_event_cap_remote_net_is_create_from_export_supported.

Once created from an export, neither the SE DPU handle (struct doca_sync_event) nor the SE remote net CPU handle (struct doca_sync_event_remote_net) can be configured. Of the two, only the SE DPU handle must be started before it is used.

Device Support

DOCA SE needs a device to operate. For instructions on picking a device, see DOCA Core device discovery.

Both NVIDIA® BlueField®-2 and BlueField®-3 devices are supported, as well as any doca_dev.

Asynchronous wait (blocking/polling) is supported on NVIDIA® BlueField®-3 and NVIDIA® ConnectX®-7 and later.

As device capabilities may change in the future (see DOCA Capability Check), it is recommended to choose your device using any relevant capability method (starting with the prefix doca_sync_event_cap_*).

For BlueField-3, ignore the output of doca_sync_event_cap_task_wait_gt_is_supported as it may falsely indicate that the feature is not supported.

Capability APIs to query whether sync event can be constructed from export blob:

doca_sync_event_cap_is_create_from_export_supported

doca_sync_event_cap_remote_net_is_create_from_export_supported

Capability APIs to query whether sync event can be exported to other execution units:

doca_sync_event_cap_is_export_to_dpu_supported

doca_sync_event_cap_is_export_to_dpa_supported

doca_sync_event_cap_is_export_to_gpu_supported

doca_sync_event_cap_is_export_to_remote_net_supported

doca_sync_event_cap_remote_net_is_export_to_dpa_supported

doca_sync_event_cap_remote_net_is_export_to_gpu_supported

Capability APIs to query whether an asynchronous task is supported:

doca_sync_event_cap_task_get_is_supported

doca_sync_event_cap_task_notify_set_is_supported

doca_sync_event_cap_task_notify_add_is_supported

doca_sync_event_cap_task_wait_gt_is_supported

This section describes execution on CPU. For additional execution environments refer to section "Alternative Datapath Options".

DOCA Sync Event Data Path Operations

The DOCA SE synchronization mechanism is achieved using exposed datapath operations. The API exposes a function for "writing" to the SE and for "reading" the SE.

The synchronous API is a set of functions which can be called directly by the user, while the asynchronous API is exposed by defining a corresponding doca_task for each synchronous function to be submitted on a DOCA PE (see DOCA Progress Engine and DOCA Context for additional information).

Remote net CPU handle (struct doca_sync_event_remote_net) can be used for submitting asynchronous tasks using the DOCA RDMA library.

Prior to asynchronous task submission, users must check whether the task is supported using doca_sync_event_cap_task_<task_type>_is_supported.

For BlueField-3, ignore the output of doca_sync_event_cap_task_wait_gt_is_supported as it may falsely indicate that the feature is not supported.

The following subsections describe the DOCA SE datapath operation with respect to synchronous and asynchronous operation modes.

Publishing on DOCA Sync Event

Setting DOCA Sync Event Value

Users can set DOCA SE to a 64-bit value:

Synchronously by calling doca_sync_event_update_set

Asynchronously by submitting a doca_sync_event_task_notify_set task

Adding to DOCA Sync Event Value

Users can atomically increment the value of a DOCA SE:

Synchronously by calling doca_sync_event_update_add

Asynchronously by submitting a doca_sync_event_task_notify_add task
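To make the difference between the two publishing operations concrete, here is a minimal C11 sketch that models them on a plain atomic counter. The `model_update_*` functions are hypothetical and do not call DOCA. Returning the pre-increment value mirrors the "fetched value" described for the notify add task. The model uses an atomic store for the set path only to stay well-defined in C; it makes no claim about the atomicity of the real set operation.

```c
#include <stdatomic.h>
#include <stdint.h>

/* Set: the new value replaces whatever the counter held. */
void model_update_set(_Atomic uint64_t *counter, uint64_t value)
{
    atomic_store(counter, value);
}

/*
 * Add: atomic read-modify-write.
 * Returns the value the counter held just before the increment.
 */
uint64_t model_update_add(_Atomic uint64_t *counter, uint64_t increment)
{
    return atomic_fetch_add(counter, increment);
}
```

An add is the safer choice when several publishers bump the same counter, because each increment is applied to the latest value rather than overwriting it.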

Subscribe on DOCA Sync Event

Getting DOCA Sync Event Value

Users can get the value of a DOCA SE:

Synchronously by calling doca_sync_event_get

Asynchronously by submitting a doca_sync_event_task_get task

Waiting on DOCA Sync Event

Waiting for an event is the main operation for achieving synchronization between different execution units.

Users can wait until an SE reaches a specific value in a variety of ways.

Synchronously

doca_sync_event_wait_gt waits for the value of a DOCA SE to be greater than a specified value in a "polling busy wait" manner (100% processor utilization). This API enables users to wait for an SE in real time.

doca_sync_event_wait_gt_yield waits for the value of a DOCA SE to be greater than a specified value in a "periodically busy wait" manner. After each polling iteration, the calling thread relinquishes the CPU so a new thread gets to run. This API allows a tradeoff between real-time polling and CPU starvation.

This wait method is supported only from the CPU.
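The two synchronous wait flavors differ only in what happens between polls. The sketch below models that difference in plain C on a POSIX system. The `model_*` functions are hypothetical. The `mask` parameter is borrowed from the wait task description and is an assumption here, as is the `max_polls` bound that keeps the model from spinning forever.

```c
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

/* Busy-wait flavor: re-read the counter back to back (full CPU usage). */
bool model_wait_gt(_Atomic uint64_t *counter, uint64_t threshold,
                   uint64_t mask, uint64_t max_polls)
{
    for (uint64_t i = 0; i < max_polls; i++) {
        if ((atomic_load(counter) & mask) > threshold)
            return true;
    }
    return false;
}

/* Yielding flavor: give up the CPU after every unsuccessful poll. */
bool model_wait_gt_yield(_Atomic uint64_t *counter, uint64_t threshold,
                         uint64_t mask, uint64_t max_polls)
{
    for (uint64_t i = 0; i < max_polls; i++) {
        if ((atomic_load(counter) & mask) > threshold)
            return true;
        sched_yield(); /* let another runnable thread use this core */
    }
    return false;
}
```

The yielding variant reacts a little later to an update but leaves the core available to other threads.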

Asynchronously

DOCA SE exposes an asynchronous wait method by defining a doca_sync_event_task_wait_gt task.

Submitting a doca_sync_event_task_wait_gt task is limited to an SE with a value in the range [0, 254] (before applying the wait mask) and to a wait threshold in the range [0, 254]. Other configurations result in anomalous behavior.

Users can wait for wait-task completion using the following methods:

Blocking – get a doca_event_handle_t from the doca_pe to blocking-wait on

Polling – poll the wait task by calling doca_pe_progress

Asynchronous wait (blocking/polling) is supported on BlueField-3 and ConnectX-7 and later.

Users may leverage the doca_sync_event_task_get task to implement an asynchronous wait by submitting the task on a DOCA PE and comparing the result to some threshold.
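The get-and-compare approach can be sketched as a loop around a "get" step. This is an illustrative model only: `model_get_fn` is a hypothetical callback standing in for "submit a get task and collect its result", and `max_rounds` is an assumed bound. In a real application, each round would submit the task and progress the PE.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical callback: performs one "get" and returns the SE value. */
typedef uint64_t (*model_get_fn)(void *ctx);

/*
 * Emulate a wait by repeating the get step and comparing each result
 * with the threshold. Returns false if `max_rounds` gets were not enough.
 */
bool model_wait_via_get(model_get_fn get, void *ctx, uint64_t threshold,
                        unsigned max_rounds)
{
    for (unsigned round = 0; round < max_rounds; round++) {
        if (get(ctx) > threshold)
            return true;
    }
    return false;
}

/* Example getter: a counter that grows by one every time it is read. */
uint64_t model_rising_counter(void *ctx)
{
    uint64_t *value = ctx;
    return (*value)++;
}
```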

Tasks

DOCA SE context exposes asynchronous tasks that leverage the DPU hardware according to the DOCA Core architecture. See DOCA Core Task.

Get Task

The get task retrieves the value of a DOCA SE.

Configuration

| Description | API to Set the Configuration | API to Query Support |
| --- | --- | --- |
| Enable the task | doca_sync_event_task_get_set_conf | doca_sync_event_cap_task_get_is_supported |
| Number of tasks | doca_sync_event_task_get_set_conf | - |

Input

Common input described in DOCA Core Task.

| Name | Description |
| --- | --- |
| Return value | Pointer to 8 bytes of memory to hold the DOCA SE value |

Output

Common output described in DOCA Core Task.

Task Successful completion

After the task is completed successfully, the return value memory holds the DOCA SE value.

Task Failed Completion

If the task fails midway:

The context may enter a stopping state if a fatal error occurs

The return value memory may be modified

Limitations

All limitations are described in DOCA Core Task.

Notify Set Task

The notify set task allows setting the value of a DOCA SE.

Configuration

| Description | API to Set the Configuration | API to Query Support |
| --- | --- | --- |
| Enable the task | doca_sync_event_task_notify_set_set_conf | doca_sync_event_cap_task_notify_set_is_supported |
| Number of tasks | doca_sync_event_task_notify_set_set_conf | - |

Input

Common input described in DOCA Core Task.

| Name | Description |
| --- | --- |
| Set value | 64-bit value to set the DOCA SE value to |

Output

Common output described in DOCA Core Task.

Task Successful completion

After the task is completed successfully, the DOCA SE value is set to the given set value.

Task Failed completion

If the task fails midway, the context may enter a stopping state if a fatal error occurs.

Limitations

This operation is not atomic. Other limitations are described in DOCA Core Task.

Notify Add Task

The notify add task allows atomically incrementing the value of a DOCA SE.

Configuration

| Description | API to Set the Configuration | API to Query Support |
| --- | --- | --- |
| Enable the task | doca_sync_event_task_notify_add_set_conf | doca_sync_event_cap_task_notify_add_is_supported |
| Number of tasks | doca_sync_event_task_notify_add_set_conf | - |

Input

Common input described in DOCA Core Task.

| Name | Description |
| --- | --- |
| Increment value | 64-bit value to atomically increment the DOCA SE value by |
| Fetched value | Pointer to 8 bytes of memory to hold the DOCA SE value before the increment |

Output

Common output described in DOCA Core Task.

Task Successful completion

After the task is completed successfully the following occurs:

The DOCA SE value is incremented according to the given increment value

The fetched value memory holds the DOCA SE value before the increment

Task Failed completion

If the task fails midway:

The context may enter a stopping state if a fatal error occurs

The fetched value memory may be modified.

Limitations

All limitations are described in DOCA Core Task.

Wait Greater-than Task

The wait greater-than task allows waiting for a DOCA SE value to be greater than some threshold.

Configuration

| Description | API to Set the Configuration | API to Query Support |
| --- | --- | --- |
| Enable the task | doca_sync_event_task_wait_gt_set_conf | doca_sync_event_cap_task_wait_gt_is_supported |
| Number of tasks | doca_sync_event_task_wait_gt_set_conf | - |

Input

Common input described in DOCA Core Task.

| Name | Description |
| --- | --- |
| Wait threshold | 64-bit value to wait for the DOCA SE value to be greater than |
| Mask | 64-bit mask to apply on the DOCA SE value before comparing with the wait threshold |

Output

Common output described in DOCA Core Task.

Task Successful completion

After the task is completed successfully, the DOCA SE value is greater than the given wait threshold.

Task Failed completion

If the task fails midway, the context may enter a stopping state if a fatal error occurs.

Limitations

Submitting a doca_sync_event_task_wait_gt task is limited to an SE with a value in the range [0, 254] and to a wait threshold in the range [0, 254]. Other configurations result in anomalous behavior.

Other limitations are described in DOCA Core Task.
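Because out-of-range inputs lead to anomalous behavior rather than a reported error, an application may want to guard submissions itself. The helper below is a hypothetical sketch of such a guard. It is not part of the DOCA API and only encodes the [0, 254] bounds stated above.

```c
#include <stdbool.h>
#include <stdint.h>

/* Upper bound quoted in the limitation for both value and threshold. */
#define MODEL_WAIT_GT_MAX 254u

/*
 * Returns true when both the current SE value and the wait threshold
 * fall inside the documented [0, 254] range.
 */
bool model_wait_gt_task_args_ok(uint64_t current_value, uint64_t threshold)
{
    return current_value <= MODEL_WAIT_GT_MAX &&
           threshold <= MODEL_WAIT_GT_MAX;
}
```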

Events

DOCA SE context exposes asynchronous events to notify about changes that happen unexpectedly, according to the DOCA Core architecture.

The only events the DOCA SE context exposes are the common events described in DOCA Core Event.

The DOCA SE context follows the Context state machine as described in DOCA Core Context State Machine.

The following subsections describe how to move to specific states and what is allowed in each state.

Idle

In this state, it is expected that the application will:

Destroy the context; or

Start the context

Allowed operations in this state:

Configure the context according to section "Configurations"

Start the context

It is possible to reach this state as follows:

| Previous State | Transition Action |
| --- | --- |
| None | Create the context |
| Running | Call stop after making sure all tasks have been freed |
| Stopping | Call progress until all tasks are completed and then freed |

Starting

This state cannot be reached.

Running

In this state, it is expected that the application will:

Allocate and submit tasks

Call progress to complete tasks and/or receive events

Allowed operations in this state:

Allocate previously configured task

Submit an allocated task

Call stop

It is possible to reach this state as follows:

| Previous State | Transition Action |
| --- | --- |
| Idle | Call start after configuration |

Stopping

In this state, it is expected that the application will:

Call progress to complete all inflight tasks (tasks will complete with failure)

Free any completed tasks

Allowed operations in this state:

Call progress

It is possible to reach this state as follows:

| Previous State | Transition Action |
| --- | --- |
| Running | Call progress and fatal error occurs |
| Running | Call stop without freeing all tasks |
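The transitions in the tables above can be condensed into a small state-machine model. This is a simplified sketch with hypothetical `model_*` names. It folds "progress until tasks complete, then free them" into a single `model_task_free` step and leaves out the fatal-error transition from Running to Stopping.

```c
#include <stdbool.h>

enum model_state { MODEL_IDLE, MODEL_RUNNING, MODEL_STOPPING };

struct model_ctx {
    enum model_state state;
    unsigned live_tasks; /* tasks allocated and not yet freed */
};

/* Idle -> Running. */
bool model_start(struct model_ctx *c)
{
    if (c->state != MODEL_IDLE)
        return false;
    c->state = MODEL_RUNNING;
    return true;
}

/* Tasks may only be allocated while Running. */
bool model_task_alloc(struct model_ctx *c)
{
    if (c->state != MODEL_RUNNING)
        return false;
    c->live_tasks++;
    return true;
}

/* Running -> Idle when no tasks are live, otherwise Running -> Stopping. */
bool model_stop(struct model_ctx *c)
{
    if (c->state != MODEL_RUNNING)
        return false;
    c->state = (c->live_tasks == 0) ? MODEL_IDLE : MODEL_STOPPING;
    return true;
}

/* Freeing the last live task while Stopping moves the context to Idle. */
void model_task_free(struct model_ctx *c)
{
    if (c->live_tasks > 0)
        c->live_tasks--;
    if (c->state == MODEL_STOPPING && c->live_tasks == 0)
        c->state = MODEL_IDLE;
}
```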

Multiple SE handles (for different execution units) associated with the same DOCA SE instance can live simultaneously, though the teardown flow is performed only from the CPU on the CPU handle.

Users must validate active handles associated with the CPU handle during the teardown flow because DOCA SE does not do that.

Stopping DOCA Sync Event

To stop a DOCA SE:

Synchronous – call doca_sync_event_stop on the CPU handle

Asynchronous – stop the DOCA context associated with the DOCA SE instance

Stopping a DOCA SE must be followed by destruction. Refer to section "Destroying DOCA Sync Event" for details.

Destroying DOCA Sync Event

Once stopped, a DOCA SE instance can be destroyed by calling doca_sync_event_destroy on the CPU handle.

A remote net CPU handle instance is terminated and freed by calling doca_sync_event_remote_net_destroy on the remote net CPU handle.

Upon destruction, all the internal resources are released, allocated memory is freed, associated doca_ctx (if it exists) is destroyed, and any associated exported handles (other than CPU handles) and their resources are destroyed.
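Since DOCA SE does not track whether exported handles are still in use, the application has to do that bookkeeping before teardown. One possible approach, shown here as a hypothetical sketch (none of these names exist in DOCA), is to count outstanding exported handles and refuse to destroy the SE until it has been stopped and the count has dropped to zero.

```c
#include <stdbool.h>

/* Hypothetical application-side bookkeeping for one SE instance. */
struct model_se_owner {
    unsigned exported_in_use; /* handles still used by other units */
    bool stopped;             /* the SE (or its context) was stopped */
    bool destroyed;           /* the CPU handle was destroyed */
};

/* Record that a handle was exported to another execution unit. */
void model_handle_exported(struct model_se_owner *o)
{
    o->exported_in_use++;
}

/* Record that an execution unit no longer uses its handle. */
void model_handle_released(struct model_se_owner *o)
{
    if (o->exported_in_use > 0)
        o->exported_in_use--;
}

/* Record that the stop step has been performed. */
void model_mark_stopped(struct model_se_owner *o)
{
    o->stopped = true;
}

/* Destroy only once: after stop, and with no exported handle in use. */
bool model_try_destroy(struct model_se_owner *o)
{
    if (o->destroyed || !o->stopped || o->exported_in_use != 0)
        return false;
    o->destroyed = true;
    return true;
}
```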

DOCA SE supports datapath on CPU (see section "Execution Phase") and also on DPA and GPU.

GPU Datapath

DOCA SE does not currently support GPU related features.

DPA Datapath

An SE with DPA-subscriber configuration currently supports synchronous APIs only.

Once a DOCA SE DPA handle (doca_dpa_dev_sync_event_t) has been retrieved it can be used within a DOCA DPA kernel as described in DOCA DPA Sync Event.

This section provides a DOCA SE sample implementation on top of the BlueField DPU.

The sample demonstrates how to share an SE between the host and the DPU while simultaneously interacting with the event from both the host and DPU sides using different handles.

Running DOCA Sync Event Sample

Refer to the following documents:

NVIDIA DOCA Installation Guide for Linux for details on how to install BlueField-related software.

NVIDIA DOCA Troubleshooting Guide for any issue you may encounter with the installation, compilation, or execution of DOCA samples.

To build a given sample:

    cd /opt/mellanox/doca/samples/doca_common/sync_event_<host|dpu>
    meson /tmp/build
    ninja -C /tmp/build

Warning: The binary doca_sync_event_<host|dpu> is created under /tmp/build/.

Sample (e.g., sync_event_dpu) usage:

    Usage: doca_sync_event_dpu [DOCA Flags] [Program Flags]

    DOCA Flags:
      -h, --help              Print a help synopsis
      -v, --version           Print program version information
      -l, --log-level         Set the (numeric) log level for the program <10=DISABLE,20=CRITICAL,30=ERROR,40=WARNING,50=INFO,60=DEBUG,70=TRACE>
      --sdk-log-level         Set the SDK (numeric) log level for the program <10=DISABLE,20=CRITICAL,30=ERROR,40=WARNING,50=INFO,60=DEBUG,70=TRACE>
      -j, --json <path>       Parse all command flags from an input json file

    Program Flags:
      -d, --dev-pci-addr      Device PCI address
      -r, --rep-pci-addr      DPU representor PCI address
      --async                 Start DOCA Sync Event in asynchronous mode (synchronous mode by default)
      --async_num_tasks       Async num tasks for asynchronous mode
      --atomic                Update DOCA Sync Event using Add operation (Set operation by default)

Warning: The flag --rep-pci-addr is relevant only for the DPU.

For additional information per sample, use the -h option:

/tmp/build/doca_sync_event_<host|dpu> -h

Samples

Sync Event DPU

This sample should be run on the DPU before Sync Event Host.

This sample demonstrates creating an SE from an export on the DPU which is associated with an SE on the host and interacting with the SE to achieve synchronization between the host and DPU.

The sample logic includes:

Reading configuration files and saving their content into local buffers.

Locating and opening DOCA devices and DOCA representors matching the given PCIe addresses.

Initializing DOCA Comm Channel.

Receiving SE blob through Comm Channel.

Creating SE from export.

Starting the above SE in the requested operation mode (synchronous or asynchronous).

Interacting with the SE from the DPU:

Waiting for signal from the host – synchronously or asynchronously (with busy wait polling) according to user input.

Signaling the SE for the host – synchronously or asynchronously, using set or atomic add, according to user input.

Cleaning all resources.

Reference:

/opt/mellanox/doca/samples/doca_common/sync_event_dpu/sync_event_dpu_sample.c

/opt/mellanox/doca/samples/doca_common/sync_event_dpu/sync_event_dpu_main.c

/opt/mellanox/doca/samples/doca_common/sync_event_dpu/meson.build

Sync Event Host

This sample should run on the host only after Sync Event DPU on the DPU has been started.

This sample demonstrates how to initialize an SE on the host to be shared with the DPU, how to export it to the DPU, and how to interact with the SE to achieve synchronization between the host and DPU.

The sample logic includes:

Reading configuration files and saving their content into local buffers.

Locating and opening the DOCA device matching the given PCIe address.

Creating and configuring the SE to be shared with the DPU.

Starting the above SE in the requested operation mode (synchronous or asynchronous).

Initializing DOCA Comm Channel.

Exporting the SE and sending it through the Comm Channel.

Interacting with the SE from the host:

Signaling the SE for the DPU – synchronously or asynchronously, using set or atomic add, according to user input.

Waiting for a signal from the DPU – synchronously or asynchronously, with busy wait polling, according to user input.

Cleaning all resources.

Reference:

/opt/mellanox/doca/samples/doca_common/sync_event_host/sync_event_host_sample.c

/opt/mellanox/doca/samples/doca_common/sync_event_host/sync_event_host_main.c

/opt/mellanox/doca/samples/doca_common/sync_event_host/meson.build