Advanced Features

Tuning MLNX SNAP for the best performance may require additional resources from the system (CPU, memory) and may affect SNAP controller scalability.

Increasing Number of Used Arm Cores

By default, MLNX SNAP uses 4 Arm cores, with core mask 0xF0. The core mask is configurable through the CPU_MASK parameter in /etc/default/mlnx_snap; using more cores (e.g., CPU_MASK=0xFF) may improve performance.

As SNAP is an SPDK-based application, it polls continuously and therefore occupies 100% of every CPU core it runs on.
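
The relationship between a core mask and the cores it selects can be sketched as follows. This is only an illustration of how the hexadecimal mask is read (bit N set means core N is used); the helper name cores_from_mask is hypothetical and not part of SNAP.

```python
def cores_from_mask(mask: int) -> list[int]:
    """Return the indices of the CPU cores selected by a bit mask."""
    # Bit N of the mask corresponds to core N.
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


default_cores = cores_from_mask(0xF0)  # default mask quoted in the text
all_cores = cores_from_mask(0xFF)      # example mask that uses more cores

print(default_cores)   # [4, 5, 6, 7]
print(len(all_cores))  # 8
```

Since every selected core is fully occupied by polling, the length of the returned list is also the number of cores that are no longer available to other workloads.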

Disabling Mem-pool

Enabling mem-pool reduces the memory footprint but decreases overall performance.

To configure the controller to not use mem-pool, set MEM_POOL_SIZE=0 in /etc/default/mlnx_snap.

See section "Mem-pool" for more information.

Maximizing Single IO Transfer Data Payload

Increasing datapath staging buffer sizes improves performance for larger block sizes (>4K):

For NVMe, this can be controlled by increasing the MDTS value either in the JSON file or the RPC parameter. For more information regarding MDTS, refer to the NVMe specification. The default value is 4 (64K buffer), and the maximum value is 6 (256K buffer).

For virtio-blk, this can be controlled using the seg_max and size_max RPC parameters. For more information regarding these parameters, refer to the VirtIO-blk specification. No hard-maximum limit exists.
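
The MDTS values quoted above follow from the NVMe definition of MDTS as a power of two in units of the minimum memory page size. The sketch below assumes a 4 KiB minimum page size, which is what makes the default of 4 correspond to 64K and the maximum of 6 to 256K; the function name is illustrative only.

```python
PAGE_SIZE = 4096  # assumption: minimum memory page size of 4 KiB


def mdts_to_bytes(mdts: int, page_size: int = PAGE_SIZE) -> int:
    """Largest single-command data transfer, in bytes, for a given MDTS value."""
    if mdts < 1:
        # In the NVMe specification, 0 means "no limit", so it has no byte size.
        raise ValueError("MDTS must be at least 1 to express a size limit")
    return (1 << mdts) * page_size


for mdts in (4, 5, 6):
    print(f"MDTS={mdts}: {mdts_to_bytes(mdts) // 1024}K buffer")
```

Each increment of MDTS doubles the staging buffer size, so the memory cost grows quickly when many controllers and queues are configured.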

Increasing Emulation Manager MTU

The default MTU for the emulation manager network interface is 1500. Increasing the MTU to over 4K on the emulation manager (e.g., MTU=4200) also enables the SNAP application to transfer a larger amount of data in a single Host→DPU memory transaction, which may improve performance.

Optimizing Number of Queues and MSIX Vector (virtio-blk only)

SNAP emulated queues are spread evenly across all configured PFs (static and dynamic) and the VFs defined per PF (whether the functions are in use or not). This means that the larger the total number of functions SNAP is configured with (either PFs or VFs), the fewer queues and MSIX resources each function is assigned, which affects its performance accordingly. Therefore, it is recommended to configure in Firmware Configuration the minimal number of PFs and VFs per PF needed for the specific system.

Another consideration is matching the MSIX vector size to the desired number of queues. The standard virtio-blk kernel driver uses MSIX vectors to get events on both the control and data paths. When possible, it assigns an exclusive MSIX to each virtqueue (e.g., per CPU core) and reserves an additional MSIX for configuration changes. If this is not possible, it uses a single MSIX for all virtqueues. Therefore, to ensure best performance with virtio-blk devices, the condition VIRTIO_BLK_EMULATION_NUM_MSIX > virtio_blk_controller.num_queues must hold.
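
The MSIX condition can be checked before creating a controller. The following sketch simply encodes the inequality stated above (one vector per virtqueue plus one for configuration changes); the function names are hypothetical helpers, not SNAP APIs.

```python
def msix_sufficient(num_msix: int, num_queues: int) -> bool:
    """True when each virtqueue can get its own MSIX plus one for config changes."""
    return num_msix > num_queues


def max_queues_for(num_msix: int) -> int:
    """Largest queue count that still satisfies num_msix > num_queues."""
    return max(num_msix - 1, 0)


print(msix_sufficient(num_msix=64, num_queues=63))  # True
print(msix_sufficient(num_msix=64, num_queues=64))  # False
print(max_queues_for(64))                           # 63
```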

The total number of MSIXs is limited on BlueField-2 cards, so MSIX reservation considerations may apply when running with multiple devices. For more information, refer to this FAQ.

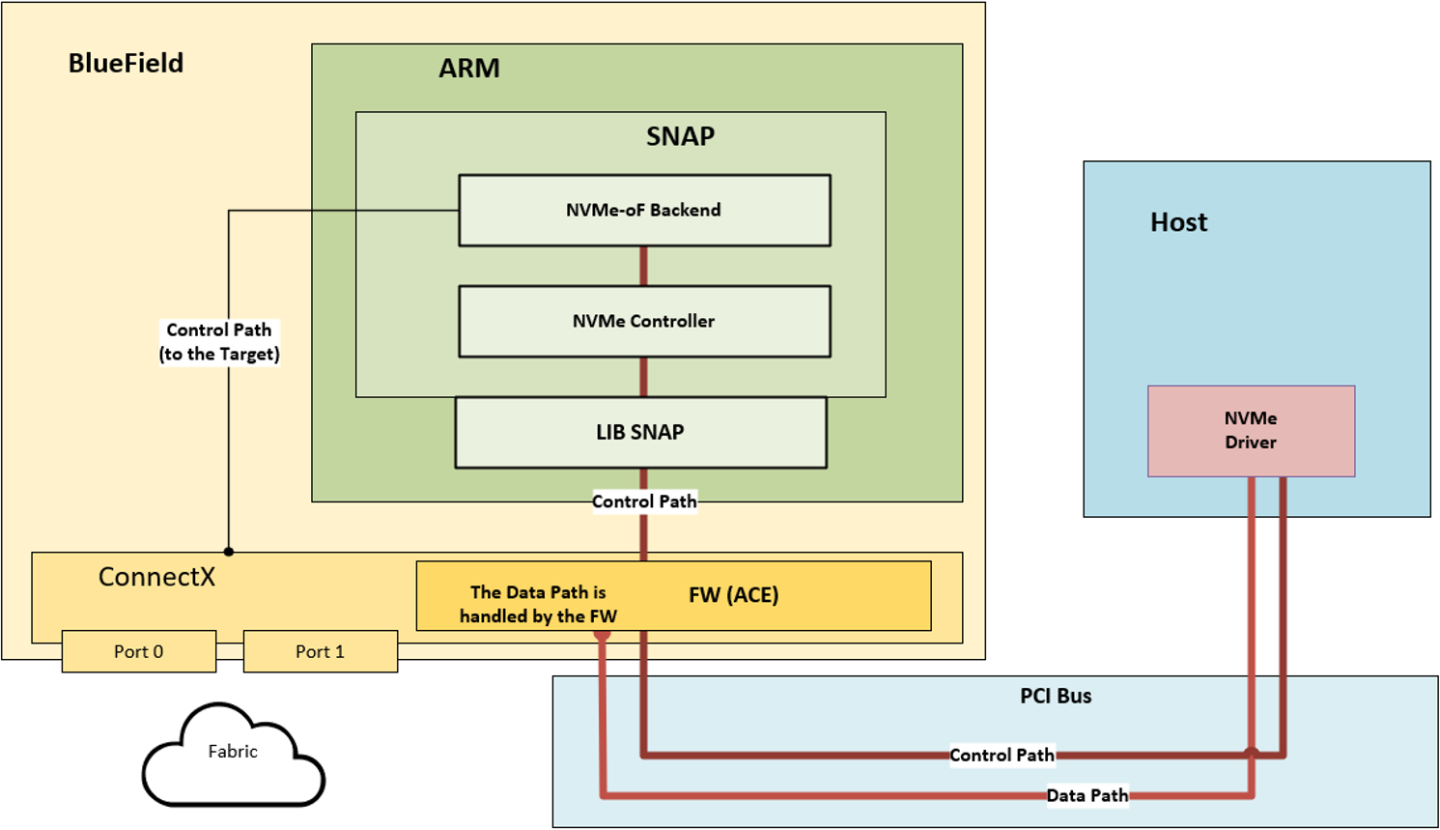

The NVMe-RDMA full offload mode reduces the CPU cost on the Arm cores by offloading the data path directly to the FW/HW. This mode is not suitable for users who need to control the data plane or the backend.

In full offload mode, the control plane is handled at the SW level, while the data plane is handled at the FW level and requires no SW interaction. For that reason, the user has no control over the backend devices: they are detected automatically, and no namespace management commands are required.

The NVMe-RDMA architecture:

In this mode, the remote target parameters must be provided using a JSON configuration file (a JSON file example can be found in /etc/mlnx_snap/mlnx_snap_offload.json.example). The NVMe controller then detects and connects to the relevant backends by itself.

As the SNAP application does not participate in the datapath and needs fewer resources, it is recommended to reduce CPU_MASK to a single core (e.g., CPU_MASK=0x80). Refer to "Increasing Number of Used Arm Cores" for CPU_MASK configuration.

After configuration is done, users must create the NVMe subsystem and (offloaded) controller. Note that snap_rpc.py controller_nvme_namespace_attach is not required and --rdma_device mlx5_2 is provided to mark the relevant RDMA interface for the connection.

The following example creates an NVMe full-offload controller:

# snap_rpc.py subsystem_nvme_create "Mellanox_NVMe_SNAP" "Mellanox NVMe SNAP Controller"

# snap_rpc.py controller_nvme_create mlx5_0 --subsys_id 0 --pci_bdf 88:00.2 --nr_io_queues 32 --mdts 4 -c /etc/mlnx_snap/mlnx_snap.json --rdma_device mlx5_2

This is the matching JSON file example:

{
    "ctrl": {
        "offload": true
    },
    "backends": [
        {
            "type": "nvmf_rdma",
            "name": "testsubsystem",
            "paths": [
                {
                    "addr": "1.1.1.1",
                    "port": 4420,
                    "ka_timeout_ms": 15000,
                    "hostnqn": "r-nvmx03"
                }
            ]
        }
    ]
}
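
When the target address or subsystem name changes per deployment, the configuration can be generated rather than edited by hand. The sketch below builds the same structure as the example above and serializes it as strict JSON; the function build_offload_config is a hypothetical helper, and only the field names that appear in the example are used.

```python
import json


def build_offload_config(name, addr, port=4420, ka_timeout_ms=15000, hostnqn=None):
    """Build a full-offload configuration with a single nvmf_rdma backend path."""
    path = {"addr": addr, "port": port, "ka_timeout_ms": ka_timeout_ms}
    if hostnqn is not None:
        path["hostnqn"] = hostnqn
    return {
        "ctrl": {"offload": True},
        "backends": [{"type": "nvmf_rdma", "name": name, "paths": [path]}],
    }


config = build_offload_config("testsubsystem", "1.1.1.1", hostnqn="r-nvmx03")
text = json.dumps(config, indent=4)

# Round-trip through the parser to confirm the output is valid JSON.
assert json.loads(text) == config
print(text)
```

Generating the file through a JSON serializer avoids hand-editing mistakes such as trailing commas, which strict JSON parsers reject.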

Full offload mode requires that the provided RDMA device (given in --rdma_device parameter) supports RoCE transport (typically SF interfaces). Full offload mode for virtio-blk is not supported.

The discovered namespace ID may be remapped to get another ID when exposed to the host in order to comply with firmware limitations.

SR-IOV configuration depends on the kernel version:

Optimal configuration may be achieved with a new kernel in which the sriov_drivers_autoprobe sysfs entry exists in /sys/bus/pci/devices/<BDF>/

Otherwise, the minimal requirement may be met if the sriov_totalvfs sysfs entry exists in /sys/bus/pci/devices/<BDF>/
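
Which of the two flows applies on a given host can be determined by checking for these sysfs entries. The sketch below is one possible check, assuming the PCIe function is addressed by its full BDF (e.g., 0000:86:00.3); the function name and the returned labels are illustrative only.

```python
import os


def sriov_support_level(bdf: str, sysfs_root: str = "/sys/bus/pci/devices") -> str:
    """Classify SR-IOV support for a PCIe function from its sysfs entries."""
    dev = os.path.join(sysfs_root, bdf)
    if os.path.exists(os.path.join(dev, "sriov_drivers_autoprobe")):
        return "optimal"      # autoprobe can be disabled before creating VFs
    if os.path.exists(os.path.join(dev, "sriov_totalvfs")):
        return "minimal"      # SR-IOV works, but without autoprobe control
    return "unsupported"      # neither entry found for this function


print(sriov_support_level("0000:86:00.3"))
```

The sysfs_root parameter exists only so the check can be exercised against a test directory; on a real host the default path is used.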

SR-IOV configuration needs to be done on both the host and DPU side, marked in the following example as [HOST] and [ARM] respectively. This example assumes that there is 1 VF on static virtio-blk PF 86:00.3 (NVMe flow is similar), and that a "Malloc0" SPDK BDEV exists.

Optimal Configuration

[ARM] snap_rpc.py controller_virtio_blk_create mlx5_0 -d 86:00.3 --bdev_type none

[HOST] modprobe -v virtio-pci && modprobe -v virtio-blk

[HOST] echo 0 > /sys/bus/pci/devices/0000:86:00.3/sriov_drivers_autoprobe

[HOST] echo 1 > /sys/bus/pci/devices/0000:86:00.3/sriov_numvfs

[ARM] snap_rpc.py controller_virtio_blk_create mlx5_0 --pf_id 0 --vf_id 0 --bdev_type spdk --bdev Malloc0

\* Continue by binding the VF PCIe function to the desired VM. *\

After configuration is finished, no disk is expected to be exposed in the hypervisor. The disk only appears in the VM after the PCIe VF is assigned to it using the virtualization manager. If users want to use the device from the hypervisor, they simply need to bind the PCIe VF manually.

Minimal Requirement

[ARM] snap_rpc.py controller_virtio_blk_create mlx5_0 -d 86:00.3 --bdev_type none

[HOST] modprobe -v virtio-pci && modprobe -v virtio-blk

[HOST] echo 1 > /sys/bus/pci/devices/0000:86:00.3/sriov_numvfs

\* The host now hangs until configuration is performed on the DPU side *\

[ARM] snap_rpc.py controller_virtio_blk_create mlx5_0 --pf_id 0 --vf_id 0 --bdev_type spdk --bdev Malloc0

\* Host is now released *\

\* Continue by binding the VF PCIe function to the desired VM. *\

Hotplug PFs do not support SR-IOV.

It is recommended to add pci=assign-busses to the boot command line when creating more than 127 VFs.

Without this option, the following errors may appear on the host, and the virtio driver will not probe these devices.

pci 0000:84:00.0: [1af4:1041] type 7f class 0xffffff

pci 0000:84:00.0: unknown header type 7f, ignoring device

Zero-copy is supported on SPDK 21.07 and higher.

The SNAP-direct feature allows SNAP applications to transfer data directly from the host memory to remote storage without using any staging buffer inside the DPU.

SNAP enables the feature according to the SPDK BDEV configuration only when working against an SPDK NVMe-oF RDMA block device.

To configure the controller to use Zero Copy, set the following in /etc/default/mlnx_snap:

For virtio-blk:

VIRTIO_BLK_SNAP_ZCOPY=1

For NVMe:

NVME_SNAP_ZCOPY=1
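
Settings of this kind are plain KEY=VALUE lines, so they can be applied programmatically. The following sketch updates such text in memory, replacing existing assignments and appending missing ones; the helper set_params and the sample file content are hypothetical, and writing the result back to /etc/default/mlnx_snap is left to the caller.

```python
def set_params(text: str, params: dict[str, str]) -> str:
    """Return KEY=VALUE text with the given parameters set."""
    remaining = dict(params)
    out = []
    for line in text.splitlines():
        key = line.split("=", 1)[0].strip()
        is_assignment = "=" in line and not line.lstrip().startswith("#")
        if is_assignment and key in remaining:
            out.append(f"{key}={remaining.pop(key)}")  # replace existing value
        else:
            out.append(line)                           # keep the line untouched
    # Append parameters that were not present in the original text.
    out.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(out) + "\n"


original = "CPU_MASK=0xF0\nNVME_SNAP_ZCOPY=0\n"  # hypothetical file content
updated = set_params(original, {"NVME_SNAP_ZCOPY": "1", "VIRTIO_BLK_SNAP_ZCOPY": "1"})
print(updated)
```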

NVMe/TCP Zero Copy is implemented as a custom NVDA_TCP transport in the SPDK NVMe initiator and is based on a new XLIO socket layer implementation.

The implementation is different for Tx and Rx:

The NVMe/TCP Tx Zero Copy is similar between RDMA and TCP in that the data is sent from the host memory directly to the wire without an intermediate copy to Arm memory.

The NVMe/TCP Rx Zero Copy achieves partial zero copy on the Rx flow by eliminating the copy from socket buffers (XLIO) to application buffers (SNAP). The data must still be DMA'ed from Arm to host memory.

To enable NVMe/TCP Zero Copy, use SPDK v22.05.nvda --with-xlio.

For more information about XLIO including limitations and bug fixes, refer to the NVIDIA Accelerated IO (XLIO) Documentation.

To configure the controller to use NVMe/TCP Zero Copy, set the following in /etc/default/mlnx_snap:

EXTRA_ARGS="-u --mem-size 1200 --wait-for-rpc"

NVME_SNAP_TCP_RX_ZCOPY=1

SPDK_XLIO_PATH=/usr/lib/libxlio.so

MIN_HUGEMEM=4G

To connect using NVDA_TCP transport:

If /etc/mlnx_snap/spdk_rpc_init.conf is being used, add the following at the start of the file in the given order:

sock_set_default_impl -i xlio

framework_start_init

When the mlnx_snap service is started, run the following command:

[ARM] spdk_rpc.py bdev_nvme_attach_controller -b <NAME> -t NVDA_TCP -f ipv4 -a <IP> -s <PORT> -n <SUBNQN>

If /etc/mlnx_snap/spdk_rpc_init.conf is not being used, once the service is started, run the following commands in the given order:

[ARM] spdk_rpc.py sock_set_default_impl -i xlio

[ARM] spdk_rpc.py framework_start_init

[ARM] spdk_rpc.py bdev_nvme_attach_controller -b <NAME> -t NVDA_TCP -f ipv4 -a <IP> -s <PORT> -n <SUBNQN>
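
Because the order of these RPCs matters, it can help to generate the sequence in one place. The sketch below only builds the argument lists for the commands shown above and prints them; it does not run anything. The function name, the use_init_conf switch, and the sample name, address, and NQN values are assumptions made for illustration.

```python
import shlex


def nvda_tcp_attach_commands(name, ip, port, subnqn, use_init_conf):
    """Return the ordered spdk_rpc.py invocations for an NVDA_TCP connection."""
    attach = [
        "spdk_rpc.py", "bdev_nvme_attach_controller",
        "-b", name, "-t", "NVDA_TCP", "-f", "ipv4",
        "-a", ip, "-s", str(port), "-n", subnqn,
    ]
    if use_init_conf:
        # The socket and framework RPCs are already listed in spdk_rpc_init.conf.
        return [attach]
    return [
        ["spdk_rpc.py", "sock_set_default_impl", "-i", "xlio"],
        ["spdk_rpc.py", "framework_start_init"],
        attach,
    ]


commands = nvda_tcp_attach_commands(
    "nvme0", "1.1.1.1", 4420, "nqn.2022-10.io.example:sub0", use_init_conf=False
)
for argv in commands:
    print(shlex.join(argv))
```

Keeping the commands as argument lists rather than shell strings means they could be passed to a process runner without additional quoting.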

NVDA_TCP transport is fully interoperable with other implementations based on the NVMe/TCP specifications.

NVDA_TCP limitations:

SPDK multipath is not supported

NVMe/TCP data digest is not supported

SR-IOV is not supported

As SNAP is a standard user application running on the DPU OS, it is vulnerable to system interference, such as closing the SNAP application gracefully (e.g., stopping the mlnx_snap service), killing the SNAP process abruptly (e.g., running kill -9), or even performing a full OS restart of the DPU. If exposed devices are already in use by host drivers when any of these events occurs, the host drivers or applications may malfunction.

To avoid such scenarios, the SNAP application supports a "Robustness and Recovery" option: if the SNAP application is interrupted for any reason, the next instance of the SNAP application can resume where the previous instance left off.

This functionality can be enabled under the following conditions:

Only virtio-blk devices are used (this feature is currently not supported for NVMe protocol)

By default, SNAP application is programmed to survive any kind of "graceful" termination, including controller deletion, service restart, and even (graceful) Arm reboot. If extended protection against brutal termination is required, such as sending SIGKILL to SNAP process or performing brutal Arm shutdown, the --force_in_order flag must be added to the snap_rpc.py controller_virtio_blk_create command.

Note: The force_in_order flag may impact performance when working with remote targets, which may produce high rates of out-of-order completions, or when different queues are served at different rates.

It is the user's responsibility to open the recovered virtio-blk controller with the exact same characteristics as the interrupted virtio-blk controller (same remote storage device, same BAR parameters, etc.)

Mem-pool

By default, the SNAP application pre-allocates all required memory buffers in advance.

A great amount of allocated memory may be required when using:

Large number of controllers (as with SR-IOV)

Large number of queues per controller

High queue-depth

Large mdts (for NVMe) or seg_max and size_max (for virtio-blk)

To reduce the memory footprint of the application, users may choose to use mem-pool (a shared memory buffer pool) instead. However, using mem-pool may decrease overall performance.

To configure the controller to use mem-pool rather than private ones:

In /etc/default/mlnx_snap, set the parameter MEM_POOL_SIZE to a non-zero value. This parameter accepts K/M/G notations (e.g., MEM_POOL_SIZE=100M). If K/M/G notation is not specified, the value defaults to bytes.

Users must choose the right value for their needs: a value that is too small may cause longer starvation, while a value that is too large consumes more memory. As a rule of thumb, typical usage may set it to min(num_devices*4MB, 512MB).

Upon controller creation, add the option --mempool. For example:

snap_rpc.py controller_nvme_create mlx5_0 --subsys_id 0 --pf_id 0 --mempool

Note: The per-controller mem-pool configuration is independent of all others. Users can set some controllers to work with mem-pool and other controllers to work without it.
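
The sizing rule of thumb and the K/M/G notation can be sketched as follows. This reads the rule as the smaller of 4 MB per device and 512 MB, and assumes binary units (K = 1024 bytes), which the text does not state; both helper names are hypothetical.

```python
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3}  # assumption: binary units


def suggested_mem_pool_size(num_devices: int) -> str:
    """Rule-of-thumb MEM_POOL_SIZE setting: min(num_devices * 4MB, 512MB)."""
    size_mb = min(num_devices * 4, 512)
    return f"MEM_POOL_SIZE={size_mb}M"


def parse_size(value: str) -> int:
    """Convert a K/M/G-suffixed value to bytes; a bare number is already bytes."""
    value = value.strip()
    if value and value[-1].upper() in UNITS:
        return int(value[:-1]) * UNITS[value[-1].upper()]
    return int(value)


print(suggested_mem_pool_size(25))   # MEM_POOL_SIZE=100M
print(suggested_mem_pool_size(200))  # MEM_POOL_SIZE=512M
print(parse_size("100M"))            # 104857600
```

With this rule, the pool grows linearly up to 128 devices and stays capped at 512 MB beyond that.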

SNAP supports virtio-blk transitional devices. Virtio transitional devices are devices that support both drivers conforming to the modern specification and legacy drivers (conforming to the legacy 0.95 specification).

To configure virtio-blk PCIe functions to be transitional devices, special firmware configuration parameters must be applied:

VIRTIO_BLK_EMULATION_PF_PCI_LAYOUT (0: MODERN / 1: TRANSITIONAL) – configures transitional device support for PFs

Note: This parameter is currently not supported.

VIRTIO_BLK_EMULATION_VF_PCI_LAYOUT (0: MODERN / 1: TRANSITIONAL) – configures transitional device support for underlying VFs

Note: This parameter is currently not supported.

VIRTIO_EMULATION_HOTPLUG_TRANS (True/False) – configures transitional device support for hot-plugged virtio-blk devices

To use virtio-blk transitional devices, Linux boot parameters must be set on the host:

If the kernel version is older than 5.1, set the following Linux boot parameter on the host OS:

intel_iommu=off

If virtio_pci is built into the host OS kernel, set the following Linux boot parameter:

virtio_pci.force_legacy=1

If virtio_pci is a kernel module rather than built into the host OS kernel, reload the module with force_legacy:

modprobe -rv virtio_pci

modprobe -v virtio_pci force_legacy=1

For hot-plugged functions, additional configuration must be applied during SNAP hotplug operation:

# snap_rpc.py emulation_device_attach mlx5_0 virtio_blk --transitional_device --bdev_type spdk --bdev BDEV

Live migration is a standard process supported by QEMU which allows system administrators to pass devices between virtual machines in a live running system. For more information, refer to QEMU VFIO device Migration documentation.

Live migration is supported for SNAP virtio-blk devices. It can be activated using a driver with proper support (e.g., NVIDIA's proprietary VDPA-based Live Migration Solution). For more info, refer to TBD.

If the physical function (PF) has been removed, for instance when using vDPA to provision a virtio-blk PF with the command:

python ./app/vfe-vdpa/vhostmgmt mgmtpf -a 0000:af:00.3

it is advisable to confirm that the controllers are present in SNAP, and restore them if needed, before attempting to re-add them using the command:

python dpdk-vhost-vfe/app/vfe-vdpa/vhostmgmt vf -v /tmp/sock-blk-0 -a 0000:59:04.5