Introduction

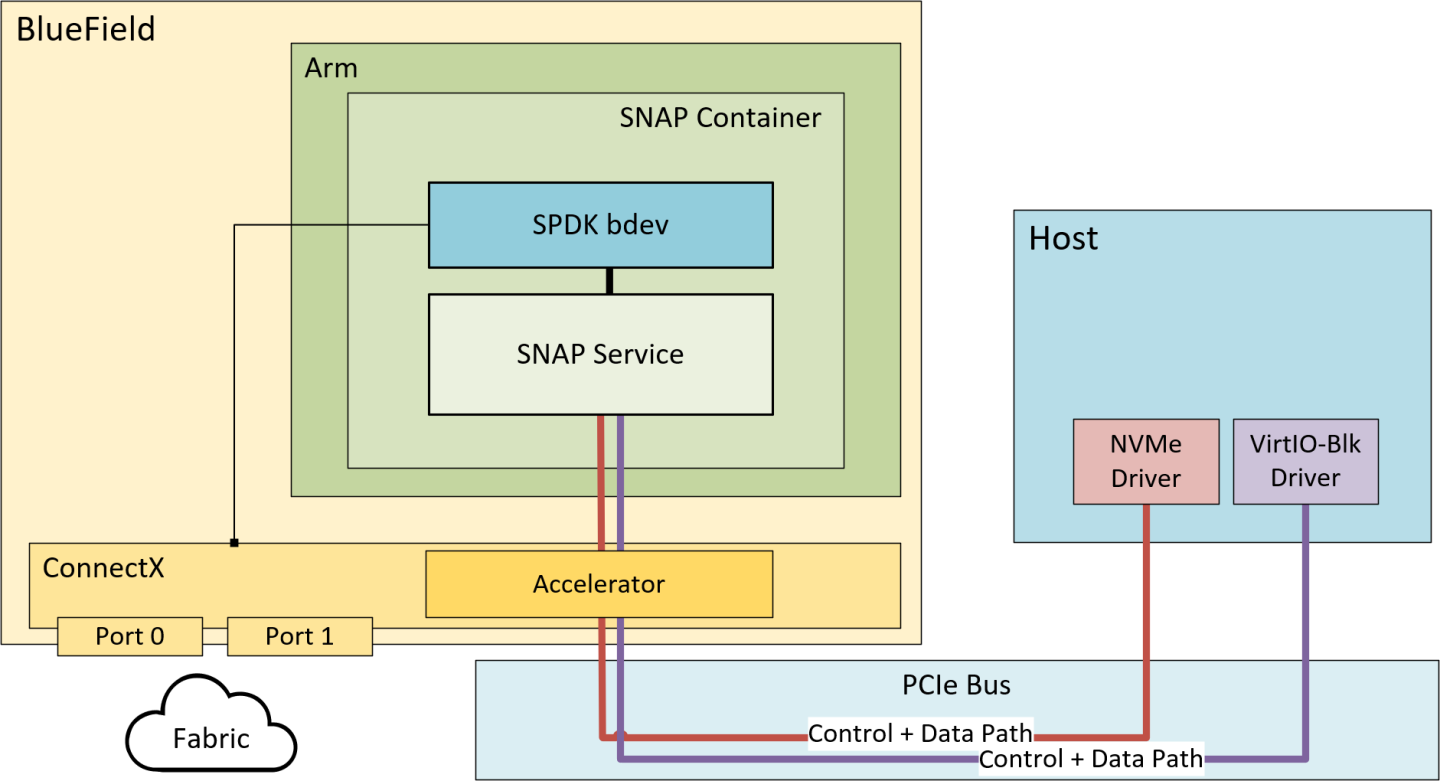

NVIDIA® BlueField® SNAP and virtio-blk SNAP (storage-defined network accelerated processing) technology enables hardware-accelerated virtualization of local storage. NVMe/virtio-blk SNAP presents networked storage as a local block-storage device (e.g., an SSD) by emulating a local drive on the PCIe bus. The host OS or hypervisor uses its standard storage driver, unaware that it is communicating with the NVMe/virtio-blk SNAP framework rather than with a physical drive. Any logic may be applied to the I/O requests or to the data via the NVMe/virtio-blk SNAP framework before the request and/or data is redirected over a fabric-based network to remote or local storage targets.

NVMe/virtio-blk SNAP is based on the NVIDIA® BlueField® DPU family technology and combines unique software-defined hardware-accelerated storage virtualization with the advanced networking and programmability capabilities of the DPU. NVMe/virtio-blk SNAP together with the BlueField DPU enable a world of applications addressing storage and networking efficiency and performance.

The traffic arriving from the host towards the emulated PCIe device is redirected to its matching storage controller opened on the mlnx_snap service.

The controller implements the device specification and may expose a backend device accordingly (in this use case, SPDK is used as the storage stack that exposes backend devices). When a command is received, the controller executes it.

Admin commands are mostly answered immediately, while I/O commands are redirected to the backend device for processing.

The request-handling pipeline is completely asynchronous, and the workload is distributed across all Arm cores allocated to the SPDK application to achieve the best performance.
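The split between admin and I/O commands described above can be pictured with a small sketch. This is only an illustration written in Python with `asyncio`; it is not SNAP or SPDK code, and the names `Controller`, `Backend`, `ADMIN_OPCODES`, and the dictionary-based command format are all hypothetical. It assumes admin commands are answered by the controller itself, while read and write commands are awaited on a backend device.

```python
import asyncio

# Illustrative opcode set; real NVMe/virtio-blk opcodes are numeric and differ.
ADMIN_OPCODES = {"identify", "get_features"}


class Backend:
    """Hypothetical stand-in for a backend block device."""

    def __init__(self, size):
        self.data = bytearray(size)

    async def read(self, offset, length):
        await asyncio.sleep(0)  # yield control, mimicking an asynchronous completion
        return bytes(self.data[offset:offset + length])

    async def write(self, offset, payload):
        await asyncio.sleep(0)
        self.data[offset:offset + len(payload)] = payload
        return len(payload)


class Controller:
    """Hypothetical controller: answers admin commands, forwards I/O."""

    def __init__(self, backend):
        self.backend = backend

    async def handle(self, cmd):
        op = cmd["op"]
        if op in ADMIN_OPCODES:
            # Admin commands are answered immediately by the controller.
            return {"op": op, "status": "ok", "handled_by": "controller"}
        if op == "read":
            data = await self.backend.read(cmd["offset"], cmd["length"])
            return {"op": op, "status": "ok", "handled_by": "backend", "data": data}
        if op == "write":
            written = await self.backend.write(cmd["offset"], cmd["data"])
            return {"op": op, "status": "ok", "handled_by": "backend", "written": written}
        return {"op": op, "status": "invalid_opcode", "handled_by": "controller"}


async def run(commands):
    """Handle a batch of commands concurrently on one controller."""
    ctrl = Controller(Backend(4096))
    return await asyncio.gather(*(ctrl.handle(c) for c in commands))
```

Calling `asyncio.run(run([{"op": "identify"}, {"op": "read", "offset": 0, "length": 4}]))` returns one result per command, in submission order. The sketch uses a single event loop; distributing work across several cores, as the text describes, is outside its scope.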

The following are key concepts for SNAP:

- Full flexibility in fabric/transport/protocol (e.g. NVMe-oF/iSCSI/other, RDMA/TCP, ETH/IB)

- NVMe and virtio-blk emulation support

- Programmability

- Easy data manipulation

- Allowing zero-copy DMA from the remote storage to the host

- Using Arm cores for data path

BlueField SNAP for NVIDIA® BlueField®-2 DPU is licensed software. Users must purchase a license per BlueField-2 DPU to use it.

NVIDIA® BlueField®-3 DPU does not have license requirements to run BlueField SNAP.

In the container approach, the SNAP container can be downloaded from NVIDIA NGC and easily deployed on the DPU.

The yaml file includes SNAP binaries aligned with the latest spdk.nvda version. In this case, the SNAP sources are not available, and it is not possible to modify SNAP to support different SPDK versions (SNAP as an SDK package should be used for that).

SNAP 4.x is not pre-installed on the BFB but can be downloaded manually on demand.

For instructions on how to install the SNAP container, please see "SNAP Container Deployment".

The SNAP development package (custom) is intended for those wishing to customize the SNAP service to their environment, usually to work with a proprietary bdev rather than with the spdk.nvda version. It gives users full access to the service code and the lib headers, which enables them to compile their changes.

SNAP Emulation Lib

This includes the protocol libraries and the interaction with the firmware/hardware (PRM) as well as:

- Plain shared objects (*.so)

- Static archives (*.a)

- pkgconfig definitions (*.pc)

- Include files (*.h)

SNAP Service Sources

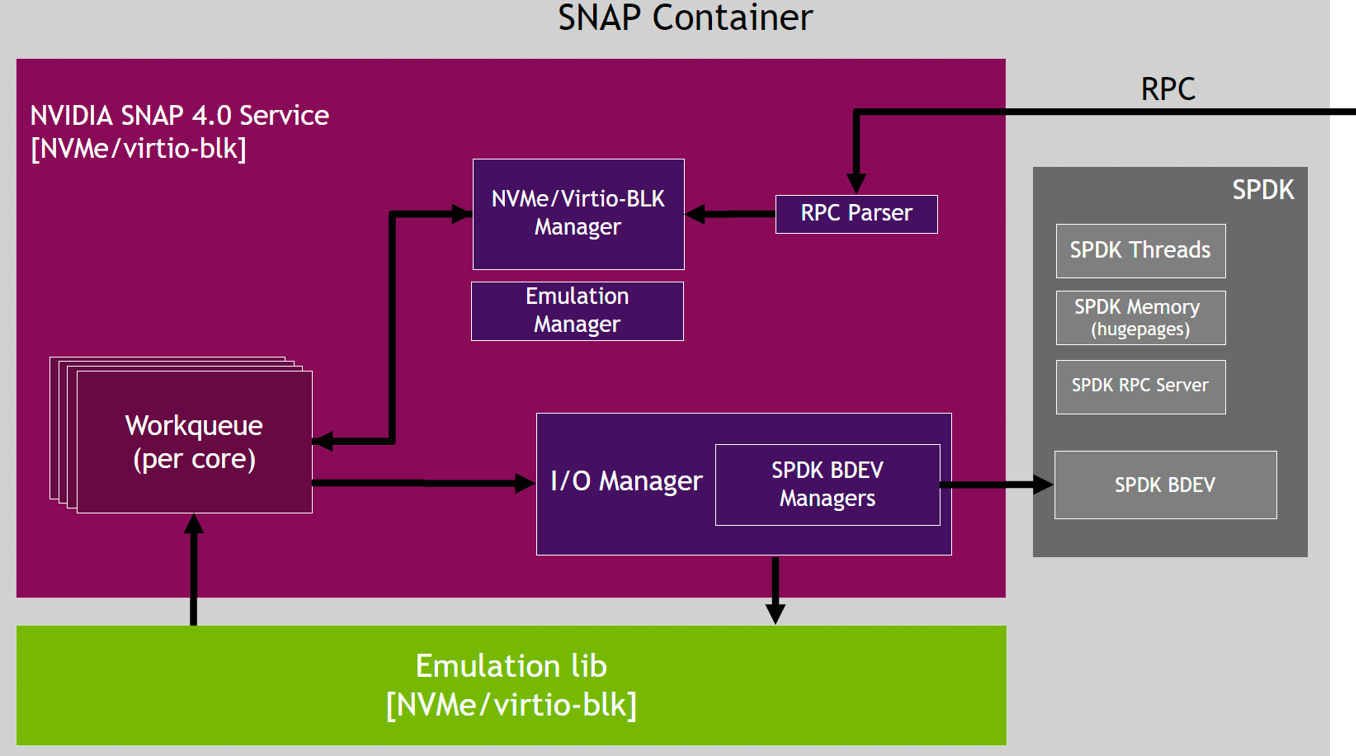

This includes the following managers:

Emulation device managers:

- Emulation manager – manages the device emulations, function discovery, and function events

- Hotplug manager – manages hotplug and hot-unplug of the device emulations

- Config manager – handles common configurations and RPCs (which are not protocol-specific)

Service infrastructure managers:

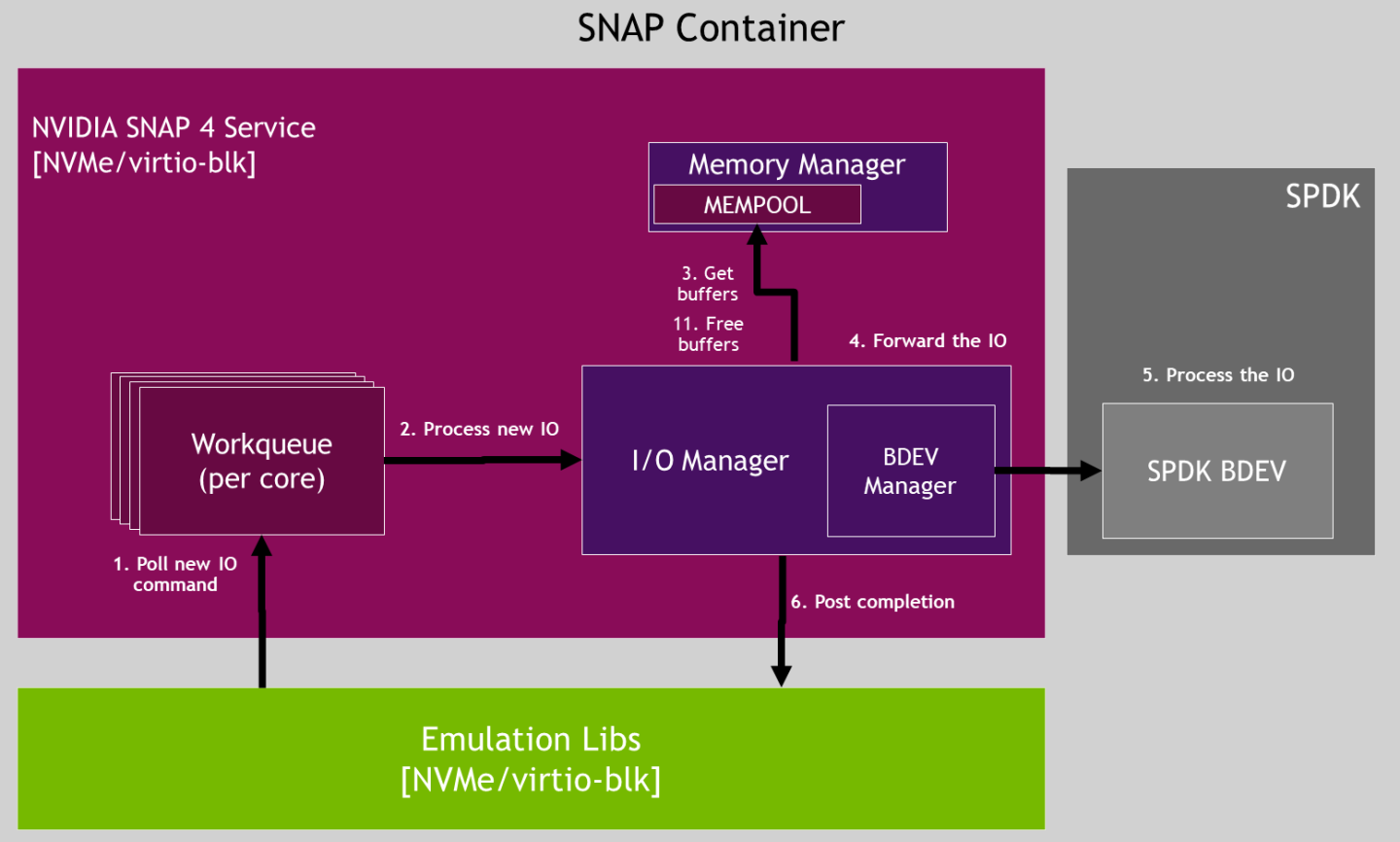

- Memory manager – handles the SNAP mempool which is used to copy into the Arm memory when zero-copy between the host and the remote target is not used

- Thread manager – handles the SPDK threads
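To make the role of the mempool concrete, the following is a minimal sketch of a fixed-size buffer pool used to stage data when zero-copy is not in use. It is an assumption-laden illustration in Python, not the SNAP memory manager: `BufferPool` and `staged_read` are hypothetical names, and plain byte copies stand in for the DMA and RDMA transfers a real deployment would use.

```python
class BufferPool:
    """Hypothetical fixed-size pool of preallocated staging buffers."""

    def __init__(self, count, size):
        self.size = size
        self._free = [bytearray(size) for _ in range(count)]

    @property
    def available(self):
        return len(self._free)

    def get(self):
        # Return a free buffer, or None when the pool is exhausted.
        return self._free.pop() if self._free else None

    def put(self, buf):
        if len(buf) != self.size:
            raise ValueError("buffer does not belong to this pool")
        self._free.append(buf)


def staged_read(pool, remote, host, length):
    """Copy `length` bytes from `remote` to `host` through a pooled buffer.

    Returns False when no staging buffer is free, so the caller can retry later.
    """
    if length > pool.size or length > len(remote) or length > len(host):
        raise ValueError("length exceeds buffer, source, or destination size")
    buf = pool.get()
    if buf is None:
        return False
    try:
        buf[:length] = remote[:length]  # remote target -> staging buffer
        host[:length] = buf[:length]    # staging buffer -> host memory
    finally:
        pool.put(buf)                   # always return the buffer to the pool
    return True
```

In the zero-copy case the intermediate buffer is skipped entirely, which is why the pool only matters for the non-zero-copy path.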

Protocol specific control path managers:

- NVMe manager – handles the NVMe subsystem, NVMe controller, and namespace functionalities

- VBLK manager – handles the virtio-blk controller functionalities

IO manager:

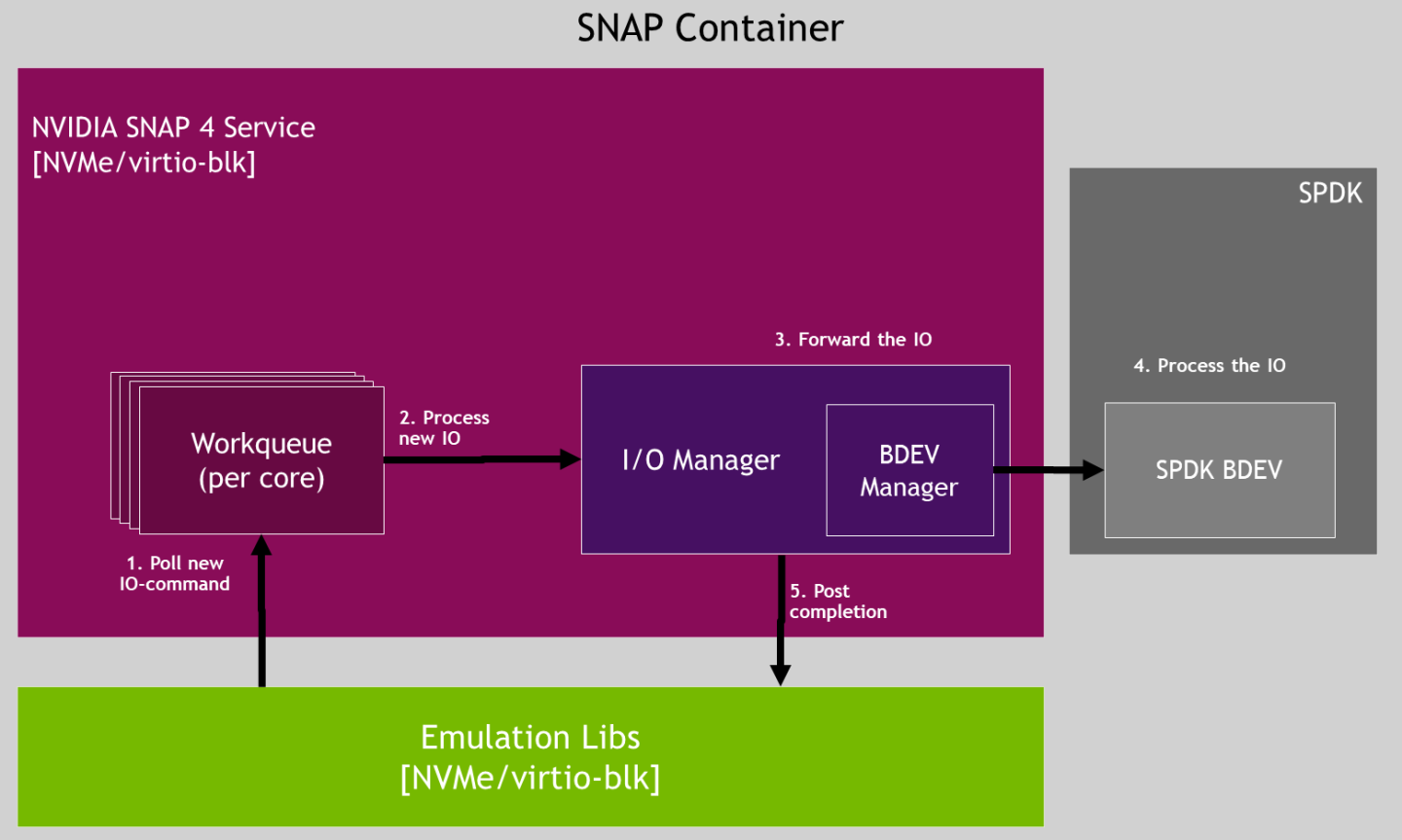

- Implements the IO path for regular and optimized flows (RDMA ZC and TCP XLIO ZC)

- Handles the bdev creation and functionalities

SNAP Service Dependencies

SNAP service depends on the following libraries:

- SPDK – depends on the bdev and the SPDK resources, such as SPDK threads, SPDK memory, and SPDK RPC service

- XLIO (for NVMe/TCP acceleration)
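As background on the SPDK RPC service mentioned above: upstream SPDK exposes its RPCs as JSON-RPC 2.0 requests over a Unix domain socket. The sketch below shows how such a request could be built and sent from Python. The helper names `build_request` and `call` are hypothetical, and the socket path used by a given SNAP deployment is an assumption to verify locally (upstream SPDK defaults to `/var/tmp/spdk.sock`).

```python
import json
import socket


def build_request(method, params=None, req_id=1):
    """Encode a JSON-RPC 2.0 request as bytes."""
    req = {"jsonrpc": "2.0", "method": method, "id": req_id}
    if params is not None:
        req["params"] = params
    return json.dumps(req).encode()


def call(sock_path, method, params=None, timeout=5.0):
    """Send one request over a Unix domain socket and return the decoded reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        s.connect(sock_path)
        s.sendall(build_request(method, params))
        buf = b""
        while True:
            chunk = s.recv(4096)
            if not chunk:
                raise ConnectionError("socket closed before a full reply arrived")
            buf += chunk
            try:
                return json.loads(buf)
            except ValueError:
                continue  # reply is still incomplete; keep reading
```

For example, `call("/var/tmp/spdk.sock", "bdev_get_bdevs")` would ask an SPDK application listening on that path to list its bdevs; `bdev_get_bdevs` is an upstream SPDK method, while the path here is only the upstream default.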

SNAP Service Flows

IO Flows

Example of RDMA zero-copy read/write IO flow:

Example of RDMA non-zero-copy read IO flow: