SNAP Deployment

This section describes how to deploy SNAP as a container.

SNAP does not come pre-installed with the BFB.

To install NVIDIA® BlueField®-3 BFB:

[host] sudo bfb-install --rshim <rshimN> --bfb <image_path.bfb>

For more information, please refer to section "Installing Full DOCA Image on DPU" in the NVIDIA DOCA Installation Guide for Linux.

[dpu] sudo /opt/mellanox/mlnx-fw-updater/mlnx_fw_updater.pl --force-fw-update

For more information, please refer to section "Upgrading Firmware" in the NVIDIA DOCA Installation Guide for Linux.

Firmware configuration may expose new emulated PCI functions, which can later be used by the host's OS. The user must therefore make sure that all exposed PCI functions (static/hotplug PFs, VFs) are backed by a supporting SNAP software configuration. Otherwise, these functions will malfunction and host behavior will be undefined.

Clear the firmware config before implementing the required configuration:

[dpu] mst start
[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 reset

Review the firmware configuration:

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 query

Output example:

mlxconfig -d /dev/mst/mt41692_pciconf0 -e query | grep NVME
Configurations:                          Default     Current     Next Boot
*   NVME_EMULATION_ENABLE                False(0)    True(1)     True(1)
*   NVME_EMULATION_NUM_VF                0           125         125
*   NVME_EMULATION_NUM_PF                1           2           2
    NVME_EMULATION_VENDOR_ID             5555        5555        5555
    NVME_EMULATION_DEVICE_ID             24577       24577       24577
    NVME_EMULATION_CLASS_CODE            67586       67586       67586
    NVME_EMULATION_REVISION_ID           0           0           0
    NVME_EMULATION_SUBSYSTEM_VENDOR_ID   0           0           0

Where the output provides 5 columns:

Non-default configuration marker (*)

Firmware configuration name

Default firmware value

Current firmware value

Firmware value after reboot – shows a configuration update which is pending system reboot
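The Current and Next Boot columns make it possible to spot settings that are still waiting for a power cycle. The following is a minimal sketch, assuming the query output keeps the whitespace-separated layout of the example above. pending_changes is a hypothetical helper name and is not part of mlxconfig.

```shell
# pending_changes: read `mlxconfig ... -e query` style output on stdin and
# print every parameter whose Next Boot value differs from its Current value.
# Assumption: each data row is "[*] NAME DEFAULT CURRENT NEXT_BOOT".
pending_changes() {
    awk '
        # Strip the optional non-default marker so the columns line up.
        { sub(/^[ \t]*\*[ \t]*/, "") }
        # Keep only data rows (4 fields, upper-case parameter name).
        NF == 4 && $1 ~ /^[A-Z0-9_]+$/ && $3 != $4 {
            print $1 ": " $3 " -> " $4
        }
    '
}

# Possible usage on the DPU:
#   mlxconfig -d /dev/mst/mt41692_pciconf0 -e query | pending_changes
```

An empty result suggests that no change is pending a power cycle.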

To enable storage emulation options, the DPU must first be set to work in internal CPU model:

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s INTERNAL_CPU_MODEL=1

To enable the firmware config with virtio-blk emulation PF:

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s VIRTIO_BLK_EMULATION_ENABLE=1 VIRTIO_BLK_EMULATION_NUM_PF=1

To enable the firmware config with NVMe emulation PF:

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s NVME_EMULATION_ENABLE=1 NVME_EMULATION_NUM_PF=1

For a complete list of the SNAP firmware configuration options, refer to "Appendix – DPU Firmware Configuration".

Power cycle is required to apply firmware configuration changes.

RDMA/RoCE Firmware Configuration

RoCE communication is blocked for the BlueField OS's default interfaces (named ECPFs, typically mlx5_0 and mlx5_1). If RoCE traffic is required, additional network functions that support RoCE transport, called scalable functions (SFs), must be added.

To enable RDMA/RoCE:

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s PER_PF_NUM_SF=1

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s PF_SF_BAR_SIZE=8 PF_TOTAL_SF=2

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0.1 s PF_SF_BAR_SIZE=8 PF_TOTAL_SF=2

This is not required when working over TCP or RDMA over InfiniBand.

SR-IOV Firmware Configuration

SNAP supports up to 512 total VFs on NVMe and up to 1000 total VFs on virtio-blk. The VFs may be spread between up to 4 virtio-blk PFs or 2 NVMe PFs.

Common example:

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s SRIOV_EN=1 PER_PF_NUM_SF=1 LINK_TYPE_P1=2 LINK_TYPE_P2=2 PF_TOTAL_SF=1 PF_SF_BAR_SIZE=8 TX_SCHEDULER_BURST=15

Note: When using an OS with a 64KB page size, PF_SF_BAR_SIZE=10 should be configured (instead of 8).

Virtio-blk 250 VFs example (1 queue per VF):

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s VIRTIO_BLK_EMULATION_ENABLE=1 VIRTIO_BLK_EMULATION_NUM_VF=125 VIRTIO_BLK_EMULATION_NUM_PF=2 VIRTIO_BLK_EMULATION_NUM_MSIX=2

Virtio-blk 1000 VFs example (1 queue per VF):

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s VIRTIO_BLK_EMULATION_ENABLE=1 VIRTIO_BLK_EMULATION_NUM_VF=250 VIRTIO_BLK_EMULATION_NUM_PF=4 VIRTIO_BLK_EMULATION_NUM_MSIX=2 VIRTIO_NET_EMULATION_ENABLE=0 NUM_OF_VFS=0 PCI_SWITCH_EMULATION_ENABLE=0

NVMe 250 VFs example (1 IO-queue per VF):

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s NVME_EMULATION_ENABLE=1 NVME_EMULATION_NUM_VF=125 NVME_EMULATION_NUM_PF=2 NVME_EMULATION_NUM_MSIX=2
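The examples above imply that the *_NUM_VF value is applied per PF (e.g., 125 VFs x 2 PFs = 250 VFs). Under that assumption, a planned layout can be checked against the totals quoted in this section before writing it to firmware. check_vf_layout is a hypothetical helper written for illustration only.

```shell
# check_vf_layout <nvme|virtio-blk> <num_pf> <num_vf_per_pf>
# Prints the total VF count, or fails if the layout exceeds the limits
# quoted in this section (512 VFs / 2 PFs for NVMe, 1000 VFs / 4 PFs for virtio-blk).
check_vf_layout() {
    case "$1" in
        nvme)       max_pf=2; max_vf=512 ;;
        virtio-blk) max_pf=4; max_vf=1000 ;;
        *) echo "unknown protocol: $1" >&2; return 2 ;;
    esac
    total=$(( $2 * $3 ))
    if [ "$2" -gt "$max_pf" ] || [ "$total" -gt "$max_vf" ]; then
        echo "$1: $2 PFs x $3 VFs = $total VFs exceeds the limit ($max_pf PFs, $max_vf VFs)" >&2
        return 1
    fi
    echo "$total"
}

# Possible usage:
#   check_vf_layout virtio-blk 4 250
#   check_vf_layout nvme 2 125
```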

Hot-plug Firmware Configuration

Once PCIe switch emulation is enabled, BlueField can support up to 14 hot-plugged NVMe/virtio-blk functions. These slots are shared among all DPU users and applications and may hold hot-plugged devices of type NVMe, virtio-blk, virtio-fs, or others (e.g., virtio-net).

To enable PCIe switch emulation and determine the number of hot-plugged ports to be used:

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s PCI_SWITCH_EMULATION_ENABLE=1 PCI_SWITCH_EMULATION_NUM_PORT=16

PCI_SWITCH_EMULATION_NUM_PORT equals 2 + the number of hot-plugged PCIe functions.
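As a small worked example of this rule, the port count can be derived from the number of hot-plugged functions that are needed. switch_ports is a hypothetical helper. The upper bound of 14 is taken from the limit stated in this section.

```shell
# switch_ports <hotplug_functions>
# Prints the value to use for PCI_SWITCH_EMULATION_NUM_PORT.
switch_ports() {
    if [ "$1" -lt 0 ] || [ "$1" -gt 14 ]; then
        echo "hot-plugged functions must be between 0 and 14" >&2
        return 1
    fi
    echo $(( $1 + 2 ))
}

# Possible usage:
#   switch_ports 14    # the value used in the command above (16)
```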

For additional information regarding hot plugging a device, refer to section "Hotplugged PCIe Functions Management".

Hotplug is not guaranteed to work on AMD machines.

Enabling PCI_SWITCH_EMULATION_ENABLE could potentially impact SR-IOV capabilities on Intel and AMD machines.

Currently, hotplug PFs do not support SR-IOV.

UEFI Firmware Configuration

To use the storage emulation as a boot device, it is recommended that the UEFI use the DPU's embedded UEFI expansion ROM drivers instead of the original vendor's BIOS drivers.

To enable UEFI drivers:

[dpu] mlxconfig -d /dev/mst/mt41692_pciconf0 s EXP_ROM_VIRTIO_BLK_UEFI_x86_ENABLE=1 EXP_ROM_NVME_UEFI_x86_ENABLE=1

Modifying SF Trust Level to Enable Encryption

To allow the mlx5_2 and mlx5_3 SFs to support encryption, it is necessary to designate them as trusted:

Configure the trust level by editing /etc/mellanox/mlnx-sf.conf, adding the command /usr/bin/mlxreg:

/usr/bin/mlxreg -d 03:00.0 --reg_name VHCA_TRUST_LEVEL --yes --indexes "vhca_id=0x0,all_vhca=0x1" --set "trust_level=0x1"
/usr/bin/mlxreg -d 03:00.1 --reg_name VHCA_TRUST_LEVEL --yes --indexes "vhca_id=0x0,all_vhca=0x1" --set "trust_level=0x1"
/sbin/mlnx-sf --action create --device 0000:03:00.0 --sfnum 0 --hwaddr 02:11:3c:13:ad:82
/sbin/mlnx-sf --action create --device 0000:03:00.1 --sfnum 0 --hwaddr 02:76:78:b9:6f:52

Reboot the DPU to apply changes.

Setting Device IP and MTU

To configure the MTU, restrict the external host port ownership:

[dpu] # mlxprivhost -d /dev/mst/mt41692_pciconf0 r --disable_port_owner

List the DPU device’s functions and IP addresses:

[dpu] # ip -br a

Set the IP on the SF function of the relevant port and the MTU:

[dpu] # ip addr add 1.1.1.1/24 dev enp3s0f0s0

[dpu] # ip addr add 1.1.1.2/24 dev enp3s0f1s0

[dpu] # ip link set dev enp3s0f0s0 up

[dpu] # ip link set dev enp3s0f1s0 up

[dpu] # sudo ip link set p0 mtu 9000

[dpu] # sudo ip link set p1 mtu 9000

[dpu] # sudo ip link set enp3s0f0s0 mtu 9000

[dpu] # sudo ip link set enp3s0f1s0 mtu 9000

[dpu] # ovs-vsctl set int en3f0pf0sf0 mtu_request=9000

[dpu] # ovs-vsctl set int en3f1pf1sf0 mtu_request=9000

After reboot, IP and MTU configurations of devices will be lost. To configure persistent network interfaces, refer to appendix "Configure Persistent Network Interfaces".

SNAP NVMe/TCP XLIO does not support dynamically changing IP during deployment.

System Configurations

Configure the system's network buffers:

Append the following lines to the end of the /etc/sysctl.conf file:

net.core.rmem_max = 16777216
net.ipv4.tcp_rmem = 4096 16777216 16777216
net.core.wmem_max = 16777216

Run the following:

[dpu] sysctl --system
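When these steps are scripted, appending to /etc/sysctl.conf on every run leaves duplicate entries. The following is a minimal sketch of an idempotent append. append_once is a hypothetical helper name, and the usage lines assume root privileges on the DPU.

```shell
# append_once <file> <line>
# Appends <line> to <file> only if an identical line is not already present.
append_once() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Possible usage (as root on the DPU):
#   append_once /etc/sysctl.conf "net.core.rmem_max = 16777216"
#   append_once /etc/sysctl.conf "net.ipv4.tcp_rmem = 4096 16777216 16777216"
#   append_once /etc/sysctl.conf "net.core.wmem_max = 16777216"
#   sysctl --system
```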

DPA Core Mask

The data path accelerator (DPA) is a cluster of 16 cores with 16 execution units (EUs) per core.

Only EUs 0-170 are available for SNAP.

SNAP supports reservation of DPA EUs for NVMe or virtio-blk controllers. By default, all available EUs, 0-170, are shared between NVMe, virtio-blk, and other DPA applications on the system (e.g., virtio-net).

To assign a specific set of EUs, set the following environment variables:

For NVMe:

dpa_nvme_core_mask=0x<EU_mask>

For virtio-blk:

dpa_virtq_split_core_mask=0x<EU_mask>

The core mask must contain valid hexadecimal digits (it is parsed right to left, so the least significant bit corresponds to EU 0). For example, dpa_virtq_split_core_mask=0xff00 sets 8 EUs (i.e., EUs 8-15).
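Because bit N of the mask selects EU N, a mask for a contiguous range of EUs can be computed rather than written by hand. The following is a minimal sketch. eu_mask is a hypothetical helper, and it is limited to EUs 0-62 because shell arithmetic is 64-bit. Masks that reach higher EUs would need to be assembled differently.

```shell
# eu_mask <first_eu> <last_eu>
# Prints a hexadecimal mask with one bit set for each EU in the range.
eu_mask() {
    first=$1
    last=$2
    if [ "$first" -lt 0 ] || [ "$last" -lt "$first" ] || [ "$last" -gt 62 ]; then
        echo "usage: eu_mask <first_eu> <last_eu> (0 <= first <= last <= 62)" >&2
        return 1
    fi
    mask=0
    eu=$first
    while [ "$eu" -le "$last" ]; do
        mask=$(( mask | (1 << eu) ))   # set the bit for this EU
        eu=$(( eu + 1 ))
    done
    printf '0x%x\n' "$mask"
}

# Possible usage:
#   dpa_virtq_split_core_mask=$(eu_mask 8 15)
```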

There is a hardware limit of 128 queues (threads) per DPA EU.

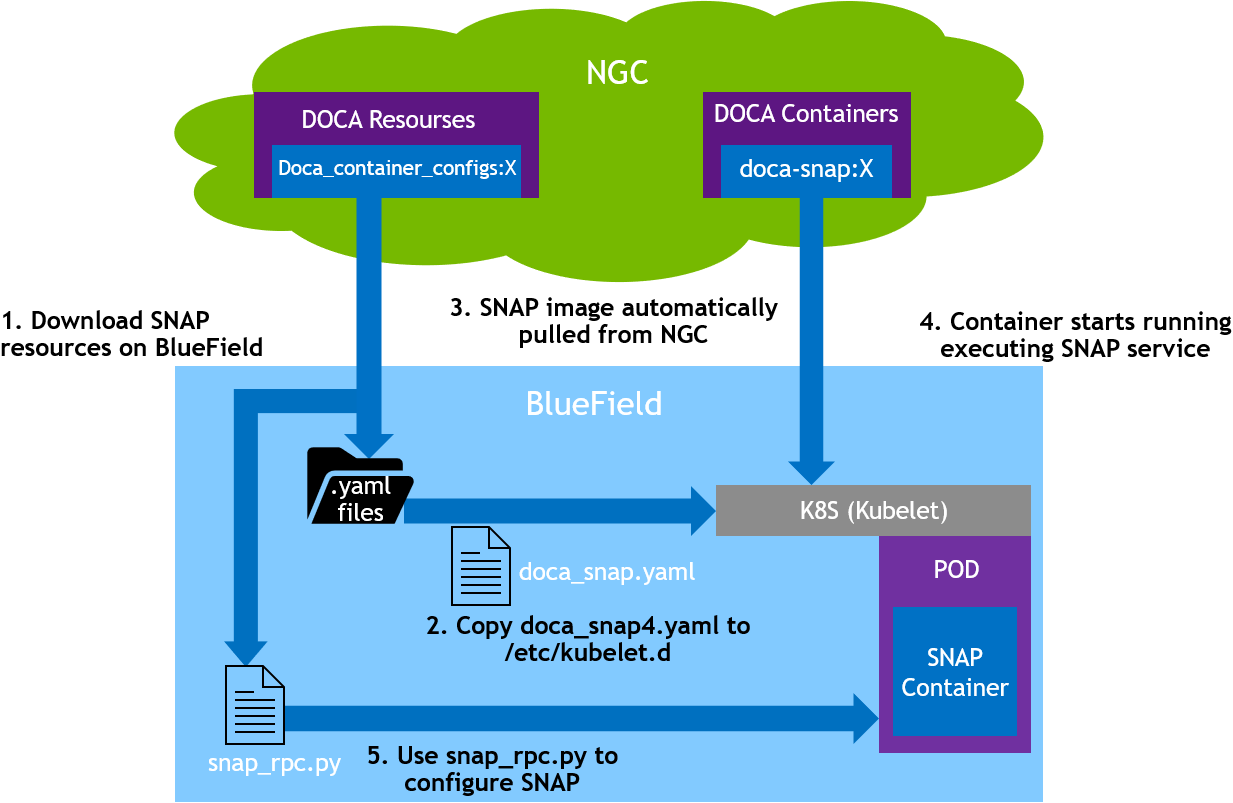

The SNAP container is available on the DOCA SNAP NVIDIA NGC catalog page.

SNAP container deployment on top of the BlueField DPU requires the following sequence:

Setup preparation and SNAP resource download for container deployment. See section "Preparation Steps" for details.

Adjust the doca_snap.yaml for advanced configuration if needed according to section "Adjusting YAML Configuration".

Deploy the container. The image is automatically pulled from NGC. See section "Spawning SNAP Container" for details.

The following is an example of the SNAP container setup.

Preparation Steps

Step 1: Allocate Hugepages

Allocate 2GiB hugepages for the SNAP container according to the DPU OS's Hugepagesize value:

Query the Hugepagesize value:

[dpu] grep Hugepagesize /proc/meminfo

In Ubuntu, the value should be 2048KB. In CentOS 8.x, the value should be 524288KB.

Append the following line to the end of the /etc/sysctl.conf file:

For Ubuntu or CentOS 7.x setups (i.e., Hugepagesize = 2048 kB):

vm.nr_hugepages = 1024

For CentOS 8.x setups (i.e., Hugepagesize = 524288 kB):

vm.nr_hugepages = 4

Run the following:

[dpu] sysctl --system
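The vm.nr_hugepages values above follow from dividing the desired allocation by the hugepage size (2GiB / 2048kB = 1024 pages, 2GiB / 524288kB = 4 pages). A minimal sketch of that arithmetic follows. nr_hugepages is a hypothetical helper name.

```shell
# nr_hugepages <total_GiB> <Hugepagesize_kB>
# Prints the vm.nr_hugepages value needed to reserve <total_GiB> of hugepages.
nr_hugepages() {
    echo $(( $1 * 1024 * 1024 / $2 ))
}

# Possible usage on the DPU, reading the page size from /proc/meminfo:
#   size_kb=$(awk '/^Hugepagesize/ {print $2}' /proc/meminfo)
#   nr_hugepages 2 "$size_kb"
```

The same helper covers larger allocations, for example doubling the total to 4GiB.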

If live upgrade is utilized in this deployment, it is necessary to allocate twice the amount of resources listed above for the upgraded container.

If other applications are running concurrently within the setup and are consuming hugepages, make sure to allocate additional hugepages beyond the amount described in this section for those applications.

When deploying SNAP with a high scale of connections (i.e., 500 or more disks), the default allocation of hugepages (2GB) becomes insufficient. This shortage of hugepages can be identified through error messages in the SNAP and SPDK layers. These error messages typically indicate failures in creating or modifying QPs or other objects.

Step 2: Create nvda_snap Folder

The folder /etc/nvda_snap is used by the container for automatic configuration after deployment.
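A minimal sketch of creating the folder follows. ensure_snap_dir is a hypothetical helper. It defaults to the path named above, and creating a folder under /etc typically requires root privileges.

```shell
# ensure_snap_dir [path]
# Creates the configuration folder if it does not exist yet.
ensure_snap_dir() {
    dir=${1:-/etc/nvda_snap}
    mkdir -p "$dir"
}

# Possible usage (as root on the DPU):
#   ensure_snap_dir
```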

Downloading YAML Configuration

The .yaml file configuration for the SNAP container is doca_snap.yaml. The download command of the .yaml file can be found on the DOCA SNAP NGC page.

Internet connectivity is necessary for downloading SNAP resources. To deploy the container on DPUs without Internet connectivity, refer to appendix "Appendix – Deploying Container on Setups Without Internet Connectivity".

Adjusting YAML Configuration

The .yaml file can easily be edited for advanced configuration.

The SNAP .yaml file is configured by default to support Ubuntu setups (i.e., Hugepagesize = 2048 kB) by using hugepages-2Mi.

To support other setups, edit the hugepages section according to the DPU OS's relevant Hugepagesize value. For example, to support CentOS 8.x configure Hugepagesize to 512MB:

limits:
  hugepages-512Mi: "<number-of-hugepages>Gi"

Note: When deploying SNAP with a large number of controllers (500 or more), the default allocation of hugepages (2GB) becomes insufficient. This shortage of hugepages can be identified through error messages, which typically indicate failures in creating or modifying QPs or other objects. In these cases, more hugepages are needed.

The following example edits the .yaml file to allow the SNAP container up to 16 CPU cores and 4Gi of memory, 2Gi of which are hugepages:

resources:
  requests:
    memory: "2Gi"
    cpu: "8"
  limits:
    hugepages-2Mi: "2Gi"
    memory: "4Gi"
    cpu: "16"
env:
  - name: APP_ARGS
    value: "-m 0xffff"

Note: If all BlueField-3 cores are requested, the user must verify no other containers are in conflict over the CPU resources.

To automatically configure SNAP container upon deployment:

Add spdk_rpc_init.conf file under /etc/nvda_snap/. File example:

bdev_malloc_create 64 512

Add snap_rpc_init.conf file under /etc/nvda_snap/.

Virtio-blk file example:

virtio_blk_controller_create --pf_id 0 --bdev Malloc0

NVMe file example:

nvme_subsystem_create --nqn nqn.2022-10.io.nvda.nvme:0
nvme_namespace_create -b Malloc0 -n 1 --nqn nqn.2022-10.io.nvda.nvme:0 --uuid 16dab065-ddc9-8a7a-108e-9a489254a839
nvme_controller_create --nqn nqn.2022-10.io.nvda.nvme:0 --ctrl NVMeCtrl1 --pf_id 0 --suspended
nvme_controller_attach_ns -c NVMeCtrl1 -n 1
nvme_controller_resume -c NVMeCtrl1

Edit the .yaml file accordingly (uncomment):

env:
  - name: SPDK_RPC_INIT_CONF
    value: "/etc/nvda_snap/spdk_rpc_init.conf"
  - name: SNAP_RPC_INIT_CONF
    value: "/etc/nvda_snap/snap_rpc_init.conf"

Note: It is the user's responsibility to make sure the SNAP configuration matches the firmware configuration. That is, an emulated controller must be opened on all existing (static/hotplug) emulated PCIe functions (either through automatic or manual configuration). A PCIe function without a supporting controller is considered malfunctioning, and host behavior with it is anomalous.

Spawning SNAP Container

Run the Kubernetes tool:

[dpu] systemctl restart containerd

[dpu] systemctl restart kubelet

[dpu] systemctl enable kubelet

[dpu] systemctl enable containerd

Copy the updated doca_snap.yaml file to the /etc/kubelet.d directory.

Kubelet automatically pulls the container image described in the YAML file from NGC and spawns a pod executing the container.

cp doca_snap.yaml /etc/kubelet.d/

The SNAP service starts initialization immediately, which may take a few seconds. To verify SNAP is running:

Look for the message "SNAP Service running successfully" in the log

Send spdk_rpc.py spdk_get_version to confirm whether SNAP is operational or still initializing
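The log check can be scripted by searching the container log for the message quoted above. The following is a minimal sketch. snap_is_ready is a hypothetical helper that reads log text on stdin, so it can be fed from crictl logs or from a log file.

```shell
# snap_is_ready: succeeds if the log text on stdin contains the
# SNAP start-up message quoted in this section.
snap_is_ready() {
    grep -q "SNAP Service running successfully"
}

# Possible usage on the DPU:
#   crictl logs <container_id> 2>&1 | snap_is_ready && echo "SNAP is up"
```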

Debug and Log

View currently active pods, and their IDs (it might take up to 20 seconds for the pod to start):

crictl pods

Example output:

POD ID CREATED STATE NAME

0379ac2c4f34c About a minute ago Ready snap

View currently active containers, and their IDs:

crictl ps

View all existing containers (including exited ones) and their IDs:

crictl ps -a

Examine the logs of a given container (SNAP logs):

crictl logs <container_id>

Examine the kubelet logs if something does not work as expected:

journalctl -u kubelet

The container log file is saved automatically by Kubelet under /var/log/containers.

Refer to section "RPC Log History" for more logging information.

Stop, Start, Restart SNAP Container

SNAP binaries are deployed within a Docker container as SNAP service, which is managed as a supervisorctl service. Supervisorctl provides a layer of control and configuration for various deployment options.

In the event of a SNAP crash or restart, supervisorctl detects the action and waits for the exited process to release its resources. It then deploys a new SNAP process within the same container, which initiates a recovery flow to replace the terminated process.

In the event of a container crash or restart, kubelet detects the action and waits for the exited container to release its resources. It then deploys a new container with a new SNAP process, which initiates a recovery flow to replace the terminated process.

After containers crash or exit, the kubelet restarts them with an exponential back-off delay (10s, 20s, 40s, etc.) which is capped at five minutes. Once a container has run for 10 minutes without an issue, the kubelet resets the restart back-off timer for that container. Restarting the SNAP service without restarting the container helps avoid the occurrence of back-off delays.
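To illustrate the back-off schedule described above, the following sketch computes the delay before the Nth consecutive restart, assuming a 10-second base that doubles each time and is capped at 300 seconds (five minutes). backoff_delay is a hypothetical helper and does not query the kubelet.

```shell
# backoff_delay <restart_number>   (restart_number starts at 1)
# Prints the delay in seconds before that restart.
backoff_delay() {
    delay=10
    i=1
    # Double the delay once per previous restart until the cap is reached.
    while [ "$i" -lt "$1" ] && [ "$delay" -lt 300 ]; do
        delay=$(( delay * 2 ))
        i=$(( i + 1 ))
    done
    if [ "$delay" -gt 300 ]; then
        delay=300
    fi
    echo "$delay"
}

# Possible usage:
#   backoff_delay 3
```

Under these assumptions the cap is reached at the sixth consecutive restart, which is why restarting only the SNAP service is preferable when frequent restarts are expected.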

Different SNAP termination options

Container termination:

To kill the container, remove the .yaml file from /etc/kubelet.d/. To start the container again, copy the .yaml file back to the same path:

cp doca_snap.yaml /etc/kubelet.d/

To restart the container (with SIGTERM) using the crictl tool, use the -t (timeout) option:

crictl stop -t 10 <container-id>

SNAP process termination:

To restart the SNAP service without restarting the container, kill the SNAP service process on the DPU. Different signals can be used to choose different termination options. For example:

pkill -9 -f snap

System Preparation

Allocate 2GiB hugepages for the SNAP container according to the DPU OS's Hugepagesize value:

Query the Hugepagesize value:

[dpu] grep Hugepagesize /proc/meminfo

In Ubuntu, the value should be 2048KB. In CentOS 8.x, the value should be 524288KB.

Append the following line to the end of the /etc/sysctl.conf file:

For Ubuntu or CentOS 7.x setups (i.e., Hugepagesize = 2048 kB):

vm.nr_hugepages = 1024

For CentOS 8.x setups (i.e., Hugepagesize = 524288 kB):

vm.nr_hugepages = 4

Run the following:

[dpu] sysctl --system

If live upgrade is utilized in this deployment, it is necessary to allocate twice the amount of resources listed above for the upgraded container.

If other applications are running concurrently within the setup and are consuming hugepages, make sure to allocate additional hugepages beyond the amount described in this section for those applications.

When deploying SNAP with a high scale of connections (i.e., 500 or more disks), the default allocation of hugepages (2GB) becomes insufficient. This shortage of hugepages can be identified through error messages in the SNAP and SPDK layers. These error messages typically indicate failures in creating or modifying QPs or other objects.

Installing SNAP Source Package

Install the package:

For Ubuntu, run:

dpkg -i snap-sources_<version>_arm64.*

For CentOS, run:

rpm -i snap-sources_<version>_arm64.*

Build, Compile, and Install Sources

To build SNAP with a custom SPDK, see section "Replace the BFB SPDK".

Move to the sources folder. Run:

cd /opt/nvidia/nvda_snap/src/

Build the sources. Run:

meson setup /tmp/build

Compile the sources. Run:

meson compile -C /tmp/build

Install the sources. Run:

meson install -C /tmp/build

Configure SNAP Environment Variables

To configure the environment variables of SNAP, run:

source /opt/nvidia/nvda_snap/src/scripts/set_environment_variables.sh

Run SNAP Service

/opt/nvidia/nvda_snap/bin/snap_service

Replace the BFB SPDK (Optional)

Start with installing SPDK.

For legacy SPDK versions (e.g., SPDK 19.04) see the Appendix – Install Legacy SPDK.

To build SNAP with a custom SPDK, instead of following the basic build steps, perform the following:

Move to the sources folder. Run:

cd /opt/nvidia/nvda_snap/src/

Build the sources with spdk-compat enabled and provide the path to the custom SPDK. Run:

meson setup /tmp/build -Denable-spdk-compat=true -Dsnap_spdk_prefix=</path/to/custom/spdk>

Compile the sources. Run:

meson compile -C /tmp/build

Install the sources. Run:

meson install -C /tmp/build

Configure the SNAP environment variables and run the SNAP service as explained in sections "Configure SNAP Environment Variables" and "Run SNAP Service".

Build with Debug Prints Enabled (Optional)

Instead of the basic build steps, perform the following:

Move to the sources folder. Run:

cd /opt/nvidia/nvda_snap/src/

Build the sources with buildtype=debug. Run:

meson setup --buildtype=debug /tmp/build

Compile the sources. Run:

meson compile -C /tmp/build

Install the sources. Run:

meson install -C /tmp/build

Configure the SNAP environment variables and run the SNAP service as explained in sections "Configure SNAP Environment Variables" and "Run SNAP Service".

Automate SNAP Configuration (Optional)

The script run_snap.sh automates SNAP deployment. Users must modify the following files to align with their setup. If different directories are utilized by the user, edits must be made to run_snap.sh accordingly:

Edit SNAP env variables in:

/opt/nvidia/nvda_snap/bin/set_environment_variables.sh

Edit SPDK initialization RPCs calls:

/opt/nvidia/nvda_snap/bin/spdk_rpc_init.conf

Edit SNAP initialization RPCs calls:

/opt/nvidia/nvda_snap/bin/snap_rpc_init.conf

Run the script:

/opt/nvidia/nvda_snap/bin/run_snap.sh