RDG for VMware vSAN ESA over NVIDIA RoCE on VMware vSphere 8.0

Created on Jan 12, 2022 by Boris Kovalev


Scope

This document describes how to configure VMware vSAN Express Storage Architecture (ESA) over NVIDIA® RDMA over Converged Ethernet (RoCE) in VMware vSphere 8.0, using an NVIDIA® end-to-end 100 Gb/s Ethernet solution.

Abbreviations and Acronyms

Term | Definition | Term | Definition |

DAC | Direct Attached Cable | NVMe | Non-Volatile Memory Express |

DHCP | Dynamic Host Configuration Protocol | NVMe-oF | Non-Volatile Memory Express over Fabrics |

ESA | Express Storage Architecture | OSA | Original Storage Architecture |

HCI | Hyperconverged infrastructure | PVRDMA | Paravirtual Remote Direct Memory Access |

HDD | Hard (magnetic) Disk Drive | RDMA | Remote Direct Memory Access |

iSCSI | Internet Small Computer System Interface | RoCE | Remote Direct Memory Access over Converged Ethernet |

iSER | Internet Small Computer System Interface over RDMA | SDS | Software-Defined Storage |

NFS | Network File System | SSD | Solid-State Drive |

NFSoRDMA | Network File System over Remote Direct Memory Access | vDS | vSphere Distributed Switch |

NOS | Network Operating System | VM | Virtual Machine |

Introduction

Hybrid cloud has become the dominant architecture for enterprises seeking to extend their compute capabilities by using public clouds while maintaining on-premises clusters that are fully interoperable with their cloud service providers.

To meet demand, provide services, and allocate resources efficiently, enterprise IT teams have deployed hyperconverged architectures that use the same servers for compute and storage. These architectures include three core technologies: software-defined compute (or server virtualization), software-defined networking, and software-defined storage (SDS). Together, these three enable a software-defined data center. These hyperconverged architectures also widely adopt high-performance Ethernet for server-to-server and server-to-storage communication.

vSAN is VMware’s enterprise storage solution for SDS that supports hyperconverged infrastructure systems and is fully integrated with VMware vSphere as a distributed layer of software within the ESXi hypervisor. vSAN eliminates the need for external shared storage and simplifies storage configuration through storage policy-based management. Through virtual machine storage policies, users can define storage requirements and capabilities.

vSAN aggregates local, direct-attached storage devices to create and share a single storage pool across all hosts in the hyperconverged cluster, using faster flash SSDs for caching and inexpensive HDDs to maximize capacity.

RDMA (Remote Direct Memory Access) is an innovative networking technology that boosts data communication performance and efficiency. RDMA enables fast data movement between servers and storage without involving the operating system or burdening the server’s CPU. As a result, throughput increases, latency decreases, and the CPU is free to run applications.

RDMA over Converged Ethernet (RoCE) is a network protocol that allows RDMA over an Ethernet network. There are two RoCE versions, RoCE v1 and RoCE v2. RoCE v1 is an Ethernet link layer protocol and therefore allows communication only between hosts in the same Ethernet broadcast domain. RoCE v2 runs over UDP/IP (UDP destination port 4791), so RoCE v2 packets can be routed. Although RoCE benefits from the characteristics of a converged Ethernet network, it can also be used on a traditional, non-converged Ethernet network.
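To make the RoCE v2 layering concrete, the following minimal Python sketch picks the largest RDMA path MTU whose packets fit inside a given IP MTU. It assumes IPv4 without options and counts only the headers every RoCE v2 packet carries inside the IP packet (IPv4 20 bytes, UDP 8 bytes, InfiniBand Base Transport Header 12 bytes, invariant CRC 4 bytes); extended transport headers and VLAN tags are ignored, and the function name is illustrative.

```python
# Minimal sketch: assumes IPv4 without options; extended transport headers ignored.
# Per-packet RoCE v2 overhead carried inside the IP packet, in bytes.
IPV4_HEADER = 20  # IPv4 header without options
UDP_HEADER = 8    # UDP header; RoCE v2 uses destination port 4791
IB_BTH = 12       # InfiniBand Base Transport Header
IB_ICRC = 4       # invariant CRC trailer

# RDMA path MTU values an RDMA device can negotiate.
ROCE_MTUS = (256, 512, 1024, 2048, 4096)


def roce_path_mtu(ip_mtu: int) -> int:
    """Return the largest RoCE MTU whose packets still fit in ip_mtu bytes."""
    overhead = IPV4_HEADER + UDP_HEADER + IB_BTH + IB_ICRC
    fitting = [mtu for mtu in ROCE_MTUS if mtu + overhead <= ip_mtu]
    if not fitting:
        raise ValueError(f"IP MTU {ip_mtu} is too small for RoCE v2")
    return max(fitting)


if __name__ == "__main__":
    for ip_mtu in (1500, 9000):
        print(f"IP MTU {ip_mtu} -> RoCE path MTU {roce_path_mtu(ip_mtu)}")
```

With a standard 1500-byte MTU, RoCE is limited to a 1024-byte path MTU; the jumbo MTU of 9000 set on the vSAN distributed switch later in this guide leaves room for the maximum 4096-byte RoCE MTU.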

RDMA technology is already widely used for efficient data transfer in large-scale deployments and workloads such as High Performance Computing (HPC), Artificial Intelligence (AI) and Machine Learning (ML), NVMe-oF and Internet Small Computer System Interface over RDMA (iSER)-based storage, NFSoRDMA, and mission-critical SQL databases such as Oracle’s RAC (Exadata), IBM DB2 pureScale, Microsoft SQL solutions, Teradata, and more.

Over the last several years, VMware has been adding RDMA support to ESXi, including PVRDMA (paravirtual RDMA) to accelerate data transfers between virtual machines, and iSER and NVMe-oF to accelerate remote storage access.

VMware’s vSAN over RDMA is fully qualified and available as of the ESXi 7.0 U3d release, making it ready for deployments.

The announcement of VMware vSAN Express Storage Architecture™ (ESA) represents a massive step forward in the capabilities of the solution. ESA is an optional, alternative architecture to the vSAN Original Storage Architecture (OSA), which is also available in vSAN 8.

The best way to think of vSAN ESA is as a new way to process and store data, designed to achieve all-new levels of efficiency, scalability, and performance. ESA is optimized to exploit the full potential of the very latest hardware, and it unlocks new capabilities.

This document provides instructions on how to configure vSAN ESA datastores over RDMA, backed by local NVMe disks, in VMware vSphere 8.0 over an NVIDIA end-to-end 100 Gb/s Ethernet solution.

HCIBench v2.8.0 is used to benchmark vSAN over RDMA against vSAN over TCP on the same hardware and to show the resulting performance improvements.


Solution Architecture

Key Components and Technologies

vSAN over RDMA Support for VMware

vSAN over RDMA provides increased performance for vSAN.

Each vSAN host must have a vSAN certified RDMA-capable NIC, as listed in the vSAN section of the VMware Compatibility Guide.

All hosts in the cluster must support RDMA. If any host loses RDMA support, the entire vSAN cluster switches to TCP.

vSAN over RDMA supports NIC failover, but does not support LACP or IP-hash-based NIC teaming.

NVIDIA® Cumulus® Linux is the industry's most innovative open network operating system that allows you to automate, customize, and scale your data center network like no other.

10/25/40/50/100/200 and 400G Ethernet Network Adapters

The industry-leading NVIDIA® ConnectX® family of smart network interface cards (SmartNICs) offers advanced hardware offloads and accelerations.

NVIDIA Ethernet adapters enable the highest ROI and lowest Total Cost of Ownership for hyperscale, public and private clouds, storage, machine learning, AI, big data, and telco platforms.

NVIDIA Spectrum Ethernet Switches

Flexible form-factors with 16 to 128 physical ports, supporting 1GbE through 400GbE speeds.

Based on a ground-breaking silicon technology optimized for performance and scalability, NVIDIA Spectrum switches are ideal for building high-performance, cost-effective, and efficient Cloud Data Center Networks, Ethernet Storage Fabric, and Deep Learning Interconnects.

NVIDIA combines the benefits of NVIDIA Spectrum™ switches, based on industry-leading application-specific integrated circuit (ASIC) technology, with a wide variety of modern network operating system choices, including NVIDIA Cumulus® Linux, SONiC, and NVIDIA Onyx®.

The NVIDIA® LinkX® product family of cables and transceivers provides the industry’s most complete line of 10, 25, 40, 50, 100, 200, and 400GbE Ethernet and 100, 200, and 400Gb/s InfiniBand products for cloud, HPC, hyperscale, enterprise, telco, storage, artificial intelligence, and data center applications.

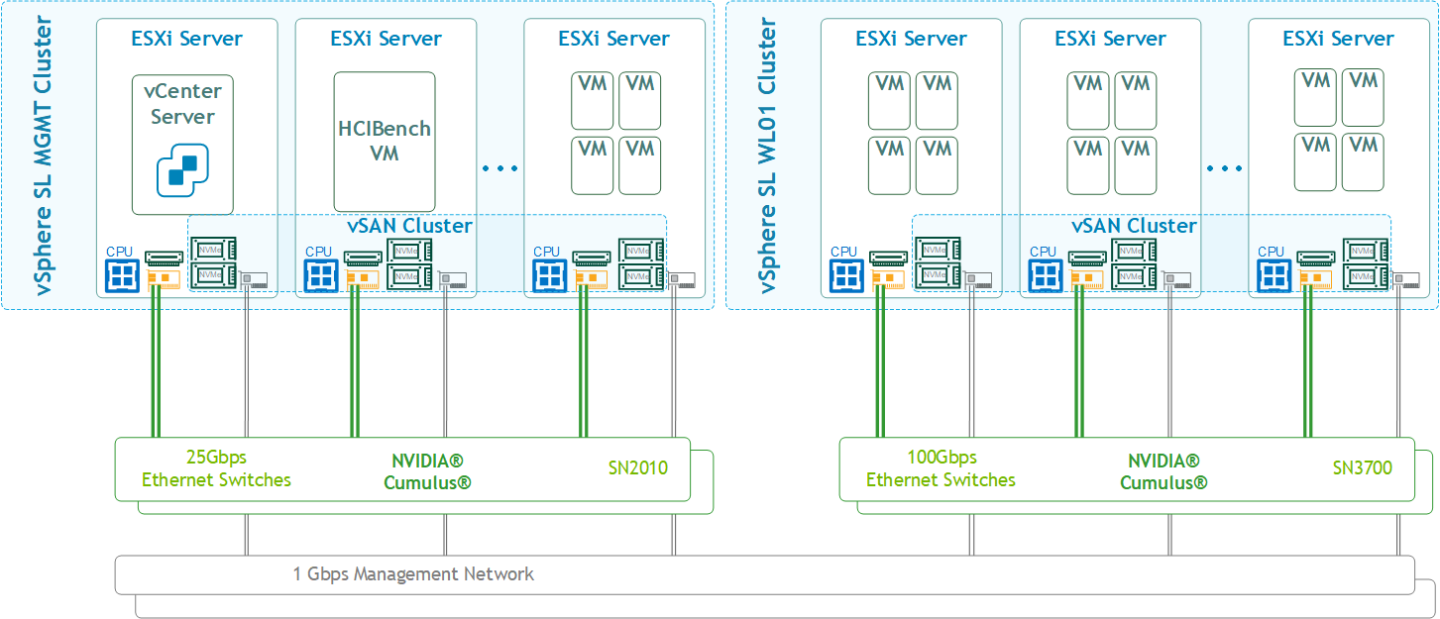

Logical Design

Software Stack Components

This guide assumes that the following software and drivers are installed:

VMware ESXi 8.0, build 20513097

VMware vCenter 8.0, build 20519528

Distributed Switch 8.0.0

NVIDIA® ConnectX® Driver for VMware ESXi Server v4.23.0.36

NVIDIA® ConnectX®-6 Dx FW version 22.34.1002

Network Operating System (NOS): NVIDIA Cumulus® Linux v5.1

HCIBench version 2.8.0

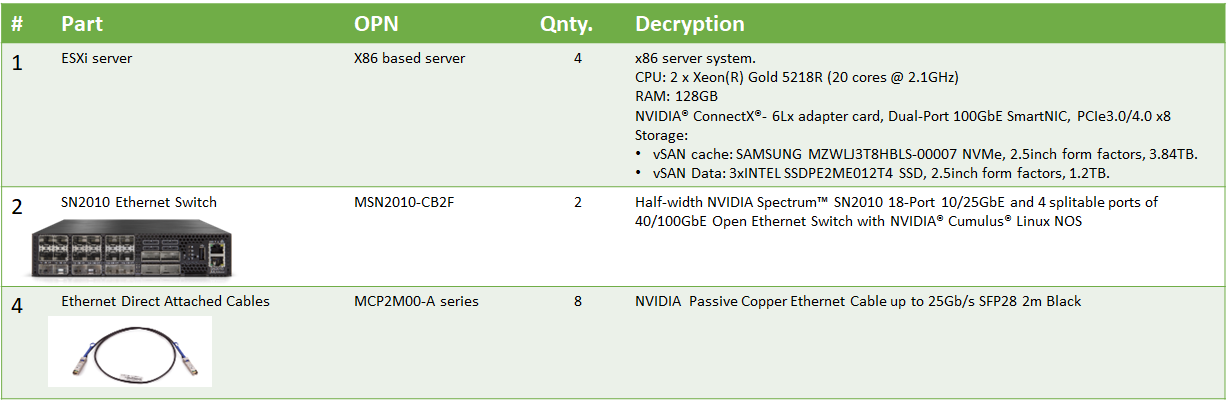

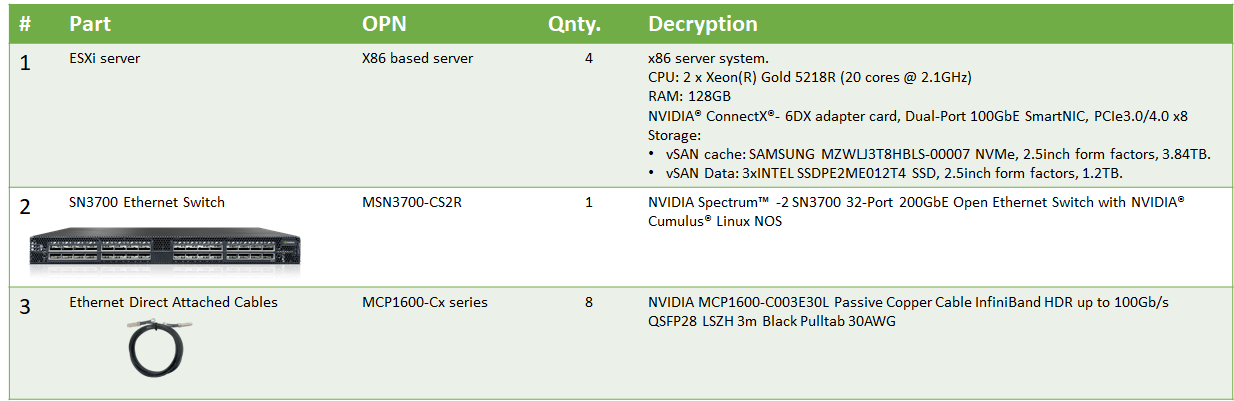

Bill of Materials

The following hardware setup is used in the vSphere environment described in this guide.

Management Cluster:

Workload Cluster:

Deployment and Configuration

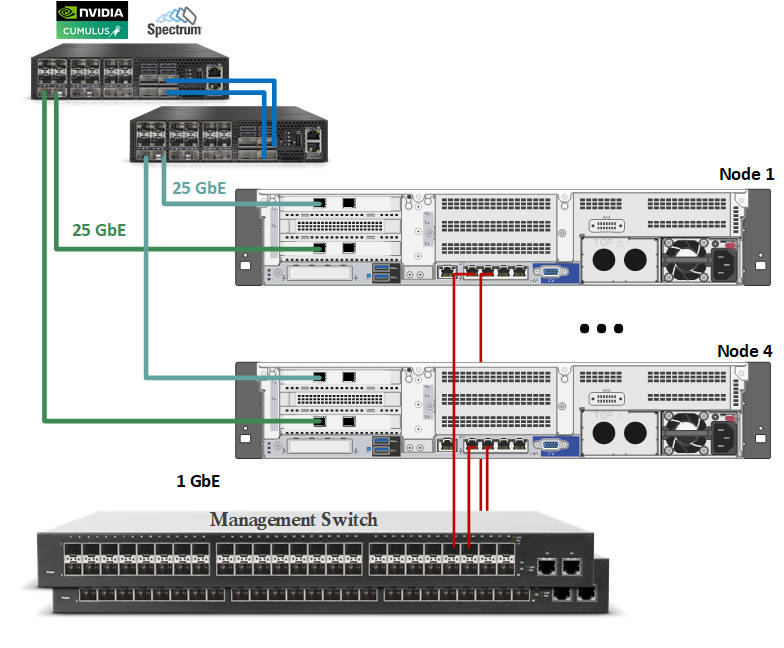

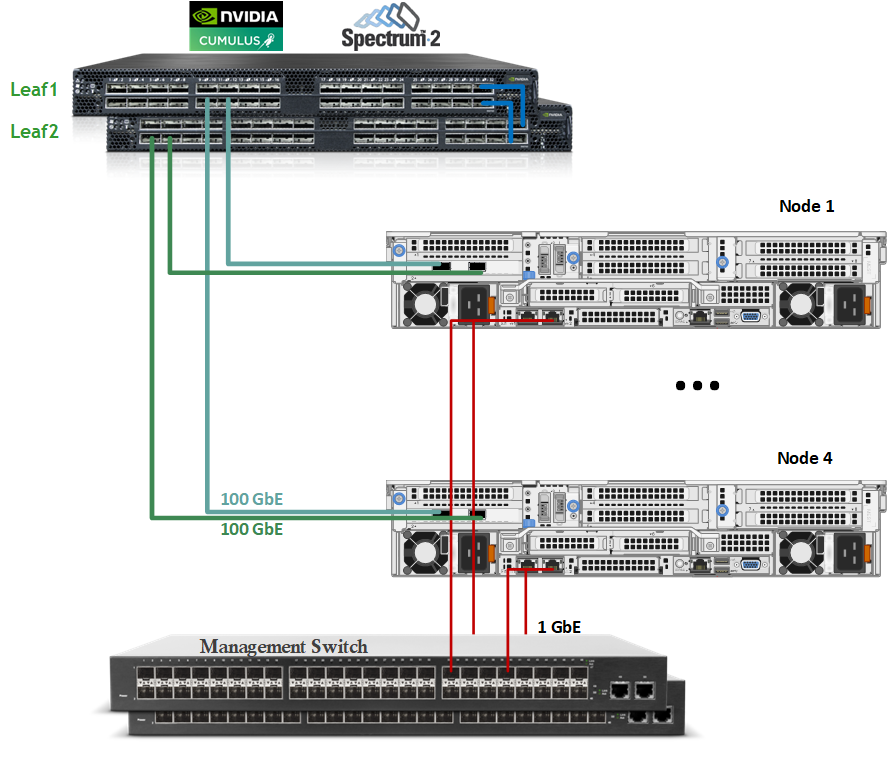

Wiring

This document covers a highly available VMware vSphere cluster deployment.

Management Cluster:

Workload Cluster:

Network

Prerequisites

Switch OS

NVIDIA Cumulus 5.1

Network adapter

2x ConnectX-6 Dx or ConnectX-6 Lx. Use only the same model network adapters from the same vendor on each end of the connection.

Management Network

DHCP and DNS services are required. Installing and configuring these components is not covered in this guide.

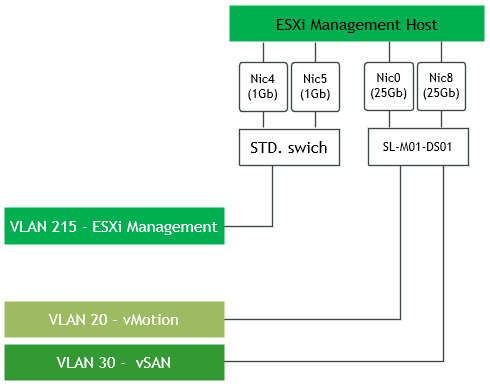

vSphere Switches Design

Management Cluster Host

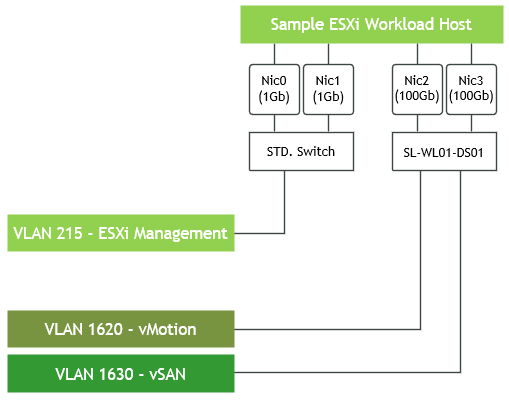

Workload Cluster Host

Hosts Network Configuration

The following tables provide details on the ESXi servers and switches, including their names and network configuration.

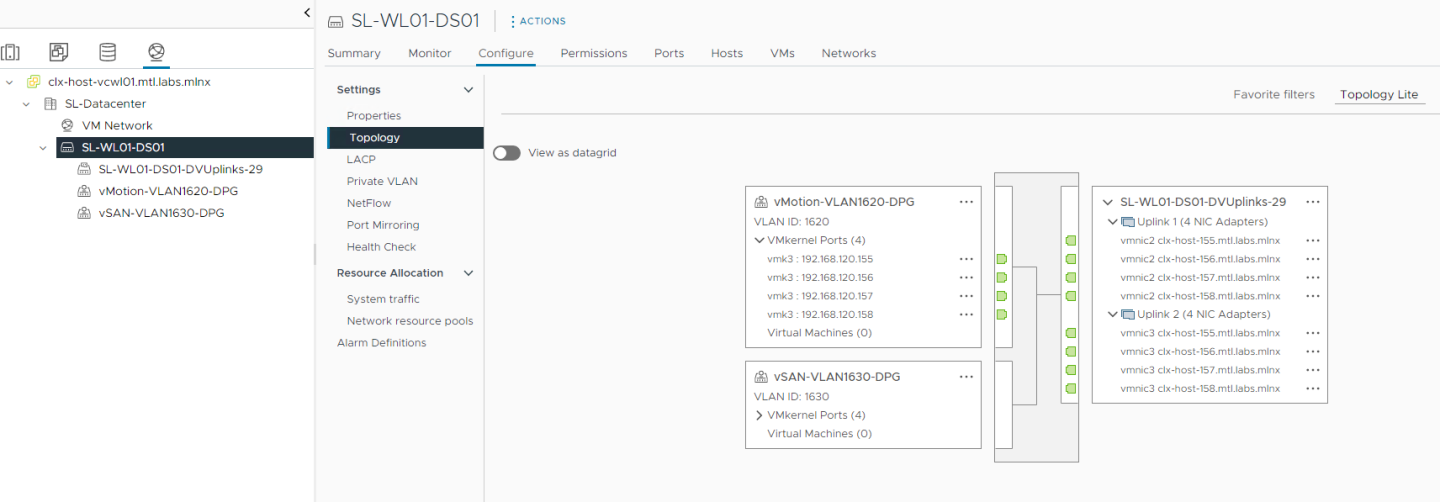

One distributed port group (DPG) per cluster (vSAN-VLAN1630-DPG and vSAN-VLAN30-DPG) is required to support active/standby vSAN connectivity.

SL MGMT Cluster

Server | Server Name | High-Speed Ethernet Network | Management Network 10.7.215.0/24 |

ESXi-01 | clx-host-51 | vmk1: 192.168.120.51 (vMotion), vmk2: 192.168.130.51 (vSAN) | vmk0: 10.7.215.51, from DHCP (reserved) |

ESXi-02 | clx-host-52 | vmk1: 192.168.120.52 (vMotion), vmk2: 192.168.130.52 (vSAN) | vmk0: 10.7.215.52, from DHCP (reserved) |

ESXi-03 | clx-host-53 | vmk1: 192.168.120.53 (vMotion), vmk2: 192.168.130.53 (vSAN) | vmk0: 10.7.215.53, from DHCP (reserved) |

ESXi-04 | clx-host-54 | vmk1: 192.168.120.54 (vMotion), vmk2: 192.168.130.54 (vSAN) | vmk0: 10.7.215.54, from DHCP (reserved) |

Leaf-01 | clx-swx-033 | | 10.7.215.233 |

Leaf-02 | clx-swx-034 | | 10.7.215.234 |
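Before configuring VMkernel adapters, it can help to check the addressing plan for typos. The following Python sketch, using only the standard `ipaddress` module, verifies that every SL MGMT cluster address falls inside its VMkernel network and that no address is used twice. The management subnet comes from the table above; the /24 prefixes for the vMotion and vSAN networks are assumptions, and the `validate` helper is hypothetical.

```python
import ipaddress

# Management subnet is from the table; the vMotion and vSAN /24 prefixes
# are assumptions, since the table lists only host addresses.
SUBNETS = {
    "vmk0": ipaddress.ip_network("10.7.215.0/24"),     # management
    "vmk1": ipaddress.ip_network("192.168.120.0/24"),  # vMotion
    "vmk2": ipaddress.ip_network("192.168.130.0/24"),  # vSAN
}

# SL MGMT cluster: clx-host-51..54 use the host number as the last octet.
HOSTS = {
    f"clx-host-{n}": {
        "vmk0": f"10.7.215.{n}",
        "vmk1": f"192.168.120.{n}",
        "vmk2": f"192.168.130.{n}",
    }
    for n in range(51, 55)
}


def validate(hosts: dict, subnets: dict) -> list:
    """Report addresses outside their VMkernel subnet and duplicate addresses."""
    problems = []
    seen = {}
    for host, vmks in hosts.items():
        for vmk, addr in vmks.items():
            ip = ipaddress.ip_address(addr)
            if ip not in subnets[vmk]:
                problems.append(f"{host} {vmk}: {ip} is outside {subnets[vmk]}")
            if ip in seen:
                problems.append(f"{host} {vmk}: {ip} already used by {seen[ip]}")
            seen[ip] = f"{host} {vmk}"
    return problems


if __name__ == "__main__":
    issues = validate(HOSTS, SUBNETS)
    print("\n".join(issues) if issues else "Addressing plan OK")
```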

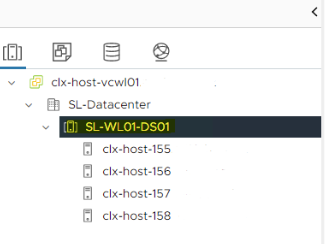

SL WL01 Cluster

Server | Server Name | High-Speed Ethernet Network | Management Network 10.238.0.0/24 |

ESXi-05 | clx-host-155 | vmk1: 192.168.20.169 (vMotion), vmk2: 192.168.30.169 (vSAN) | vmk0: 10.238.55.1, from DHCP (reserved) |

ESXi-06 | clx-host-156 | vmk1: 192.168.20.170 (vMotion), vmk2: 192.168.30.170 (vSAN) | vmk0: 10.238.56.1, from DHCP (reserved) |

ESXi-07 | clx-host-157 | vmk1: 192.168.20.171 (vMotion), vmk2: 192.168.30.171 (vSAN) | vmk0: 10.238.57.1, from DHCP (reserved) |

ESXi-08 | clx-host-158 | vmk1: 192.168.20.172 (vMotion), vmk2: 192.168.30.172 (vSAN) | vmk0: 10.238.58.1, from DHCP (reserved) |

Leaf-03 | clx-swx-035 | | 10.7.7.90 |

Leaf-04 | clx-swx-036 | | 10.7.7.91 |

Network Switch Configuration

Port channel and VLAN configuration

Run the following commands on both Leaf switches in the Management Cluster to configure the port channel and VLANs.

Switch console

nv set interface bond1 type bond

nv set interface bond1 bond member swp21-22

nv set interface bond1 bridge domain br_default

nv set bridge domain br_default vlan 130

nv set bridge domain br_default vlan 100

nv set bridge domain br_default vlan 120

nv set interface bond1 bridge domain br_default vlan 120,130

nv set interface bond1 bridge domain br_default untagged 100

nv config apply

nv config save

Run the following commands on both Leaf switches in the Workload Cluster to configure the port channel and VLANs.

Switch console

nv set interface bond1 type bond

nv set interface bond1 bond member swp31-32

nv set interface bond1 bridge domain br_default

nv set bridge domain br_default vlan 215

nv set bridge domain br_default vlan 1620

nv set bridge domain br_default vlan 1630

nv set interface bond1 bridge domain br_default untagged 215

nv set interface bond1 bridge domain br_default vlan 1620,1630

nv config apply

nv config save
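The two leaf configurations differ only in the bond member ports and the VLAN IDs, so they can be rendered from a small per-cluster description. The Python sketch below prints an NVUE command list for each leaf pair. The `LeafBondSpec` class and `nvue_commands` helper are hypothetical, and the sketch assumes NVUE accepts a comma-separated VLAN list on the bond; verify the output against your Cumulus Linux release before pasting it into the switch console.

```python
from dataclasses import dataclass, field


@dataclass
class LeafBondSpec:
    """Hypothetical description of the host-facing bond on one leaf pair."""
    members: str                                 # NVUE port range, e.g. "swp21-22"
    untagged: int                                # native (untagged) VLAN on the bond
    tagged: list = field(default_factory=list)   # VLANs carried tagged on the bond
    bond: str = "bond1"
    bridge: str = "br_default"


def nvue_commands(spec: LeafBondSpec) -> list:
    """Render NVUE commands that create the bond and attach its VLANs."""
    br = f"bridge domain {spec.bridge}"
    cmds = [
        f"nv set interface {spec.bond} type bond",
        f"nv set interface {spec.bond} bond member {spec.members}",
        f"nv set interface {spec.bond} {br}",
    ]
    # Define every VLAN on the bridge first, one command per VLAN.
    for vid in sorted({spec.untagged, *spec.tagged}):
        cmds.append(f"nv set {br} vlan {vid}")
    if spec.tagged:
        tagged = ",".join(str(v) for v in sorted(spec.tagged))
        cmds.append(f"nv set interface {spec.bond} {br} vlan {tagged}")
    cmds += [
        f"nv set interface {spec.bond} {br} untagged {spec.untagged}",
        "nv config apply",
        "nv config save",
    ]
    return cmds


MGMT = LeafBondSpec(members="swp21-22", untagged=100, tagged=[120, 130])
WORKLOAD = LeafBondSpec(members="swp31-32", untagged=215, tagged=[1620, 1630])

if __name__ == "__main__":
    for name, spec in (("Management", MGMT), ("Workload", WORKLOAD)):
        print(f"# {name} cluster leaf switches")
        print("\n".join(nvue_commands(spec)))
```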

Enable RDMA over Converged Ethernet Lossless (with PFC and ETS)

RoCE transport is used to accelerate vSAN networking. To achieve the best possible performance, the network is configured to be lossless.

Run the following commands on all Leaf switches to configure a lossless network on NVIDIA Cumulus Linux.

Switch console

nv set qos roce

nv config apply

nv config save

To check the RoCE configuration, run the following command:

Switch console

$ sudo nv show qos roce

operational applied description

------------------ ----------- -------- ------------------------------------------------------

enable on Turn the feature 'on' or 'off'. The default is 'off'.

mode lossless lossless Roce Mode

cable-length 100 100 Cable Length(in meters) for Roce Lossless Config

congestion-control

congestion-mode ECN Congestion config mode

enabled-tc 0,3 Congestion config enabled Traffic Class

max-threshold 1.43 MB Congestion config max-threshold

min-threshold 146.48 KB Congestion config min-threshold

pfc

pfc-priority 3 switch-prio on which PFC is enabled

rx-enabled enabled PFC Rx Enabled status

tx-enabled enabled PFC Tx Enabled status

trust

trust-mode pcp,dscp Trust Setting on the port for packet classification

RoCE PCP/DSCP->SP mapping configurations

===========================================

pcp dscp switch-prio

-- --- ----------------------- -----------

0 0 0,1,2,3,4,5,6,7 0

1 1 8,9,10,11,12,13,14,15 1

2 2 16,17,18,19,20,21,22,23 2

3 3 24,25,26,27,28,29,30,31 3

4 4 32,33,34,35,36,37,38,39 4

5 5 40,41,42,43,44,45,46,47 5

6 6 48,49,50,51,52,53,54,55 6

7 7 56,57,58,59,60,61,62,63 7

RoCE SP->TC mapping and ETS configurations

=============================================

switch-prio traffic-class scheduler-weight

-- ----------- ------------- ----------------

0 0 0 DWRR-50%

1 1 0 DWRR-50%

2 2 0 DWRR-50%

3 3 3 DWRR-50%

4 4 0 DWRR-50%

5 5 0 DWRR-50%

6 6 6 strict-priority

7 7 0 DWRR-50%

RoCE pool config

===================

name mode size switch-priorities traffic-class

-- --------------------- ------- ----- ----------------- -------------

0 lossy-default-ingress Dynamic 50.0% 0,1,2,4,5,6,7 -

1 roce-reserved-ingress Dynamic 50.0% 3 -

2 lossy-default-egress Dynamic 50.0% - 0,6

3 roce-reserved-egress Dynamic inf - 3

Exception List

=================

description
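The mapping tables in this output determine which packets receive lossless treatment. The Python sketch below transcribes them to show how a DSCP-marked packet is classified: each switch priority covers eight consecutive DSCP values, only switch priority 3 has PFC enabled, and traffic class 6 uses strict priority. It models DSCP trust only (the switch trusts both PCP and DSCP), and the example value DSCP 26 is an assumption about host-side RoCE marking, which this guide does not configure.

```python
# Values transcribed from the `nv show qos roce` output above.
PFC_PRIORITY = 3  # switch priority with PFC (lossless) enabled
SP_TO_TC = {0: 0, 1: 0, 2: 0, 3: 3, 4: 0, 5: 0, 6: 6, 7: 0}  # switch-prio -> traffic class
STRICT_TCS = {6}  # traffic class scheduled with strict priority; others use DWRR


def switch_priority(dscp: int) -> int:
    """Each switch priority covers 8 DSCP values (0-7 -> 0, ..., 56-63 -> 7)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be between 0 and 63")
    return dscp // 8


def classify(dscp: int) -> dict:
    """Describe how the switch treats a packet carrying this DSCP value."""
    prio = switch_priority(dscp)
    tc = SP_TO_TC[prio]
    return {
        "switch_prio": prio,
        "traffic_class": tc,
        "lossless": prio == PFC_PRIORITY,
        "scheduler": "strict-priority" if tc in STRICT_TCS else "DWRR",
    }


if __name__ == "__main__":
    for dscp in (0, 26, 48):
        print(dscp, classify(dscp))
```

If the hosts mark RoCE with a DSCP value outside 24-31, the traffic lands in a lossy pool, so the host and switch settings need to agree.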

vSAN Cluster Creation

VMware vSAN Requirements

There are many requirements to consider when provisioning a VMware vSAN software-defined storage solution.

Please refer to the official VMware vSAN 8 Design Guide, chapter 5 "Requirements for Enabling vSAN".

vSAN with RDMA Requirements

vSAN 8.0 and later versions support RDMA communication. To use it:

Each vSAN host must have a vSAN certified RDMA-capable NIC, as listed in the vSAN section of the VMware Compatibility Guide

Only the same model network adapters from the same vendor can be used on each end of the connection

All hosts in the cluster must support RDMA. If any host loses RDMA support, the entire vSAN cluster switches to TCP
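These requirements amount to a simple cluster-wide rule. The Python sketch below models it for planning purposes only: the `VsanHost` record and both functions are hypothetical and do not query vSphere (on a live host, `esxcli rdma device list` shows the RDMA devices ESXi has detected). The NIC model string is the ConnectX-6 Dx part number listed in the Appendix.

```python
from dataclasses import dataclass


@dataclass
class VsanHost:
    """Hypothetical summary of one host's vSAN uplink NIC."""
    name: str
    nic_model: str
    rdma_capable: bool


def cluster_transport(hosts: list) -> str:
    """RDMA only when every host supports it; otherwise the whole cluster uses TCP."""
    if hosts and all(h.rdma_capable for h in hosts):
        return "RDMA"
    return "TCP"


def nic_models(hosts: list) -> set:
    """Distinct NIC models in the cluster; more than one needs a closer look."""
    return {h.nic_model for h in hosts}


if __name__ == "__main__":
    cluster = [VsanHost(f"clx-host-{n}", "MCX623106AN-CDA", True) for n in range(155, 159)]
    print(cluster_transport(cluster))   # RDMA: every host is RDMA-capable
    cluster[2].rdma_capable = False     # one host loses RDMA support
    print(cluster_transport(cluster))   # TCP: the whole cluster falls back
```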

Preparing vSphere Cluster for vSAN

Prerequisites

Physical server configuration

All ESXi servers must have the same PCIe placement for the NIC and expose the same interface name.

A vSphere cluster with a minimum of 3 VMware vSphere ESXi 8.0 hosts

vCenter 8.0

Installer privileges: The installation requires administrator privileges on the target machine

Connection to ESXi host management interface

High-speed network connectivity

Verify that NTP is configured in your environment and that it works properly.

To create a vSAN cluster, first create a vSphere host cluster.

The installation of vCenter, ESXi hosts, and the configuration of the vSphere cluster (DRS, HA) are beyond the scope of this document.

To enable the exchange of data in the vSAN cluster, you must provide a VMkernel network adapter for vSAN traffic on each ESXi host.

First, create a vSphere Distributed Switch (vDS) with a distributed port group that has one active and one standby uplink.

vSAN with RDMA supports NIC failover, but does not support LACP or IP-hash-based NIC teaming.

Creating a Distributed Switch for vSAN Traffic

To create a new vDS:

Launch the vSphere Web Client and connect to a vCenter Server instance.

On the vSphere Web Client home screen, select the vCenter object from the list on the left.

Hover over Distributed Switches in the Inventory Lists area, then click New Distributed Switch to launch the New vDS creation wizard:

Provide a name for the new distributed switch, and select the location within the vCenter inventory where you would like to store the new vDS (a data center object or a folder).

Click NEXT.

Select the version of the vDS to create.

Click NEXT.

Specify the Network Offloads compatibility as None, and set the number of uplink ports to 2. Uncheck the Create a default port group box, and enter a name for that group.

Click NEXT.

Click Finish.

Set the MTU for the newly created distributed switch.

Right-click the new distributed switch in the list of objects, and select Settings → Edit Settings... from the Actions menu.

In the Storage-DSwitch-Edit Settings dialog box, set the MTU to 9000, Discovery protocol to Link Layer Discovery Protocol and Operation to Both.

Click OK.

Adding Hosts to vDS

To add an ESXi host to an existing vDS:

Launch the vSphere Web Client, and connect to a vCenter Server instance.

Navigate to the list of Hosts in the SL MGMT cluster and select ESXi host.

Select Configure → Networking → Physical adapters.

Check the network ports that you are going to use. In this case, vmnic2 and vmnic3 are used.

Navigate to the list of distributed switches.

Right-click on the new distributed switch in the list of objects, and select Add and Manage Hosts from the Actions menu.

Select the Add hosts button and click NEXT.

From the list of new hosts, check the boxes next to each ESXi host you would like to add to the vDS.

Click NEXT.

In the next Manage physical adapters menu, click Adapters on all hosts and assign the adapters of each ESXi host (in this case, vmnic2 and vmnic3) to Uplink 1 and Uplink 2 of the vDS.

In the next Manage VMkernel adapters and Migrate VM networking menus, click NEXT to continue.

Click FINISH.


Repeat the Creating a Distributed Switch for vSAN Traffic and Adding Hosts to vDS steps for the Workload cluster.

Creating a Distributed Port Group for Storage Traffic

This section lists the steps required to create two distributed port groups, each with one active and one standby uplink.

To create a distributed port group, right-click the distributed switch and select Distributed Port Group > New Distributed Port Group.

On the New Distributed Port Group dialog box, enter a Name such as <vSAN-VLAN1630-DPG>, and click NEXT.

In the VLAN type field, select VLAN, and set the VLAN ID to your VLAN (in this example - 1630). Check the Customize default policies configuration checkbox, and click NEXT.

On the Security dialog box, click NEXT.

On the Traffic shaping dialog box, click NEXT.

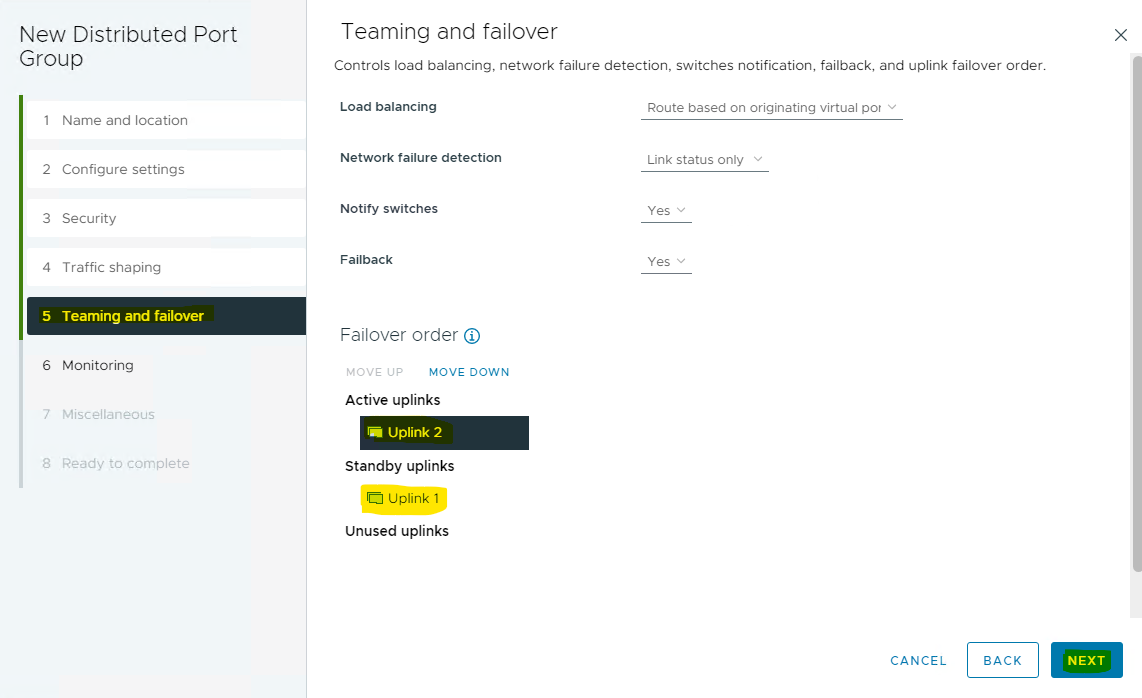

NIC Teaming with RDMA

RDMA for vSAN supports the following teaming policies for virtual switches.

• Route-based on originating virtual port

• Route-based on source MAC hash

• Use explicit failover order

In the Teaming and failover dialog box, select Uplink 1 as active uplink, and set Uplink 2 to be the standby uplink. Click NEXT.

In the Monitoring dialog box, set NetFlow to Disabled, and click NEXT.

In the Miscellaneous dialog box, set Block All Ports to No, and click NEXT.

In the Ready to complete dialog box, review all the changes, and click FINISH.

Repeat the Creating a Distributed Port Group for Storage Traffic steps for vMotion traffic, with the following changes:

In the VLAN type field, select VLAN, and set the VLAN ID to your VLAN (in this example - 1620).

In the Teaming and failover dialog box, select Uplink 2 as active uplink and set Uplink 1 to be the standby uplink.
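The effect of these two teaming settings is that vSAN and vMotion use different uplinks in normal operation, yet each can fail over to the other uplink. The Python sketch below is a simplified model of the Use explicit failover order policy (first healthy active uplink, else first healthy standby); it ignores failback and failure-detection options, and the vMotion port group name is hypothetical.

```python
from typing import Dict, List, Optional


def select_uplink(active: List[str], standby: List[str], link_up: Dict[str, bool]) -> Optional[str]:
    """Explicit failover order: first healthy active uplink, else first healthy standby."""
    for uplink in active + standby:
        if link_up.get(uplink, False):
            return uplink
    return None  # no healthy uplink left


# Teaming used in this guide; the vMotion port group name is hypothetical.
TEAMING = {
    "vSAN-VLAN1630-DPG": (["Uplink 1"], ["Uplink 2"]),
    "vMotion-VLAN1620-DPG": (["Uplink 2"], ["Uplink 1"]),
}

if __name__ == "__main__":
    for state in ({"Uplink 1": True, "Uplink 2": True}, {"Uplink 1": False, "Uplink 2": True}):
        for pg, (active, standby) in TEAMING.items():
            print(state, pg, "->", select_uplink(active, standby, state))
```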

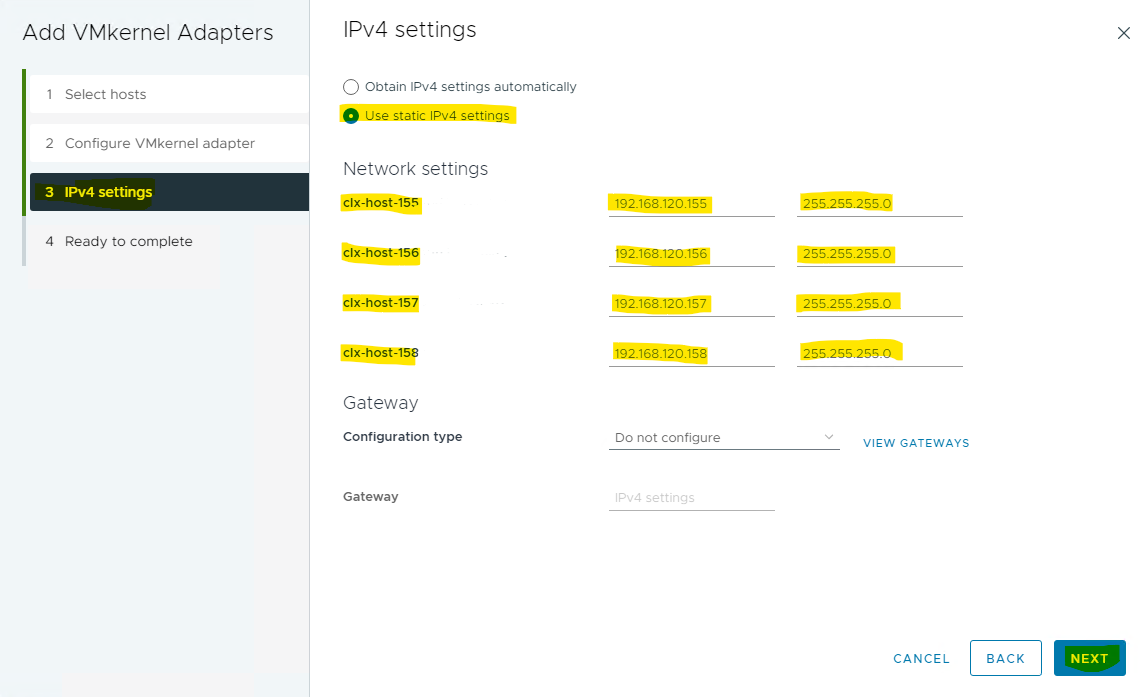

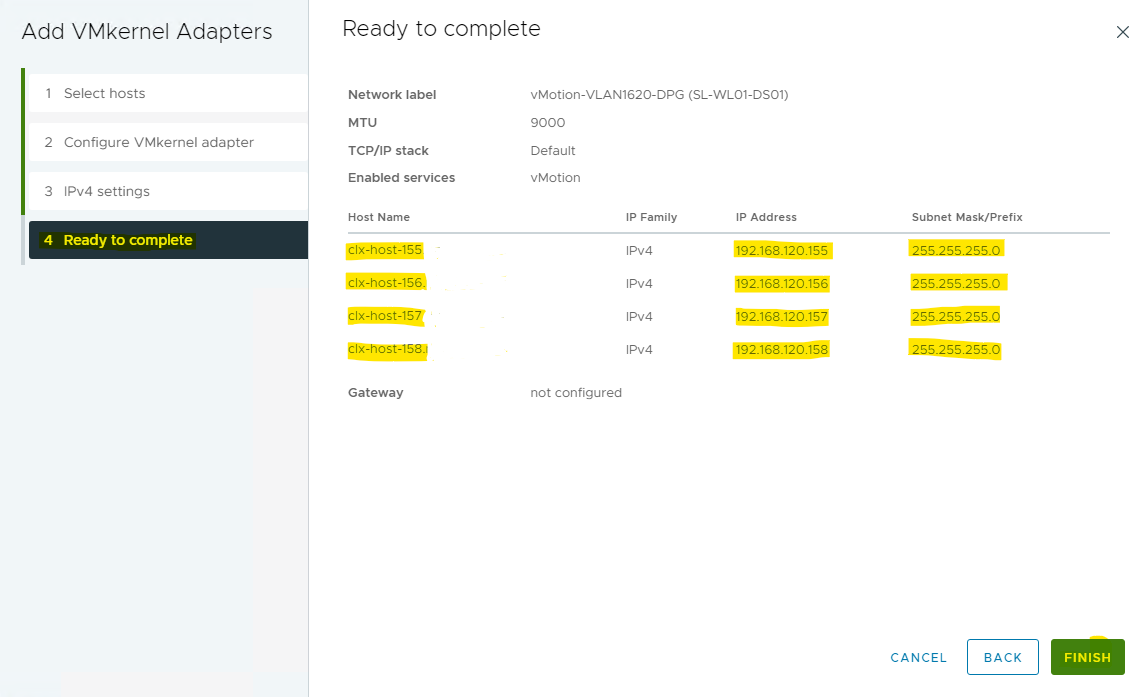

Adding a VMkernel Network for vSAN

To add VMkernel adapters for distributed port groups, follow the steps below.

Right-click the distributed port group and select Add VMkernel Adapters.

Select Attached Hosts, and click NEXT.

Select vSAN (in this example) in Available services, and click NEXT.

Enter the Network Settings and Gateway details, and click NEXT.

Click FINISH.

The vSAN network is now enabled for the hosts.

Repeat the Adding a VMkernel Network for vSAN steps for the vMotion port group and any other required port groups.
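Once the VMkernel adapters exist, a common way to confirm that the 9000-byte MTU works end to end is a don't-fragment `vmkping` with the largest payload that fits in one packet. The Python sketch below only computes that payload (MTU minus the 20-byte IPv4 header and the 8-byte ICMP header) and builds the command string, using the `vmkping` options `-I` (VMkernel interface), `-d` (don't fragment), and `-s` (payload size). The helper names are hypothetical; the interface and peer addresses are taken from the WL01 table.

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options
ICMP_HEADER = 8   # bytes, ICMP echo header


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    payload = mtu - IPV4_HEADER - ICMP_HEADER
    if payload <= 0:
        raise ValueError(f"MTU {mtu} is too small")
    return payload


def vmkping_command(vmk: str, peer: str, mtu: int = 9000) -> str:
    """Build a don't-fragment (-d), maximum-size (-s) vmkping from a VMkernel port (-I)."""
    return f"vmkping -I {vmk} -d -s {max_ping_payload(mtu)} {peer}"


if __name__ == "__main__":
    # From clx-host-155, test the vSAN vmk against each peer in the WL01 cluster.
    for peer in ("192.168.30.170", "192.168.30.171", "192.168.30.172"):
        print(vmkping_command("vmk2", peer))
```

If the large ping fails while a default-size ping succeeds, some hop on the path (vDS, physical switch port, or peer vmk) is not passing jumbo frames.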

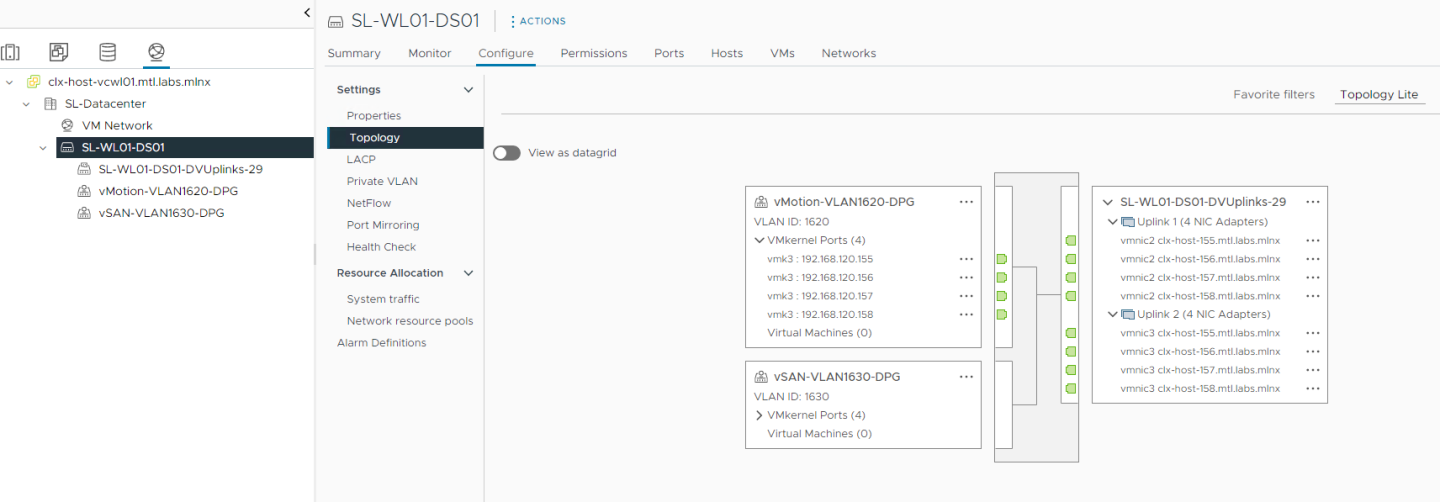

Once the ESXi Cluster Networking configuration is complete, it can be verified under the Distributed Switch > Configure > Topology tab.

Manually Enabling vSAN

Use the HTML5-based vSphere Client to configure your vSAN cluster.

You can use Quickstart to quickly create and configure a vSAN cluster. For more information, see Using Quickstart to Configure and Expand a vSAN Cluster.

To enable and configure vSAN, follow the steps below.

Select Configure → vSAN → Services. Select Standard vSAN cluster, and click Configure.

Select vSAN ESA, and click NEXT.

Configure the data management features, including Data-At-Rest encryption, Data-In-Transit encryption, and RDMA support. Click NEXT.

Claim disks for the vSAN cluster, and click NEXT.

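With vSAN ESA, the claimed NVMe devices of each host form a single storage pool with no dedicated cache tier. (vSAN OSA instead requires at least one flash device in the cache tier and one or more devices in the capacity tier of each disk group.)

Create fault domains if needed, and click NEXT.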

Review the configuration, and click FINISH.

Configure a vSAN Datastore Storage Policy

To configure a Storage Policy, follow the steps below.

Navigate to your vSAN Datastore and select General under Configure.

Click on Edit Default Storage Policy.

Select vSAN ESA Default Policy - RAID5, and click OK.
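To estimate how much capacity the RAID-5 policy leaves usable, the Python sketch below multiplies raw capacity by the data fraction of the erasure-coding stripe. It assumes vSAN 8 ESA's adaptive RAID-5 behavior, which to our understanding uses a 2+1 stripe in clusters of three to five hosts and 4+1 in clusters of six or more; check VMware documentation for your release. It ignores slack space, metadata, and other overheads, and the function names are hypothetical.

```python
def esa_raid5_layout(hosts: int) -> tuple:
    """(data, parity) stripe assumed for vSAN ESA adaptive RAID-5."""
    if hosts < 3:
        raise ValueError("RAID-5 needs at least 3 hosts")
    return (2, 1) if hosts < 6 else (4, 1)


def usable_capacity_tb(hosts: int, disks_per_host: int, disk_tb: float) -> float:
    """Raw capacity times the data fraction; ignores slack space and metadata."""
    data, parity = esa_raid5_layout(hosts)
    raw = hosts * disks_per_host * disk_tb
    return raw * data / (data + parity)


if __name__ == "__main__":
    # Four hosts with 4x 1.6 TB NVMe each, as in the test environment.
    print(f"{usable_capacity_tb(4, 4, 1.6):.2f} TB usable before overheads")
```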

Configure License Settings for a vSAN Cluster

You must assign a license to a vSAN cluster before its evaluation period or its currently assigned license expires.

Some advanced features, such as all-flash configuration and stretched clusters, require a designated license plan.

Prerequisites

To view and manage the vSAN licenses, you must have the Global.Licenses privilege enabled on the vCenter Server system.

To assign a license to a vSAN cluster, follow the steps below.

Navigate to your vSAN cluster.

Right-click the cluster and select Assign vSAN Cluster License... from the Actions menu.

Select an existing license, and click OK.

Validate the Assigned License by:

Navigating to your vSAN cluster.

Clicking the Configure tab.

Selecting vSAN Cluster under Licensing.

Once you enable vSAN, a single datastore is created.

You can review the Skyline Health, Physical Disks, Resyncing Objects, Capacity and Performance.

In addition, you can run Proactive Tests of the vSAN datastore.

Done!

Appendix

Test the Environment

Hardware and Software Components

Host under test:

Server: Dell PowerEdge R750 vSAN Ready Node with 2x Intel(R) Xeon(R) Gold 6326 CPU @ 2.90GHz (16 cores each) and 384GB of RAM.

NVIDIA ConnectX-6 Dx EN NIC; 100GbE; dual-port QSFP56; PCIe4.0 x16 (MCX623106AN-CDA), with driver version 4.23.0.36-8vmw.800.1.0.20513097 and FW version 22.34.1002.

4x Dell Ent NVMe P5600 MU U.2 1.6TB (vSAN ESA Default Policy - RAID5)

VMware ESXi™ 8.0 GA

Network:

• NVIDIA Spectrum® SN3700 Open Ethernet Switch

• NVIDIA® Cumulus® Linux v5.1 Network OS

• NVIDIA MCP1650-H001E30 Passive Copper Cable InfiniBand HDR up to 200Gb/s QSFP56 LSZH 1m Black Pulltab 30AWG

Virtual Machine and Benchmark Configuration

We used HCIBench v2.8.0 with FIO benchmark workloads to measure performance, using the following parameter configuration:

HCIBench Parameter | Value |

Benchmarking Tool | FIO |

Number of VMs | 32 |

Number of vCPUs per VM | 4 |

Number of Data Disks per VM | 8 |

RAM per VM (GB) | 8 |

Size of Each Data Disk (GiB) | 14 |

Number of Disks to Test | 8 |

Working-Set Percentage | 100 |

Number of Threads per Disk | 4 |

Random Percentage | 100 |
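The parameters above determine the total amount of data the benchmark touches and how many I/O streams run at once. The Python sketch below derives both, assuming all 8 data disks per VM are tested and treating each thread as one concurrent FIO stream per disk; the class and method names are illustrative, not part of HCIBench.

```python
from dataclasses import dataclass


@dataclass
class HciBenchRun:
    """Parameters from the HCIBench table above (names are illustrative)."""
    vms: int = 32
    data_disks_per_vm: int = 8
    data_disk_gib: int = 14
    working_set_pct: int = 100
    threads_per_disk: int = 4

    def working_set_gib(self) -> float:
        """Total data touched by the test across all VMs."""
        total = self.vms * self.data_disks_per_vm * self.data_disk_gib
        return total * self.working_set_pct / 100

    def total_threads(self) -> int:
        """FIO threads running concurrently across the cluster."""
        return self.vms * self.data_disks_per_vm * self.threads_per_disk


if __name__ == "__main__":
    run = HciBenchRun()
    print(f"working set: {run.working_set_gib():.0f} GiB, threads: {run.total_threads()}")
```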

Performance Results

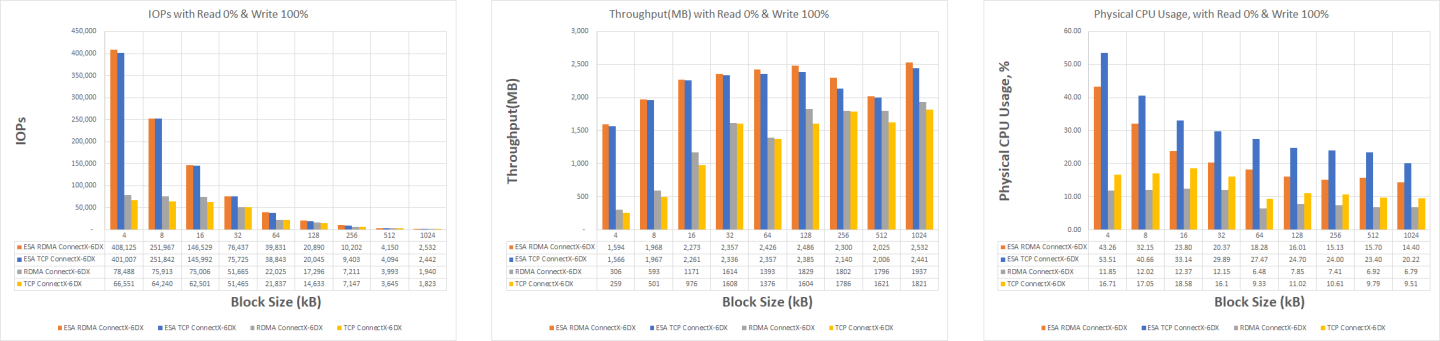

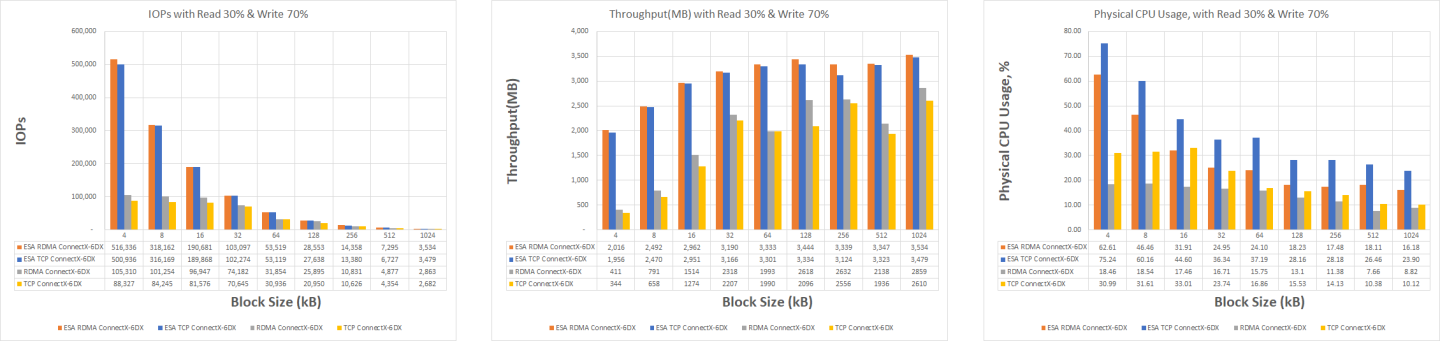

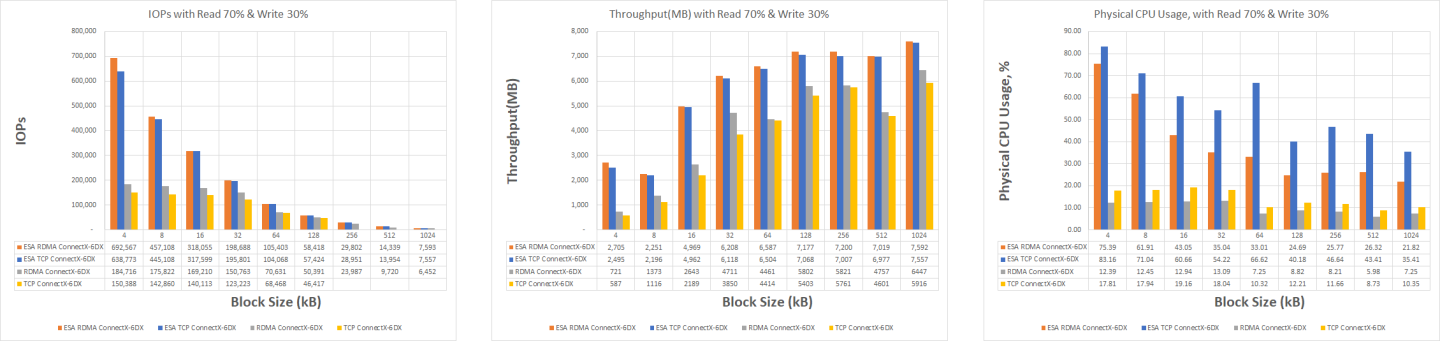

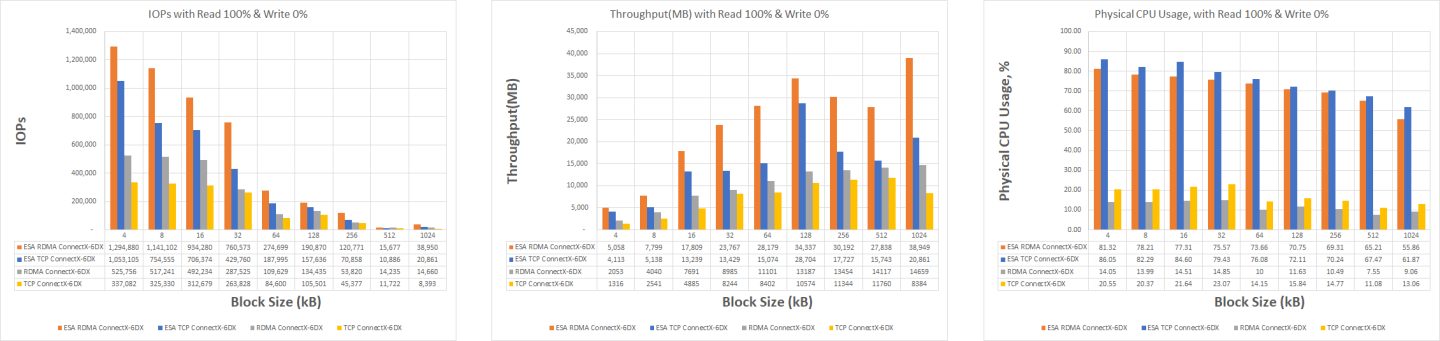

HCIBench ran random read and random write I/O patterns with I/O sizes from 4 KB to 1024 KB.

We compared IOPS, throughput, and CPU usage between vSAN over RDMA and vSAN over TCP on the same cluster.
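IOPS and throughput are linked by the I/O size: throughput equals IOPS multiplied by the size of each I/O. The Python sketch below applies that relation; it treats the document's KB as KiB (HCIBench's own reporting units may differ), and the IOPS figures in the example are hypothetical, not measured results.

```python
def throughput_mib_s(iops: float, io_size_kib: float) -> float:
    """Throughput in MiB/s for a given IOPS rate and I/O size in KiB."""
    return iops * io_size_kib / 1024


if __name__ == "__main__":
    # Hypothetical IOPS figures, not measured results.
    for iops, size_kib in ((100_000, 4), (10_000, 256), (2_000, 1024)):
        print(f"{iops} IOPS x {size_kib} KiB = {throughput_mib_s(iops, size_kib):.1f} MiB/s")
```

For a fixed I/O size, a relative gain in IOPS therefore equals the same relative gain in throughput, which is consistent with the identical IOPS and throughput percentages reported in the Conclusion.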

Storage policy: vSAN ESA Default Policy - RAID5.

The benchmark runs had the virtual disks placed on:

Express Storage Architecture (ESA):

vSAN data: 4x Dell Ent NVMe P5600 MU U.2 1.6TB

Disk Group 1 for Original Storage Architecture (OSA):

vSAN cache: Dell Ent NVMe P5600 MU U.2 1.6TB

vSAN data: 3x Dell Ent NVMe P5600 MU U.2 1.6TB

The performance results listed in this document are indicative, and should not be considered as formal performance targets for NVIDIA products.

Conclusion

The benchmark results in this performance study consistently show the advantage of vSAN ESA over RDMA compared to both vSAN ESA over TCP and vSAN OSA over RDMA:

vSAN ESA over RDMA achieves up to 86% more IOPS than ESA over TCP and up to 165% more IOPS than OSA over RDMA.

vSAN ESA over RDMA achieves up to 86% higher throughput than ESA over TCP and up to 165% higher throughput than OSA over RDMA.

vSAN ESA over RDMA achieves up to 74% lower read latency and up to 11.6% lower write latency than ESA over TCP, and up to 79% lower read latency and up to 11.6% lower write latency than OSA over RDMA.

vSAN ESA over RDMA achieves up to 33.6% lower physical CPU usage than ESA over TCP.
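The document does not state how these percentages are computed. The Python sketch below assumes the usual definitions, with the ESA over TCP or OSA over RDMA result as the baseline; the input numbers are hypothetical values chosen only to reproduce the headline figures. Note that "165% more" means 2.65 times the baseline, not 1.65 times.

```python
def pct_more(value: float, baseline: float) -> float:
    """'X% more' (IOPS, throughput): relative increase over the baseline."""
    return (value - baseline) / baseline * 100


def pct_lower(value: float, baseline: float) -> float:
    """'X% lower' (latency, CPU usage): relative decrease from the baseline."""
    return (baseline - value) / baseline * 100


if __name__ == "__main__":
    # Hypothetical numbers chosen to reproduce the headline percentages.
    print(f"{pct_more(186, 100):.0f}% more IOPS")         # 1.86x the baseline
    print(f"{pct_more(265, 100):.0f}% more IOPS")         # 2.65x the baseline
    print(f"{pct_lower(0.26, 1.0):.0f}% lower latency")   # 0.26 ms vs 1.0 ms
```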

Note: Our environment uses read-intensive disks, which can explain the difference between the read and write results.

As a result, vSAN ESA over RDMA allows running more VMs on the same hardware with higher performance and lower latency.

Running vSAN ESA over RDMA offloads data communication tasks from the CPU and delivers a significant performance boost, which is critical in the new era of accelerated computing associated with massive data transfers.

It is expected that vSAN ESA over RDMA will eventually replace vSAN ESA over TCP and vSAN OSA over RDMA and become the leading transport technology in vSphere-enabled data centers.

Authors

Boris Kovalev has worked for the past several years as a Solutions Architect, focusing on NVIDIA Networking/Mellanox technology, and is responsible for complex machine learning, Big Data and advanced VMware-based cloud research and design. Boris previously spent more than 20 years as a senior consultant and solutions architect at multiple companies, most recently at VMware. He has written multiple reference designs covering VMware, machine learning, Kubernetes, and container solutions which are available at the Mellanox Documents website.