High Availability

UFM HA supports high availability at the host level for UFM Enterprise appliances. The solution uses Pacemaker to monitor services and DRBD to sync file-system state.

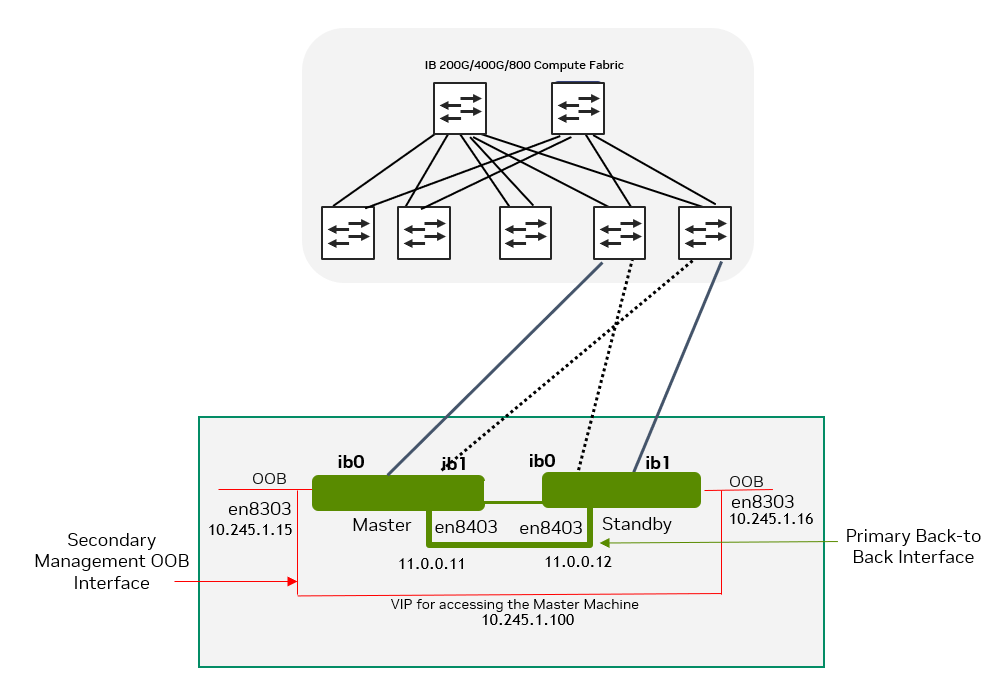

The diagram below describes the connectivity scheme of the UFM High-Availability cluster.

UFM HA should be configured on two appliances, master and standby.

High availability should be configured on the standby node first. When that is complete, configure it on the master node.

Command Usage:

# ufm_ha_cluster config --help

Usage: ufm_ha_cluster config [<options>]

The config command configures ha add-on for ufm server.

Options:

| Option | Description |
|--------|-------------|
| -r \| --role <node role> | Node role (master or standby) - Mandatory |
| -e \| --peer-primary-ip <ip address> | Peer node primary IP address - Mandatory |
| -l \| --local-primary-ip <ip address> | Local node primary IP address - Mandatory |
| -E \| --peer-secondary-ip <ip address> | Peer node secondary IP address - Mandatory |
| -L \| --local-secondary-ip <ip address> | Local node secondary IP address - Mandatory |
| -i \| --virtual-ip <virtual-ip> OR --no-vip | Cluster virtual IP, OR do not create a virtual IP resource (mutually exclusive with the virtual-IP option). One of the two options is mandatory |
| -p \| --hacluster-pwd <pwd> | hacluster user password - Mandatory |
| -f \| --ha-config-file <file path> | HA configuration file - The default is ufm-ha.conf |
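The option rules in the table above can be checked before anything is run. The following is a minimal sketch, not part of the UFM tooling: `build_ha_config_cmd` is a hypothetical helper that prints a `ufm_ha_cluster config` command line and rejects an invalid role or a missing virtual-IP choice. The `HA_CLUSTER_PWD` variable name is also an assumption; it is printed as a reference so the password itself is never echoed.

```shell
#!/bin/sh
# Hypothetical helper, not part of UFM: print a "ufm_ha_cluster config"
# command line after checking the option rules from the table above.
# Arguments: <role> <local-primary-ip> <peer-primary-ip>
#            <local-secondary-ip> <peer-secondary-ip> <virtual-ip | --no-vip>
# The password is left as a reference to $HA_CLUSTER_PWD (an assumed
# variable name) so that it is never echoed.
build_ha_config_cmd() {
    if [ "$#" -ne 6 ]; then
        echo "error: expected 6 arguments, got $#" >&2
        return 2
    fi

    # The role must be one of the two values the tool accepts.
    case "$1" in
        master|standby) ;;
        *) echo "error: role must be 'master' or 'standby'" >&2; return 2 ;;
    esac

    # Exactly one of --virtual-ip / --no-vip: the last argument is either
    # the literal flag --no-vip or a non-empty virtual IP address.
    case "$6" in
        --no-vip) vip_opt="--no-vip" ;;
        "")       echo "error: give a virtual IP or --no-vip" >&2; return 2 ;;
        *)        vip_opt="--virtual-ip $6" ;;
    esac

    printf '%s\n' "ufm_ha_cluster config -r $1 --local-primary-ip $2 --peer-primary-ip $3 --local-secondary-ip $4 --peer-secondary-ip $5 $vip_opt --hacluster-pwd \"\$HA_CLUSTER_PWD\""
}
```

Because the helper only prints the command, the result can be reviewed before it is run on the standby node and then on the master node.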

Configure HA with VIP (Virtual IP)

[On Standby Server] Run the following command to configure Standby Server:

ufm_ha_cluster config -r standby \
  --local-primary-ip <local back-to-back IP> \
  --peer-primary-ip <peer back-to-back IP> \
  --local-secondary-ip <local management IP> \
  --peer-secondary-ip <peer management IP> \
  --virtual-ip <virtual management IP used for accessing the master node> \
  --hacluster-pwd <password>

[On Master Server] Run the following command to configure Master Server:

ufm_ha_cluster config -r master \
  --local-primary-ip <local back-to-back IP> \
  --peer-primary-ip <peer back-to-back IP> \
  --local-secondary-ip <local management IP> \
  --peer-secondary-ip <peer management IP> \
  --virtual-ip <virtual management IP used for accessing the master node> \
  --hacluster-pwd <password>

Alternatively, you can run the CLI command ufm ha configure.

After configuration, you must wait for the DRBD sync to finish before starting the UFM cluster. To check the DRBD sync status, run:

ufm_ha_cluster status
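The wait described above can be scripted as a polling loop. The sketch below is an illustration, not part of UFM: `wait_for_drbd_sync` is a hypothetical helper, and it assumes that the `ufm_ha_cluster status` output contains the DRBD disk state `UpToDate` once synchronization is complete and `Inconsistent` while a node is still syncing. Those are standard DRBD disk-state names, but the exact status output format is not described here, so check the patterns against the output on your own system.

```shell
#!/bin/sh
# Hypothetical helper, not part of UFM: poll "ufm_ha_cluster status" until
# DRBD looks synchronized or a timeout expires.
# ASSUMPTION: the status output mentions the DRBD disk state "UpToDate" when
# sync is complete and "Inconsistent" while a node is still syncing.
# Arguments: [timeout-seconds (default 3600)] [poll-interval-seconds (default 10)]
wait_for_drbd_sync() {
    timeout=${1:-3600}
    interval=${2:-10}
    elapsed=0
    while :; do
        # Capture the status text; a failing status call is treated as "not ready".
        status=$(ufm_ha_cluster status 2>&1) || true
        if printf '%s\n' "$status" | grep -q 'UpToDate' &&
           ! printf '%s\n' "$status" | grep -q 'Inconsistent'; then
            echo "DRBD sync appears complete"
            return 0
        fi
        if [ "$elapsed" -ge "$timeout" ]; then
            echo "timed out after ${elapsed}s waiting for DRBD sync" >&2
            return 1
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
}
```

For example, `wait_for_drbd_sync 7200 30 && ufm_ha_cluster start` would start the cluster only after the check passes, polling every 30 seconds for up to two hours.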

Configure HA without VIP (on a Dual Subnet)

Please change the variables in the commands below based on your setup.

[On Standby Server] Run the following command to configure Standby Server:

ufm_ha_cluster config -r standby \
  --local-primary-ip <local back-to-back IP> \
  --peer-primary-ip <peer back-to-back IP> \
  --local-secondary-ip <local management IP> \
  --peer-secondary-ip <peer management IP> \
  --hacluster-pwd <password> \
  --no-vip

[On Master Server] Run the following command to configure Master Server:

ufm_ha_cluster config -r master \
  --local-primary-ip <local back-to-back IP> \
  --peer-primary-ip <peer back-to-back IP> \
  --local-secondary-ip <local management IP> \
  --peer-secondary-ip <peer management IP> \
  --hacluster-pwd <password> \
  --no-vip

Alternatively, you can run the CLI command ufm ha configure dual-subnet.

After configuration, you must wait for the DRBD sync to finish before starting the UFM cluster. To check the DRBD sync status, run:

ufm_ha_cluster status

To manage the HA cluster, use the ufm_ha_cluster tool.

ufm_ha_cluster Usage

# ufm_ha_cluster --help
===================================================================
UFM-HA version: 5.3.0-17
-------------------------------------------------------------------
Usage: ufm_ha_cluster [-h|--help] <command> [<options>]

This script manages UFM HA cluster.

OPTIONS:
  -h|--help          Show this message

COMMANDS:
  version            HA cluster version
  config             Configure HA cluster
  cleanup            Remove HA configurations
  status             Check HA cluster status
  failover           Master node failover
  takeover           Standby node takeover
  start              Start HA services
  stop               Stop HA services
  detach             Detach the standby from cluster
  attach             Attach a new standby to cluster
  enable-maintain    Enable maintenance to cluster
  disable-maintain   Disable maintenance to cluster
  reset              Reset DRBD connectivity from split-brain
  is-master          check if the current node is a master
  is-running         check if ufm services are running
  is-ha              Check if running in HA mode

For further information on each command, run:

ufm_ha_cluster <command> --help

To check UFM HA cluster status, run:

ufm_ha_cluster status

To start the UFM HA cluster, run:

ufm_ha_cluster start

To stop the UFM HA cluster, run:

ufm_ha_cluster stop

To make the master appliance become the standby appliance, execute the failover command on the master appliance. Run:

ufm_ha_cluster failover

To make the standby appliance become the master appliance, execute the takeover command on the standby appliance. Run:

ufm_ha_cluster takeover
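Since failover runs on the master and takeover runs on the standby, a script can choose between them from the node's current role. The sketch below is hypothetical and rests on one unverified assumption: that `ufm_ha_cluster is-master` exits with status 0 on the master node and non-zero otherwise. The help text only says that the command checks whether the current node is a master, so confirm the exit-status behavior before relying on it. `switch_role_cmd` is an illustrative name, and it only prints the command that applies.

```shell
#!/bin/sh
# Hypothetical helper, not part of UFM: print which role-switch command
# applies to this node.
# ASSUMPTION: "ufm_ha_cluster is-master" exits 0 on the master node and
# non-zero on the standby node; the help text does not state this.
switch_role_cmd() {
    if ufm_ha_cluster is-master >/dev/null 2>&1; then
        # On the master: failover makes this node the standby.
        echo "ufm_ha_cluster failover"
    else
        # On the standby: takeover makes this node the master.
        echo "ufm_ha_cluster takeover"
    fi
}
```

Printing instead of executing keeps the role switch a deliberate operator action.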

For additional information on configuring UFM HA, refer to Installing UFM Server Software for High Availability. Since the UFM HA package and related components (i.e., Pacemaker and DRBD) are already deployed, follow the instructions from step 6 (Configure HA from the main server) onward.