NVIDIA BlueField Container Deployment Guide

This guide provides an overview and deployment configuration of DOCA containers for the NVIDIA® BlueField® DPU.

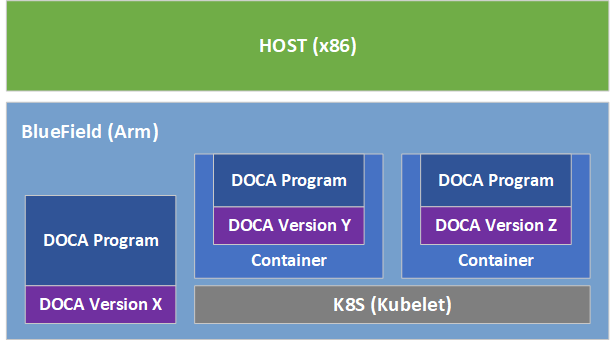

DOCA containers allow for easy deployment of ready-made DOCA environments to the DPU, whether it is a DOCA service bundled inside a container and ready to be deployed, or a development environment already containing the desired DOCA version.

Containerized environments enable users to decouple DOCA programs from the underlying BlueField software. Each container is pre-built with all the libraries and configurations needed to match the specific DOCA version of the program at hand. Users only need to pick the desired version of the service and pull the ready-made container of that version from NVIDIA's container catalog.

The different DOCA containers are listed on NGC, NVIDIA's container catalog, and can be found under both the "DOCA" and "DPU" labels.

Refer to the NVIDIA DOCA Installation Guide for Linux for details on how to install BlueField-related software.

The required BlueField image version is 3.9.0 or higher.

Container deployment based on standalone Kubelet, as presented in this guide, is currently in alpha version and is subject to change in future releases.

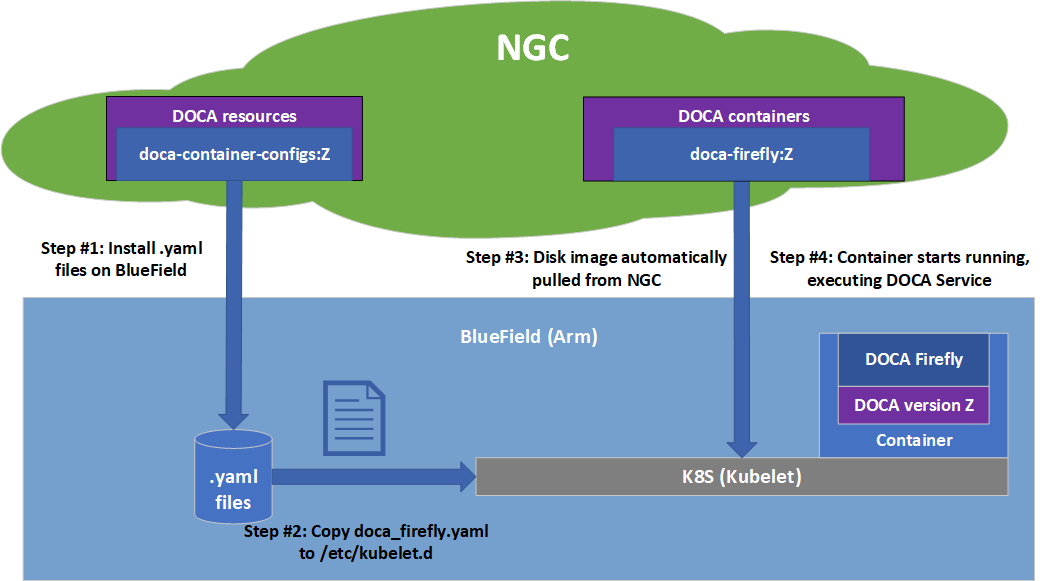

Deploying containers on top of the BlueField DPU requires the following setup sequence:

Pull the container .yaml configuration files.

Modify the container's .yaml configuration file.

Deploy the container. The image is automatically pulled from NGC.

Some of the steps only need to be performed once, while others are required before the deployment of each container.

What follows is an example of the overall setup sequence, using the DOCA Firefly container.

Pull Container YAML Configurations

This step pulls the .yaml configurations from NGC. If you have already performed this step for other DOCA containers, you may skip to the next section.

To pull the latest resource version:

Pull the entire resource as a *.zip file:

wget https://api.ngc.nvidia.com/v2/resources/nvidia/doca/doca_container_configs/versions/2.7.0v2/zip -O doca_container_configs_2.7.0v2.zip

Unzip the resource:

unzip -o doca_container_configs_2.7.0v2.zip -d doca_container_configs_2.7.0v2

More information about additional versions can be found in the NGC resource page.
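The two download steps above can be wrapped in a small shell function so that a different resource version only needs to be typed once. This is a minimal sketch: the function names doca_configs_url and fetch_doca_configs are hypothetical and not part of the NGC resource, and the URL pattern is assumed to stay the same as in the 2.7.0v2 example.

```shell
# Hypothetical helpers (not shipped with the DOCA resource).
# Assumes the NGC URL pattern used in the 2.7.0v2 example above.
NGC_CONFIGS_BASE="https://api.ngc.nvidia.com/v2/resources/nvidia/doca/doca_container_configs/versions"

# Print the download URL for a resource version such as "2.7.0v2".
doca_configs_url() {
    printf '%s/%s/zip\n' "$NGC_CONFIGS_BASE" "$1"
}

# Download doca_container_configs_<version>.zip and extract it into
# ./doca_container_configs_<version>, mirroring the wget and unzip commands above.
fetch_doca_configs() {
    local version="$1" name
    name="doca_container_configs_${version}"
    wget "$(doca_configs_url "$version")" -O "${name}.zip" &&
        unzip -o "${name}.zip" -d "$name"
}
```

For example, fetch_doca_configs 2.7.0v2 reproduces the two commands shown above.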

Container-specific Instructions

Some containers require specific configuration steps for the resources used by the application running inside the container and modifications for the .yaml configuration file of the container itself.

Refer to the container-specific instructions listed under the container's relevant page on NGC.

Structure of NGC Resource

The DOCA NGC resource downloaded in section "Pull Container YAML Configurations" contains a configs directory with a dedicated folder per DOCA version. For example, the 2.0.2 folder includes all currently available .yaml configuration files for DOCA 2.0.2 containers.

doca_container_configs_2.0.2v1
├── configs
│   ├── 1.2.0
│   │   ...
│   └── 2.0.2
│       ├── doca_application_recognition.yaml
│       ├── doca_blueman.yaml
│       ├── doca_devel.yaml
│       ├── doca_devel_cuda.yaml
│       ├── doca_firefly.yaml
│       ├── doca_flow_inspector.yaml
│       ├── doca_hbn.yaml
│       ├── doca_ips.yaml
│       ├── doca_snap.yaml
│       ├── doca_telemetry.yaml
│       └── doca_url_filter.yaml

In addition, the resource contains a scripts directory, under which services may provide additional helper scripts and configuration files to use with their services.

The folder structure of the scripts directory is as follows:

+ doca_container_configs_2.0.2v1
+-+ configs
| +-- ...
+-+ scripts
  +-+ doca_firefly        <== Name of DOCA Service
  +-+ doca_hbn            <== Name of DOCA Service
    +-+ 1.3.0
    | +-- ...             <== Files for the DOCA HBN version "1.3.0"
    +-+ 1.4.0
      +-- ...             <== Files for the DOCA HBN version "1.4.0"

A user wishing to deploy an older version of the DOCA service would still have access to the suitable YAML file (per DOCA release under configs) and scripts (under the service-specific version folder which resides under scripts).
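Given the layout above, locating the right files for an older release can be scripted. The sketch below assumes the doca_<service>.yaml and scripts/doca_<service>/<version>/ naming shown in the two trees; the helpers doca_yaml_path and doca_script_versions are hypothetical.

```shell
# Hypothetical helpers for navigating an extracted doca_container_configs resource.
# Assumes the configs/<release>/doca_<service>.yaml and
# scripts/doca_<service>/<version>/ layout shown in the trees above.

# Print the YAML path for service $3 in DOCA release $2 under resource root $1.
doca_yaml_path() {
    local root="$1" release="$2" service="$3" yaml
    yaml="${root}/configs/${release}/doca_${service}.yaml"
    if [ -f "$yaml" ]; then
        printf '%s\n' "$yaml"
    else
        echo "no YAML for ${service} in DOCA ${release}" >&2
        return 1
    fi
}

# List the per-version script folders that service $2 provides under root $1
# (prints nothing if the service ships no helper scripts).
doca_script_versions() {
    local root="$1" service="$2" dir d
    dir="${root}/scripts/doca_${service}"
    [ -d "$dir" ] || return 0
    for d in "$dir"/*/; do
        if [ -d "$d" ]; then
            basename "$d"
        fi
    done
}
```

For example, doca_yaml_path doca_container_configs_2.0.2v1 2.0.2 hbn prints the DOCA HBN YAML path for DOCA 2.0.2, and doca_script_versions doca_container_configs_2.0.2v1 hbn lists 1.3.0 and 1.4.0.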

Spawn Container

Once the desired .yaml file is updated, simply copy the configuration file to Kubelet's input folder. Here is an example using the doca_firefly.yaml, corresponding to the DOCA Firefly service.

sudo cp doca_firefly.yaml /etc/kubelet.d

Kubelet automatically pulls the container image from NGC and spawns a pod executing the container. In this example, the DOCA Firefly service starts executing right away, and its output can be viewed in the container's logs.
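A small wrapper can catch obvious mistakes, such as a missing or misnamed file, before anything is handed to Kubelet. This is a sketch only: deploy_doca_yaml and the KUBELET_DIR override are hypothetical, and the default of /etc/kubelet.d comes from the command above.

```shell
# Hypothetical helper: copy an edited YAML file into Kubelet's input folder.
# KUBELET_DIR defaults to /etc/kubelet.d (the folder used in this guide) and
# may be overridden; writing to /etc/kubelet.d requires root privileges.
KUBELET_DIR="${KUBELET_DIR:-/etc/kubelet.d}"

deploy_doca_yaml() {
    local yaml="$1"
    # Guard against passing the wrong file (e.g., the downloaded .zip).
    case "$yaml" in
        *.yaml) ;;
        *) echo "expected a .yaml file, got: $yaml" >&2; return 1 ;;
    esac
    if [ ! -f "$yaml" ]; then
        echo "file not found: $yaml" >&2
        return 1
    fi
    cp "$yaml" "$KUBELET_DIR/"
}
```

Run it from a root shell, for example deploy_doca_yaml doca_firefly.yaml.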

Review Container Deployment

When deploying a new container, it is recommended to follow this procedure to ensure successful completion of each step in the deployment:

View currently active pods and their IDs:

sudo crictl pods

Info: It may take up to 20 seconds for the pod to start.

When deploying a new container, search for a matching line in the command's output:

POD ID          CREATED         STATE   NAME                  NAMESPACE   ATTEMPT   RUNTIME
06bd84c07537e   4 seconds ago   Ready   doca-firefly-my-dpu   default     0         (default)

If a matching line fails to appear, it is recommended to view Kubelet's logs to get more information about the error:

sudo journalctl -u kubelet --since -5m

Once the issue is resolved, proceed to the next steps.

Info: For more troubleshooting information and tips, refer to the matching section in our Troubleshooting Guide.

Verify that the container image is successfully downloaded from NGC into the DPU's container registry (download time may vary based on the size of the container image):

sudo crictl images

Example output:

IMAGE                              TAG               IMAGE ID        SIZE
k8s.gcr.io/pause                   3.2               2a060e2e7101d   251kB
nvcr.io/nvidia/doca/doca_firefly   1.1.0-doca2.0.2   134cb22f34611   87.4MB

View currently active containers and their IDs:

sudo crictl ps

Once again, find a matching line for the deployed container (boot time may vary depending on the container's image size):

CONTAINER       IMAGE           CREATED         STATE     NAME           ATTEMPT   POD ID          POD
b505a05b7dc23   134cb22f34611   4 minutes ago   Running   doca-firefly   0         06bd84c07537e   doca-firefly-my-dpu

If no line matches the container, check the list of all recent container deployments:

sudo crictl ps -a

It is possible that the container encountered an error during boot and exited right away:

CONTAINER       IMAGE           CREATED        STATE    NAME           ATTEMPT   POD ID          POD
de2361ec15b61   134cb22f34611   1 second ago   Exited   doca-firefly   1         4aea5f5adc91d   doca-firefly-my-dpu

During the container's lifetime, and for a short time after it exits, one can view the container's logs as printed to standard output:

sudo crictl logs <container-id>

In this case, the user can learn from the log that the wrong configuration was passed to the container:

$ sudo crictl logs de2361ec15b61
Starting DOCA Firefly - Version 1.1.0...
Requested the following PTP interface: p10
Failed to find interface "p10". Aborting

For additional information and guides on using crictl, refer to the Kubernetes documentation.
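The pod and container checks above can be scripted, which is convenient when the same service is redeployed repeatedly. The sketch below assumes crictl's --name, --state, and -q filters, and that crictl ps lists the newest container first; wait_for_pod and latest_container_logs are hypothetical helper names, and the 20-second default mirrors the start-up time mentioned above.

```shell
# Hypothetical helpers wrapping the crictl checks above.
# Run from a root shell: crictl needs access to the container runtime socket.

# Poll until a Ready pod whose name matches $1 appears, for up to $2 seconds
# (default 20). Prints the first matching pod ID on success.
wait_for_pod() {
    local name="$1" timeout="${2:-20}" id
    while [ "$timeout" -gt 0 ]; do
        id=$(crictl pods --name "$name" --state ready -q | head -n 1)
        if [ -n "$id" ]; then
            printf '%s\n' "$id"
            return 0
        fi
        sleep 1
        timeout=$((timeout - 1))
    done
    echo "pod '$name' is not Ready; see: journalctl -u kubelet --since -5m" >&2
    return 1
}

# Print the logs of the most recent container whose name matches $1,
# including exited ones (-a). Assumes crictl lists the newest container first.
latest_container_logs() {
    local id
    id=$(crictl ps -a --name "$1" -q | head -n 1)
    if [ -z "$id" ]; then
        echo "no container matching '$1'" >&2
        return 1
    fi
    crictl logs "$id"
}
```

For example, wait_for_pod doca-firefly-my-dpu confirms the pod is up, and latest_container_logs doca-firefly then shows the output of the most recent attempt, including an exited one such as de2361ec15b61 above.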

Stop Container

The recommended way to stop a pod and its containers is as follows:

Delete the .yaml configuration file for Kubelet to stop the pod:

sudo rm /etc/kubelet.d/<file name>.yaml

Stop the pod directly (only if it still shows "Ready"):

sudo crictl stopp <pod-id>

Once the pod stops, it may also be necessary to stop the container itself:

sudo crictl stop <container-id>
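The three stop steps can be combined into one function. This is a hedged sketch: stop_doca_service and the KUBELET_DIR override are hypothetical, and it assumes the pod and container names are known (for DOCA Firefly in the examples above, doca-firefly-my-dpu and doca-firefly).

```shell
# Hypothetical helper combining the three stop steps above.
# KUBELET_DIR is an assumed override; run from a root shell on the DPU.
KUBELET_DIR="${KUBELET_DIR:-/etc/kubelet.d}"

# Usage: stop_doca_service <yaml file name> <pod name> <container name>
stop_doca_service() {
    local yaml="$1" pod_name="$2" container_name="$3" id
    # Step 1: removing the YAML file makes Kubelet stop the pod.
    rm -f "$KUBELET_DIR/$yaml"
    # Step 2: stop the pod directly only if it is still Ready.
    for id in $(crictl pods --name "$pod_name" --state ready -q); do
        crictl stopp "$id"
    done
    # Step 3: stop any matching container that is still running
    # (crictl ps without -a lists running containers only).
    for id in $(crictl ps --name "$container_name" -q); do
        crictl stop "$id"
    done
}
```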

This section provides a list of common errors that may be encountered when spawning a container. These account for the vast majority of deployment errors and are easy to check before parsing the Kubelet journal log.

If more troubleshooting is required, refer to the matching section in the Troubleshooting Guide.

YAML Syntax

The syntax of the .yaml file is extremely sensitive, and minor indentation changes may cause it to stop working. The file uses spaces (' ') for indentation, two per indentation level. Using any other number of spaces results in undefined behavior.
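Because indentation errors are the most common YAML problem, a quick heuristic check before copying the file to /etc/kubelet.d can save a trip through the Kubelet journal. The sketch below is not a YAML parser: check_yaml_indent is a hypothetical helper that only flags tab characters in indentation and odd numbers of leading spaces, assuming the two-space convention described above (multi-line string blocks with other indentation would be reported as false positives).

```shell
# Hypothetical pre-flight check (a heuristic, not a YAML parser).
# Reports lines indented with tabs, or with a number of leading spaces that is
# not a multiple of two. Exits non-zero if any such line is found.
check_yaml_indent() {
    awk '
        /^[ ]*\t/ {
            printf "%s:%d: tab in indentation\n", FILENAME, NR
            bad = 1
            next
        }
        {
            match($0, /^ */)            # RLENGTH = number of leading spaces
            if (RLENGTH % 2 != 0) {
                printf "%s:%d: %d leading spaces\n", FILENAME, NR, RLENGTH
                bad = 1
            }
        }
        END { exit bad }
    ' "$1"
}
```

For example, check_yaml_indent doca_firefly.yaml prints nothing and succeeds when every line follows the two-space convention.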

Huge Pages

The container only spawns once all the required system resources are allocated on the DPU and can be reserved for the container. The most notable resource is huge pages.

Before deploying the container, make sure that:

Huge pages are allocated as required per container.

Both the amount and size of pages match the requirements precisely.

Once huge pages are allocated, it is recommended to restart the Kubelet and containerd services to apply the change:

sudo systemctl restart kubelet.service
sudo systemctl restart containerd.service

Once the above operations complete successfully, the container can be deployed (i.e., its YAML file can be copied to /etc/kubelet.d).
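To compare the current allocation with a container's requirements, the kernel's huge page counters can be read from /proc/meminfo. The hugepages_summary helper below is a hypothetical sketch; the required amount and page size for each container come from that container's own instructions on NGC and are not computed here.

```shell
# Hypothetical helper: summarize the kernel's huge page counters.
# Reads /proc/meminfo by default; a different file may be passed for testing.
hugepages_summary() {
    awk '
        /^HugePages_Total:/ { total = $2 }
        /^HugePages_Free:/  { free = $2 }
        /^Hugepagesize:/    { size = $2 " " $3 }   # e.g. "2048 kB"
        END { printf "total=%s free=%s size=%s\n", total, free, size }
    ' "${1:-/proc/meminfo}"
}
```

Running hugepages_summary on the DPU before and after allocating pages shows whether both the page count and the page size match what the container requests.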

Manual Execution from Within Container - Debugging

The deployment described in this section requires in-depth knowledge of the container's structure. As this structure might change from version to version, this deployment is recommended only for debugging, and only after other debugging steps have been attempted.

Although most containers define the entrypoint.sh script as the container's ENTRYPOINT, this option is only valid for interaction-less sessions. In some debugging scenarios, it is useful to have better control of the programs executed within the container via an interactive shell session. Hence, the .yaml file supports an additional execution option.

Uncommenting (i.e., removing # from) the following 2 lines in the .yaml file causes the container to boot without spawning the container's entrypoint script.

# command: ["sleep"]

# args: ["infinity"]

In this execution mode, users can attach a shell to the spawned container:

sudo crictl exec -it <container-id> /bin/bash

Once attached, users get a full shell session that lets them execute the container's internal programs directly within the container.
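The two lines can also be uncommented with sed, which is handy when toggling debug mode repeatedly. This is a sketch under stated assumptions: enable_debug_shell is a hypothetical helper, it assumes the commented lines look exactly like the ones shown above (indentation, then "# ", then the key), and it relies on GNU sed's -i and -E options.

```shell
# Hypothetical helper: uncomment the two debug lines in a container YAML so the
# container boots into "sleep infinity" instead of its entrypoint script.
# Assumes lines of the form '<indent># command: ["sleep"]' and
# '<indent># args: ["infinity"]'; the indentation before '#' is preserved.
# Requires GNU sed (-i edits the file in place, -E enables extended regexes).
enable_debug_shell() {
    sed -i -E 's/^([[:space:]]*)# (command: \["sleep"]|args: \["infinity"])/\1\2/' "$1"
}
```

After editing, redeploy the YAML file and attach to the container with the crictl exec command above.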

Container deployment on the BlueField DPU can be done in air-gapped networks and does not require an Internet connection. As explained previously, each DOCA service container requires two components for successful deployment:

Container image – hosted on NVIDIA's NGC catalog

YAML file for the container

From an infrastructure perspective, one additional module is required:

k8s.gcr.io/pause container image

Pulling Container for Offline Deployment

When preparing an air-gapped environment, users must pull the required container images in advance so they can be imported locally on the target machine:

docker pull <container-image:tag>

docker save <container-image:tag> > <name>.tar

The following example pulls DOCA Firefly 1.1.0-doca2.0.2:

docker pull nvcr.io/nvidia/doca/doca_firefly:1.1.0-doca2.0.2

docker save nvcr.io/nvidia/doca/doca_firefly:1.1.0-doca2.0.2 > firefly_v1.1.0.tar

Some of DOCA's container images support multiple architectures, causing the docker pull command to pull the image according to the architecture of the machine on which it is invoked. Users may force the operation to pull an Arm image by passing the --platform flag:

docker pull --platform=linux/arm64 <container-image:tag>
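The pull and save commands above can be combined into one function that also derives the output file name. In this hypothetical sketch, image_tar_name and export_image are illustrative names, and the <image>_<tag>.tar naming scheme is an assumption (the guide itself uses names such as firefly_v1.1.0.tar).

```shell
# Hypothetical helpers for preparing images for an air-gapped DPU.

# Derive a .tar name from an image reference, e.g.
# nvcr.io/nvidia/doca/doca_firefly:1.1.0-doca2.0.2 -> doca_firefly_1.1.0-doca2.0.2.tar
image_tar_name() {
    local ref="${1##*/}"    # drop the registry and repository path
    printf '%s.tar\n' "$(printf '%s' "$ref" | tr ':' '_')"
}

# Pull image $1 (optionally for platform $2, e.g. linux/arm64), save it to a
# .tar in the current directory, and print the file name.
export_image() {
    local image="$1" platform="$2" out
    out=$(image_tar_name "$image")
    if [ -n "$platform" ]; then
        docker pull --platform="$platform" "$image" || return 1
    else
        docker pull "$image" || return 1
    fi
    docker save "$image" > "$out" && printf '%s\n' "$out"
}
```

For example, export_image nvcr.io/nvidia/doca/doca_firefly:1.1.0-doca2.0.2 linux/arm64 pulls the Arm variant and writes doca_firefly_1.1.0-doca2.0.2.tar.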

Importing Container Image

After exporting the images from the container catalog, users must copy the created *.tar files to the target machine on which the containers are to be deployed. The import command is as follows:

ctr --namespace k8s.io image import <name>.tar

For example, to import the firefly .tar file pulled in the previous section:

ctr --namespace k8s.io image import firefly_v1.1.0.tar

To verify that the import succeeded, list the images known to the container runtime:

crictl images
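When several images are transferred at once, the import can be looped over a directory. The import_images helper below is hypothetical; it uses the same ctr command and k8s.io namespace as above (the containerd namespace from which the Kubernetes CRI integration reads images) and should be run with root privileges on the target machine.

```shell
# Hypothetical helper: import every *.tar in directory $1 (default: current
# directory) into containerd's k8s.io namespace, then list the images.
import_images() {
    local dir="${1:-.}" tar found=0
    for tar in "$dir"/*.tar; do
        [ -f "$tar" ] || continue     # no match: the glob stays unexpanded
        found=1
        ctr --namespace k8s.io image import "$tar" || return 1
    done
    if [ "$found" -eq 0 ]; then
        echo "no .tar files in $dir" >&2
        return 1
    fi
    crictl images
}
```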

Built-in Infrastructure Support

The DOCA image comes pre-shipped with the k8s.gcr.io/pause image:

/opt/mellanox/doca/services/infrastructure/
├── docker_pause_3_2.tar
└── enable_offline_containers.sh

This image is imported by default during boot as part of the automatic activation of DOCA Telemetry Service (DTS).

Importing the image independently of DTS can be done using the enable_offline_containers.sh script located in the same directory as the image's *.tar file.

In versions prior to DOCA 4.2.0, this image can be pulled and imported as follows:

Exporting the image:

docker pull k8s.gcr.io/pause:3.2
docker save k8s.gcr.io/pause:3.2 > docker_pause_3_2.tar

Importing the image:

ctr --namespace k8s.io image import docker_pause_3_2.tar
crictl images

Example output:

IMAGE              TAG   IMAGE ID        SIZE
k8s.gcr.io/pause   3.2   2a060e2e7101d   487kB
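On a DPU where it is unclear whether the bundled pause image was already imported, the import can be made conditional. This is a hedged sketch, not the behavior of enable_offline_containers.sh: ensure_pause_image and the INFRA_DIR override are hypothetical, while the default path and file name come from the directory listing above.

```shell
# Hypothetical helper: import the bundled pause image only if the runtime does
# not already list it. INFRA_DIR is an assumed override; the default directory
# and file name come from the listing above. Run from a root shell on the DPU.
INFRA_DIR="${INFRA_DIR:-/opt/mellanox/doca/services/infrastructure}"

ensure_pause_image() {
    # crictl images prints one image per line, repository name first.
    if crictl images | grep -q '^k8s\.gcr\.io/pause[[:space:]]'; then
        echo "pause image already present"
        return 0
    fi
    ctr --namespace k8s.io image import "$INFRA_DIR/docker_pause_3_2.tar"
}
```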

A subset of the DOCA services is also available for host-based deployment. This is indicated in those services' deployment guides, and such services can also be identified by NGC container tags with the *-host suffix.

In contrast to the managed DPU environment, the deployment of DOCA services on the host is based on Docker. Users can extend this deployment further to fit their own container runtime solution.

Docker Deployment

DOCA services for the host are deployed directly using Docker.

Make sure Docker is installed on your host. Run:

docker version

If it is not installed, visit the official Install Docker Engine webpage for installation instructions.

Make sure the Docker service is started. Run:

sudo systemctl daemon-reload
sudo systemctl start docker

Pull the container image directly from NGC (can also be done using the docker run command):

Visit the NGC page of the desired container.

Under the "Tags" menu, select the desired tag and click the paste icon so it is copied to the clipboard.

The docker pull command will be as follows:

sudo docker pull <NGC container tag here>

For example:

sudo docker pull nvcr.io/nvidia/doca/doca_firefly:1.1.0-doca2.0.2-host

Note: For DOCA services with deployments on both DPU and host, make sure to select the tag ending with -host.

Deploy the DOCA service using Docker:

The deployment is performed using the following command:

sudo docker run --privileged --net=host -v <host directory>:<container directory> -e <env variables> -it <container tag> /entrypoint.sh

Info: For more information, refer to Docker's official documentation.

The specific deployment command for each DOCA service is listed in their respective deployment guide.
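The pull and run steps can be combined, with a guard for the -host tag requirement from the note above. This is a minimal sketch: require_host_tag and run_doca_host_service are hypothetical helpers, and the service-specific -v and -e options are passed through unchanged because each service defines its own in its deployment guide.

```shell
# Hypothetical helpers for host-based deployment with Docker.

# Succeed only for tags ending with -host (DPU tags are rejected).
require_host_tag() {
    case "$1" in
        *-host) return 0 ;;
        *) echo "tag does not end with -host: $1" >&2; return 1 ;;
    esac
}

# Usage: run_doca_host_service <container tag> [docker run options...]
run_doca_host_service() {
    local image="$1"
    shift
    require_host_tag "$image" || return 1
    sudo docker pull "$image" || return 1
    # Remaining arguments (e.g. -v host:container, -e VAR=value) are passed through.
    sudo docker run --privileged --net=host "$@" -it "$image" /entrypoint.sh
}
```

For example: run_doca_host_service nvcr.io/nvidia/doca/doca_firefly:1.1.0-doca2.0.2-host followed by the -v and -e options listed in the service's deployment guide.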