DOCA Flow

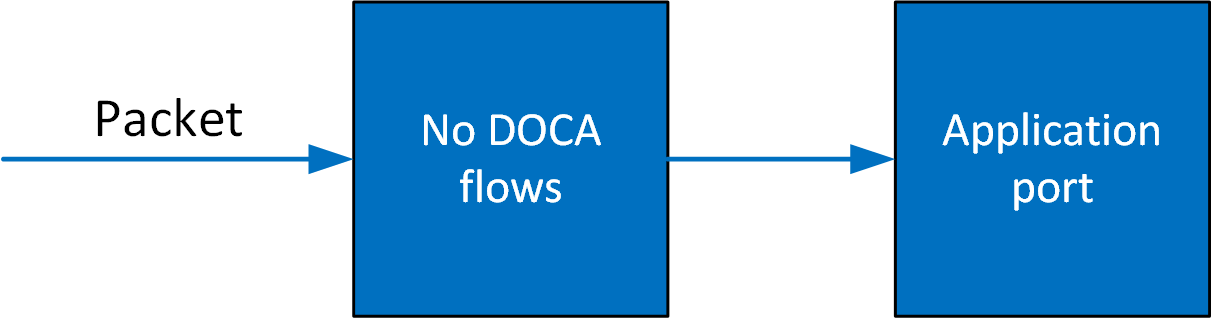

This guide describes how to deploy the DOCA Flow library, the philosophy of the DOCA Flow API, and how to use it. The guide is intended for developers writing network function applications that focus on packet processing (such as gateways). It assumes familiarity with the network stack and DPDK.

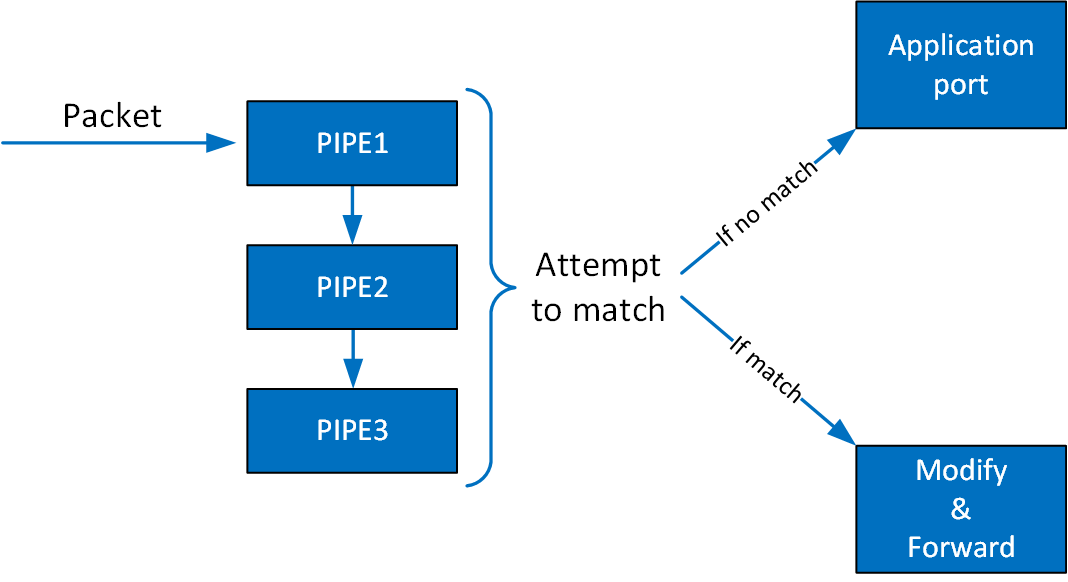

DOCA Flow is the most fundamental API for building generic packet processing pipes in hardware. The DOCA Flow library provides an API for building a set of pipes, where each pipe consists of match criteria, monitoring, and a set of actions. Pipes can be chained so that after a pipe-defined action is executed, the packet may proceed to another pipe.

Using the DOCA Flow API, it is easy to develop hardware-accelerated applications that match on up to two layers of packet headers (outer and, for tunneled packets, inner) for the following protocols and fields:

MAC/VLAN/ETHERTYPE

IPv4/IPv6

TCP/UDP/ICMP

GRE/VXLAN/GTP-U/ESP/PSP

Metadata

The execution pipe can include packet modification actions such as the following:

Modify MAC address

Modify IP address

Modify L4 (ports)

Strip tunnel

Add tunnel

Set metadata

Encrypt/Decrypt

The execution pipe can also have monitoring actions such as the following:

Count

Policers

The pipe also has a forwarding target which can be any of the following:

Software (RSS to a subset of queues)

Port

Another pipe

Drop packets

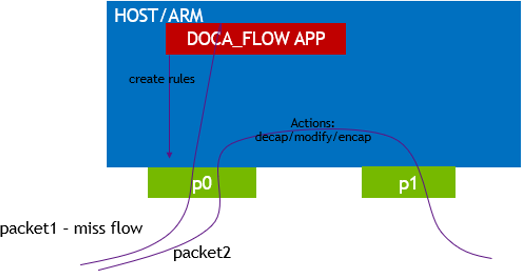

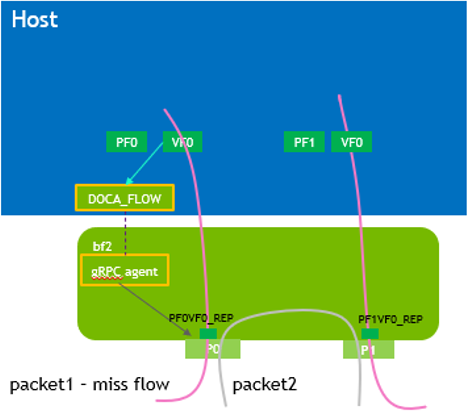

A DOCA Flow-based application can run either on the host machine or on an NVIDIA® BlueField® DPU target. Flow-based programs require an allocation of huge pages, hence the following commands are required:

echo '1024' | sudo tee -a /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

sudo mkdir /mnt/huge

sudo mount -t hugetlbfs nodev /mnt/huge

On some operating systems (RockyLinux, OpenEuler, CentOS 8.2), the default huge page size on the DPU (and on Arm hosts) is larger than 2MB, often 512MB. Users can check the huge page size on their OS using the following command:

$ grep -i huge /proc/meminfo

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

FileHugePages: 0 kB

HugePages_Total: 4

HugePages_Free: 4

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 524288 kB

Hugetlb: 6291456 kB

In this case, instead of allocating 1024 pages, users should only allocate 4 (4 × 512MB = 2GB, the same total as 1024 × 2MB):

echo '4' | sudo tee -a /sys/kernel/mm/hugepages/hugepages-524288kB/nr_hugepages

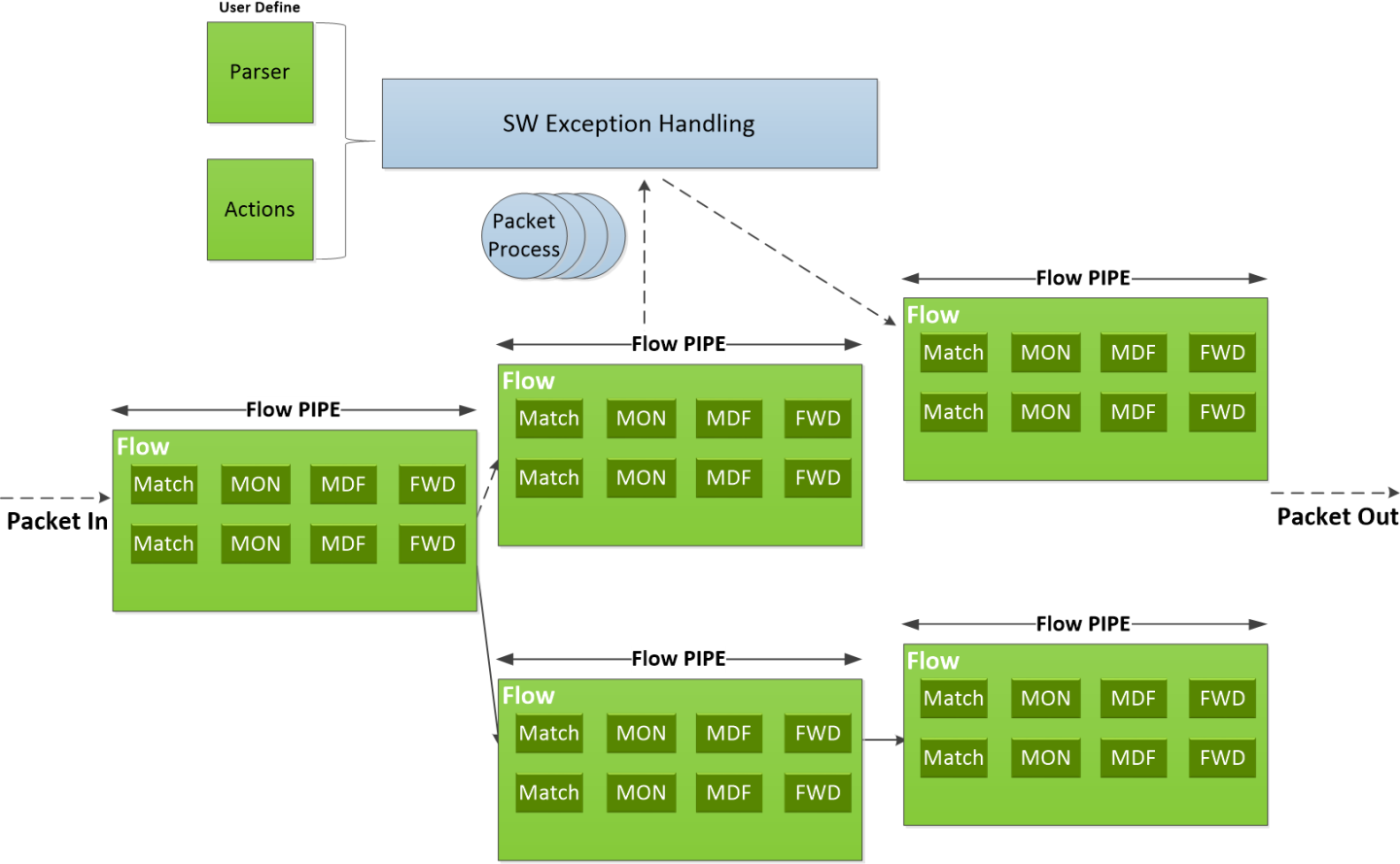

The following diagram shows how the DOCA Flow library defines a pipe template, receives a packet for processing, creates the pipe entry, and offloads the flow rule in NIC hardware.

Features of DOCA Flow:

User-defined set of matches, parser, and actions

DOCA Flow pipes can be created or destroyed dynamically

Packet processing is fully accelerated by hardware for packets that match a specific entry in a flow pipe

Packets that do not match any of the pipe entries in hardware can be sent to Arm cores for exception handling and then reinjected back to hardware

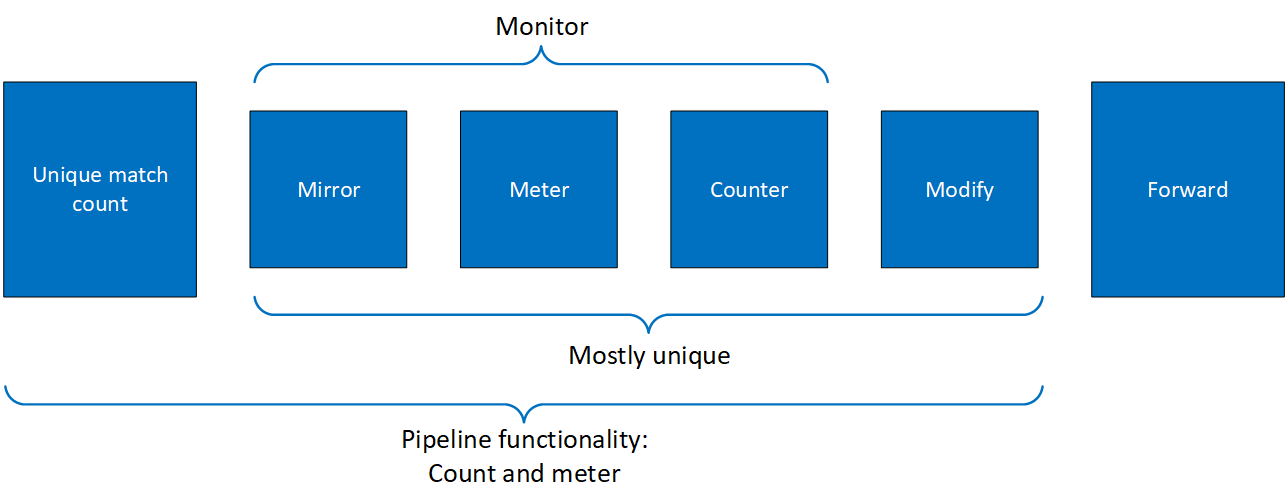

The DOCA Flow pipe consists of the following components:

Monitor (MON in the diagram) - counts, meters, or mirrors

Modify (MDF in the diagram) - modifies a field

Forward (FWD in the diagram) - forwards to the next stage in packet processing

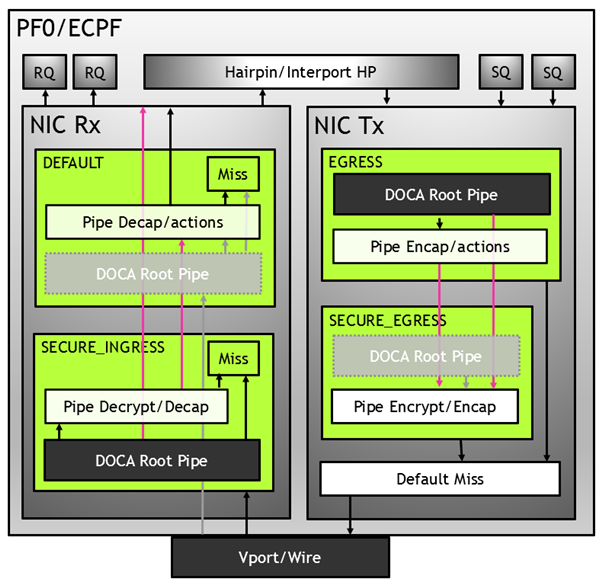

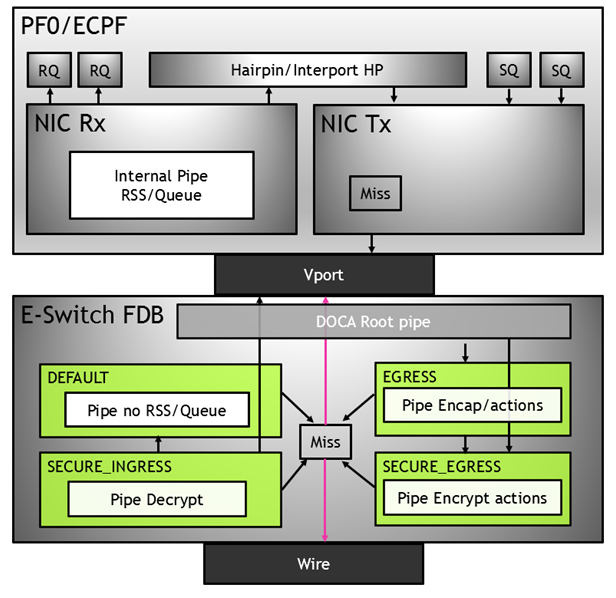

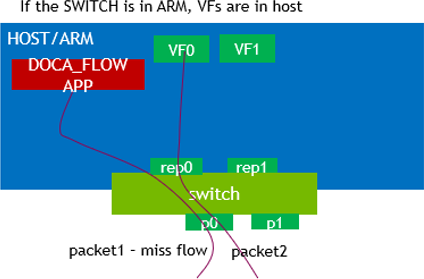

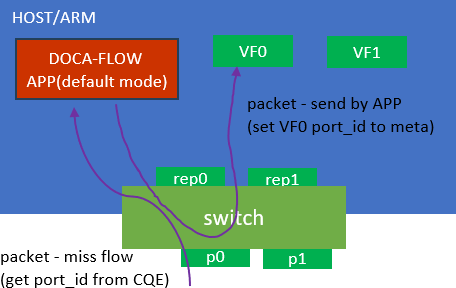

DOCA Flow organizes pipes into high-level containers named domains to address the specific needs of the underlying architecture.

A key element in defining a domain is the packet direction and a set of allowed actions.

A domain is a pipe attribute (also relates to shared objects)

A domain restricts the set of allowed actions

Transition between domains is well-defined (packets cannot cross domains arbitrarily)

A domain may restrict the sharing of objects between packet directions

Packet direction can restrict movement between domains

List of Steering Domains

DOCA Flow provides the following set of predefined steering domains:

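| Domain | Description |
|---|---|
| DOCA_FLOW_PIPE_DOMAIN_DEFAULT | Default pipe domain for actions on ingress traffic |
| DOCA_FLOW_PIPE_DOMAIN_SECURE_INGRESS | Pipe domain for secure actions on ingress traffic |
| DOCA_FLOW_PIPE_DOMAIN_EGRESS | Pipe domain for actions on egress traffic |
| DOCA_FLOW_PIPE_DOMAIN_SECURE_EGRESS | Pipe domain for secure actions on egress traffic |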

Domains in VNF Mode

Domains in Switch Mode

You can find more detailed information on DOCA Flow API in NVIDIA DOCA Library APIs.

The pkg-config (*.pc file) for the DOCA Flow library is doca-flow.

The following sections provide additional details about the library API.

doca_flow_cfg

This structure is required input for the DOCA Flow global initialization function, doca_flow_init. The user must create this structure using doca_error_t doca_flow_cfg_create(struct doca_flow_cfg **cfg) and set its fields using the doca_flow_cfg_set_* functions described below.

doca_error_t doca_flow_cfg_create(struct doca_flow_cfg **cfg);

cfg – DOCA Flow configuration structure

This function creates and allocates the DOCA Flow configuration structure.

doca_error_t doca_flow_cfg_destroy(struct doca_flow_cfg *cfg);

cfg – DOCA Flow configuration structure

This function destroys and frees the DOCA Flow configuration structure.

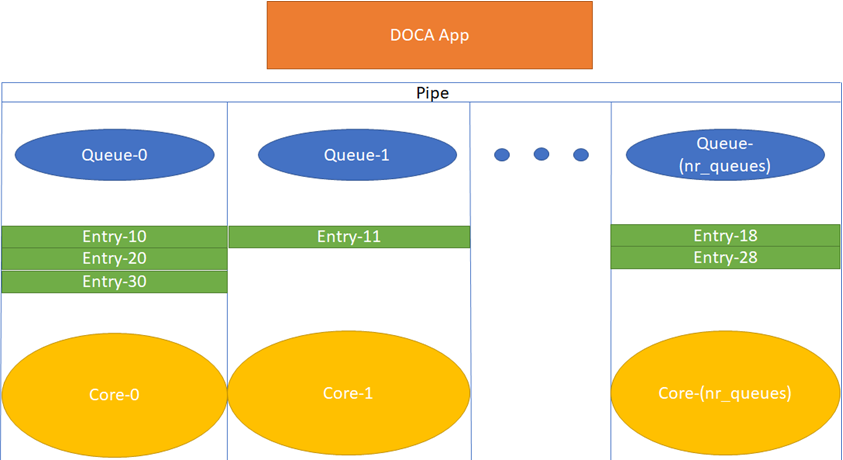

doca_error_t doca_flow_cfg_set_pipe_queues(struct doca_flow_cfg *cfg, uint16_t pipe_queues);

cfg – DOCA Flow configuration structure

pipe_queues – The pipe's number of queues for each offload thread

This function sets the number of hardware acceleration control queues. It is expected for the same core to always use the same queue_id. In cases where multiple cores access the API using the same queue_id, the application must use locks between different cores/threads.

doca_error_t doca_flow_cfg_set_nr_counters(struct doca_flow_cfg *cfg, uint32_t nr_counters);

cfg – DOCA Flow configuration structure

nr_counters – The number of counters to configure

This function sets the number of regular (non-shared) counters to configure.

doca_error_t doca_flow_cfg_set_nr_meters(struct doca_flow_cfg *cfg, uint32_t nr_meters);

cfg – DOCA Flow configuration structure

nr_meters – number of traffic meters to configure

This function sets the number of regular (non-shared) traffic meters to configure.

doca_error_t doca_flow_cfg_set_nr_acl_collisions(struct doca_flow_cfg *cfg, uint8_t nr_acl_collisions);

cfg – DOCA Flow configuration structure

nr_acl_collisions – The number of pre-configured collisions

This function sets the number of collisions for the ACL module.

Maximum value is 8

If not set, then default value is 3

doca_error_t doca_flow_cfg_set_mode_args(struct doca_flow_cfg *cfg, const char *mode_args);

cfg – DOCA Flow configuration structure

mode_args – The DOCA Flow architecture mode

This function sets the DOCA Flow architecture mode.

doca_error_t doca_flow_cfg_set_nr_shared_resource(struct doca_flow_cfg *cfg, uint32_t nr_shared_resource, enum doca_flow_shared_resource_type type);

cfg – DOCA Flow configuration structure

nr_shared_resource – Number of shared resources

type – Shared resource type

This function sets the number of shared resources for the given type:

Type DOCA_FLOW_SHARED_RESOURCE_METER – number of meters that can be shared among flows

Type DOCA_FLOW_SHARED_RESOURCE_COUNT – number of counters that can be shared among flows

Type DOCA_FLOW_SHARED_RESOURCE_RSS – number of RSS configurations that can be shared among flows

Type DOCA_FLOW_SHARED_RESOURCE_IPSEC_SA – number of IPsec SA actions that can be shared among flows

Type DOCA_FLOW_SHARED_RESOURCE_PSP – number of PSP actions that can be shared among flows

See "Shared Counter Resource" section for more information.

doca_error_t doca_flow_cfg_set_queue_depth(struct doca_flow_cfg *cfg, uint32_t queue_depth);

cfg – DOCA Flow configuration structure

queue_depth – The pre-configured queue size

This function sets the number of flow rule operations a queue can hold.

This value is preconfigured at port start (queue_size).

Default value is 128

Passing 0 or not setting the value would set the default value

Maximum value is 1024

doca_error_t doca_flow_cfg_set_cb_pipe_process(struct doca_flow_cfg *cfg, doca_flow_pipe_process_cb cb);

cfg – DOCA Flow configuration structure

cb – Callback for pipe process completion

This function sets the callback function to be called on completion of pipe process operations.

doca_error_t doca_flow_cfg_set_cb_entry_process(struct doca_flow_cfg *cfg, doca_flow_entry_process_cb cb);

cfg – DOCA Flow configuration structure

cb – Callback for entry create/destroy

This function sets the callback for entries, called during doca_flow_entries_process to complete an entry operation (i.e., add, update, delete, and aged). See doca_flow_entry_process_cb.

doca_error_t doca_flow_cfg_set_cb_shared_resource_unbind(struct doca_flow_cfg *cfg, doca_flow_shared_resource_unbind_cb cb);

cfg – DOCA Flow configuration structure

cb – Callback for unbinding of a shared resource

This function sets the callback for unbinding of a shared resource.

doca_error_t doca_flow_cfg_set_rss_key(struct doca_flow_cfg *cfg, const uint8_t *rss_key, uint32_t rss_key_len);

cfg – DOCA Flow configuration structure

rss_key – RSS hash key

rss_key_len – RSS hash key length (in bytes)

This function sets the RSS key used by default RSS rules.

If not set, the underlying driver's default RSS key is used.

doca_error_t doca_flow_cfg_set_default_rss(struct doca_flow_cfg *cfg, const struct doca_flow_resource_rss_cfg *rss);

cfg – DOCA Flow configuration structure

rss – RSS global configuration

This function sets the global RSS configuration for all ports. It is overridden by the port's RSS configuration. This configuration is used at port start which, in non-isolated mode, creates default RSS rules to forward incoming packets to traffic receive queues (i.e., RXQ) created by the user.

If users create their own hairpin queues (i.e., doca_flow_port_cfg.dev is not set in "switch" mode), the RSS configuration must also be set to reflect the number of traffic receive queues so that the correct hairpin queue index can be calculated internally. Otherwise, packets would not go to the correct hairpin queue.

doca_flow_entry_process_cb

typedef void (*doca_flow_entry_process_cb)(struct doca_flow_pipe_entry *entry,

uint16_t pipe_queue, enum doca_flow_entry_status status,

enum doca_flow_entry_op op, void *user_ctx);

entry [in] – pointer to pipe entry

pipe_queue [in] – queue identifier

status [in] – entry processing status (see doca_flow_entry_status)

op [in] – entry's operation, defined in the following enum:

DOCA_FLOW_ENTRY_OP_ADD – Add entry operation

DOCA_FLOW_ENTRY_OP_DEL – Delete entry operation

DOCA_FLOW_ENTRY_OP_UPD – Update entry operation

DOCA_FLOW_ENTRY_OP_AGED – Aged entry operation

user_ctx [in] – user context as provided to doca_flow_pipe_add_entry

Note: The user context is set once to the value provided to doca_flow_pipe_add_entry (or to any doca_flow_pipe_*_add_entry variant) as the usr_ctx parameter, and is then reused in subsequent callback invocations for all operations. This user context must remain available for all potential invocations of the callback that depend on it, because it is stored as part of the entry and provided each time.

shared_resource_unbind_cb

typedef void (*shared_resource_unbind_cb)(enum engine_shared_resource_type type,

uint32_t shared_resource_id,

struct engine_bindable *bindable);

type [in] – engine shared resource type. Supported types are: meter, counter, rss, crypto, mirror.

shared_resource_id [in] – unique ID of the shared resource

bindable [in] – pointer to bindable object (e.g., port, pipe)

doca_flow_resource_rss_cfg

struct doca_flow_resource_rss_cfg {

uint32_t outer_flags;

uint32_t inner_flags;

uint16_t *queues_array;

int nr_queues;

enum doca_flow_rss_hash_function rss_hash_func;

};

outer_flags – RSS offload type on the outermost packet

inner_flags – RSS offload type on the innermost packet

queues_array – pointer to receive queue IDs (e.g., [0, 1, 2, 3])

nr_queues – number of receive queue IDs (e.g., 4)

rss_hash_func – RSS hash type, DOCA_FLOW_RSS_HASH_FUNCTION_TOEPLITZ or DOCA_FLOW_RSS_HASH_FUNCTION_SYMMETRIC_TOEPLITZ

Note: outer_flags, inner_flags, and rss_hash_func are ignored by default RSS rules, which use the driver's default values.

doca_flow_port_cfg

This struct is required input for the DOCA Flow port initialization function, doca_flow_port_start. The user must create and set this structure's fields using the following API:

doca_error_t doca_flow_port_cfg_create(struct doca_flow_port_cfg **cfg);

cfg – DOCA Flow port configuration structure

This function creates and allocates the DOCA Flow port configuration structure.

doca_error_t doca_flow_port_cfg_destroy(struct doca_flow_port_cfg *cfg);

cfg – DOCA Flow port configuration structure

This function destroys and frees the DOCA Flow port configuration structure.

doca_error_t doca_flow_port_cfg_set_devargs(struct doca_flow_port_cfg *cfg, const char *devargs);

cfg – DOCA Flow port configuration structure

devargs – A string containing port-specific configurations

This function sets specific port configurations.

For usage information on the type and devargs fields, refer to section "Start Point".

doca_error_t doca_flow_port_cfg_set_priv_data_size(struct doca_flow_port_cfg *cfg, uint16_t priv_data_size);

cfg – DOCA Flow port configuration structure

priv_data_size – User private data size

This function sets the user's private data size. Per port, users may define private data where application-specific info can be stored.

doca_error_t doca_flow_port_cfg_set_dev(struct doca_flow_port_cfg *cfg, void *dev);

cfg – DOCA Flow port configuration structure

dev – Device

This function sets the port's doca_dev.

Used to create internal hairpin resource for switch mode and crypto resources

Mandatory for switch mode and to use PSP or IPsec SA shared resources

doca_error_t doca_flow_port_cfg_set_rss_cfg(struct doca_flow_port_cfg *cfg, const struct doca_flow_resource_rss_cfg *rss_cfg);

cfg – DOCA Flow port configuration structure

rss_cfg – RSS configuration

This function sets the port's RSS configuration (optional) used by default RSS rules of this port.

This configuration overrides global RSS configuration in doca_flow_cfg.

This configuration is used to create default RSS rules of this port to forward packets to traffic receive queues (i.e., RXQ) in non-isolated mode.

doca_flow_pipe_cfg

This is a pipe configuration that contains the user-defined template for the packet process. The user must create and set this structure's fields using the following API:

doca_error_t doca_flow_pipe_cfg_create(struct doca_flow_pipe_cfg **cfg, struct doca_flow_port *port);

cfg – DOCA Flow pipe configuration structure

port – DOCA Flow port

This function creates and allocates the DOCA Flow pipe configuration structure. It also sets the port of the pipeline.

The port cannot be changed afterwards (i.e., to set another port, the user must first destroy the current pipe configuration).

doca_error_t doca_flow_pipe_cfg_destroy(struct doca_flow_pipe_cfg *cfg);

cfg – DOCA Flow pipe configuration structure

This function destroys and frees the DOCA Flow pipe configuration structure.

doca_error_t doca_flow_pipe_cfg_set_match(struct doca_flow_pipe_cfg *cfg, const struct doca_flow_match *match, const struct doca_flow_match *match_mask);

cfg – DOCA Flow pipe configuration structure

match – DOCA Flow match

match_mask – DOCA Flow match mask

This function sets the matcher and the match mask for the pipeline.

Note that:

match is for all pipe types except for DOCA_FLOW_PIPE_HASH where it is ignored

match_mask is only for pipes of types DOCA_FLOW_PIPE_BASIC, DOCA_FLOW_PIPE_CONTROL, DOCA_FLOW_PIPE_HASH, and DOCA_FLOW_PIPE_ORDERED_LIST

If one of the fields is not relevant, the user should pass NULL instead.

doca_error_t doca_flow_pipe_cfg_set_actions(struct doca_flow_pipe_cfg *cfg, struct doca_flow_actions *const *actions, struct doca_flow_actions *const *actions_masks, struct doca_flow_action_descs *const *action_descs, size_t nr_actions);

cfg – DOCA Flow pipe configuration structure

actions – DOCA Flow actions array

actions_masks – DOCA Flow actions mask array

action_descs – DOCA Flow actions descriptor array

nr_actions – Number of actions

This function sets the actions, actions mask, and actions descriptor for the pipeline.

Note that:

actions is only for pipes of type DOCA_FLOW_PIPE_BASIC and DOCA_FLOW_PIPE_HASH

actions_masks is only for pipes of type DOCA_FLOW_PIPE_BASIC and DOCA_FLOW_PIPE_HASH

action_descs is only for pipes of type DOCA_FLOW_PIPE_BASIC, DOCA_FLOW_PIPE_CONTROL, and DOCA_FLOW_PIPE_HASH

If one of the fields is not relevant, the user should pass NULL instead.

doca_error_t doca_flow_pipe_cfg_set_monitor(struct doca_flow_pipe_cfg *cfg, const struct doca_flow_monitor *monitor);

cfg – DOCA Flow pipe configuration structure

monitor – DOCA Flow monitor

This function sets the monitor for the pipeline.

Note that the monitor is only for pipes of type DOCA_FLOW_PIPE_BASIC, DOCA_FLOW_PIPE_CONTROL, and DOCA_FLOW_PIPE_HASH.

doca_error_t doca_flow_pipe_cfg_set_ordered_lists(struct doca_flow_pipe_cfg *cfg, struct doca_flow_ordered_list *const *ordered_lists, size_t nr_ordered_lists);

cfg – DOCA Flow pipe configuration structure

ordered_lists – DOCA Flow ordered lists array

nr_ordered_lists – Number of ordered lists

This function sets an array of ordered list types for the pipeline.

struct doca_flow_ordered_list {

uint32_t idx;

uint32_t size;

const void **elements;

enum doca_flow_ordered_list_element_type *types;

};

idx – List index among the lists of the pipe

At pipe creation, it must match the list position in the array of lists

At entry insertion, it determines which list to use

size – Number of elements in the list

elements – An array of DOCA Flow structure pointers, depending on the types

types – Types of DOCA Flow structures each of the elements is pointing to. This field includes the following ordered list element types:

DOCA_FLOW_ORDERED_LIST_ELEMENT_ACTIONS – Ordered list element is struct doca_flow_actions. The next element is struct doca_flow_action_descs which is associated with the current element.

DOCA_FLOW_ORDERED_LIST_ELEMENT_ACTION_DESCS – Ordered list element is struct doca_flow_action_descs. If the previous element type is ACTIONS, the current element is associated with it. Otherwise, the current element is ordered with regards to the previous one.

DOCA_FLOW_ORDERED_LIST_ELEMENT_MONITOR – Ordered list element is struct doca_flow_monitor

Note that the ordered lists are only for pipes of type DOCA_FLOW_PIPE_ORDERED_LIST.

doca_error_t doca_flow_pipe_cfg_set_name(struct doca_flow_pipe_cfg *cfg, const char *name);

cfg – DOCA Flow pipe configuration structure

name – Pipe name

This function sets the name of the pipeline.

doca_error_t doca_flow_pipe_cfg_set_type(struct doca_flow_pipe_cfg *cfg, enum doca_flow_pipe_type type);

cfg – DOCA Flow pipe configuration structure

type – DOCA Flow pipe type

This function sets the type of the pipeline. The following pipe types are supported:

DOCA_FLOW_PIPE_BASIC – Flow pipe

DOCA_FLOW_PIPE_CONTROL – Control pipe

DOCA_FLOW_PIPE_LPM – LPM pipe

DOCA_FLOW_PIPE_ACL – ACL pipe

DOCA_FLOW_PIPE_ORDERED_LIST – Ordered list pipe

DOCA_FLOW_PIPE_HASH – Hash pipe

If not set, the pipeline's type is DOCA_FLOW_PIPE_BASIC by default.

doca_error_t doca_flow_pipe_cfg_set_domain(struct doca_flow_pipe_cfg *cfg, enum doca_flow_pipe_domain domain);

cfg – DOCA Flow pipe configuration structure

domain – DOCA Flow pipe steering domain

This function sets the steering domain of the pipeline. The following domains are supported:

DOCA_FLOW_PIPE_DOMAIN_DEFAULT – Default pipe domain for actions on ingress traffic

DOCA_FLOW_PIPE_DOMAIN_SECURE_INGRESS – Pipe domain for secure actions on ingress traffic

DOCA_FLOW_PIPE_DOMAIN_EGRESS – Pipe domain for actions on egress traffic

DOCA_FLOW_PIPE_DOMAIN_SECURE_EGRESS – Pipe domain for secure actions on egress traffic

If not set, then by default, the pipeline's steering domain is DOCA_FLOW_PIPE_DOMAIN_DEFAULT.

doca_error_t doca_flow_pipe_cfg_set_is_root(struct doca_flow_pipe_cfg *cfg, bool is_root);

cfg – DOCA Flow pipe configuration structure

is_root – If the pipe is root

This function determines whether the pipeline is root. If true, then the pipe is a root pipe executed on packet arrival. If not set, then by default, the pipeline is not root.

Only one root pipe, of any type, is allowed per port.

doca_error_t doca_flow_pipe_cfg_set_nr_entries(struct doca_flow_pipe_cfg *cfg, uint32_t nr_entries);

cfg – DOCA Flow pipe configuration structure

nr_entries – Maximum number of flow rules

This function sets the pipeline's maximum number of flow rules. If not set, then by default, the maximum number of flow rules is 8k.

doca_error_t doca_flow_pipe_cfg_set_is_resizable(struct doca_flow_pipe_cfg *cfg, bool is_resizable);

cfg – DOCA Flow pipe configuration structure

is_resizable – If the pipe is resizable

This function determines whether the pipeline supports the resize operation. If not set, then by default, the pipeline does not support the resize operation.

doca_error_t doca_flow_pipe_cfg_set_enable_strict_matching(struct doca_flow_pipe_cfg *cfg, bool enable_strict_matching);

cfg – DOCA Flow pipe configuration structure

enable_strict_matching – If the pipe supports strict matching

This function determines whether the pipeline supports strict matching.

If true, relaxed matching (enabled by default) is disabled for this pipe

If not set, then by default, the pipeline doesn't support strict matching

doca_error_t doca_flow_pipe_cfg_set_dir_info(struct doca_flow_pipe_cfg *cfg, enum doca_flow_direction_info dir_info);

cfg – DOCA Flow pipe configuration structure

dir_info – DOCA Flow direction info

This function sets the pipeline's direction information:

DOCA_FLOW_DIRECTION_BIDIRECTIONAL – Default for traffic in both directions

DOCA_FLOW_DIRECTION_NETWORK_TO_HOST – Network to host traffic

DOCA_FLOW_DIRECTION_HOST_TO_NETWORK – Host to network traffic

dir_info is supported in Switch Mode only.

dir_info is optional. It can provide potential optimization at the driver layer. Configuring the direction information properly optimizes the traffic steering.

doca_error_t doca_flow_pipe_cfg_set_miss_counter(struct doca_flow_pipe_cfg *cfg, bool miss_counter);

cfg – DOCA Flow pipe configuration structure

miss_counter – Whether to enable the miss counter

This function determines whether to add a miss counter to the pipe. If true, then the pipe would have a miss counter and the user can query it using doca_flow_query_pipe_miss. If not set, then by default, the miss counter is disabled.

Miss counter may impact performance and should be avoided if not required by the application.

doca_error_t doca_flow_pipe_cfg_set_congestion_level_threshold(struct doca_flow_pipe_cfg *cfg, uint8_t congestion_level_threshold);

cfg – DOCA Flow pipe configuration structure

congestion_level_threshold – Congestion level threshold

This function sets the congestion threshold for the pipe in percentage (0,100]. If not set, then by default, the congestion threshold is zero.

doca_error_t doca_flow_pipe_cfg_set_user_ctx(struct doca_flow_pipe_cfg *cfg, void *user_ctx);

cfg – DOCA Flow pipe configuration structure

user_ctx – User context

This function sets the pipeline's user context.

doca_flow_parser_geneve_opt_cfg

This is a parser configuration that contains the user-defined template for a single GENEVE TLV option.

struct doca_flow_parser_geneve_opt_cfg {

enum doca_flow_parser_geneve_opt_mode match_on_class_mode;

doca_be16_t option_class;

uint8_t option_type;

uint8_t option_len;

doca_be32_t data_mask[DOCA_FLOW_GENEVE_DATA_OPTION_LEN_MAX];

};

match_on_class_mode – role of option_class in this option (enum doca_flow_parser_geneve_opt_mode). This field includes the following class modes:

DOCA_FLOW_PARSER_GENEVE_OPT_MODE_IGNORE – class is ignored, its value is neither part of the option identifier nor changeable per pipe/entry

DOCA_FLOW_PARSER_GENEVE_OPT_MODE_FIXED – class is fixed (the class defines the option along with the type)

DOCA_FLOW_PARSER_GENEVE_OPT_MODE_MATCHABLE – class is the field of this option; different values can be matched for the same option (defined by type only)

option_class – option class ID (must be set when class mode is fixed)

option_type – option type

option_len – length of the option data (in 4-byte granularity)

data_mask – mask for indicating which dwords (DWs) should be configured on this option

Note: This is not a bit mask. Each DW must contain either 0xffffffff (configure) or 0x0 (ignore). Other values are not valid.

doca_flow_meta

There is a maximum DOCA_FLOW_META_MAX-byte scratch area which exists throughout the pipeline.

The user can set a value to metadata, copy from a packet field, then match in later pipes. Mask is supported in both match and modification actions.

The user can modify the metadata in different ways based on its actions' masks or descriptors:

ADD – set metadata scratch value from a pipe action or an action of a specific entry. Width is specified by the descriptor.

COPY – copy metadata scratch value from a packet field (including the metadata scratch itself). Width is specified by the descriptor.

Note: In a real application, it is encouraged to create a union of doca_flow_meta defining the application's scratch fields to use as metadata.

struct doca_flow_meta {

union {

uint32_t pkt_meta; /**< Shared with application via packet. */

struct {

uint32_t lag_port :2; /**< Bits of LAG member port. */

uint32_t type :2; /**< 0: traffic 1: SYN 2: RST 3: FIN. */

uint32_t zone :28; /**< Zone ID for CT processing. */

} ct;

};

uint32_t u32[DOCA_FLOW_META_MAX / 4 - 1]; /**< Programmable user data. */

uint32_t mark; /**< Mark id. */

};

pkt_meta – Metadata can be received along with the packet

u32[] – Programmable user data (the scratch area)

u32[0] contains the IPsec syndrome in the lower 8 bits if the packet passes the pipe with IPsec crypto action configured in full offload mode:

0 – signifies a successful IPsec operation on the packet

1 – bad replay. The ingress packet sequence number is beyond the anti-replay window boundaries.

u32[1] contains the IPsec packet sequence number (lower 32 bits) if the packet passes the pipe with IPsec crypto action configured in full offload mode

mark – Optional parameter that may be communicated to the software. If it is set and the packet arrives at the software, the value can be examined using the software API.

When DPDK is used, MARK is placed on the struct rte_mbuf (see "Action: MARK" section in the official DPDK documentation)

When the kernel is used, MARK is placed on the struct sk_buff's mark field

Some DOCA pipe types (or actions) use several bytes in the scratch area for internal usage. So, if the user has set these bytes in PIPE-1 and read them in PIPE-2, and between PIPE-1 and PIPE-2 there is PIPE-A which also uses these bytes for internal purpose, then these bytes are overwritten by the PIPE-A. This must be considered when designing the pipe tree.

The bytes used in the scratch area are presented by pipe type in the following table:

| Pipe Type/Action | Bytes Used in Scratch |
|---|---|
| ordered_list | [0, 1, 2, 3] |
| LPM | [0, 1, 2, 3] |
| mirror | [0, 1, 2, 3] |
| ACL | [0, 1, 2, 3] |
| Fwd from ingress to egress | [0, 1, 2, 3] |

doca_flow_parser_meta

This structure contains all metadata information which hardware extracts from the packet.

These fields contain read-only hardware data which can be used to match on.

struct doca_flow_parser_meta {

uint32_t port_meta;

uint16_t random;

uint8_t ipsec_syndrome;

uint8_t psp_syndrome;

enum doca_flow_meter_color meter_color;

enum doca_flow_l2_meta outer_l2_type;

enum doca_flow_l3_meta outer_l3_type;

enum doca_flow_l4_meta outer_l4_type;

enum doca_flow_l2_meta inner_l2_type;

enum doca_flow_l3_meta inner_l3_type;

enum doca_flow_l4_meta inner_l4_type;

uint8_t outer_ip_fragmented;

uint8_t inner_ip_fragmented;

uint8_t outer_l3_ok;

uint8_t outer_ip4_checksum_ok;

uint8_t outer_l4_ok;

uint8_t outer_l4_checksum_ok;

uint8_t inner_l3_ok;

uint8_t inner_ip4_checksum_ok;

uint8_t inner_l4_ok;

uint8_t inner_l4_checksum_ok;

};

port_meta – Programmable source vport.

random – Random value to match regardless of packet data/header content. The application should not assume that this value is kept during the lifetime of the packet; it holds a different random value for each match.

Note: When random matching is used for sampling, the number of entries in the pipe must be 1 (i.e., nr_entries set to 1 using doca_flow_pipe_cfg_set_nr_entries).

ipsec_syndrome – IPsec decrypt/authentication syndrome. Valid syndromes:

DOCA_FLOW_CRYPTO_SYNDROME_OK – IPsec decryption and authentication success

DOCA_FLOW_CRYPTO_ICV_FAIL – IPsec authentication failure

DOCA_FLOW_CRYPTO_BAD_TRAILER – IPsec trailer length exceeds the ESP payload

psp_syndrome – PSP decrypt/authentication syndrome. Valid syndromes:

DOCA_FLOW_CRYPTO_SYNDROME_OK – PSP decryption and authentication success

DOCA_FLOW_CRYPTO_ICV_FAIL – PSP authentication failure

DOCA_FLOW_CRYPTO_BAD_TRAILER – PSP trailer overlaps with headers

meter_color – Meter colors (enum doca_flow_meter_color). Valid colors:

DOCA_FLOW_METER_COLOR_GREEN – Meter marking packet color as green

DOCA_FLOW_METER_COLOR_YELLOW – Meter marking packet color as yellow

DOCA_FLOW_METER_COLOR_RED – Meter marking packet color as red.

outer_l2_type – Outer L2 packet type (enum doca_flow_l2_meta). Valid L2 types:

DOCA_FLOW_L2_META_NO_VLAN – No VLAN present

DOCA_FLOW_L2_META_SINGLE_VLAN – Single VLAN present

DOCA_FLOW_L2_META_MULTI_VLAN – Multiple VLANs present

outer_l3_type – Outer L3 packet type (enum doca_flow_l3_meta). Valid L3 types:

DOCA_FLOW_L3_META_NONE – L3 type is none of the below

DOCA_FLOW_L3_META_IPV4 – L3 type is IPv4

DOCA_FLOW_L3_META_IPV6 – L3 type is IPv6

outer_l4_type – Outer L4 packet type (enum doca_flow_l4_meta). Valid L4 types:

DOCA_FLOW_L4_META_NONE – L4 type is none of the below

DOCA_FLOW_L4_META_TCP – L4 type is TCP

DOCA_FLOW_L4_META_UDP – L4 type is UDP

DOCA_FLOW_L4_META_ICMP – L4 type is ICMP or ICMPv6

DOCA_FLOW_L4_META_ESP – L4 type is ESP

inner_l2_type – Inner L2 packet type (enum doca_flow_l2_meta). Valid L2 types:

DOCA_FLOW_L2_META_NO_VLAN – No VLAN present

DOCA_FLOW_L2_META_SINGLE_VLAN – Single VLAN present

DOCA_FLOW_L2_META_MULTI_VLAN – Multiple VLANs present

inner_l3_type – Inner L3 packet type (enum doca_flow_l3_meta). Valid L3 types:

DOCA_FLOW_L3_META_NONE – L3 type is none of the below

DOCA_FLOW_L3_META_IPV4 – L3 type is IPv4

DOCA_FLOW_L3_META_IPV6 – L3 type is IPv6

inner_l4_type – Inner L4 packet type (enum doca_flow_l4_meta). Valid L4 types:

DOCA_FLOW_L4_META_NONE – L4 type is none of the below

DOCA_FLOW_L4_META_TCP – L4 type is TCP

DOCA_FLOW_L4_META_UDP – L4 type is UDP

DOCA_FLOW_L4_META_ICMP – L4 type is ICMP or ICMPv6

DOCA_FLOW_L4_META_ESP – L4 type is ESP

outer_ip_fragmented – Whether outer IP packet is fragmented

inner_ip_fragmented – Whether inner IP packet is fragmented

outer_l3_ok – Whether the outer network layer is valid, regardless of the IPv4 checksum

outer_ip4_checksum_ok – Whether the outer IPv4 checksum is valid. Packets without an outer IPv4 header are treated as having an invalid checksum.

outer_l4_ok – Whether the outer transport layer is valid, including the L4 checksum

outer_l4_checksum_ok – Whether the outer transport layer checksum is valid. Packets without an outer TCP/UDP header are treated as having an invalid checksum.

inner_l3_ok – Whether the inner network layer is valid, regardless of the IPv4 checksum

inner_ip4_checksum_ok – Whether the inner IPv4 checksum is valid. Packets without an inner IPv4 header are treated as having an invalid checksum.

inner_l4_ok – Whether the inner transport layer is valid, including the L4 checksum

inner_l4_checksum_ok – Whether the inner transport layer checksum is valid. Packets without an inner TCP/UDP header are treated as having an invalid checksum.

Matching on either outer_l4_ok=1 or inner_l4_ok=1 means that all L4 checks (length, checksum, etc.) are ok.

Matching on either outer_l4_ok=0 or inner_l4_ok=0 means that all L4 checks are not ok.

These fields cannot be used to match cases in which some of the checks pass and others fail.

doca_flow_header_format

This structure defines each layer of the packet header format.

```c
struct doca_flow_header_format {
    struct doca_flow_header_eth eth;
    uint16_t l2_valid_headers;
    struct doca_flow_header_eth_vlan eth_vlan[DOCA_FLOW_VLAN_MAX];
    enum doca_flow_l3_type l3_type;
    union {
        struct doca_flow_header_ip4 ip4;
        struct doca_flow_header_ip6 ip6;
    };
    enum doca_flow_l4_type_ext l4_type_ext;
    union {
        struct doca_flow_header_icmp icmp;
        struct doca_flow_header_udp udp;
        struct doca_flow_header_tcp tcp;
        struct doca_flow_header_l4_port transport;
    };
};
```

eth – Ethernet header format, including source and destination MAC address and the Ethernet layer type. If a VLAN header is present then eth.type represents the type following the last VLAN tag.

l2_valid_headers – Bitwise OR of the following flags: DOCA_FLOW_L2_VALID_HEADER_VLAN_0, DOCA_FLOW_L2_VALID_HEADER_VLAN_1

eth_vlan – VLAN tag control information for each VLAN header.

l3_type – Layer 3 type; indicates whether the next layer is IPv4 or IPv6.

ip4 – IPv4 header format.

ip6 – IPv6 header format.

l4_type_ext – The type of the layer following layer 3.

icmp – ICMP header format.

udp – UDP header format.

tcp – TCP header format.

transport – Header format for source and destination ports; used to define a match or actions with relaxed matching that does not depend on the L4 protocol (TCP or UDP). It is used only if l4_type_ext is DOCA_FLOW_L4_TYPE_EXT_TRANSPORT.

doca_flow_header_ip4

This structure defines IPv4 fields. It is relevant only when l3_type is DOCA_FLOW_L3_TYPE_IP4.

```c
struct doca_flow_header_ip4 {
    doca_be32_t src_ip;
    doca_be32_t dst_ip;
    uint8_t version_ihl;
    uint8_t dscp_ecn;
    doca_be16_t total_len;
    doca_be16_t identification;
    doca_be16_t flags_fragment_offset;
    uint8_t next_proto;
    uint8_t ttl;
};
```

src_ip – source IP address.

dst_ip – destination IP address.

version_ihl – IP version (4 bits) and Internet Header Length (4 bits). The IHL part is supported as a destination in the DOCA_FLOW_ACTION_ADD operation.

dscp_ecn – type of service, containing DSCP (6 bits) and ECN (2 bits).

total_len – total length field. It is supported as a destination in the DOCA_FLOW_ACTION_ADD operation.

identification – IP fragment identification.

flags_fragment_offset – IP fragment flags (3 bits) and IP fragment offset (13 bits).

next_proto – IP next protocol.

ttl – Time-to-live. It is supported as a destination in the DOCA_FLOW_ACTION_ADD operation.
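The packed IPv4 fields above (version_ihl, flags_fragment_offset) are easy to get wrong when building match values or masks. The following is a minimal sketch, not part of the DOCA API: the helper names are hypothetical, and it assumes only the standard htons/ntohs functions from <arpa/inet.h> and that a doca_be16_t field holds a value in network byte order.

```c
#include <arpa/inet.h> /* htons, ntohs */
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: IP version in the high nibble, IHL (in 32-bit words) in the low nibble. */
static inline uint8_t ip4_version_ihl(uint8_t version, uint8_t ihl_words)
{
    assert(version <= 0xF && ihl_words <= 0xF);
    return (uint8_t)((version << 4) | ihl_words);
}

/* Hypothetical helper: 13-bit fragment offset (in 8-byte units) from a
 * flags_fragment_offset value stored in network byte order. */
static inline uint16_t ip4_frag_offset(uint16_t flags_fragment_offset_be)
{
    return (uint16_t)(ntohs(flags_fragment_offset_be) & 0x1FFF);
}

/* Hypothetical helper: More Fragments (MF) flag, bit 13 of the host-order value.
 * The three flag bits are, from the top: reserved, DF (0x4000), MF (0x2000). */
static inline bool ip4_more_fragments(uint16_t flags_fragment_offset_be)
{
    return (ntohs(flags_fragment_offset_be) & 0x2000) != 0;
}
```

A packet is a fragment when MF is set or the offset is non-zero; for plain fragment detection, the outer_ip_fragmented parser field described earlier may be simpler than masking this field by hand.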

doca_flow_header_ip6

This structure defines IPv6 fields. It is relevant only when l3_type is DOCA_FLOW_L3_TYPE_IP6.

```c
struct doca_flow_header_ip6 {
    doca_be32_t src_ip[4];
    doca_be32_t dst_ip[4];
    uint8_t traffic_class;
    doca_be32_t flow_label;
    doca_be16_t payload_len;
    uint8_t next_proto;
    uint8_t hop_limit;
};
```

src_ip – source IP address.

dst_ip – destination IP address.

traffic_class – traffic class, containing DSCP (6 bits) and ECN (2 bits).

flow_label – Only the 20 least-significant bits are relevant for a specific value/mask, but marking the field as changeable requires all 32 bits to be set (0xffffffff).

payload_len – Payload length. It is supported as a destination in the DOCA_FLOW_ACTION_ADD operation.

next_proto – IP next protocol.

hop_limit – Hop limit. It is supported as a destination in the DOCA_FLOW_ACTION_ADD operation.
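Because the flow label lives in the low 20 bits of a 32-bit big-endian field, a specific match value and mask must leave the upper 12 bits clear, while the "changeable" marker uses all 32 bits. The sketch below also packs the shared DSCP/ECN byte used by dscp_ecn and traffic_class. It assumes the flow_label value is the 20-bit label converted with htonl; all names are hypothetical, not DOCA definitions.

```c
#include <arpa/inet.h> /* htonl */
#include <assert.h>
#include <stdint.h>

#define IP6_FLOW_LABEL_BITS 0x000FFFFFu /* hypothetical: low 20 bits carry the label */

/* Hypothetical helper: DSCP (6 bits) above ECN (2 bits), as in dscp_ecn / traffic_class. */
static inline uint8_t ip_dscp_ecn(uint8_t dscp, uint8_t ecn)
{
    assert(dscp <= 0x3F && ecn <= 0x3);
    return (uint8_t)((dscp << 2) | ecn);
}

/* Hypothetical helper: network-order value for matching one specific flow label. */
static inline uint32_t ip6_flow_label_value(uint32_t label)
{
    assert((label & ~IP6_FLOW_LABEL_BITS) == 0); /* label must fit in 20 bits */
    return htonl(label);
}

/* Hypothetical helper: network-order mask selecting exactly the 20 label bits.
 * Marking the field as changeable instead uses all 32 bits (0xffffffff). */
static inline uint32_t ip6_flow_label_mask(void)
{
    return htonl(IP6_FLOW_LABEL_BITS);
}
```

For example, the Expedited Forwarding DSCP value 46 with ECN 0 packs to 0xB8.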

doca_flow_tun

This structure defines tunnel headers.

```c
struct doca_flow_tun {
    enum doca_flow_tun_type type;
    union {
        struct {
            enum doca_flow_tun_ext_vxlan_type vxlan_type;
            union {
                uint8_t vxlan_next_protocol;
                doca_be16_t vxlan_group_policy_id;
            };
            doca_be32_t vxlan_tun_id;
        };
        struct {
            enum doca_flow_tun_ext_gre_type gre_type;
            doca_be16_t protocol;
            union {
                struct {
                    bool key_present;
                    doca_be32_t gre_key;
                };
                struct {
                    doca_be32_t nvgre_vs_id;
                    uint8_t nvgre_flow_id;
                };
            };
        };
        struct {
            doca_be32_t gtp_teid;
        };
        struct {
            doca_be32_t esp_spi;
            doca_be32_t esp_sn;
        };
        struct {
            struct doca_flow_header_mpls mpls[DOCA_FLOW_MPLS_LABELS_MAX];
        };
        struct {
            struct doca_flow_header_geneve geneve;
            union doca_flow_geneve_option geneve_options[DOCA_FLOW_GENEVE_OPT_LEN_MAX];
        };
        struct {
            struct doca_flow_header_psp psp;
        };
    };
};
```

type – type of tunnel (enum doca_flow_tun_type). Valid tunnel types:

DOCA_FLOW_TUN_VXLAN – VXLAN tunnel

DOCA_FLOW_TUN_GRE – GRE tunnel, with an optional KEY option

DOCA_FLOW_TUN_GTP – GTP tunnel

DOCA_FLOW_TUN_ESP – ESP tunnel

DOCA_FLOW_TUN_MPLS_O_UDP – MPLS tunnel (supports up to 5 headers)

DOCA_FLOW_TUN_GENEVE – GENEVE tunnel; the header format includes option length, VNI, next protocol, and options.

DOCA_FLOW_TUN_PSP – PSP tunnel

vxlan_type – type of VXLAN extension (enum doca_flow_tun_ext_vxlan_type). Valid extension types:

DOCA_FLOW_TUN_EXT_VXLAN_STANDARD – Standard VXLAN tunnel (default)

DOCA_FLOW_TUN_EXT_VXLAN_GPE – VXLAN-GPE

DOCA_FLOW_TUN_EXT_VXLAN_GBP – VXLAN-GBP

vxlan_next_protocol – VXLAN-GPE next protocol

vxlan_group_policy_id – VXLAN-GBP group policy_id

vxlan_tun_id – VNI (24) + reserved (8)

gre_type – type of GRE extension (enum doca_flow_tun_ext_gre_type). Valid extension types:

DOCA_FLOW_TUN_EXT_GRE_STANDARD – Standard GRE tunnel (default)

DOCA_FLOW_TUN_EXT_GRE_NVGRE – NVGRE

protocol – GRE next protocol

key_present – GRE option KEY is present

gre_key – GRE key option; match on this field only when key_present is true

nvgre_vs_id – NVGRE virtual subnet ID (24) + reserved (8)

nvgre_flow_id – NVGRE flow ID

gtp_teid – GTP TEID

esp_spi – IPsec Security Parameter Index (SPI)

esp_sn – IPsec sequence number

mpls – Array of MPLS headers

geneve – GENEVE header format

geneve_options – Array of DWs describing GENEVE TLV options

psp – PSP header format
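Several tunnel fields (vxlan_tun_id, nvgre_vs_id, and the GENEVE vni field) pack a 24-bit identifier above an 8-bit reserved byte in a big-endian word. A minimal sketch of the byte-order handling, assuming doca_be32_t is a plain network-order 32-bit value; the helper names are hypothetical:

```c
#include <arpa/inet.h> /* htonl, ntohl */
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: build a "VNI (24) + reserved (8)" word in network byte order. */
static inline uint32_t tun_vni_be32(uint32_t vni)
{
    assert(vni <= 0xFFFFFFu); /* the identifier is 24 bits wide */
    return htonl(vni << 8);   /* identifier in the upper 24 bits, reserved byte zero */
}

/* Hypothetical helper: recover the 24-bit identifier from such a word. */
static inline uint32_t tun_vni_from_be32(uint32_t be)
{
    return ntohl(be) >> 8;
}
```

On the wire, VNI 0x123456 becomes the bytes 12 34 56 00.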

The following table details which tunnel types support which operation on the tunnel header:

| Tunnel Type | Match¹ | L2 encap² | L2 decap² | L3 encap² | L3 decap² | Modify³ | Copy⁴ |
|---|---|---|---|---|---|---|---|
| DOCA_FLOW_TUN_VXLAN | ✔ | ✔ | ✔ | ✘ | ✘ | ✔ | ✔ |
| DOCA_FLOW_TUN_GRE | ✔ | ✔ | ✔ | ✔ | ✔ | ✘ | ✘ |
| DOCA_FLOW_TUN_GTP | ✔ | ✘ | ✘ | ✔ | ✔ | ✔ | ✔ |
| DOCA_FLOW_TUN_ESP | ✔ | ✘ | ✘ | ✘ | ✘ | ✔ | ✔ |
| DOCA_FLOW_TUN_MPLS_O_UDP | ✔ | ✘ | ✘ | ✔ | ✔ | ✘ | ✔ |
| DOCA_FLOW_TUN_GENEVE | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| DOCA_FLOW_TUN_PSP | ✔ | ✘ | ✘ | ✘ | ✘ | ✔ | ✔ |

¹ Support for matching on this tunnel header, configured in the tun field in struct doca_flow_match.

² Decapsulation/encapsulation of the tunnel header is enabled in struct doca_flow_actions using encap_type and decap_type. It is the user's responsibility to determine whether a tunnel is of type L2 or L3. If the settings do not match the incoming packets, unexpected behavior may occur.

³ Support for modifying this tunnel header, configured in the tun field in struct doca_flow_actions.

⁴ Support for copying fields to/from this tunnel header, configured in struct doca_flow_action_descs.

DOCA Flow Tunnel GENEVE

The DOCA_FLOW_TUN_GENEVE type includes the basic header for GENEVE and an array for GENEVE TLV options.

The GENEVE TLV options must be configured in advance, at parser creation (doca_flow_parser_geneve_opt_create).

doca_flow_header_geneve

This structure defines GENEVE protocol header.

```c
struct doca_flow_header_geneve {
    uint8_t ver_opt_len;
    uint8_t o_c;
    doca_be16_t next_proto;
    doca_be32_t vni;
};
```

ver_opt_len – version (2) + options length (6). The length is expressed in 4-byte multiples, excluding the GENEVE header.

o_c – OAM packet (1) + critical options present (1) + reserved (6).

next_proto – GENEVE next protocol. When GENEVE has options, it describes the protocol after the options.

vni – GENEVE VNI (24) + reserved (8).

doca_flow_geneve_option

This object describes a single DW (4 bytes) from the GENEVE option header. It describes either the first DW of the option, including class, type, and length, or any other data DW.

```c
union doca_flow_geneve_option {
    struct {
        doca_be16_t class_id;
        uint8_t type;
        uint8_t length;
    };
    doca_be32_t data;
};
```

class_id – Option class ID

type – Option type

length – Reserved (3) + option data length (5). The length is expressed in 4-byte multiples, excluding the option header.

data – 4 bytes of option data
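Both GENEVE length fields count 4-byte words and exclude their own headers, which makes off-by-one errors easy. A minimal sketch with hypothetical helper names (not DOCA API), assuming the bit layouts described above for ver_opt_len (version in the top 2 bits) and for the option length byte (reserved top 3 bits):

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: ver_opt_len = version (2 bits) + options length (6 bits).
 * The options length counts 4-byte words and excludes the GENEVE base header. */
static inline uint8_t geneve_ver_opt_len(uint8_t version, uint8_t opt_len_dw)
{
    assert(version <= 0x3 && opt_len_dw <= 0x3F);
    return (uint8_t)((version << 6) | opt_len_dw);
}

/* Hypothetical helper: option length byte = reserved (3 bits) + data length (5 bits).
 * The data length counts 4-byte words and excludes the 4-byte option header. */
static inline uint8_t geneve_opt_length(uint8_t data_len_dw)
{
    assert(data_len_dw <= 0x1F);
    return data_len_dw; /* reserved bits left as zero */
}
```

For example, a single option carrying two data DWs has a length byte of 2 but adds three DWs (one header DW plus two data DWs) to the GENEVE options length.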

GENEVE Matching Notes

Option type and length must be provided for each option at pipe creation time in a match structure

When class mode is DOCA_FLOW_PARSER_GENEVE_OPT_MODE_FIXED, option class must also be provided for each option at pipe creation time in a match structure

Option length field cannot be matched

Type field is the option identifier; it must be provided as a specific value upon pipe creation

Option data is treated as changeable when all of it is filled with 0xffffffff, including DWs that were not configured at parser creation time

In the match_mask structure, the DWs which have not been configured at parser creation time must be zero

GENEVE Modification Notes

When GENEVE option modification is requested, the actions_mask structure must be provided. The option identifiers are provided in the mask structure while the values are provided in actions structure.

The option type and length must be provided for each option at pipe creation time in an action mask structure.

When the class mode is DOCA_FLOW_PARSER_GENEVE_OPT_MODE_FIXED, the option class must also be provided for each option at pipe creation time in an action mask structure.

Since the class_id and type mask fields are used as identifiers, their modification is limited:

A specific value of 0 is not supported on pipe creation; value 0 is interpreted as ignored. Modifying them to 0 is enabled per entry when they are marked as changeable during pipe creation.

Modifying with a partial mask is not supported.

The option length field cannot be modified.

The option data is treated as changeable when all of it is filled with 0xffffffff, including DWs that were not configured at parser creation time.

In the actions_mask structure, the DWs which have not been configured at parser creation time must be zero.

Only data DWs configured during parser creation can be modified.

Modification of the option type and class_id is supported only for options configured with class mode DOCA_FLOW_PARSER_GENEVE_OPT_MODE_MATCHABLE.

The order of options in the action structure does not necessarily reflect their order in the packet or match structure.

GENEVE Encapsulation Notes

The encapsulation size is constant per doca_flow_actions structure. The size is determined by the tun.geneve.ver_opt_len field, so it must be specified at pipe creation time.

The total encapsulation size is limited to 128 bytes. The tun.geneve.ver_opt_len field must respect this limit, depending on the requested outer headers:

| Header Included in Outer | Maximal tun.geneve.ver_opt_len Value |
|---|---|
| ETH + VLAN + IPV4 + UDP + GENEVE | 18 |
| ETH + VLAN + IPV6 + UDP + GENEVE | 13 |
| ETH + IPV4 + UDP + GENEVE | 19 |
| ETH + IPV6 + UDP + GENEVE | 14 |
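The maximal values in this table follow from standard header sizes: subtract the outer headers from the 128-byte limit and round down to whole 4-byte words. A sketch that reproduces the table, assuming a version of 0 (so ver_opt_len equals the options length), a 14-byte Ethernet header, one 4-byte VLAN tag, 20-byte IPv4 without options, 40-byte IPv6 without extension headers, 8-byte UDP, and the 8-byte GENEVE base header. The helper name is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Standard header sizes in bytes (no IPv4 options, no IPv6 extension headers). */
enum {
    ETH_LEN = 14,
    VLAN_LEN = 4,
    IPV4_LEN = 20,
    IPV6_LEN = 40,
    UDP_LEN = 8,
    GENEVE_LEN = 8,      /* base header, without options */
    ENCAP_MAX_LEN = 128, /* total encapsulation size limit */
};

/* Hypothetical helper: largest GENEVE options length, in 4-byte words,
 * that keeps the whole outer header within ENCAP_MAX_LEN bytes. */
static int geneve_max_opt_len(bool has_vlan, bool ipv6)
{
    int hdr = ETH_LEN + (has_vlan ? VLAN_LEN : 0) + (ipv6 ? IPV6_LEN : IPV4_LEN) +
              UDP_LEN + GENEVE_LEN;
    int room = ENCAP_MAX_LEN - hdr;

    assert(room >= 0);
    return room / 4; /* integer division rounds down to whole words */
}
```

For ETH + VLAN + IPV4 + UDP + GENEVE, the headers take 54 bytes, leaving 74 bytes, or 18 whole words.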

Options in encapsulation data do not have to be configured at parser creation time.

When at least one of the encap fields is changeable, GENEVE options are also treated as changeable, regardless of the values provided at pipe creation time. Thus, GENEVE option values must be provided again for each entry.

GENEVE Decapsulation Notes

The options to decapsulate do not have to be configured at parser creation time.

DOCA Flow Tunnel PSP

doca_flow_header_psp

This structure defines PSP protocol header.

```c
struct doca_flow_header_psp {
    uint8_t nexthdr;
    uint8_t hdrextlen;
    uint8_t res_cryptofst;
    uint8_t s_d_ver_v;
    doca_be32_t spi;
    doca_be64_t iv;
    doca_be64_t vc;
};
```

nexthdr – An IP protocol number, identifying the type of the next header.

hdrextlen – Length of this header in 8-octet units, not including the first 8 octets. When hdrextlen is non-zero, a virtualization cookie and/or other header extension fields may be present.

res_cryptofst – Reserved (2) + crypt offset (6). Crypt offset is the offset from the end of the initialization vector to the start of the encrypted portion of the payload, measured in 4-octet units.

s_d_ver_v – Sample at receiver (1 bit) + drop after sampling (1 bit) + version (4 bits) + V (1 bit) + always-set bit (1 bit).

spi – A 32-bit value that is used by a receiver to identify the security association (SA) to which an incoming packet is bound. The high-order bit of this value indicates which master key is to be used for decryption.

iv – A unique value for each packet sent over an SA.

vc – An optional field, present if and only if V is set. It may contain a VNI or other data, as defined by the implementation.

All PSP header fields and bits can be matched/modified. Special care must be taken with the mask used to isolate the desired bits, especially in byte fields that contain multiple bit fields, such as s_d_ver_v.
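To illustrate isolating one bit field within s_d_ver_v, the sketch below builds a value/mask pair that matches only the PSP version. It assumes the sub-fields are packed most significant bit first in the order listed for s_d_ver_v (S, D, version, V, always-set bit); the macro and helper names are hypothetical, not DOCA definitions.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed layout of s_d_ver_v, most significant bit first:
 * S (1) | D (1) | version (4) | V (1) | always 1 (1) */
#define PSP_S_BIT     0x80u
#define PSP_D_BIT     0x40u
#define PSP_VER_SHIFT 2
#define PSP_VER_MASK  (0xFu << PSP_VER_SHIFT) /* 0x3C */
#define PSP_V_BIT     0x02u
#define PSP_ONE_BIT   0x01u

/* Hypothetical helper: value/mask pair that matches only the version bits,
 * ignoring S, D, V and the always-set bit in the same byte. */
static inline void psp_match_version(uint8_t version, uint8_t *value, uint8_t *mask)
{
    assert(version <= 0xF);
    *value = (uint8_t)(version << PSP_VER_SHIFT);
    *mask = (uint8_t)PSP_VER_MASK;
}
```

With this mask, a header byte of 0x87 (S set, version 1, V set, always-set bit) still matches version 1, because the other bits are masked out.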

doca_flow_match

This structure is a match configuration that contains the user-defined fields that should be matched on the pipe.

```c
struct doca_flow_match {
    uint32_t flags;
    struct doca_flow_meta meta;
    struct doca_flow_parser_meta parser_meta;
    struct doca_flow_header_format outer;
    struct doca_flow_tun tun;
    struct doca_flow_header_format inner;
};
```

flags – Match items that do not require a value.

meta – Programmable metadata.

parser_meta – Read-only metadata.

outer – Outer packet header format.

tun – Tunnel info

inner – Inner packet header format.

doca_flow_match_condition

This structure is a "match with compare result" configuration that contains the user-defined field comparison whose result should be matched on the pipe. It can only be used when adding control pipe entries.

```c
struct doca_flow_match_condition {
    enum doca_flow_compare_op operation;
    union {
        struct {
            struct doca_flow_desc_field a;
            struct doca_flow_desc_field b;
            uint32_t width;
        } field_op;
    };
};
```

operation – Match condition operation

a – Compare field descriptor A. Its field_string must be specified.

b – Compare field descriptor B. When its field_string is NULL, B is an immediate value, taken from the field described by A's field_string in the attached match structure.

width – Compare width

The operation field includes the following compare operations:

DOCA_FLOW_COMPARE_EQ – Match when A is equal to B (A = B)

DOCA_FLOW_COMPARE_NE – Match when A is not equal to B (A ≠ B)

DOCA_FLOW_COMPARE_LT – Match when A is less than B (A < B)

DOCA_FLOW_COMPARE_LE – Match when A is less than or equal to B (A ≤ B)

DOCA_FLOW_COMPARE_GT – Match when A is greater than B (A > B)

DOCA_FLOW_COMPARE_GE – Match when A is greater than or equal to B (A ≥ B)

doca_flow_actions

This structure is a flow actions configuration.

```c
struct doca_flow_actions {
    uint8_t action_idx;
    uint32_t flags;
    enum doca_flow_resource_type decap_type;
    union {
        struct doca_flow_resource_decap_cfg decap_cfg;
        uint32_t shared_decap_id;
    };
    bool pop;
    struct doca_flow_meta meta;
    struct doca_flow_header_format outer;
    struct doca_flow_tun tun;
    enum doca_flow_resource_type encap_type;
    union {
        struct doca_flow_resource_encap_cfg encap_cfg;
        uint32_t shared_encap_id;
    };
    bool has_push;
    struct doca_flow_push_action push;
    bool has_crypto_encap;
    struct doca_flow_crypto_encap_action crypto_encap;
    struct doca_flow_crypto_action crypto;
};
```

action_idx – Index of the action, according to the position provided at pipe creation.

flags – Action flags.

decap_type – If decap_type is SHARED, the shared_decap_id takes effect. If NON_SHARED, the decap_cfg takes effect. If NONE, then there is no decap.

decap_cfg – The decap config, used when decap_type is NON_SHARED.

shared_decap_id – The shared decap ID, used when decap_type is SHARED.

pop – When set to true, a header is popped.

meta – Modify meta value.

outer – Modify outer header.

tun – Modify tunnel header.

encap_type – If encap_type is SHARED, the shared_encap_id takes effect. If NON_SHARED, the encap_cfg takes effect. If NONE, then there is no encap.

encap_cfg – The encap config, used when encap_type is NON_SHARED.

shared_encap_id – The shared encap ID, used when encap_type is SHARED.

has_push – When set to true, a header is pushed.

push – Push header data information.

has_crypto_encap – When set to true, packet reformat for crypto protocols is performed, and the doca_flow_crypto_encap_action structure describes the header and trailer to be inserted or removed.

crypto_encap – Crypto protocols header and trailer data information.

crypto – Contains crypto action information.

Action Order

When multiple actions are set, they are executed in the following order:

Crypto (decryption)

Decap

Pop

Meta

Outer

Tun

Push

Encap

Crypto (encryption)

doca_flow_encap_action

This structure is an encapsulation action configuration.

```c
struct doca_flow_encap_action {
    struct doca_flow_header_format outer;
    struct doca_flow_tun tun;
};
```

outer – L2/3/4 layers of the outer tunnel header.

L2 - src/dst MAC addresses, ether type, VLAN

L3 - IPv4/6 src/dst IP addresses, TTL/hop_limit, dscp_ecn

L4 - the UDP dst port is determined by the tunnel type

tun – The specific fields of the used tunnel protocol. Supported tunnel types: GRE, GTP, VXLAN, MPLS, GENEVE.

doca_flow_push_action

This structure is a push action configuration.

```c
struct doca_flow_push_action {
    enum doca_flow_push_action_type type;
    union {
        struct doca_flow_header_eth_vlan vlan;
    };
};
```

type – Push action type.

vlan – VLAN data.

The type field includes the following push action types:

DOCA_FLOW_PUSH_ACTION_VLAN – push VLAN.

doca_flow_crypto_encap_action

This structure is a crypto protocol packet reformat action configuration.

```c
struct doca_flow_crypto_encap_action {
    enum doca_flow_crypto_encap_action_type action_type;
    enum doca_flow_crypto_encap_net_type net_type;
    uint16_t icv_size;
    uint16_t data_size;
    uint8_t encap_data[DOCA_FLOW_CRYPTO_HEADER_LEN_MAX];
};
```

action_type – Reformat action type.

net_type – Protocol mode, network header type.

icv_size – Integrity check value size, in bytes; defines the trailer size.

data_size – Header size in bytes to be inserted from encap_data.

encap_data – Header data to be inserted.

The action_type field includes the following crypto encap action types:

DOCA_FLOW_CRYPTO_REFORMAT_NONE – No crypto encap action performed.

DOCA_FLOW_CRYPTO_REFORMAT_ENCAP – Add/insert the crypto header and trailer to the packet. data_size and encap_data should provide the data for the headers being inserted.

DOCA_FLOW_CRYPTO_REFORMAT_DECAP – Remove the crypto header and trailer from the packet. In tunnel mode, data_size and encap_data should provide the data for the headers being inserted.

The net_type field includes the following protocol/network header types:

DOCA_FLOW_CRYPTO_HEADER_NONE – No header type specified.

DOCA_FLOW_CRYPTO_HEADER_ESP_TUNNEL – ESP tunnel mode header type. On encap, the full tunnel header data for new L2+L3+ESP should be provided. On decap, the new L2 header data should be provided.

DOCA_FLOW_CRYPTO_HEADER_ESP_OVER_IP – ESP transport over IP mode header type. On encap, the data for ESP header being inserted should be provided.

DOCA_FLOW_CRYPTO_HEADER_UDP_ESP_OVER_IP – UDP+ESP transport over IP mode header type. On encap, the data for UDP+ESP headers being inserted should be provided.

DOCA_FLOW_CRYPTO_HEADER_ESP_OVER_LAN – ESP transport over UDP/TCP mode header type. On encap, the data for ESP header being inserted should be provided.

DOCA_FLOW_CRYPTO_HEADER_PSP_TUNNEL – UDP+PSP tunnel mode header type. On encap, the full tunnel header data for new L2+L3+UDP+PSP should be provided. On decap, the new L2 header data should be provided.

DOCA_FLOW_CRYPTO_HEADER_PSP_OVER_IPV4 – UDP+PSP transport over IPv4 mode header type. On encap, the data for UDP+PSP header being inserted should be provided.

DOCA_FLOW_CRYPTO_HEADER_PSP_OVER_IPV6 – UDP+PSP transport over IPv6 mode header type. On encap, the data for UDP+PSP header being inserted should be provided.

The icv_size field must be 8, 12, or 16 for ESP, and 16 for PSP.

The data_size field must not exceed DOCA_FLOW_CRYPTO_HEADER_LEN_MAX; it can be zero for decap in non-tunnel modes.
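The two size rules above can be checked before the action is built. This is a hypothetical validation sketch, not a DOCA function: enum crypto_proto stands in for the ESP/PSP net_type choice, max_len stands in for DOCA_FLOW_CRYPTO_HEADER_LEN_MAX (whose value is not given in this section), and rejecting data_size == 0 outside non-tunnel decap is an assumption drawn from the per-net_type descriptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical protocol selector standing in for the ESP/PSP net_type values. */
enum crypto_proto { CRYPTO_PROTO_ESP, CRYPTO_PROTO_PSP };

/* icv_size (trailer size in bytes): 8, 12 or 16 for ESP; 16 for PSP. */
static bool crypto_icv_size_valid(enum crypto_proto proto, uint16_t icv_size)
{
    if (proto == CRYPTO_PROTO_PSP)
        return icv_size == 16;
    return icv_size == 8 || icv_size == 12 || icv_size == 16;
}

/* data_size must not exceed max_len (stand-in for DOCA_FLOW_CRYPTO_HEADER_LEN_MAX).
 * Assumption: zero is accepted only for decap in a non-tunnel mode. */
static bool crypto_data_size_valid(uint16_t data_size, uint16_t max_len,
                                   bool is_decap, bool tunnel_mode)
{
    assert(max_len > 0);
    if (data_size > max_len)
        return false;
    if (data_size == 0)
        return is_decap && !tunnel_mode;
    return true;
}
```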

doca_flow_crypto_action

This structure is a crypto action configuration to perform packet data encryption and decryption.

```c
struct doca_flow_crypto_action {
    enum doca_flow_crypto_action_type action_type;
    enum doca_flow_crypto_resource_type resource_type;
    union {
        struct {
            bool sn_en;
        } ipsec_sa;
    };
    uint32_t crypto_id;
};
```

action_type – Crypto action type.

resource_type – PSP or IPsec resource.

ipsec_sa.sn_en – Enable ESP sequence number generation/checking (IPsec only).

crypto_id – Shared crypto resource ID or Shared PSP/IPsec SA resource ID.

The action_type field includes the following crypto action types:

DOCA_FLOW_CRYPTO_ACTION_NONE – No crypto action specified.

DOCA_FLOW_CRYPTO_ACTION_ENCRYPT – Encrypt packet data according to the chosen protocol.

DOCA_FLOW_CRYPTO_ACTION_DECRYPT – Decrypt packet data according to the chosen protocol.

The resource_type field includes the following protocols supported:

DOCA_FLOW_CRYPTO_RESOURCE_NONE – No crypto resource specified.

DOCA_FLOW_CRYPTO_RESOURCE_IPSEC_SA – IPsec resource.

DOCA_FLOW_CRYPTO_RESOURCE_PSP – PSP resource.

doca_flow_action_descs

This structure describes operations executed on packets matched by the pipe.

Detailed compatibility matrix and usage can be found in section "Summary of Action Types".

```c
struct doca_flow_action_descs {
    uint8_t nb_action_desc;
    struct doca_flow_action_desc *desc_array;
};
```

nb_action_desc – Maximum number of action descriptors (i.e., the number of elements in desc_array)

desc_array – Action descriptor array pointer

struct doca_flow_action_desc

```c
struct doca_flow_action_desc {
    enum doca_flow_action_type type;
    union {
        struct {
            struct doca_flow_desc_field src;
            struct doca_flow_desc_field dst;
            uint32_t width;
        } field_op;
    };
};
```

type – Action type.

field_op – Source and destination field descriptors for the copy/add operation. Add always applies starting from bit 0 of the field.

The type field includes the following modification types:

DOCA_FLOW_ACTION_AUTO – modification type derived from pipe action

DOCA_FLOW_ACTION_ADD – add from field value or packet field. Supports meta scratch, ipv4_ttl, ipv6_hop, tcp_seq, and tcp_ack.

Info: Adding from packet fields is supported only with NVIDIA® BlueField®-3 and NVIDIA® ConnectX®-7.

DOCA_FLOW_ACTION_COPY – copy field

doca_flow_desc_field

This struct is the flow descriptor's field configuration.

```c
struct doca_flow_desc_field {
    const char *field_string; /**< Field selection by string. */
    uint32_t bit_offset;      /**< Field bit offset. */
};
```

field_string – Field string. Describes which packet field is selected in string format.

bit_offset – Bit offset in the field.

Note: The complete list of supported field strings can be found in the "Field String Support" appendix.

doca_flow_monitor

This structure is a monitor configuration.

```c
struct doca_flow_monitor {
    enum doca_flow_resource_type meter_type; /**< Type of meter configuration. */
    union {
        struct {
            enum doca_flow_meter_limit_type limit_type; /**< Meter rate limit type: bytes / packets per second */
            uint64_t cir; /**< Committed Information Rate (bytes/second). */
            uint64_t cbs; /**< Committed Burst Size (bytes). */
        } non_shared_meter;
        struct {
            uint32_t shared_meter_id; /**< shared meter id */
            enum doca_flow_meter_color meter_init_color; /**< meter initial color */
        } shared_meter;
    };
    enum doca_flow_resource_type counter_type; /**< Type of counter configuration. */
    union {
        struct {
            uint32_t shared_counter_id; /**< shared counter id */
        } shared_counter;
    };
    uint32_t shared_mirror_id; /**< shared mirror id. */
    bool aging_enabled; /**< Specify if aging is enabled */
    uint32_t aging_sec; /**< aging time in seconds. */
};
```

meter_type – Defines the type of meter. Meters can be shared, non-shared, or not used at all.

non_shared_meter – non-shared meter params

limit_type – bytes versus packets measurement

cir – committed information rate of non-shared meter

cbs – committed burst size of non-shared meter

shared_meter – shared meter params

shared_meter_id – meter ID that can be shared among multiple pipes

meter_init_color – the initial color assigned to a packet entering the meter

counter_type – defines the type of counter. Counters can be shared, non-shared, or not used at all.

shared_counter_id – counter ID that can be shared among multiple pipes

shared_mirror_id – mirror ID that can be shared among multiple pipes

aging_enabled – set to true to enable aging

aging_sec – number of seconds from the last hit after which an entry is aged out

doca_flow_resource_type is defined as follows:

```c
enum doca_flow_resource_type {
    DOCA_FLOW_RESOURCE_TYPE_NONE,
    DOCA_FLOW_RESOURCE_TYPE_SHARED,
    DOCA_FLOW_RESOURCE_TYPE_NON_SHARED
};
```

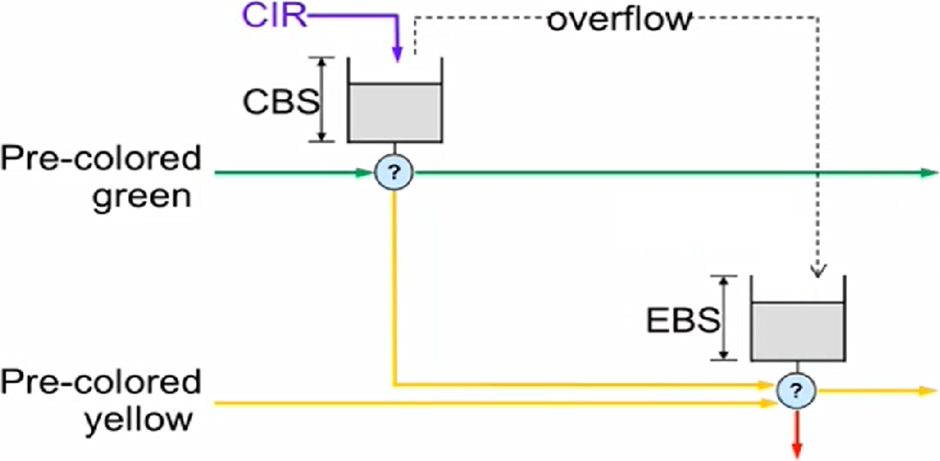

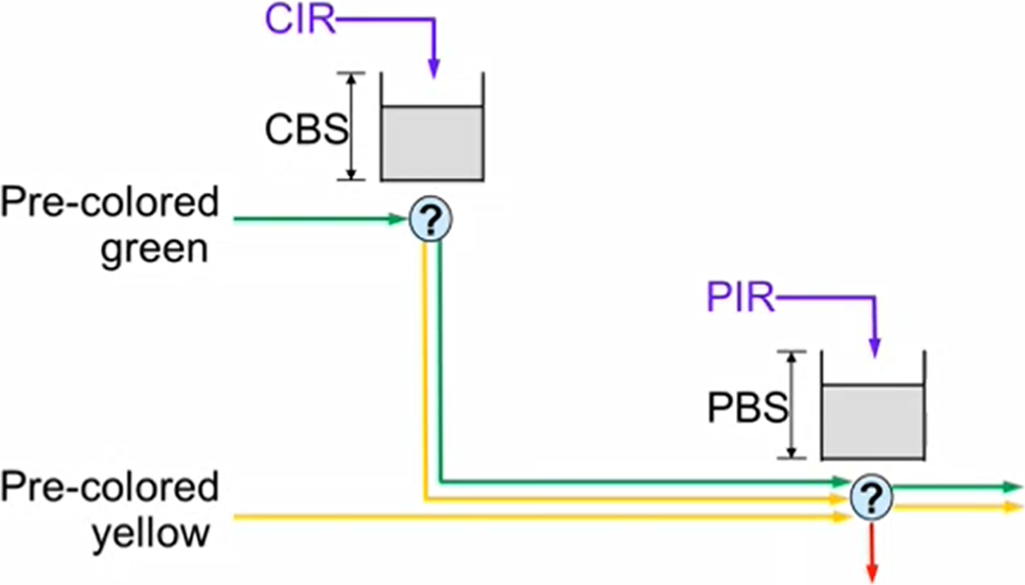

t(c) is the number of available tokens. For each packet of b bytes, if t(c) - b ≥ 0, the packet can continue and its tokens are consumed, so that t(c) = t(c) - b. If t(c) - b < 0, the packet is dropped.

CIR is the maximum bandwidth at which packets continue being confirmed; packets surpassing this bandwidth are dropped. CBS is the maximum number of bytes allowed to exceed the CIR while still being CIR-confirmed. Confirmed packets are handled based on the fwd parameter.

The number of distinct <cir, cbs> combinations is limited to 128.
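The t(c) rule above can be modelled in software to reason about CIR/CBS choices. The sketch below is a simplified single-rate token bucket, not DOCA code. The refill step (tokens accrue at CIR bytes per second, capped at CBS) and starting with a full bucket are assumptions based on the usual token-bucket model; the hardware meter's exact refill behavior is not described here. All names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical software model of a single-rate meter. */
struct token_bucket {
    uint64_t cir;    /* committed information rate, bytes/second */
    uint64_t cbs;    /* committed burst size, bytes (bucket depth) */
    uint64_t tokens; /* t(c): currently available tokens, in bytes */
};

static void tb_init(struct token_bucket *tb, uint64_t cir, uint64_t cbs)
{
    assert(cbs > 0);
    tb->cir = cir;
    tb->cbs = cbs;
    tb->tokens = cbs; /* assumption: start with a full bucket */
}

/* Assumption: tokens accrue at CIR for elapsed_us microseconds, capped at CBS. */
static void tb_refill(struct token_bucket *tb, uint64_t elapsed_us)
{
    uint64_t add = tb->cir * elapsed_us / 1000000u;

    tb->tokens = (tb->tokens + add > tb->cbs) ? tb->cbs : tb->tokens + add;
}

/* A packet of b bytes conforms when t(c) - b >= 0; its tokens are then consumed.
 * Otherwise it is dropped and t(c) is left unchanged. */
static bool tb_conform(struct token_bucket *tb, uint64_t b)
{
    if (tb->tokens < b)
        return false;
    tb->tokens -= b;
    return true;
}
```

With CIR = 1000 bytes/s and CBS = 1500 bytes, a 1000-byte packet conforms (leaving 500 tokens), a following 600-byte packet is dropped, and after half a second the bucket holds 1000 tokens again.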

Metering packets can be individual (i.e., per entry) or shared among multiple entries:

For the individual use case, set meter_type to DOCA_FLOW_RESOURCE_TYPE_NON_SHARED

For the shared use case, set meter_type to DOCA_FLOW_RESOURCE_TYPE_SHARED and shared_meter_id to the meter identifier

Counting packets can be individual (i.e., per entry) or shared among multiple entries:

For the individual use case, set counter_type to DOCA_FLOW_RESOURCE_TYPE_NON_SHARED

For the shared use case, set counter_type to DOCA_FLOW_RESOURCE_TYPE_SHARED and shared_counter_id to the counter identifier

Mirroring packets can only be used as shared with a non-zero shared_mirror_id.

doca_flow_fwd

This structure is a forward configuration which directs where the packet goes next.

```c
struct doca_flow_fwd {
    enum doca_flow_fwd_type type;
    union {
        struct {
            uint32_t rss_outer_flags;
            uint32_t rss_inner_flags;
            uint32_t *rss_queues;
            int num_of_queues;
        };
        struct {
            uint16_t port_id;
        };
        struct {
            struct doca_flow_pipe *next_pipe;
        };
        struct {
            struct doca_flow_pipe *pipe;
            uint32_t idx;
        } ordered_list_pipe;
        struct {
            struct doca_flow_target *target;
        };
    };
};
```

type – indicates the forwarding type

rss_outer_flags – RSS offload types on the outer-most layer (tunnel or non-tunnel).

rss_inner_flags – RSS offload types on the inner layer of a tunneled packet.

rss_queues – RSS queues array

num_of_queues – number of queues

port_id – destination port ID

next_pipe – next pipe pointer

ordered_list_pipe.pipe – ordered list pipe to select an entry from

ordered_list_pipe.idx – index of the ordered list pipe entry

target – target pointer

The type field includes the forwarding action types defined in the following enum:

DOCA_FLOW_FWD_RSS – forwards packets to RSS

DOCA_FLOW_FWD_PORT – forwards packets to port

DOCA_FLOW_FWD_PIPE – forwards packets to another pipe

DOCA_FLOW_FWD_DROP – drops packets

DOCA_FLOW_FWD_ORDERED_LIST_PIPE – forwards packets to a specific entry in an ordered list pipe

DOCA_FLOW_FWD_TARGET – forwards packets to a target

The rss_outer_flags and rss_inner_flags fields must be configured exclusively (either outer or inner).

Each outer/inner field is a bitwise OR of the RSS fields defined in the following enum:

DOCA_FLOW_RSS_IPV4 – RSS by IPv4 header

DOCA_FLOW_RSS_IPV6 – RSS by IPv6 header

DOCA_FLOW_RSS_UDP – RSS by UDP header

DOCA_FLOW_RSS_TCP – RSS by TCP header

DOCA_FLOW_RSS_ESP – RSS by ESP header

When specifying an RSS L4 type (DOCA_FLOW_RSS_TCP or DOCA_FLOW_RSS_UDP), it must be bitwise ORed with an RSS L3 type (DOCA_FLOW_RSS_IPV4 or DOCA_FLOW_RSS_IPV6).
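The two RSS rules above (outer and inner flags are mutually exclusive, and an L4 type needs an L3 type) can be checked before the forward structure is filled in. A minimal sketch: the RSS_* bit values are hypothetical placeholders, not the actual DOCA_FLOW_RSS_* values, and rss_flags_valid is not a DOCA function.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical bit values for this sketch only; use the DOCA_FLOW_RSS_* enum in real code. */
enum {
    RSS_IPV4 = 1u << 0,
    RSS_IPV6 = 1u << 1,
    RSS_UDP  = 1u << 2,
    RSS_TCP  = 1u << 3,
    RSS_ESP  = 1u << 4,
};

/* Checks the rules stated above:
 * 1. rss_outer_flags and rss_inner_flags are not both configured;
 * 2. an L4 type (TCP/UDP) is always ORed with an L3 type (IPv4/IPv6). */
static bool rss_flags_valid(uint32_t outer, uint32_t inner)
{
    if (outer != 0 && inner != 0)
        return false;

    uint32_t flags = outer ? outer : inner;
    bool has_l4 = (flags & (RSS_TCP | RSS_UDP)) != 0;
    bool has_l3 = (flags & (RSS_IPV4 | RSS_IPV6)) != 0;

    return !has_l4 || has_l3;
}
```

For example, RSS_IPV4 | RSS_TCP on the outer layer passes, while RSS_TCP alone, or flags set on both layers, fails.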

doca_flow_query

This struct is a flow query result.

```c
struct doca_flow_query {
    uint64_t total_bytes;
    uint64_t total_pkts;
};
```

The struct doca_flow_query contains the following elements:

total_bytes – total bytes that hit this flow.

total_pkts – total packets that hit this flow.

doca_flow_init

This function is the global initialization function for DOCA Flow.

```c
doca_error_t doca_flow_init(const struct doca_flow_cfg *cfg);
```

cfg [in] – Pointer to flow config structure.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input

DOCA_ERROR_NO_MEMORY – Memory allocation failed

DOCA_ERROR_NOT_SUPPORTED – Unsupported pipe type

DOCA_ERROR_UNKNOWN – Otherwise

doca_flow_init must be invoked before any other function in this API (except the doca_flow_cfg API, used to create and set doca_flow_cfg). This is a one-time call used for DOCA Flow initialization and global configuration.

doca_flow_destroy

This function is the global destroy function for DOCA Flow.

```c
void doca_flow_destroy(void);
```

doca_flow_destroy must be invoked last to stop using DOCA Flow.

doca_flow_port_start

This function starts a port with its given configuration. It creates one port in the DOCA Flow layer, allocates all resources used by this port, and creates the default offload flow rules to redirect packets into software queues.

```c
doca_error_t doca_flow_port_start(const struct doca_flow_port_cfg *cfg,
                                  struct doca_flow_port **port);
```

cfg [in] – Pointer to DOCA Flow port config structure.

port [out] – Pointer to DOCA Flow port handler on success.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input

DOCA_ERROR_NO_MEMORY – Memory allocation failed

DOCA_ERROR_NOT_SUPPORTED – Unsupported pipe type

DOCA_ERROR_UNKNOWN – Otherwise

doca_flow_port_start modifies the state of the underlying DPDK port implementing the DOCA port. The DPDK port is stopped, then the flow configuration is applied, calling rte_flow_configure before starting the port again.

doca_flow_port_start must be called before any other DOCA Flow API to avoid conflicts.

In switch mode, the representor ports must be stopped before the switch port is stopped.

doca_flow_port_stop

This function releases all resources used by a DOCA Flow port and removes the port's default offload flow rules.

```c
doca_error_t doca_flow_port_stop(struct doca_flow_port *port);
```

port [in] – Pointer to DOCA Flow port handler.

doca_flow_port_pair

This function pairs two DOCA ports. After successfully pairing the two ports, traffic received on either port is transmitted via the other port by default.

For a pair of non-representor ports, this operation is required before port-based forwarding flows can be created. It is optional, however, if either port is a representor.

The order of the two paired ports does not matter.

A port cannot be paired with itself.

```c
doca_error_t doca_flow_port_pair(struct doca_flow_port *port,
                                 struct doca_flow_port *pair_port);
```

port [in] – Pointer to the DOCA Flow port structure.

pair_port [in] – Pointer to another DOCA Flow port structure.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input.

DOCA_ERROR_NO_MEMORY – Memory allocation failed.

DOCA_ERROR_UNKNOWN – Otherwise.

doca_flow_pipe_create

This function creates a new pipeline to match and offload specific packets. The pipeline configuration is defined in the doca_flow_pipe_cfg (configured using the doca_flow_pipe_cfg_set_* API). The API creates a new pipe but does not start the hardware offload.

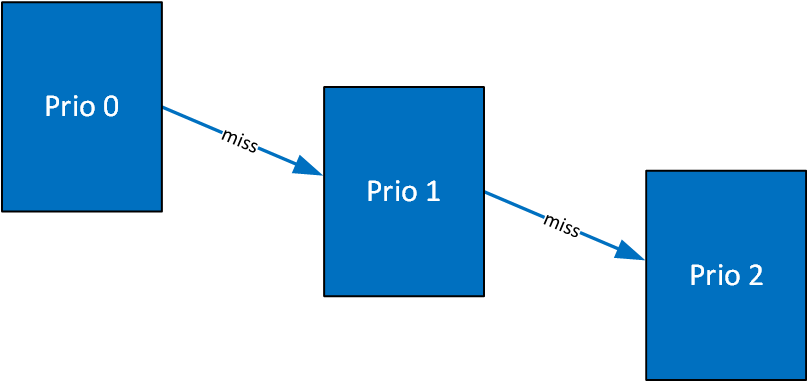

When cfg type is DOCA_FLOW_PIPE_CONTROL, the function creates a special type of pipe that can have dynamic matches and forwards with priority.

```c
doca_error_t
doca_flow_pipe_create(const struct doca_flow_pipe_cfg *cfg,
                      const struct doca_flow_fwd *fwd,
                      const struct doca_flow_fwd *fwd_miss,
                      struct doca_flow_pipe **pipe);
```

cfg [in] – Pointer to flow pipe config structure.

fwd [in] – Pointer to flow forward config structure.

fwd_miss [in] – Pointer to flow forward miss config structure. NULL for no fwd_miss.

Note: When a fwd_miss configuration is provided for basic and hash pipes, it is executed on a miss. For any other pipe type, the configuration is ignored.

pipe [out] – Pointer to pipe handler on success.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input

DOCA_ERROR_NOT_SUPPORTED – Unsupported pipe type

DOCA_ERROR_DRIVER – Driver error
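The fwd_miss note above can be illustrated with a toy model in which only basic and hash pipes keep their miss configuration. Everything here (`enum toy_pipe_type`, `struct toy_pipe`, `toy_pipe_init()`, `toy_pipe_decide()`) is hypothetical and stands in for DOCA Flow internals that this guide does not describe.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical toy model; these are not the DOCA Flow enums or structs. */
enum toy_pipe_type { TOY_PIPE_BASIC, TOY_PIPE_HASH, TOY_PIPE_CONTROL, TOY_PIPE_LPM };

struct toy_fwd {
	int target;
};

struct toy_pipe {
	enum toy_pipe_type type;
	const struct toy_fwd *fwd_miss; /* NULL when no fwd_miss applies */
};

/* Keep fwd_miss only for pipe types that honour it; ignore it otherwise. */
void toy_pipe_init(struct toy_pipe *pipe, enum toy_pipe_type type,
		   const struct toy_fwd *fwd_miss)
{
	bool honours_miss = (type == TOY_PIPE_BASIC || type == TOY_PIPE_HASH);

	pipe->type = type;
	pipe->fwd_miss = honours_miss ? fwd_miss : NULL;
}

/* Forwarding decision: the entry's fwd on a hit (hit_fwd != NULL), the
 * pipe's fwd_miss on a miss. NULL means no miss action is configured. */
const struct toy_fwd *toy_pipe_decide(const struct toy_pipe *pipe,
				      const struct toy_fwd *hit_fwd)
{
	return hit_fwd != NULL ? hit_fwd : pipe->fwd_miss;
}
```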

doca_flow_pipe_add_entry

This function adds a new entry to a pipe. The pipe defines which fields to match, and the entry supplies the specific packet field values to match on. This API performs the actual hardware offload of the entry.

doca_error_t
doca_flow_pipe_add_entry(uint16_t pipe_queue,
                         struct doca_flow_pipe *pipe,
                         const struct doca_flow_match *match,
                         const struct doca_flow_actions *actions,
                         const struct doca_flow_monitor *monitor,
                         const struct doca_flow_fwd *fwd,
                         uint32_t flags,
                         void *usr_ctx,
                         struct doca_flow_pipe_entry **entry);

pipe_queue [in] – Queue identifier

pipe [in] – Pointer to flow pipe

match [in] – Pointer to flow match. Indicates specific packet match information.

actions [in] – Pointer to modify actions. Indicates specific modify information.

monitor [in] – Pointer to monitor actions

fwd [in] – Pointer to flow forward actions

flags [in] – Can be set to DOCA_FLOW_WAIT_FOR_BATCH or DOCA_FLOW_NO_WAIT:

DOCA_FLOW_WAIT_FOR_BATCH – This entry waits to be pushed to hardware.

DOCA_FLOW_NO_WAIT – This entry is pushed to hardware immediately.

usr_ctx [in] – Pointer to user context (see note at doca_flow_entry_process_cb)

entry [out] – Pointer to pipe entry handler on success

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input

DOCA_ERROR_DRIVER – Driver error

Some sanity checks may be omitted to avoid extra delays during flow insertion. For example, when forwarding to a pipe, the next_pipe field of struct doca_flow_fwd must contain a valid pointer. DOCA does not detect misconfigurations like these in the release build of the library.
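The difference between the two flags values can be sketched with a toy queue model. This is an illustration under stated assumptions, not DOCA code: `struct toy_queue`, `toy_add_entry()`, and `toy_queue_flush()` are hypothetical, and `toy_queue_flush()` merely stands in for whatever later pushes a batch to hardware. The model follows the text literally, so a NO_WAIT add pushes only its own entry; it does not model how the real library treats entries batched earlier on the same queue.

```c
#include <stddef.h>

/* Hypothetical toy model of one pipe queue; not the DOCA Flow API. */
enum toy_flags { TOY_WAIT_FOR_BATCH, TOY_NO_WAIT };

#define TOY_QUEUE_CAP 16

struct toy_queue {
	int pending[TOY_QUEUE_CAP]; /* accepted but not yet in "hardware" */
	size_t nb_pending;
	size_t nb_in_hw;            /* entries already pushed to "hardware" */
};

/* Push every pending entry (stand-in for the real batch push). */
void toy_queue_flush(struct toy_queue *q)
{
	q->nb_in_hw += q->nb_pending;
	q->nb_pending = 0;
}

/* NO_WAIT pushes the entry immediately; WAIT_FOR_BATCH defers it.
 * Returns 0 on success, -1 if the pending batch is full. */
int toy_add_entry(struct toy_queue *q, int entry, enum toy_flags flags)
{
	if (flags == TOY_NO_WAIT) {
		q->nb_in_hw++;
		return 0;
	}
	if (q->nb_pending == TOY_QUEUE_CAP)
		return -1;
	q->pending[q->nb_pending++] = entry;
	return 0;
}
```

Batching trades insertion latency for fewer hardware pushes, which is why WAIT_FOR_BATCH is useful when many entries are added in a burst.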

doca_flow_pipe_update_entry

This function overrides the actions that were specified when the entry was added or last updated. To leave some actions unmodified, the application must pass their current values again as arguments to the update function.

doca_error_t
doca_flow_pipe_update_entry(uint16_t pipe_queue,
                            struct doca_flow_pipe *pipe,
                            const struct doca_flow_actions *actions,
                            const struct doca_flow_monitor *mon,
                            const struct doca_flow_fwd *fwd,
                            const enum doca_flow_flags_type flags,
                            struct doca_flow_pipe_entry *entry);

pipe_queue [in] – Queue identifier.

pipe [in] – Pointer to flow pipe.

actions [in] – Pointer to modify actions. Indicates specific modify information.

mon [in] – Pointer to monitor actions.

fwd [in] – Pointer to flow forward actions.

flags [in] – Can be set to DOCA_FLOW_WAIT_FOR_BATCH or DOCA_FLOW_NO_WAIT:

DOCA_FLOW_WAIT_FOR_BATCH – This entry waits to be pushed to hardware.

DOCA_FLOW_NO_WAIT – This entry is pushed to hardware immediately.

entry [in] – Pointer to pipe entry to update.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input

DOCA_ERROR_DRIVER – Driver error
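Because an update replaces the previous actions rather than merging with them, a partial change is made by copying the current values and modifying only the field of interest. The toy C sketch below shows this pattern; `struct toy_actions`, `struct toy_entry`, and both functions are hypothetical, and the real struct doca_flow_actions has a different layout.

```c
#include <stdint.h>

/* Hypothetical toy model; field names are illustrative, not DOCA structs. */
struct toy_actions {
	uint32_t meta;     /* e.g. a metadata value to set */
	uint16_t dst_port; /* e.g. a rewritten L4 destination port */
};

struct toy_entry {
	struct toy_actions actions;
};

/* An update replaces the whole action set, as described above. */
void toy_update_entry(struct toy_entry *entry, const struct toy_actions *actions)
{
	entry->actions = *actions;
}

/* Change only dst_port: start from the current actions so that every
 * other action is passed back unchanged. */
void toy_update_dst_port(struct toy_entry *entry, uint16_t new_port)
{
	struct toy_actions next = entry->actions;

	next.dst_port = new_port;
	toy_update_entry(entry, &next);
}
```

Passing only the changed field, as in the second assertion of a naive update, silently resets every other action to whatever the new structure contains.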

doca_flow_pipe_control_add_entry

This function adds a new entry to a control pipe. The pipe defines which fields can be matched, and each entry supplies its own match values, mask, and priority. This API performs the actual hardware offload of the entry.

doca_error_t
doca_flow_pipe_control_add_entry(uint16_t pipe_queue,
                                 uint32_t priority,
                                 struct doca_flow_pipe *pipe,
                                 const struct doca_flow_match *match,
                                 const struct doca_flow_match *match_mask,
                                 const struct doca_flow_match_condition *condition,
                                 const struct doca_flow_actions *actions,
                                 const struct doca_flow_actions *actions_mask,
                                 const struct doca_flow_action_descs *action_descs,
                                 const struct doca_flow_monitor *monitor,
                                 const struct doca_flow_fwd *fwd,
                                 struct doca_flow_pipe_entry **entry);

pipe_queue [in] – Queue identifier.

priority [in] – Priority value.

pipe [in] – Pointer to flow pipe.

match [in] – Pointer to flow match. Indicates specific packet match information.

match_mask [in] – Pointer to flow match mask information.

condition [in] – Pointer to flow match condition information.

actions [in] – Pointer to modify actions. Indicates specific modify information.

actions_mask [in] – Pointer to modify actions' mask. Indicates specific modify mask information.

action_descs [in] – Pointer to action descriptors.

monitor [in] – Pointer to monitor actions.

fwd [in] – Pointer to flow forward actions.

entry [out] – Pointer to pipe entry handler on success.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input

DOCA_ERROR_DRIVER – Driver error

A match condition cannot be combined with an exact match. Therefore, when condition is provided, match_mask must be NULL.

When condition uses an immediate value, that value must be provided in the match structure. Otherwise, match must also be NULL.
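The two rules above can be expressed as an argument check that an application might run before calling doca_flow_pipe_control_add_entry(). The structures here are hypothetical placeholders (`struct toy_match`, and `struct toy_condition` with an assumed `uses_immediate` flag); only whether each pointer is NULL matters for these rules.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-ins for the real structures. */
struct toy_match {
	int placeholder;
};

struct toy_condition {
	bool uses_immediate; /* assumed flag: condition compares to an immediate */
};

/* Returns true when (match, match_mask, condition) obeys the rules for
 * control-pipe entries that use a match condition. */
bool toy_control_args_valid(const struct toy_match *match,
			    const struct toy_match *match_mask,
			    const struct toy_condition *condition)
{
	if (condition == NULL)
		return true;  /* no condition: these two rules do not apply */
	if (match_mask != NULL)
		return false; /* a condition cannot be mixed with exact match */
	if (condition->uses_immediate)
		return match != NULL; /* the immediate value is carried in match */
	return match == NULL;
}
```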

doca_flow_pipe_lpm_add_entry

This function adds a new entry to an LPM pipe. When flags is set to DOCA_FLOW_NO_WAIT, this API performs the actual hardware offload of all entries.

doca_error_t
doca_flow_pipe_lpm_add_entry(uint16_t pipe_queue,
                             struct doca_flow_pipe *pipe,
                             const struct doca_flow_match *match,
                             const struct doca_flow_match *match_mask,
                             const struct doca_flow_actions *actions,
                             const struct doca_flow_monitor *monitor,
                             const struct doca_flow_fwd *fwd,
                             uint32_t flags,
                             void *usr_ctx,
                             struct doca_flow_pipe_entry **entry);

pipe_queue [in] – Queue identifier.

pipe [in] – Pointer to flow pipe.

match [in] – Pointer to flow match. Indicates specific packet match information.

match_mask [in] – Pointer to flow match mask information.

actions [in] – Pointer to modify actions. Indicates specific modify information.

monitor [in] – Pointer to monitor actions.

fwd [in] – Pointer to flow forward actions.

flags [in] – Can be set to DOCA_FLOW_WAIT_FOR_BATCH or DOCA_FLOW_NO_WAIT:

DOCA_FLOW_WAIT_FOR_BATCH – LPM collects this flow entry.

DOCA_FLOW_NO_WAIT – LPM adds this entry, builds the LPM software tree, and pushes all entries to hardware immediately.

usr_ctx [in] – Pointer to user context (see note at doca_flow_entry_process_cb)

entry [out] – Pointer to pipe entry handler on success.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input.

DOCA_ERROR_DRIVER – Driver error.
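The WAIT_FOR_BATCH and NO_WAIT behavior of the LPM pipe can be illustrated with a small software longest-prefix-match table over IPv4 addresses. This is a hypothetical toy model, not the DOCA LPM implementation: instead of a tree it keeps the entries sorted by prefix length, and `n_hw` marks which entries were "pushed" by the most recent NO_WAIT add. All names (`struct toy_lpm`, `toy_lpm_add()`, `toy_lpm_lookup()`) are illustrative.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical toy LPM table over IPv4 destinations. */
struct toy_lpm_entry {
	uint32_t prefix; /* network address, host byte order */
	uint8_t len;     /* prefix length, 0..32 */
	int fwd;         /* forwarding target chosen by this entry */
};

#define TOY_LPM_CAP 32

struct toy_lpm {
	struct toy_lpm_entry e[TOY_LPM_CAP];
	size_t n;    /* entries collected so far */
	size_t n_hw; /* entries included in the last build/push */
};

static uint32_t toy_mask(uint8_t len)
{
	return len == 0 ? 0 : 0xFFFFFFFFu << (32 - len);
}

/* qsort comparator: longer prefixes first. */
static int toy_longer_first(const void *a, const void *b)
{
	const struct toy_lpm_entry *x = a, *y = b;

	return (int)y->len - (int)x->len;
}

/* Collect an entry; with no_wait, also rebuild and "push" all entries.
 * Returns 0 on success, -1 on a full table or invalid length. */
int toy_lpm_add(struct toy_lpm *t, uint32_t prefix, uint8_t len, int fwd,
		int no_wait)
{
	if (t->n == TOY_LPM_CAP || len > 32)
		return -1;
	t->e[t->n++] = (struct toy_lpm_entry){prefix & toy_mask(len), len, fwd};
	if (no_wait) {
		qsort(t->e, t->n, sizeof(t->e[0]), toy_longer_first);
		t->n_hw = t->n;
	}
	return 0;
}

/* Longest-prefix lookup over pushed entries; returns -1 on a miss. */
int toy_lpm_lookup(const struct toy_lpm *t, uint32_t addr)
{
	for (size_t i = 0; i < t->n_hw; i++)
		if ((addr & toy_mask(t->e[i].len)) == t->e[i].prefix)
			return t->e[i].fwd;
	return -1;
}
```

Because lookups scan longer prefixes first, the first hit is the longest matching prefix, which is the LPM property the pipe provides.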

doca_flow_pipe_lpm_update_entry

This function updates an LPM entry with a new set of actions.

doca_error_t
doca_flow_pipe_lpm_update_entry(uint16_t pipe_queue,
                                struct doca_flow_pipe *pipe,
                                const struct doca_flow_actions *actions,
                                const struct doca_flow_monitor *monitor,
                                const struct doca_flow_fwd *fwd,
                                const enum doca_flow_flags_type flags,
                                struct doca_flow_pipe_entry *entry);

pipe_queue [in] – Queue identifier.

pipe [in] – Pointer to flow pipe.

actions [in] – Pointer to modify actions. Indicates specific modify information.

monitor [in] – Pointer to monitor actions.

fwd [in] – Pointer to flow forward actions.

flags [in] – Can be set to DOCA_FLOW_WAIT_FOR_BATCH or DOCA_FLOW_NO_WAIT:

DOCA_FLOW_WAIT_FOR_BATCH – LPM collects this flow entry.

DOCA_FLOW_NO_WAIT – LPM updates this entry and pushes all entries to hardware immediately.

entry [in] – Pointer to pipe entry to update.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input.

DOCA_ERROR_DRIVER – Driver error.

doca_flow_pipe_acl_add_entry

This function adds a new entry to an ACL pipe. This API performs the actual hardware offload for all entries when flags is set to DOCA_FLOW_NO_WAIT.

doca_error_t
doca_flow_pipe_acl_add_entry(uint16_t pipe_queue,
                             struct doca_flow_pipe *pipe,
                             const struct doca_flow_match *match,
                             const struct doca_flow_match *match_mask,
                             uint8_t priority,
                             const struct doca_flow_fwd *fwd,
                             uint32_t flags,
                             void *usr_ctx,
                             struct doca_flow_pipe_entry **entry);

pipe_queue [in] – Queue identifier.

pipe [in] – Pointer to flow pipe.

match [in] – Pointer to flow match. Indicates specific packet match information.

match_mask [in] – Pointer to flow match mask information.

priority [in] – Priority value.

fwd [in] – Pointer to flow forward actions.

flags [in] – Can be set to DOCA_FLOW_WAIT_FOR_BATCH or DOCA_FLOW_NO_WAIT:

DOCA_FLOW_WAIT_FOR_BATCH – ACL collects this flow entry.

DOCA_FLOW_NO_WAIT – ACL adds this entry, builds the ACL software model, and pushes all entries to hardware immediately.

usr_ctx [in] – Pointer to user context (see note at doca_flow_entry_process_cb)

entry [out] – Pointer to pipe entry handler on success.

Returns – DOCA_SUCCESS on success. Error code in case of failure:

DOCA_ERROR_INVALID_VALUE – Received invalid input

DOCA_ERROR_DRIVER – Driver error
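The combination of match_mask and priority in an ACL entry can be sketched as a masked best-priority match. This toy C model is hypothetical (`struct toy_acl_rule`, `toy_acl_classify()`), matches only a source IPv4 address and a destination port, and assumes that a lower priority value wins; check the DOCA Flow reference for the actual priority ordering.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical toy ACL; not the DOCA ACL pipe. */
struct toy_acl_key {
	uint32_t src_ip;
	uint16_t dst_port;
};

struct toy_acl_rule {
	struct toy_acl_key match;
	struct toy_acl_key mask; /* 1 bits are compared, 0 bits are wildcards */
	uint8_t priority;        /* assumption: lower value = higher priority */
	int fwd;
};

/* Nonzero when every masked bit of the key equals the rule's value. */
static int toy_acl_hit(const struct toy_acl_rule *r, const struct toy_acl_key *k)
{
	return ((k->src_ip & r->mask.src_ip) == (r->match.src_ip & r->mask.src_ip)) &&
	       ((k->dst_port & r->mask.dst_port) ==
		(r->match.dst_port & r->mask.dst_port));
}

/* Return the fwd of the best-priority matching rule, or -1 on a miss. */
int toy_acl_classify(const struct toy_acl_rule *rules, size_t n,
		     const struct toy_acl_key *k)
{
	const struct toy_acl_rule *best = NULL;

	for (size_t i = 0; i < n; i++)
		if (toy_acl_hit(&rules[i], k) &&
		    (best == NULL || rules[i].priority < best->priority))
			best = &rules[i];
	return best != NULL ? best->fwd : -1;
}
```

Priority is what resolves overlapping rules: in the usage below, a packet to port 22 matches both a broad /8 rule and a narrower port-specific rule, and the higher-priority rule decides the forwarding.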

doca_flow_pipe_ordered_list_add_entry