Overview

Welcome to the trial of NVIDIA AI Workflows on NVIDIA LaunchPad.

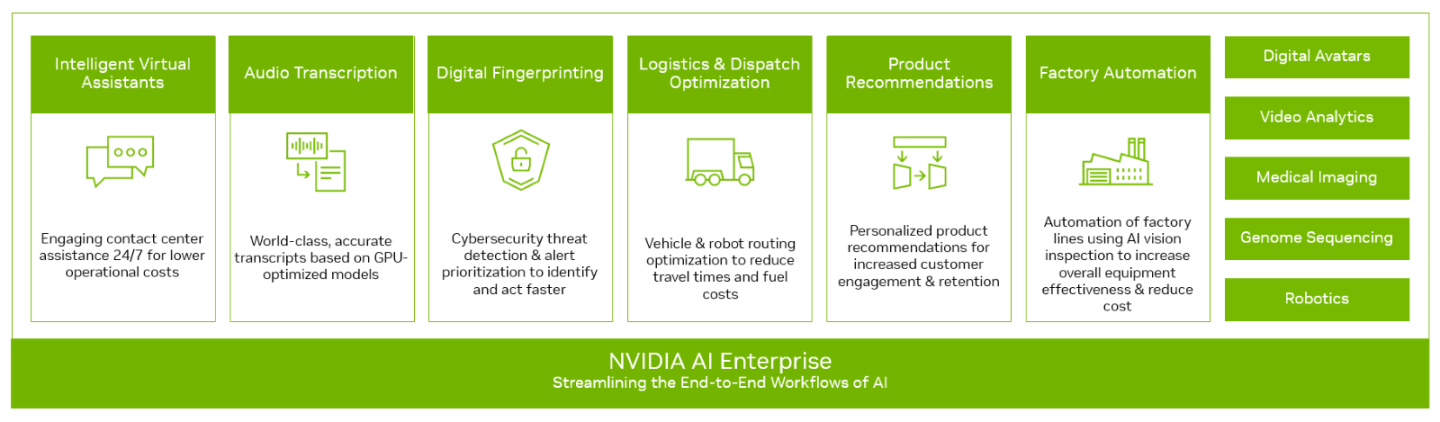

NVIDIA AI Workflows are available as part of NVIDIA AI Enterprise – an end-to-end, secure, cloud-native suite of AI software, enabling organizations to solve new challenges while increasing operational efficiency. Organizations start their AI journey by using the open, freely available NGC libraries and frameworks to experiment and pilot. When they’re ready to move from pilot to production, enterprises can easily transition to a fully managed and secure AI platform with an NVIDIA AI Enterprise subscription. This gives enterprises deploying business-critical AI the assurance of business continuity with NVIDIA Enterprise Support and access to NVIDIA AI experts.

Within this LaunchPad lab, you will gain experience with AI workflows that can accelerate your path to AI outcomes. These are packaged AI workflow examples that include NVIDIA AI frameworks and pretrained models, as well as resources such as Helm charts, Jupyter notebooks, and documentation to help customers get started in building AI-based solutions more quickly. NVIDIA’s cloud-native AI workflows run as microservices that can be deployed on Kubernetes alone or with other microservices to create production-ready applications.

Key Benefits:

- Reduce development time at a lower cost

- Improve accuracy and performance

- Gain confidence in outcomes by leveraging NVIDIA expertise

Speech AI is used across various industries, such as financial services, retail, healthcare, and manufacturing. For this lab, you will walk through the NVIDIA virtual assistant AI workflow, which includes audio transcription and adds Riva customizations for the domain-specific language used within the financial services industry.

To assist you in your LaunchPad journey, there are a couple of important links on the left-hand navigation pane of this page. In the next step, you will use the Jupyter and SSH links.

NVIDIA AI Workflows are intended to provide reference solutions that use NVIDIA frameworks to build AI solutions for common use cases. These workflows provide guidance on fine-tuning and AI model creation that builds on NVIDIA frameworks. They highlight the pipelines used to create applications, and they give guidance on deploying customized applications and integrating them with components typically found in enterprise environments, such as components for orchestration and management, storage, security, and networking.

NVIDIA AI Workflows are available on NVIDIA NGC for NVIDIA AI Enterprise software customers.

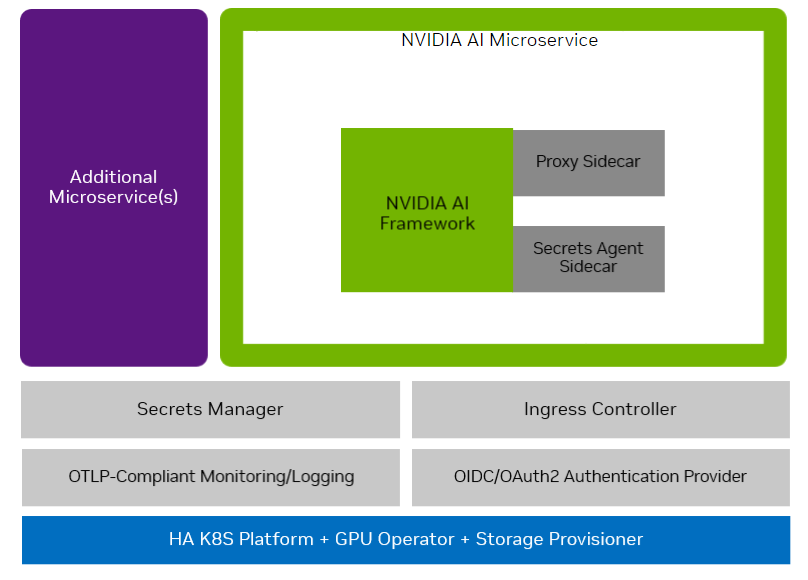

NVIDIA AI Workflows are deployed as a package containing the AI framework and the tools for automating a cloud-native solution. AI Workflows also have packaged components that include enterprise-ready implementations with best practices that ensure reliability, security, performance, scalability, and interoperability, while still leaving you a path to deviate from them.

A typical workflow may look like the following diagram:

The components and instructions used in the workflow are intended to be used as examples for integration and may not be sufficiently production-ready on their own as written. The workflow should be customized and integrated into one’s infrastructure, using the workflow as a reference. For example, all of the instructions in these workflows assume a single-node infrastructure, whereas production deployments should be performed in a high availability (HA) environment.

To reduce the time to develop an intelligent virtual assistant for a contact center, NVIDIA has developed two workflows centered around Speech AI:

- Audio Transcription

- Intelligent Virtual Assistant

The audio transcription solution demonstrates how to use NVIDIA Riva AI Services, specifically automatic speech recognition (ASR) for transcription.

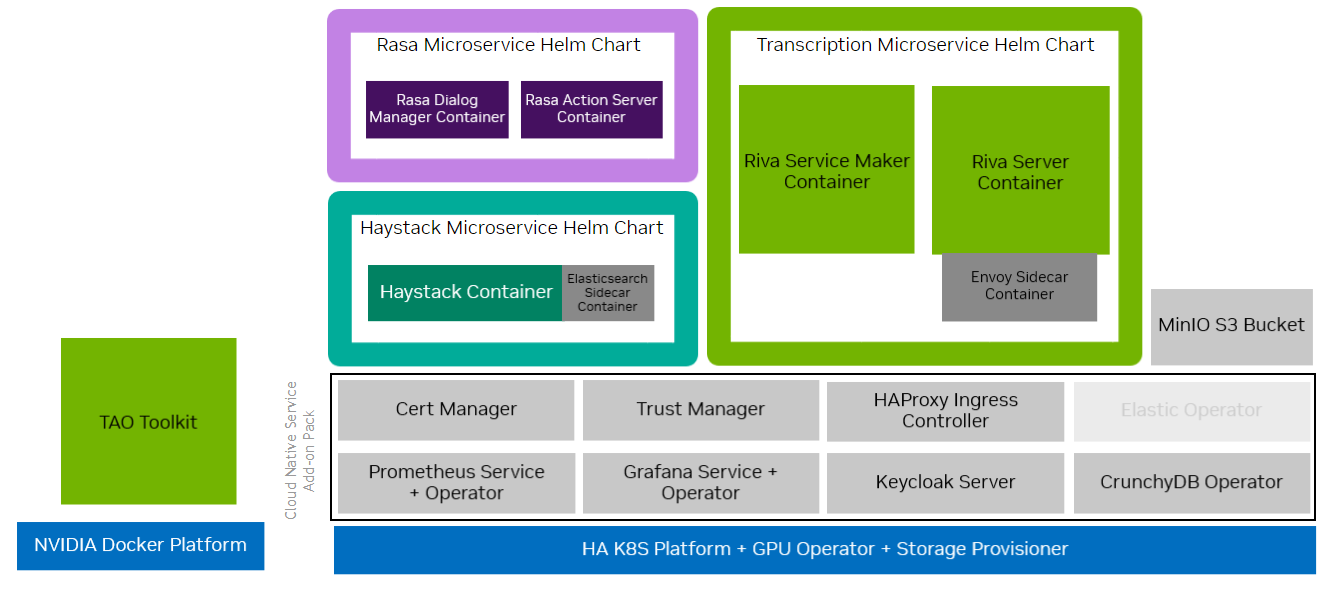

The intelligent virtual assistant integrates ASR and text-to-speech (TTS) into a conversational application pipeline, illustrating how to combine Riva services with third-party open-source NLP services such as Haystack and open-source dialog managers such as Rasa.

These workflows include the following:

- Training and inference pipelines for Audio Transcription and for deploying an Intelligent Virtual Assistant

- A production solution deployment reference that includes components such as authentication, logging, and monitoring for the workflow

- A cloud-native deployable bundle packaged as Helm charts

The Intelligent Virtual Assistant workflow demonstrates using Riva ASR to transcribe a user’s spoken question, interpreting the user’s intent in the question, and constructing a relevant response, which is delivered to the user in synthesized natural speech using Riva TTS.
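The four stages described above (transcribe, interpret, respond, synthesize) can be pictured as a simple function chain. The following is only a minimal sketch under stated assumptions: every function name here is hypothetical, the "audio" is plain UTF-8 text, and none of this is the Riva, Rasa, or Haystack API. It is meant to show how the stages hand data to one another, not how the deployed microservices are implemented.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """Everything produced while handling one spoken question."""
    transcript: str
    intent: str
    response_text: str
    response_audio: bytes


def transcribe(audio: bytes) -> str:
    """Stand-in for the ASR stage (assumption: 'audio' is UTF-8 text)."""
    return audio.decode("utf-8")


def interpret(transcript: str) -> str:
    """Stand-in for intent recognition, using a naive keyword check."""
    text = transcript.lower()
    if "balance" in text:
        return "check_balance"
    if "interest" in text:
        return "ask_interest_rate"
    return "fallback"


# Hypothetical canned responses, one per intent.
RESPONSES = {
    "check_balance": "I can help you check your account balance.",
    "ask_interest_rate": "I can look up current interest rates for you.",
    "fallback": "Sorry, I did not understand the question.",
}


def respond(intent: str) -> str:
    """Stand-in for constructing a relevant response to the intent."""
    return RESPONSES[intent]


def synthesize(text: str) -> bytes:
    """Stand-in for the TTS stage; returns bytes instead of real audio."""
    return text.encode("utf-8")


def handle_turn(audio: bytes) -> Turn:
    """Run one question through all four stages in order."""
    transcript = transcribe(audio)
    intent = interpret(transcript)
    response_text = respond(intent)
    return Turn(transcript, intent, response_text, synthesize(response_text))
```

Calling `handle_turn(b"What is my account balance?")` returns a `Turn` whose `intent` field is `check_balance`. In the lab, each stage is a separate microservice rather than a local function, so the hand-offs happen over the network instead of through return values.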

This Virtual Assistant workflow was pre-deployed on LaunchPad’s Kubernetes (K8s) distribution as a collection of Helm charts, each running a microservice:

- Riva ASR and TTS services

- Rasa dialog manager with intent and slot management

- Haystack NLP service for Information Retrieval Question Answering (IRQA)

The Helm charts also integrate with Cloud Native components like Keycloak, Prometheus, and Grafana for authentication and monitoring.

- Rasa Dialog Management and Training the Intent Slot Classification Model

- Information Retrieval and Question Answering (IRQA) with Haystack NLP

Every intelligent virtual assistant requires a way to manage the state and flow of the conversation. A dialog manager recognizes the user intent and routes the question to the correct prepared response or to a fulfillment service. This provides context for the question and frames how the Virtual Assistant can respond properly. In this workflow, Rasa is the dialog manager. It also maintains the dialog state by filling slots, defined by the developer, that remember the context of the conversation. Rasa can be trained to understand user intent by providing examples of each intent and of the slots to be recognized.
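To make the ideas of intent, slots, and dialog state concrete, here is a toy dialog manager. It is a sketch only: the intents, slot names, and keyword rules are invented for illustration, and a trained Rasa model classifies intents and extracts slots statistically rather than with the hand-written patterns assumed here.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical intents with trigger keywords (not Rasa training data).
INTENT_KEYWORDS = {
    "transfer_money": ("transfer", "send"),
    "check_balance": ("balance",),
}

# Slots each intent needs before it can be fulfilled.
REQUIRED_SLOTS = {
    "transfer_money": ("amount", "recipient"),
    "check_balance": (),
}


@dataclass
class DialogState:
    """Context remembered between user utterances."""
    intent: Optional[str] = None
    slots: dict = field(default_factory=dict)


def recognize_intent(text):
    """Return the first intent whose keyword appears in the text, else None."""
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(word in lowered for word in keywords):
            return intent
    return None


def extract_slots(text):
    """Pull slot values out of the text with simple patterns."""
    slots = {}
    amount = re.search(r"(\d+(?:\.\d+)?)\s*dollars", text, re.IGNORECASE)
    if amount:
        slots["amount"] = amount.group(1)
    recipient = re.search(r"\bto\s+([A-Z][a-z]+)", text)
    if recipient:
        slots["recipient"] = recipient.group(1)
    return slots


def step(state, text):
    """Update the state with one utterance and return the next action name."""
    intent = recognize_intent(text)
    if intent is not None and intent != state.intent:
        # A new intent starts a new task, so earlier slots no longer apply.
        state.intent = intent
        state.slots = {}
    state.slots.update(extract_slots(text))
    if state.intent is None:
        return "ask_rephrase"
    missing = [s for s in REQUIRED_SLOTS[state.intent] if s not in state.slots]
    if missing:
        return "ask_" + missing[0]
    return "fulfill_" + state.intent
```

With a fresh `DialogState()`, the utterance "I want to transfer 50 dollars" fills the `amount` slot and the manager asks for the recipient. A follow-up of just "to Alice" carries no intent keyword, yet the remembered state lets the manager complete the transfer request. That carry-over is the role the slots play in keeping conversational context.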

The Haystack IRQA pipeline searches a given set of documents and generates a long-form response to the user question. This is helpful for companies with massive amounts of unstructured data that needs to be presented in a form that is useful to the user.
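The retrieval half of that idea can be illustrated with a small keyword-overlap ranker. This is a deliberately simplified sketch and an assumption on our part, not the Haystack API or the method the workflow uses: the sample documents are made up, and the answer-generation step is omitted, so the function only returns the best-matching passages.

```python
import re
from collections import Counter

# Made-up sample documents standing in for a company knowledge base.
DOCUMENTS = [
    "A savings account earns interest on the money you deposit.",
    "A wire transfer moves funds electronically between banks.",
    "A credit score summarizes how reliably you repay borrowed money.",
]

# Common words that carry little meaning for matching.
STOPWORDS = {"a", "an", "the", "is", "on", "of", "you", "how", "what", "does", "do", "i"}


def tokenize(text):
    """Lowercase the text and keep alphabetic tokens that are not stopwords."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def score(question, document):
    """Count how many question tokens also occur in the document."""
    q = Counter(tokenize(question))
    d = Counter(tokenize(document))
    return sum(min(count, d[token]) for token, count in q.items())


def retrieve(question, documents, top_k=1):
    """Return the top_k documents ranked by token overlap with the question."""
    ranked = sorted(documents, key=lambda doc: score(question, doc), reverse=True)
    return ranked[:top_k]
```

For the question "What is a wire transfer?", `retrieve` ranks the wire transfer passage first because it shares the tokens `wire` and `transfer` with the question. A production IRQA pipeline typically replaces this overlap score with a stronger retriever and then passes the retrieved passages to a model that composes the final answer.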

Let’s start with a walk-through of the end-to-end workflow deployment using the example software stack components previously described. You will access each lab part using the links in the left-hand navigation pane.

First, we will run through a sample training pipeline to fine-tune the models used by Riva for ASR services. The intelligent virtual assistant solution adds Riva customization for a domain-specific use case, in this lab the financial services industry (FSI).