Linking DGXs, Switches, ConnectX-7 Adapters, and BlueField-3 DPUs Overview

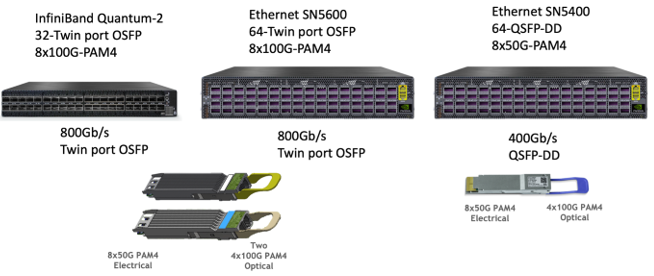

The Quantum-2 NDR InfiniBand and Spectrum-4 SN5600 Ethernet lines of air-cooled, 400Gb/s OSFP network switches are based on 100G-PAM4 signal modulation. Although each port is rated at 400Gb/s, these switches use connector cages that house two 400Gb/s ports in a single cage. This cage type is called 2x400G twin-port OSFP and is used exclusively in these air-cooled switches.

SN5400 and SN4000 series Ethernet switches, based on 50G-PAM4 modulation, use QSFP-DD connectors and accept QSFP56 and QSFP28 connectors.

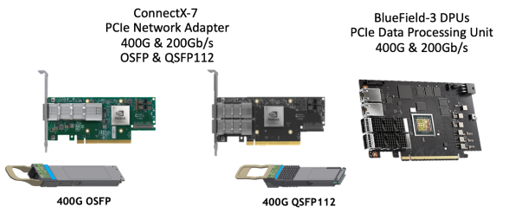

ConnectX network adapters and BlueField data processing units (DPUs) use only flat-top connector shells in the single-port OSFP, QSFP112, and QSFP56 form factors. Because flat tops are assumed, they are not denoted in part number descriptions. QSFP-DD is used in Ethernet switches only and not in ConnectX-7 or BlueField-3.

The twin-port OSFP cages double the number of 400Gb/s ports per switch. The 1RU, 32-cage Quantum-2 switch and the 2RU Spectrum-4 switches support 64x 400Gb/s ports, and the 64-cage Spectrum-4 switch supports 128x 400Gb/s ports. This density lets a single switch build a very large network. For example, a single SN5600 Ethernet switch with 64 cages (128 ports) has the same networking capability as six to seven SN4000 switches.
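The port counts above follow directly from two 400Gb/s ports per twin-port cage. The short Python sketch below reproduces that arithmetic; the function names are illustrative, and the aggregate figure is a nominal one-direction sum of port speeds, not a measured throughput.

```python
# Twin-port OSFP: each cage houses two 400Gb/s ports (from the text above).
PORTS_PER_TWIN_PORT_CAGE = 2
PORT_SPEED_GBPS = 400


def switch_ports(cages: int) -> int:
    """Number of 400Gb/s ports exposed by a switch with this many cages."""
    return cages * PORTS_PER_TWIN_PORT_CAGE


def aggregate_gbps(cages: int) -> int:
    """Nominal one-direction sum of all port speeds, in Gb/s."""
    return switch_ports(cages) * PORT_SPEED_GBPS


if __name__ == "__main__":
    for cages in (32, 64):
        print(f"{cages} cages: {switch_ports(cages)} ports, "
              f"{aggregate_gbps(cages)} Gb/s nominal aggregate")
```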

Quantum-2 NDR InfiniBand and Spectrum-4 Ethernet Switch Cages and Twin-port, Finned-top OSFP Plugs

The ConnectX-7 Ethernet Network Interface Cards (NICs) and InfiniBand Host Channel Adapters (HCAs) are PCIe bus-based network adapters. The 100G-PAM4-based ConnectX-7 supports both InfiniBand and Ethernet in the same card. These cards are inserted into the PCIe Gen5 bus slots of compute servers and storage subsystems, where the PCIe bus acts as the backbone of the subsystem chassis. PCIe-based adapter cards may have 8, 16, or 32 lanes running at 16 or 32 GT/s on the PCIe bus. The adapter ICs translate the large number of slower PCIe lanes and their modulation type into 4 to 8 lanes at 100G-PAM4 and into the Ethernet or InfiniBand protocols. These signals are presented to the OSFP or QSFP112 connector cages into which the cables or transceiver plugs are inserted.

200G/400G ConnectX-7 are offered in QSFP112 and OSFP

200G/400G BlueField-3 are offered in QSFP112 only

Based on PCIe Gen5 at 32 GT/s (gigatransfers per second)
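To see why a 400Gb/s adapter calls for a PCIe Gen5 slot, it helps to compare the nominal bus bandwidth with the network-side lane rate. The sketch below is a rough estimate under stated assumptions: it applies only the 128b/130b line encoding used by PCIe Gen3 and later, ignores packet and protocol overhead, and treats each 100G-PAM4 lane as a nominal 100 Gb/s. The function and constant names are illustrative.

```python
# Per-lane PCIe transfer rates in GT/s (Gen4 = 16, Gen5 = 32).
PCIE_GT_PER_S = {"Gen4": 16, "Gen5": 32}

# 128b/130b line encoding: 128 payload bits carried in every 130 bits.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(gen: str, lanes: int) -> float:
    """Nominal one-direction PCIe bandwidth in Gb/s, before protocol overhead."""
    return PCIE_GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY


def network_gbps(lanes: int, lane_rate_gbps: int = 100) -> int:
    """Nominal network-side rate for a port built from 100G-PAM4 lanes."""
    return lanes * lane_rate_gbps


if __name__ == "__main__":
    port = network_gbps(4)  # one 400Gb/s port = 4 lanes at 100G-PAM4
    for gen in ("Gen4", "Gen5"):
        bus = pcie_gbps(gen, 16)
        verdict = "can" if bus >= port else "cannot"
        print(f"PCIe {gen} x16: {bus:.1f} Gb/s, {verdict} feed a {port} Gb/s port")
```

Under these assumptions, a Gen5 x16 slot comfortably exceeds 400 Gb/s while a Gen4 x16 slot does not.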

Some ConnectX-6 and BlueField-2 adapters are protocol-specific: they have different part numbers for InfiniBand and Ethernet and may require cables and transceivers with protocol-specific part numbers.

This creates a wide array of product SKUs, with twin-port, finned-top OSFP and QSFP-DD devices for the switch side and OSFP, QSFP112, QSFP56, or QSFP-DD cables and transceivers for everything else.

ConnectX-7 and BlueField-3 Adapter Cages and Single-port, Flat-top OSFP and QSFP112 Plugs

QSFP cages in switches and adapters are backwards compatible: older-generation QSFP devices can be inserted into newer-generation cages. For example:

4-channel QSFP112 (4x100G-PAM4) cages can support QSFP56 (4x50G-PAM4) and QSFP28 (4x25G-NRZ) devices.

The QSFP-DD (8x50G-PAM4) cage provides 8 channels by using two rows of electrical pins in the cage, and supports QSFP112, QSFP56, and QSFP28 devices.

Modulation speeds are negotiated by the switches and adapters. Many, but not all, transceivers can downshift to slower modulation speeds.

Twin-port OSFP and single-port OSFP cages are not compatible with the QSFP line of devices.

No QSFP-to-OSFP adapter (QSA) is offered.
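The compatibility rules above can be captured as a small lookup table. The sketch below is illustrative only: the `CAGE_ACCEPTS` table and `plug_fits` helper are hypothetical names, and the entries showing each OSFP cage accepting only its own plug type are an assumption drawn from the statement that OSFP cages are not compatible with the QSFP line. It checks mechanical fit only and says nothing about whether a given transceiver can downshift its modulation speed.

```python
# Plug form factors each cage type accepts, per the rules listed above.
CAGE_ACCEPTS = {
    "QSFP112": {"QSFP112", "QSFP56", "QSFP28"},
    "QSFP-DD": {"QSFP-DD", "QSFP112", "QSFP56", "QSFP28"},
    # Assumption: OSFP cages accept only their own plug type.
    "OSFP": {"OSFP"},
    "twin-port OSFP": {"twin-port OSFP"},
}


def plug_fits(cage: str, plug: str) -> bool:
    """Return True if the plug form factor is listed for the cage type."""
    return plug in CAGE_ACCEPTS.get(cage, set())


if __name__ == "__main__":
    for cage, plug in [("QSFP112", "QSFP28"),
                       ("QSFP-DD", "QSFP56"),
                       ("OSFP", "QSFP112")]:
        result = "fits" if plug_fits(cage, plug) else "does not fit"
        print(f"{plug} plug in {cage} cage: {result}")
```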

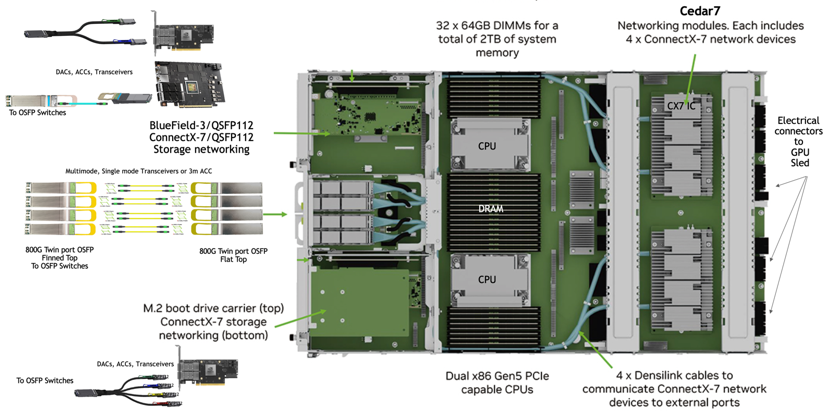

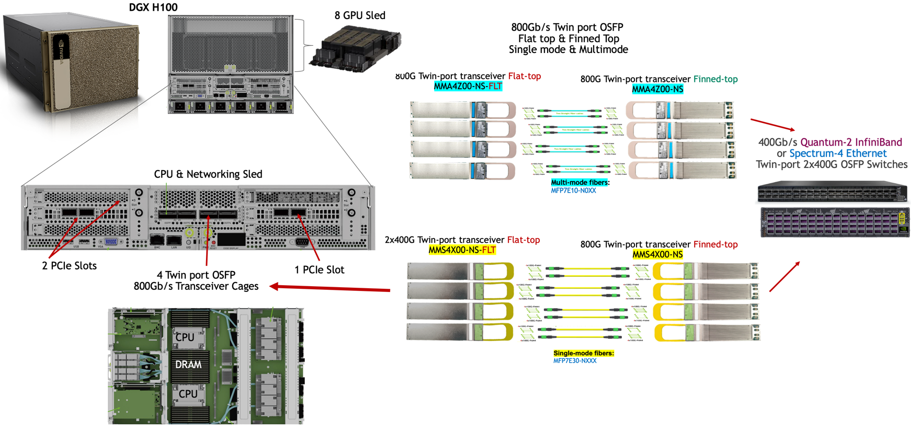

The DGX H100 system has two networking areas: one for GPUs and another for general networking.

Eight ConnectX-7 ICs, mounted on two Cedar7 mezzanine cards, are linked to switches.

Four PCIe Gen5 bus connectors accept ConnectX-7 and BlueField-3 adapters, which use standard LinkX cables and transceivers in OSFP, QSFP112, or QSFP56 form factors.

The PCIe bus cards mounted in the DGX H100 chassis are network adapters and DPUs and must link to Ethernet or InfiniBand switches.

Cedar7 ConnectX-7 Mezzanine Cards

The DGX H100 is essentially a CPU and GPU server combined with a ConnectX adapter and BlueField-3 DPU complex that must be connected to a network switch. It has eight ConnectX-7 ICs mounted on two Cedar7 mezzanine cards located in the back of the CPU server chassis. One side links directly to the eight-GPU cluster located on the GPU sled above, and the other side uses an internal DAC cable to link to four 800G cages on the front of the server chassis. These cages have internal, air-cooled, riding heat sinks, so they use twin-port, flat-top, 800G OSFP transceivers.

Each 800G cage serves two ConnectX-7 ICs on the internal Cedar7 cards at 400G each. The four twin-port, flat-top OSFP cages support 800G single-mode or multimode transceivers and are usually linked to air-cooled switches that use twin-port, finned-top OSFP transceivers. This approach eliminates redundant PCBs, cards, brackets, cages, and so on, and reduces the overall size.
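As a quick check of the figures above, the sketch below tallies the Cedar7 side of the DGX H100: four cages, two ConnectX-7 ICs per cage, 400G per IC. The function name and dictionary keys are illustrative, and the totals are nominal one-direction rates.

```python
# Figures taken from the description above.
CAGES = 4              # twin-port, flat-top OSFP cages on the chassis front
ICS_PER_CAGE = 2       # ConnectX-7 ICs served by each cage
IC_SPEED_GBPS = 400    # nominal rate per ConnectX-7 IC


def cedar7_summary() -> dict:
    """Nominal IC count and bandwidth for the GPU-side network."""
    per_cage = ICS_PER_CAGE * IC_SPEED_GBPS
    return {
        "connectx7_ics": CAGES * ICS_PER_CAGE,
        "gbps_per_cage": per_cage,
        "gbps_total": CAGES * per_cage,
    }


if __name__ == "__main__":
    print(cedar7_summary())
```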

DACs and ACCs are generally not used because the internal chassis cabling between the ConnectX-7 ICs on the Cedar7 cards at the back of the chassis and the four OSFP cages on the chassis front introduces significant signal loss, which limits external copper cables to very short lengths. Only one 3-meter ACC is offered.

PCIe Cards

The DGX H100 also has four PCIe Gen5 connectors for ConnectX-7 and BlueField-3 adapters, which use standard LinkX DAC or ACC cables, or transceivers with multimode or single-mode optics. The cards mounted in the DGX H100 chassis are network adapters and DPUs and must link to twin-port OSFP-based Ethernet or InfiniBand switches. In any setup, these cards receive the split ends of the cables coming from the switches.

DGX H100 GPU-Cedar7 Flat-top OSFP Networking

CPU Sled Top-View Twin-port OSFP Cages on Left; Cedar7 Mezzanine Cards on Right