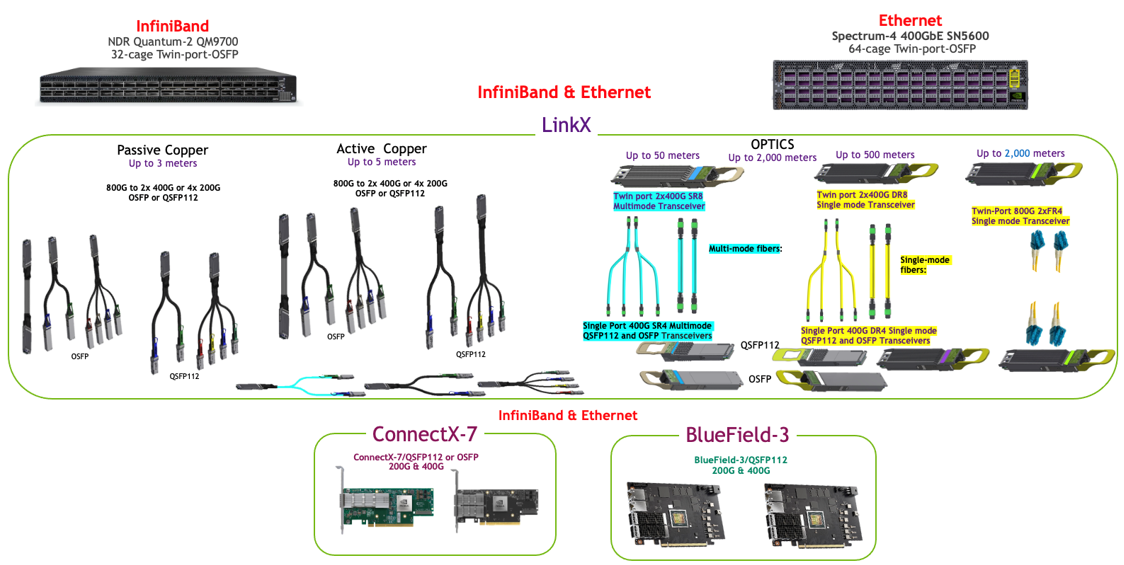

NVIDIA Quantum, Spectrum, and LinkX Product Lines

The NVIDIA LinkX line of cables and transceivers offers a wide array of products for configuring any network switching and adapter system. This product line is specifically optimized for 100G-PAM4 line rates.

The NVIDIA networking product line includes:

Quantum InfiniBand switches

Spectrum Ethernet switches

ConnectX-7 PCIe-based network adapters

BlueField-3 PCIe-based Data Processing Units (DPUs)

These are all interconnected using LinkX cable and transceiver products, including:

Direct attached copper (DAC) cables

Active copper cables (ACC)

Multimode transceivers and active optical cables (AOCs)

Single mode transceivers

Crossover optical fibers

Together, these products provide a wide array of interconnect options for building any computing and networking configuration, from traditional, simple fat-tree, leaf-spine networks to complex HPC-style 4D-torus supercomputer configurations.

NVIDIA’s 100G-PAM4-based 400Gb/s networking product line

NVIDIA’s 100G-PAM4 LinkX cable and transceiver product line focuses exclusively on accelerated and artificial intelligence computing systems, which call for:

Data center-oriented reaches of up to 2 kilometers, but generally under 50 meters

High data rates: 800Gb/s to the switch, and 400Gb/s to the network adapter and DPU

Low latency when transferring huge amounts of data at 100G-PAM4 line rates

Crossover fibers to directly attach two transceivers together
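
The headline data rates above are multiples of the 100G-PAM4 lane rate. The sketch below shows that arithmetic. The lane counts (eight for a twin-port 800Gb/s switch cage, four for a 400Gb/s adapter or DPU port) are assumptions that are not stated in this text, and the function name is hypothetical.

```python
# Illustrative arithmetic only. The lane counts used below are assumptions,
# not figures taken from this document.
LANE_RATE_GBPS = 100  # one 100G-PAM4 lane


def aggregate_rate_gbps(lanes: int, lane_rate_gbps: int = LANE_RATE_GBPS) -> int:
    """Return the aggregate data rate of a port built from identical lanes."""
    if lanes <= 0:
        raise ValueError("lanes must be a positive integer")
    return lanes * lane_rate_gbps


if __name__ == "__main__":
    switch_cage = aggregate_rate_gbps(8)   # assumed 8 lanes (2 x 400Gb/s twin-port)
    adapter_port = aggregate_rate_gbps(4)  # assumed 4 lanes
    print(f"switch cage: {switch_cage}Gb/s, adapter port: {adapter_port}Gb/s")
```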

To minimize data retransmissions, the cables and transceivers are designed and tested to the extremely low bit error ratios (BER) required for low-latency, high-bandwidth applications. They are designed to work seamlessly with NVIDIA’s Quantum InfiniBand and Spectrum Ethernet network switches, ConnectX PCIe network adapters, and BlueField PCIe DPUs. While traditional IEEE Ethernet cable and transceiver offerings may include hundreds of different parts optimized for specific use cases, for accelerated AI computing NVIDIA focuses on only a few parts that are optimized for exceptionally high data rates and low latency.
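
To see why BER matters at these speeds, it helps to convert a bit error ratio into an expected error rate on a link. The sketch below does that conversion. The BER values in it are purely illustrative placeholders, not NVIDIA specifications, and the function names are hypothetical.

```python
def expected_bit_errors_per_second(ber: float, rate_gbps: float) -> float:
    """Expected number of bit errors per second on a link running at rate_gbps."""
    if ber < 0 or rate_gbps <= 0:
        raise ValueError("ber must be non-negative and rate_gbps positive")
    return ber * rate_gbps * 1e9


def mean_seconds_between_errors(ber: float, rate_gbps: float) -> float:
    """Average time between bit errors; infinite when ber is zero."""
    errors_per_second = expected_bit_errors_per_second(ber, rate_gbps)
    return float("inf") if errors_per_second == 0 else 1.0 / errors_per_second


if __name__ == "__main__":
    # Hypothetical BER values chosen only to show the scaling.
    for ber in (1e-12, 1e-15):
        gap = mean_seconds_between_errors(ber, rate_gbps=400)
        print(f"BER {ber:g} at 400Gb/s: one bit error every {gap:g} s on average")
```

At 400Gb/s, each factor of ten in BER changes the average interval between bit errors by the same factor. This is why small differences in BER can translate into large differences in retransmission counts.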

For InfiniBand cables and transceivers, specific lengths, or reaches, are chosen in coordination with the switch buffer latency requirements and the latency of the transceivers and optical fiber. This is done to minimize latency in very high-speed systems, a critical factor for accelerated AI systems. Most AI and HPC systems occupy less floor area than two tennis courts, and distances between components are kept short to minimize latency.
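
The link between reach and latency can be estimated from the propagation delay of light in fiber. The sketch below is a rough calculation, assuming a group index of about 1.468 for silica fiber (a typical textbook value, not a LinkX specification). It covers only propagation through the fiber, not transceiver or switch buffer delays, and the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum
ASSUMED_GROUP_INDEX = 1.468           # assumption: typical silica fiber


def fiber_delay_ns(length_m: float, group_index: float = ASSUMED_GROUP_INDEX) -> float:
    """One-way propagation delay through a fiber of the given length, in nanoseconds."""
    if length_m < 0:
        raise ValueError("length_m must be non-negative")
    return length_m * group_index / SPEED_OF_LIGHT_M_PER_S * 1e9


if __name__ == "__main__":
    # 50 m and 2 km are the reaches mentioned in the text.
    for reach_m in (50, 2000):
        print(f"{reach_m} m of fiber: about {fiber_delay_ns(reach_m):.0f} ns one way")
```

Under this assumption, fiber adds roughly 5 ns per meter, so a 2-kilometer link carries about forty times the propagation delay of a 50-meter link.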

NVIDIA designs and builds complete subsystems based on the above switches, adapters, and interconnects. All of these subsystems, including the major integrated circuits, are designed, manufactured, and/or sourced by a single supplier, NVIDIA, and are tested to work optimally in complete end-to-end NVIDIA configurations, specifically for artificial intelligence and accelerated applications.

Network switches come in protocol-specific models for either InfiniBand or Ethernet. However, the LinkX cables and transceivers, ConnectX-7 network adapters, and BlueField-3 DPUs all contain both InfiniBand and Ethernet firmware and, when connected to a protocol-specific switch, automatically adopt and support that switch's protocol. This holds true for the 100G-PAM4-based product lines; at slower line rates, some parts are dual-protocol and others are protocol-specific. See the NVIDIA LinkX Cables and Transceivers Key Technologies document for more information.

For 100GbE, EDR, 200GbE, and HDR networking, the same QSFP28 or QSFP56 connector is used on both the switches and adapters. For 100G-PAM4 systems, the connector used in the switches differs from those used in adapters and DPUs. This important difference underlies the product lineup: it creates a wide variety of LinkX parts and specific configuration rules.

Switches use only 800G twin-port OSFP finned top cables and transceivers.

DGX H100 systems use a flat top version of the above.

ConnectX-7 uses 400G flat top OSFP or QSFP112/56/28 cables and transceivers.

BlueField-3 uses only QSFP112/56/28 cables and transceivers; no OSFP devices.

QSFP112-based ConnectX-7 adapters and BlueField-3 DPUs also accept slower legacy QSFP56 and QSFP28 devices. QSFP112 devices cannot be used in OSFP switches.
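
The configuration rules above can be captured in a small lookup table, which may be useful when checking a bill of materials. The sketch below is one possible encoding. The host names and plug labels are hypothetical strings invented for illustration, not NVIDIA part numbers, and the table lists every form factor named for ConnectX-7 even though an individual adapter model has only one cage type.

```python
# Hypothetical labels for illustration; these are not NVIDIA part numbers.
ACCEPTED_PLUGS = {
    "switch": {"OSFP-800G-twin-port-finned-top"},
    "dgx_h100": {"OSFP-800G-twin-port-flat-top"},
    # ConnectX-7 models have either an OSFP or a QSFP112 cage; both are listed here.
    "connectx7": {"OSFP-400G-flat-top", "QSFP112", "QSFP56", "QSFP28"},
    "bluefield3": {"QSFP112", "QSFP56", "QSFP28"},  # no OSFP devices
}


def accepts(host: str, plug: str) -> bool:
    """Return True if the host type accepts the given cable or transceiver plug."""
    try:
        return plug in ACCEPTED_PLUGS[host]
    except KeyError:
        raise ValueError(f"unknown host type: {host!r}") from None


if __name__ == "__main__":
    checks = [
        ("switch", "QSFP112"),
        ("connectx7", "QSFP56"),
        ("bluefield3", "OSFP-400G-flat-top"),
    ]
    for host, plug in checks:
        verdict = "accepted" if accepts(host, plug) else "not accepted"
        print(f"{plug} in {host}: {verdict}")
```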