Introduction

This is the user guide for InfiniBand/Ethernet adapter cards based on the ConnectX-6 integrated circuit device. ConnectX-6 provides the highest-performing, low-latency, and most flexible interconnect solution for PCI Express Gen 3.0/4.0 servers used in enterprise datacenters and high-performance computing environments.

ConnectX-6 Virtual Protocol Interconnect® adapter cards provide up to two ports of 200Gb/s for InfiniBand and Ethernet connectivity, sub-600ns latency and 200 million messages per second, enabling the highest performance and most flexible solution for the most demanding High-Performance Computing (HPC), storage, and datacenter applications.

ConnectX-6 is a groundbreaking addition to the NVIDIA ConnectX series of industry-leading adapter cards. Building on the innovative features of previous ConnectX generations, ConnectX-6 offers a number of enhancements that further improve the performance and scalability of datacenter applications. In addition, specific PCIe stand-up cards are available with a cold plate for insertion into liquid-cooled Intel® Server System D50TNP platforms.

ConnectX-6 is available in two form factors: low-profile stand-up PCIe cards and Open Compute Project (OCP) Spec 3.0 cards with QSFP connectors. Single-port HDR stand-up PCIe adapters are available based on either ConnectX-6 or ConnectX-6 DE (ConnectX-6 Dx enhanced for HPC applications).

Make sure to use a PCIe slot that is capable of supplying the required power and airflow to the ConnectX-6 as stated in Specifications.

Configuration | OPN | Marketing Description |

ConnectX-6 PCIe x8 Card | MCX651105A-EDAT | ConnectX-6 InfiniBand/Ethernet adapter card, 100Gb/s (HDR100, EDR IB and 100GbE), single-port QSFP56, PCIe4.0 x8, tall bracket |

ConnectX-6 PCIe x16 Card | MCX653105A-HDAT | ConnectX-6 InfiniBand/Ethernet adapter card, HDR IB (200Gb/s) and 200GbE, single-port QSFP56, PCIe4.0 x16, tall bracket |

ConnectX-6 PCIe x16 Card | MCX653106A-HDAT | ConnectX-6 InfiniBand/Ethernet adapter card, HDR IB (200Gb/s) and 200GbE, dual-port QSFP56, PCIe3.0/4.0 x16, tall bracket |

ConnectX-6 PCIe x16 Card | MCX653105A-ECAT | ConnectX-6 InfiniBand/Ethernet adapter card, 100Gb/s (HDR100, EDR IB and 100GbE), single-port QSFP56, PCIe3.0/4.0 x16, tall bracket |

ConnectX-6 PCIe x16 Card | MCX653106A-ECAT | ConnectX-6 InfiniBand/Ethernet adapter card, 100Gb/s (HDR100, EDR IB and 100GbE), dual-port QSFP56, PCIe3.0/4.0 x16, tall bracket |

ConnectX-6 DE PCIe x16 Card | MCX683105AN-HDAT | ConnectX-6 DE InfiniBand adapter card, HDR, single-port QSFP, PCIe 3.0/4.0 x16, No Crypto, Tall Bracket |

ConnectX-6 PCIe x16 Cards for liquid-cooled Intel® Server System D50TNP platforms | MCX653105A-HDAL | ConnectX-6 InfiniBand/Ethernet adapter card, HDR IB (200Gb/s) and 200GbE, single-port QSFP56, PCIe4.0 x16, cold plate for liquid-cooled Intel® Server System D50TNP platforms, tall bracket, ROHS R6 |

ConnectX-6 PCIe x16 Cards for liquid-cooled Intel® Server System D50TNP platforms | MCX653106A-HDAL | ConnectX-6 InfiniBand/Ethernet adapter card, HDR IB (200Gb/s) and 200GbE, dual-port QSFP56, PCIe4.0 x16, cold plate for liquid-cooled Intel® Server System D50TNP platforms, tall bracket, ROHS R6 |

ConnectX-6 Dual-slot Socket Direct Cards (2x PCIe x16) | MCX654105A-HCAT | ConnectX-6 InfiniBand/Ethernet adapter card kit, HDR IB (200Gb/s) and 200GbE, single-port QSFP56, Socket Direct 2x PCIe3.0 x16, tall brackets |

ConnectX-6 Dual-slot Socket Direct Cards (2x PCIe x16) | MCX654106A-HCAT | ConnectX-6 InfiniBand/Ethernet adapter card, HDR IB (200Gb/s) and 200GbE, dual-port QSFP56, Socket Direct 2x PCIe3.0/4.0 x16, tall bracket |

ConnectX-6 Dual-slot Socket Direct Cards (2x PCIe x16) | MCX654106A-ECAT | ConnectX-6 InfiniBand/Ethernet adapter card, 100Gb/s (HDR100, EDR InfiniBand and 100GbE), dual-port QSFP56, Socket Direct 2x PCIe3.0/4.0 x16, tall bracket |

ConnectX-6 Single-slot Socket Direct Cards (2x PCIe x8 in a row) | MCX653105A-EFAT | ConnectX-6 InfiniBand/Ethernet adapter card, 100Gb/s (HDR100, EDR IB and 100GbE), single-port QSFP56, PCIe3.0/4.0 Socket Direct 2x8 in a row, tall bracket |

ConnectX-6 Single-slot Socket Direct Cards (2x PCIe x8 in a row) | MCX653106A-EFAT | ConnectX-6 InfiniBand/Ethernet adapter card, 100Gb/s (HDR100, EDR IB and 100GbE), dual-port QSFP56, PCIe3.0/4.0 Socket Direct 2x8 in a row, tall bracket |

ConnectX-6 PCIe x8 Card

The ConnectX-6 PCIe x8 card supports a bandwidth of up to 100Gb/s when installed in a PCIe Gen 4.0 slot.

Part Number | MCX651105A-EDAT | |

Form Factor/Dimensions | PCIe Half Height, Half Length / 167.65mm x 68.90mm | |

Data Transmission Rate | Ethernet: 10/25/40/50/100 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100 | |

Network Connector Type | Single-port QSFP56 | |

PCIe x8 through Edge Connector | PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s | |

RoHS | RoHS Compliant | |

Adapter IC Part Number | MT28908A0-XCCF-HVM | |

ConnectX-6 PCIe x16 Card

The ConnectX-6 PCIe x16 cards support a bandwidth of up to 100Gb/s in a PCIe Gen 3.0 slot, or up to 200Gb/s in a PCIe Gen 4.0 slot. This form factor is also available for Intel® Server System D50TNP platforms, where an Intel liquid-cooled cold plate serves as the adapter's cooling mechanism.
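The x8 and x16 bandwidth statements can be sanity-checked with simple link arithmetic. The sketch below is a back-of-the-envelope check under stated assumptions, not an NVIDIA tool: it assumes the 128b/130b line encoding used by PCIe Gen 3.0 and Gen 4.0, ignores transaction- and data-link-layer protocol overhead (so real throughput is lower), and the helper names `pcie_raw_gbps` and `fits` are hypothetical.

```python
# Back-of-the-envelope sketch: raw per-direction PCIe bandwidth versus a
# network port rate. Assumes 128b/130b encoding (PCIe Gen 3.0 and Gen 4.0)
# and ignores TLP/DLLP protocol overhead, so usable throughput is lower.

TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}  # per-lane transfer rate in GT/s


def pcie_raw_gbps(gen: int, lanes: int) -> float:
    """Raw per-direction bandwidth in Gb/s after 128b/130b encoding."""
    return TRANSFER_RATE_GT_S[gen] * lanes * 128 / 130


def fits(port_gbps: float, gen: int, lanes: int) -> bool:
    """True if the raw link bandwidth is at least the port rate."""
    return pcie_raw_gbps(gen, lanes) >= port_gbps


if __name__ == "__main__":
    cases = [
        ("x8 card, Gen 4.0 slot", 4, 8, 100),
        ("x8 card, Gen 3.0 slot", 3, 8, 100),
        ("x16 card, Gen 3.0 slot", 3, 16, 100),
        ("x16 card, Gen 3.0 slot", 3, 16, 200),
        ("x16 card, Gen 4.0 slot", 4, 16, 200),
    ]
    for label, gen, lanes, port in cases:
        bw = pcie_raw_gbps(gen, lanes)
        print(f"{label}: {bw:6.1f} Gb/s raw; {port}Gb/s port fits: {fits(port, gen, lanes)}")
```

A Gen 4.0 x8 link and a Gen 3.0 x16 link both come out at roughly 126Gb/s raw, enough for a 100Gb/s port, while 200Gb/s needs the roughly 252Gb/s of a Gen 4.0 x16 link.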

Part Number | MCX653105A-ECAT | MCX653106A-ECAT | MCX653105A-HDAT | MCX653106A-HDAT | |

Form Factor/Dimensions | PCIe Half Height, Half Length / 167.65mm x 68.90mm | ||||

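Data Transmission Rate | Ethernet: 10/25/40/50/100 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100 | Ethernet: 10/25/40/50/100 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100 | Ethernet: 10/25/40/50/100/200 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100, HDR | Ethernet: 10/25/40/50/100/200 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100, HDR |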

Network Connector Type | Single-port QSFP56 | Dual-port QSFP56 | Single-port QSFP56 | Dual-port QSFP56 | |

PCIe x16 through Edge Connector | PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s | ||||

RoHS | RoHS Compliant | ||||

Adapter IC Part Number | MT28908A0-XCCF-HVM | ||||

ConnectX-6 DE PCIe x16 Card

The ConnectX-6 DE (ConnectX-6 Dx enhanced for HPC applications) PCIe x16 card supports a bandwidth of up to 100Gb/s in a PCIe Gen 3.0 slot, or up to 200Gb/s in a PCIe Gen 4.0 slot.

Part Number | MCX683105AN-HDAT |

Form Factor/Dimensions | PCIe Half Height, Half Length / 167.65mm x 68.90mm |

Data Transmission Rate | InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100, HDR |

Network Connector Type | Single-port QSFP56 |

PCIe x16 through Edge Connector | PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s |

RoHS | RoHS Compliant |

Adapter IC Part Number | MT28924A0-NCCF-VE |

ConnectX-6 for Liquid-Cooled Intel® Server System D50TNP Platforms

The following cards are available with a cold plate for insertion into liquid-cooled Intel® Server System D50TNP platforms.

Part Number | MCX653105A-HDAL | MCX653106A-HDAL | |

Form Factor/Dimensions | PCIe Half Height, Half Length / 167.65mm x 68.90mm | ||

Data Transmission Rate | Ethernet: 10/25/40/50/100/200 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100, HDR | ||

Network Connector Type | Single-port QSFP56 | Dual-port QSFP56 | |

PCIe x16 through Edge Connector | PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s | ||

RoHS | RoHS Compliant | ||

Adapter IC Part Number | MT28908A0-XCCF-HVM | ||

ConnectX-6 Socket Direct™ Cards

Socket Direct technology improves performance in dual-socket servers by giving each CPU direct access to the network through its own dedicated PCIe interface.

Note that ConnectX-6 Socket Direct cards do not support Multi-Host functionality (i.e., connectivity to two independent CPUs). For a ConnectX-6 Socket Direct card with Multi-Host functionality, contact NVIDIA.

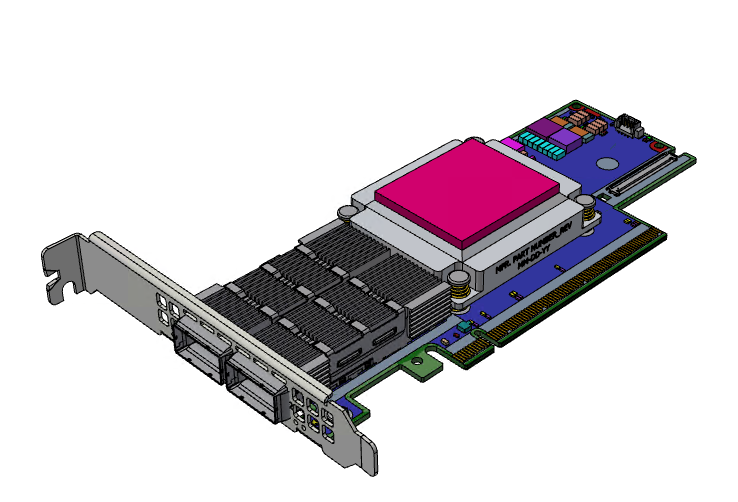

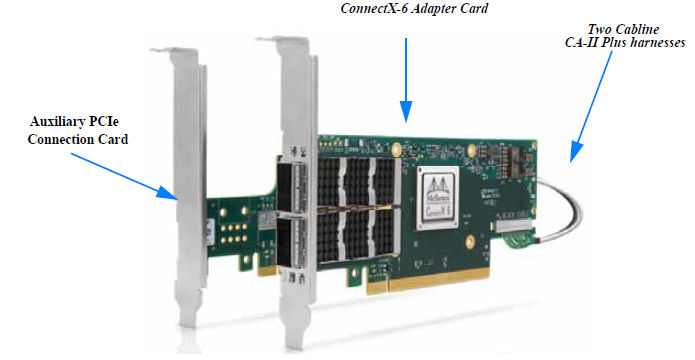

ConnectX-6 Socket Direct cards are available in two configurations: Dual-slot Configuration (2x PCIe x16) and Single-slot Configuration (2x PCIe x8).

ConnectX-6 Dual-slot Socket Direct Cards (2x PCIe x16)

To provide 200Gb/s connectivity to servers that support only PCIe Gen 3.0, NVIDIA offers ConnectX-6 Socket Direct cards. The adapter's 32-lane PCIe bus is split into two 16-lane buses: one is accessible through a PCIe x16 edge connector and the other through an x16 Auxiliary PCIe Connection card. The two cards should be installed into two PCIe x16 slots and connected using two Cabline CA-II Plus harnesses, as shown in the figure.
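Why two x16 links are needed in a Gen 3.0 server comes down to aggregate bandwidth. A minimal sketch, assuming 128b/130b encoding at 8.0GT/s per lane and ignoring protocol overhead; `gen3_raw_gbps` and `socket_direct_aggregate_gbps` are hypothetical helper names, and the sketch models only the Gen 3.0 case (the edge connector itself can also run at Gen 4.0).

```python
# Sketch: raw per-direction PCIe Gen 3.0 bandwidth when the adapter's
# 32-lane bus is split into two x16 links. Assumes 128b/130b encoding and
# ignores TLP/DLLP protocol overhead.
GEN3_TRANSFER_RATE_GT_S = 8.0  # per-lane transfer rate in GT/s


def gen3_raw_gbps(lanes: int) -> float:
    """Raw Gen 3.0 bandwidth in Gb/s for a link of `lanes` lanes."""
    return GEN3_TRANSFER_RATE_GT_S * lanes * 128 / 130


def socket_direct_aggregate_gbps(links: int = 2, lanes_per_link: int = 16) -> float:
    """Aggregate raw bandwidth across `links` independent Gen 3.0 links."""
    return links * gen3_raw_gbps(lanes_per_link)


if __name__ == "__main__":
    print(f"One Gen 3.0 x16 link:  {gen3_raw_gbps(16):.1f} Gb/s")
    print(f"Two Gen 3.0 x16 links: {socket_direct_aggregate_gbps():.1f} Gb/s")
```

A single Gen 3.0 x16 link falls short of 200Gb/s, while the pair of links clears it, which is the role of the auxiliary card and harnesses.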

Part Number | MCX654105A-HCAT | MCX654106A-HCAT | MCX654106A-ECAT | |

Form Factor/Dimensions | Adapter Card: PCIe Half Height, Half Length / 167.65mm x 68.90mm; Auxiliary PCIe Connection Card: 5.09 in. x 2.32 in. (129.30mm x 59.00mm); Harnesses: two 35cm Cabline CA-II Plus harnesses | |||

Data Transmission Rate | Ethernet: 10/25/40/50/100/200 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100, HDR | Ethernet: 10/25/40/50/100/200 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100, HDR | Ethernet: 10/25/40/50/100 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100 |

Network Connector Type | Single-port QSFP56 | Dual-port QSFP56 | Dual-port QSFP56 |

PCIe x16 through Edge Connector | PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s | |||

PCIe x16 through Auxiliary Card | PCIe Gen 3.0 SERDES @ 8.0GT/s | |||

RoHS | RoHS Compliant | |||

Adapter IC Part Number | MT28908A0-XCCF-HVM | |||

ConnectX-6 Single-slot Socket Direct Cards (2x PCIe x8 in a row)

The PCIe x16 interface is split into two PCIe x8 interfaces in a row, so that each x8 interface can be connected to a dedicated CPU in a dual-socket server. In this configuration, Socket Direct lowers latency and CPU utilization: because each CPU connects directly to the network, the interconnect can bypass the QPI (UPI) link and the other CPU, optimizing performance and improving latency. CPU utilization improves because each CPU handles only its own traffic, not traffic from the other CPU.
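The latency and CPU-utilization argument comes down to which traffic must cross the inter-socket link. The toy model below is illustrative only: the `Flow` type, the `cross_socket_gbps` function, and the even 50/50 traffic split are hypothetical, and it does not model latency itself, only how much traffic is routed over the QPI (UPI) path.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass
class Flow:
    """Hypothetical traffic flow owned by one CPU socket."""
    cpu: int      # socket (0 or 1) whose cores/memory source or sink the data
    gbps: float   # offered load in Gb/s


def cross_socket_gbps(flows: Iterable[Flow], attached_sockets: Set[int]) -> float:
    """Sum the traffic that must traverse the inter-socket link (QPI/UPI).

    A flow stays local when its socket has its own PCIe attachment to the
    adapter; otherwise it is routed through the other CPU.
    """
    return sum((f.gbps for f in flows if f.cpu not in attached_sockets), 0.0)


if __name__ == "__main__":
    flows = [Flow(cpu=0, gbps=50.0), Flow(cpu=1, gbps=50.0)]
    # Conventional attachment: the whole x16 link hangs off socket 0.
    print("Single attachment:", cross_socket_gbps(flows, {0}), "Gb/s over UPI")
    # Single-slot Socket Direct: one x8 link to each socket.
    print("Socket Direct 2x8:", cross_socket_gbps(flows, {0, 1}), "Gb/s over UPI")
```

With a conventional attachment, all of socket 1's traffic crosses the inter-socket link; with one x8 link per socket, none of it does.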

Installing the card requires a system with a custom PCI Express x16 slot that provides special signals. For pinout definitions, refer to PCI Express Pinouts Description for Single-Slot Socket Direct Card.

Part Number | MCX653105A-EFAT | MCX653106A-EFAT | |

Form Factor/Dimensions | PCIe Half Height, Half Length / 167.65mm x 68.90mm | ||

Data Transmission Rate | Ethernet: 10/25/40/50/100 Gb/s; InfiniBand: SDR, DDR, QDR, FDR, EDR, HDR100 | ||

Network Connector Type | Single-port QSFP56 | Dual-port QSFP56 | |

PCIe x16 through Edge Connector | PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s; Socket Direct 2x8 in a row | ||

RoHS | RoHS Compliant | ||

Adapter IC Part Number | MT28908A0-XCCF-HVM | ||

ConnectX-6 PCIe x8/x16 Adapter Cards

Applies to MCX651105A-EDAT, MCX653105A-ECAT, MCX653106A-ECAT, MCX653105A-HDAT, MCX653106A-HDAT, MCX653105A-EFAT, MCX653106A-EFAT, and MCX683105AN-HDAT.

Category | Qty | Item |

Cards | 1 | ConnectX-6 adapter card |

Accessories | 1 | Adapter card short bracket |

Accessories | 1 | Adapter card tall bracket (shipped assembled on the card) |

ConnectX-6 PCIe x16 Adapter Card for liquid-cooled Intel® Server System D50TNP Platforms

Applies to MCX653105A-HDAL and MCX653106A-HDAL.

Category | Qty | Item |

Cards | 1 | ConnectX-6 adapter card |

Accessories | 1 | Adapter card short bracket |

Accessories | 1 | Adapter card tall bracket (shipped assembled on the card) |

Accessories | 1 | Accessory kit with two TIMs (MEB000386) |

ConnectX-6 Socket Direct Cards (2x PCIe x16)

Applies to MCX654105A-HCAT, MCX654106A-HCAT and MCX654106A-ECAT.

Category | Qty | Item |

Cards | 1 | ConnectX-6 adapter card |

Cards | 1 | PCIe Auxiliary Card |

Harnesses | 1 | 35cm Cabline CA-II Plus harness (white) |

Harnesses | 1 | 35cm Cabline CA-II Plus harness (black) |

Accessories | 2 | Retention Clip for Cabline harness (optional accessory) |

Accessories | 1 | Adapter card short bracket |

Accessories | 1 | Adapter card tall bracket (shipped assembled on the card) |

Accessories | 1 | PCIe Auxiliary card short bracket |

Accessories | 1 | PCIe Auxiliary card tall bracket (shipped assembled on the card) |

Make sure to use a PCIe slot that is capable of supplying the required power and airflow to the ConnectX-6 cards as stated in Specifications.

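PCI Express (PCIe) | Uses the following PCIe interfaces: PCIe x8/x16 configurations: PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s through an x8/x16 edge connector; 2x PCIe x16 (dual-slot Socket Direct) configurations: PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s through the edge connector and PCIe Gen 3.0 SERDES @ 8.0GT/s through the PCIe Auxiliary Connection Card; 2x PCIe x8 in a row (single-slot Socket Direct) configurations: PCIe Gen 3.0 / 4.0 SERDES @ 8.0GT/s / 16.0GT/s through the edge connector |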

200Gb/s InfiniBand/Ethernet Adapter | ConnectX-6 offers the highest-throughput InfiniBand/Ethernet adapter, supporting HDR 200Gb/s InfiniBand and 200Gb/s Ethernet and enabling any standard networking, clustering, or storage to operate seamlessly over any converged network leveraging a consolidated software stack. |

InfiniBand Architecture Specification v1.3 compliant | ConnectX-6 delivers low latency, high bandwidth, and computing efficiency for performance-driven server and storage clustering applications. ConnectX-6 is InfiniBand Architecture Specification v1.3 compliant. |

Up to 200 Gigabit Ethernet | NVIDIA adapters support 200GbE / 100GbE / 50GbE / 40GbE / 25GbE / 10GbE / 1GbE and comply with the following standards: IEEE 802.3bj, 802.3bm 100 Gigabit Ethernet; IEEE 802.3by, Ethernet Consortium 25, 50 Gigabit Ethernet, supporting all FEC modes; IEEE 802.3ba 40 Gigabit Ethernet; IEEE 802.3by 25 Gigabit Ethernet; IEEE 802.3ae 10 Gigabit Ethernet; IEEE 802.3ap based auto-negotiation and KR startup; IEEE 802.3ad, 802.1AX Link Aggregation; IEEE 802.1Q, 802.1P VLAN tags and priority; IEEE 802.1Qau (QCN) Congestion Notification; IEEE 802.1Qaz (ETS); IEEE 802.1Qbb (PFC); IEEE 802.1Qbg; IEEE 1588v2; jumbo frame support (9.6KB) |

InfiniBand HDR100 | A standard InfiniBand data rate, where each lane of a 2X port runs a bit rate of 53.125Gb/s with a 64b/66b encoding, resulting in an effective bandwidth of 100Gb/s. |

InfiniBand HDR | A standard InfiniBand data rate, where each lane of a 4X port runs a bit rate of 53.125Gb/s with a 64b/66b encoding, resulting in an effective bandwidth of 200Gb/s. |

Memory Components |

|

Overlay Networks | To better scale their networks, datacenter operators often create overlay networks that carry traffic from individual virtual machines over logical tunnels in encapsulated formats such as NVGRE and VXLAN. While this solves network scalability issues, it hides the TCP packet from the hardware offloading engines, placing higher loads on the host CPU. ConnectX-6 addresses this by providing advanced NVGRE and VXLAN hardware offloading engines that encapsulate and decapsulate the overlay protocol. |

RDMA and RDMA over Converged Ethernet (RoCE) | ConnectX-6, utilizing IBTA RDMA (Remote Direct Memory Access) and RoCE (RDMA over Converged Ethernet) technology, delivers low latency and high performance over InfiniBand and Ethernet networks. Leveraging datacenter bridging (DCB) capabilities as well as ConnectX-6 advanced congestion control hardware mechanisms, RoCE provides efficient low-latency RDMA services over Layer 2 and Layer 3 networks. |

NVIDIA PeerDirect™ | PeerDirect™ communication provides high efficiency RDMA access by eliminating unnecessary internal data copies between components on the PCIe bus (for example, from GPU to CPU), and therefore significantly reduces application run time. ConnectX-6 advanced acceleration technology enables higher cluster efficiency and scalability to tens of thousands of nodes. |

CPU Offload | Adapter functionality reduces CPU overhead, leaving more CPU resources available for computation tasks. This includes Open vSwitch (OVS) offload using ASAP2™, with flexible match-action flow tables and tunneling encapsulation/decapsulation. |

Quality of Service (QoS) | Support for port-based Quality of Service enabling various application requirements for latency and SLA. |

Hardware-based I/O Virtualization | ConnectX-6 provides dedicated adapter resources and guaranteed isolation and protection for virtual machines within the server. |

Storage Acceleration | A consolidated compute and storage network achieves significant cost-performance advantages over multi-fabric networks. Standard block and file access protocols can leverage:

|

SR-IOV | ConnectX-6 SR-IOV technology provides dedicated adapter resources and guaranteed isolation and protection for virtual machines (VM) within the server. |

High-Performance Accelerations |

|
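The HDR100 and HDR entries in the feature table above can be reproduced from the lane count, the per-lane bit rate, and the 64b/66b encoding ratio they quote. A minimal sketch; the constant and function names are hypothetical, and only the encoding ratio is modeled.

```python
# Sketch: InfiniBand port rate from lane count, per-lane bit rate, and the
# 64b/66b encoding ratio quoted for HDR100 (2X) and HDR (4X).
LANE_BIT_RATE_GBPS = 53.125  # per-lane bit rate quoted in the table
ENCODING_RATIO = 64 / 66     # 64b/66b encoding efficiency


def port_rate_gbps(lanes: int) -> float:
    """Port rate in Gb/s after applying the encoding ratio to all lanes."""
    return lanes * LANE_BIT_RATE_GBPS * ENCODING_RATIO


if __name__ == "__main__":
    for name, lanes in (("HDR100 (2X)", 2), ("HDR (4X)", 4)):
        print(f"{name}: {port_rate_gbps(lanes):.1f} Gb/s")
```

Both results land slightly above 100Gb/s and 200Gb/s. The table quotes nominal effective rates, and this sketch applies only the encoding ratio, not any other link-layer overhead.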

ConnectX-6 Socket Direct 2x PCIe x16 cards (OPNs: MCX654105A-HCAT, MCX654106A-HCAT and MCX654106A-ECAT) are not supported on Windows or by WinOF-2.


RHEL/CentOS

Windows

FreeBSD

VMware

OpenFabrics Enterprise Distribution (OFED)

OpenFabrics Windows Distribution (WinOF-2)

Interoperable with 1/10/25/40/50/100/200 Gb/s InfiniBand and Ethernet switches

Passive copper cable with ESD protection

Powered connectors for optical and active cable support

ConnectX-6 supports manageability through a BMC. A ConnectX-6 PCIe stand-up adapter can be connected to a BMC using MCTP over SMBus or MCTP over PCIe, in the same way as a standard NVIDIA PCIe stand-up adapter. To configure the adapter for the specific manageability solution used by the server, contact NVIDIA Support.