Advanced Features

RPC log history (enabled by default) records all the RPC requests (from snap_rpc.py and spdk_rpc.py) sent to the SNAP application and the RPC response for each RPC requests in a dedicated log file, /var/log/snap-log/rpc-log. This file is visible outside the container (i.e., the log file's path on the DPU is /var/log/snap-log/rpc-log as well).

The SNAP_RPC_LOG_ENABLE env can be used to enable (1) or disable (0) this feature.

RPC log history is supported with SPDK version spdk23.01.2-12 and above.

When RPC log history is enabled, the SNAP application writes (in append mode) RPC request and response message to /var/log/snap-log/rpc-log constantly. Pay attention to the size of this file. If it gets too large, delete the file on the DPU before launching the SNAP pod.

SR-IOV configuration depends on the kernel version:

Optimal configuration may be achieved with a new kernel in which the

sriov_drivers_autoprobe sysfsentry exists in/sys/bus/pci/devices/<BDF>/Otherwise, the minimal requirement may be met if the

sriov_totalvfs sysfsentry exists in/sys/bus/pci/devices/<BDF>/

After configuration is finished, no disk is expected to be exposed in the hypervisor. The disk only appears in the VM after the PCIe VF is assigned to it using the virtualization manager. If users want to use the device from the hypervisor, they must bind the PCIe VF manually.

Hot-plug PFs do not support SR-IOV.

It is recommended to add pci=assign-busses to the boot command line when creating more than 127 VFs.

Without this option, the following errors may appear from host and the virtio driver will not probe these devices.

pci 0000:84:00.0: [1af4:1041] type 7f class 0xffffff

pci 0000:84:00.0: unknown header type 7f, ignoring device

Zero-copy is supported on SPDK 21.07 and higher.

SNAP-direct allows SNAP applications to transfer data directly from the host memory to remote storage without using any staging buffer inside the DPU.

SNAP enables the feature according to the SPDK BDEV configuration only when working against an SPDK NVMe-oF RDMA block device.

To enable zero copy, set the environment variable (as it is enabled by default):

SNAP_RDMA_ZCOPY_ENABLE=1

For more info refer to the section SNAP Environment Variables.

NVMe/TCP Zero Copy is implemented as a custom NVDA_TCP transport in SPDK NVMe initiator and it is based on a new XLIO socket layer implementation.

The implementation is different for Tx and Rx:

The NVMe/TCP Tx Zero Copy is similar between RDMA and TCP in that the data is sent from the host memory directly to the wire without an intermediate copy to Arm memory

The NVMe/TCP Rx Zero Copy allows achieving partial zero copy on the Rx flow by eliminating copy from socket buffers (XLIO) to application buffers (SNAP). But data still must be DMA'ed from Arm to host memory.

To enable NVMe/TCP Zero Copy, use SPDK v22.05.nvda --with-xlio (v22.05.nvda or higher).

For more information about XLIO including limitations and bug fixes, refer to the NVIDIA Accelerated IO (XLIO) Documentation.

To enable SNAP TCP XLIO Zero Copy:

SNAP container: Set the environment variables and resources in the YAML file:

resources: requests: memory: "4Gi" cpu: "8" limits: hugepages-2Mi: "4Gi" memory: "6Gi" cpu: "16" ## Set according to the local setup env: - name: APP_ARGS value: "--wait-for-rpc" - name: SPDK_XLIO_PATH value: "/usr/lib/libxlio.so"

SNAP sources: Set the environment variables and resources in the relevant scripts

In

run_snap.sh, edit theAPP_ARGSvariable to use the SPDK command line argument--wait-for-rpc:run_snap.sh

APP_ARGS="--wait-for-rpc"

In

set_environment_variables.sh, uncomment theSPDK_XLIO_PATHenvironment variable:set_environment_variables.sh

export SPDK_XLIO_PATH="/usr/lib/libxlio.so"

NVMe/TCP XLIO requires a BlueField Arm OS hugepage size of 4G (i.e., 2G more hugepages than non-XLIO). For information on configuring the hugepages, refer to sections "Step 1: Allocate Hugepages" and "Adjusting YAML Configuration".

At high scale, it is required to use the global variable XLIO_RX_BUFS=4096 even though it leads to high memory consumption. Using XLIO_RX_BUFS=1024 requires lower memory consumption but limits the ability to scale the workload.

For more info refer to the section "SNAP Environment Variables".

It is recommended to configure NVMe/TCP XLIO with the transport ack timeout option increased to 12.

[dpu] spdk_rpc.py bdev_nvme_set_options --transport-ack-timeout 12

Other bdev_nvme options may be adjusted according to requirements.

Expose an NVMe-oF subsystem with one namespace by using a TCP transport type on the remote SPDK target.

[dpu] spdk_rpc.py sock_set_default_impl -i xlio

[dpu] spdk_rpc.py framework_start_init

[dpu] spdk_rpc.py bdev_nvme_set_options --transport-ack-timeout 12

[dpu] spdk_rpc.py bdev_nvme_attach_controller -b nvme0 -t nvda_tcp -a 3.3.3.3 -f ipv4 -s 4420 -n nqn.2023-01.io.nvmet

[dpu] snap_rpc.py nvme_subsystem_create --nqn nqn.2023-01.com.nvda:nvme:0

[dpu] snap_rpc.py nvme_namespace_create -b nvme0n1 -n 1 --nqn nqn. 2023-01.com.nvda:nvme:0 --uuid 16dab065-ddc9-8a7a-108e-9a489254a839

[dpu] snap_rpc.py nvme_controller_create --nqn nqn.2023-01.com.nvda:nvme:0 --ctrl NVMeCtrl1 --pf_id 0 --suspended --num_queues 16

[dpu] snap_rpc.py nvme_controller_attach_ns -c NVMeCtrl1 -n 1

[dpu] snap_rpc.py nvme_controller_resume -c NVMeCtrl1 -n 1

[host] modprobe -v nvme

[host] fio --filename /dev/nvme0n1 --rw randrw --name=test-randrw --ioengine=libaio --iodepth=64 --bs=4k --direct=1 --numjobs=1 --runtime=63 --time_based --group_reporting --verify=md5

For more information on XLIO, please refer to XLIO documentation.

The SPDK version that comes with SNAP supports hardware encryption/decryption offload. To enable AES/XTS, follow the instructions under section "Modifying SF Trust Level to Enable Encryption".

Zero Copy (SNAP-direct) with Encryption

SNAP offers support for zero copy with encryption for bdev_nvme with an RDMA transport.

If another bdev_nvme transport or base bdev other than NVMe is used, then zero copy flow is not supported, and additional DMA operations from the host to the BlueField Arm are performed.

Refer to section "SPDK Crypto Example" to see how to configure zero copy flow with AES_XTS offload.

|

Command |

Description |

|

|

Accepts a list of devices to be used for the crypto operation |

|

|

Creates a crypto key |

|

|

Constructs NVMe block device |

|

|

Creates a virtual block device which encrypts write IO commands and decrypts read IO commands |

mlx5_scan_accel_module

Accepts a list of devices to use for the crypto operation provided in the --allowed-devs parameter. If no devices are specified, then the first device which supports encryption is used.

For best performance, it is recommended to use the devices with the largest InfiniBand MTU (4096). The MTU size can be verified using the ibv_devinfo command (look for the max and active MTU fields). Normally, the mlx5_2 device is expected to have an MTU of 4096 and should be used as an allowed crypto device.

Command parameters:

|

Parameter |

Mandatory? |

Type |

Description |

|

|

No |

Number |

QP size |

|

|

No |

Number |

Size of the shared requests pool |

|

|

No |

String |

Comma-separated list of allowed device names (e.g., "mlx5_2") Note

Make sure that the device used for RDMA traffic is selected to support zero copy.

|

|

|

No |

Boolean |

Enables accel_mlx5 platform driver. Allows AES_XTS RDMA zero copy. |

accel_crypto_key_create

Creates crypto key. One key can be shared by multiple bdevs.

Command parameters:

|

Parameter |

Mandatory? |

Type |

Description |

|

|

Yes |

Number |

Crypto protocol (AES_XTS) |

|

|

Yes |

Number |

Key |

|

|

Yes |

Number |

Key2 |

|

|

Yes |

String |

Key name |

bdev_nvme_attach_controller

Creates NVMe block device.

Command parameters:

|

Parameter |

Mandatory? |

Type |

Description |

|

|

Yes |

String |

Name of the NVMe controller, prefix for each bdev name |

|

|

Yes |

String |

NVMe-oF target trtype (e.g., rdma, pcie) |

|

|

Yes |

String |

NVMe-oF target address (e.g., an IP address or BDF) |

|

|

No |

String |

NVMe-oF target trsvcid (e.g., a port number) |

|

|

No |

String |

NVMe-oF target adrfam (e.g., ipv4, ipv6) |

|

|

No |

String |

NVMe-oF target subnqn |

bdev_crypto_create

This RPC creates a virtual crypto block device which adds encryption to the base block device.

Command parameters:

|

Parameter |

Mandatory? |

Type |

Description |

|

|

Yes |

String |

Name of the base bdev |

|

|

Yes |

String |

Crypto bdev name |

|

|

Yes |

String |

Name of the crypto key created with |

SPDK Crypto Example

The following is an example of a configuration with a crypto virtual block device created on top of bdev_nvme with RDMA transport and zero copy support:

[dpu] # spdk_rpc.py mlx5_scan_accel_module --allowed-devs "mlx5_2" --enable-driver

[dpu] # spdk_rpc.py framework_start_init

[dpu] # spdk_rpc.py accel_crypto_key_create -c AES_XTS -k 00112233445566778899001122334455 -e 11223344556677889900112233445500 -n test_dek

[dpu] # spdk_rpc.py bdev_nvme_attach_controller -b nvme0 -t rdma -a 1.1.1.1 -f ipv4 -s 4420 -n nqn.2016-06.io.spdk:cnode0

[dpu] # spdk_rpc.py bdev_crypto_create nvme0n1 crypto_0 -n test_dek

[dpu] # snap_rpc.py spdk_bdev_create crypto_0

[dpu] # snap_rpc.py nvme_subsystem_create --nqn nqn.2023-05.io.nvda.nvme:0

[dpu] # snap_rpc.py nvme_controller_create --nqn nqn.2023-05.io.nvda.nvme:0 --pf_id 0 --ctrl NVMeCtrl0 --suspended

[dpu] # snap_rpc.py nvme_namespace_create –nqn nqn.2023-05.io.nvda.nvme:0 --bdev_name crypto_0 –-nsid 1 -–uuid 263826ad-19a3-4feb-bc25-4bc81ee7749e

[dpu] # snap_rpc.py nvme_controller_attach_ns –-ctrl NVMeCtrl0 --nsid 1

[dpu] # snap_rpc.py nvme_controller_resume –-ctrl NVMeCtrl0

Live migration is a standard process supported by QEMU which allows system administrators to pass devices between virtual machines in a live running system. For more information, refer to QEMU VFIO Device Migration documentation.

Live migration is supported for SNAP virtio-blk devices. It can be activated using a driver with proper support (e.g., NVIDIA's proprietary vDPA-based Live Migration Solution).

snap_rpc.py virtio_blk_controller_create --dbg_admin_q …

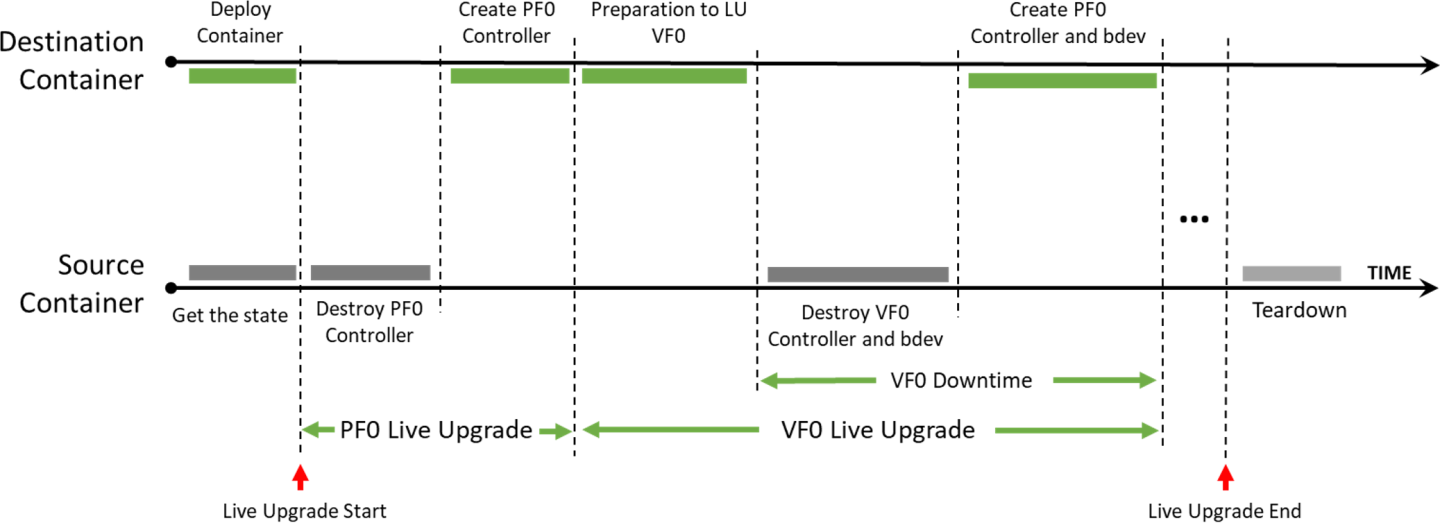

Live upgrade enables updating the SNAP image used by a container without causing SNAP container downtime.

While newer SNAP releases may introduce additional content, potentially causing behavioral differences during the upgrade, the process is designed to ensure backward compatibility. Updates between releases within the same sub-version (e.g., 4.0.0-x to 4.0.0-y) should proceed without issues.

However, updates across different major or minor versions may require changes to system components (e.g., firmware, BFB), which may impact backward compatibility and necessitate a full reboot post update. In those cases, live updates are unnecessary.

Live Upgrade Prerequisites

To enable live upgrade, perform the following modifications:

Allocate double hugepages for the destination and source containers.

Make sure the requested amount of CPU cores is available.

The default YAML configuration sets the container to request a CPU core range of 8-16. This means that the container is not deployed if there are fewer than 8 available cores, and if there are 16 free cores, the container utilizes all 16.

For instance, if a container is currently using all 16 cores and, during a live upgrade, an additional SNAP container is deployed. In this case, each container uses 8 cores during the upgrade process. Once the source container is terminated, the destination container starts utilizing all 16 cores.

NoteFor 8-core DPUs, the

.yamlmust be edited to the range of 4-8 CPU cores.Change the name of the

doca_snap.yamlfile that describes the destination container (e.g.,doca_snap_new.yaml) so as to not overwrite the running container.yaml.Change the name of the new

.yamlpod in line 16 (e.g.,snap-new).Deploy the the destination container by copying the new yaml (e.g.,

doca_snap_new.yaml) to kubelet.

After deploying the destination container, until the live update process is complete, avoid making any configuration changes via RPC. Specifically, do not create or destroy hotplug functions.

When restoring a controller in the destination container during a live update, it is recommended to use the same arguments originally used for controller creation in the source container.

Live Upgrade Flow

The way to live upgrade the SNAP image is to move the SNAP controllers and SPDK block devices between different containers while minimizing the duration of the host VMs impact.

Source container – the running container before live upgrade

Destination container – the running container after live upgrade

SNAP Container Live Upgrade Procedure

Follow the steps in section "Live Upgrade Prerequisites" and deploy the destination SNAP container using the modified

yamlfile.Query the source and destination containers:

crictl ps -r

Check for

SNAP started successfullyin the logs of the destination container, then copy the live update from the container to your environment.[dpu] crictl logs -f <dest-container-id> [dpu] crictl exec <dest-container-id> cp /opt/nvidia/nvda_snap/bin/live_update.py /etc/nvda_snap/

Run the

live_update.pyscript to move all active objects from the source container to the destination container:[dpu] cd /etc/nvda_snap [dpu] ./live_update.py -s <source-container-id> -d <dest-container-id>

Delete the source container.

NoteTo post RPCs, use the crictl tool:

crictl exec -it <container-id X> snap_rpc.py <RPC-method> crictl exec -it <container-id Y> spdk_rpc.py <RPC-method>

NoteTo automate the SNAP configuration (e.g., following failure or reboot) as explained in section "Automate SNAP Configuration (Optional)",

spdk_rpc_init.confandsnap_rpc_init.confmust not include any configs as part of the live upgrade. Then, once the transition to the new container is done,spdk_rpc_init.confandsnap_rpc_init.confcan be modified with the desired configuration.

SNAP Container Live Upgrade Commands

The live update tool is designed to support fast live updates. It iterates over the available emulation functions and performs the following actions for each one:

On the source container:

snap_rpc.py virtio_blk_controller_suspend --ctrl [ctrl_name] --events_only

On the destination container:

spdk_rpc.py bdev_nvme_attach_controller ... snap_rpc.py virtio_blk_controller_create ... --suspended --live_update_listener

On the source container:

snap_rpc.py virtio_blk_controller_destroy --ctrl [ctrl_name] spdk_rpc.py bdev_nvme_detach_controller [bdev_name]

Message Signaled Interrupts eXtended (MSIX) is an interrupt mechanism that allows devices to use multiple interrupt vectors, providing more efficient interrupt handling than traditional interrupt mechanisms such as shared interrupts. In Linux, MSIX is supported in the kernel and is commonly used for high-performance devices such as network adapters, storage controllers, and graphics cards. MSIX provides benefits such as reduced CPU utilization, improved device performance, and better scalability, making it a popular choice for modern hardware.

However, proper configuration and management of MSIX interrupts can be challenging and requires careful tuning to achieve optimal performance, especially in a multi-function environment as SR-IOV.

By default, BlueField distributes MSIX vectors evenly between all virtual PCIe functions (VFs). This approach is not optimal as users may choose to attach VFs to different VMs, each with a different number of resources. Dynamic MSIX management allows the user to manually control of the number of MSIX vectors provided per each VF independently.

Configuration and behavior are similar for all emulation types, and specifically NVMe and virtio-blk.

Dynamic MSIX management is built from several configuration steps:

At this point, and in any other time in the future when no VF controllers are opened (

sriov_numvfs=0), all PF-related MSIX vectors can be reclaimed from the VFs to the PF's free MSIX pool.User must take some of the MSIX from the free pool and give them to a certain VF during VF controller creation.

When destroying a VF controller, the user may choose to release its MSIX back to the pool.

Once configured, the MSIX link to the VFs remains persistent and may change only in the following scenarios:

User explicitly requests to return VF MSIXs back to the pool during controller destruction.

PF explicitly reclaims all VF MSIXs back to the pool.

Arm reboot (FE reset/cold boot) has occurred.

To emphasize, the following scenarios do not change MSIX configuration:

Application restart/crash.

Closing and reopening PF/VFs without dynamic MSIX support.

The following is an NVMe example of dynamic MSIX configuration steps (similar configuration also applies for virtio-blk):

Reclaim all MSIX from VFs to PF's free MSIX pool:

snap_rpc.py nvme_controller_vfs_msix_reclaim <CtrlName>

Query the controller list to get information about the resources constraints for the PF:

# snap_rpc.py nvme_controller_list -c <CtrlName> … 'free_msix': 100, … 'free_queues': 200, … 'vf_min_msix': 2, … 'vf_max_msix': 64, … 'vf_min_queues': 0, … 'vf_max_queues': 31, …

Where:

free_msixstands for the number of total MSIX available in the PF's free pool, to be assigned for VFs, through the parametervf_num_msix(of the<protocol>_controller_createRPC).free_queuesstands for the number of total queues (or "doorbells") available in the PF's free pool, to be assigned for VFs, through the parameternum_queues(of the<protocol>_controller_createRPC).vf_min_msixandvf_max_msixtogether define the available configurable range ofvf_num_msixparameter value which can be passed in<protocol>_controller_createRPC for each VF.vf_min_queuesandvf_max_queuestogether define the available configurable range ofnum_queuesparameter value which can be passed in<protocol>_controller_createRPC for each VF.

Distribute MSIX between VFs during their creation process, considering the PF's limitations:

snap_rpc.py nvme_controller_create_ --vf_num_msix <n> --num_queues <m> …

NoteIt is strongly advised to provide both

vf_num_msixandnum_queuesparameters upon VF controller creation. Providing only one of the values may result in a conflict between MSIX and queue configuration, which may in turn cause the controller/driver to malfunction.TipIn NVMe protocol, MSIX is used by NVMe CQ. Therefore, it is advised to assign 1 MSIX out of the PF's global pool (

free_msix) for each assigned queue.In virtio protocol, MSIX is used by virtqueue and one extra MSIX is required for BAR configuration changes notification. Therefore, it is advised to assign 1 MSIX out of the PF's global pool (

free_msix) for every assigned queue, and one more as configuration MSIX.In summary, the best practice for queues/MSIX ratio configuration is:

For NVMe –

num_queues=vf_num_msixFor virtio –

num_queues=vf_num_msix-1

Upon VF teardown, release MSIX back to the free pool:

snap_rpc.py nvme_controller_destroy_ --release_msix …

Set SR-IOV on the host driver:

echo <N> > /sys/bus/pci/devices/<BDF>/sriov_numvfs

NoteIt is highly advised to open all VF controllers in SNAP in advance before binding VFs to the host/guest driver. That way, for example in case of a configuration mistake which does not leave enough MSIX for all VFs, the configuration remains reversible as MSIX is still modifiable. Otherwise, the driver may try to use the already-configured VFs before all VF configuration has finished but will not be able to use all of them (due to lack of MSIX). The latter scenario may result in host deadlock which, at worst, can be recovered only with cold boot.

NoteThere are several ways to configure dynamic MSIX safely (without VF binding):

Disable kernel driver automatic VF binding to kernel driver:

# echo 0 > /sys/bus/pci/devices/sriov_driver_autoprobe

After finishing MSIX configuration for all VFs, they can then be bound to VMs, or even back to the hypervisor:

echo "0000:01:00.0" > /sys/bus/pci/drivers/nvme/bind

Use VFIO driver (instead of kernel driver) for SR-IOV configuration.

For example:

# echo 0000:af:00.2 > /sys/bus/pci/drivers/vfio-pci/bind # Bind PF to VFIO driver # echo 1 > /sys/module/vfio_pci/parameters/enable_sriov # echo <N> > /sys/bus/pci/drivers/vfio-pci/0000:af:00.2/sriov_numvfs # Create VFs device for it

NVMe Recovery

NVMe recovery allows the NVMe controller to be recovered after a SNAP application is closed whether gracefully or after a crash (e.g., kill -9).

To use NVMe recovery, the controller must be re-created in a suspended state with the same configuration as before the crash (i.e., the same bdevs, num queues, and namespaces with the same uuid, etc).

The controller must be resumed only after all NSs are attached.

NVMe recovery uses files on the BlueField under /dev/shm to recover the internal state of the controller. Shared memory files are deleted when the BlueField is reset. For this reason, recovery is not supported after BF reset.

Virtio-blk Crash Recovery

The following options are available to enable virtio-blk crash recovery.

Virtio-blk Crash Recovery with --force_in_order

For virtio-blk crash recovery with --force_in_order, disable the VBLK_RECOVERY_SHM environment variable and create a controller with the --force_in_order argument.

In virtio-blk SNAP, the application is not guaranteed to recover correctly after a sudden crash (e.g., kill -9).

To enable the virtio-blk crash recovery, set the following:

snap_rpc.py virtio_blk_controller_create --force_in_order …

Setting force_in_order to 1 may impact virtio-blk performance as it will serve the command in-order.

If --force_in_order is not used, any failure or unexpected teardown in SNAP or the driver may result in anomalous behavior because of limited support in the Linux kernel virtio-blk driver.

Virtio-blk Crash Recovery without --force_in_order

For virtio-blk crash recovery without --force_in_order, enable the VBLK_RECOVERY_SHM environment variable and create a controller without the --force_in_order argument.

Virtio-blk recovery allows the virtio-blk controller to be recovered after a SNAP application is closed whether gracefully or after a crash (e.g., kill -9).

To use virtio-blk recovery without --force_in_order flag. VBLK_RECOVERY_SHM must be enabled, the controller must be recreated with the same configuration as before the crash (i.e., same bdevs, num queues, etc).

When VBLK_RECOVERY_SHM is enabled, virtio-blk recovery uses files on the BlueField under /dev/shm to recover the internal state of the controller. Shared memory files are deleted when the BlueField is reset. For this reason, recovery is not supported after BlueField reset.

Improving SNAP Recovery Time

The following table outlines features designed to accelerate SNAP initialization and recovery processes following termination.

|

Feature |

Description |

How to? |

|

SPDK JSON-RPC configuration file |

An initial configuration can be specified for the SPDK configuration in SNAP. The configuration file is a JSON file containing all the SPDK JSON-RPC method invocations necessary for the desired configuration. Moving from posting RPCs to JSON file improves bring-up time. Info

For more information check SPDK JSON-RPC documentation.

|

To generate a JSON-RPC file based on the current configuration, run:

The Note

If SPDK encounters an error while processing the JSON configuration file, the initialization phase fails, causing SNAP to exit with an error code.

|

|

Disable SPDK accel functionality |

The SPDK accel functionality is necessary when using NVMe TCP features. If NVMe TCP is not used, accel should be manually disabled to reduce the SPDK startup time, which can otherwise take few seconds. To disable all accel functionality edit the flags |

Edit the config file as follows:

|

|

Provide the emulation manager name |

If the |

Use |

|

DPU mode for virtio-blk |

DPU mode is supported only with virtio-blk. DPU mode r educes SNAP downtime during crash recovery. |

Set |

The SNAP ML optimizer is a tool designed to fine-tune SNAP’s poller parameters, enhancing SNAP I/O handling performance and increasing controller throughput based on specific environments and workloads.

During workload execution, the optimizer iteratively adjusts configurations (actions) and evaluates their impact on performance (reward). By predicting the best configuration to test next, it efficiently narrows down to the optimal setup without needing to explore every possible combination.

Once the optimal configuration is identified, it can be applied to the target system, improving performance under similar conditions. Currently, the tool supports "IOPS" as the reward metric, which it aims to maximize.

SNAP ML Optimizer Preparation Steps

Machine Requirements

The device should be able to SSH to the BlueField:

Python 3.10 or above

At least 6 GB of free storage

Setting Up SNAP ML Optimizer

To set up the SNAP ML optimizer:

Copy the

snap_mlfolder from the container to the sharednvda_snapfolder and then to the requested machine:crictl exec -it $(crictl ps -s running -q --name snap) cp -r /opt/nvidia/nvda_snap/bin/snap_ml /etc/nvda_snap/

Change directory to the

snap_mlfolder:cd tools/snap_ml

Create a virtual environment for the SNAP ML optimizer.

python3 -m venv snap_ml

This ensures that the required dependencies are installed in an isolated environment.

Activate the virtual environment to start working within this isolated environment:

source snap_ml/bin/activate

Install the Python package requirements:

pip3 install –-no-cache-dir -r requirements.txt

This may take some time depending on your system's performance.

Run the SNAP ML Optimizer.

python3 snap_ml.py --help

Use the

--helpflag to see the available options and usage information:--version Show the version and exit. -f, --framework <TEXT> Name of framework (Recommended: ax , supported: ax, pybo). -t, --total-trials <INTEGER> Number of optimization iterations. The recommended range is 25-60. --filename <TEXT> where to save the results (default: last_opt.json). --remote <TEXT> connect remotely to the BlueField card, format: <bf_name>:<username>:<password> --snap-rpc-path <TEXT> Snap RPC prefix (default: container path). --log-level <TEXT> CRITICAL | ERROR | WARN | WARNING | INFO | DEBUG --log-dir <TEXT> where to save the logs.

SNAP ML Optimizer Related RPCs

snap_actions_set

The snap_actions_set command is used to dynamically adjust SNAP parameters (known as "actions") that control polling behavior. This command is a core feature of SNAP-AI tools, enabling both automated optimization for specific environments and workloads, as well as manual adjustment of polling parameters.

Command parameters:

|

Parameter |

Mandatory? |

Type |

Description |

|

|

No |

Number |

Maximum number of IOs SNAP passes in a single polling cycle (integer; 1-256) |

|

|

No |

Number |

The rate in which SNAP poll cycles occur (float; 0< |

|

|

No |

Number |

Maximum number of in-flight IOs per core (integer; 1-65535) |

|

|

No |

Number |

Maximum fairness batch size (integer; 1-4096) |

|

|

No |

Number |

Maximum number of new IOs to handle in a single poll cycle (integer; 1-4096) |

snap_reward_get

The snap_reward_get command retrieves performance counters, specifically completion counters (or "reward"), which are used by the optimizer to monitor and enhance SNAP performance.

No parameters are required for this command.

Optimizing SNAP Parameters for ML Optimizer

To optimize SNAP’s parameters for your environment, use the following command:

python3 snap_ml.py --framework ax --total-trials 40 --filename example.json --remote <bf_hostname>:<username>:<password> --log-dir <log_directory>

Results and Post-optimization Actions

Once the optimization process is complete, the tool automatically applies the optimized parameters. These parameters are also saved in a example.json file in the following format:

{

"poll_size": 30,

"poll_ratio": 0.6847347955107689,

"max_inflights": 32768,

"max_iog_batch": 512,

"max_new_ios": 32

}

Additionally, the tool documents all iterations, including the actions taken and the rewards received, in a timestamped file named example_<timestamp>.json.

Applying Optimized Parameters Manually

Users can apply the optimized parameters on fresh instances of SNAP service by explicitly calling the snap_actions_set RPC with the optimized parameters as follows:

snap_rpc.py snap_actions_set –poll_size 30 –poll_ratio 0.6847 --max_inflights 32768 –max_iog_batch 512 –max_new_ios 32

It is only recommended to use the optimized parameters if the system is expected to behave similarly to the system on which the SNAP ML optimizer is used.

Deactivating Python Environment

Once users are done using the SNAP ML Optimizer, they can deactivate the Python virtual environment by running:

deactivate