Virtio-net Deployment

To install the BFB bundle on the NVIDIA® BlueField®, run the following command from the Linux hypervisor:

host# sudo bfb-install --rshim <rshimN> --bfb <image_path.bfb>

For more information, refer to section "Deploying BlueField Software Using BFB from Host" in the NVIDIA BlueField DPU BSP documentation.

To update the firmware, run the following command from the BlueField networking platform:

dpu# sudo /opt/mellanox/mlnx-fw-updater/mlnx_fw_updater.pl --force-fw-update

For more information, refer to section "Upgrading Firmware" in the NVIDIA DOCA Installation Guide for Linux.

By default, the DPU should be configured in DPU mode. A simple way to confirm that the DPU is running in DPU mode is to log into the BlueField Arm system and check that p0 and pf0hpf both exist by running the command below.

dpu# ip link show

Virtio-net full emulation only works in DPU mode. For more information about DPU mode configuration, please refer to page "Mode of Operation" in the NVIDIA BlueField DPU BSP documentation.

Before enabling the virtio-net service, the firmware must be configured with the mlxconfig tool. Examples of typical configurations are given below, and the following table describes the relevant mlxconfig entries.

For mlxconfig configuration changes to take effect, a full power cycle is necessary.

| Mlxconfig Entries | Description |
| --- | --- |
| VIRTIO_NET_EMULATION_ENABLE | Must be set to TRUE for virtio-net to be enabled |
| VIRTIO_NET_EMULATION_NUM_PF | Total number of PCIe functions (PFs) exposed by the device for virtio-net emulation. These functions persist across host/BlueField power cycles. |
| VIRTIO_NET_EMULATION_NUM_VF | The maximum number of virtual functions (VFs) that can be supported for each virtio-net PF |
| VIRTIO_NET_EMULATION_NUM_MSIX | Number of MSI-X vectors assigned to each PF/VF of the virtio-net emulation device |
| PCI_SWITCH_EMULATION_ENABLE | When TRUE, the device exposes a PCIe switch. All PF configurations are applied on the switch downstream ports. In this case, each PF gets a different PCIe device on the emulated switch. This configuration allows exposing extra network PFs toward the host, which can be enabled for virtio-net hot-plug devices. |
| PCI_SWITCH_EMULATION_NUM_PORT | The maximum number of emulated switch ports. Each port can hold a single PCIe device (emulated or not). This determines the maximum number of supported hot-plug virtio-net devices. The maximum number depends on the hypervisor PCIe resources and cannot exceed 31. Note: Check the system PCIe resources. Changing this entry to a large number may result in the host not booting up, which would necessitate disabling the BlueField device and clearing the host NVRAM. |
| PER_PF_NUM_SF | When TRUE, the SF configuration is defined by PF_TOTAL_SF and PF_SF_BAR_SIZE for each PF individually. If they are not defined for a PF, device defaults are used. |
| PF_TOTAL_SF | The total number of scalable function (SF) partitions that can be supported for the current PF. Valid only when PER_PF_NUM_SF is set to TRUE. This number should be greater than the total number of virtio-net PFs (both static and hotplug) and VFs. Note: This entry differs between the BlueField-side and host-side mlxconfig. It is also a system-wide value that is shared by virtio-net and other users. The DPU normally creates 1 SF per port by default. Take this default SF into account when setting PF_TOTAL_SF. |
| PF_SF_BAR_SIZE | Log (base 2) of the BAR size of a single SF, given in KB. Valid only when PF_TOTAL_SF is non-zero and PER_PF_NUM_SF is set to TRUE. |
| PF_BAR2_ENABLE | When TRUE, BAR2 is exposed on all external host PFs (but not on the embedded Arm PFs/ECPFs). The BAR2 size is defined by log_pf_bar2_size. |
| SRIOV_EN | Enable single-root I/O virtualization (SR-IOV) for virtio-net and native PFs |
| EXP_ROM_VIRTIO_NET_PXE_ENABLE | Enable expansion ROM option for PXE for virtio-net functions. Note: All virtio EXP_ROM options should be configured from the host side rather than from the BlueField side. Only static PFs are supported. |
| EXP_ROM_VIRTIO_NET_UEFI_ARM_ENABLE | Enable expansion ROM option for UEFI for Arm-based hosts for virtio-net functions |
| EXP_ROM_VIRTIO_NET_UEFI_x86_ENABLE | Enable expansion ROM option for UEFI for x86-based hosts for virtio-net functions |
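As a small worked example of the PF_SF_BAR_SIZE encoding: the entry holds the base-2 logarithm of the per-SF BAR size in KB, so a value of 8 corresponds to 2^8 = 256 KB per SF. The helper name below is illustrative only and is not part of any NVIDIA tool.

```python
def sf_bar_size_kb(pf_sf_bar_size: int) -> int:
    """Return the BAR size of a single SF in KB for a PF_SF_BAR_SIZE value.

    PF_SF_BAR_SIZE is log2 of the size in KB, so the size is 2**value KB.
    """
    if pf_sf_bar_size < 0:
        raise ValueError("PF_SF_BAR_SIZE must be non-negative")
    return 1 << pf_sf_bar_size


print(sf_bar_size_kb(8))  # 256 (KB per SF)
```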

The maximum number of supported devices is listed below. These limits do not apply when hot-plug PFs and VFs are created at the same time.

| Static PF | Hot-plug PF | VF |
| --- | --- | --- |
| 31 | 31 | 1008 |

Static PF

Static PFs are virtio-net PFs that persist across DPU or host power cycles. They also support creating SR-IOV VFs.

The following is an example for enabling the system with 4 static PFs (VIRTIO_NET_EMULATION_NUM_PF) only:

10 SFs (PF_TOTAL_SF) are reserved to account for other applications using SFs.

[dpu]# mlxconfig -d 03:00.0 s \

VIRTIO_NET_EMULATION_ENABLE=1 \

VIRTIO_NET_EMULATION_NUM_PF=4 \

VIRTIO_NET_EMULATION_NUM_VF=0 \

VIRTIO_NET_EMULATION_NUM_MSIX=64 \

PCI_SWITCH_EMULATION_ENABLE=0 \

PCI_SWITCH_EMULATION_NUM_PORT=0 \

PER_PF_NUM_SF=1 \

PF_TOTAL_SF=64 \

PF_BAR2_ENABLE=0 \

PF_SF_BAR_SIZE=8 \

SRIOV_EN=0

Hotplug PF

Hotplug PFs are virtio-net PFs that can be hotplugged or unplugged dynamically after the system comes up.

Hotplug PF does not support creating SR-IOV VFs.

The following is an example for enabling 16 hotplug PFs (PCI_SWITCH_EMULATION_NUM_PORT):

[dpu]# mlxconfig -d 03:00.0 s \

VIRTIO_NET_EMULATION_ENABLE=1 \

VIRTIO_NET_EMULATION_NUM_PF=0 \

VIRTIO_NET_EMULATION_NUM_VF=0 \

VIRTIO_NET_EMULATION_NUM_MSIX=64 \

PCI_SWITCH_EMULATION_ENABLE=1 \

PCI_SWITCH_EMULATION_NUM_PORT=16 \

PER_PF_NUM_SF=1 \

PF_TOTAL_SF=64 \

PF_BAR2_ENABLE=0 \

PF_SF_BAR_SIZE=8 \

SRIOV_EN=0

SR-IOV VF

SR-IOV VFs are virtio-net VFs created on top of PFs. Each VF gets an individual virtio-net PCIe device.

VFs cannot be created or destroyed individually at runtime. The number of VFs can only change from X to 0 or from 0 to X.

VFs are destroyed when the host is rebooted or when the PF is unbound from the virtio-net kernel driver.

The following is an example for enabling 126 VFs per static PF, for a total of 504 VFs (4 PFs x 126):

[dpu]# mlxconfig -d 03:00.0 s \

VIRTIO_NET_EMULATION_ENABLE=1 \

VIRTIO_NET_EMULATION_NUM_PF=4 \

VIRTIO_NET_EMULATION_NUM_VF=126 \

VIRTIO_NET_EMULATION_NUM_MSIX=64 \

PCI_SWITCH_EMULATION_ENABLE=0 \

PCI_SWITCH_EMULATION_NUM_PORT=0 \

PER_PF_NUM_SF=1 \

PF_TOTAL_SF=512 \

PF_BAR2_ENABLE=0 \

PF_SF_BAR_SIZE=8 \

SRIOV_EN=1
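The PF_TOTAL_SF values in the examples above can be sanity-checked against the rule that PF_TOTAL_SF should be greater than the total number of virtio-net PFs (static and hotplug) and VFs. The following is a minimal sketch of that arithmetic. The function names are hypothetical, and the sketch assumes each virtio-net function consumes exactly one SF; SFs used by other applications (such as the default SF per port) are not counted and need extra headroom.

```python
def required_virtio_sfs(static_pfs: int, hotplug_pfs: int, vfs_per_pf: int) -> int:
    """Count the virtio-net functions, assuming one SF per function."""
    # VFs are only created on static PFs; hotplug PFs do not support SR-IOV VFs.
    return static_pfs + hotplug_pfs + static_pfs * vfs_per_pf


def pf_total_sf_is_sufficient(pf_total_sf: int, static_pfs: int,
                              hotplug_pfs: int, vfs_per_pf: int) -> bool:
    """True when PF_TOTAL_SF is strictly greater than the virtio-net function count."""
    return pf_total_sf > required_virtio_sfs(static_pfs, hotplug_pfs, vfs_per_pf)


# SR-IOV example from this section: 4 static PFs, 126 VFs each, PF_TOTAL_SF=512
print(required_virtio_sfs(4, 0, 126))             # 508
print(pf_total_sf_is_sufficient(512, 4, 0, 126))  # True
```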

PF/VF Combinations

Creating static/hotplug PFs and VFs at the same time is supported.

The total sum of PCIe functions to the external host must not exceed 256. For example:

If there are 2 PFs with no VFs (NUM_OF_VFS=0) and there is 1 RShim, then 253 functions remain available (256-3).

If 1 virtio-net PF is configured (VIRTIO_NET_EMULATION_NUM_PF=1), then up to 252 virtio-net VFs can be configured (VIRTIO_NET_EMULATION_NUM_VF=252).

If 2 virtio-net PFs are configured (VIRTIO_NET_EMULATION_NUM_PF=2), then up to 125 virtio-net VFs can be configured per PF (VIRTIO_NET_EMULATION_NUM_VF=125).
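The limits in the list above follow from simple arithmetic, sketched below. The function name is hypothetical, and the sketch assumes no hotplug PFs and the same 3 non-virtio functions (2 PFs and 1 RShim) used in the example.

```python
MAX_HOST_FUNCTIONS = 256  # total PCIe functions toward the external host


def max_vfs_per_virtio_pf(virtio_pfs: int, other_functions: int = 3) -> int:
    """Largest VIRTIO_NET_EMULATION_NUM_VF that keeps the total within 256.

    other_functions counts non-virtio functions (2 PFs + 1 RShim in the example).
    """
    if virtio_pfs < 1:
        raise ValueError("at least one virtio-net PF is required")
    remaining = MAX_HOST_FUNCTIONS - other_functions - virtio_pfs
    return max(remaining // virtio_pfs, 0)


print(max_vfs_per_virtio_pf(1))  # 252
print(max_vfs_per_virtio_pf(2))  # 125
```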

The following is an example for enabling 15 hotplug PFs, 2 static PFs, and 200 VFs (2 PFs x 100):

[dpu]# mlxconfig -d 03:00.0 s \

VIRTIO_NET_EMULATION_ENABLE=1 \

VIRTIO_NET_EMULATION_NUM_PF=2 \

VIRTIO_NET_EMULATION_NUM_VF=100 \

VIRTIO_NET_EMULATION_NUM_MSIX=10 \

PCI_SWITCH_EMULATION_ENABLE=1 \

PCI_SWITCH_EMULATION_NUM_PORT=15 \

PER_PF_NUM_SF=1 \

PF_TOTAL_SF=256 \

PF_BAR2_ENABLE=0 \

PF_SF_BAR_SIZE=8 \

SRIOV_EN=1

In setups that combine hotplug virtio-net PFs with virtio-net SR-IOV VFs, only up to 15 hotplug devices are supported.

Host System Configuration

For hotplug device configuration, it is recommended to modify the hypervisor OS kernel boot parameters and add the option below:

pci=realloc

For SR-IOV configuration, first enable SR-IOV from the host.

Refer to MLNX_OFED documentation under Features Overview and Configuration > Virtualization > Single Root IO Virtualization (SR-IOV) > Setting Up SR-IOV for instructions on how to do that.

Make sure to add the following options to the Linux boot parameters:

intel_iommu=on iommu=pt

Add pci=assign-busses to the boot command line when creating more than 127 VFs. Without this option, the following errors may appear on the host and the virtio driver does not probe those devices.

pci 0000:84:00.0: [1af4:1041] type 7f class 0xffffff

pci 0000:84:00.0: unknown header type 7f, ignoring device
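The boot parameter recommendations above can be summarized as a small decision helper. This is only a sketch of the rules stated in this section; the function name is hypothetical and the parameters still have to be added to the hypervisor boot configuration by hand.

```python
from typing import List


def host_boot_params(hotplug: bool, sriov: bool, num_vfs: int = 0) -> List[str]:
    """Return the kernel boot parameters recommended in this section."""
    params = []
    if hotplug:
        params.append("pci=realloc")
    if sriov:
        params += ["intel_iommu=on", "iommu=pt"]
        # Needed only when creating more than 127 VFs
        if num_vfs > 127:
            params.append("pci=assign-busses")
    return params


print(" ".join(host_boot_params(hotplug=True, sriov=True, num_vfs=504)))
# pci=realloc intel_iommu=on iommu=pt pci=assign-busses
```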

Because the controller on the BlueField side provides hardware resources and acknowledges (ACKs) the requests from the host's virtio-net driver, it is mandatory to reboot the host OS first and the BlueField afterwards. This also applies when reconfiguring a controller from the BlueField platform (e.g., reconfiguring LAG). Unloading the virtio-net driver on the host OS side is recommended.

BlueField System Configuration

Virtio-net full emulation is based on ASAP^2. For each virtio-net device created from the host side, an SF representor is created to represent the device on the BlueField side. The SF representor must be in the same OVS bridge as the uplink representor.

The SF representor name follows a fixed pattern that maps to the device type.

| | Static PF | Hotplug PF | SR-IOV VF |
| --- | --- | --- | --- |
| SF Range | 1000-1999 | 2000-2999 | 3000 and above |

For example, the first static PF gets the SF representor of en3f0pf0sf1000 and the second hotplug PF gets the SF representor of en3f0pf0sf2001. It is recommended to verify the name of the SF representor from the sf_rep_net_device field in the output of virtnet list.

[dpu]# virtnet list

{

...

"devices": [

{

"pf_id": 0,

"function_type": "static PF",

"transitional": 0,

"vuid": "MT2151X03152VNETS0D0F2",

"msix_num_pool_size": 0,

"min_msix_num": 0,

"max_msix_num": 32,

"bdf": "14:00.2",

"sf_num": 1000,

"sf_parent_device": "mlx5_0",

"sf_parent_device_pci_addr": "0000:03:00.0",

"sf_rep_net_device": "en3f0pf0sf1000",

"sf_rep_net_ifindex": 28,

"sf_rdma_device": "mlx5_4",

"sf_vhca_id": "0x8C",

"msix_config_vector": "0x0",

"num_msix": 64,

"max_queues": 8,

"max_queues_size": 256,

"net_mac": "4A:82:E3:2E:96:AB",

"net_mtu": 1500,

"speed": 25000,

"supported_hash_types": 0,

"rss_max_key_size": 0

}

]

}
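The lookup described above can be sketched in a few lines: parse the JSON printed by virtnet list, read sf_rep_net_device for each device, and classify sf_num by range. The sample input is a trimmed copy of the output above, the function names are hypothetical, and the static PF range is assumed to be 1000-1999.

```python
import json

# Trimmed sample of the `virtnet list` output shown above
SAMPLE = """
{"devices": [{"pf_id": 0, "function_type": "static PF", "bdf": "14:00.2",
              "sf_num": 1000, "sf_rep_net_device": "en3f0pf0sf1000"}]}
"""


def sf_kind(sf_num: int) -> str:
    """Classify an SF number by the ranges in the table (static range assumed 1000-1999)."""
    if 1000 <= sf_num <= 1999:
        return "static PF"
    if 2000 <= sf_num <= 2999:
        return "hotplug PF"
    if sf_num >= 3000:
        return "SR-IOV VF"
    return "other"


def representors(virtnet_list_json: str):
    """Return (bdf, representor name, device kind) for every listed device."""
    data = json.loads(virtnet_list_json)
    return [(d["bdf"], d["sf_rep_net_device"], sf_kind(d["sf_num"]))
            for d in data.get("devices", [])]


print(representors(SAMPLE))
# [('14:00.2', 'en3f0pf0sf1000', 'static PF')]
```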

Once the SF representor name is located, add it to the same OVS bridge as the corresponding uplink representor and make sure the SF representor is up:

[dpu]# ovs-vsctl show

f2c431e5-f8df-4f37-95ce-aa0c7da738e0

Bridge ovsbr1

Port ovsbr1

Interface ovsbr1

type: internal

Port en3f0pf0sf0

Interface en3f0pf0sf0

Port p0

Interface p0

[dpu]# ovs-vsctl add-port ovsbr1 en3f0pf0sf1000

[dpu]# ovs-vsctl show

f2c431e5-f8df-4f37-95ce-aa0c7da738e0

Bridge ovsbr1

Port ovsbr1

Interface ovsbr1

type: internal

Port en3f0pf0sf0

Interface en3f0pf0sf0

Port en3f0pf0sf1000

Interface en3f0pf0sf1000

Port p0

Interface p0

[dpu]# ip link set dev en3f0pf0sf1000 up

After firmware/system configuration and a system power cycle, the virtio-net devices should be ready to deploy.

First, make sure that the mlxconfig options have taken effect by running the command below. The output is a list with 3 columns: default configuration, current configuration, and next-boot configuration. Verify that the values in the 2nd column match the expected configuration.

[dpu]# mlxconfig -d 03:00.0 -e q | grep -i \*

* PER_PF_NUM_SF False(0) True(1) True(1)

* NUM_OF_VFS 16 0 0

* PF_BAR2_ENABLE True(1) False(0) False(0)

* PCI_SWITCH_EMULATION_NUM_PORT 0 8 8

* PCI_SWITCH_EMULATION_ENABLE False(0) True(1) True(1)

* VIRTIO_NET_EMULATION_ENABLE False(0) True(1) True(1)

* VIRTIO_NET_EMULATION_NUM_VF 0 126 126

* VIRTIO_NET_EMULATION_NUM_PF 0 1 1

* VIRTIO_NET_EMULATION_NUM_MSIX 2 64 64

* PF_TOTAL_SF 0 508 508

* PF_SF_BAR_SIZE 0 8 8
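Checking the current-configuration column by eye is error-prone when many entries change, so the comparison can be scripted. The sketch below parses starred lines in the format shown above and reports entries whose current value differs from what is expected. It assumes each line is whitespace-separated with no spaces inside a value; the function names are hypothetical.

```python
def parse_mlxconfig_changes(output: str) -> dict:
    """Map each starred entry to its (default, current, next_boot) values."""
    entries = {}
    for line in output.splitlines():
        fields = line.split()
        # Expected shape: "* NAME DEFAULT CURRENT NEXT_BOOT"
        if len(fields) == 5 and fields[0] == "*":
            _, name, default, current, next_boot = fields
            entries[name] = (default, current, next_boot)
    return entries


def find_mismatches(entries: dict, expected: dict) -> dict:
    """Return {name: current value or None} for entries that differ from expected."""
    bad = {}
    for name, want in expected.items():
        current = entries[name][1] if name in entries else None
        if current != want:
            bad[name] = current
    return bad


SAMPLE_OUTPUT = """\
* PER_PF_NUM_SF False(0) True(1) True(1)
* VIRTIO_NET_EMULATION_NUM_VF 0 126 126
* PF_TOTAL_SF 0 508 508
"""

entries = parse_mlxconfig_changes(SAMPLE_OUTPUT)
print(find_mismatches(entries, {"PF_TOTAL_SF": "508",
                                "VIRTIO_NET_EMULATION_NUM_VF": "126"}))  # {}
```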

If the system is configured correctly, the virtio-net-controller service should be up and running. If the service does not appear as active, double-check the firmware/system configuration above.

[dpu]# systemctl status virtio-net-controller.service

● virtio-net-controller.service - Nvidia VirtIO Net Controller Daemon

Loaded: loaded (/etc/systemd/system/virtio-net-controller.service; enabled; vendor preset: disabled)

Active: active (running)

Docs: file:/opt/mellanox/mlnx_virtnet/README.md

Main PID: 30715 (virtio_net_mana)

Tasks: 55

Memory: 11.7M

CGroup: /system.slice/virtio-net-controller.service

├─30715 /usr/sbin/virtio_net_manager

└─30859 virtio_net_controller

To reload or restart the service, run:

[dpu]# systemctl restart virtio-net-controller.service

Hotplug PF Devices

Creating PF Devices

To create a hotplug virtio-net device, run:

[dpu]# virtnet hotplug -i mlx5_0 -f 0x0 -m 0C:C4:7A:FF:22:93 -t 1500 -n 3 -s 1024

Refer to "Virtnet CLI Commands" for full usage.

This command creates one hotplug virtio-net device with MAC address 0C:C4:7A:FF:22:93, MTU 1500, and 3 virtio queues with a depth of 1024 entries. The device is created on the physical port of mlx5_0. The device is uniquely identified by its index. This index is used to query and update device attributes. If the device is created successfully, an output similar to the following appears:

{ "bdf": "15:00.0", "vuid": "MT2151X03152VNETS1D0F0", "id": 0, "transitional": 0, "sf_rep_net_device": "en3f0pf0sf2000", "mac": "0C:C4:7A:FF:22:93", "errno": 0, "errstr": "Success" }

Add the representor port of the device to the OVS bridge and bring it up. Run:

[dpu]# ovs-vsctl add-port <bridge> en3f0pf0sf2000

[dpu]# ip link set dev en3f0pf0sf2000 up

Once the device is created and its representor is added to the bridge, the virtio-net PCIe device should be available from the hypervisor OS with the same PCIe BDF.

[host]# lspci | grep -i virtio

15:00.0 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)

Probe virtio-net driver (e.g., kernel driver):

[host]# modprobe -v virtio-pci && modprobe -v virtio-net

The virtio-net device should be created. There are two ways to locate the net device:

Check the dmesg from the host side for the corresponding PCIe BDF:

[host]# dmesg | tail -20 | grep 15:00.0 -A 10 | grep virtio_net

[3908051.494493] virtio_net virtio2 ens2f0: renamed from eth0

Check all net devices and find the corresponding MAC address:

[host]# ip link show | grep -i "0c:c4:7a:ff:22:93" -B 1

31: ens2f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 0c:c4:7a:ff:22:93 brd ff:ff:ff:ff:ff:ff

Check that the probed driver and its BDF match the output of the hotplug device:

[host]# ethtool -i ens2f0

driver: virtio_net

version: 1.0.0

firmware-version:

expansion-rom-version:

bus-info: 0000:15:00.0

supports-statistics: yes

supports-test: no

supports-eeprom-access: no

supports-register-dump: no

supports-priv-flags: no

Now the hotplug virtio-net device is ready to use as a common network device.
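When matching the hotplug output against the host-side tools, note that the BlueField side reports the BDF in short form (15:00.0) and an uppercase MAC, while ethtool reports the full address (0000:15:00.0) and ip link prints the MAC in lowercase. A minimal comparison sketch follows. The function names are hypothetical, and a missing PCI domain is assumed to be 0000.

```python
def normalize_bdf(bdf: str) -> str:
    """Return a PCIe address as lowercase domain:bus:device.function."""
    bdf = bdf.strip().lower()
    # Short form such as "15:00.0" has no domain; assume domain 0000
    if bdf.count(":") == 1:
        bdf = "0000:" + bdf
    return bdf


def same_device(dpu_bdf: str, host_bus_info: str) -> bool:
    """Compare the BDF from the hotplug output with the ethtool bus-info value."""
    return normalize_bdf(dpu_bdf) == normalize_bdf(host_bus_info)


def same_mac(dpu_mac: str, host_mac: str) -> bool:
    """Compare MAC addresses case-insensitively."""
    return dpu_mac.strip().lower() == host_mac.strip().lower()


print(same_device("15:00.0", "0000:15:00.0"))              # True
print(same_mac("0C:C4:7A:FF:22:93", "0c:c4:7a:ff:22:93"))  # True
```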

Destroying PF Devices

To hot-unplug a virtio-net device, run:

[dpu]# virtnet unplug -p 0

{'id': '0x1'}

{

"errno": 0,

"errstr": "Success"

}

The hotplug device and its representor are destroyed.

SR-IOV VF Devices

Creating SR-IOV VF Devices

After configuring the firmware and BlueField/host system with correct configuration, users can create SR-IOV VFs.

The following procedure provides an example of creating one VF on top of one static PF:

Locate the virtio-net PFs exposed to the host side:

[host]# lspci | grep -i virtio

14:00.2 Network controller: Red Hat, Inc. Virtio network device

Verify that the PCIe BDF matches the backend device from the BlueField side:

[dpu]# virtnet list

{ ... "devices": [ { "pf_id": 0, "function_type": "static PF", "transitional": 0, "vuid": "MT2151X03152VNETS0D0F2", "msix_num_pool_size": 0, "min_msix_num": 0, "max_msix_num": 32, "bdf": "14:00.2", "sf_num": 1000, "sf_parent_device": "mlx5_0", "sf_parent_device_pci_addr": "0000:03:00.0", "sf_rep_net_device": "en3f0pf0sf1000", "sf_rep_net_ifindex": 28, "sf_rdma_device": "mlx5_4", "sf_vhca_id": "0x8C", "msix_config_vector": "0x0", "num_msix": 64, "max_queues": 8, "max_queues_size": 256, "net_mac": "4A:82:E3:2E:96:AB", "net_mtu": 1500, "speed": 25000, "supported_hash_types": 0, "rss_max_key_size": 0 } ] }

Probe virtio_pci and virtio_net modules from the host:

[host]# modprobe -v virtio-pci && modprobe -v virtio-net

The PF net device should be created.

[host]# ip link show | grep -i "4A:82:E3:2E:96:AB" -B 1

21: ens2f2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 4a:82:e3:2e:96:ab brd ff:ff:ff:ff:ff:ff

The MAC address and PCIe BDF should match between the BlueField side (virtnet list) and host side (ethtool).

[host]# ethtool -i ens2f2

driver: virtio_net

version: 1.0.0

firmware-version:

expansion-rom-version:

bus-info: 0000:14:00.2

supports-statistics: yes

supports-test: no

supports-eeprom-access: no

supports-register-dump: no

supports-priv-flags: no

To create SR-IOV VF devices on the host, run the following command with the PF PCIe BDF (0000:14:00.2 in this example):

[host]# echo 1 > /sys/bus/pci/drivers/virtio-pci/0000\:14\:00.2/sriov_numvfs

One additional virtio-net device appears on the host:

[host]# lspci | grep -i virtio

14:00.2 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)

14:00.4 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)

The BlueField side shows the VF information from virtnet list as well:

[dpu]# virtnet list

... { "vf_id": 0, "parent_pf_id": 0, "function_type": "VF", "transitional": 0, "vuid": "MT2151X03152VNETS0D0F2VF1", "bdf": "14:00.4", "sf_num": 3000, "sf_parent_device": "mlx5_0", "sf_parent_device_pci_addr": "0000:03:00.0", "sf_rep_net_device": "en3f0pf0sf3000", "sf_rep_net_ifindex": 17, "sf_rdma_device": "mlx5_5", "sf_vhca_id": "0x8D", "msix_config_vector": "0x0", "num_msix": 12, "max_queues": 8, "max_queues_size": 256, "net_mac": "56:80:48:20:83:50", "net_mtu": 1500, "speed": 25000, "supported_hash_types": 0, "rss_max_key_size": 0 }

Add the corresponding SF representor to the OVS bridge, as was done for the virtio-net PF, and bring it up. Run:

[dpu]# ovs-vsctl add-port <bridge> en3f0pf0sf3000

[dpu]# ip link set dev en3f0pf0sf3000 up

Now the VF is functional.

SR-IOV enablement from the host side takes a few minutes. For example, it may take 5 minutes to create 504 VFs.

It is recommended to disable VF autoprobe before creating VFs.

[host]# echo 0 > /sys/bus/pci/drivers/virtio-pci/<virtio_pf_bdf>/sriov_drivers_autoprobe

[host]# echo <num_vfs> > /sys/bus/pci/drivers/virtio-pci/<virtio_pf_bdf>/sriov_numvfs

Users can pass through the VFs directly to the VM after finishing. If using the VFs inside the hypervisor OS is required, bind the VF PCIe BDF:

[host]# echo <virtio_vf_bdf> > /sys/bus/pci/drivers/virtio-pci/bind

Remember to re-enable autoprobe for other use cases:

[host]# echo 1 > /sys/bus/pci/drivers/virtio-pci/<virtio_pf_bdf>/sriov_drivers_autoprobe

Creating VFs for the same PF on different threads may cause the hypervisor OS to hang.
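The recommended sequence (disable autoprobe, then write the VF count) can be wrapped in a small script. The sketch below is an assumption-laden illustration, not an NVIDIA tool: the function name is hypothetical, it must run as root on the host, and it refuses to change a non-zero VF count because the count can only move between 0 and X. Autoprobe is left disabled, so re-enable it afterwards if other use cases need it.

```python
from pathlib import Path

VIRTIO_PCI_DRIVER_DIR = "/sys/bus/pci/drivers/virtio-pci"


def create_vfs(pf_bdf: str, num_vfs: int,
               driver_dir: str = VIRTIO_PCI_DRIVER_DIR) -> None:
    """Disable VF autoprobe on the PF, then create num_vfs VFs through sysfs."""
    if num_vfs < 1:
        raise ValueError("num_vfs must be at least 1")
    pf_dir = Path(driver_dir) / pf_bdf
    numvfs_file = pf_dir / "sriov_numvfs"

    # The VF count can only go from 0 to X, so an existing set must be destroyed first
    current = int(numvfs_file.read_text().strip())
    if current != 0:
        raise RuntimeError(
            f"{pf_bdf} already has {current} VFs; write 0 to sriov_numvfs first")

    (pf_dir / "sriov_drivers_autoprobe").write_text("0\n")
    numvfs_file.write_text(f"{num_vfs}\n")


# Example (as root on the host): create_vfs("0000:14:00.2", 1)
```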

Destroying SR-IOV VF Devices

To destroy SR-IOV VF devices on the host, run:

[host]# echo 0 > /sys/bus/pci/drivers/virtio-pci/<virtio_pf_bdf>/sriov_numvfs

When the echo command returns from the host OS, it does not necessarily mean the BlueField side has finished its operations. To verify that the BlueField is done and it is safe to recreate the VFs, either:

Check controller log from the BlueField and make sure you see a log entry similar to the following:

[dpu]# journalctl -u virtio-net-controller.service -n 3 -f

virtio-net-controller[5602]: [INFO] virtnet.c:675:virtnet_device_vfs_unload: static PF[0], Unload (1) VFs finished

Query the last VF from the BlueField side:

[dpu]# virtnet query -p 0 -v 0 -b

{'all': '0x0', 'vf': '0x0', 'pf': '0x0', 'dbg_stats': '0x0', 'brief': '0x1', 'latency_stats': '0x0', 'stats_clear': '0x0'}

{ "Error": "Device doesn't exist" }

Once VFs are destroyed, SFs created for virtio-net from the BlueField side are not destroyed but are saved into the SF pool for reuse later.

All virtio-net devices (static/hotplug PF and VF) support PCIe passthrough to a VM. PCIe passthrough allows the device to get better performance in the VM.

Assigning a virtio-net device to a VM can be done via virt-manager or virsh command.

Locating Virtio-net Devices

All virtio-net devices can be scanned by the PCIe subsystem in the hypervisor OS and are displayed as standard PCIe devices. Run the following command to locate the virtio-net devices and their PCIe BDFs.

[host]# lspci | grep 'Virtio network'

00:09.1 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)

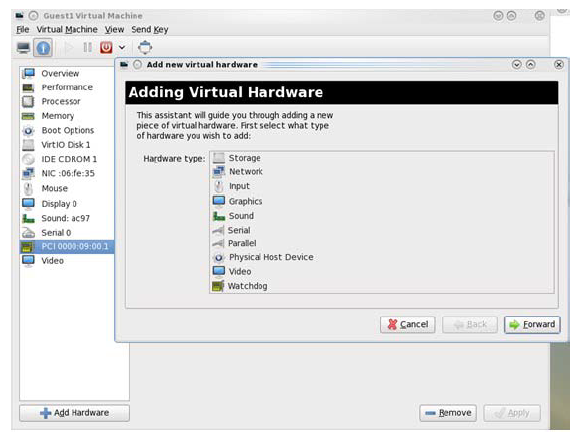

Using virt-manager

To start virt-manager, run the following command:

[host]# virt-manager

Make sure your system has xterm enabled to show the virt-manager GUI.

Double-click the virtual machine and open its Properties. Navigate to Details → Add hardware → PCIe host device.

Choose a virtio-net device virtual function according to its PCIe BDF (e.g., 00:09.1), then reboot or start the VM.

Using virsh Command

Run the following command to get the VM list and select the target VM by Name field:

[host]# virsh list --all

Id Name State

----------------------------------------------

1 host-101-CentOS-8.5 running

To edit the VM's XML file, run:

[host]# virsh edit <VM_NAME>

Assign the target virtio-net device PCIe BDF to the VM, using vfio as the driver. Replace BUS/SLOT/FUNCTION/BUS_IN_VM/SLOT_IN_VM/FUNCTION_IN_VM with the corresponding settings.

<hostdev mode='subsystem' type='pci' managed='no'>

<driver name='vfio'/>

<source>

<address domain='0x0000' bus='<#BUS>' slot='<#SLOT>' function='<#FUNCTION>'/>

</source>

<address type='pci' domain='0x0000' bus='<#BUS_IN_VM>' slot='<#SLOT_IN_VM>' function='<#FUNCTION_IN_VM>'/>

</hostdev>

For example, to assign target device 00:09.1 to the VM so that its PCIe BDF within the VM is 01:00.0:

<hostdev mode='subsystem' type='pci' managed='no'>

<driver name='vfio'/>

<source>

<address domain='0x0000' bus='0x00' slot='0x09' function='0x1'/>

</source>

<address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>

</hostdev>

Destroy the VM if it is already started:

[host]# virsh destroy <VM_NAME>

Start the VM with new XML configuration:

[host]# virsh start <VM_NAME>
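When many devices need to be passed through, the hostdev element described above can be generated from the host and guest BDFs instead of being typed by hand. The following is a minimal sketch using the Python standard library. The function name is hypothetical, PCI domain 0x0000 is assumed on both sides as in the example, and the result still has to be pasted into the VM definition with virsh edit.

```python
import xml.etree.ElementTree as ET


def _address_attrs(bdf: str) -> dict:
    """Split 'bus:slot.function' (optionally prefixed by a domain) into hex attributes."""
    bus, rest = bdf.split(":")[-2:]
    slot, function = rest.split(".")
    return {
        "domain": "0x0000",  # assumption: domain 0, as in the example
        "bus": f"0x{int(bus, 16):02x}",
        "slot": f"0x{int(slot, 16):02x}",
        "function": f"0x{int(function, 16):x}",
    }


def hostdev_xml(host_bdf: str, guest_bdf: str) -> str:
    """Build the <hostdev> element that assigns host_bdf to the VM at guest_bdf."""
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="no")
    ET.SubElement(hostdev, "driver", name="vfio")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(source, "address", _address_attrs(host_bdf))
    ET.SubElement(hostdev, "address", {"type": "pci", **_address_attrs(guest_bdf)})
    return ET.tostring(hostdev, encoding="unicode")


# Host device 00:09.1 appears inside the VM as 01:00.0
print(hostdev_xml("00:09.1", "01:00.0"))
```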