This release includes the following:

E2E Nav-Stack

Lidar Navigation Stack with Odometry; Advanced Path Planner, Speed Reasoner, Behavior Executor, and enabled by Semantic Maps; Onboard Route Planner

Isaac AMR Cloud Services

Mission Control with Cloud based Route Planner (with cuOpt) + Cloud based Map Server

Tools

Isaac Sight (Visualization & Debug)

Data Recorder and upload

Data Replay

Cloud based Semantic Labeling Tool

Nova Sensor Calibration Tool

Flatsim (2D Dev & Debug)

Nova Carter Demo App provides sensors preview

Developer Experience

Run Navigation Python App with various configs (with/without Semantic Map) in Flatsim, Isaac Sim, and on Robot

Use Isaac Sim (packaged as a container): Nova Sensor (subset) Simulation, Carter Robot Simulation, Warehouse Environment, Integration with Isaac E2E Nav-Stack

Platform

Nav-Stack: Supported on Nova Carter provided by Segway-Ninebot; with Jetpack 5.1.2

Isaac Sim: AWS (A10)

Cloud-Stack: CUDA 11 GPU workstation

Nova Carter Init SW update includes new feature - Pre-Flight Checker

The features italicized above are being released as a “preview”.

| Nova Carter Init | 1.1.1 |
| JetPack | 5.1.2 |
| CUDA | 11.4.19 (ARM), 11.8 Ada (x86) |
| Triton | 2.26.0 |
| TensorRT | 8.6.x ARM |
| Argus | 0.99 |
| VPI | 2.3 |
| GXF | 23.05 |
| WiFi FW | v5.14.0.4-43.20230609_beta |
| RMPLite 220 | v2014 |
| HW | Nova Carter |
| HW | AWS (A10) for running Isaac Sim |
| HW | CUDA 11 GPU workstation for running cloud stack |

Target Operational Design Domains (ODDs)

The Navigation App (on a single robot) is tested to operate in the following environments:

Narrow aisles and corridors (~ 3-12 feet wide)

Narrow/Cluttered passages with unmapped obstacles (soft-infrastructure)

Environments with good texture

Environments to avoid:

Large open spaces (such as cross-docking areas in a warehouse; cross-docking areas often undergo rapid changes making maps obsolete, which in turn leads to robots losing their localization)

Environments with poor texture (such as white walls)

Spaces with transparent glass/reflective walls (floor to ceiling)

Spaces with low lying objects

Environments with many dynamic obstacles (high human or other traffic)

Multi-level environments including ramps

Staircases

Controlled access (such as turnstiles, access controlled doors)

Other guidelines to follow:

Do not operate the robot at speeds above 4 km/h (1.1 m/s)

When you bootstrap the robot, do it in a visually distinctive location and avoid environments with repeating patterns (such as aisles or office cubicles)

Avoid operating the robot in elevators and other spots where there’s limited WiFi coverage

Record in an environment with good Wi-Fi coverage and speed. Operating in areas with poor Wi-Fi may cause the user interface to become unresponsive. Refresh the browser if this occurs.

Connect to Wired Ethernet before uploading data.

Cloud Limitation and User Advice

Security

The containers provided with the preview of Isaac Cloud Services are intended for local deployment on a developer workstation with limited external network access. To make the platform easier to understand and evaluate, the services do not mutually authenticate or encrypt the traffic between them. As such, they should not be made routable to the public internet. The intended deployment model is for the robot and workstation to be on a shared VLAN with egress for Deepmap and cuOpt services only (other configurations are possible and will function, subject to the aforementioned threat). Containers should be launched with minimal user permissions.

VDA5050 Support

The GXF Mission Client supports VDA5050 and uses the standard for communication with Mission Dispatch and Mission Control. Map support is pending approval in the VDA5050v3 spec and our Deepmap support is layered on within the 2.0 specification as actions. Interoperability with other VDA5050 services may require falling back to leveraging static maps via URI/file. The OSS released version of Mission Dispatch has had interoperability testing and works with other vendors. We have not verified or certified the additional features in Isaac AMR to work with other vendors’ robots at this time.

Mission Composition

The navigation mission is constructed from a route start location (optional), an end location (optional), a route, and a number of iterations (of the route). The route can be arbitrarily large, but the number of iterations/laps is restricted to 100 at this time to avoid the routing problem.
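The composition rules above can be sketched in Python as a small validated data structure. This is only an illustration: the class name `NavigationMission`, its field names, the `(x, y)` waypoint type, and the requirement that a route be non-empty are all assumptions, not part of the Mission Control API. Only the cap of 100 iterations comes from these notes.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical names for illustration only; not the Mission Control API.
MAX_ITERATIONS = 100  # iteration/lap cap stated in these release notes

Waypoint = Tuple[float, float]  # assumed (x, y) position in map coordinates


@dataclass
class NavigationMission:
    route: List[Waypoint]
    iterations: int = 1
    start: Optional[Waypoint] = None  # optional route start location
    end: Optional[Waypoint] = None    # optional end location

    def __post_init__(self) -> None:
        # Assumption: a mission needs at least one waypoint to be meaningful.
        if not self.route:
            raise ValueError("route must contain at least one waypoint")
        if not 1 <= self.iterations <= MAX_ITERATIONS:
            raise ValueError(
                f"iterations must be between 1 and {MAX_ITERATIONS}, "
                f"got {self.iterations}"
            )


# A two-waypoint route repeated for ten laps, with no explicit start or end.
mission = NavigationMission(route=[(0.0, 0.0), (5.0, 2.0)], iterations=10)
```

Validating the lap count when the mission is built, rather than when it is dispatched, surfaces an out-of-range value before anything is sent to the robot.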

NavStack Limitations and User Advice

Sensor Blind Spot

The Nova Carter’s Lidar sensor has a blind spot zone, depicted in Fig. 2, which can make it difficult to detect low-lying obstacles in close proximity to the robot. The operator can help prevent collisions by watching for low-lying obstacles and other potential hazards that may be in the blind spot of the robot’s Lidar. In future releases, additional sensors such as cameras will be added to the robot to supplement the Lidar’s field of view and improve the robot’s ability to navigate through complex environments.

Fig. 2 Nova Carter blind spot zone

Slow Reaction to Dynamic Obstacles

The NavStack relies on real-time obstacle mapping to determine the best course of action. Without dynamic obstacle prediction, the robot’s reaction to moving objects may be delayed, which can increase the risk of collisions.

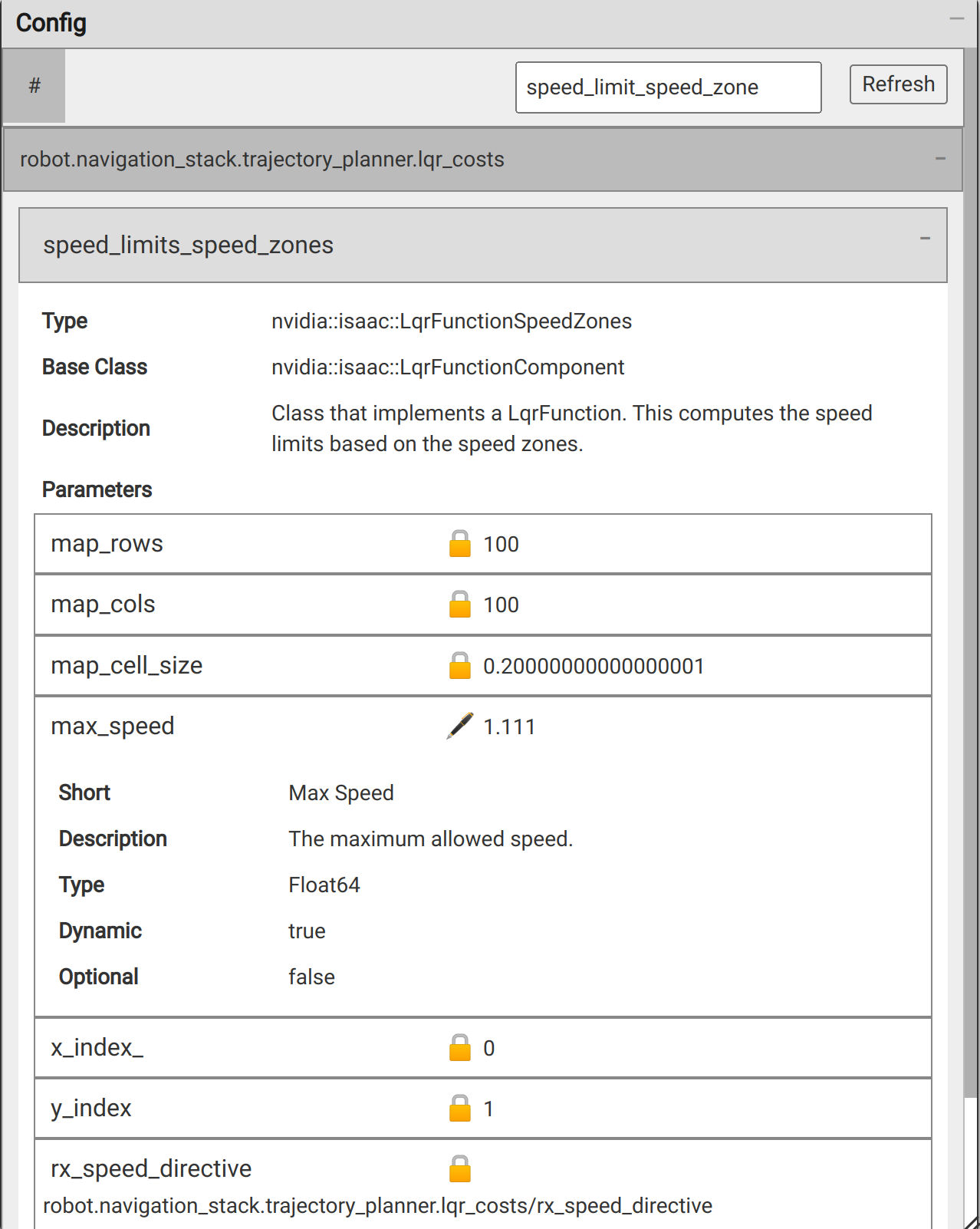

To minimize the risk of collisions with dynamic obstacles, the operator can reduce the robot’s maximum speed by modifying the config in Sight (see Fig. 3) for areas where there is a high chance of encountering moving objects. We recommend that you keep the speed under 1.5 m/s. You can also try to anticipate potential obstacles based on your own observations of the environment and take over control of the robot accordingly.

In future releases, the NavStack will be updated with a speed reasoning logic that will enable the robot to slow down when close to dynamic obstacles and take appropriate action when obstacle prediction is enabled.

Fig. 3 Maximum speed config in Sight

Path Plan Flickers Due to Sensor Noise

To improve the robot’s ability to handle dynamic obstacles, the NavStack is configured to be highly responsive to sensor readings, which may result in more frequent plan adjustments or flickering.

In situations where the robot’s planned path may flicker due to sensor noise, it’s recommended to allow the robot to navigate through the environment at its own pace. The operator can also provide some guidance to the robot, such as using a joystick or other manual control device, to help it stay on track in challenging environments.

In future releases, the NavStack will be upgraded to better understand dynamic obstacles and improve the stability of the robot’s planned path.

Rerouting Failures from Unmapped Blockages

In some cases, the robot may get stuck if it encounters large, unmapped obstacles in tight corridors, for example, aisles. The ability to overcome such blockages by using an adjacent aisle is being worked on, but not fully tested yet.

Fig. 4 Example rerouting failure

Stopping When Obstacles are Close

If the robot detects an obstacle closer than 0.15 m on any side, it stops and no longer moves. This is expected behavior: it is a safety feature and part of SOTIF (Safety of the Intended Functionality).
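As a rough illustration of this stop condition, the sketch below checks a set of obstacle distances against the 0.15 m threshold. The function name `must_stop` and the idea that distances are measured from the robot's outline are assumptions. The NavStack's actual check is not described in these notes.

```python
from typing import Iterable

STOP_DISTANCE_M = 0.15  # proximity threshold from these release notes


def must_stop(obstacle_distances_m: Iterable[float]) -> bool:
    """Return True if any obstacle is closer than the stop distance.

    Distances are assumed to be measured from the robot's outline to the
    nearest point of each detected obstacle, on any side of the robot.
    """
    return any(d < STOP_DISTANCE_M for d in obstacle_distances_m)


# One obstacle at 0.14 m is enough to trigger the stop.
print(must_stop([0.50, 0.14]))
```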

Lidar Based Localization Limitations

Global Localization Failures

The implementation of Lidar-based global localization may not perform well in the following situations:

Repetitive Environments

Fig. 5 Example of repeated environments

In environments with long repetitive aisles (or an office containing cubicles), Lidar-based global localization may have difficulty accurately providing the initial location. To ensure safe operation, it is recommended that you initiate localization from a unique and easily identifiable location. If the vehicle mistakenly bootstraps to an incorrect position, the continuous localization feature can detect this discrepancy while the vehicle is in motion and automatically trigger a rebootstrap request.

Relocated Obstacles and Dynamic Environments

Fig. 6 Example of a large open area (cross-docking space)

The 2D Lidar-based global localization can be very sensitive to changes in the environment, such as relocated static obstacles, including chairs, desks, and containers. Static obstacle changes make global localization difficult and reduce the confidence of continuous localization. This is especially true of large open areas where the map is often rendered obsolete (such as cross-docking areas in a warehouse). To ensure operation in such environments, it is recommended to frequently remap that area or use Teleoperation.

We suggest using one of the following methods for global localization:

Always start the robot in a location with an environment that is unique in the map, and run experiments to ensure that global localization works in this environment.

Provide the current robot location using the Localization Widget.

Camera Based Localization Limitation

Failure in Environments with No Textures

Camera-based localization systems may face difficulties when operating in environments where the camera field of view lacks distinguishable patterns, such as flat painted walls. This lack of visual cues leads to ambiguity and can cause cuVSLAM to fail. To ensure safe operation, you are advised to avoid textureless areas and to avoid modifying the environment. For example, don’t add paintings, stickers, or tags in the environment.

Localization Failure near Glass Walls

The system may face decreased localization performance in the presence of large glass walls.

Mapping Limitations and User Advice

Real Time Feedback during Data Collection and Missing Coverage

The system lacks real-time feedback on the coverage of data collection. To ensure optimal mapping results, it is advised to proactively create a mapping plan using the layout of the environment. In future releases, coverage planning tools to facilitate this process are expected to be introduced. Furthermore, it is worth noting that inadequate coverage of specific sections within the environment may lead to diminished map quality. To mitigate this, we strongly recommend proactive planning based on the building CAD file or other pertinent information.

Loop Closure

Insufficient loop closing can result in map quality issues. To ensure optimal map generation, it is highly recommended to incorporate a higher number of loops during the data collection process. By including more loops, the system can effectively detect and close loops, leading to improved map accuracy and quality.

Occupancy Map May Contain Noise

In some cases, noise artifacts may appear in the occupancy map. They might be the result of dynamic objects or, in some cases, human trails. To ensure map quality and increase system robustness, it is suggested to:

Review 2D Map using the annotation tool and remove such noise if needed to rely on the semantic map.

Use Semantic Map layers to override that noise.

Recorder and Upload Limitations and User Advice

8 Minute Chunks

To keep file sizes manageable, recording files are limited to 8 minutes. Recordings of longer duration are split into multiple files. This makes file upload/download less prone to errors and prevents losing large amounts of data due to file corruption.
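The chunking rule above amounts to a simple ceiling division. The following sketch computes how many files a recording of a given length produces and where each chunk starts and ends. It assumes chunks are cut at exact 8-minute boundaries. The function names are hypothetical and do not come from the Recorder App.

```python
import math
from typing import List, Tuple

CHUNK_SECONDS = 8 * 60  # 8-minute limit per recording file


def chunk_count(recording_seconds: float) -> int:
    """Number of files produced for a recording of the given duration."""
    if recording_seconds <= 0:
        return 0
    return math.ceil(recording_seconds / CHUNK_SECONDS)


def chunk_bounds(recording_seconds: float) -> List[Tuple[float, float]]:
    """(start, end) offsets in seconds for each chunk, assuming exact cuts."""
    bounds = []
    for i in range(chunk_count(recording_seconds)):
        start = i * CHUNK_SECONDS
        end = min(start + CHUNK_SECONDS, recording_seconds)
        bounds.append((start, end))
    return bounds


# A 25-minute recording is split into three full chunks and one partial chunk.
print(chunk_count(25 * 60))
print(chunk_bounds(25 * 60))
```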

Lossy Compression

To reduce file sizes, camera images captured from the HAWK stereo camera are compressed into an H.264 stream. Note that this is a lossy compression that can introduce artifacting in the image. The encoding parameters are adjustable and can be tuned to find a good balance between compression ratio and image quality for a specific use case. Due to limitations in decoding, we only support recording NV12 (YUV420) images from HAWK.

Lag in Camera Stream to GUI for Recorder App

When the robot is taken through areas with poor Wi-Fi connectivity (such as elevators), the connection between the robot and PC may time out. This results in frozen frames on the GUI while the images are buffered on the robot. When the connection is restored, the buffered frames are rapidly sent to the GUI (PC).

Refreshing the browser can help resolve this issue. If the problem persists, please restart the Recorder App.

What Happens to the Recording When the Connection between PC and Robot Is Lost?

If you lose the connection between Nova Carter and UI (on PC), the recording will continue. The connection will need to be reestablished to stop the current recording. If the Nova Carter loses power during recording, previously recorded 8 minute segments are unaffected. The data in the current 8 minute segment is usually okay up until the point of power loss. To be on the safe side, discard the last chunk of data and re-record the last 8 minutes prior to power loss.

Sight Visualization Limitations and User Advice

The amount of data streamed to Sight can quickly become unmanageable, which slows down the frontend and delays rendering. While it is tempting to stream a lot of data for monitoring, you rarely need to look at all of it at once or to save it. It is recommended to enable only the data that you need (for example, the trajectory planner team might not need the full Lidar beams). To quickly enable exactly what you need, first unselect every channel in the left panel, then right-click the renderer you are interested in and select “Enable all channels”.

To mitigate the amount of data streamed to the frontend, it is recommended to downsize some of the information when possible, for example:

Images at full HD will probably take too much bandwidth, especially if you have several cameras. Downsizing to 640x480 is enough to monitor what the robot is seeing while reducing the bandwidth.

For point clouds (such as Lidar), it might be possible to drop a significant number of points. For example, when checking for good localization, 2000 points are not needed; 200 evenly spread points should already provide a good estimate.
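The two reductions above can be sketched in a few lines of Python. The helper `evenly_subsample` is hypothetical and is not a Sight API. It picks evenly spaced indices from an ordered scan. The pixel ratio assumes that full HD means 1920x1080 and compares raw pixel counts only, so the actual bandwidth saving after encoding may differ.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def evenly_subsample(points: Sequence[T], target: int) -> List[T]:
    """Keep about `target` points spread evenly over the input order."""
    if target <= 0:
        return []
    n = len(points)
    if n <= target:
        return list(points)
    # Output slot i takes input index floor(i * n / target).
    return [points[(i * n) // target] for i in range(target)]


# Stand-in for a 2000-point Lidar scan (real points would be coordinates).
scan = list(range(2000))
reduced = evenly_subsample(scan, 200)  # keeps every 10th point

# Raw pixel-count ratio between full HD and 640x480.
pixel_reduction = (1920 * 1080) / (640 * 480)  # 6.75
```

Subsampling by index keeps the angular spread of a rotating Lidar scan, provided the points are stored in scan order, which is assumed here.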

To quickly identify whether bandwidth is an issue, and which channel is responsible for it, open the Channel statistics. They show useful information about the overall system (bandwidth, latency, frame rate) as well as a breakdown for each channel.

Meshes are not well supported yet and are quite slow when they are large. This will improve in the next release, but for now meshes are more of an experimental feature.

Other Known Limitations and User Advice

Unreliable Odometry

With its current size and weight, the robot tends to bounce when moving at speeds higher than 1.8 m/s. This affects the odometry of the robot, which can result in localization failures. We have therefore limited the speed of the robot to:

speed_limit_linear: 1.1 # [m/s]

speed_limit_angular: 1.0 # [rad/s]
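To show what these two limits mean for a velocity command, the sketch below clamps a requested linear and angular speed to the configured values. It assumes the limits apply symmetrically to the magnitude in both directions. The function names are hypothetical, and this is not how the NavStack itself enforces the limits.

```python
from typing import Tuple

SPEED_LIMIT_LINEAR = 1.1   # [m/s], from the config above
SPEED_LIMIT_ANGULAR = 1.0  # [rad/s], from the config above


def clamp(value: float, limit: float) -> float:
    """Restrict value to the symmetric range [-limit, +limit]."""
    return max(-limit, min(limit, value))


def clamp_command(linear: float, angular: float) -> Tuple[float, float]:
    """Clamp a (linear, angular) velocity command to the configured limits."""
    return (
        clamp(linear, SPEED_LIMIT_LINEAR),
        clamp(angular, SPEED_LIMIT_ANGULAR),
    )


# A request of 1.8 m/s and -2.0 rad/s is reduced to the configured limits.
print(clamp_command(1.8, -2.0))
```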

Isaac Sim Memory Leak

There is a known memory leak in Isaac Sim when the Quick Search and Curve Editor extensions are enabled. These extensions are automatically disabled on Isaac Sim start when running manually or via Python. Note that, if either extension is enabled, Isaac Sim memory consumption may grow by 50% over 24 hours.

Explanations for Warning and Error Logs

LQR Solver

WARN gems/lqr/lqr_solver.cpp@207: Reached the end of the iteration loop, but were still making progress.

(or)

2023-07-17 12:20:01.426 ERROR gems/lqr/lqr_solver.cpp@157: Failed constraints at 47:

0.00123937 -2.22124 -1.59499 -1.41901

2023-07-17 12:20:01.530 ERROR gems/lqr/lqr_solver.cpp@157: Failed constraints at 47:

0.00131101 -2.22131 -1.57649 -1.43751

2023-07-17 12:20:02.019 ERROR gems/lqr/lqr_solver.cpp@157: Failed constraints at 48:

0.00137276 -2.22137 -1.61296 -1.40104

Explanation

Sometimes the solver has to return before it finishes converging so that it does not exceed its time limit. When this happens, a warning is printed to inform the user that the quality of the trajectory might be suboptimal. On rare occasions, some constraints might have been slightly violated (in the example above, the speed limit is exceeded by about 1 mm/s). In that case, the trajectory is discarded and a new trajectory is computed in the next iteration.
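When reviewing logs, it can help to pull these entries out and see how large the reported values are. The sketch below parses the "Failed constraints" entries in the format shown above. It assumes that each header line is followed by one line of whitespace-separated numbers and that the largest value in a row is the one of interest, which is a guess based on the 1 mm/s remark rather than a documented convention. The function name is hypothetical.

```python
import re
from typing import List, Tuple

# Matches the header line of a "Failed constraints" entry as shown above.
HEADER = re.compile(
    r"ERROR gems/lqr/lqr_solver\.cpp@\d+: Failed constraints at (\d+):"
)


def parse_failed_constraints(log_text: str) -> List[Tuple[int, List[float]]]:
    """Return (index, values) for each 'Failed constraints' log entry."""
    results: List[Tuple[int, List[float]]] = []
    pending_index = None
    for raw_line in log_text.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # tolerate blank lines between header and values
        match = HEADER.search(line)
        if match:
            pending_index = int(match.group(1))
            continue
        if pending_index is not None:
            try:
                values = [float(token) for token in line.split()]
            except ValueError:
                values = []  # not a values line; drop this entry
            if values:
                results.append((pending_index, values))
            pending_index = None
    return results


sample = """\
2023-07-17 12:20:01.426 ERROR gems/lqr/lqr_solver.cpp@157: Failed constraints at 47:
0.00123937 -2.22124 -1.59499 -1.41901
2023-07-17 12:20:02.019 ERROR gems/lqr/lqr_solver.cpp@157: Failed constraints at 48:
0.00137276 -2.22137 -1.61296 -1.40104
"""

for index, values in parse_failed_constraints(sample):
    # Assumption: the largest entry is the one that exceeded its bound.
    print(index, max(values))
```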

Argus Camera

2023-07-13 22:41:26.093 ERROR extensions/hawk/argus_camera.cpp@173: Error while listing camera devices: Failed to get ICameraProvider interface

sh: 1: sudo: not found

Explanation

Failure to get ICameraProvider interface can happen for a number of reasons, for example an unplugged Camera or bad connection, a misconfigured Nova Carter, or in some cases an improper shutdown of a previous application.

The first thing to try would be restarting the argus daemon:

sudo systemctl restart nvargus-daemon.service

Then try the application again. If that fails, the recommended course of action is to check the system’s camera connectors and then power cycle the vehicle.

Unplugging and re-plugging the GMSL connectors on the camera is not suggested as the connectors are very sensitive and are prone to faults/mechanical failures if not handled correctly.