Overview

Welcome to the trial of NVIDIA AI Workflows on NVIDIA LaunchPad.

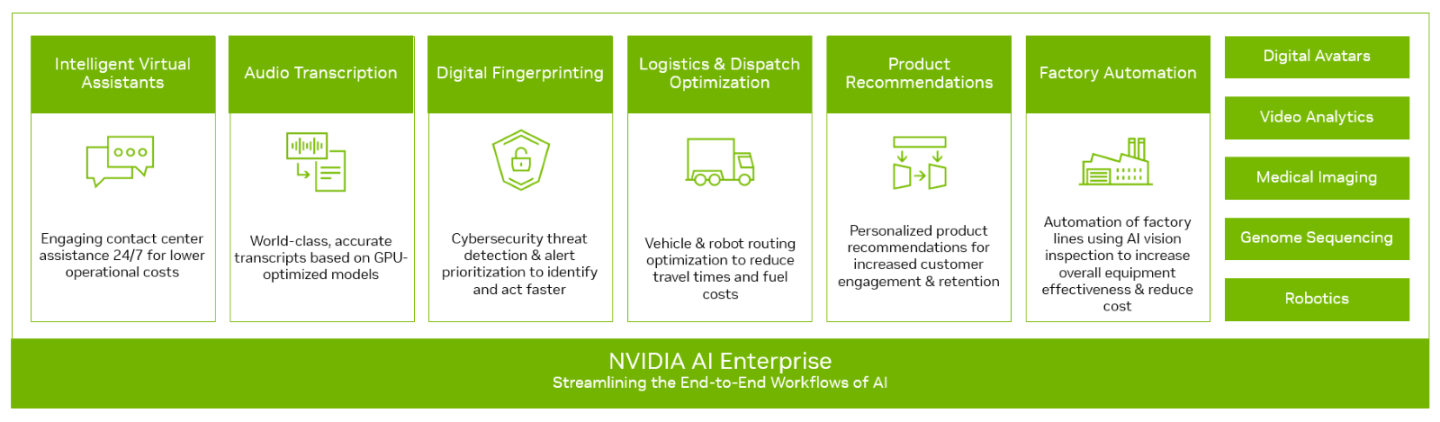

NVIDIA AI Workflows are available as part of NVIDIA AI Enterprise – an end-to-end, secure, cloud-native suite of AI software, enabling organizations to solve new challenges while increasing operational efficiency. Organizations start their AI journey by using the open, freely available NGC libraries and frameworks to experiment and pilot. When they’re ready to move from pilot to production, enterprises can easily transition to a fully managed and secure AI platform with an NVIDIA AI Enterprise subscription. This gives enterprises deploying business-critical AI the assurance of business continuity with NVIDIA Enterprise Support and access to NVIDIA AI experts.

Within this LaunchPad lab, you will gain experience with AI workflows that can accelerate your path to AI outcomes. These are packaged AI workflow examples that include NVIDIA SDKs, AI frameworks, and pre-trained models, as well as resources such as Helm charts, Jupyter notebooks, and documentation to help you get started building AI-based solutions. NVIDIA’s cloud-native AI workflows run as microservices that can be deployed on Kubernetes alone or with other microservices to create production-ready applications.

Key Benefits:

Reduce development time at a lower cost

Improve accuracy and performance

Gain confidence in outcomes by leveraging NVIDIA expertise

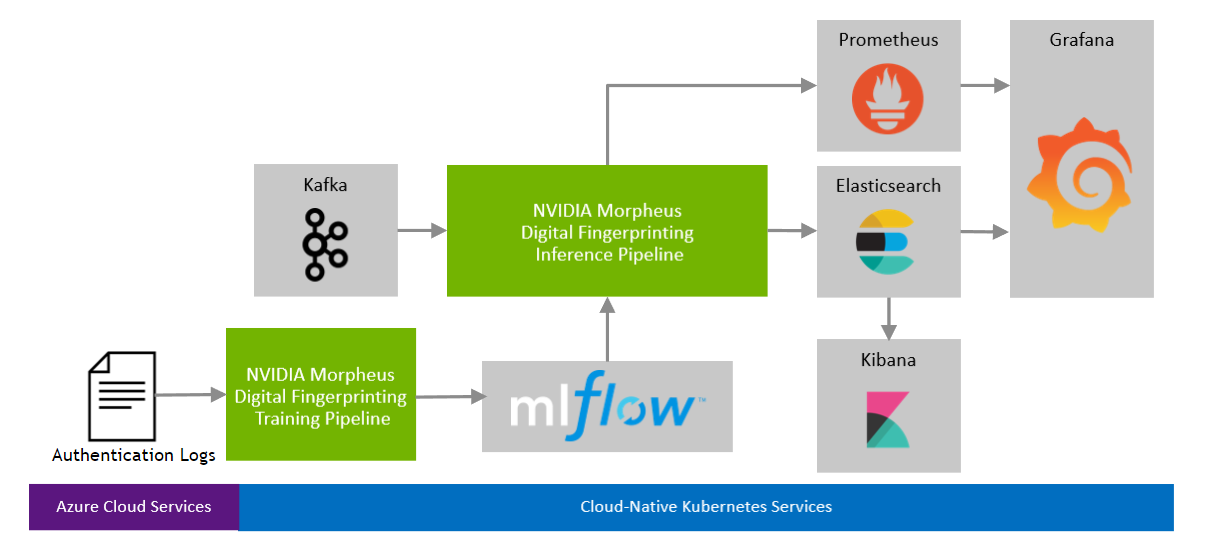

Within this lab, you will use the digital fingerprinting workflow, which illustrates how to use NVIDIA Morpheus, a cybersecurity AI framework, to build models and pipelines that pull large volumes of user login data from Azure Cloud Services logs, filter and process that data, and assign each login a risk score (a z-score), all in real time. Logins with anomalous scores are then flagged and presented to the user through example dashboards. A subset of the user login data is used to regularly retrain and fine-tune the models so that they keep pace with changes in user behavior.
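To make the risk score more concrete, here is a minimal sketch of one common way a z-score can be derived and thresholded: raw per-event anomaly scores (for example, an autoencoder's reconstruction error) are standardized against the mean and standard deviation of scores observed on training data, and events above a threshold are flagged. This is a hypothetical illustration in plain Python, not Morpheus code; the function names `fit_baseline`, `z_score`, and `flag` and the default threshold of 3.0 are assumptions.

```python
import math
from statistics import mean, pstdev


def fit_baseline(training_scores):
    """Summarize anomaly scores from (assumed normal) training data as (mean, std)."""
    return mean(training_scores), pstdev(training_scores)


def z_score(score, baseline):
    """Standardize one raw score against the baseline: z = (score - mean) / std."""
    mu, sigma = baseline
    if sigma == 0:
        # Degenerate baseline: any deviation is treated as infinitely far away.
        return 0.0 if score == mu else math.copysign(math.inf, score - mu)
    return (score - mu) / sigma


def flag(scores, baseline, threshold=3.0):
    """Return (score, z, is_flagged) for each new score.

    Only high scores are flagged, on the assumption that a large score means
    the event looks unlike the training data. The 3.0 default is hypothetical.
    """
    results = []
    for s in scores:
        z = z_score(s, baseline)
        results.append((s, z, z > threshold))
    return results
```

In this sketch, new scores are standardized against the training baseline rather than against the incoming batch itself, so a burst of anomalous events cannot inflate the baseline and hide itself.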

To assist you in your LaunchPad journey, there are a couple of important links on the left-hand navigation pane of this page. In the following steps, you will use the SSH link.

NVIDIA AI Workflows are intended to provide reference solutions that show how to use NVIDIA frameworks to build AI solutions for common use cases. Each workflow guides you through AI model creation and fine-tuning on top of NVIDIA frameworks, highlights the pipelines used to create applications, and gives guidance on deploying customized applications and integrating them with components typically found in enterprise environments, such as orchestration and management, storage, security, and networking.

NVIDIA AI Workflows are available on NVIDIA NGC for NVIDIA AI Enterprise software customers.

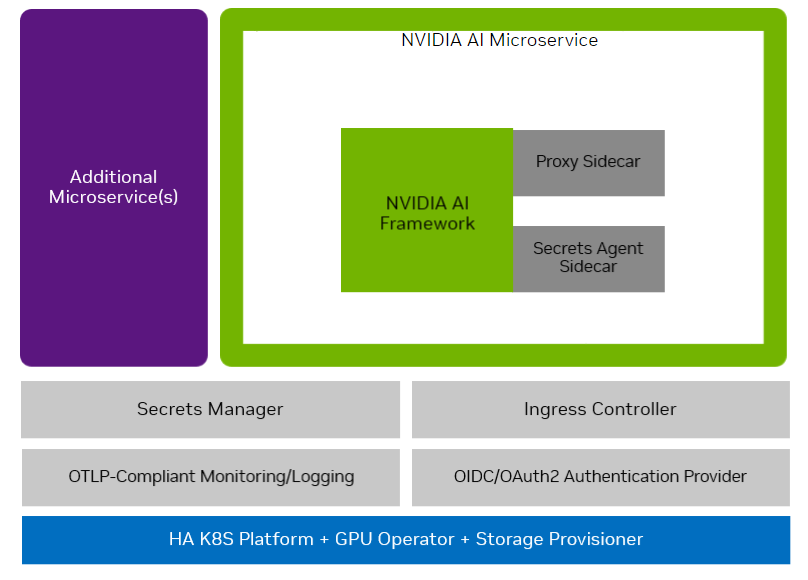

NVIDIA AI Workflows are deployed as a package containing the AI framework and tools for automating a cloud-native solution. They also include packaged, enterprise-ready implementations that follow best practices for reliability, security, performance, scalability, and interoperability, while leaving you free to deviate from them where your requirements differ.

A typical workflow may look like the following diagram:

The components and instructions used in the workflow are intended as examples for integration and may not be sufficiently production-ready on their own. Use the workflow as a reference, and customize and integrate it into your own infrastructure. For example, all of the instructions in these workflows assume a single-node infrastructure, whereas production deployments should be performed in a high-availability (HA) environment.

Implementing a digital fingerprinting AI workflow for cybersecurity enables organizations to deeply analyze every account login across the network. AI performs massive data filtration and reduction for real-time threat detection by identifying user-specific anti-patterns in behavior, rather than relying on traditional methods that apply generalized organizational rules. With digital fingerprinting, a model is created for every individual user across an enterprise, and it flags when user and machine activity patterns shift. Security analysts can then rapidly identify critical behavior anomalies and react to threats quickly.
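The difference between per-user models and a single organizational rule can be illustrated with a deliberately simplified sketch. Here the "model" for each user is just the mean and standard deviation of that user's past login hours (treated as plain numbers, ignoring wraparound at midnight), whereas the actual workflow trains much richer models on many features. The function names, the choice of feature, the threshold, and the policy of flagging users with no history are all hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def fit_per_user(history):
    """Build a hypothetical per-user baseline {user: (mean, std)}.

    history: iterable of (user, value) pairs, e.g. login hour of day.
    """
    by_user = defaultdict(list)
    for user, value in history:
        by_user[user].append(value)
    return {user: (mean(values), pstdev(values)) for user, values in by_user.items()}


def is_anomalous(user, value, models, threshold=3.0, min_std=1e-6):
    """Flag a login that deviates from *that user's* baseline by more than
    `threshold` standard deviations, in either direction."""
    if user not in models:
        # Assumed policy for this sketch: users with no history are flagged.
        return True
    mu, sigma = models[user]
    # min_std avoids division by zero for users whose history never varies.
    return abs(value - mu) / max(sigma, min_std) > threshold


if __name__ == "__main__":
    history = [("alice", 9), ("alice", 10), ("alice", 9), ("alice", 10),
               ("bob", 22), ("bob", 23), ("bob", 22), ("bob", 23)]
    models = fit_per_user(history)
    print(is_anomalous("alice", 22, models))  # True
    print(is_anomalous("bob", 22, models))    # False
```

With this history, one user normally logs in around 09:30 and the other around 22:30, so a 22:00 login is flagged for the first user but not the second. A single global rule such as "flag logins after 18:00" cannot express that distinction.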

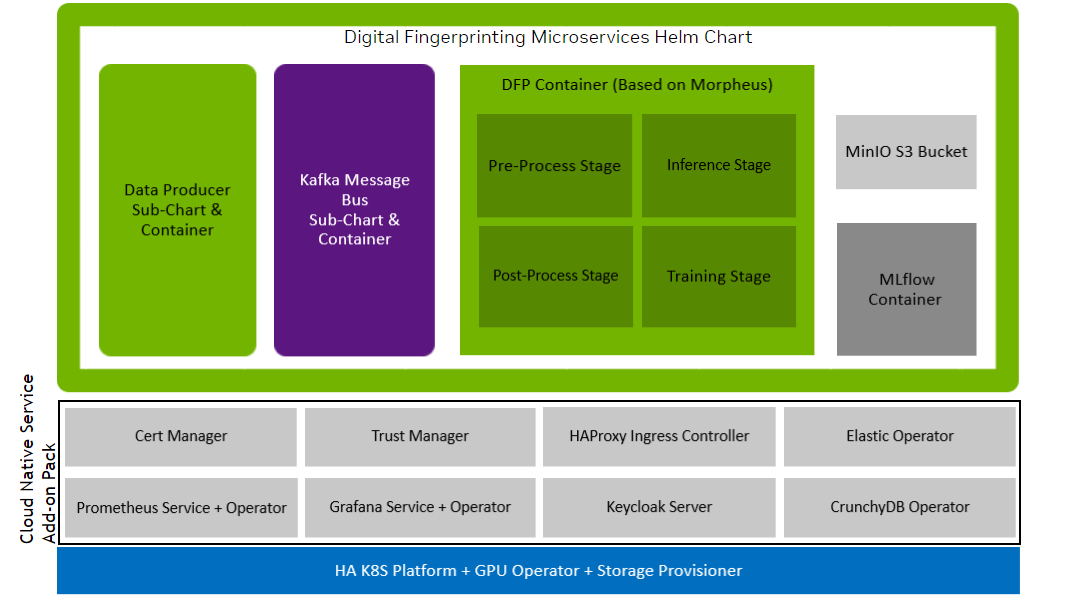

This NVIDIA AI Workflow contains the following:

Training and inference pipelines for Digital Fingerprinting.

A production solution deployment reference, including components for authentication, logging, and monitoring of the workflow.

A cloud-native deployable bundle packaged as Helm charts.

Using the above assets, this NVIDIA AI Workflow provides a reference for building your own AI solution with minimal preparation. It includes enterprise-ready implementation best practices covering authentication, monitoring, reporting, and load balancing, helping you achieve the desired AI outcome faster while still leaving room to deviate from the reference.

NVIDIA AI Workflows are designed as microservices. They can be deployed on Kubernetes alone or with other microservices to create a production-ready application for seamless scaling in your enterprise environment.

The following cloud-native Kubernetes services are used with this workflow:

NVIDIA Morpheus

MLflow

Kafka

Prometheus

Elasticsearch

Kibana

Grafana

S3-compatible object storage

These components are used to build and deploy the training and inference pipelines, integrated with the additional components shown in the diagram below: