Step #2: Start the Triton Inference Server

As noted previously, the Triton Inference Server Kubernetes Deployment object was already deployed to the cluster as part of the Helm chart installation, but its pod has not been started yet. Now that we have saved the model in Step #1 of the lab (the Jupyter notebook), let's start the Triton Inference Server pod as part of the model deployment. Because this environment has only a single GPU, we first need to scale down the training Jupyter notebook pod.

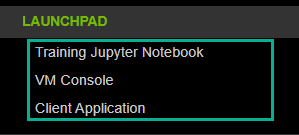

Using the System Console link in the left-hand navigation pane, open the System Console. You will use it to start the Triton Inference Server pod.

Using the command below, scale the Jupyter notebook deployment down to zero replicas.

```shell
oc scale deployments image-classification --replicas=0 -n classification-namespace
```

Wait a few seconds for the Jupyter pod to terminate and release the GPU, then scale up the Triton Inference Server deployment using the following command.

```shell
oc scale deployments image-classification-tritonserver-image-classification --replicas=1 -n classification-namespace
```
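
The fixed "wait a few seconds" pause can be replaced by a loop that waits until the notebook pod is actually gone before Triton is started. This is a hypothetical sketch, not part of the lab: the helper names `notebook_pods` and `swap_gpu_to_triton`, the timeout defaults, and the assumption that notebook pods follow the usual Deployment naming `image-classification-<hash>-<suffix>` are all illustrative.

```shell
# Hypothetical helpers (not part of the lab): paste into a Bash session to define them.
NAMESPACE=classification-namespace
NOTEBOOK_DEPLOYMENT=image-classification
TRITON_DEPLOYMENT=image-classification-tritonserver-image-classification

# List notebook pods (Terminating ones included) as "pod/<name>".
# Assumes Deployment-style names "image-classification-<hash>-<suffix>";
# the Triton pods share that prefix, so they are filtered out explicitly.
notebook_pods() {
  oc get pods -n "$NAMESPACE" -o name |
    awk -v prefix="pod/$NOTEBOOK_DEPLOYMENT-" -v skip="$TRITON_DEPLOYMENT" \
      'index($0, prefix) == 1 && index($0, skip) == 0'
}

# Scale the notebook to zero, wait until its pod is gone (GPU released),
# then scale Triton to one replica.
# Usage: swap_gpu_to_triton [timeout_seconds] [poll_interval_seconds]
# The defaults (300 s and 5 s) are illustrative, not values from the lab.
swap_gpu_to_triton() {
  local timeout=${1:-300} interval=${2:-5} elapsed=0
  oc scale deployments "$NOTEBOOK_DEPLOYMENT" --replicas=0 -n "$NAMESPACE" || return 1
  while [ -n "$(notebook_pods)" ]; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "Notebook pod still present after ${timeout}s" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  oc scale deployments "$TRITON_DEPLOYMENT" --replicas=1 -n "$NAMESPACE"
}
```

Running `swap_gpu_to_triton` would take the place of the two `oc scale` commands; it returns a non-zero status if the notebook pod is still listed when the timeout expires, so Triton is never scaled up while the GPU may still be in use.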

Check the status of the Triton Inference Server pod periodically using the command below, and proceed to the next step only once the pod is in the Running state. Pulling the Triton Inference Server container image from NGC can take a few minutes.

```shell
oc get pods -A | grep triton
```
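
Instead of re-running the status command by hand, you could poll the pod phase in a loop. This is a minimal sketch under stated assumptions: `wait_for_triton_running` and its default timeout and poll interval are hypothetical, and it assumes the first pod in `classification-namespace` whose name contains `triton` is the Triton pod. It reads the phase with `oc get <pod> -o jsonpath='{.status.phase}'`.

```shell
# Hypothetical helper (not part of the lab): paste into a Bash session to define it.
NAMESPACE=classification-namespace

# Poll until the first pod whose name contains "triton" reports phase Running.
# Usage: wait_for_triton_running [timeout_seconds] [poll_interval_seconds]
# The defaults (600 s and 10 s) are illustrative, not values from the lab.
wait_for_triton_running() {
  local timeout=${1:-600} interval=${2:-10} elapsed=0 pod phase
  while [ "$elapsed" -lt "$timeout" ]; do
    # "oc get pods -o name" prints one "pod/<name>" per line.
    pod=$(oc get pods -n "$NAMESPACE" -o name | awk '/triton/ { print; exit }')
    if [ -n "$pod" ]; then
      phase=$(oc get "$pod" -n "$NAMESPACE" -o jsonpath='{.status.phase}')
      echo "$pod: $phase"
      if [ "$phase" = "Running" ]; then
        return 0
      fi
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "Timed out waiting for the Triton pod to reach Running" >&2
  return 1
}
```

For example, `wait_for_triton_running && echo "Triton pod is Running"`. A Running phase only means the container has started; the model may still be loading, which is what the log check confirms.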

Once the pod is in the Running state, you can check its logs by running the command below.

```shell
oc logs name_of_the_triton_pod_from_previous_command -n classification-namespace
```
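
To avoid copying the pod name by hand, a small wrapper can look it up and print its logs. This is only a sketch: `find_triton_pod` and `show_triton_logs` are hypothetical helpers, and they assume the Triton pod is the first pod in `classification-namespace` whose name contains `triton`. `oc get pods -o name` prints each pod as `pod/<name>`, a form `oc logs` also accepts.

```shell
# Hypothetical helpers (not part of the lab): paste into a Bash session to define them.
NAMESPACE=classification-namespace

# Print the first pod in $NAMESPACE whose name contains "triton", as "pod/<name>".
find_triton_pod() {
  oc get pods -n "$NAMESPACE" -o name | awk '/triton/ { print; exit }'
}

# Print that pod's logs, or fail with a message if no Triton pod exists yet.
show_triton_logs() {
  local pod
  pod=$(find_triton_pod)
  if [ -z "$pod" ]; then
    echo "No Triton pod found in $NAMESPACE" >&2
    return 1
  fi
  oc logs -n "$NAMESPACE" "$pod"
}
```

For example, `show_triton_logs | grep mobilenet_classifier` narrows the output to the lines about the lab's model.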

In the console output, notice that the Triton model repository contains the mobilenet_classifier model saved from the Jupyter notebook, and that its status is Ready.
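
If you would rather check this from a script than by reading the output, the sketch below scans log text for the model-status table. It assumes the table layout Triton typically prints at startup, with rows such as `| mobilenet_classifier | 1 | READY |`; the layout may differ between Triton versions, and `model_ready_in_logs` is a hypothetical helper name.

```shell
# Hypothetical helper (not part of the lab): paste into a Bash session to define it.
# Reads Triton log text on stdin; succeeds only if the model-status table has a
# row for the given model (default: mobilenet_classifier) whose status is READY.
# Assumes rows shaped like "| <model> | <version> | <status> |".
model_ready_in_logs() {
  local model=${1:-mobilenet_classifier}
  awk -F'|' -v model="$model" '
    { name = $2; status = $4
      gsub(/ /, "", name); gsub(/ /, "", status) }
    name == model && status == "READY" { found = 1 }
    END { exit !found }'
}
```

For example, `oc logs name_of_the_triton_pod_from_previous_command -n classification-namespace | model_ready_in_logs && echo "mobilenet_classifier is READY"`.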