MLflow

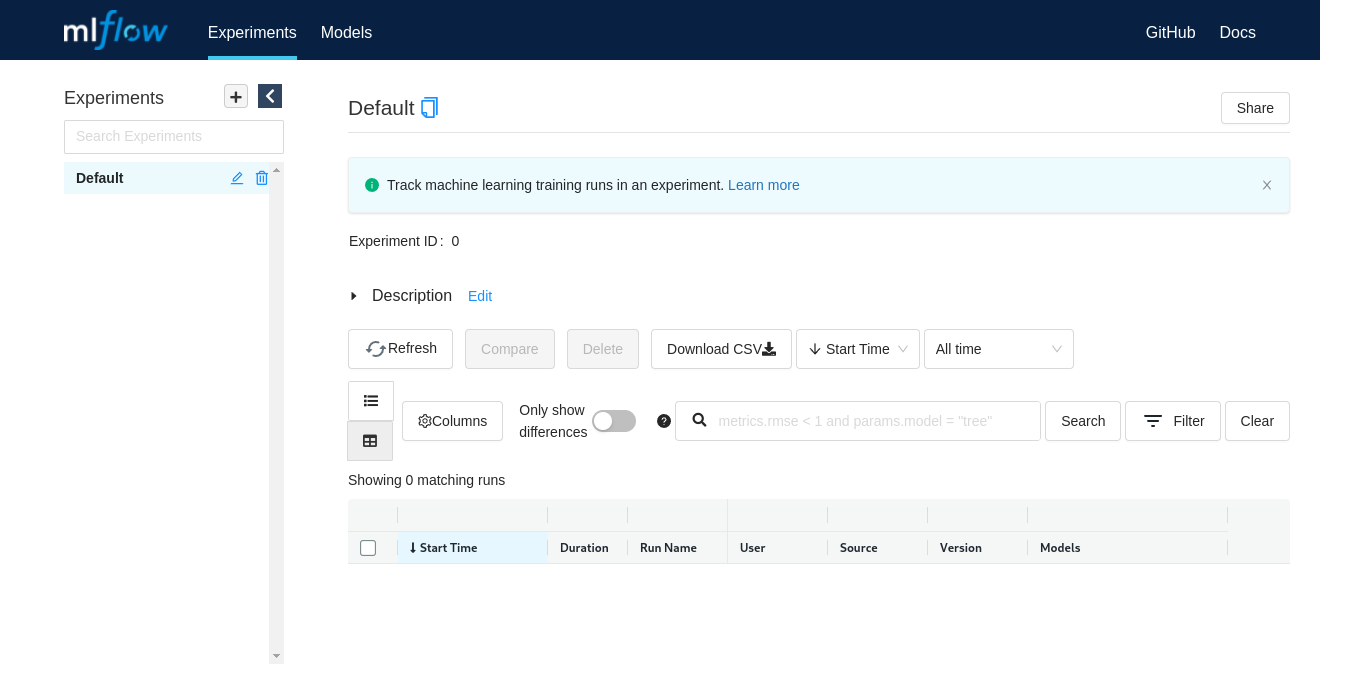

Morpheus uses NVIDIA Triton™ Inference Server to perform real-time inference across massive amounts of cybersecurity data. The Morpheus MLflow Triton Plugin is used to deploy, update, and remove models from the Morpheus AI Engine. This environment includes an MLflow server for deploying models to the Triton server, and the Triton plugins are already pre-installed on it.

If necessary, you can connect directly to the MLflow container to execute MLflow commands. This can be done from the JupyterLab environment.

From the JupyterLab UI, click the File dropdown on the top left. Navigate to New and select Terminal.

Enter the command below into the terminal.

kubectl exec -it deploy/mlflow -- /bin/bash

Once you have a shell on the MLflow pod, you can use the mlflow CLI tool to create, modify, and delete deployments. See the provided examples for more information. You can also use the MLflow UI to view the status of MLflow deployments and the library of imported models.
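As a rough illustration of the create, modify, and delete cycle from the shell on the MLflow pod, the sketch below walks through the `mlflow deployments` subcommands against the `triton` target. The model name `my-model`, the version numbers, and the `version=...` config value are hypothetical placeholders, and the `run` helper is not part of MLflow; it is added here only so the commands are printed rather than executed by default. The exact deployment name format expected by the Triton plugin may differ in your environment, so treat this as a starting point rather than a definitive procedure.

```bash
#!/usr/bin/env bash
# Sketch of a deployment lifecycle, intended for the shell inside the MLflow pod.
# MODEL_NAME and the version numbers are hypothetical placeholders; substitute a
# model that is already registered in your MLflow server.
MODEL_NAME="${MODEL_NAME:-my-model}"

# Hypothetical helper: print each command instead of running it unless
# DRY_RUN=0 is set, so the sequence can be reviewed before Triton is changed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Deploy version 1 of the registered model to Triton.
run mlflow deployments create -t triton --flavor triton \
  --name "$MODEL_NAME" -m "models:/$MODEL_NAME/1" -C "version=1"

# List the deployments known to the Triton target.
run mlflow deployments list -t triton

# Inspect a single deployment.
run mlflow deployments get -t triton --name "$MODEL_NAME"

# Point the deployment at version 2 of the model.
run mlflow deployments update -t triton --flavor triton \
  --name "$MODEL_NAME" -m "models:/$MODEL_NAME/2" -C "version=2"

# Remove the deployment.
run mlflow deployments delete -t triton --name "$MODEL_NAME"
```

Running the script as written only echoes the commands. Setting `DRY_RUN=0` and `MODEL_NAME` to a registered model executes them for real.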

To open the MLflow server UI, use the link in the left-hand navigation pane.