Step #1: Training the Sentiment Analysis Model

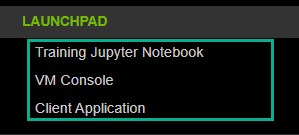

Throughout the Sentiment Analysis lab, you will use three key links in the left-hand navigation pane: the Training Jupyter Notebook link for Sentiment Model Training (Step #1), the VM Console for Triton Inference Server Model Deployment (Step #2), and the Client Application for Step #3.

In the first part of this lab, you will work through a Jupyter Notebook and get familiar with cuDF, the RAPIDS GPU DataFrame library, which offers a pandas-like API that runs on the GPU. You will use cuDF to load data into GPU memory and clean it, and then use the cleaned data to train a PyTorch BERT sentiment analysis model.
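A minimal sketch of the ingest-and-clean step might look like the following. It assumes hypothetical column names `review_text` and `rating` and a few illustrative cleanup rules (lower-casing, stripping HTML tags and punctuation, dropping empty rows); the notebook's actual columns and rules may differ. It imports cuDF when it is available and otherwise falls back to pandas, since the DataFrame and `.str` calls used here exist in both libraries.

```python
try:
    import cudf as xdf  # RAPIDS GPU DataFrame library
except ImportError:
    import pandas as xdf  # CPU fallback; same API for the calls below


def clean_reviews(df):
    """Illustrative cleanup; column names and rules are assumptions."""
    # Drop rows missing the text or the label (hypothetical column names)
    df = df.dropna(subset=["review_text", "rating"])
    text = df["review_text"].str.lower()
    text = text.str.replace(r"<[^>]+>", " ", regex=True)     # strip HTML tags
    text = text.str.replace(r"[^a-z0-9' ]", " ", regex=True)  # strip punctuation
    text = text.str.replace(r"\s+", " ", regex=True).str.strip()  # collapse spaces
    df = df.assign(review_text=text)
    # Remove reviews that became empty after cleaning
    return df[df["review_text"].str.len() > 0]


sample = xdf.DataFrame({
    "review_text": ["Great <b>product</b>!!", None, "  Broke after 2 days.  ", "???"],
    "rating": [5, 1, 1, 3],
})
cleaned = clean_reviews(sample)
print(cleaned)
```

On real data you would load the file first, for example with `xdf.read_csv(...)`, and pass the resulting DataFrame to `clean_reviews`. Keeping the cleanup as one function over the DataFrame lets the same code run on GPU or CPU.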

To get started, open the Sentiment Analysis Training Jupyter Notebook and run through it to train a BERT model on the Amazon Reviews dataset. Tokenization and data cleanup are done with RAPIDS.
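BERT models consume subword tokens from a WordPiece vocabulary, wrapped in `[CLS]`/`[SEP]` markers and padded to a fixed length with an attention mask. The notebook does this with RAPIDS on the GPU; the pure-Python sketch below only illustrates the greedy longest-match-first WordPiece idea and is not the RAPIDS implementation. The tiny `VOCAB`, the `max_len` of 8, and the simple whitespace splitting (real BERT tokenizers also split punctuation) are hypothetical simplifications.

```python
from typing import Dict, List, Tuple

# Hypothetical toy vocabulary; real BERT vocabularies have ~30k entries
VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3,
         "great": 4, "product": 5, "broke": 6, "after": 7,
         "day": 8, "##s": 9, "2": 10}


def wordpiece(word: str, vocab: Dict[str, int], unk: str = "[UNK]") -> List[str]:
    """Split one word by greedy longest-match-first lookup."""
    tokens: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry "##"
            if candidate in vocab:
                piece = candidate
                break
            end -= 1  # shrink the candidate and try again
        if piece is None:
            return [unk]  # an unmatchable span makes the whole word unknown
        tokens.append(piece)
        start = end
    return tokens


def encode(text: str, vocab: Dict[str, int], max_len: int = 8) -> Tuple[List[int], List[int]]:
    """Return padded token ids and the matching attention mask."""
    pieces = ["[CLS]"]
    for word in text.lower().split():
        pieces.extend(wordpiece(word, vocab))
    pieces = pieces[: max_len - 1] + ["[SEP]"]  # truncate, then close the sequence
    ids = [vocab[p] for p in pieces]
    mask = [1] * len(ids)  # 1 = real token, 0 = padding
    pad = max_len - len(ids)
    return ids + [vocab["[PAD]"]] * pad, mask + [0] * pad


print(encode("broke after 2 days", VOCAB))
```

Here "days" is not in the vocabulary, so it is split into `day` and `##s`; the ids and mask are the kind of fixed-shape inputs a BERT classifier is trained on.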

To run a cell in the Jupyter Notebook, click the cell and press Shift + Enter. You can also run Linux bash commands from a notebook cell by prefixing the command with an exclamation mark (!), for example `!nvidia-smi`.