Live Migration

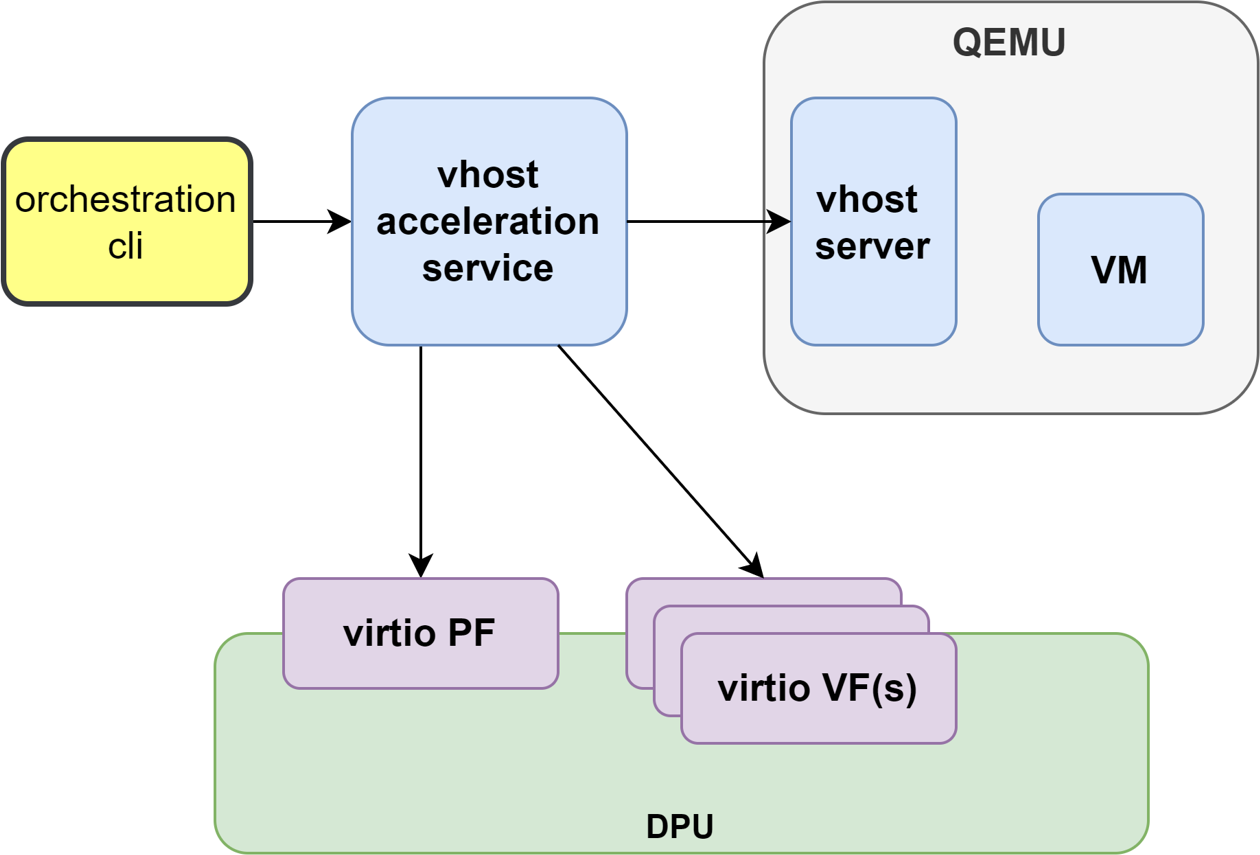

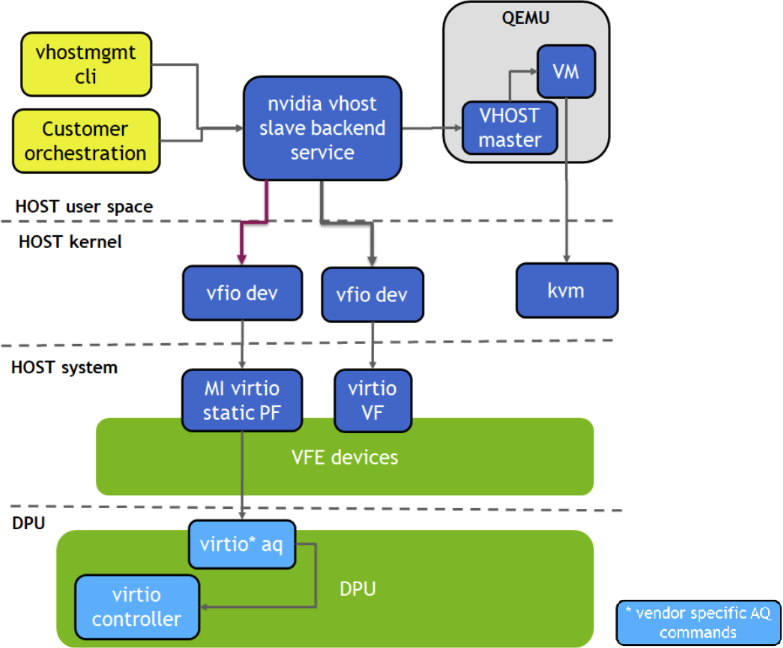

Virtio VF PCIe devices can be attached to the guest VM using the vhost acceleration software stack. This enables performing live migration of guest VMs.

This section provides the steps to enable VM live migration using virtio VF PCIe devices along with vhost acceleration software.

Prerequisites

Minimum hypervisor kernel version – Linux kernel 5.7 (for VFIO SR-IOV support)

To use high availability (the additional vfe-vhostd-ha service, which keeps the datapath running if vfe-vhostd crashes), this kernel patch must be applied.
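The kernel requirement above can be checked before going further. The following is a minimal sketch, assuming GNU `sort` with version sorting (`-V`) is available; the function name `version_at_least` is illustrative and not part of the vhost acceleration software.

```shell
#!/usr/bin/env bash
# Return success if version $1 is greater than or equal to version $2.
# Relies on the -V (version sort) option of GNU sort.
version_at_least() {
    local have="$1" want="$2"
    [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}

# Drop any distribution suffix (for example "-91-generic") from the release string.
running="$(uname -r | cut -d- -f1)"

if version_at_least "$running" 5.7; then
    echo "kernel $running: meets the 5.7 minimum"
else
    echo "kernel $running: older than 5.7" >&2
fi
```

This only compares version numbers. It does not detect whether the high-availability kernel patch has been applied.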

Install vHost Acceleration Software Stack

The vhost acceleration software stack is built on open-source, BSD-licensed DPDK.

To install vhost acceleration software:

Clone the software source code:

[host]# git clone https://github.com/Mellanox/dpdk-vhost-vfe

Info: The latest release tag is vfe-24.07-rc2.

Build software:

[host]# apt-get install libev-dev -y

[host]# apt-get install libev-libevent-dev -y

[host]# apt-get install uuid-dev -y

[host]# apt-get install libnuma-dev -y

[host]# meson build --debug -Denable_drivers=vdpa/virtio,common/virtio,common/virtio_mi,common/virtio_ha

[host]# ninja -C build install

To install QEMU:

Info: Either upstream QEMU later than 8.1 or the following NVIDIA QEMU can be used.

Clone NVIDIA QEMU sources.

[host]# git clone https://github.com/Mellanox/qemu -b stable-8.1-presetup

Info: The latest release tag is vfe-0.6.

Build NVIDIA QEMU.

[host]# mkdir bin

[host]# cd bin

[host]# ../configure --target-list=x86_64-softmmu --enable-kvm

[host]# make -j24

Configure vHost on Hypervisor

Configure 1G huge pages:

[host]# mkdir /dev/hugepages1G

[host]# mount -t hugetlbfs -o pagesize=1G none /dev/hugepages1G

[host]# echo 16 > /sys/devices/system/node/node0/hugepages/hugepages-1048576kB/nr_hugepages

[host]# echo 16 > /sys/devices/system/node/node1/hugepages/hugepages-1048576kB/nr_hugepages
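The kernel may allocate fewer huge pages than requested if it cannot find enough contiguous memory, so it is worth reading the counters back after writing them. The sketch below prints the 1G page count per NUMA node; the function name `list_1g_hugepages` and its optional root argument are illustrative additions that make it testable against a mock directory tree.

```shell
#!/usr/bin/env bash
# Print "<node> <nr_hugepages>" for every NUMA node that exposes 1G huge pages.
# $1 optionally overrides the sysfs node directory (an assumption for testing only).
list_1g_hugepages() {
    local root="${1:-/sys/devices/system/node}"
    local f node
    for f in "$root"/node*/hugepages/hugepages-1048576kB/nr_hugepages; do
        # Skip the unexpanded glob when no node exposes 1G pages.
        [ -r "$f" ] || continue
        node="${f#"$root"/}"
        node="${node%%/*}"
        printf '%s %s\n' "$node" "$(cat "$f")"
    done
}
```

Running `list_1g_hugepages` with no argument reads the live system. A node reporting fewer than 16 pages means the earlier `echo 16` was only partially satisfied.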

Enable qemu:commandline in VM XML by adding the xmlns:qemu option:

<domain type='kvm' xmlns:qemu='http://libvirt.org/schemas/domain/qemu/1.0'>

Assign a memory amount and use 1GB page size for huge pages in VM XML:

<memory unit='GiB'>4</memory>
<currentMemory unit='GiB'>4</currentMemory>
<memoryBacking>
  <hugepages>
    <page size='1' unit='GiB'/>
  </hugepages>
</memoryBacking>

Set the memory access for the CPUs to be shared:

<cpu mode='custom' match='exact' check='partial'>
  <model fallback='allow'>Skylake-Server-IBRS</model>
  <numa>
    <cell id='0' cpus='0-1' memory='4' unit='GiB' memAccess='shared'/>
  </numa>
</cpu>

Add a virtio-net interface in VM XML:

<qemu:commandline>
  <qemu:arg value='-chardev'/>
  <qemu:arg value='socket,id=char0,path=/tmp/vhost-net0,server=on'/>
  <qemu:arg value='-netdev'/>
  <qemu:arg value='type=vhost-user,id=vhost1,chardev=char0,queues=4'/>
  <qemu:arg value='-device'/>
  <qemu:arg value='virtio-net-pci,netdev=vhost1,mac=00:00:00:00:33:00,vectors=10,page-per-vq=on,rx_queue_size=1024,tx_queue_size=1024,mq=on,disable-legacy=on,disable-modern=off'/>
</qemu:commandline>
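The socket path in the `-chardev` argument has to match the path later passed to `vfe-vhost-cli vf -a ... -v`, so it can help to extract it from the domain XML rather than retype it. This is a rough sketch that assumes single-quoted attribute values, as in the snippets above; it is a text filter, not an XML parser, and the function name `vhost_socket_paths` is hypothetical.

```shell
#!/usr/bin/env bash
# Read domain XML on stdin and print the path= value of every
# "socket,..." chardev argument, one per line.
# Assumes attributes are single-quoted, as in the examples in this section.
vhost_socket_paths() {
    sed -n "s/.*value='socket,[^']*path=\([^,']*\).*/\1/p"
}
```

A typical use would be `virsh dumpxml <vm_name> | vhost_socket_paths`, which for the configuration in this section should print `/tmp/vhost-net0`.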

Run vHost Acceleration Service

Bind the virtio PF devices to the vfio-pci driver:

[host]# modprobe -a vfio vfio_pci

[host]# echo 1 > /sys/module/vfio_pci/parameters/enable_sriov

[host]# echo 0x1af4 0x1041 > /sys/bus/pci/drivers/vfio-pci/new_id

[host]# echo 0x1af4 0x1042 > /sys/bus/pci/drivers/vfio-pci/new_id

[host]# echo <pf_bdf> > /sys/bus/pci/drivers/virtio-pci/unbind

[host]# echo <vf_bdf> > /sys/bus/pci/drivers/virtio-pci/unbind

[host]# echo <pf_bdf> > /sys/bus/pci/drivers/vfio-pci/bind

[host]# echo <vf_bdf> > /sys/bus/pci/drivers/vfio-pci/bind

[host]# lspci -vvv -s <pf_bdf> | grep "Kernel driver"
Kernel driver in use: vfio-pci

[host]# lspci -vvv -s <vf_bdf> | grep "Kernel driver"
Kernel driver in use: vfio-pci

Info: Example of <pf_bdf> or <vf_bdf> format: 0000:af:00.3
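Because the BDF is written directly into sysfs bind and unbind files, a malformed value fails with an unhelpful error. The sketch below validates the full `domain:bus:device.function` form shown above before it is used; `is_pci_bdf` is an illustrative helper name, and it deliberately rejects the short `bus:device.function` form.

```shell
#!/usr/bin/env bash
# Succeed only if $1 is a full PCI address: 4 hex digits (domain), 2 hex digits (bus),
# a device number 00-1f, and a function number 0-7, for example 0000:af:00.3.
is_pci_bdf() {
    local h='[0-9a-fA-F]'
    case "$1" in
        $h$h$h$h:$h$h:[01]$h.[0-7]) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `is_pci_bdf "$pf_bdf" || { echo "bad BDF: $pf_bdf" >&2; exit 1; }` could guard the bind commands, where `pf_bdf` is a variable the caller sets.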

Enable SR-IOV and create the VF(s):

[host]# echo 1 > /sys/bus/pci/devices/<pf_bdf>/sriov_numvfs

[host]# lspci | grep Virtio
0000:af:00.1 Ethernet controller: Red Hat, Inc. Virtio network device
0000:af:00.3 Ethernet controller: Red Hat, Inc. Virtio network device
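As an alternative to reading `lspci` output by eye, the VFs of a PF and the driver bound to each can be read from sysfs, where every VF appears as a `virtfnN` symlink under the PF and the bound driver as a `driver` symlink under the device. The following is a minimal sketch; the helper names and the optional sysfs-root argument are illustrative.

```shell
#!/usr/bin/env bash
# Print the kernel driver bound to a PCI device, or "none" if it is unbound.
# $2 optionally overrides the sysfs device directory (an assumption for testing only).
pci_driver() {
    local bdf="$1" root="${2:-/sys/bus/pci/devices}"
    if [ -L "$root/$bdf/driver" ]; then
        basename "$(readlink "$root/$bdf/driver")"
    else
        echo none
    fi
}

# Print "<vf_bdf> <driver>" for each virtfnN link found under the given PF.
list_vfs() {
    local pf="$1" root="${2:-/sys/bus/pci/devices}" link vf
    for link in "$root/$pf"/virtfn*; do
        # Skip the unexpanded glob when the PF has no VFs.
        [ -L "$link" ] || continue
        vf="$(basename "$(readlink "$link")")"
        printf '%s %s\n' "$vf" "$(pci_driver "$vf" "$root")"
    done
}
```

Calling `list_vfs <pf_bdf>` prints nothing when no VF exists yet. After the steps in this section, each VF line is expected to end in `vfio-pci`.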

Add a VF representor to the OVS bridge on the BlueField:

[dpu]# virtnet query -p 0 -v 0 | grep sf_rep_net_device
"sf_rep_net_device": "en3f0pf0sf3000",

[dpu]# ovs-vsctl add-port ovsbr1 en3f0pf0sf3000

Run the vhost acceleration software service:

Start the vfe-vhostd service:

[host]# systemctl start vfe-vhostd

Info: A log of the service can be viewed by running the following:

[host]# journalctl -u vfe-vhostd

Provision the virtio-net PF and VF:

[host]# /usr/local/bin/vfe-vhost-cli mgmtpf -a <pf_bdf>

# Wait for virtio-net-controller to finish handling the PF FLR

# On BlueField, change the VF MAC address or other device options

[dpu]# virtnet modify -p 0 -v 0 device -m 00:00:00:00:33:00

# Add the VF into vfe-dpdk

[host]# /usr/local/bin/vfe-vhost-cli vf -a <vf_bdf> -v /tmp/vhost-net0

Note: If SR-IOV is disabled and re-enabled, the VFs must be re-provisioned. 00:00:00:00:33:00 is the virtual MAC address used in the VM XML.
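The provisioning sequence includes a wait for the controller to finish handling the PF FLR, but this section does not say how to detect completion. One pragmatic option is to retry the next command a bounded number of times. The helper below is a generic sketch; it assumes, without confirmation from this section, that `vfe-vhost-cli` exits with a non-zero status when a step is not yet possible.

```shell
#!/usr/bin/env bash
# Usage: retry <attempts> <delay_seconds> <command> [args...]
# Runs the command until it succeeds or the attempt limit is reached.
retry() {
    local attempts="$1" delay="$2" i=1
    shift 2
    while :; do
        "$@" && return 0
        # Give up after the last allowed attempt.
        [ "$i" -ge "$attempts" ] && return 1
        i=$((i + 1))
        sleep "$delay"
    done
}
```

An invocation such as `retry 10 2 /usr/local/bin/vfe-vhost-cli vf -a <vf_bdf> -v /tmp/vhost-net0` would try up to ten times, two seconds apart. If the CLI reports failure some other way, the wrapper needs a different success test.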

Start the VM

[host]# virsh start <vm_name>

HA Service

Running the vfe-vhostd-ha service allows the datapath to persist should vfe-vhostd crash:

[host]# systemctl start vfe-vhostd-ha

Simple Live Migration

Prepare two identical hosts and perform the provisioning of the virtio device to DPDK on both.

Boot the VM on one server, then migrate it to the other:

[host]# virsh migrate --verbose --live --persistent <vm_name> qemu+ssh://<dest_node_ip_addr>/system --unsafe

Remove Device

When finished with the virtio devices, use the following commands to remove them from DPDK:

[host]# /usr/local/bin/vfe-vhost-cli vf -r <vf_bdf>

[host]# /usr/local/bin/vfe-vhost-cli mgmtpf -r <pf_bdf>
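When several VFs were provisioned, the removal commands can be wrapped so that every VF is removed before its PF, in the same order as shown above. This is a sketch only: `run`, `remove_devices`, and the `DRY_RUN` variable are illustrative names, and the wrapper stops at the first failing command on the assumption that the CLI signals failure through its exit status.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: remove_devices <pf_bdf> <vf_bdf>...
# Removes each VF first, then the management PF.
remove_devices() {
    local pf="$1" vf
    shift
    for vf in "$@"; do
        run /usr/local/bin/vfe-vhost-cli vf -r "$vf" || return 1
    done
    run /usr/local/bin/vfe-vhost-cli mgmtpf -r "$pf"
}
```

Running it once with `DRY_RUN=1 remove_devices <pf_bdf> <vf_bdf>` prints the commands without executing them, which is a cheap way to confirm the order before touching a live host.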