Connection Tracking Window Validation

Connection Tracking (CT) is the process of tracking a TCP connection between two endpoints (e.g., between a server and a client). This involves maintaining the state of the connection, identifying state-changing packets, identifying malformed packets, identifying packets that do not conform to the protocol or to the current connection state, and more.

CT is an important process used in many networking solutions that require state awareness, including stateful firewalls, the Linux kernel, and more.

Currently, CT is executed at the software level. As networking applications are required to run at ever higher bandwidth, software is becoming the bottleneck. DPDK CT addresses this by offering the ability to offload the CT process to the hardware, so that connection tracking can keep up with high-speed NICs.

To make the CT offload possible, we added hardware support for the operation (CT HW module), and a software rte_flow API that connects the user to the hardware.

The CT offload can track a single connection only, meaning every connection should be offloaded separately.

The HW module can track a given connection by maintaining a structure in HW referred to as CT context, which holds information about a single TCP connection. As such, every connection being tracked requires its own CT context object. However, the HW does not have the ability to initialize the context.

When the HW module receives a packet that is part of a tracked connection, it checks the packet based on that connection’s context, stores the result for later usage (CT result matching), and then updates the context based on the received packet.

This section explains how the context is initialized for a given connection, and how that connection’s packets are associated with a context object.

The API brings these main changes:

rte_flow CT Context

The `struct rte_flow_action_conntrack` struct is almost identical to the HW CT context mentioned above. The SW context holds two extra fields, `.peer_port` and `.is_original_dir`, explained in detail below.

CT rte_flow Item (RTE_FLOW_ITEM_TYPE_CONNTRACK)

This item allows the user to insert an `rte_flow` rule that matches the result of the CT HW module (CT action). For this rule to take effect, make sure that the packet passes through the CT HW module before reaching the rule. You can match on any of five possible results (valid/state_change/error/disabled/bad_packet) or on a combination of them.

CT rte_flow Action (RTE_FLOW_ACTION_TYPE_CONNTRACK)

The action `.conf` field points to a SW CT context and activates the CT HW module on this rule. When this action is created, the HW creates its own CT context and copies the values of the SW context.

CT Context Create / Modify

Create:

The `rte_flow_action_handle_create` function creates a CT action, effectively initializing the HW CT context. The function also returns a handle to the created action. The handle is used later to insert the action as part of multiple `rte_flow` rules, meaning that the handle connects a HW context with the packets that match those rules.

Modify:

The `rte_flow_action_handle_update` function allows you to modify the HW context, as well as to change `.is_original_dir`, which is updated separately as explained below.
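To illustrate what matching on a combination of CT results means for the CT item described above, the sketch below models the five results as bit flags. The class name, flag names, bit positions, and the "all requested flags must be present" matching rule are illustrative assumptions that mirror the result names in the text; they are not copied from the rte_flow headers, so check the DPDK definitions before relying on concrete values.

```python
from enum import IntFlag


class CtResult(IntFlag):
    """Hypothetical bit flags, one per CT result named in the text."""
    VALID = 1 << 0
    STATE_CHANGE = 1 << 1
    ERROR = 1 << 2
    DISABLED = 1 << 3
    BAD_PACKET = 1 << 4


def item_matches(rule_spec: CtResult, packet_result: CtResult) -> bool:
    """Return True if the packet's CT result carries every flag the rule asks for.

    This is a simplified model of matching on a combination of results.
    """
    return (packet_result & rule_spec) == rule_spec
```

For example, a rule built with `CtResult.VALID | CtResult.STATE_CHANGE` would, in this model, match only packets whose result carries both flags.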

New Data Structures

struct rte_flow_action_conntrack (SW CT Context)

| Type | Name | Meaning |
|---|---|---|
| uint16_t | peer_port | Port ID of the port where the reply direction traffic will be received. (SW only field) |
| uint32_t:1 | is_original_dir | Should be 1 if the items of the rte_flow match the original direction. This only affects rule insertion. (SW only field) |
| uint32_t:1 | enable | Should be 1 to enable CT hardware offload. |
| uint32_t:1 | live_connection | Should be 1 if a 3-way handshake was made. |
| uint32_t:1 | selective_ack | Should be 1 if the connection supports TCP selective ack. |
| uint32_t:1 | challenge_ack_passed | Should be 1 if the challenge ack has passed. |
| uint32_t:1 | last_direction | Should be 0 if the last packet seen came from the original direction. |
| uint32_t:1 | liberal_mode | Should be 1 if you want the CT to track the state changes only (without any other TCP checks). |
| enum rte_flow_conntrack_state | state | The current state of the connection. |
| uint8_t | max_ack_window | Maximum window scale seen (MAX(original.wscale, reply.wscale)). |
| uint8_t | retransmission_limit | The maximum number of packets that can be retransmitted. |
| struct rte_flow_tcp_dir_param | original_dir | Holds information about the packets received from the original direction. (see below) |
| struct rte_flow_tcp_dir_param | reply_dir | Holds information about the packets received from the reply direction. (see below) |
| uint16_t | last_window | Window of the last packet seen. |
| enum rte_flow_conntrack_tcp_last_index | last_index | The type of the last packet seen. (see below) |
| uint32_t | last_seq | The sequence number of the last packet seen. |
| uint32_t | last_ack | The acknowledgement number of the last packet seen. |
| uint32_t | last_end | The ack that should be sent to acknowledge the last packet seen. (seq + data_len) |

enum rte_flow_conntrack_state (CT state)

| Value | Meaning |
|---|---|
| RTE_FLOW_CONNTRACK_STATE_SYN_RECV | SYN-ACK packet seen |
| RTE_FLOW_CONNTRACK_STATE_ESTABLISHED | ACK packet seen (3-way handshake ACK) |
| RTE_FLOW_CONNTRACK_STATE_FIN_WAIT | FIN packet seen |
| RTE_FLOW_CONNTRACK_STATE_CLOSE_WAIT | ACK packet seen after FIN |
| RTE_FLOW_CONNTRACK_STATE_LAST_ACK | FIN packet seen after FIN |
| RTE_FLOW_CONNTRACK_STATE_TIME_WAIT | Last ACK seen after two FINs |

enum rte_flow_conntrack_tcp_last_index (packet type/TCP flags)

| Value | Meaning |
|---|---|
| RTE_FLOW_CONNTRACK_INDEX_NONE | No TCP flags set. |
| RTE_FLOW_CONNTRACK_INDEX_SYN | SYN flag set. |
| RTE_FLOW_CONNTRACK_INDEX_SYN_ACK | SYN and ACK flags set. |
| RTE_FLOW_CONNTRACK_INDEX_FIN | FIN flag set. |
| RTE_FLOW_CONNTRACK_INDEX_ACK | ACK flag set. |
| RTE_FLOW_CONNTRACK_INDEX_RST | RST flag set. |

struct rte_flow_tcp_dir_param

| Type | Name | Meaning for context.original_dir | Meaning for context.reply_dir |
|---|---|---|---|
| uint32_t:4 | scale | Original direction window scaling factor. Set to 0xF to disable window scaling. | Reply direction window scaling factor. Set to 0xF to disable window scaling. |
| uint32_t:1 | close_initiated | Should be 1 if the original direction sent a FIN packet. | Should be 1 if the reply direction sent a FIN packet. |
| uint32_t:1 | last_ack_seen | Should be 1 if the reply direction sent an ACK packet. | Should be 1 if the original direction sent an ACK packet. |
| uint32_t:1 | data_unacked | Original direction has some data packets that have not been ACKed yet. | Reply direction has some data packets that have not been ACKed yet. |
| uint32_t | sent_end | The expected ack number to be sent from the reply direction. (orig.seq + orig.data_len) | The expected ack number to be sent from the original direction. (reply.seq + reply.data_len) |
| uint32_t | reply_end | The maximum seq number that the original direction can send. (reply.ack + reply.actual_window) | The maximum seq number that the reply direction can send. (orig.ack + orig.actual_window) |
| uint32_t | max_win | The last actual window received from the reply direction. (reply.actual_window) | The last actual window received from the original direction. (orig.actual_window) |
| uint32_t | max_ack | The last ack number that was sent from the original direction. (orig.ack) | The last ack number that was sent from the reply direction. (reply.ack) |
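The "actual window" used by reply_end and max_win is the 16-bit TCP window field after the direction's scaling factor has been applied. The sketch below shows that arithmetic, including the 0xF value that disables scaling. The function and constant names are hypothetical and exist only for this illustration.

```python
WSCALE_DISABLED = 0xF  # value of the 4-bit scale field that disables window scaling


def actual_window(window: int, scale: int) -> int:
    """Apply a direction's window scaling factor to a raw 16-bit TCP window."""
    if not 0 <= window <= 0xFFFF:
        raise ValueError("window must fit in 16 bits")
    if not 0 <= scale <= 0xF:
        raise ValueError("scale must fit in 4 bits")
    if scale == WSCALE_DISABLED:
        return window          # scaling disabled: the raw window is used as-is
    return window << scale     # otherwise the window is shifted left by the factor
```

With a raw window of 510 and a scaling factor of 7, the actual window is 510 << 7 = 65280.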

The CT action must be wrapped by an action_handle.

Retransmission limit checking is not supported.

The CT action cannot be inserted in group 0.

A connection cannot be tracked before the first two handshake packets (SYN and SYN-ACK) have been seen.

No direction validation is done for the closing sequence (FIN).

The maximum number of supported ports with a CT action is 16.

For the best flow insertion usage experience, the following actions/steps are recommended:

Use “hint_num_of_rules_log” to configure the maximum expected number of rules in each group, see Hint to the Driver on the Number of Flow Rules. The value is the base-2 logarithm of the expected number of rules. For example, in a cross-port setup where each port is going to have 4M rules, the value should be 22. In the case of a single port (meaning that both rules are added to the same table), the expected number of rules is 8M, so the value should be 23.

Reuse CT objects. Upon arrival of a new connection, the usage flow should be as follows:

Check if there is a free CT object in the app pool. The CT object must have been created with the same port direction configuration as the new connection. For example, if the app created a CT object with original port = 0 and reply port = 1, it can be reused only in the same configuration.

If the CT object is reused, the application should call the modify function on the action with the new requested state.

If the app does not have a free CT object, then it should allocate a new one by calling the create function.

The app creates the rules just like before with the CT object. To close a connection, the app removes the rules and adds the CT object to the application pool.

Always keep at least one rule per matching criteria to save re-allocation time. This can be a dummy rule that will never be hit, which can be inserted as the first rule.
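The recommendations above can be sketched as follows: a helper that derives the log value for the rules hint, and an application-side pool that reuses CT objects only in the port configuration they were created with. All names here (`rules_log_hint`, `CtPool`, and the `create` and `modify` callbacks standing in for the create and modify functions) are hypothetical; this is a minimal model of the bookkeeping, not driver code.

```python
from collections import defaultdict


def rules_log_hint(expected_rules: int) -> int:
    """Smallest n such that 2**n >= expected_rules."""
    if expected_rules < 1:
        raise ValueError("expected_rules must be positive")
    return (expected_rules - 1).bit_length()


class CtPool:
    """Free CT objects, grouped by the (original_port, reply_port) they were created with."""

    def __init__(self, create, modify):
        self._create = create  # hypothetical wrapper around the create function
        self._modify = modify  # hypothetical wrapper around the modify function
        self._free = defaultdict(list)

    def acquire(self, original_port, reply_port, state):
        """Reuse a free CT object with the same port configuration, else create one."""
        key = (original_port, reply_port)
        if self._free[key]:
            ct = self._free[key].pop()
            self._modify(ct, state)  # reused object: apply the new requested state
            return ct
        return self._create(original_port, reply_port, state)

    def release(self, original_port, reply_port, ct):
        """Return a CT object to the pool after its rules have been removed."""
        self._free[(original_port, reply_port)].append(ct)
```

With 4M rules (4 * 2**20) the helper returns 22, and with 8M rules it returns 23, matching the example values above.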

General Comments

The export part will be changed in the June release and will be part of the rule creation.

A new NV config mode “icm_cache_mode_large_scale_steering” is added to reduce cache misses and improve performance when working with many steering rules. This capability is enabled by setting the mlxconfig parameter "ICM_CACHE_MODE" to "1": `mlxconfig -d <device> set ICM_CACHE_MODE=1`. Note that this optimization is intended to be used with a very large number of steering rules.

To save insertion rate time, the CT objects can be pre-allocated at startup.

Querying the CT state has a significant negative effect on performance. All measurements were taken without the query.

Before closing the device, all CT objects should be destroyed.

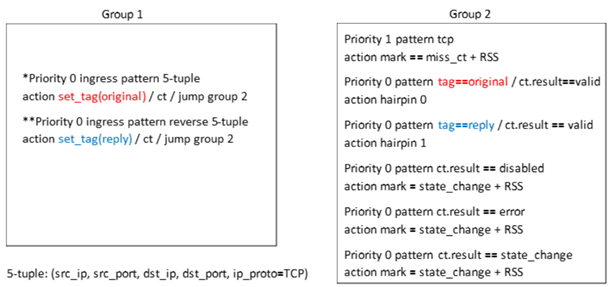

CT is offloaded using rte_flow rules. Below is an explanation of how to create the rules in three different groups:

Group 0: Has a single rule that matches on a TCP packet and jumps to group 1.

Group 1: Has two rules. The first one matches on the connection’s 5-tuple, tags the packet with `original`, applies the CT action, and then jumps to group 2. The second rule is similar to the first; however, it matches on the reverse 5-tuple and tags the packet with `reply`. Both rules share the same CT action (and HW CT context). These rules should be created for every 5-tuple (connection).

Group 2: Has 6 rules. Four of them are used to inform the application about an event, while the remaining two match on a valid packet, one for each direction. Each direction’s packets are forwarded to a different hairpin queue.
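As a small illustration of the two group 1 rules, the sketch below derives the reverse 5-tuple from a connection's 5-tuple and pairs each match with its tag and the shared CT handle. The `FiveTuple` type, the dictionary layout, and the `ct_handle` placeholder are hypothetical and only model the rule contents described above; they are not rte_flow structures.

```python
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: str


def reverse(t: FiveTuple) -> FiveTuple:
    """Swap source and destination so the tuple matches the reply direction."""
    return FiveTuple(t.dst_ip, t.src_ip, t.dst_port, t.src_port, t.proto)


def group1_rules(conn: FiveTuple, ct_handle) -> list:
    """Describe the two per-connection rules; both share the same CT handle."""
    return [
        {"match": conn, "tag": "original", "ct": ct_handle, "jump_group": 2},
        {"match": reverse(conn), "tag": "reply", "ct": ct_handle, "jump_group": 2},
    ]
```

Reversing a tuple twice gives back the original, so either direction's packet can be used to derive both rules.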

The flows are as follows:

As explained above, the rules in group 1 are inserted for every new connection. We refer to inserting these rules as offloading a connection.

To offload a connection, you must use the API as follows:

1. Create a SW CT context that matches the connection parameters. It is important to set `.is_original_dir` = 1.

2. Create a CT action that points to the SW context, and then commit it using `rte_flow_action_handle_create`, which returns an `action_handle`.

3. Insert the rule(*) that matches on the 5-tuple of the connection. However, instead of using the CT action directly, use the `action_handle` created in step 2.

4. Modify the CT action that was created in step 2, such that `.is_original_dir` = 0. You can modify the action by using `rte_flow_action_handle_update` and providing the `struct rte_flow_modify_conntrack` parameter.

5. Insert the rule(**) that matches on the reverse 5-tuple of the connection, in the same way as in step 3.

Below is an example of how the CT context should be initialized (what values to put). In our example, we are interested in tracking a TCP connection only after the three-way handshake has completed; as such, we configure the CT context based on information gathered from these three packets.

The three-way handshake packets are as follows:

handshake_pkts[0] == syn_pkt == S

Ethernet Header

| dst | src | type |
|---|---|---|
| 00:15:5d:61:d4:61 | 00:15:5d:61:d9:61 | IPv4 |

IP Header

| version | ihl | tos | len | id | flags | frag | ttl | proto | chksum | src | dst |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 4 | 5 | 0x10 | 60 | 11800 | DF | 0 | 64 | tcp | 0x28ba | 172.17.28.218 | 10.210.16.29 |

TCP Header

| sport | dport | seq | ack | dataofs | reserved | flags | window | chksum | urgptr |
|---|---|---|---|---|---|---|---|---|---|
| 40314 | 22 | 2632987378 | 0 | 10 | 0 | S | 65280 | 0x7afb | 0 |

TCP Options

| options |
|---|
| [('MSS', 1360), ('SAckOK', ''), ('Timestamp', (3472272931, 0)), ('NOP', None), ('WScale', 7)] |

handshake_pkts[1] == syn_ack_pkt == SA

Ethernet Header

| dst | src | type |
|---|---|---|
| 00:15:5d:61:d9:61 | 00:15:5d:61:d4:61 | IPv4 |

IP Header

| version | ihl | tos | len | id | flags | frag | ttl | proto | chksum | src | dst |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 4 | 5 | 0x60 | 60 | 0 | DF | 0 | 55 | tcp | 0x5f82 | 10.210.16.29 | 172.17.28.218 |

TCP Header

| sport | dport | seq | ack | dataofs | rsv | flags | window | chksum | urgptr |
|---|---|---|---|---|---|---|---|---|---|
| 22 | 40314 | 2532480966 | 2632987379 | 10 | 0 | SA | 28960 | 0x49f5 | 0 |

TCP Options

| options |
|---|
| [('MSS', 1460), ('SAckOK', ''), ('Timestamp', (1297236582, 3472272931)), ('NOP', None), ('WScale', 7)] |

handshake_pkts[2] == ack_pkt == A

Ethernet Header

| dst | src | type |
|---|---|---|
| 00:15:5d:61:d4:61 | 00:15:5d:61:d9:61 | IPv4 |

IP Header

| version | ihl | tos | len | id | flags | frag | ttl | proto | chksum | src | dst |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 4 | 5 | 0x10 | 52 | 11801 | DF | 0 | 64 | tcp | 0x28c1 | 172.17.28.218 | 10.210.16.29 |

TCP Header

| sport | dport | seq | ack | dataofs | rsv | flags | window | chksum | urgptr |
|---|---|---|---|---|---|---|---|---|---|
| 40314 | 22 | 2632987379 | 2532480967 | 8 | 0 | A | 510 | 0xe7db | 0 |

TCP Options

| options |
|---|
| [('NOP', None), ('NOP', None), ('Timestamp', (3472272939, 1297236582))] |

SW CT Context Initial Values

| Field Name | Real Value | Semantic Value |
|---|---|---|
| peer_port | 0 | |
| is_original_dir | 1 | |
| enable | 1 | |
| live_connection | 1 | |
| selective_ack | 1 | |
| challenge_ack_passed | 0 | |
| last_direction | 0 | |
| liberal_mode | 0 | |
| state | RTE_FLOW_CONNTRACK_STATE_ESTABLISHED | |
| max_ack_window | 7 | max( S.tcp_options.wscale , SA.tcp_options.wscale ) |
| retransmission_limit | 5 | Constant value |
| original_dir.scale | 7 | S.tcp_options.wscale |
| original_dir.close_initiated | 0 | |
| original_dir.last_ack_seen | 1 | |
| original_dir.data_unacked | 0 | |
| original_dir.sent_end | 2632987379 | A.seq |
| original_dir.reply_end | 2632987379 + 28960 | SA.ack + SA.window |
| original_dir.max_win | 510 << 7 | A.window << S.tcp_options.wscale |
| original_dir.max_ack | 2532480967 | A.ack |
| reply_dir.scale | 7 | SA.tcp_options.wscale |
| reply_dir.close_initiated | 0 | |
| reply_dir.last_ack_seen | 1 | |
| reply_dir.data_unacked | 0 | |
| reply_dir.sent_end | 2532480966 + 1 | SA.seq + 1 |
| reply_dir.reply_end | 2532480967 + ( 510 << 7 ) | A.ack + ( A.window << S.tcp_options.wscale ) |
| reply_dir.max_win | 28960 | SA.window |
| reply_dir.max_ack | 2632987379 | SA.ack |
| last_window | 510 | A.window |
| last_index | RTE_FLOW_CONNTRACK_INDEX_ACK | |
| last_seq | 2632987379 | A.seq |
| last_ack | 2532480967 | A.ack |
| last_end | 2632987379 | A.seq |
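The arithmetic behind the table above can be reproduced from the three handshake packets. The sketch below is a worked example only: the `Pkt` class, the `init_context` function, and the dictionary layout are hypothetical stand-ins for the SW CT context, and the formulas are the ones given in the Semantic Value column. Sums are masked to 32 bits on the assumption that they wrap like TCP sequence numbers.

```python
from dataclasses import dataclass

U32_MASK = 0xFFFFFFFF  # assumption: sums wrap at 32 bits, like TCP sequence numbers


@dataclass
class Pkt:
    seq: int
    ack: int
    window: int
    wscale: int = 0    # WScale TCP option (present on the SYN and SYN-ACK only)
    data_len: int = 0  # TCP payload length


def init_context(s: Pkt, sa: Pkt, a: Pkt) -> dict:
    """Derive the direction parameters from the SYN, SYN-ACK and ACK packets."""
    a_win = a.window << s.wscale  # the ACK's window, scaled by the original side's factor
    original_dir = {
        "scale": s.wscale,
        "sent_end": (a.seq + a.data_len) & U32_MASK,
        "reply_end": (sa.ack + sa.window) & U32_MASK,  # the SYN-ACK window is not scaled
        "max_win": a_win,
        "max_ack": a.ack,
    }
    reply_dir = {
        "scale": sa.wscale,
        "sent_end": (sa.seq + 1) & U32_MASK,  # the SYN flag consumes one sequence number
        "reply_end": (a.ack + a_win) & U32_MASK,
        "max_win": sa.window,
        "max_ack": sa.ack,
    }
    return {
        "max_ack_window": max(s.wscale, sa.wscale),
        "original_dir": original_dir,
        "reply_dir": reply_dir,
        "last_window": a.window,
        "last_seq": a.seq,
        "last_ack": a.ack,
        "last_end": (a.seq + a.data_len) & U32_MASK,
    }


# The three handshake packets from the example above.
S = Pkt(seq=2632987378, ack=0, window=65280, wscale=7)
SA = Pkt(seq=2532480966, ack=2632987379, window=28960, wscale=7)
A = Pkt(seq=2632987379, ack=2532480967, window=510)
ctx = init_context(S, SA, A)
```

Evaluated, the expressions in the table become 2633016339 for original_dir.reply_end, 65280 for original_dir.max_win, and 2532546247 for reply_dir.reply_end.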