Sizing Guide

NVIDIA Blueprint - PDF to Podcast

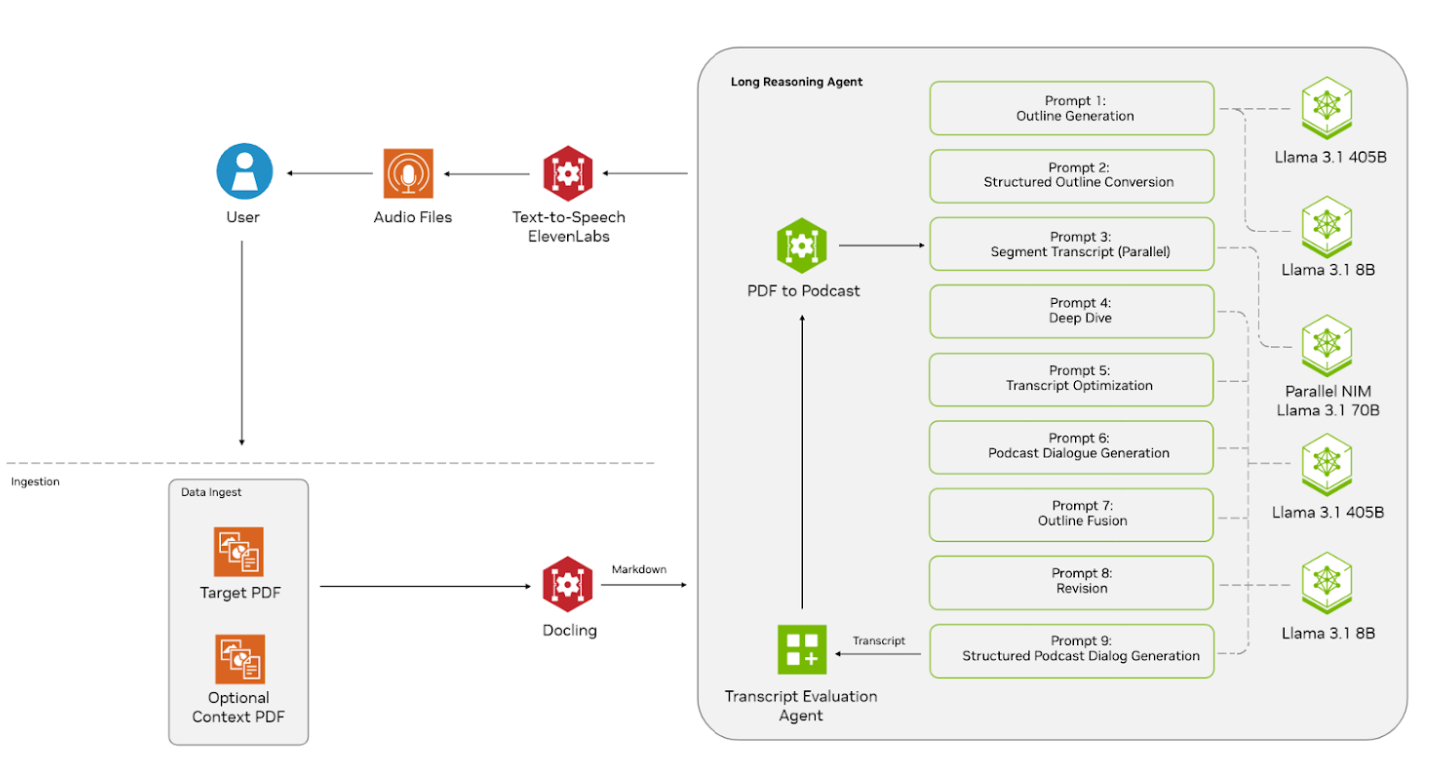

NVIDIA Blueprints are comprehensive reference workflows built with NVIDIA AI libraries, SDKs, and microservices to speed up the deployment of AI solutions. This toolkit uses the PDF to Podcast blueprint, which combines large language models (LLMs), text-to-speech, and NVIDIA NIM microservices to build a generative AI application that transforms PDF data into audio content.

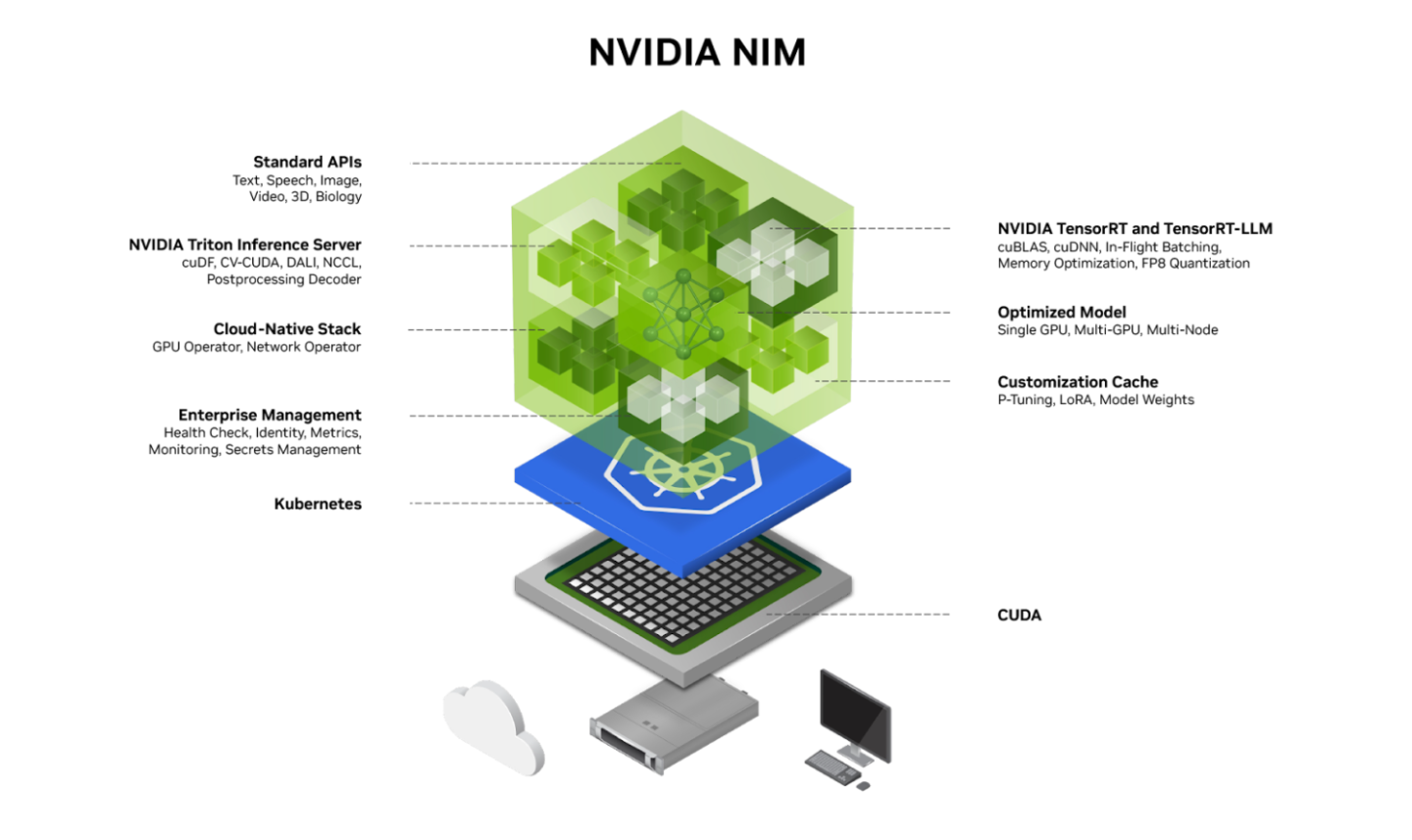

NVIDIA NIM provides containers to self-host GPU-accelerated inferencing microservices for pretrained and customized AI models across clouds and data centers. NIM microservices expose industry-standard APIs for simple integration into AI applications, development frameworks, and workflows. Built on pre-optimized inference engines from NVIDIA and the community, including NVIDIA® TensorRT™ and TensorRT-LLM, NIM microservices optimize response latency and throughput for each combination of foundation model and GPU. NVIDIA NIM for Developer is the edition used in this toolkit.

The NIM microservices used in this toolkit:

- Llama 3.1 8B Instruct
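Because NIM microservices expose industry-standard APIs, a self-hosted Llama 3.1 8B Instruct NIM can typically be called like any OpenAI-compatible chat endpoint. The sketch below is illustrative and is not taken from the blueprint. The base URL `http://localhost:8000`, the model identifier `meta/llama-3.1-8b-instruct`, and the helper names `build_chat_request` and `ask` are assumptions to adjust for your own deployment.

```python
import json
import urllib.request

# Assumed values; change them to match your own NIM deployment.
NIM_BASE_URL = "http://localhost:8000"
MODEL_NAME = "meta/llama-3.1-8b-instruct"


def build_chat_request(prompt, base_url=NIM_BASE_URL, model=MODEL_NAME, max_tokens=256):
    """Build (without sending) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=60):
    """Send the request and return the text of the first choice.

    Requires a running endpoint at NIM_BASE_URL.
    """
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the NIM container running, a call such as `ask("Write a two-host podcast intro for this summary: ...")` should return the generated text as a string. Keeping request construction separate from sending makes it possible to inspect the payload without a live endpoint.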

Server Configuration

- 2U NVIDIA-Certified System
- Intel Xeon Platinum 8480+ @ 3.8 GHz Turbo (Sapphire Rapids) HT
- 3.84 TB Solid State Drive (NVMe)
- Intel Ethernet Controller X710 for 10GBASE-T
- 1x NVIDIA GPU: L40S/L40
- Hypervisor - VMware ESXi 8.0.3 (for Linux virtual workstation)
- Hypervisor - Windows Server 2025 (for Windows virtual workstation)
- NVIDIA Host Driver - 550.144.02 (for Linux virtual workstation)
- NVIDIA Host Driver - 572.60 (for Windows virtual workstation)

Linux virtual workstation:

- OS Version - Ubuntu 24.04
- 16 vCPU
- 32 GB vRAM
- NVIDIA vGPU Guest Driver - 550.144.03
- NVIDIA vGPU Profile - 48Q

Windows virtual workstation:

- OS Version - Windows 11
- 16 vCPU
- 32 GB vRAM
- NVIDIA vGPU Guest Driver - 572.60
- NVIDIA vGPU Profile - 48Q
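
The 48Q profile assigns 48 GB of frame buffer to the virtual workstation. That is the full memory of an L40S or L40, so each GPU in this configuration backs a single VM. A minimal sketch of that arithmetic follows, assuming equal-size profiles on a GPU. The helper names are hypothetical, and the 48 GB figure comes from NVIDIA's published GPU specifications rather than from this guide.

```python
import re

# Physical frame buffer in GB, per NVIDIA's published specifications
# (an assumption here; this guide does not state it).
GPU_MEMORY_GB = {"L40S": 48, "L40": 48}


def profile_framebuffer_gb(profile):
    """Return the frame buffer size encoded in a profile name such as '48Q'."""
    match = re.fullmatch(r"(\d+)([A-Za-z])", profile)
    if match is None:
        raise ValueError(f"unrecognized vGPU profile: {profile!r}")
    return int(match.group(1))


def vms_per_gpu(gpu, profile):
    """Number of VMs with this profile that fit on one physical GPU."""
    return GPU_MEMORY_GB[gpu] // profile_framebuffer_gb(profile)


print(vms_per_gpu("L40S", "48Q"))  # 1
```

The same helper shows that a smaller profile, for example 24Q, would allow two VMs per GPU. Whether a smaller profile leaves enough memory for the Llama 3.1 8B Instruct NIM is outside the scope of this sketch.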