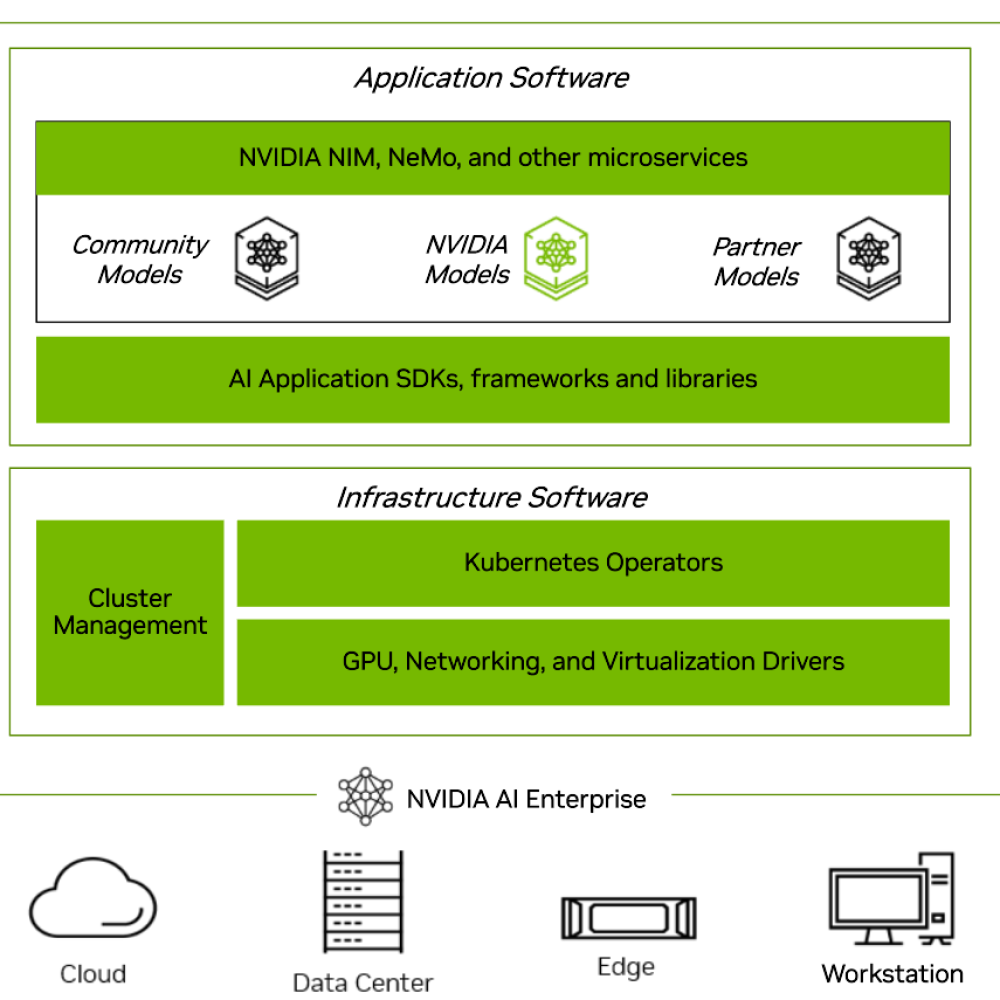

NVIDIA AI Enterprise is a suite of NVIDIA software that is portable across the cloud, data center, and edge. The software is designed to deliver optimized performance, robust security, and stability for development and production AI use cases. It consists of two types of software: Application Software for building AI agents, generative AI, and many other types of AI workflows, and Infrastructure Software such as NVIDIA GPU and Networking drivers and Kubernetes Operators to help optimize and manage the use of hardware accelerators by AI, data science, and HPC applications.

Application Software

- NVIDIA NIM and NVIDIA NeMo microservices enhance model performance and speed time to deployment for generative AI.

- SDKs, frameworks, and libraries across many domains, including speech AI, route optimization, cybersecurity, and vision AI.

- Inference and training runtimes including NVIDIA Triton Inference Server, with multiple optimized backends.

Infrastructure Software

- NVIDIA GPU and NVIDIA Networking drivers for bare-metal or virtualized configurations.

- Kubernetes operators for deployment and lifecycle management of NVIDIA GPU, NVIDIA Networking, NVIDIA NIM, and NVIDIA NeMo.

- Base Command Manager to streamline cluster provisioning and infrastructure monitoring.

Customers who build AI applications or operate AI infrastructure can use whichever parts of the suite they need. NVIDIA has partnered with leading industry vendors to ensure that NVIDIA software integrates with their platforms.

NVIDIA software is available in different branches to meet the needs of individual use cases.

| Software Layer | Description | Components Included | Support Matrix | Documentation |
|---|---|---|---|---|
| Infrastructure Software | The infrastructure software includes various components that ensure efficient deployment, management, and scaling of AI applications. | Infrastructure Software | Infra Support Matrix | Infra Lifecycle documentation |
| Application Software: Production Branch | A Production Branch (PB) contains production-ready AI frameworks and SDK branches to provide API stability and a secure environment for building mission-critical AI applications. | Application Production Branch (PB) Software | Production Branch (PB) documentation | Production Branch (PB) Lifecycle documentation |
| Application Software: Feature Branch | Feature Branches (FB) deliver the latest versions of NVIDIA-built and NVIDIA-optimized AI frameworks, NVIDIA NIM microservices, pre-trained models, and SDKs and provide access to the most recent software features and optimizations. | Application Feature Branch (FB) Software | Feature Branch (FB) documentation | Feature Branch (FB) Lifecycle documentation |
| Application Software: Long-Term Support Branch | A Long-Term Support Branch (LTSB) contains long-term supported AI frameworks and SDKs that provide 36 months of API stability and a secure environment for highly regulated industries. | Application Long Term Supported Branch (LTSB) Software | Long-Term Support Branch (LTSB) documentation | Long-Term Support Branch (LTSB) Lifecycle documentation |

Getting Started with NVIDIA AI Enterprise

NVIDIA AI Enterprise Software on NGC

NVIDIA AI Enterprise Licensing and Support

NVIDIA AI Enterprise Production Branches & Long-Term Supported Branches Release Documentation

Active Production Branches (PB) & Long-Term Supported Branches (LTSB)

| Software Branch | Compatible Infra Release | First Planned Release | Last Planned Release | Planned EOL |
|---|---|---|---|---|
| Production Branch (PB) May 2025 (PB 25h1) | NVIDIA AI Enterprise Infra Release 6.x | May 2025 | December 2025 | January 2026 |
| Production Branch (PB) October 2024 (PB 24h2) | NVIDIA AI Enterprise Infra Releases 5.x and 6.x | October 2024 | June 2025 | July 2025 |
| Long-Term Supported Branch 2 (LTSB 2) | NVIDIA AI Enterprise Infra Release 4.x | November 2024 | August 2026 or later | October 2026 or later |

All Production Branches (PB) & Long-Term Supported Branches (LTSB)

| Software Branch | Compatible Infra Release | First Planned Release | Last Planned Release | Planned EOL |
|---|---|---|---|---|
| Production Branch (PB) May 2024 (PB 24h1) | NVIDIA AI Enterprise Infra Releases 5.x and 4.x | May 2024 | December 2024 | January 2025 |
| Production Branch (PB) October 2023 (PB 23h2) | NVIDIA AI Enterprise Infra Release 3.x | October 2023 | June 2024 | July 2024 |
| Long-Term Supported Branch 1 (LTSB 1) | NVIDIA AI Enterprise Infra Release 1.x | August 2021 | February 2024 | June 2024 |
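The two branch tables above work as a small lookup. Each application branch pairs with one or more Infra release lines and has a planned EOL month. The following minimal sketch encodes those rows as Python data and answers two questions: is a branch past its planned EOL on a given date, and is it compatible with a given Infra release? The `AppBranch` type and the `is_past_eol` and `is_compatible` helpers are hypothetical names, not an NVIDIA API. The sketch also makes two assumptions: a branch counts as EOL from the first day of its planned EOL month, and "or later" dates are treated as the earliest planned date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AppBranch:
    name: str
    infra_majors: tuple[int, ...]  # compatible Infra release lines, e.g. 6 for "6.x"
    planned_eol: date  # assumption: first day of the planned EOL month; "or later" uses the earliest date


# Rows copied from the PB / LTSB tables on this page; they go stale as new branches ship.
BRANCHES = {
    "PB 25h1": AppBranch("PB 25h1", (6,), date(2026, 1, 1)),
    "PB 24h2": AppBranch("PB 24h2", (5, 6), date(2025, 7, 1)),
    "LTSB 2": AppBranch("LTSB 2", (4,), date(2026, 10, 1)),
    "PB 24h1": AppBranch("PB 24h1", (4, 5), date(2025, 1, 1)),
    "PB 23h2": AppBranch("PB 23h2", (3,), date(2024, 7, 1)),
    "LTSB 1": AppBranch("LTSB 1", (1,), date(2024, 6, 1)),
}


def is_past_eol(branch: str, today: date) -> bool:
    """True once `today` falls in or after the branch's planned EOL month (assumed semantics)."""
    return today >= BRANCHES[branch].planned_eol


def is_compatible(branch: str, infra_version: str) -> bool:
    """True if an Infra version such as "6.4" belongs to a release line listed for the branch."""
    major = int(infra_version.split(".")[0])
    return major in BRANCHES[branch].infra_majors


if __name__ == "__main__":
    today = date(2025, 6, 15)
    for name in BRANCHES:
        print(name, "past planned EOL" if is_past_eol(name, today) else "supported")
    print(is_compatible("PB 24h2", "5.3"))  # True: PB 24h2 lists Infra 5.x and 6.x
    print(is_compatible("LTSB 2", "6.4"))   # False: LTSB 2 lists only Infra 4.x
```

Keying compatibility on the Infra major version mirrors how the tables list compatibility ("6.x", "5.x and 6.x") rather than individual point releases.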

NVIDIA AI Enterprise Application Release Branches

NVIDIA NIM

NVIDIA AI Enterprise Support Lifecycle Policy

Notices

What’s Included

The following products are part of NVIDIA AI Enterprise Infrastructure software.

Drivers and Driver Containers

- NVIDIA GPU Data Center Driver Container

- NVIDIA vGPU (C-Series Host and Guest Drivers)

- NVIDIA DOCA-OFED Driver for Networking

Operators

- NVIDIA GPU Operator

- NVIDIA Network Operator

- NVIDIA NIM Operator

- NVIDIA Base Command Manager (BCM)

NVIDIA AI Enterprise Infra Release Branches

Active Infra Release Branches

| Software Branch | Driver Branch | Latest Driver in Branch | Branch Type | Latest Release in Branch | Latest Release Date in Branch | Branch EOL |
|---|---|---|---|---|---|---|
| NVIDIA AI Enterprise Infra 6.x | R570 | 570.158.01 | Feature and Production | 6.4 | June 2025 | February 2026 |
| NVIDIA AI Enterprise Infra 4.x | R535 | 535.247.01 | Long-Term Support | 4.6 | April 2025 | September 2027 |

All Infra Release Branches

| Software Branch | Driver Branch | Latest Driver in Branch | Branch Type | Latest Release in Branch | Latest Release Date in Branch | Branch EOL |
|---|---|---|---|---|---|---|
| NVIDIA AI Enterprise Infra 5.x | R550 | 550.144.02 | Feature and Production | 5.3 | January 2025 | April 2025 |
| NVIDIA AI Enterprise Infra 3.x | R525 | 525.147.05 | Feature and Production | 3.3 | November 2023 | December 2023 |
| NVIDIA AI Enterprise Infra 2.x | R520 | 520.61.05 | Feature | 2.3 | October 2022 | November 2022 |
| NVIDIA AI Enterprise Infra 1.x | R470 | 470.256.02 | Long-Term Support | 1.9 | July 2024 | September 2024 |
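Each Infra release line maps to exactly one driver branch. That means the major component of an installed driver version is enough to identify which line a node belongs to. The following minimal sketch maps a driver version string to its Infra release line using the rows of the two Infra tables above. An example of such a string is the value printed by `nvidia-smi --query-gpu=driver_version --format=csv,noheader`. The sketch also reports whether the installed driver trails the latest driver listed for that branch. `InfraBranch`, `INFRA_BY_DRIVER_BRANCH`, and `classify_driver` are hypothetical names used only for illustration. The data is copied from this page and will go stale as new releases ship.

```python
from typing import NamedTuple


class InfraBranch(NamedTuple):
    infra: str          # NVIDIA AI Enterprise Infra release line
    latest_driver: str  # latest driver listed for the branch on this page
    branch_eol: str     # branch EOL as listed (month and year)


# Rows copied from the Infra release branch tables, keyed by driver branch.
INFRA_BY_DRIVER_BRANCH = {
    "R570": InfraBranch("6.x", "570.158.01", "February 2026"),
    "R550": InfraBranch("5.x", "550.144.02", "April 2025"),
    "R535": InfraBranch("4.x", "535.247.01", "September 2027"),
    "R525": InfraBranch("3.x", "525.147.05", "December 2023"),
    "R520": InfraBranch("2.x", "520.61.05", "November 2022"),
    "R470": InfraBranch("1.x", "470.256.02", "September 2024"),
}


def parse_version(version: str) -> tuple[int, ...]:
    """Split a dotted driver version such as "570.158.01" into integers for comparison."""
    return tuple(int(part) for part in version.strip().split("."))


def classify_driver(version: str) -> tuple[InfraBranch, bool]:
    """Return the Infra branch for a driver version and whether a newer listed driver exists."""
    parsed = parse_version(version)
    branch_key = f"R{parsed[0]}"
    if branch_key not in INFRA_BY_DRIVER_BRANCH:
        raise KeyError(f"driver branch {branch_key} is not listed on this page")
    info = INFRA_BY_DRIVER_BRANCH[branch_key]
    # Integer tuples compare component by component, so "535.230.02" < "535.247.01".
    outdated = parsed < parse_version(info.latest_driver)
    return info, outdated


if __name__ == "__main__":
    info, outdated = classify_driver("570.158.01")
    print(info.infra, info.branch_eol, "update available" if outdated else "up to date")
```

Comparing parsed integer tuples instead of raw strings avoids lexicographic mistakes, such as treating "535.99" as newer than "535.247".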

NVIDIA AI Enterprise Infra Release Documentation

NVIDIA AI Enterprise Infra 6.x: Version 6.4 is the latest release.

NVIDIA AI Enterprise Infra 5.x: Version 5.3 is the latest release.

NVIDIA AI Enterprise Infra 4.x: Version 4.6 is the latest release.