DPL Runtime Service

This page explains how to deploy, configure, and operate the DPL Runtime Service on NVIDIA® BlueField® DPUs, enabling runtime management of data plane pipelines compiled using the DOCA Pipeline Language (DPL).

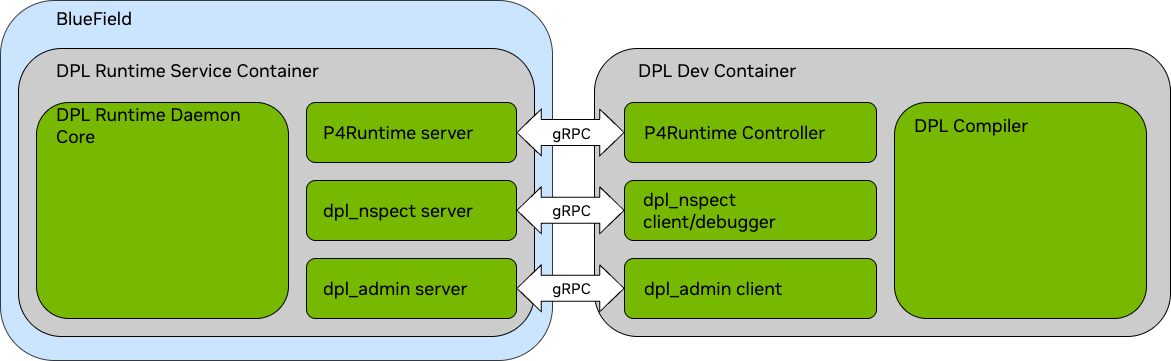

The DPL Runtime Service is responsible for programming and managing the DPU datapath at runtime. It enables dynamic rule insertion, packet monitoring, and forwarding logic through standardized and proprietary APIs. The service is divided into four core components:

| Component | Description |
|---|---|
| Runtime core | Main manager that handles requests from all interfaces and programs hardware resources |
| P4Runtime server | gRPC-based server implementing the P4Runtime 1.3.0 API for remote pipeline configuration and table control |
| Nspect server | Provides runtime visibility and debugging through the dpl_nspect client |
| Admin server | Offers administrative and configuration control over the daemon via gRPC |

High-level system illustration:

Supported Platforms

The following NVIDIA® BlueField® DPUs and SuperNICs are supported with DPL:

| NVIDIA SKU | Legacy OPN | PSID | Description |
|---|---|---|---|
| 900-9D3B6-00CV-AA0 | N/A | MT_0000000884 | BlueField-3 B3220 P-Series FHHL DPU; 200GbE (default mode) / NDR200 IB; Dual-port QSFP112; PCIe Gen5.0 x16 with x16 PCIe extension option; 16 Arm cores; 32GB on-board DDR; integrated BMC; Crypto Enabled |
| 900-9D3B6-00SV-AA0 | N/A | MT_0000000965 | BlueField-3 B3220 P-Series FHHL DPU; 200GbE (default mode) / NDR200 IB; Dual-port QSFP112; PCIe Gen5.0 x16 with x16 PCIe extension option; 16 Arm cores; 32GB on-board DDR; integrated BMC; Crypto Disabled |
| 900-9D3B6-00CC-AA0 | N/A | MT_0000001024 | BlueField-3 B3210 P-Series FHHL DPU; 100GbE (default mode) / HDR100 IB; Dual-port QSFP112; PCIe Gen5.0 x16 with x16 PCIe extension option; 16 Arm cores; 32GB on-board DDR; integrated BMC; Crypto Enabled |
| 900-9D3B6-00SC-AA0 | N/A | MT_0000001025 | BlueField-3 B3210 P-Series FHHL DPU; 100GbE (default mode) / HDR100 IB; Dual-port QSFP112; PCIe Gen5.0 x16 with x16 PCIe extension option; 16 Arm cores; 32GB on-board DDR; integrated BMC; Crypto Disabled |
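To confirm that a board matches one of the SKUs above, its PSID can be compared with the table. The following is a minimal sketch, assuming the PSID is read from `flint -d <pci> q` output, which contains a `PSID:` line; the helper names `extract_psid` and `is_supported_psid` are hypothetical, and the parsing may need adjusting for your MFT version.

```bash
#!/usr/bin/env bash
# Sketch: check a BlueField PSID against the supported-platforms table.
# Assumption: the input is `flint -d <pci> q` output containing a line such as
# "PSID:                  MT_0000000884".

# PSIDs copied from the supported-platforms table.
SUPPORTED_PSIDS=(MT_0000000884 MT_0000000965 MT_0000001024 MT_0000001025)

# Read flint query output on stdin and print the PSID value.
extract_psid() {
    awk '$1 == "PSID:" { print $2; exit }'
}

# Return 0 if the PSID given as $1 appears in SUPPORTED_PSIDS.
is_supported_psid() {
    local psid="$1" p
    for p in "${SUPPORTED_PSIDS[@]}"; do
        if [[ "$p" == "$psid" ]]; then
            return 0
        fi
    done
    return 1
}
```

Example: `is_supported_psid "$(flint -d 0000:03:00.0 q | extract_psid)" && echo supported`, where the PCI address is only an example.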

The following NVIDIA DPUs are supported with DOCA on the host:

For system requirements, see the DPU's hardware user guide.

| Component | Minimum Version |
|---|---|
| Ubuntu OS | 22.04 |
| DOCA BFB Bundle | 2.10.0-xx |
| Firmware | 32.44.1000+ |
| DOCA Networking | 2.10.0+ |
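The Ubuntu and firmware minimums above can be checked with a version comparison. This sketch assumes GNU `sort -V` for ordering dot-separated versions, reads the Ubuntu release from an os-release file, and expects the firmware version as an argument (for example, the `FW Version:` value from `flint -d <pci> q`); `check_min_versions` and the other helper names are hypothetical. The DOCA BFB and DOCA Networking versions are not checked here.

```bash
#!/usr/bin/env bash
# Sketch: compare detected versions with the minimums in the table above.
# Assumptions: GNU coreutils `sort -V` is available; the firmware version
# string is supplied by the caller.

MIN_UBUNTU="22.04"
MIN_FIRMWARE="32.44.1000"

# Return 0 if version $1 >= version $2 (ordering taken from sort -V).
version_at_least() {
    local have="$1" need="$2"
    [[ "$(printf '%s\n%s\n' "$need" "$have" | sort -V | head -n1)" == "$need" ]]
}

# Print VERSION_ID from an os-release file (default /etc/os-release),
# stripping the optional double quotes around the value.
os_version_id() {
    local file="${1:-/etc/os-release}"
    awk -F'=' '$1 == "VERSION_ID" { gsub(/"/, "", $2); print $2; exit }' "$file"
}

# check_min_versions <os-release file> <firmware version>
# Prints one line per check and returns 1 if any check fails.
check_min_versions() {
    local os_release="$1" fw_version="$2" status=0 ubuntu
    ubuntu="$(os_version_id "$os_release")"
    if version_at_least "$ubuntu" "$MIN_UBUNTU"; then
        echo "Ubuntu $ubuntu: OK"
    else
        echo "Ubuntu $ubuntu: below minimum $MIN_UBUNTU"
        status=1
    fi
    if version_at_least "$fw_version" "$MIN_FIRMWARE"; then
        echo "Firmware $fw_version: OK"
    else
        echo "Firmware $fw_version: below minimum $MIN_FIRMWARE"
        status=1
    fi
    return "$status"
}
```

Example: `check_min_versions /etc/os-release 32.44.1000`.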

Firmware is included in the BFB bundle available via the NVIDIA DevZone. Use bfb-install to flash:

bfb-install --bfb bf-fwbundle-2.10.0-28_25.0-ubuntu-22.04-prod.bfb --rshim rshim0

Helpful resources:

Configure the following options via mlxconfig:

FLEX_PARSER_PROFILE_ENABLE=4

PROG_PARSE_GRAPH=true

SRIOV_EN=1 # Only if VFs are used

For details on how to query and adjust these settings, refer to Using mlxconfig.

Some changes require a firmware reset to take effect. Refer to the mlxfwreset documentation for details.
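The settings above can be applied in a single `mlxconfig` call. This is a minimal sketch, assuming MFT is installed and `<pci>` is a device that `mlxconfig -d` accepts (such as a PCI address); `configure_dpl_mlxconfig` and the `DRY_RUN` switch are hypothetical conveniences, and `-y` skips mlxconfig's interactive confirmation.

```bash
#!/usr/bin/env bash
# Sketch: apply the DPL-related mlxconfig settings listed above.
# Set DRY_RUN=1 to print the commands instead of running them.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [[ "${DRY_RUN:-0}" == "1" ]]; then
        echo "$*"
    else
        "$@"
    fi
}

# configure_dpl_mlxconfig <pci> [use_vfs: 1 to also set SRIOV_EN=1]
configure_dpl_mlxconfig() {
    local pci="$1" use_vfs="${2:-0}"
    local settings=(FLEX_PARSER_PROFILE_ENABLE=4 PROG_PARSE_GRAPH=true)
    # SRIOV_EN is only needed when VFs are used.
    if [[ "$use_vfs" == "1" ]]; then
        settings+=(SRIOV_EN=1)
    fi
    # "s" is mlxconfig's set command; -y answers the confirmation prompt.
    run mlxconfig -d "$pci" -y s "${settings[@]}" || return 1
    # Query the parameters back to confirm they were recorded.
    run mlxconfig -d "$pci" q FLEX_PARSER_PROFILE_ENABLE PROG_PARSE_GRAPH SRIOV_EN
}
```

The new values take effect only after a firmware reset, for example with the `mlxfwreset` command shown in the Multiport E-Switch Mode section.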

Prerequisites:

BlueField must be in DPU Mode.

SR-IOV VFs or SFs must be enabled and created on the host side.

DOCA must be installed on both the host and the BlueField.
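On the host, SR-IOV VFs can be checked and created through the standard Linux sysfs `sriov_numvfs` attribute. This is a minimal sketch, assuming it runs as root on the host and that `<pf>` is the PF's netdev name; `ensure_vfs` is hypothetical, and the `SYSFS_ROOT` override exists only so the function can be tested against a fake sysfs tree. SFs are created differently and are not covered.

```bash
#!/usr/bin/env bash
# Sketch: make sure a host PF has the requested number of SR-IOV VFs.

SYSFS_ROOT="${SYSFS_ROOT:-/sys}"

# ensure_vfs <pf-netdev> <vf-count>
ensure_vfs() {
    local pf="$1" want="$2"
    local numvfs="$SYSFS_ROOT/class/net/$pf/device/sriov_numvfs"
    local have
    have="$(cat "$numvfs")" || return 1
    if [[ "$have" == "$want" ]]; then
        echo "$pf: $have VFs already configured"
        return 0
    fi
    # The kernel refuses to change a non-zero VF count directly,
    # so drop to 0 first.
    if [[ "$have" != "0" ]]; then
        echo 0 > "$numvfs" || return 1
    fi
    echo "$want" > "$numvfs" || return 1
    echo "$pf: created $want VFs"
}
```

Example: `ensure_vfs <pf> 4` before the DPL container is deployed.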

Installation:

Download and extract the container bundle from NGC.

Refer to DPL Container Deployment for more information.

Run the setup script:

cd dpl_rt_service_<version>/scripts
./dpl_dpu_setup.sh

This script:

Configures the necessary mlxconfig settings.

Creates the directory structure for the configuration files.

Enables SR-IOV virtual function support.

Configuration Files

Before launching the container, configuration files must be created on the DPU filesystem. These define:

Interface names and PCI IDs.

Allowed ports and forwarding configuration.

Resource constraints and debug options.

Mount the config path into the container using Docker or Kubernetes volume mounts. Refer to DPL Service Configuration for details.
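For a Docker-based launch, the configuration directory is bind-mounted into the container. The sketch below is illustrative only: the configuration directory, the in-container mount point (here the same path as on the host), the image reference, and the use of `--network host` are assumptions rather than values from the DPL documentation, and a real deployment may need further options; see DPL Container Deployment and DPL Service Configuration for the supported setup. `run_dpl_container` and `DRY_RUN` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: start a container with a config directory mounted read-only.
# Hypothetical: config path, mount point, image reference, host networking.
# Set DRY_RUN=1 to print the command instead of running it.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [[ "${DRY_RUN:-0}" == "1" ]]; then
        echo "$*"
    else
        "$@"
    fi
}

# run_dpl_container <config-dir> <image>
run_dpl_container() {
    local config_dir="$1" image="$2"
    # Fail early if the configuration files were not created yet.
    if [[ ! -d "$config_dir" ]]; then
        echo "config directory not found: $config_dir" >&2
        return 1
    fi
    # Mount the directory read-only at the same path inside the container.
    run docker run --rm --network host \
        -v "$config_dir:$config_dir:ro" \
        "$image"
}
```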

The following table lists, for each ingress port, the egress ports to which a packet processed by a BlueField pipeline (DPU mode) can be forwarded:

| Ingress Port (rows) / Egress Port (columns) | Wire port P0 | | | |
|---|---|---|---|---|
| Wire port P0 | Allowed | Allowed | Allowed | Allowed |
| | Allowed | Disabled | Allowed | Allowed |
| | Allowed | Allowed | Disabled | Allowed |
| | Allowed | Allowed | Allowed | Disabled |

Anything that is allowed with SR-IOV VFs in the table above is also allowed with scalable functions (SFs).

Multiport E-Switch Mode

To enable port forwarding across both physical ports (P0 and P1):

mlxconfig -d <pci> s LAG_RESOURCE_ALLOCATION=1

mlxfwreset -d <pci> -y -l 3 --sync 1 r

devlink dev param set <pci> name esw_multiport value 1 cmode runtime

devlink settings are not persistent across reboots; run the devlink command again after each reboot.
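The three steps can be combined into one function. This is a minimal sketch, assuming `<bdf>` is the PF's full PCI address (for example, `0000:03:00.0`), which mlxconfig and mlxfwreset accept directly and devlink expects as `pci/<bdf>`; `enable_multiport_eswitch` and `DRY_RUN` are hypothetical, and `-y` is added to mlxconfig to skip its confirmation prompt.

```bash
#!/usr/bin/env bash
# Sketch: Multiport E-Switch setup using the commands from this section.
# Set DRY_RUN=1 to print the commands instead of running them.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [[ "${DRY_RUN:-0}" == "1" ]]; then
        echo "$*"
    else
        "$@"
    fi
}

# enable_multiport_eswitch <bdf> [devlink_only: 1 to skip the firmware steps]
enable_multiport_eswitch() {
    local bdf="$1" devlink_only="${2:-0}"
    if [[ "$devlink_only" != "1" ]]; then
        # Persistent firmware setting, activated by the reset that follows.
        run mlxconfig -d "$bdf" -y s LAG_RESOURCE_ALLOCATION=1 || return 1
        run mlxfwreset -d "$bdf" -y -l 3 --sync 1 r || return 1
    fi
    # Runtime-only setting: it is lost on reboot.
    run devlink dev param set "pci/$bdf" name esw_multiport value 1 cmode runtime || return 1
    # Show the current value to confirm the change.
    run devlink dev param show "pci/$bdf" name esw_multiport
}
```

After a reboot, `enable_multiport_eswitch 0000:03:00.0 1` repeats only the non-persistent devlink step.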

For more information, refer to the DPL Installation Guide.

Advanced use cases may require a custom controller. A custom controller acts as a P4Runtime 1.3.0 gRPC client and connects to the DPL Runtime Server on TCP port 9559.
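Before pointing a controller at the server, TCP reachability of the P4Runtime port can be checked. This is a minimal sketch, assuming bash was built with `/dev/tcp` support and coreutils `timeout` is available; `check_p4runtime_port` is hypothetical, and a successful connection only shows that something is listening on the port, not that the P4Runtime service responds correctly.

```bash
#!/usr/bin/env bash
# Sketch: test whether a host accepts TCP connections on the P4Runtime port.

# check_p4runtime_port <host> [port, default 9559] [timeout seconds, default 3]
check_p4runtime_port() {
    local host="$1" port="${2:-9559}" secs="${3:-3}"
    # Open a TCP connection through bash's /dev/tcp redirection in a child
    # shell; host and port are passed as arguments, not spliced into the script.
    if timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
        echo "$host:$port is accepting connections"
    else
        echo "$host:$port is not reachable" >&2
        return 1
    fi
}
```

Example: `check_p4runtime_port <dpu-address>` checks the default port 9559.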

Resources:

Protobufs: p4lang/p4runtime GitHub

Installed inside the container: /opt/mellanox/third_party/dpl_rt_service/p4runtime/

Compile the protobufs using the standard gRPC + protobuf toolchain (e.g., for C++).
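For a C++ controller, stubs can be generated with `protoc` and the gRPC C++ plugin. This is a minimal sketch, assuming `protoc` and `grpc_cpp_plugin` are installed and that the proto root (passed as the first argument) contains the p4lang/p4runtime `p4/v1` and `p4/config/v1` trees plus `google/rpc/status.proto` from googleapis; whether the copy installed in the container uses this layout is not confirmed here. `generate_p4runtime_cpp`, `GRPC_CPP_PLUGIN`, and `DRY_RUN` are hypothetical, and the file list should be adjusted to match the imports of the P4Runtime release in use.

```bash
#!/usr/bin/env bash
# Sketch: generate C++ protobuf and gRPC sources for P4Runtime.
# protoc must also resolve google/protobuf/any.proto (normally shipped with protoc).
# Set DRY_RUN=1 to print the commands instead of running them.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [[ "${DRY_RUN:-0}" == "1" ]]; then
        echo "$*"
    else
        "$@"
    fi
}

# generate_p4runtime_cpp <proto-root> <output-dir>
generate_p4runtime_cpp() {
    local proto_root="$1" out_dir="$2"
    # Use GRPC_CPP_PLUGIN if set, otherwise look the plugin up on PATH.
    local plugin="${GRPC_CPP_PLUGIN:-$(command -v grpc_cpp_plugin)}"
    if [[ -z "$plugin" ]]; then
        echo "grpc_cpp_plugin not found; set GRPC_CPP_PLUGIN" >&2
        return 1
    fi
    run mkdir -p "$out_dir" || return 1
    # --cpp_out emits *.pb.{h,cc}; --grpc_out emits *.grpc.pb.{h,cc}.
    run protoc -I "$proto_root" \
        --cpp_out="$out_dir" \
        --grpc_out="$out_dir" \
        --plugin=protoc-gen-grpc="$plugin" \
        p4/v1/p4runtime.proto p4/v1/p4data.proto \
        p4/config/v1/p4info.proto p4/config/v1/p4types.proto \
        google/rpc/status.proto
}
```

The generated sources are then compiled and linked against the protobuf and gRPC C++ libraries together with the controller code.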

| Checkpoint | Command |
|---|---|
| View kubelet logs | |
| List pulled images | |
| List created pods | |
| List running containers | |
| View DPL logs | |
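The checkpoints in the table above can be gathered in one pass. This sketch assumes a kubelet-based deployment in which containers are inspected with `crictl` and the DPL container name matches `dpl`; both the tooling and the name filter are assumptions, not commands taken from this page. `collect_dpl_diagnostics` and `DRY_RUN` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print kubelet logs, images, pods, containers, and DPL container logs.
# Set DRY_RUN=1 to print the commands instead of running them.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [[ "${DRY_RUN:-0}" == "1" ]]; then
        echo "$*"
    else
        "$@"
    fi
}

# collect_dpl_diagnostics [container-name-regex, default "dpl"]
collect_dpl_diagnostics() {
    local name_filter="${1:-dpl}" id
    echo "== kubelet logs (last 5 minutes) =="
    run journalctl -u kubelet --since -5m --no-pager
    echo "== pulled images =="
    run crictl images
    echo "== pods =="
    run crictl pods
    echo "== containers (including exited) =="
    run crictl ps -a
    echo "== DPL container logs =="
    if [[ "${DRY_RUN:-0}" == "1" ]]; then
        echo "crictl logs \$(crictl ps -a --name $name_filter -q | head -n1)"
        return 0
    fi
    # Take the first container whose name matches the filter.
    id="$(crictl ps -a --name "$name_filter" -q | head -n1)"
    if [[ -z "$id" ]]; then
        echo "no container matching '$name_filter' found" >&2
        return 1
    fi
    crictl logs "$id"
}
```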

Also verify:

VFs were created before the container was deployed.

Configuration files exist, are correctly named, and contain valid device IDs.

Interface names and MTUs match the hardware.

Firmware is up to date.

The DPU is in the correct mode (check with mlxconfig).
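Two of these checks can be scripted. This is a minimal sketch, assuming that on BlueField-3 DPU mode is reported as `INTERNAL_CPU_OFFLOAD_ENGINE` with value `ENABLED(0)` in the output of `mlxconfig -d <pci> q INTERNAL_CPU_OFFLOAD_ENGINE`, and that MTUs are read from sysfs; `is_dpu_mode`, `check_mtu`, and the `SYSFS_ROOT` override are hypothetical, and the expected MTU must come from your own configuration.

```bash
#!/usr/bin/env bash
# Sketch: verify DPU mode from mlxconfig output and an interface MTU from sysfs.

SYSFS_ROOT="${SYSFS_ROOT:-/sys}"

# Read mlxconfig query output on stdin; return 0 if it reports DPU mode.
is_dpu_mode() {
    local value
    value="$(awk '$1 == "INTERNAL_CPU_OFFLOAD_ENGINE" { print $2; exit }')"
    [[ "$value" == "ENABLED(0)" ]]
}

# check_mtu <ifname> <expected-mtu>
check_mtu() {
    local ifname="$1" expected="$2" actual
    actual="$(cat "$SYSFS_ROOT/class/net/$ifname/mtu")" || return 1
    if [[ "$actual" != "$expected" ]]; then
        echo "$ifname: MTU $actual, expected $expected" >&2
        return 1
    fi
    echo "$ifname: MTU $actual OK"
}
```

Example: `mlxconfig -d 0000:03:00.0 q INTERNAL_CPU_OFFLOAD_ENGINE | is_dpu_mode && echo "DPU mode"`.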