Running Microbenchmark

Connect to the System Console using the left-hand menu link.

Connect to the sparkrunner pod.

kubectl exec --stdin --tty sparkrunner-0 -- /bin/bash

Change to /home/spark/spark-scripts and execute either /home/spark/spark-scripts/lp-runjupyter-etl-gpu.sh or /home/spark/spark-scripts/lp-runjupyter-etl-cpu.sh in the System Console.

In the left menu, open the Desktop link and click the VNC connect button.
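The choice between the GPU and CPU launch scripts can be wrapped in a small helper so the same command line serves both runs. This is only a sketch: the function name etl_script_path is hypothetical, and the directory and script names are the ones given in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the lab scripts): map a mode, "gpu" or
# "cpu", to the path of the launch script named in this guide.
etl_script_path() {
  local mode="$1"
  local dir="/home/spark/spark-scripts"
  case "$mode" in
    gpu|cpu)
      printf '%s/lp-runjupyter-etl-%s.sh\n' "$dir" "$mode"
      ;;
    *)
      echo "usage: etl_script_path gpu|cpu" >&2
      return 1
      ;;
  esac
}
```

Inside the sparkrunner pod, this could be used as bash "$(etl_script_path gpu)" for the first run and bash "$(etl_script_path cpu)" for the comparison run.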

Open the web browser in the Linux desktop.

Browse to 172.16.0.10:30002.

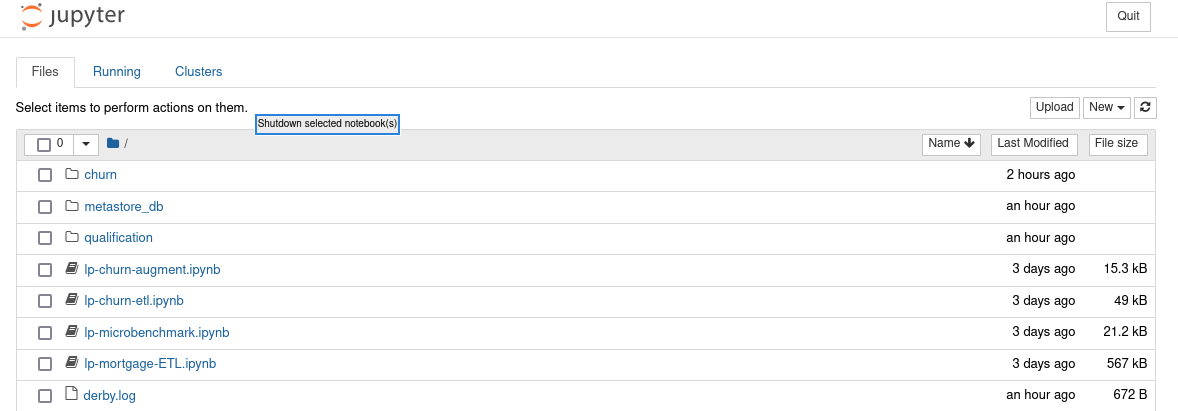

You should see the list above.

Start the Spark History server.

In the Jupyter notebook browser, open the New drop-down at the top right and select Terminal.

In the newly opened tab, start the Spark History server with the following command:

bash /opt/spark/sbin/start-history-server.sh

The Spark History server can be accessed by browsing to 172.16.0.10:30000.
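Before switching to the browser, it can help to confirm from a terminal that the History server is answering. The following is a minimal sketch, assuming curl is installed on the machine you run it from. The function name wait_for_http and its default retry values are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it answers or the attempts run out.
# Arguments: URL, number of attempts (default 10), seconds between attempts
# (default 3).
wait_for_http() {
  local url="$1"
  local attempts="${2:-10}"
  local delay="${3:-3}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # --fail makes curl return non-zero on HTTP error responses (4xx/5xx).
    if curl --silent --fail --max-time 5 --output /dev/null "$url"; then
      echo "reachable: $url"
      return 0
    fi
    # Pause between attempts, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "not reachable after $attempts attempts: $url" >&2
  return 1
}
```

For the address used in this guide, the call would be wait_for_http http://172.16.0.10:30000.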

Click the

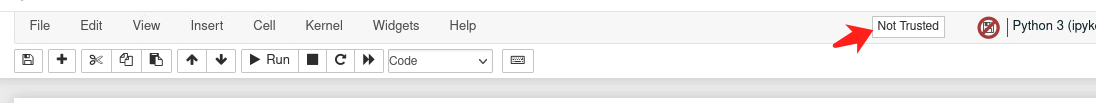

lp-microbenchmark.ipynblink and this should start the Jupyter notebook. Note

NotePlease “trust” the notebook before you run it.

Open another System Console and validate the creation of the microbenchmark pods with the following command:

kubectl get pods | grep app-name

The output should look similar to this.

app-name-79d837808b2d2ba5-exec-1   1/1   Running   0   31m
app-name-79d837808b2d2ba5-exec-2   1/1   Running   0   31m
app-name-79d837808b2d2ba5-exec-3   1/1   Running   0   31m

If your pods are in Pending status, the previous pods did not close properly. You can remove those pods with the following command:

kubectl delete pod app-name-XXXX
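When several pods are stuck, picking their names out of the listing by hand gets tedious. The sketch below filters the pod listing for names that start with a given prefix and whose STATUS column reads Pending. It assumes the default kubectl get pods column order (NAME, READY, STATUS, RESTARTS, AGE). The function name pending_pods is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin
# and print the names of pods whose name starts with PREFIX (first argument)
# and whose STATUS column (third field) is Pending.
pending_pods() {
  awk -v prefix="$1" 'index($1, prefix) == 1 && $3 == "Pending" { print $1 }'
}
```

A possible use is kubectl get pods --no-headers | pending_pods app-name, which prints one pod name per line. Review that list first, then pass each name to kubectl delete pod.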

Run the notebook by clicking Cell -> Run All.

Optional: If you want to try a different dataset, there are a few options available.

The previous example notebook uses the pre-staged scale factor 1000 (1 TB) datasets, which are stored in an AWS S3 bucket with read-only permission.

If you want to use another dataset in your own Google Cloud Storage, you can create a Kubernetes secret based on the key of your GCP service account.

kubectl create secret generic gcp-key --from-file=key.json=./your-gcp-key.json

Then replace the AWS S3 configs with the GCP configs:

--conf spark.kubernetes.driver.secrets.gcp-key=/home/spark/ \
--conf spark.kubernetes.executor.secrets.gcp-key=/home/spark/ \
--conf spark.hadoop.fs.AbstractFileSystem.gs.impl=com.google.cloud.hadoop.fs.gcs.GoogleHadoopFS \
--conf spark.hadoop.google.cloud.auth.service.account.json.keyfile=/your-path/your-gcp-key.json \
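Kubernetes exposes each key of a mounted secret as a file inside the mount directory. With a secret created using --from-file=key.json=..., the key file should therefore appear as key.json under whatever path is given to the two secrets.gcp-key settings. The sketch below prints the four settings with a keyfile path derived from the mount directory. The function name gcp_spark_confs is hypothetical, and the mount directory is yours to choose.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the GCS-related --conf arguments for a given
# secret mount directory. The file name key.json is the key used in the
# --from-file=key.json=... argument when the secret was created.
gcp_spark_confs() {
  local mount_dir="${1%/}"   # strip one trailing slash, if present
  printf '%s\n' \
    "--conf spark.kubernetes.driver.secrets.gcp-key=${mount_dir}" \
    "--conf spark.kubernetes.executor.secrets.gcp-key=${mount_dir}" \
    "--conf spark.hadoop.fs.AbstractFileSystem.gs.impl=com.google.cloud.hadoop.fs.gcs.GoogleHadoopFS" \
    "--conf spark.hadoop.google.cloud.auth.service.account.json.keyfile=${mount_dir}/key.json"
}
```

Calling gcp_spark_confs /home/spark/ prints one setting per line, ending with a keyfile path of /home/spark/key.json. These lines can then be pasted into the notebook's Spark launch options in place of the S3 settings.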

Note the timings for the two benchmarks so you can compare to your CPU run times.

Stop the notebook you started in step 1 by pressing Ctrl-C in the System Console window where you started it. Answer Y when asked if you want to “Shutdown this notebook server?”.

Run the same microbenchmark using only CPUs.

Execute the lp-runjupyter-etl-cpu.sh script.

Compare the differences between the two outputs.
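One simple way to summarize the comparison is the ratio of CPU time to GPU time for each benchmark. The sketch below does that arithmetic. The function name speedup is hypothetical, and the numbers in the usage note are placeholders rather than measured results.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print how many times faster the GPU run was.
# Arguments: CPU run time in seconds, GPU run time in seconds.
speedup() {
  awk -v cpu="$1" -v gpu="$2" 'BEGIN {
    if (gpu <= 0) {
      print "GPU time must be positive" > "/dev/stderr"
      exit 1
    }
    # Speedup = CPU time divided by GPU time, printed to two decimals.
    printf "%.2fx\n", cpu / gpu
  }'
}
```

For example, with placeholder timings of 120 seconds on CPU and 30 seconds on GPU, speedup 120 30 prints 4.00x. Substitute the timings you noted from your own two runs.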

You must close the notebook tab and then shut down the notebook before starting another session. Otherwise, you will not be able to start another Spark session.