Running Qualification & Profiling Tool Benchmark

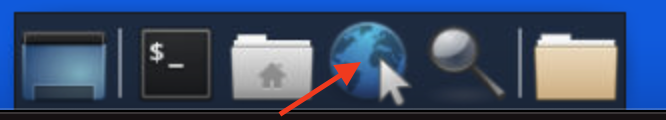

Connect to the System Console using the left-hand menu link.

Connect to the sparkrunner pod.

kubectl exec --stdin --tty sparkrunner-0 -- /bin/bash

If you have completed any of the previous labs, there should be Spark logs in /data/eventlogs. We will use some or all of those logs to run the Qualification & Profiling Tool Benchmark. Confirm that the Spark logs exist with the following commands:

cd /home/spark
ls /data/eventlogs

You should see an output that looks something like this:

From the list above, select one or more files to use with the following command. You can process specific files by naming them on the command line, or process all files by using a wildcard:

bash /home/spark/spark-scripts/lp-run-qua-pro.sh qualification /data/eventlogs/*
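If you only want to process a subset of the logs, one option is to build the file list with a small helper instead of typing each path. The sketch below is not part of the lab scripts: `select_logs` is a hypothetical function, and the `'app-*'` pattern is only an assumed naming scheme, so adjust it to match the names you saw in the listing.

```shell
# Hypothetical helper (not part of the lab scripts): print the entries in a
# log directory whose names match a shell glob, one per line, sorted.
select_logs() {
  # $1 = event log directory, $2 = quoted glob pattern, e.g. 'app-*'
  find "$1" -mindepth 1 -maxdepth 1 -name "$2" | sort
}

# Possible use inside the sparkrunner pod. The pattern is an example only,
# and this form assumes the log names contain no whitespace:
#   bash /home/spark/spark-scripts/lp-run-qua-pro.sh qualification $(select_logs /data/eventlogs 'app-*')
```

Running `select_logs /data/eventlogs 'app-*'` on its own first is a cheap way to confirm which logs would be passed to the qualification run.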

The output file from the previous command will be located in the /home/spark/qualification directory. There should be a directory for each log that you processed.

The results are in CSV format.
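To get a quick look at the CSV results from the terminal, something like the following sketch may help. `preview_csv` is a hypothetical helper, not a lab script, and it makes no assumption about the CSV file names or columns; it simply finds every `.csv` file under the directory you give it and prints the first few lines of each.

```shell
# Hypothetical helper: list CSV reports under an output directory and show
# the first lines of each one.
preview_csv() {
  # $1 = output directory (in the lab text: /home/spark/qualification)
  # $2 = number of lines to show per file (defaults to 5)
  find "$1" -type f -name '*.csv' | sort | while IFS= read -r f; do
    echo "== $f"
    head -n "${2:-5}" "$f"
  done
}

# Possible use inside the sparkrunner pod:
#   preview_csv /home/spark/qualification
```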

The script also creates a tar file that contains HTML documents. The output file can be found at /data/scps/qualification.tar.gz. You can scp the tarball from the Desktop and view the contents with a browser.

In the left menu, open the Desktop link and click the VNC connect button.

Open a terminal on the desktop and run the following commands:

cd ~/Desktop
scp nvidia@172.16.0.10:/data/scps/qualification.tar.gz .
tar xfz qualification.tar.gz
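If the copy or extraction does not behave as expected, it can help to confirm that the tarball arrived intact. The following is a minimal sketch; `check_tarball` is a hypothetical helper, and it relies only on the standard `tar tzf` listing mode.

```shell
# Hypothetical helper: confirm that a .tar.gz file exists and can be listed.
check_tarball() {
  # $1 = path to the tarball (in the lab text: ~/Desktop/qualification.tar.gz)
  if [ ! -f "$1" ]; then
    echo "missing: $1" >&2
    return 1
  fi
  if ! tar tzf "$1" > /dev/null; then
    echo "not a readable tar.gz: $1" >&2
    return 1
  fi
  echo "ok: $1"
}

# Possible use on the Linux desktop:
#   check_tarball ~/Desktop/qualification.tar.gz
```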

Open the web browser in the Linux desktop.

Go to the following URL: file:///home/nvidia/Desktop/qualification and select one of the application IDs listed. Inside the /ui/html directory, open index.html.

From the web UI you can find basic application information such as App Name, App Id, and App Duration. The Recommendation column classifies each application as “Strongly Recommended”, “Recommended”, “Not Recommended”, or “Not Applicable”; the last category indicates that the app has job or stage failures. The Estimated Speed-up column estimates how much faster the application would run on GPU. The speed-up factor is the original CPU duration of the app divided by the estimated GPU duration.
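As a worked example of the speed-up arithmetic, the sketch below divides a CPU duration by an estimated GPU duration. The `speedup` function and the input values are illustrative only and are not taken from real tool output; both durations just need to be in the same unit.

```shell
# Speed-up factor = CPU duration / estimated GPU duration (same unit for both).
speedup() {
  # $1 = original CPU duration, $2 = estimated GPU duration
  awk -v cpu="$1" -v gpu="$2" 'BEGIN {
    if (gpu <= 0) {
      print "estimated GPU duration must be positive" > "/dev/stderr"
      exit 1
    }
    printf "%.2f\n", cpu / gpu
  }'
}

# Illustrative values: 600000 / 200000
speedup 600000 200000   # prints 3.00
```

With these made-up numbers, the application is estimated to run three times faster on GPU.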

To run the Profiling Tool, go back to the sparkrunner window and run the following command. You can process specific files by naming them on the command line, or process all files by using a wildcard:

bash /home/spark/lp-run-qua-pro.sh profiling /data/eventlogs/*

The output from the previous command will be located in the /home/spark/profiling directory. There should be a directory for each log that you processed. Unlike the Qualification Tool, there is no option to view these output files in a browser.
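Because the profiling output has to be inspected from the terminal, a short summary of what was written can be a useful starting point. The sketch below uses a hypothetical helper, `summarize_output`, which lists each subdirectory of the output directory along with the number of files it contains. It assumes nothing about the names or formats of the files inside.

```shell
# Hypothetical helper: list each per-log output directory and its file count.
summarize_output() {
  # $1 = tool output directory (in the lab text: /home/spark/profiling)
  find "$1" -mindepth 1 -maxdepth 1 -type d | sort | while IFS= read -r d; do
    count=$(find "$d" -type f | wc -l | tr -d ' ')
    echo "$d: $count file(s)"
  done
}

# Possible use inside the sparkrunner pod:
#   summarize_output /home/spark/profiling
```

From there, individual files can be opened with standard tools such as `less` or `head`.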