Storage Development Guide

The DPF Storage Subsystem provides a framework for integrating 3rd-party storage plugins with the DPF system. This document outlines the architecture and guidelines for developing a plugin that integrates with the NVIDIA storage emulation service, called DOCA SNAP.

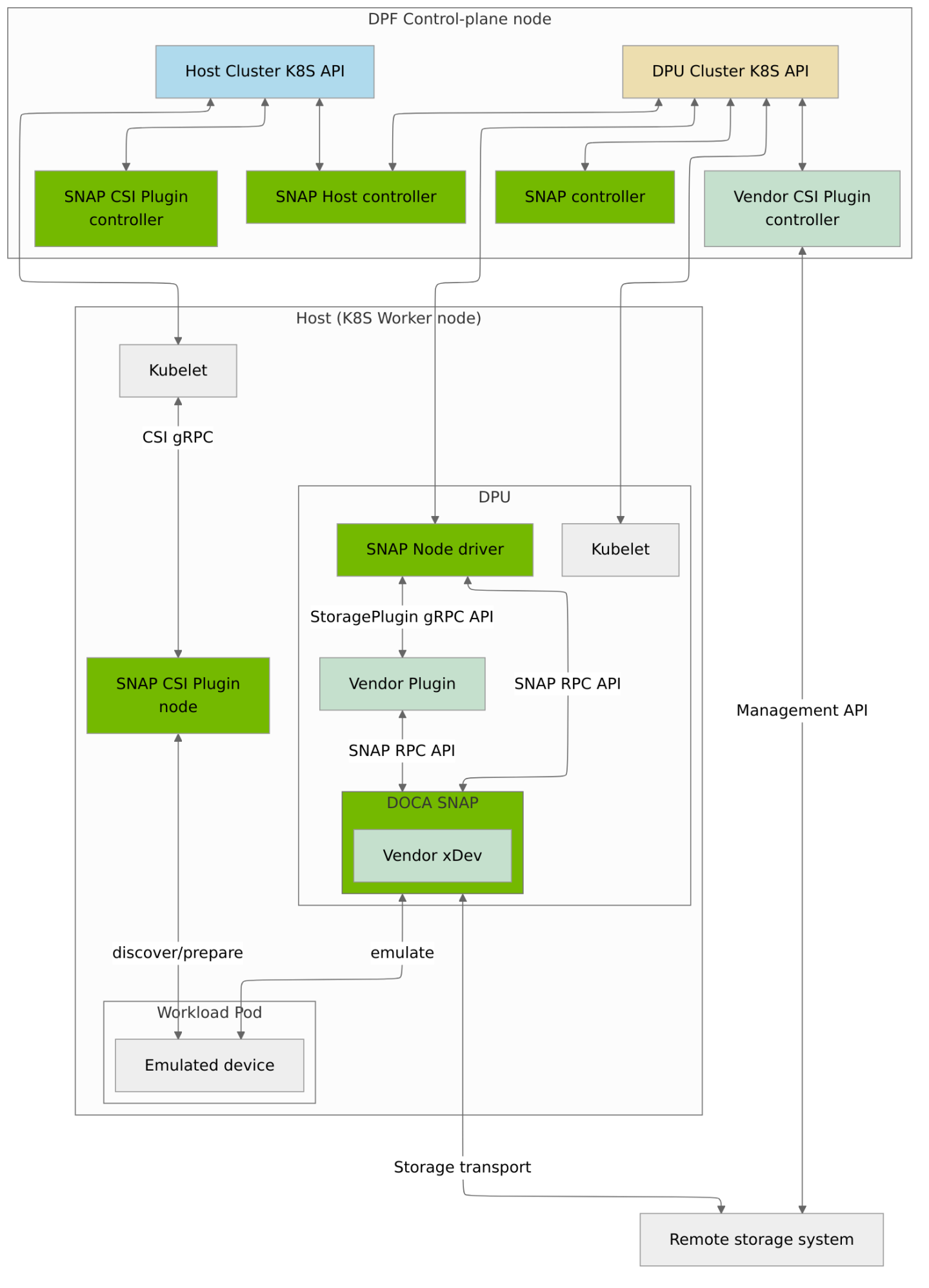

The DPF storage subsystem consists of two types of components: core components provided by NVIDIA and vendor-specific components provided by storage vendors.

Core components are included in the DPF system release and typically require no modifications from end users.

The DPF storage subsystem supports two deployment scenarios:

* Kubernetes cluster on Host Trusted mode
* Zero Trust mode

The set of deployed components, available features, and APIs differs between the two scenarios.

Kubernetes cluster on Host Trusted mode

In this scenario, hosts function as worker nodes within the DPF management cluster. Users can utilize Kubernetes Storage APIs (StorageClass, PVC, PV, VolumeAttachment) to provision and attach storage to the host. The hosts run the SNAP CSI Plugin, which performs all necessary actions to make storage resources available to the host.

In this scenario, the following emulation methods are supported:

* NVMe over VF on top of a static PF

The list below contains the components that are deployed in this scenario.

The core components are:

Vendor-specific components are:

Vendor Plugin (optional)

Vendor xDev (optional)

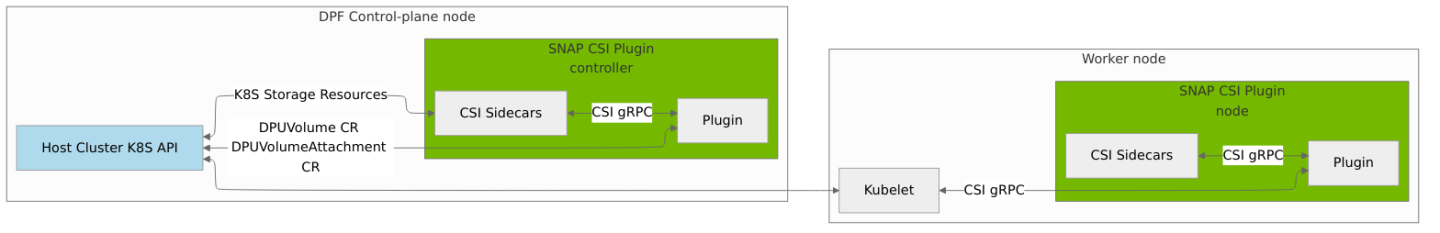

The high-level architecture of the storage subsystem for this scenario is presented in the following diagram.

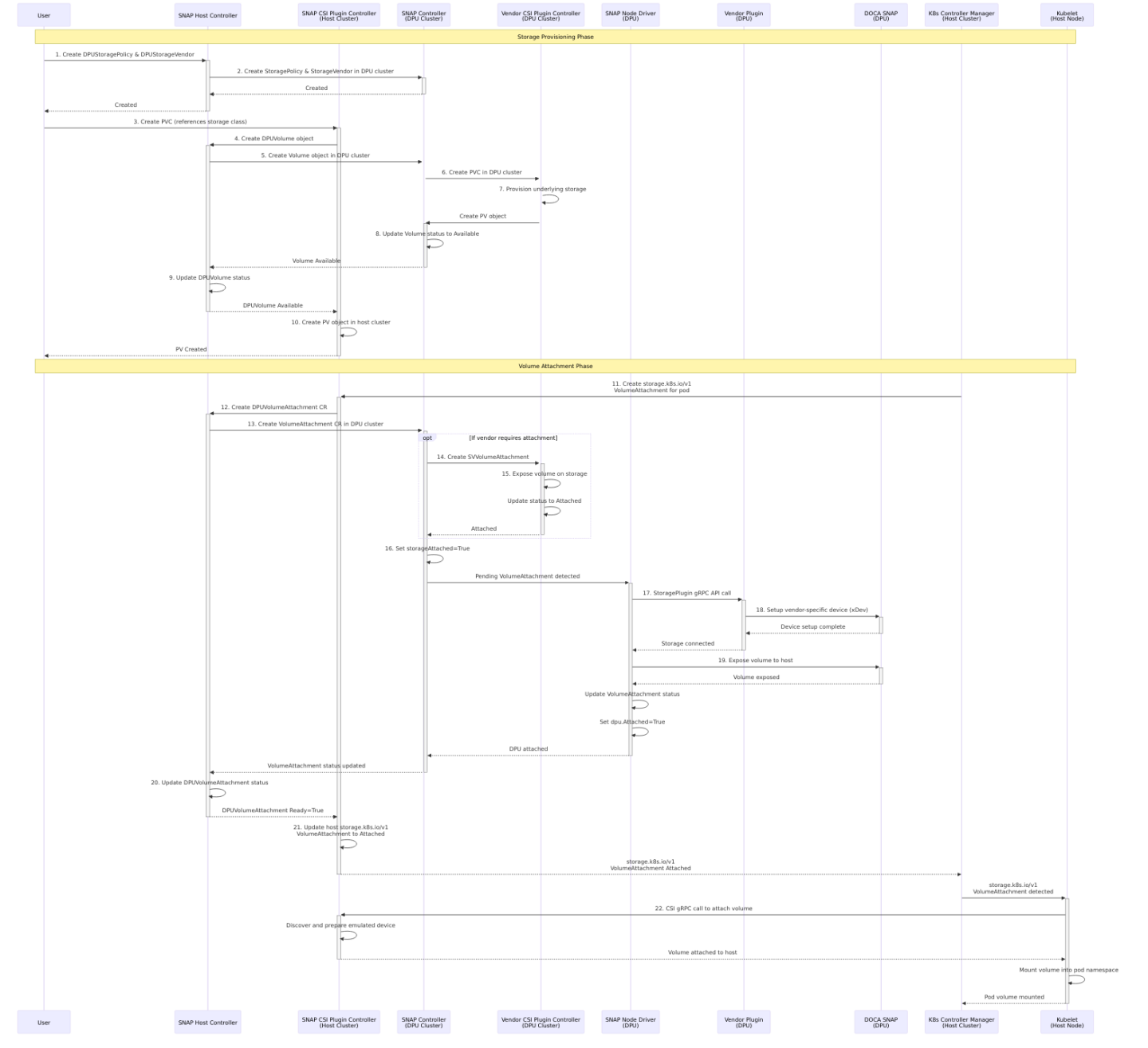

End-to-End Flow for Trusted Kubernetes cluster on host scenario

The following steps outline the end-to-end process for provisioning and attaching storage using the DPF storage subsystem in the Trusted Kubernetes cluster on host scenario:

DPUStoragePolicy and DPUStorageVendor Creation: The user creates a DPUStoragePolicy and DPUStorageVendor object in the host cluster.

StorageVendor and StoragePolicy Creation: The SNAP Host Controller detects the new DPUStoragePolicy and DPUStorageVendor objects in the host cluster and creates the corresponding StoragePolicy and StorageVendor objects in the DPU cluster.

PVC Creation: The user creates a PersistentVolumeClaim (PVC) object in the host cluster. The PVC references a storage class that specifies the SNAP CSI Plugin as its provisioner. The storage class contains parameters that specify the name of a specific DPUStoragePolicy.

DPUVolume Object Creation: The SNAP CSI Plugin Controller in the host cluster handles PVC creation and creates a DPUVolume object in the host cluster. This object includes references to the DPUStoragePolicy and the requested volume parameters from the storage class.

Volume Object Creation in DPU Cluster: The SNAP Host Controller reconciles the DPUVolume object in the host cluster and creates a Volume object in the DPU cluster. The Volume object includes references to the StoragePolicy and the requested volume parameters that are copied from the DPUVolume object.

Storage Vendor Selection: The SNAP Controller detects the new Volume object in the DPU cluster. It selects a StorageVendor that matches the policy specified in the StoragePolicy resource. The controller creates a PVC in the DPU cluster that references the storage class of the selected Vendor CSI Plugin Controller.

Vendor PV Provisioning: The Vendor CSI Plugin Controller detects the new PVC in the DPU cluster, provisions the underlying storage, and creates the corresponding PersistentVolume (PV) object.

Volume Availability Update: The SNAP Controller detects the new PV and updates the status of the Volume object in the DPU cluster to Available.

DPUVolume Availability Update: The SNAP Host Controller detects the status change of the Volume CR in the DPU cluster and updates the status of the DPUVolume object in the host cluster.

PV Object Creation: The SNAP CSI Plugin Controller in the host cluster detects the status change of the DPUVolume object and creates the PV object in the host cluster.

Volume Attachment Initiation: The Kubernetes Controller Manager in the host cluster detects the PV object and creates a native Kubernetes VolumeAttachment [storage.k8s.io/v1] object to attach the volume to the user's pod.

DPUVolumeAttachment Object Creation: The SNAP CSI Plugin Controller detects the new native Kubernetes VolumeAttachment [storage.k8s.io/v1] object and creates a corresponding DPUVolumeAttachment CR in the host cluster.

VolumeAttachment Object Creation: The SNAP Host Controller detects the new DPUVolumeAttachment CR in the host cluster and creates a corresponding VolumeAttachment CR in the DPU cluster.

SVVolumeAttachment Creation: The SNAP Controller detects the new VolumeAttachment object in the DPU cluster. If the Vendor CSI Plugin Controller requires attachment, it creates an SVVolumeAttachment object for the Vendor CSI Plugin Controller.

Vendor Volume Attachment: The Vendor CSI Plugin Controller detects the new SVVolumeAttachment object and exposes the volume on the underlying storage. Once complete, it updates the status to Attached.

VolumeAttachment Status Update: The SNAP Controller sets the storageAttached status of the VolumeAttachment to True.

Storage Device Connection: The SNAP Node Driver on the DPU detects the pending VolumeAttachment object and calls the Vendor Plugin via the StoragePlugin gRPC API to connect the volume to the underlying storage.

Vendor Plugin Device Setup: The Vendor Plugin connects the volume to the underlying storage (if required) and sets up the vendor-specific device (xDev) inside the DOCA SNAP service.

SNAP Process Volume Exposure: The SNAP Node Driver calls the DOCA SNAP service to expose the volume to the host. Upon completion, the SNAP Node Driver updates the DPU parameters in the status of the VolumeAttachment and sets the dpu.Attached status to True.

DPUVolumeAttachment Availability Update: The SNAP Host Controller detects the status change of the VolumeAttachment CR in the DPU cluster and updates the status of the DPUVolumeAttachment object in the host cluster.

Host VolumeAttachment Update: The SNAP CSI Plugin Controller detects that the DPUVolumeAttachment Ready condition is True and updates the status of the native Kubernetes VolumeAttachment [storage.k8s.io/v1] object on the host cluster to Attached.

Pod Volume Mounting: The kubelet on the host node detects the native Kubernetes VolumeAttachment [storage.k8s.io/v1] object with status Attached. It calls the SNAP CSI Plugin Node to attach the volume to the host and mounts the volume into the pod's namespace.

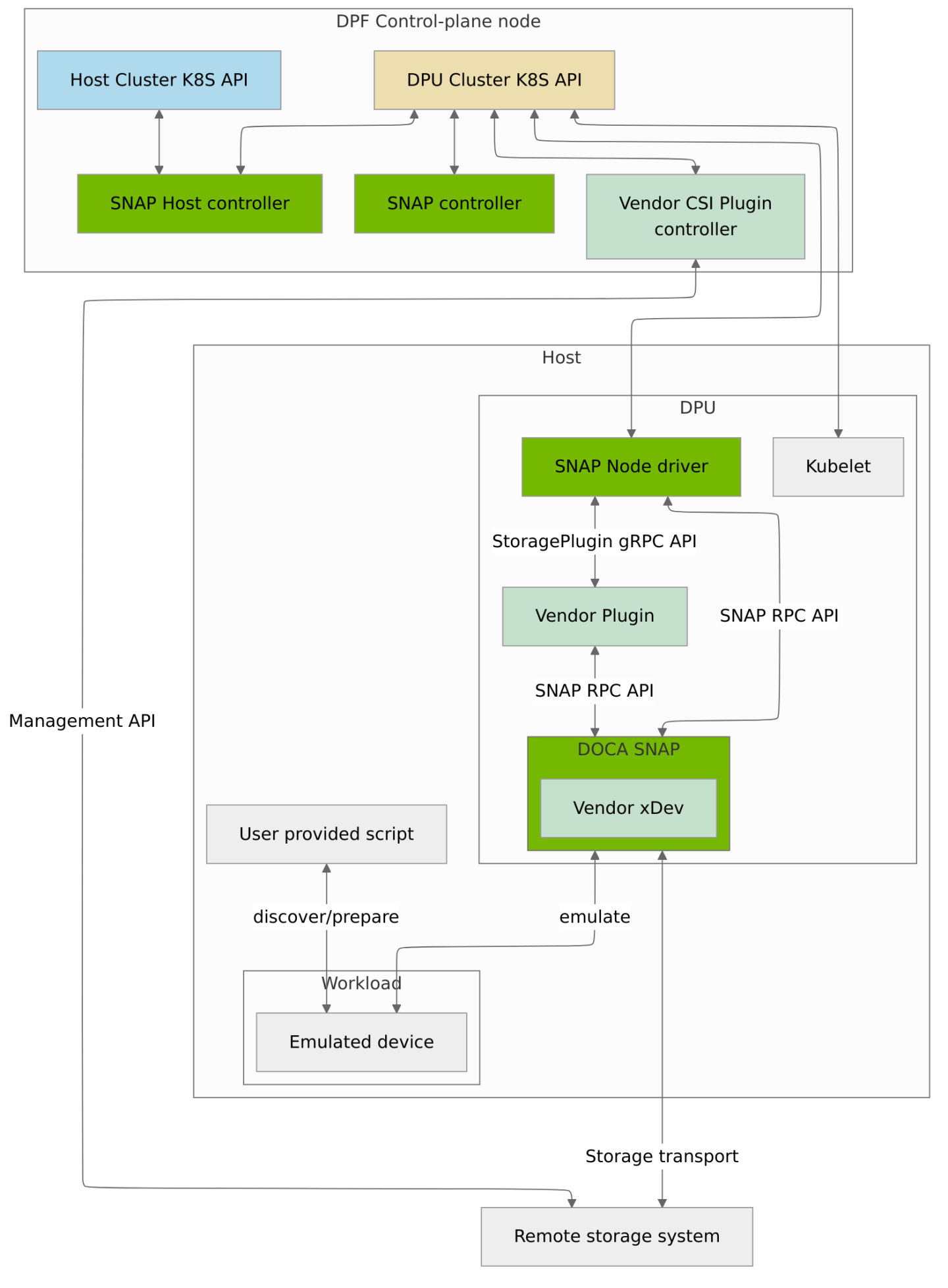

Zero Trust mode

In this scenario, hosts are not trusted and the host OS configuration is not managed by DPF components. Standard Kubernetes Storage APIs (StorageClass, PVC, PV, VolumeAttachment) cannot be used in this scenario. Instead, DPF-specific storage APIs must be used to manage storage operations.

There are no DPF storage components deployed on the host. User-provided applications or scripts are responsible for performing the required actions on the host OS to make storage available to the host.

Refer to the DOCA SNAP documentation for detailed information about required host OS configuration.

In this scenario, the following emulation methods are supported:

* NVMe over VF on top of a static PF
* NVMe over hot-plugged PF
* Virtio-FS over hot-plugged PF

The list below contains the components that are deployed in this scenario.

The core components are:

Vendor-specific components are:

Vendor Plugin (optional)

Vendor xDev (optional)

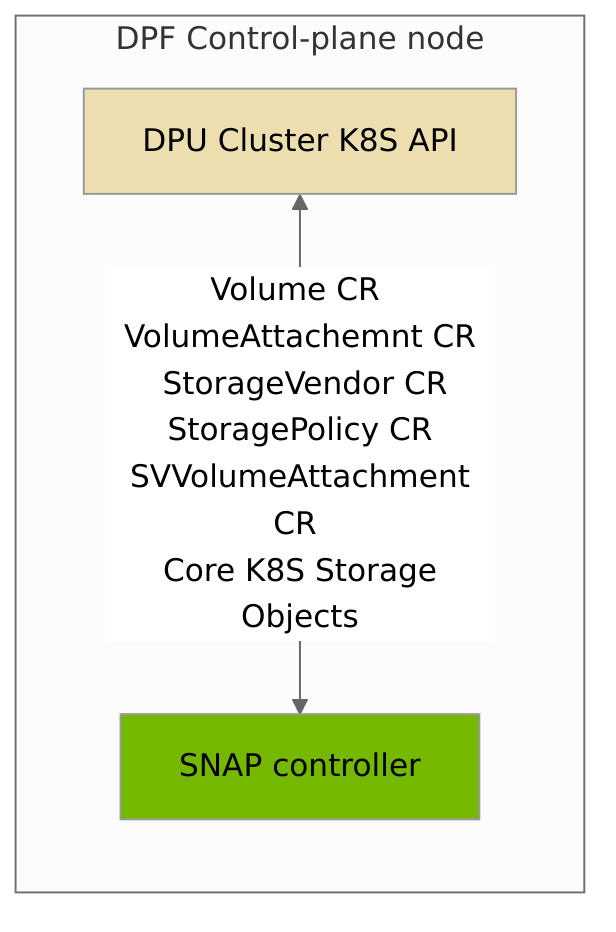

The high-level architecture of the storage subsystem is presented in the following diagram.

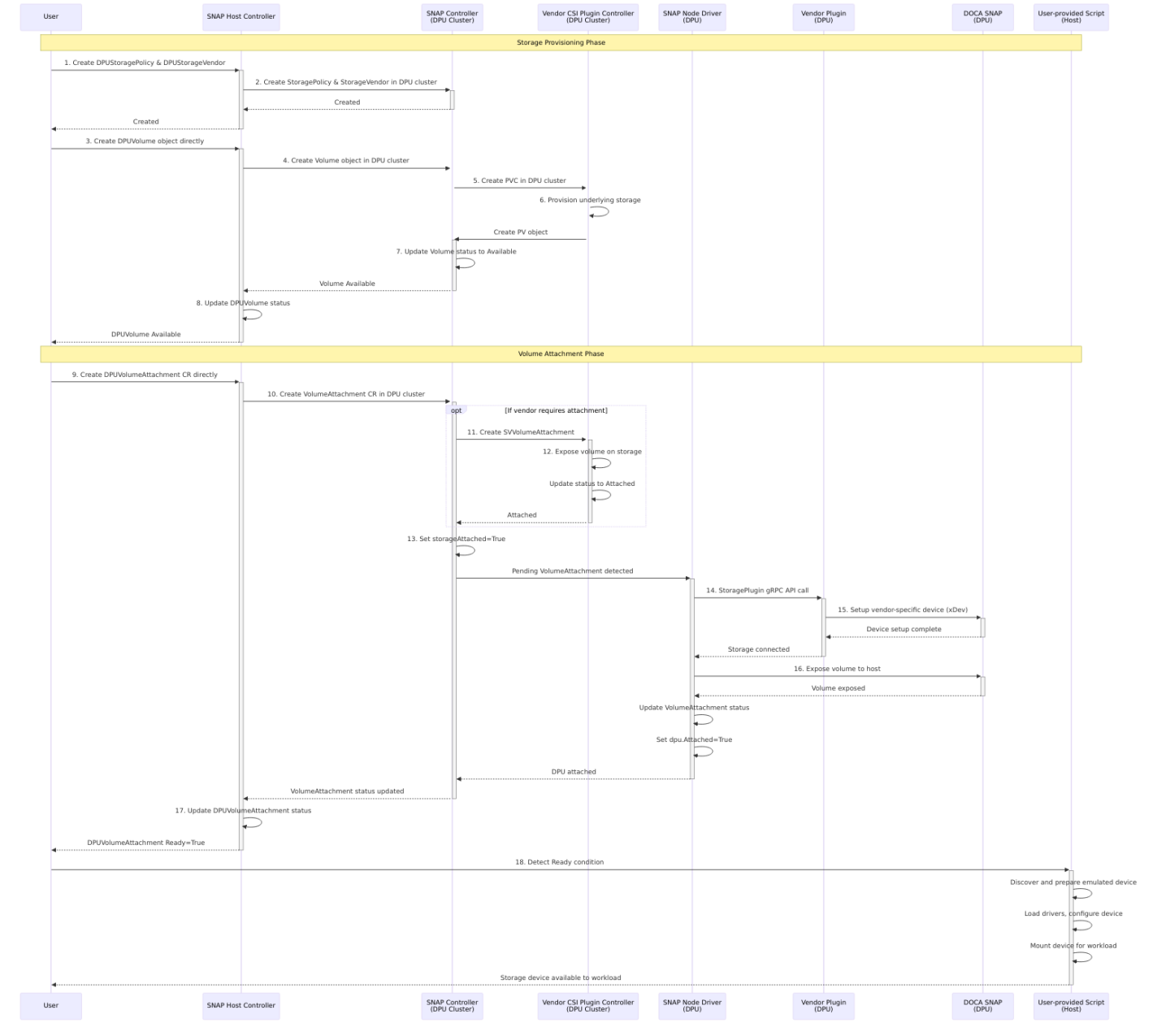

End-to-End Flow Description for Zero Trust scenario

The following steps outline the end-to-end process for provisioning and attaching storage using the DPF storage subsystem in the Zero Trust scenario:

DPUStoragePolicy and DPUStorageVendor Creation: The user creates a DPUStoragePolicy and DPUStorageVendor object in the host cluster.

StorageVendor and StoragePolicy Creation: The SNAP Host Controller detects the new DPUStoragePolicy and DPUStorageVendor objects in the host cluster and creates the corresponding StoragePolicy and StorageVendor objects in the DPU cluster.

DPUVolume Object Creation: The user directly creates a DPUVolume object in the host cluster. This object includes references to the DPUStoragePolicy and the requested volume parameters.

Volume Object Creation in DPU Cluster: The SNAP Host Controller reconciles the DPUVolume object in the host cluster and creates a Volume object in the DPU cluster. The Volume object includes references to the StoragePolicy and the requested volume parameters that are copied from the DPUVolume object.

Storage Vendor Selection: The SNAP Controller detects the new Volume object in the DPU cluster. It selects a StorageVendor that matches the policy specified in the StoragePolicy resource. The controller creates a PVC in the DPU cluster that references the storage class of the selected Vendor CSI Plugin Controller.

Vendor PV Provisioning: The Vendor CSI Plugin Controller detects the new PVC in the DPU cluster, provisions the underlying storage, and creates the corresponding PersistentVolume (PV) object.

Volume Availability Update: The SNAP Controller detects the new PV and updates the status of the Volume object in the DPU cluster to Available.

DPUVolume Availability Update: The SNAP Host Controller detects the status change of the Volume CR in the DPU cluster and updates the status of the DPUVolume object in the host cluster.

DPUVolumeAttachment Object Creation: The user directly creates a DPUVolumeAttachment CR in the host cluster to attach the volume to a specific host node.

VolumeAttachment Object Creation: The SNAP Host Controller detects the new DPUVolumeAttachment CR in the host cluster and creates a corresponding VolumeAttachment CR in the DPU cluster.

SVVolumeAttachment Creation: The SNAP Controller detects the new VolumeAttachment object in the DPU cluster. If the Vendor CSI Plugin Controller requires attachment, it creates an SVVolumeAttachment object for the Vendor CSI Plugin Controller.

Vendor Volume Attachment: The Vendor CSI Plugin Controller detects the new SVVolumeAttachment object and exposes the volume on the underlying storage. Once complete, it updates the status to Attached.

VolumeAttachment Status Update: The SNAP Controller sets the storageAttached status of the VolumeAttachment to True.

Storage Device Connection: The SNAP Node Driver on the DPU detects the pending VolumeAttachment object and calls the Vendor Plugin via the StoragePlugin gRPC API to connect the volume to the underlying storage.

Vendor Plugin Device Setup: The Vendor Plugin connects the volume to the underlying storage (if required) and sets up the vendor-specific device (xDev) inside the DOCA SNAP service.

SNAP Process Volume Exposure: The SNAP Node Driver calls the DOCA SNAP service to expose the volume to the host. Upon completion, the SNAP Node Driver updates the DPU parameters in the status of the VolumeAttachment and sets the dpu.Attached status to True.

DPUVolumeAttachment Availability Update: The SNAP Host Controller detects the status change of the VolumeAttachment CR in the DPU cluster and updates the status of the DPUVolumeAttachment object in the host cluster to indicate the volume is ready.

Host Volume Preparation: The user-provided script on the host detects that the DPUVolumeAttachment Ready condition is True and performs the necessary operations to discover, prepare, and make the emulated storage device available to the host workload. This includes any required driver loading, device configuration, and mounting operations.
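The final step depends on a user-provided script noticing that the attachment is ready. The following is a minimal sketch of that check, not part of DPF. It assumes the DPUVolumeAttachment object has already been fetched as a dictionary, and that its Ready condition follows the common Kubernetes layout of `status.conditions` entries with `type` and `status` fields (an assumption, since the status schema is not shown in this guide). The `fetch` callable is hypothetical and stands in for whatever client the script uses.

```python
import time
from typing import Callable


def is_ready(attachment: dict) -> bool:
    """Return True if the object carries a Ready condition with status "True".

    Assumes the conventional Kubernetes layout: status.conditions[].type/status.
    """
    conditions = (attachment.get("status") or {}).get("conditions") or []
    return any(
        c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
    )


def wait_until_ready(
    fetch: Callable[[], dict],
    timeout_s: float = 60.0,
    interval_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll fetch() until the attachment is ready or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        if is_ready(fetch()):
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval_s)
```

Once `wait_until_ready` returns True, the script would continue with the host-side device discovery and mounting described in the DOCA SNAP documentation.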

SNAP CSI Plugin

Note: This component is deployed only for the trusted Kubernetes cluster on host scenario.

The SNAP CSI Plugin is a Kubernetes CSI plugin responsible for managing the lifecycle of storage resources within the host cluster. It enables the nodes within the host cluster to consume storage resources provisioned by the DPF storage subsystem.

The SNAP CSI plugin handles the creation and management of Kubernetes storage resources such as Storage Classes, Persistent Volumes (PV), and other Kubernetes Storage objects.

The plugin uses DPF storage APIs by creating DPUVolume and DPUVolumeAttachment custom resources in the host cluster to trigger the creation and attachment of storage resources in the DPU cluster.

The SNAP CSI Plugin consists of a controller and a node component. The controller component is deployed on control-plane nodes, while the node component is deployed on worker nodes. The node component is responsible for discovering and preparing emulated storage devices on the host, and mounting them into Pod namespaces when requested by the kubelet.

The SNAP CSI Plugin currently supports only emulated NVMe block devices with functionType set to vf. Virtio-FS devices are not supported yet.

Example of the StorageClass object:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: snap-nvme
    provisioner: csi.snap.nvidia.com
    parameters:
      policy: "example-policy"
      functionType: "vf"
      hotplugFunction: "false"

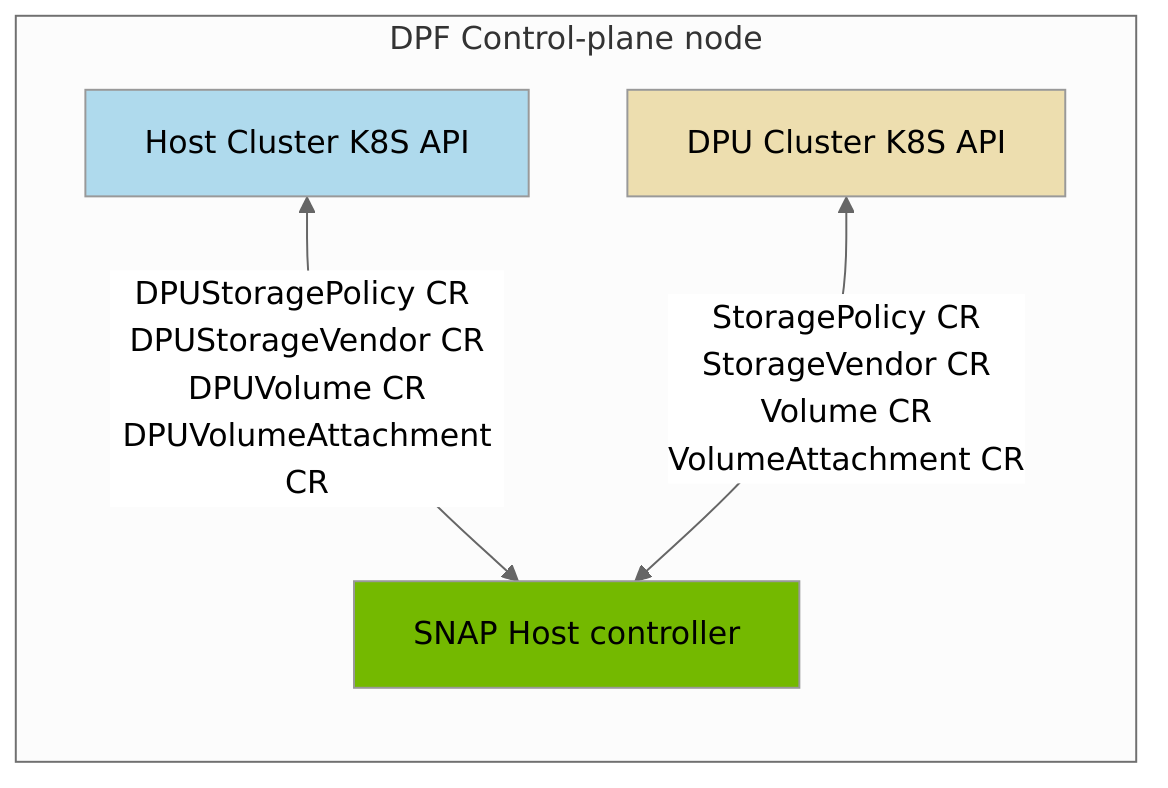

SNAP Host Controller

The SNAP Host Controller implements the user-facing DPF storage APIs and manages the synchronization of storage resources between the host cluster and the DPU cluster across both deployment scenarios. Operating within the DPF control-plane, it:

Reconciles DPUStoragePolicy and DPUStorageVendor objects in the host cluster and creates corresponding StoragePolicy and StorageVendor objects in the DPU cluster.

Reconciles DPUVolume objects in the host cluster and creates corresponding Volume objects in the DPU cluster with the appropriate parameters and references.

Reconciles DPUVolumeAttachment objects in the host cluster and creates corresponding VolumeAttachment objects in the DPU cluster to trigger volume attachment operations.

Monitors the status of storage resources in the DPU cluster and propagates status updates back to the corresponding resources in the host cluster.

SNAP Controller

The SNAP Controller implements the business logic of the DPF storage subsystem. Operating within the DPU cluster, it:

Reconciles Volume and VolumeAttachment resources created in the DPU cluster by the SNAP Host Controller.

Creates the necessary Kubernetes resources to trigger the Vendor CSI Plugin Controller (that watches resources in the DPU cluster).

Uses StorageVendor and StoragePolicy custom resources to select the appropriate storage vendor and pass required parameters.

If the Vendor CSI Plugin Controller needs ControllerPublishVolume/ControllerUnpublishVolume operations, the SNAP Controller creates an SVVolumeAttachment custom resource. This resource is similar to the native Kubernetes VolumeAttachment but is not handled by the Kubernetes controllers. Instead, it is managed by the NVIDIA External Attacher sidecar, which is a drop-in replacement for the upstream external-attacher sidecar.

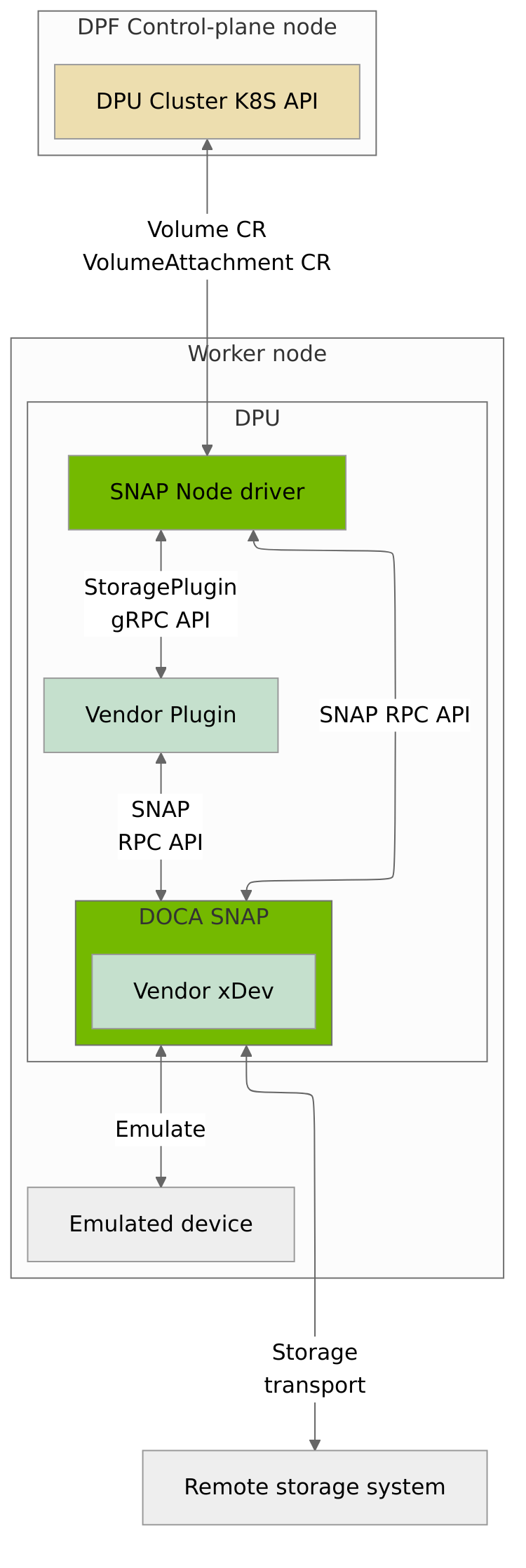

SNAP Node Driver

The SNAP Node Driver runs on each DPU that is used to attach storage. Responsibilities include:

Invoking the Vendor Plugin via the StoragePlugin gRPC API to trigger the attachment of remote storage resources to the DOCA SNAP service.

Interacting directly with the DOCA SNAP service via the SNAP RPC API to make storage resources available to the host.

DOCA SNAP

NVIDIA DOCA SNAP technology encompasses a family of services that enable hardware-accelerated virtualization of local storage running on NVIDIA BlueField products. The SNAP services present networked storage as local block or file system devices to the host, emulating local drives on the PCIe bus. Additional details about the SNAP services can be found in the DOCA SNAP documentation.

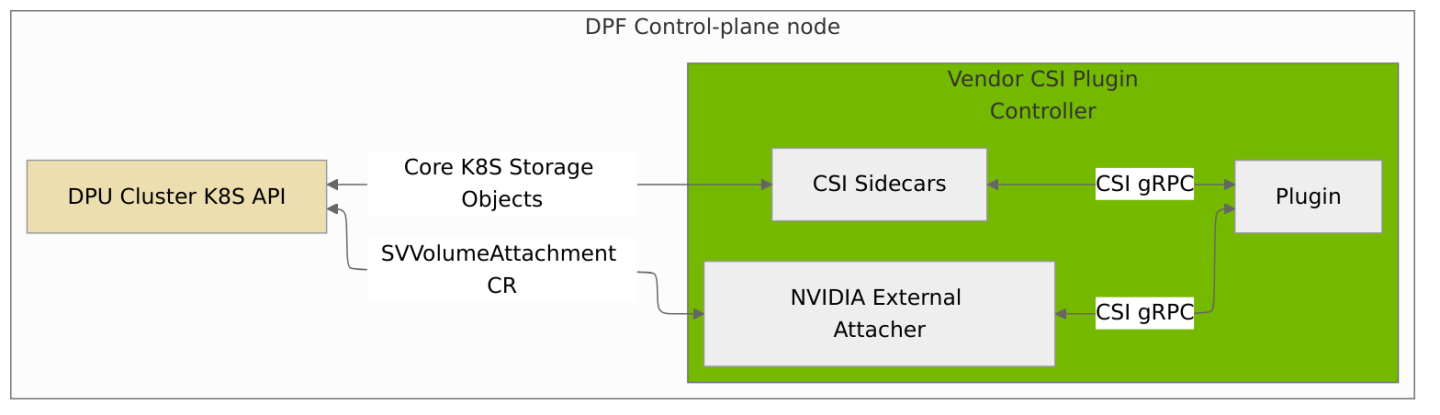

Vendor CSI Plugin Controller

This is the controller part of the standard Kubernetes CSI driver of the storage vendor which is used for any Kubernetes deployment. It should be used to create/delete/manage the volumes on the underlying storage system.

If the storage vendor needs to support the ControllerPublishVolume/ControllerUnpublishVolume operations, a small adjustment to the controller deployment is required, because the Kubernetes native VolumeAttachment [storage.k8s.io/v1] object is replaced by the SVVolumeAttachment CRD object.

The original Kubernetes external-attacher sidecar should be replaced by a new external-attacher sidecar, provided by NVIDIA, which monitors the SVVolumeAttachment objects instead of the Kubernetes native VolumeAttachment [storage.k8s.io/v1] objects (see diagram below). The new external-attacher sidecar calls the ControllerPublish and ControllerUnpublish functions of the CSI controller.

An appropriate storage class that represents the CSI controller should be created on the DPU Kubernetes cluster (see example below). The reclaimPolicy field in the storage class MUST be set to Delete. The reason is that the reclaim policy is actually managed by the NVIDIA storage class.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: example-vendor.example
      annotations:
        storageclass.kubernetes.io/is-default-class: "false"
    provisioner: csi-driver.example-vendor.example
    reclaimPolicy: Delete

In addition, a DPUStorageVendor CR object should be created in the host cluster, and the name of the StorageClass of the storage vendor (that exists in the DPU cluster) should be set in the storageClassName parameter (see example below).

    apiVersion: storage.nvidia.com/v1alpha1
    kind: DPUStorageVendor
    metadata:
      name: example-vendor
      namespace: nvidia-storage
    spec:
      storageClassName: "example-vendor.example"
      pluginName: "example-vendor-plugin"

If the storage vendor needs to support the controller attach/detach API, an appropriate CSIDriver object that represents the storage vendor CSI driver should be created on the DPU Kubernetes cluster (see example below), with the attachRequired property set to true.

This object is used by the SNAP Controller to determine if ControllerPublishVolume/ ControllerUnpublishVolume operations are supported by the storage vendor.

    apiVersion: storage.k8s.io/v1
    kind: CSIDriver
    metadata:
      name: csi-driver.example-vendor.example
    spec:
      attachRequired: true # Indicates this CSI volume driver requires an attach operation

NVIDIA provides the following sample Vendor CSI plugin controllers that can be used as references:

SPDK-CSI plugin can be used as an example for block storage

NFS-CSI plugin can be used as an example for file storage

Note: These plugins are not intended to be used in production environments.

Vendor Plugin

Note: Vendors can use the plugins provided by NVIDIA or implement their own if needed. NVIDIA provides the following plugins:

nvidia-block (uses NVMe-oF, compatible with SPDK-CSI plugin) and nvidia-fs (uses NFS-kernel client, compatible with NFS-CSI plugin).

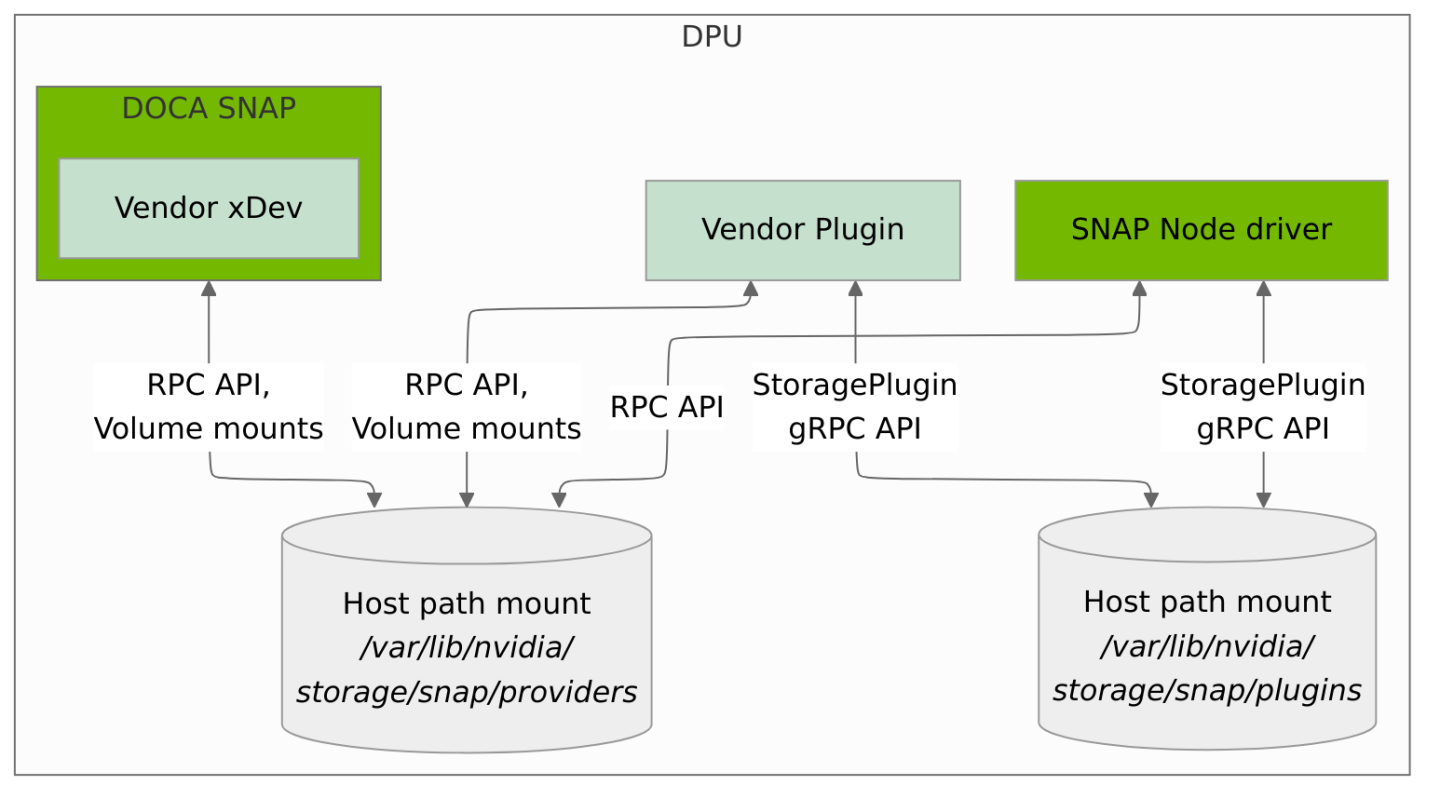

The concept of this component is very similar to the Kubernetes CSI node-driver. It is responsible for translating the StoragePlugin API into storage vendor specific RPC calls.

The plugin requires direct access to the DPU. It should expose a gRPC interface through a UNIX domain socket, which should be shared through a HostPath volume on the DPU worker node. The SNAP Node Driver uses this socket to make calls to the plugin. The plugin is responsible for creating the UNIX domain socket at the following shared path: /var/lib/nvidia/storage/snap/plugins/{plugin-name}/dpu.sock, where plugin-name is the name of the Vendor plugin.

If a plugin needs to mount filesystems or block devices to the DOCA SNAP Pod before creating a bdev or fsdev, it should use the following path: /var/lib/nvidia/storage/snap/providers/{provider-name}/volumes/{plugin-name}/{volume-id}, where provider-name is the name of the DOCA SNAP provider that the plugin uses, plugin-name is the name of the Vendor plugin, and volume-id is the ID of the volume.
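The two path conventions above can be captured in small helpers. This is an illustrative sketch only: the function names are hypothetical, and the validation rule (non-empty components without a slash) is an assumption added to keep the example safe, not a documented requirement. How the default SNAP provider maps to a directory name is not specified here, so the sketch requires an explicit provider name.

```python
from pathlib import PurePosixPath

# Root folder taken from the paths documented above.
SNAP_ROOT = PurePosixPath("/var/lib/nvidia/storage/snap")


def _checked(component: str, label: str) -> str:
    """Reject empty components or ones that would escape the directory (assumed rule)."""
    if not component or "/" in component or component in (".", ".."):
        raise ValueError(f"invalid {label}: {component!r}")
    return component


def plugin_socket_path(plugin_name: str) -> str:
    """UNIX domain socket that the Vendor Plugin creates for the SNAP Node Driver."""
    return str(SNAP_ROOT / "plugins" / _checked(plugin_name, "plugin name") / "dpu.sock")


def volume_mount_path(provider_name: str, plugin_name: str, volume_id: str) -> str:
    """Folder for filesystems or block devices mounted into the DOCA SNAP Pod."""
    return str(
        SNAP_ROOT
        / "providers" / _checked(provider_name, "provider name")
        / "volumes" / _checked(plugin_name, "plugin name")
        / _checked(volume_id, "volume id")
    )
```

For example, `plugin_socket_path("example-vendor-plugin")` yields `/var/lib/nvidia/storage/snap/plugins/example-vendor-plugin/dpu.sock`.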

It interacts with the storage vendor xDev module, which is linked with the DOCA SNAP service, through the SNAP UNIX domain socket. This socket should also be shared through a HostPath volume on the DPU worker node. The connections between the node components are described in the diagram below.

Storage vendor xDev module

This is an optional SPDK device module that provides storage-specific logic inside the SNAP service. If it exists, it should be managed by the storage vendor DPU plugin component. The module is deployed as a static or shared library along with the SNAP service.

Public API

These are the APIs that users directly interact with to manage storage resources.

DPUStoragePolicy CRD

Defines a storage policy that maps between a policy and a list of DPU storage vendors.

    apiVersion: storage.dpu.nvidia.com/v1alpha1
    kind: DPUStoragePolicy
    metadata:
      name: example-dpu-storage-policy
    spec:
      dpuStorageVendors:
        - example-vendor-1
        - example-vendor-2
      selectionAlgorithm: NumberVolumes # Random or NumberVolumes
      parameters:
        parameter1: value1
        parameter2: value2

DPUStorageVendor CRD

Represents a DPU storage vendor. Each storage vendor must have exactly one DPUStorageVendor custom resource.

    apiVersion: storage.dpu.nvidia.com/v1alpha1
    kind: DPUStorageVendor
    metadata:
      name: example-vendor
    spec:
      storageClassName: example-vendor-storage-class
      pluginName: example-vendor-plugin

DPUVolume CRD

Represents a persistent volume that will be provisioned by the DPF storage subsystem.

    apiVersion: storage.dpu.nvidia.com/v1alpha1
    kind: DPUVolume
    metadata:
      name: example-volume
    spec:
      dpuStoragePolicyName: example-dpu-storage-policy
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      volumeMode: Filesystem # Filesystem or Block
      parameters:
        parameter1: value1

Note: The volumeMode field controls which emulation method will be used for the volume. For Filesystem mode, the volume will be exposed to the host as a Virtio-FS device. For Block mode, the volume will be exposed as an emulated NVMe device.

DPUVolumeAttachment CRD

Captures the intent to attach/detach a DPU volume to/from a specific DPU node.

    apiVersion: storage.dpu.nvidia.com/v1alpha1
    kind: DPUVolumeAttachment
    metadata:
      name: example-volume-attachment
    spec:
      dpuVolumeName: example-volume
      dpuNodeName: dpu-node-01
      functionType: vf
      hotplugFunction: false

Note: The functionType and hotplugFunction fields control the function type used for volume attachment. Currently supported combinations are vf with false (VF on top of a static PF) and pf with true (hot-plugged PF).
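Because only two combinations of these fields are supported, a client that generates DPUVolumeAttachment objects may want to validate them before submitting. The sketch below shows one way to do that. The function name and the error message are hypothetical, and this is not how DPF itself performs validation.

```python
# Supported (functionType, hotplugFunction) pairs, as listed in the note above.
SUPPORTED_COMBINATIONS = {
    ("vf", False): "VF on top of a static PF",
    ("pf", True): "hot-plugged PF",
}


def describe_attachment(function_type: str, hotplug_function: bool) -> str:
    """Return a description of the combination, or raise ValueError if unsupported."""
    try:
        return SUPPORTED_COMBINATIONS[(function_type, hotplug_function)]
    except KeyError:
        raise ValueError(
            f"unsupported combination: functionType={function_type!r}, "
            f"hotplugFunction={hotplug_function!r}"
        ) from None
```

Calling `describe_attachment("pf", False)` raises `ValueError`, since a static PF without VFs is not among the listed combinations.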

Internal API

These APIs are not intended to be directly used by end users. They are included here for reference only.

SVVolumeAttachment CRD

Captures the intent to attach/detach the specified volume to/from the specified node for the storage vendor.

    apiVersion: storage.dpu.nvidia.com/v1alpha1
    kind: SVVolumeAttachment
    metadata:
      name: sv-volume-attachment-example
    spec:
      attacher: vendor-plugin
      nodeName: node01
      source:
        persistentVolumeName: pv-example

StoragePolicy CRD

Defines a storage policy that maps between a policy and a list of storage vendors.

    apiVersion: storage.dpu.nvidia.com/v1alpha1
    kind: StoragePolicy
    metadata:
      name: example-storage-policy
    spec:
      storageVendors:
        - vendor1
        - vendor2
      # supported modes are Random and LocalNVolumes
      storageSelectionAlg: LocalNVolumes
      storageParameters:
        parameter1: value1
        parameter2: value2
    status:
      state: Valid
      message: "Storage policy is valid."

StorageVendor CRD

Represents a storage vendor. Each storage vendor must have exactly one StorageVendor custom resource.

    apiVersion: storage.dpu.nvidia.com/v1alpha1
    kind: StorageVendor
    metadata:
      name: vendor1
    spec:
      pluginName: vendor-plugin
      storageClassName: vendor-storage-class

VolumeAttachment CRD

Captures the intent to attach/detach the specified NVIDIA volume to/from the specified node.

apiVersion: storage.dpu.nvidia.com/v1alpha1

kind: VolumeAttachment

metadata:

name: nv-volume-attachment-example

spec:

nodeName: node01

source:

volumeRef:

name: volume-example

namespace: default

parameters:

parameter1: value1

functionType: vf # pf or vf

hotplugFunction: false

status:

storageAttached: true

dpu:

attached: true

pciDeviceAddress: "0000:03:00.0"

deviceName: nvme0n1

bdevAttrs:

nvmeNsID: 1

nvmeUUID: "12345678-1234-1234-1234-123456789012"

Volume CRD

Represents a persistent volume on the DPU cluster, mapping between the tenant K8s PV object and the actual volume on the DPU cluster.

apiVersion: storage.dpu.nvidia.com/v1alpha1

kind: Volume

metadata:

name: volume-example

spec:

request:

capacityRange:

request: "10Gi"

accessModes:

- ReadWriteOnce

volumeMode: Filesystem

storagePolicyRef:

name: example-storage-policy

namespace: default

storageParameters:

policy: example-storage-policy

parameter1: value1

volume:

id: volume-12345

capacity: "10Gi"

accessModes:

- ReadWriteOnce

reclaimPolicy: Delete

storageVendorName: example-vendor

storageVendorPluginName: example-vendor-plugin

status:

state: Available

Each vendor plugin must implement the following gRPC API to interact with the DPF storage subsystem:

Services

    syntax = "proto3";

    package nvidia.storage.plugins.v1;

    import "google/protobuf/wrappers.proto";

    // The Identity service provides APIs to identify the plugin and verify its health.
    service IdentityService {
      // GetPluginInfo returns the name and version of the plugin.
      rpc GetPluginInfo(GetPluginInfoRequest) returns (GetPluginInfoResponse);

      // Probe checks the health and readiness of the plugin.
      rpc Probe(ProbeRequest) returns (ProbeResponse);
    }

    // The StoragePlugin service provides APIs to manage storage devices.
    service StoragePluginService {
      // StoragePluginGetCapabilities returns the capabilities supported by the plugin.
      rpc StoragePluginGetCapabilities(StoragePluginGetCapabilitiesRequest)
          returns (StoragePluginGetCapabilitiesResponse);

      // GetSNAPProvider retrieves the name of the SNAP provider used by the plugin.
      rpc GetSNAPProvider(GetSNAPProviderRequest) returns (GetSNAPProviderResponse);

      // CreateDevice creates a new storage device and exposes it.
      rpc CreateDevice(CreateDeviceRequest) returns (CreateDeviceResponse);

      // DeleteDevice removes a storage device.
      rpc DeleteDevice(DeleteDeviceRequest) returns (DeleteDeviceResponse);

      // GetDevice gets a storage device.
      rpc GetDevice(GetDeviceRequest) returns (GetDeviceResponse);

      // ListDevices list all devices information
      rpc ListDevices(ListDevicesRequest) returns (ListDevicesResponse);
    }

Any RPC method that the plugin does not implement MUST reply with the non-OK gRPC status code 12 UNIMPLEMENTED.

Any of the RPCs defined above may time out and may be retried. The SNAP Node Driver may choose the maximum time it is willing to wait for a call, how long it waits between retries, and how many times it retries (these values are not negotiated between the plugin and the SNAP Node Driver).

Idempotency requirements ensure that a retried call with the same fields continues where the previous attempt left off. The only way to cancel a call is to issue a "negation" call if one exists. For example, issue a DeleteDevice call to cancel a pending CreateDevice operation.

GetPluginInfo

This method should return the name of the plugin (e.g., example-vendor-plugin.example) and the version of the plugin (e.g., 1.0). On success, the method should reply 0 OK in the gRPC status code.

If the plugin is unable to complete the GetPluginInfo call successfully, it MUST reply a non-ok gRPC code in the gRPC status.

Return codes:

| Number | Code | Description |
| --- | --- | --- |
| 0 | OK | |
| 9 | FAILED_PRECONDITION | Plugin is unable to complete the call successfully |

    // GetPluginInfoRequest is used to request the plugin's information.
    message GetPluginInfoRequest {}

    // GetPluginInfoResponse provides the plugin's name, version, and manifest details.
    message GetPluginInfoResponse {
      // The name of the plugin. It must follow domain name notation format
      // (https://tools.ietf.org/html/rfc1035#section-2.3.1) and must be 63 characters
      // or less. This field is required.
      string name = 1;

      // The version of the plugin. This field is required.
      string vendor_version = 2;

      // The manifest provides additional opaque information about the plugin.
      // This field is required.
      map<string, string> manifest = 3;
    }

Probe

This API is called to verify that the plugin is in a healthy and ready state. If an unhealthy state is reported, via a non-success response, the CO (in our case Kubernetes) may take action with the intent to bring the plugin to a healthy state (e.g., restarting the plugin container).

The plugin should verify its internal state and return 0 OK if the validation succeeds. If the plugin is still initializing but is otherwise healthy, it shall return 0 OK with the ready field set to False.
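The decision described above can be sketched as a small function. This is an illustration of the rule only, detached from any gRPC framework. The `healthy` and `initialized` inputs are hypothetical flags that a real plugin would derive from its own internal checks, and the use of FAILED_PRECONDITION for the unhealthy case follows the return-code table for this method.

```python
from typing import Optional, Tuple

# gRPC status code numbers used in this guide.
OK = 0
FAILED_PRECONDITION = 9


def probe(healthy: bool, initialized: bool) -> Tuple[int, Optional[bool]]:
    """Return (status_code, ready) for a Probe call.

    Unhealthy plugin: non-OK status, no ready value.
    Healthy but still initializing: OK with ready=False.
    Healthy and initialized: OK with ready=True.
    """
    if not healthy:
        return FAILED_PRECONDITION, None
    return OK, initialized
```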

Return codes:

| Number | Code | Description |
| --- | --- | --- |
| 0 | OK | |
| 9 | FAILED_PRECONDITION | Plugin is unable to complete the call successfully |

    // ProbeRequest is used to check the plugin's health and readiness.
    message ProbeRequest {}

    // ProbeResponse indicates the plugin's health and readiness status.
    message ProbeResponse {
      // Ready indicates whether the plugin is healthy and ready.
      google.protobuf.BoolValue ready = 1;
    }

StoragePluginGetCapabilities

This API allows the SNAP Node Driver to check the supported capabilities of the storage service provided by the plugin.

If the plugin is unable to complete the StoragePluginGetCapabilities call successfully, it MUST return a non-ok gRPC code in the gRPC status.

Return codes:

| Number | Code | Description |
| --- | --- | --- |
| 0 | OK | |
| 9 | FAILED_PRECONDITION | Plugin is unable to complete the call successfully |

    // StoragePluginGetCapabilitiesRequest is used to request the plugin's capabilities.
    message StoragePluginGetCapabilitiesRequest {}

    // StoragePluginGetCapabilitiesResponse provides the list of supported capabilities.
    message StoragePluginGetCapabilitiesResponse {
      // Capabilities supported by the plugin.
      repeated StoragePluginServiceCapability capabilities = 1;
    }

    // StoragePluginServiceCapability describes a capability of the storage plugin service.
    message StoragePluginServiceCapability {
      // RPC specifies an RPC capability type.
      message RPC {
        // Type defines the specific capability.
        enum Type {
          // UNSPECIFIED indicates an undefined capability.
          TYPE_UNSPECIFIED = 0;
          // CREATE_DELETE_BLOCK_DEVICE indicates support for block device creation and deletion.
          TYPE_CREATE_DELETE_BLOCK_DEVICE = 1;
          // CREATE_DELETE_FS_DEVICE indicates support for filesystem device creation and deletion.
          TYPE_CREATE_DELETE_FS_DEVICE = 2;
          // GET_DEVICE_STATS indicates support for retrieving device statistics.
          TYPE_GET_DEVICE_STATS = 3;
          // LIST_DEVICES indicates support for listing devices.
          TYPE_LIST_DEVICES = 4;
        }

        // The type of the capability.
        Type type = 1;
      }

      // The specific type of capability.
      oneof type {
        // Specifies the RPC capabilities of the service.
        RPC rpc = 1;
      }
    }

GetSNAPProvider

The API is called by the SNAP Node Driver to retrieve the name of the SNAP provider used by the plugin. If the plugin uses the default SNAP process (provided by NVIDIA), an empty string should be returned. Otherwise, a unique name that represents the SNAP process used by this plugin should be returned. The name is used to identify the UNIX domain socket used by both the plugin and the SNAP Node Driver to communicate with the SNAP process.

If the plugin is unable to complete the GetSNAPProvider call successfully, it MUST return a non-ok gRPC code in the gRPC status.

Return codes:

| Number | Code | Description |
| --- | --- | --- |
| 0 | OK | |
| 9 | FAILED_PRECONDITION | Plugin is unable to complete the call successfully |

// GetSNAPProviderRequest is used to retrieve the SNAP provider's name.

message GetSNAPProviderRequest {}

// GetSNAPProviderResponse provides the SNAP provider's name.

message GetSNAPProviderResponse {

// The name of the SNAP provider. If this field is empty, the default provider

// (e.g., NVIDIA) is used. This field is optional.

string provider_name = 1;

}
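The GetSNAPProvider behavior can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `PluginError`, `get_snap_provider`, and the `ready` flag are hypothetical names, and a real plugin would return a gRPC status rather than raise a local exception.

```python
FAILED_PRECONDITION = 9  # gRPC status code number listed for GetSNAPProvider


class PluginError(Exception):
    """Hypothetical stand-in for a gRPC error carrying a status code."""

    def __init__(self, code, message):
        super().__init__(message)
        self.code = code


def get_snap_provider(custom_provider=None, ready=True):
    """Return a dict shaped like GetSNAPProviderResponse.

    custom_provider is None or "" when the plugin uses the default
    NVIDIA-provided SNAP process; provider_name is then left empty.
    """
    if not ready:
        raise PluginError(FAILED_PRECONDITION, "plugin cannot determine its SNAP provider")
    return {"provider_name": custom_provider or ""}
```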

CreateDevice

The API is called by the SNAP Node Driver prior to the volume being exposed by SNAP.

The operation must be idempotent. If a device for the given volume_id already exists and matches the specified access_modes and volume_mode, the plugin must reply 0 OK with the corresponding CreateDeviceResponse message.

Business logic required by the plugin:

1. Allocate a unique device name to be used by SNAP.
2. Connect the device to the underlying storage system by using the storage vendor specific APIs (if needed).
3. Provide the device to the SNAP process by using the SNAP JSON-RPC APIs. For example: fsdev_aio_create aio0 /host-folder
4. Return the device name.

If the plugin is unable to complete the CreateDevice call successfully, it MUST return a non-ok gRPC code in the gRPC status.

Return codes:

| Number | Code | Description |
|--------|------|-------------|
| 0 | OK | |
| 9 | FAILED_PRECONDITION | Plugin is unable to complete the call successfully |

// CreateDeviceRequest is used to create a new storage device.

message CreateDeviceRequest {

// The unique identifier for the volume.

string volume_id = 1;

// The access modes for the volume.

repeated AccessMode access_modes = 2;

// The volume mode, either Filesystem or Block. Default is Filesystem.

string volume_mode = 3;

// Static publish properties of the volume. This field is optional.

map<string, string> publish_context = 4;

// Static properties of the volume. This field is optional.

map<string, string> volume_context = 5;

// Static properties of the storage class. This field is optional.

map<string, string> storage_parameters = 6;

}

// CreateDeviceResponse provides the details of the created device.

message CreateDeviceResponse {

// The name of the created device (e.g., SPDK FSdev/Bdev name).

string device_name = 1;

}

// AccessMode specifies how a volume can be accessed.

enum AccessMode {

// ACCESS_MODE_UNSPECIFIED indicates an unspecified access mode.

ACCESS_MODE_UNSPECIFIED = 0;

// ACCESS_MODE_RWO indicates read/write on a single node.

ACCESS_MODE_RWO = 1;

// ACCESS_MODE_ROX indicates read-only on multiple nodes.

ACCESS_MODE_ROX = 2;

// ACCESS_MODE_RWX indicates read/write on multiple nodes.

ACCESS_MODE_RWX = 3;

// ACCESS_MODE_RWOP indicates read/write on a single pod.

ACCESS_MODE_RWOP = 4;

}
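The idempotency rule and the business logic described for CreateDevice can be sketched with an in-memory plugin. Everything named here is an assumption made for illustration: `SketchPlugin`, the `provide_to_snap` callable (which would wrap the SNAP JSON-RPC call), the `dev-<volume_id>` naming scheme, and the choice to answer a conflicting repeat request with FAILED_PRECONDITION, which the text above does not specify.

```python
OK = 0
FAILED_PRECONDITION = 9  # the only error code listed for CreateDevice


class SketchPlugin:
    """In-memory stand-in for a vendor plugin; not the real plugin API."""

    def __init__(self, provide_to_snap):
        # provide_to_snap(device_name, volume_id) is a hypothetical callable that
        # would wrap the SNAP JSON-RPC call (for example fsdev_aio_create).
        self._provide_to_snap = provide_to_snap
        self._devices = {}  # volume_id -> (device_name, access_modes, volume_mode)

    def create_device(self, volume_id, access_modes, volume_mode=""):
        modes = frozenset(access_modes)
        mode = volume_mode or "Filesystem"  # documented default volume mode
        existing = self._devices.get(volume_id)
        if existing is not None:
            name, known_modes, known_mode = existing
            if (known_modes, known_mode) == (modes, mode):
                return OK, name  # idempotent repeat of an identical request
            # Assumption: a conflicting repeat is reported as FAILED_PRECONDITION.
            return FAILED_PRECONDITION, None
        device_name = f"dev-{volume_id}"  # step 1: hypothetical naming scheme
        try:
            # Step 2 (vendor-specific connect) is omitted; this is step 3.
            self._provide_to_snap(device_name, volume_id)
        except Exception:
            return FAILED_PRECONDITION, None
        self._devices[volume_id] = (device_name, modes, mode)
        return OK, device_name  # step 4: return the device name
```

Calling `create_device` twice with the same arguments returns the same device name and invokes `provide_to_snap` only once.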

DeleteDevice

The API is called by the SNAP Node Driver after the device has been deleted from SNAP.

The operation must be idempotent. If the device corresponding to the volume_id and the device_name does not exist, the plugin must reply 0 OK.

Business logic required by the plugin:

1. Remove the device from the SNAP process.
2. Disconnect the device from the underlying storage system by using the storage vendor specific APIs (if needed).

If the plugin is unable to complete the DeleteDevice call successfully, it MUST return a non-ok gRPC code in the gRPC status.

Return codes:

| Number | Code | Description |
|--------|------|-------------|
| 0 | OK | |
| 9 | FAILED_PRECONDITION | Plugin is unable to complete the call successfully |

// DeleteDeviceRequest is used to delete a storage device.

message DeleteDeviceRequest {

// The unique identifier for the volume.

string volume_id = 1;

// The name of the device to be deleted.

string device_name = 2;

}

// DeleteDeviceResponse is the response for deleting a device.

message DeleteDeviceResponse {}
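A minimal sketch of the idempotent delete path follows. The `devices` dictionary and the `remove_from_snap` callable are hypothetical stand-ins for the plugin's own bookkeeping and for the SNAP JSON-RPC call that removes the device; the vendor-specific disconnect step is omitted.

```python
OK = 0
FAILED_PRECONDITION = 9  # the only error code listed for DeleteDevice


def delete_device(devices, volume_id, device_name, remove_from_snap):
    """Delete a device tracked in `devices` (volume_id -> device_name).

    Returns OK when the device is removed or was already absent, and
    FAILED_PRECONDITION when removal from the SNAP process fails.
    """
    if devices.get(volume_id) != device_name:
        return OK  # nothing to do: the device does not exist
    try:
        remove_from_snap(device_name)  # step 1: remove from the SNAP process
    except Exception:
        return FAILED_PRECONDITION  # keep the record so the call can be retried
    del devices[volume_id]
    return OK
```

Keeping the record when removal fails lets the SNAP Node Driver retry the call safely.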

ListDevices

A storage vendor plugin must implement this API if it advertises the LIST_DEVICES capability (TYPE_LIST_DEVICES). The plugin shall return information about all the devices that it knows about. If devices are created or deleted while the SNAP Node Driver is paging through ListDevices results, the SNAP Node Driver may see duplicate devices in the list, miss existing devices, or both. The SNAP Node Driver shall not expect a consistent "view" of all devices when paging through the device list via multiple calls to ListDevices.

If the plugin is unable to complete the ListDevices call successfully, it MUST return a non-ok gRPC code in the gRPC status.

Return codes:

| Number | Code | Description |
|--------|------|-------------|
| 0 | OK | |
| 10 | ABORTED | Indicates that starting_token is not valid |

message ListDevicesRequest {

// number of entries that can be returned.

int32 max_entries = 1;

// A token to specify where to start paginating. Set this field to

// `next_token` returned by a previous `ListDevices` call to get the

// next page of entries.

string starting_token = 2;

}

message ListDevicesResponse {

message Entry {

string volume_id = 1;

string device_name = 2;

}

repeated Entry entries = 1;

// This token allows the caller to get the next page of entries for

// `ListDevices` request.

string next_token = 2;

}
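The pagination contract can be illustrated with a small sketch. Two assumptions are made here that the text above does not state: the token is encoded as a decimal offset into a sorted device list (a real plugin may use any opaque string), and a max_entries value of 0 is treated as "no limit". The function names are hypothetical.

```python
OK = 0
ABORTED = 10  # returned when starting_token is not valid


def list_devices(devices, max_entries=0, starting_token=""):
    """Return (code, entries, next_token) for `devices` (volume_id -> device_name)."""
    ordered = sorted(devices.items())
    start = 0
    if starting_token:
        try:
            start = int(starting_token)  # assumption: token is a decimal offset
        except ValueError:
            return ABORTED, [], ""
        if not 0 <= start <= len(ordered):
            return ABORTED, [], ""
    # Assumption: max_entries <= 0 means the caller set no limit.
    end = len(ordered) if max_entries <= 0 else min(start + max_entries, len(ordered))
    next_token = str(end) if end < len(ordered) else ""
    return OK, ordered[start:end], next_token


def list_all(devices, page_size):
    """Caller-side loop: follow next_token until the plugin returns an empty one."""
    entries, token = [], ""
    while True:
        code, page, token = list_devices(devices, page_size, token)
        if code != OK:
            raise RuntimeError(f"ListDevices failed with code {code}")
        entries.extend(page)
        if not token:
            return entries
```

Because the offset token carries no snapshot of the device set, this sketch exhibits exactly the inconsistency described above when devices change between pages.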

GetDevice

A storage vendor plugin must implement this API if it advertises the GET_DEVICE_STATS capability (TYPE_GET_DEVICE_STATS). The plugin shall return information about the device corresponding to the volume_id and the device_name.

If the device corresponding to the volume_id and the device_name does not exist, the plugin must reply 5 NOT_FOUND.

If the plugin is unable to complete the GetDevice call successfully, it MUST return a non-ok gRPC code in the gRPC status.

Return codes:

| Number | Code | Description |
|--------|------|-------------|
| 0 | OK | |
| 5 | NOT_FOUND | The device was not found |
| 9 | FAILED_PRECONDITION | Plugin is unable to complete the call successfully |

// GetDeviceRequest is used to get information about the device

message GetDeviceRequest {

// volume identifier

string volume_id = 1;

// The device name. For example, SPDK FSdev/Bdev name

string device_name = 2;

}

message GetDeviceResponse {

// list of access modes for the volume.

repeated AccessMode access_modes = 1;

// Indicates the volume mode. Either Filesystem or Block. Default value is Filesystem. This field is OPTIONAL

string volume_mode = 2;

// Opaque static publish properties of the volume. This field is OPTIONAL

map<string, string> publish_context = 3;

// Opaque static properties of the volume. This field is OPTIONAL

map<string, string> volume_context = 4;

// Opaque static properties of the storage class. This field is OPTIONAL

map<string, string> storage_parameters = 5;

}
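The GetDevice lookup, including the NOT_FOUND case, might look like the sketch below. The `devices` store keyed by `(volume_id, device_name)` and the dictionary shaped like GetDeviceResponse are assumptions made for illustration only.

```python
OK = 0
NOT_FOUND = 5  # the device for (volume_id, device_name) does not exist


def get_device(devices, volume_id, device_name):
    """Return (code, response) for a device stored under (volume_id, device_name)."""
    record = devices.get((volume_id, device_name))
    if record is None:
        return NOT_FOUND, None
    response = {
        "access_modes": list(record.get("access_modes", [])),
        # Filesystem is the documented default when no volume mode is set.
        "volume_mode": record.get("volume_mode") or "Filesystem",
        # The three maps are optional, so missing ones become empty maps.
        "publish_context": dict(record.get("publish_context", {})),
        "volume_context": dict(record.get("volume_context", {})),
        "storage_parameters": dict(record.get("storage_parameters", {})),
    }
    return OK, response
```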

Note: The SPDK-CSI plugin is provided only as an example Vendor CSI Plugin implementation for demonstration purposes. It is not intended or supported for production use cases.

The plugin is provided as an example of how to implement a Vendor CSI Plugin Controller for block storage.

The SPDK-CSI plugin is not shipped as part of the DPF release. It is expected that users will build the plugin from source code. The DPF repository contains a specific Helm chart for the SPDK-CSI.

The instructions for building the SPDK-CSI image and Helm chart can be found in the DPF repo under dpuservices/storage/examples/spdk-csi/README.md.

Note: The NFS-CSI plugin is provided only as an example Vendor CSI Plugin implementation for demonstration purposes. It is not intended or supported for production use cases.

The plugin is provided as an example of how to implement a Vendor CSI Plugin Controller for file storage.

The DPF repository contains a specific Helm chart for the NFS-CSI plugin. The chart is compatible with the upstream NFS-CSI plugin image.

The instructions for building the NFS-CSI Helm chart can be found in the DPF repo under dpuservices/storage/examples/nfs-csi/README.md.

Example manifests for DPF storage subsystem components can be found in the DPF repo under dpuservices/storage/examples.