RDG for DPF Zero Trust (DPF-ZT)

Created Sep 08, 2025

Scope

This Reference Deployment Guide (RDG) provides comprehensive instructions for deploying the NVIDIA DOCA Platform Framework (DPF) on high-performance, bare-metal infrastructure in Zero-Trust mode. It focuses on the setup and use of DPU-based services on NVIDIA® BlueField®-3 DPUs to deliver secure, isolated, and hardware-accelerated environments.

The guide is intended for experienced system administrators, systems engineers, and solution architects who build highly secure bare-metal environments using NVIDIA BlueField DPUs for acceleration, isolation, and infrastructure offload.

This reference implementation, as the name implies, is a specific, opinionated deployment example designed to address the use case described above.

Although other approaches may exist for implementing similar solutions, this document provides a detailed guide for this specific method.

Abbreviations and Acronyms

| Term | Definition | Term | Definition |
|------|------------|------|------------|
| BFB | BlueField Bootstream | NGC | NVIDIA GPU Cloud |
| DOCA | Data Center Infrastructure-on-a-Chip Architecture | NFS | Network File System |
| DPF | DOCA Platform Framework | OOB | Out-of-Band |
| DPU | Data Processing Unit | PF | Physical Function |
| K8s | Kubernetes | RDG | Reference Deployment Guide |
| KVM | Kernel-based Virtual Machine | RDMA | Remote Direct Memory Access |
| MAAS | Metal as a Service | RoCE | RDMA over Converged Ethernet |
| MTU | Maximum Transmission Unit | ZT | Zero Trust |

Introduction

The NVIDIA BlueField-3 Data Processing Unit (DPU) is a 400 Gb/s infrastructure compute platform designed for line-rate processing of software-defined networking, storage, and cybersecurity workloads. It combines powerful compute resources, high-speed networking, and advanced programmability to deliver hardware-accelerated, software-defined solutions for modern data centers.

NVIDIA DOCA unleashes the full potential of the BlueField platform by enabling rapid development of applications and services that offload, accelerate, and isolate data center workloads.

However, deploying and managing DPUs, especially at scale, presents operational challenges. Without a robust provisioning and orchestration system, tasks such as lifecycle management, service deployment, and network configuration for service function chaining (SFC) can quickly become complex and error-prone. This is where the DOCA Platform Framework (DPF) comes into play.

DPF automates the full DPU lifecycle and simplifies advanced network configurations. With DPF, services can be deployed seamlessly, allowing for efficient offloading and intelligent routing of traffic through the DPU data plane.

By leveraging DPF, users can scale and automate DPU management across bare-metal, virtualized, and Kubernetes customer environments, optimizing performance while simplifying operations.

DPF supports multiple deployment models. This guide focuses on the Zero Trust bare-metal deployment model. In this scenario:

- The DPU is managed through its Baseboard Management Controller (BMC)

- All management traffic occurs over the DPU's out-of-band (OOB) network

- The host is treated as an untrusted entity with respect to the data center network. The DPU acts as a barrier between the host and the network.

- The host sees the DPU as a standard NIC, with no access to the internal DPU management plane (Zero Trust Mode)

This Reference Deployment Guide (RDG) provides a step-by-step example for installing DPF in Zero-Trust mode. It also includes practical demonstrations of performance optimization, validated using standard RDMA and TCP workloads.

As part of the reference implementation, open-source components outside the scope of DPF (e.g., MAAS, pfSense, Kubespray) are used to simulate a realistic customer deployment environment. The guide includes the full end-to-end deployment process, including:

- Infrastructure provisioning

- DPF deployment

- DPU provisioning (Redfish)

- Service configuration and deployment

- Service chaining

References

- NVIDIA BlueField DPU

- NVIDIA DOCA

- NVIDIA DPF Release Notes

- NVIDIA DPF GitHub Repository

- NVIDIA DPF System Overview

- NVIDIA Ethernet Switching

- NVIDIA Cumulus Linux

- What is K8s?

- Kubespray

Solution Architecture

Key Components and Technologies

NVIDIA BlueField® Data Processing Unit (DPU)

The NVIDIA® BlueField® data processing unit (DPU) ignites unprecedented innovation for modern data centers and supercomputing clusters. With its robust compute power and integrated software-defined hardware accelerators for networking, storage, and security, BlueField creates a secure and accelerated infrastructure for any workload in any environment, ushering in a new era of accelerated computing and AI.

NVIDIA DOCA Software Framework

NVIDIA DOCA™ unlocks the potential of the NVIDIA® BlueField® networking platform. By harnessing the power of BlueField DPUs and SuperNICs, DOCA enables the rapid creation of applications and services that offload, accelerate, and isolate data center workloads. It lets developers create software-defined, cloud-native, DPU- and SuperNIC-accelerated services with zero-trust protection, addressing the performance and security demands of modern data centers.

10/25/40/50/100/200 and 400G Ethernet Network Adapters

The industry-leading NVIDIA® ConnectX® family of smart network interface cards (SmartNICs) offers advanced hardware offloads and accelerations.

NVIDIA Ethernet adapters enable the highest ROI and lowest Total Cost of Ownership for hyperscale, public and private clouds, storage, machine learning, AI, big data, and telco platforms.

The NVIDIA® LinkX® product family of cables and transceivers provides the industry’s most complete line of 10, 25, 40, 50, 100, 200, and 400GbE Ethernet and 100, 200, and 400Gb/s InfiniBand products for cloud, HPC, hyperscale, enterprise, telco, storage, and AI data center applications.

NVIDIA Spectrum Ethernet Switches

Flexible form-factors with 16 to 128 physical ports, supporting 1GbE through 400GbE speeds.

Based on a ground-breaking silicon technology optimized for performance and scalability, NVIDIA Spectrum switches are ideal for building high-performance, cost-effective, and efficient Cloud Data Center Networks, Ethernet Storage Fabric, and Deep Learning Interconnects.

NVIDIA combines the benefits of NVIDIA Spectrum™ switches, based on an industry-leading application-specific integrated circuit (ASIC) technology, with a wide variety of modern network operating system choices, including NVIDIA Cumulus® Linux, SONiC, and NVIDIA Onyx®.

NVIDIA® Cumulus® Linux is the industry's most innovative open network operating system that allows you to automate, customize, and scale your data center network like no other.

Kubernetes is an open-source container orchestration platform for deployment automation, scaling, and management of containerized applications.

Kubespray is a composition of Ansible playbooks, inventory, provisioning tools, and domain knowledge for generic OS/Kubernetes clusters configuration management tasks and provides:

- A highly available cluster

- Composable attributes

- Support for most popular Linux distributions

Solution Design

Solution Logical Design

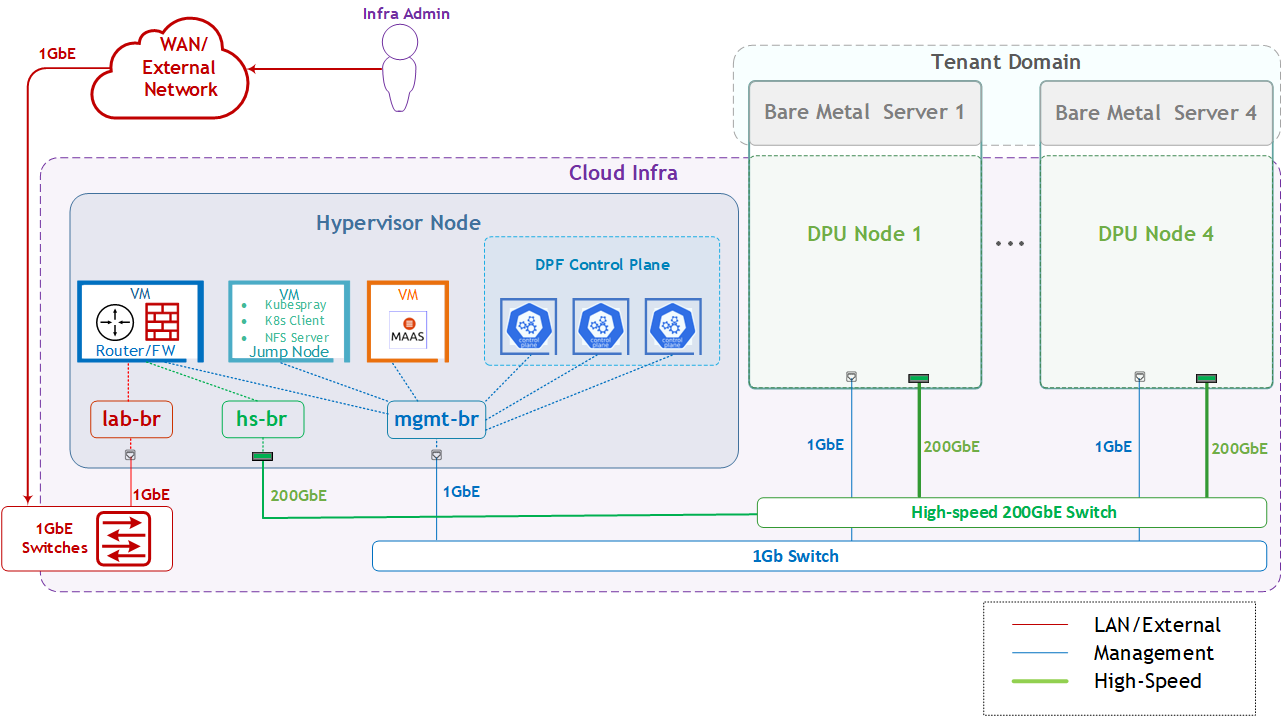

The logical design includes the following components:

- 1 x Hypervisor node (KVM-based) with ConnectX-7, hosting:

  - 1 x Firewall VM

  - 1 x Jump Node VM

  - 1 x MaaS VM

  - 3 x K8s Master VMs running all K8s management components

- 4 x Worker nodes (PCIe Gen5), each with 1 x BlueField-3 NIC

- 1 x High-Speed (HS) switch

- 1 Gb Host Management network

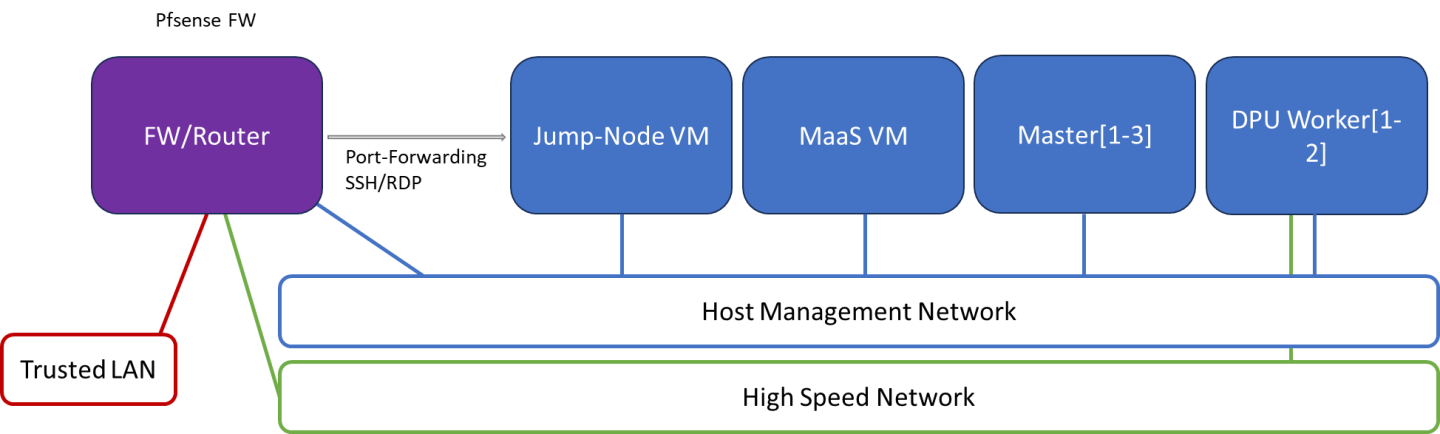

Firewall Design

The pfSense firewall in this solution serves a dual purpose:

- Firewall—provides an isolated environment for the DPF system, ensuring secure operations

- Router—enables Internet access for the management network

Port-forwarding rules for SSH and RDP are configured on the firewall to route traffic to the jump node’s IP address in the host management network. From the jump node, administrators can manage and access various devices in the setup, as well as handle the deployment of the Kubernetes (K8s) cluster and DPF components.

The following diagram illustrates the firewall design used in this solution:

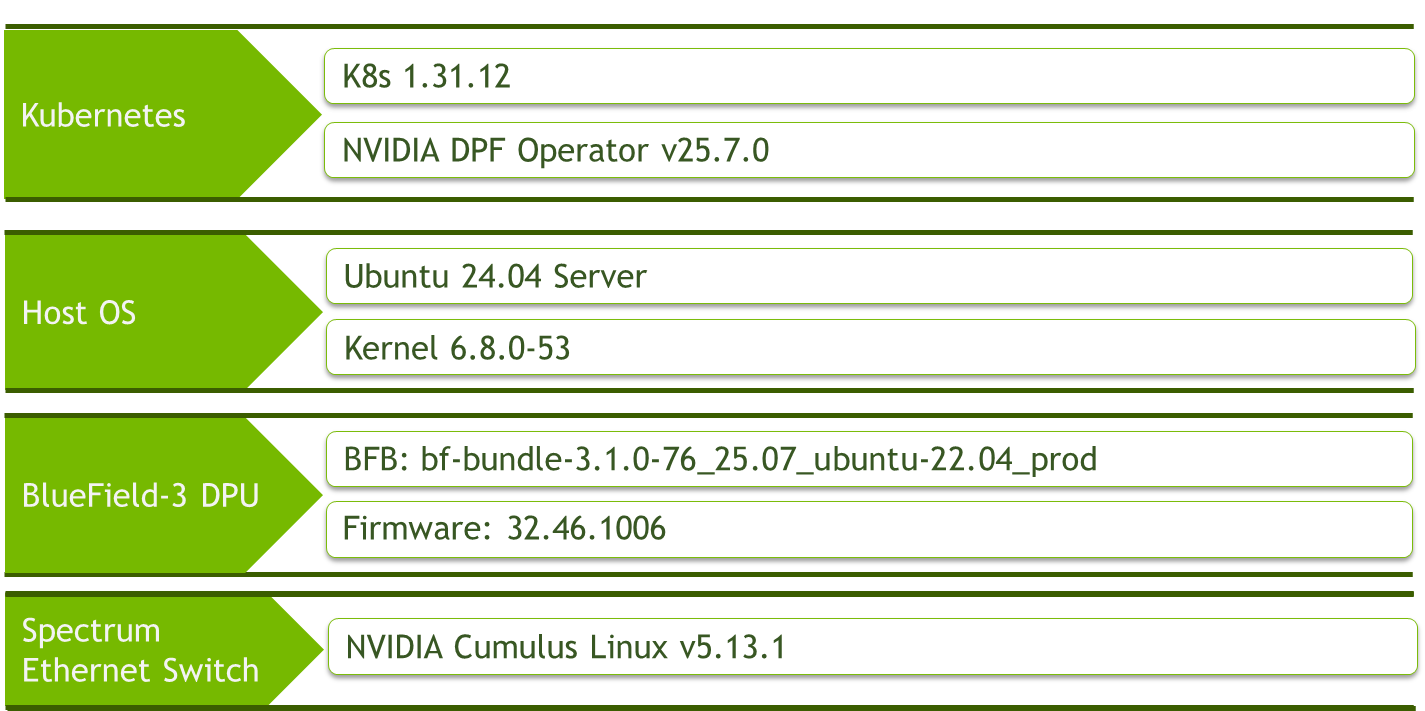

Software Stack Components

Make sure to use exactly the software versions listed below.

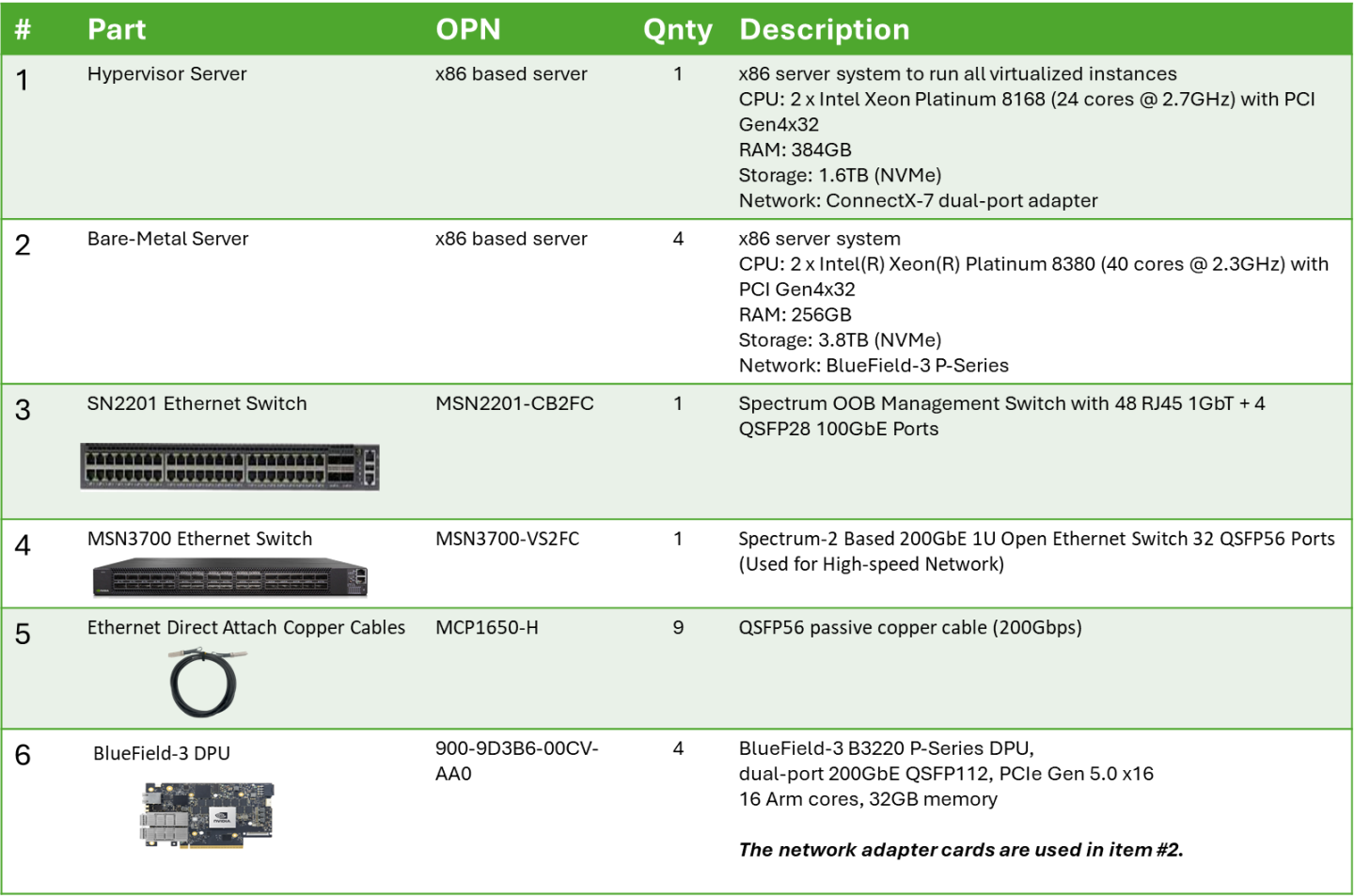

Bill of Materials

Deployment and Configuration

Node and Switch Definitions

These are the definitions and parameters used for deploying the demonstrated fabric:

Switch Ports Usage

| Hostname | Rack ID | Ports |
|----------|---------|-------|
| | 1 | swp1-5 |
| | 1 | swp1-9 |

Hosts | |||||

Rack | Server Type | Server Name | Switch Port | IP and NICs | Default Gateway |

Rack1 | Hypervisor Node |

| mgmt-switch: hs-switch: | lab-br (interface eno1): Trusted LAN IP mgmt-br (interface eno2): - hs-br (interface enp1s0): - | Trusted LAN GW |

Rack1 | Firewall (Virtual) |

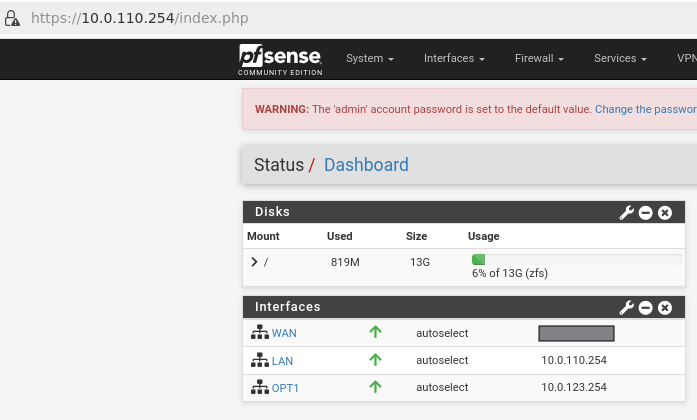

| - | WAN (lab-br): Trusted LAN IP LAN (mgmt-br): 10.0.110.254/24 OPT1(hs-br): 10.0.123.254/22 | Trusted LAN GW |

Rack1 | Jump Node (Virtual) |

| - | enp1s0: 10.0.110.253/24 | 10.0.110.254 |

Rack1 | MaaS (Virtual) |

| - | enp1s0: 10.0.110.252/24 | 10.0.110.254 |

Rack1 | Master Node (Virtual) |

| - | enp1s0: 10.0.110.1/24 | 10.0.110.254 |

Rack1 | Master Node (Virtual) |

| - | enp1s0: 10.0.110.2/24 | 10.0.110.254 |

Rack1 | Master Node (Virtual) |

| - | enp1s0: 10.0.110.3/24 | 10.0.110.254 |

Rack1 | Worker Node |

| mgmt-switch: hs-switch: | dpubmc: 10.0.110.201/24 dpuoob: 10.0.110.211/24 ens1f0np0/ens1f1np1: 10.0.120.0/22 | 10.0.110.254 |

Rack1 | Worker Node |

| mgmt-switch: hs-switch: | dpubmc: 10.0.110.202/24 dpuoob: 10.0.110.212/24 ens1f0np0/ens1f1np1: 10.0.120.0/22 | 10.0.110.254 |

Rack1 | Worker Node |

| mgmt-switch: hs-switch: | dpubmc: 10.0.110.203/24 dpuoob: 10.0.110.213/24 ens1f0np0/ens1f1np1: 10.0.120.0/22 | 10.0.110.254 |

Rack1 | Worker Node |

| mgmt-switch: hs-switch: | dpubmc: 10.0.110.204/24 dpuoob: 10.0.110.214/24 ens1f0np0/ens1f1np1: 10.0.120.0/22 | 10.0.110.254 |
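The firewall's OPT1 address (10.0.123.254/22) and the workers' high-speed addresses (10.0.120.0/22) share one /22 network, which spans 10.0.120.0 through 10.0.123.255. If you adapt the addressing to your environment, a quick membership check helps catch typos. The following bash sketch is a hypothetical helper, not part of DPF or MaaS; it converts dotted-quad addresses to integers and compares them under the prefix mask.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed (exit status 0) if address $1 lies inside CIDR $2.
ip_in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Netmask with the top $prefix bits set, truncated to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

ip_in_cidr 10.0.123.254 10.0.120.0/22 && echo "OPT1 address is inside 10.0.120.0/22"
```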

Wiring

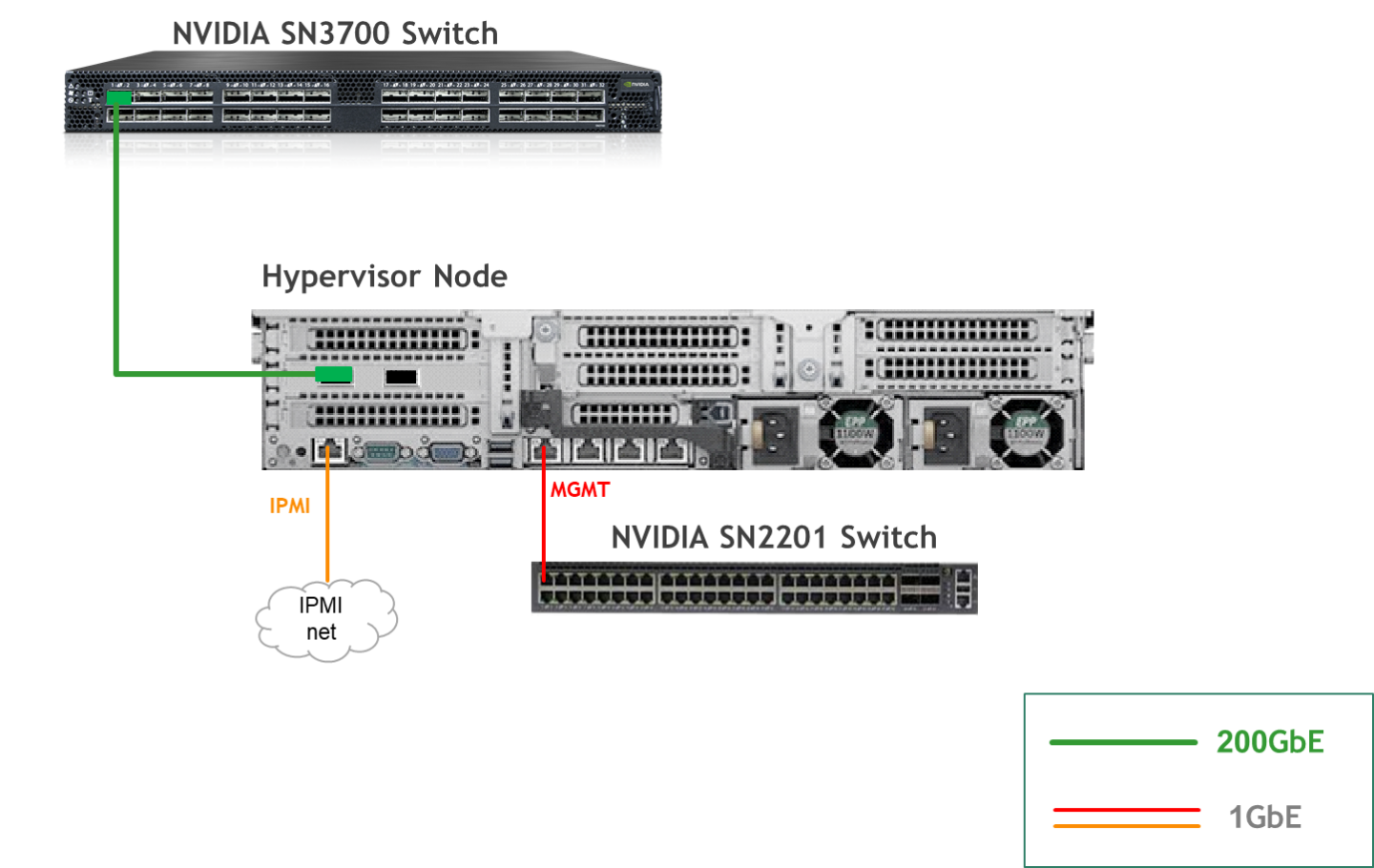

Hypervisor Node

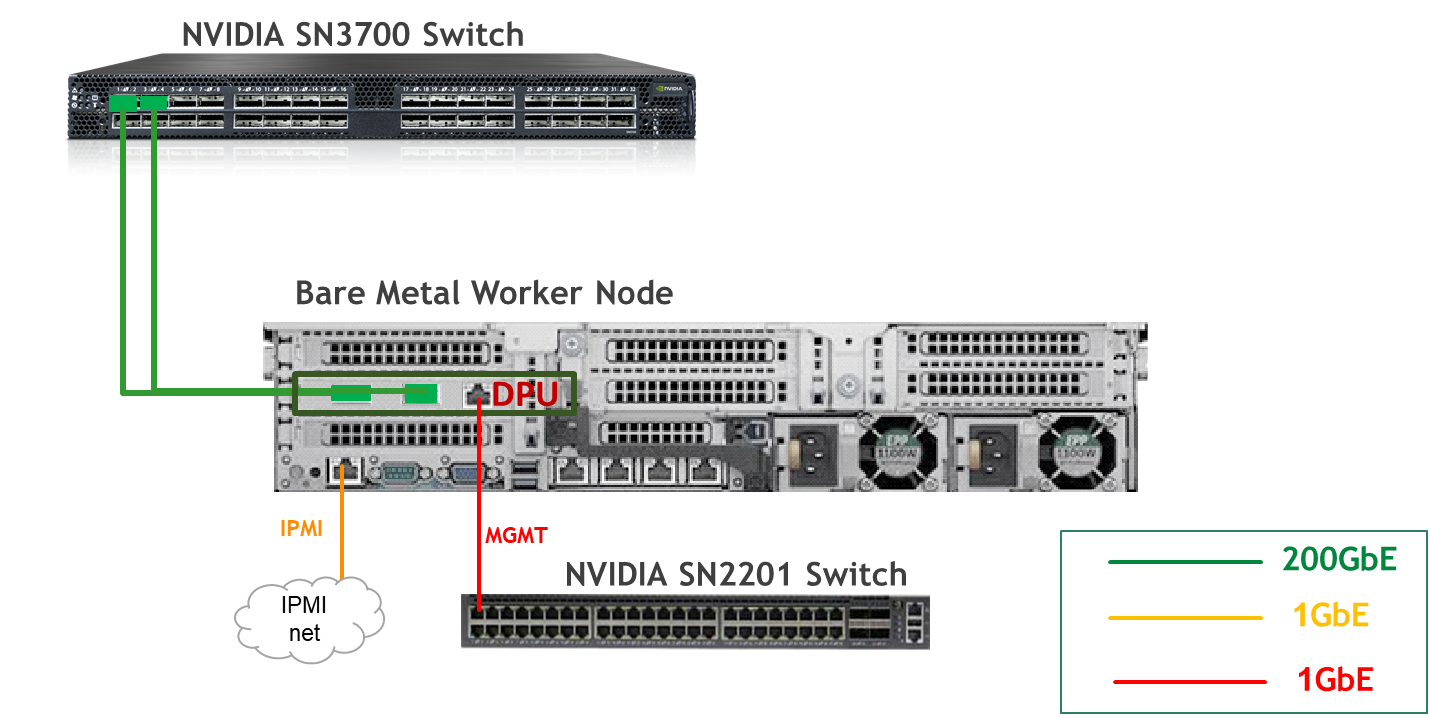

Bare Metal Worker Node

Fabric Configuration

Updating Cumulus Linux

As a best practice, make sure to use the latest released Cumulus Linux NOS version.

For information on how to upgrade Cumulus Linux, refer to the Cumulus Linux User Guide.

Configuring the Cumulus Linux Switch

The SN3700 switch (hs-switch) is configured as follows:

SN3700 Switch Console

nv set bridge domain br_hs untagged 1

nv set interface swp1-5 bridge domain br_hs

nv set interface swp1-5 link state up

nv set interface swp1-5 type swp

nv config apply -y

nv config save -y

The SN2201 switch (mgmt-switch) is configured as follows:

SN2201 Switch Console

nv set interface swp1-3 link state up

nv set interface swp1-3 type swp

nv set interface swp1-3 bridge domain br_default

nv set bridge domain br_default untagged 1

nv config apply -y

nv config save -y

Host Configuration

Make sure that the BIOS settings on the worker node servers have SR-IOV enabled and that the servers are tuned for maximum performance.

All worker nodes must have the BlueField-3 NIC in the same PCIe placement and must expose the same interface names.

Make sure you have the MAC addresses of each DPU's BMC and OOB interfaces; they are required later to configure static DHCP leases in MaaS.

Hypervisor Installation and Configuration

The hypervisor used in this Reference Deployment Guide (RDG) is based on Ubuntu 24.04 with KVM.

While this document does not detail the KVM installation process, it is important to note that the setup requires the following ISOs to deploy the Firewall, Jump, and MaaS virtual machines (VMs):

- Ubuntu 24.04

- pfSense-CE-2.7.2

To implement the solution, three Linux bridges must be created on the hypervisor:

- lab-br – Connects the Firewall VM to the trusted LAN.

- mgmt-br – Connects the various VMs to the host management network.

- hs-br – Connects the Firewall VM to the high-speed network.

Ensure a DHCP record is configured for the lab-br bridge interface in your trusted LAN to assign it an IP address.

Additionally, an MTU of 9000 must be configured on the management and high-speed bridges (mgmt-br and hs-br) as well as on their uplink interfaces to ensure optimal performance.

Hypervisor netplan configuration

network:
  ethernets:
    eno1:
      dhcp4: false
    eno2:
      dhcp4: false
      mtu: 9000
    ens2f0np0:
      dhcp4: false
      mtu: 9000
  bridges:
    lab-br:
      interfaces: [eno1]
      dhcp4: true
    mgmt-br:
      interfaces: [eno2]
      dhcp4: false
      mtu: 9000
    hs-br:
      interfaces: [ens2f0np0]
      dhcp4: false
      mtu: 9000
  version: 2

Apply the configuration:

Hypervisor Console

$ sudo netplan apply
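To confirm that the 9000-byte MTU took effect on the bridges and their uplinks, you can read each interface's MTU from sysfs. A minimal bash sketch; check_mtu is a hypothetical helper, and its optional third argument exists only so it can be pointed at a test directory instead of /sys/class/net.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare an interface's MTU (read from sysfs) to the expected value.
# Usage: check_mtu <iface> <expected_mtu> [sysfs_root]
# Returns 0 on match, 1 on mismatch, 2 if the interface is not found.
check_mtu() {
  local iface=$1 expected=$2 root=${3:-/sys/class/net}
  local actual
  if [[ ! -r "$root/$iface/mtu" ]]; then
    echo "$iface: not found" >&2
    return 2
  fi
  actual=$(<"$root/$iface/mtu")
  if [[ "$actual" == "$expected" ]]; then
    echo "$iface: MTU $actual OK"
  else
    echo "$iface: MTU $actual, expected $expected" >&2
    return 1
  fi
}
```

On the hypervisor, run for example `check_mtu mgmt-br 9000`, and likewise for hs-br, eno2, and ens2f0np0.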

Prepare Infrastructure Servers

Firewall VM - pfSense Installation and Interface Configuration

Download the pfSense CE (Community Edition) ISO to your hypervisor and proceed with the software installation.

Suggested spec:

- vCPU: 2

- RAM: 2GB

- Storage: 10GB

Network interfaces

- Bridge device connected to lab-br

- Bridge device connected to mgmt-br

- Bridge device connected to hs-br

The Firewall VM must be connected to all three Linux bridges on the hypervisor. Before beginning the installation, ensure that three virtual network interfaces of type "Bridge device" are configured. Each interface should be connected to a different bridge (lab-br, mgmt-br, and hs-br) as illustrated in the diagram below.

After completing the installation, the setup wizard displays a menu with several options, such as "Assign Interfaces" and "Reboot System." During this phase, you must configure the network interfaces for the Firewall VM.

Select Option 2: "Set interface(s) IP address" and configure the interfaces as follows:

- WAN (lab-br) – Trusted LAN IP (Static/DHCP)

- LAN (mgmt-br) – Static IP 10.0.110.254/24

- OPT1 (hs-br) – Static IP 10.0.123.254/22

Once the interface configuration is complete, use a web browser within the host management network to access the Firewall web interface and finalize the configuration.

Next, install the Jump VM. This VM runs the web browser used to access the firewall’s web interface (UI) for post-installation configuration.

Jump VM

Suggested specifications:

- vCPU: 4

- RAM: 8GB

- Storage: 100GB

- Network interface: Bridge device, connected to mgmt-br

Procedure:

Proceed with a standard Ubuntu 24.04 installation. Use the following login credentials across all hosts in this setup:

| Username | Password |
|----------|----------|
| depuser | user |

Enable internet connectivity and DNS resolution by creating the following Netplan configuration:

Note: Use 10.0.110.254 as a temporary DNS nameserver until the MaaS VM is installed and configured. After completing the MaaS installation, update the Netplan file to replace this address with the MaaS IP: 10.0.110.252.

Jump Node netplan

network:
  ethernets:
    enp1s0:
      dhcp4: false
      addresses: [10.0.110.253/24]
      nameservers:
        search: [dpf.rdg.local.domain]
        addresses: [10.0.110.254]
      routes:
        - to: default
          via: 10.0.110.254
  version: 2

Apply the configuration:

Jump Node Console

depuser@jump:~$ sudo netplan apply

Update and upgrade the system:

Jump Node Console

depuser@jump:~$ sudo apt update -y
depuser@jump:~$ sudo apt upgrade -y

Install and configure the Xfce desktop environment and XRDP (complementary packages for RDP):

Jump Node Console

depuser@jump:~$ sudo apt install -y xfce4 xfce4-goodies
depuser@jump:~$ sudo apt install -y lightdm-gtk-greeter
depuser@jump:~$ sudo apt install -y xrdp
depuser@jump:~$ echo "xfce4-session" | tee .xsession
depuser@jump:~$ sudo systemctl restart xrdp

Install Firefox for accessing the Firewall web interface:

Jump Node Console

$ sudo apt install -y firefox

Install and configure an NFS server with the /mnt/dpf_share directory:

Jump Node Console

$ sudo apt install -y nfs-server
$ sudo mkdir -m 777 /mnt/dpf_share
$ sudo vi /etc/exports

Add the following line to /etc/exports:

Jump Node Console

/mnt/dpf_share 10.0.110.0/24(rw,sync,no_subtree_check)

Restart the NFS server:

Jump Node Console

$ sudo systemctl restart nfs-server

Create the directory bfb under /mnt/dpf_share with the same permissions as the parent directory:

Jump Node Console

$ sudo mkdir -m 777 /mnt/dpf_share/bfb

Generate an SSH key pair for depuser on the jump node. The public key is later imported into the MaaS admin user profile to enable password-less login to the provisioned servers:

Jump Node Console

depuser@jump:~$ ssh-keygen -t rsa

Reboot the jump node to display the graphical user interface:

Jump Node Console

depuser@jump:~$ sudo reboot

Note: After setting up port-forwarding rules on the firewall (next steps), remote login to the graphical interface of the Jump node will be available.

Concurrent login to the local graphical console and over RDP isn't possible; make sure to log out from the local console first when switching to an RDP connection.

Firewall VM – Web Configuration

From your Jump node, open a Firefox web browser and navigate to the pfSense web UI at http://10.0.110.254 (default login credentials: admin/pfsense). The login page should appear as follows:

In the screenshots, the trusted LAN IP addresses shown under "DNS servers" and "Interfaces - WAN" are blurred.

Proceed with the following configurations:

The following screenshots display only part of the configuration view. Make sure not to skip any of the steps listed below!

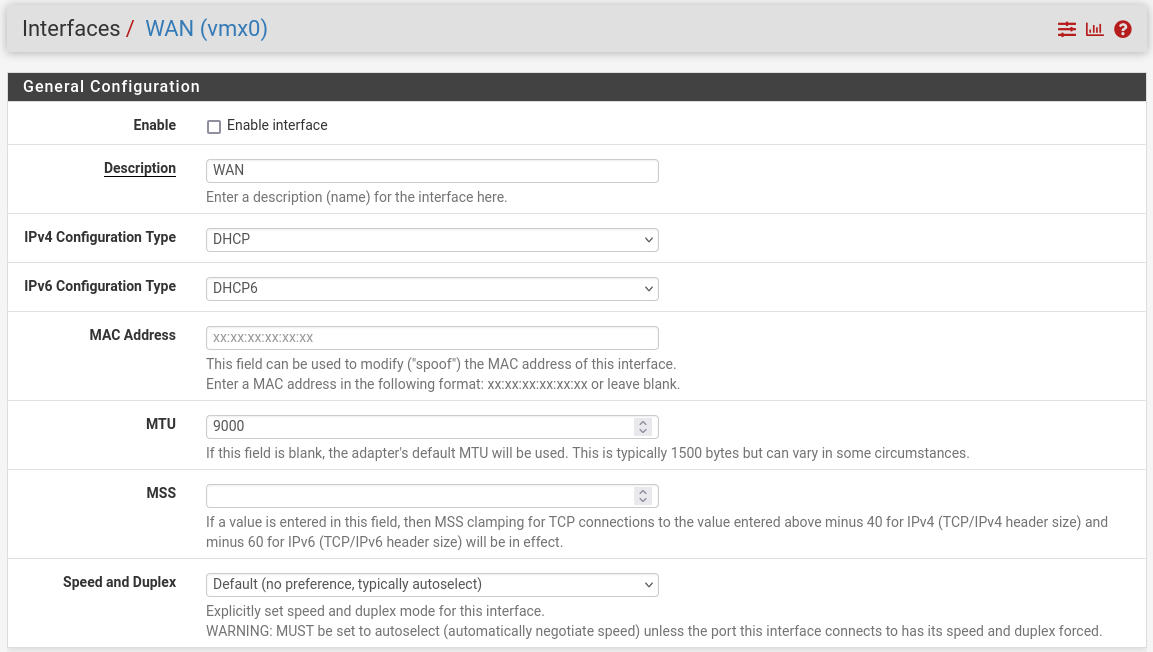

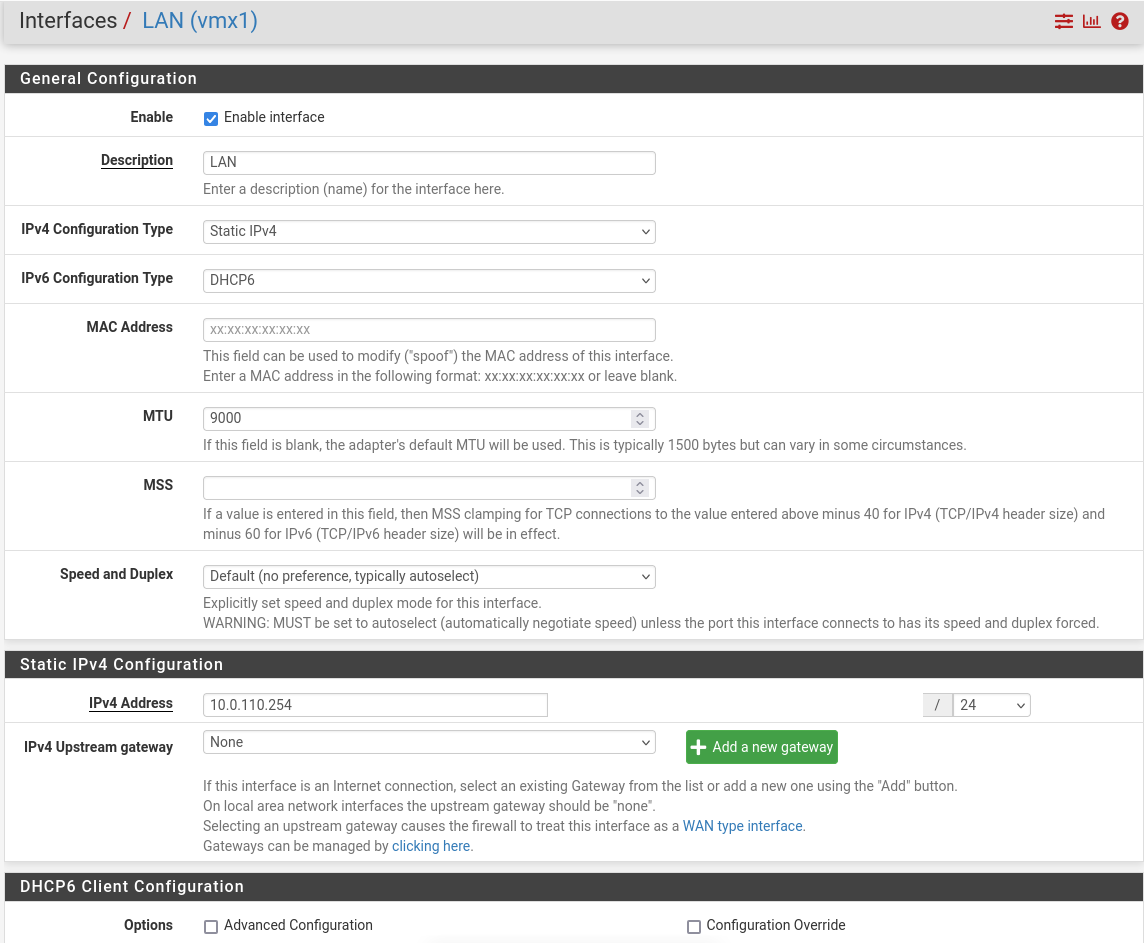

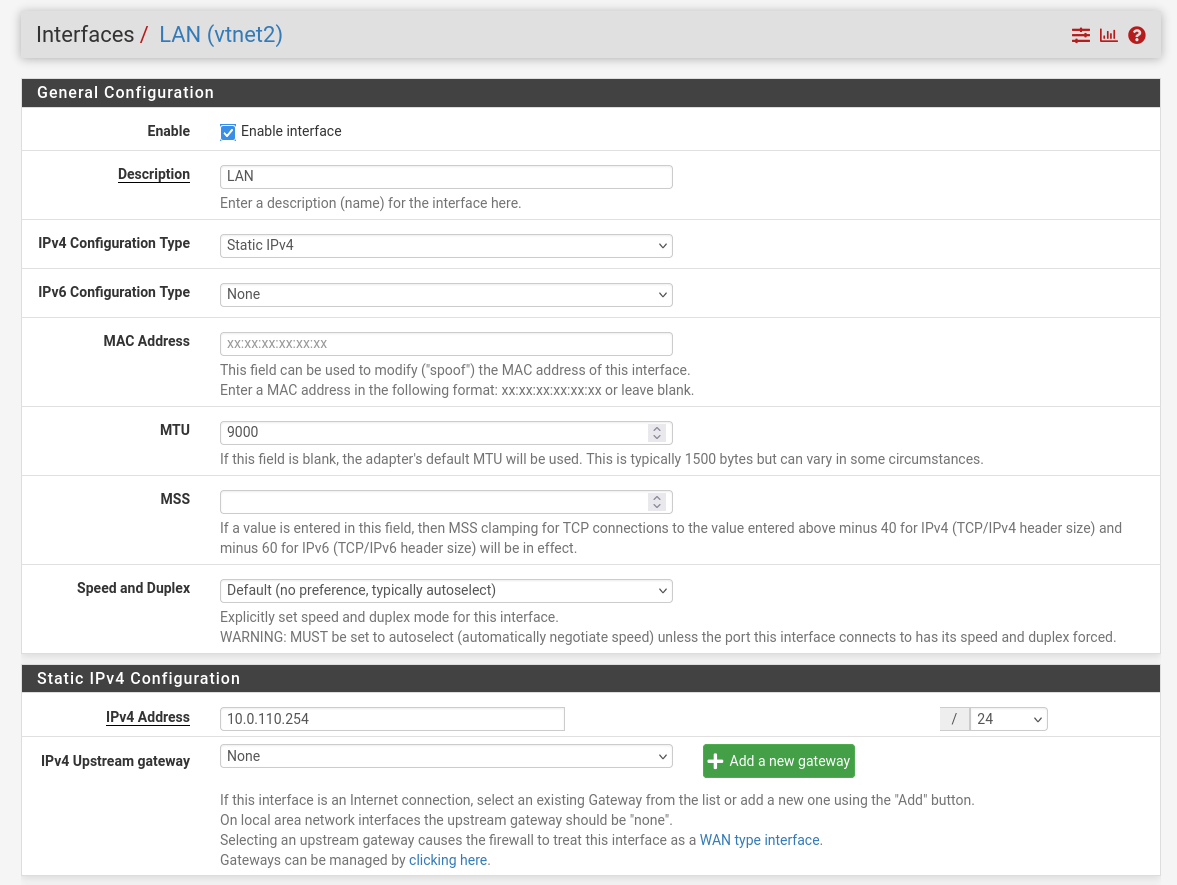

Interfaces:

- WAN (lab-br) – mark “Enable interface”, unmark “Block private networks and loopback addresses”

- LAN (mgmt-br) – mark “Enable interface”, “IPv4 configuration type”: Static IPv4 ("IPv4 Address": 10.0.110.254/24, "IPv4 Upstream Gateway": None), “MTU”: 9000

- OPT1 (hs-br) – mark “Enable interface”, “IPv4 configuration type”: Static IPv4 ("IPv4 Address": 10.0.123.254/22, "IPv4 Upstream Gateway": None), “MTU”: 9000

Firewall:

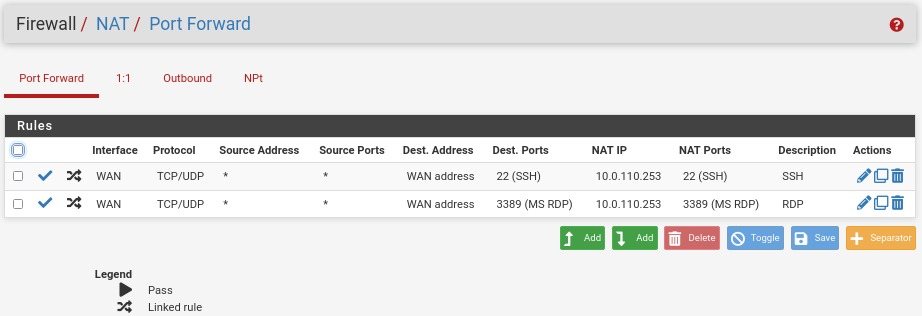

NAT -> Port Forward -> Add rule -> “Interface”: WAN, “Address Family”: IPv4, “Protocol”: TCP, “Destination”: WAN address, “Destination port range”: (“From port”: SSH, “To port”: SSH), “Redirect target IP”: (“Type”: Address or Alias, “Address”: 10.0.110.253), “Redirect target port”: SSH, “Description”: NAT SSH

NAT -> Port Forward -> Add rule -> “Interface”: WAN, “Address Family”: IPv4, “Protocol”: TCP, “Destination”: WAN address, “Destination port range”: (“From port”: MS RDP, “To port”: MS RDP), “Redirect target IP”: (“Type”: Address or Alias, “Address”: 10.0.110.253), “Redirect target port”: MS RDP, “Description”: NAT RDP

Rules -> OPT1 -> Add rule -> “Action”: Pass, “Interface”: OPT1, “Address Family”: IPv4+IPv6, “Protocol”: Any, “Source”: Any, “Destination”: Any

MaaS VM

Suggested specifications:

- vCPU: 4

- RAM: 4 GB

- Storage: 100 GB

- Network interface: Bridge device, connected to mgmt-br

Procedure:

- Perform a regular Ubuntu installation on the MaaS VM.

Create the following Netplan configuration to enable internet connectivity and DNS resolution:

Note: Use 10.0.110.254 as a temporary DNS nameserver. After the MaaS installation, replace it with the MaaS IP address (10.0.110.252) in both the Jump and MaaS VM Netplan files.

MaaS netplan

network:
  ethernets:
    enp1s0:
      dhcp4: false
      addresses: [10.0.110.252/24]
      nameservers:
        search: [dpf.rdg.local.domain]
        addresses: [10.0.110.254]
      routes:
        - to: default
          via: 10.0.110.254
  version: 2

Apply the netplan configuration:

MaaS Console

depuser@maas:~$ sudo netplan apply

Update and upgrade the system:

MaaS Console

depuser@maas:~$ sudo apt update -y
depuser@maas:~$ sudo apt upgrade -y

Install PostgreSQL and configure the database for MaaS:

MaaS Console

$ sudo -i
# apt install -y postgresql
# systemctl disable --now systemd-timesyncd
# export MAAS_DBUSER=maasuser
# export MAAS_DBPASS=maaspass
# export MAAS_DBNAME=maas
# sudo -i -u postgres psql -c "CREATE USER \"$MAAS_DBUSER\" WITH ENCRYPTED PASSWORD '$MAAS_DBPASS'"
# sudo -i -u postgres createdb -O "$MAAS_DBUSER" "$MAAS_DBNAME"

Install MaaS:

MaaS Console

# snap install maas

Initialize MaaS:

MaaS Console

# maas init region+rack --maas-url http://10.0.110.252:5240/MAAS --database-uri "postgres://$MAAS_DBUSER:$MAAS_DBPASS@localhost/$MAAS_DBNAME"

Create an admin account:

MaaS Console

# maas createadmin --username admin --password admin --email admin@example.com

Save the admin API key:

MaaS Console

# maas apikey --username admin > admin-apikey

Log in to the MaaS server:

MaaS Console

# maas login admin http://localhost:5240/MAAS "$(cat admin-apikey)"

Configure MaaS (Substitute <Trusted_LAN_NTP_IP> and <Trusted_LAN_DNS_IP> with the IP addresses in your environment):

MaaS Console

# maas admin domain update maas name="dpf.rdg.local.domain"
# maas admin maas set-config name=ntp_servers value="<Trusted_LAN_NTP_IP>"
# maas admin maas set-config name=network_discovery value="disabled"
# maas admin maas set-config name=upstream_dns value="<Trusted_LAN_DNS_IP>"
# maas admin maas set-config name=dnssec_validation value="no"
# maas admin maas set-config name=default_osystem value="ubuntu"

Define and configure IP ranges and subnets:

MaaS Console

# maas admin ipranges create type=dynamic start_ip="10.0.110.51" end_ip="10.0.110.120"
# maas admin ipranges create type=dynamic start_ip="10.0.110.225" end_ip="10.0.110.240"
# maas admin ipranges create type=reserved start_ip="10.0.110.10" end_ip="10.0.110.10" comment="c-plane VIP"
# maas admin ipranges create type=reserved start_ip="10.0.110.200" end_ip="10.0.110.200" comment="kamaji VIP"
# maas admin ipranges create type=reserved start_ip="10.0.110.251" end_ip="10.0.110.254" comment="dpfmgmt"
# maas admin vlan update 0 untagged dhcp_on=True primary_rack=maas mtu=9000
# maas admin dnsresources create fqdn=kube-vip.dpf.rdg.local.domain ip_addresses=10.0.110.10
# maas admin dnsresources create fqdn=jump.dpf.rdg.local.domain ip_addresses=10.0.110.253
# maas admin dnsresources create fqdn=fw.dpf.rdg.local.domain ip_addresses=10.0.110.254
# maas admin fabrics create
Success.
Machine-readable output follows:
{
    "class_type": null,
    "name": "fabric-1",
    "id": 1,
...
# maas admin subnets create name="fake-dpf" cidr="20.20.20.0/24" fabric=1
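The two dynamic ranges should not overlap any of the fixed addresses used elsewhere in this guide (master nodes, VIPs, DPU BMC/OOB leases, and the MaaS, Jump, and firewall VMs); otherwise MaaS could hand one of them to another machine over DHCP. A minimal bash sketch of such a check, assuming the address lists below are copied from this guide; the arrays and functions are hypothetical and nothing here talks to MaaS.

```shell
#!/usr/bin/env bash
# Hypothetical sanity check (not a MaaS feature): report any fixed address
# that falls inside one of the MaaS dynamic ranges.

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Dynamic ranges as "start-end" pairs, copied from the ipranges commands above.
DYNAMIC_RANGES=("10.0.110.51-10.0.110.120" "10.0.110.225-10.0.110.240")

# Fixed addresses used elsewhere in this guide.
FIXED_IPS=(10.0.110.1 10.0.110.2 10.0.110.3 10.0.110.10 10.0.110.200
           10.0.110.201 10.0.110.202 10.0.110.203 10.0.110.204
           10.0.110.211 10.0.110.212 10.0.110.213 10.0.110.214
           10.0.110.252 10.0.110.253 10.0.110.254)

# Print each given IP that lies inside a dynamic range (inclusive bounds);
# return 1 if any collision is found.
find_collisions() {
  local ip range start end n rc=0
  for ip in "$@"; do
    n=$(ip_to_int "$ip")
    for range in "${DYNAMIC_RANGES[@]}"; do
      start=$(ip_to_int "${range%-*}")
      end=$(ip_to_int "${range#*-}")
      if (( n >= start && n <= end )); then
        echo "$ip collides with dynamic range $range"
        rc=1
      fi
    done
  done
  return "$rc"
}

find_collisions "${FIXED_IPS[@]}" && echo "No collisions found"
```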

Configure static DHCP leases for the worker nodes' DPUs (replace the MAC addresses below with the BMC and OOB interface MAC addresses of your workers' DPUs):

MaaS Console

# maas admin reserved-ips create ip="10.0.110.201" mac_address="58:a2:e1:73:6a:0b" comment="dpu-worker1-bmc"
# maas admin reserved-ips create ip="10.0.110.211" mac_address="58:a2:e1:73:6a:0a" comment="dpu-worker1-oob"
# maas admin reserved-ips create ip="10.0.110.202" mac_address="58:a2:e1:73:6a:7d" comment="dpu-worker2-bmc"
# maas admin reserved-ips create ip="10.0.110.212" mac_address="58:a2:e1:73:6a:7c" comment="dpu-worker2-oob"
# maas admin reserved-ips create ip="10.0.110.203" mac_address="58:a2:e1:73:6a:a7" comment="dpu-worker3-bmc"
# maas admin reserved-ips create ip="10.0.110.213" mac_address="58:a2:e1:73:6a:a6" comment="dpu-worker3-oob"
# maas admin reserved-ips create ip="10.0.110.204" mac_address="58:a2:e1:73:6a:dd" comment="dpu-worker4-bmc"
# maas admin reserved-ips create ip="10.0.110.214" mac_address="58:a2:e1:73:6a:dc" comment="dpu-worker4-oob"
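The eight commands above follow one pattern: for worker N, the DPU BMC gets 10.0.110.(200+N) and the DPU OOB gets 10.0.110.(210+N). With more workers, generating the commands from a single MAC table reduces copy-paste mistakes. A bash sketch, assuming that numbering scheme and one BMC/OOB MAC pair per worker; the WORKERS table and gen_reserved_ip_cmds are hypothetical, and the function only prints commands without calling MaaS.

```shell
#!/usr/bin/env bash
# Hypothetical generator: print "maas admin reserved-ips create" commands for
# each worker's DPU BMC and OOB interfaces from one table.
# Example MAC values from this guide; replace them with your own.
# Row format: <worker-index> <bmc-mac> <oob-mac>
WORKERS=(
  "1 58:a2:e1:73:6a:0b 58:a2:e1:73:6a:0a"
  "2 58:a2:e1:73:6a:7d 58:a2:e1:73:6a:7c"
  "3 58:a2:e1:73:6a:a7 58:a2:e1:73:6a:a6"
  "4 58:a2:e1:73:6a:dd 58:a2:e1:73:6a:dc"
)

# Assumed addressing scheme: BMC = 10.0.110.(200+N), OOB = 10.0.110.(210+N).
gen_reserved_ip_cmds() {
  local row idx bmc oob
  for row in "${WORKERS[@]}"; do
    read -r idx bmc oob <<< "$row"
    printf 'maas admin reserved-ips create ip="10.0.110.%d" mac_address="%s" comment="dpu-worker%d-bmc"\n' \
      $((200 + idx)) "$bmc" "$idx"
    printf 'maas admin reserved-ips create ip="10.0.110.%d" mac_address="%s" comment="dpu-worker%d-oob"\n' \
      $((210 + idx)) "$oob" "$idx"
  done
}
```

Review the printed commands first, then run them as root on the MaaS VM after `maas login`, for example with `gen_reserved_ip_cmds | bash`.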

Complete MaaS setup:

- Connect to the Jump node GUI and access the MaaS UI at http://10.0.110.252:5240/MAAS.

- On the first page, verify the "Region Name" and "DNS Forwarder," then continue.

- On the image selection page, select Ubuntu 24.04 LTS (amd64) and sync the image.

- Import the previously generated SSH key (id_rsa.pub) of depuser into the MaaS admin user profile and finalize the setup.

- Update the DNS nameserver IP address in the Netplan files of both the Jump and MaaS VMs from 10.0.110.254 to 10.0.110.252, then reapply the configuration.
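The last step above changes only the nameserver entry; the default route must keep pointing at the firewall (10.0.110.254). A sed-based bash sketch that rewrites only a line of the exact form `addresses: [10.0.110.254]`, assuming the netplan layout shown earlier in this guide. switch_nameserver is a hypothetical helper, and the netplan file name on your VMs may differ (check /etc/netplan/).

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the DNS nameserver in a netplan file.
# Only "addresses: [<old>]" is rewritten; the route line ("via: <old>") and
# the interface address line ("addresses: [x.x.x.x/24]") do not match.
# Usage: switch_nameserver <file> [old_ip] [new_ip]
switch_nameserver() {
  local file=$1 old=${2:-10.0.110.254} new=${3:-10.0.110.252}
  # Escape dots so they match literally in the sed regular expression.
  local old_re=${old//./\\.}
  sed -i "s/addresses: \[${old_re}\]/addresses: [${new}]/" "$file"
}
```

For example, as root on each VM: `switch_nameserver /etc/netplan/<your-netplan-file>.yaml && netplan apply`.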

K8s Master VMs

Suggested specifications:

- vCPU: 8

- RAM: 16GB

- Storage: 100GB

- Network interface: Bridge device, connected to mgmt-br

Before provisioning the Kubernetes (K8s) Master VMs with MaaS, create the required empty virtual disks. Use the following one-liner to create three 100 GB QCOW2 virtual disks:

Hypervisor Console

$ for i in $(seq 1 3); do qemu-img create -f qcow2 /var/lib/libvirt/images/master$i.qcow2 100G; done

This command creates the following disks in the /var/lib/libvirt/images/ directory:

- master1.qcow2

- master2.qcow2

- master3.qcow2

Configure VMs in virt-manager:

Open virt-manager and create three virtual machines:

- Assign the corresponding virtual disk (master1.qcow2, master2.qcow2, or master3.qcow2) to each VM.

- Configure each VM with the suggested specifications (vCPU, RAM, storage, and network interface).

- During the VM setup, ensure the NIC is selected under the Boot Options tab. This ensures the VMs can PXE boot for MaaS provisioning.

- Once the configuration is complete, shut down all the VMs.

- After the VMs are created and configured, proceed to provision them via the MaaS interface. MaaS handles the OS installation and further setup as part of the deployment process.

Provision Master VMs Using MaaS

Install virsh and Set Up SSH Access

SSH to the MaaS VM from the Jump node:

MaaS Console

depuser@jump:~$ ssh maas
depuser@maas:~$ sudo -i

Install the virsh client to communicate with the hypervisor:

MaaS Console

# apt install -y libvirt-clients

Generate an SSH key for the root user and copy it to a hypervisor user that is a member of the libvirt group:

MaaS Console

# ssh-keygen -t rsa
# ssh-copy-id ubuntu@<hypervisor_MGMT_IP>

Verify SSH access and virsh communication with the hypervisor:

MaaS Console

# virsh -c qemu+ssh://ubuntu@<hypervisor_MGMT_IP>/system list --all

Expected output:

MaaS Console

 Id   Name      State
---------------------------
 1    fw        running
 2    jump      running
 3    maas      running
 -    master1   shut off
 -    master2   shut off
 -    master3   shut off

Copy the SSH key to the required MaaS directory (for snap-based installations):

MaaS Console

# mkdir -p /var/snap/maas/current/root/.ssh
# cp .ssh/id_rsa* /var/snap/maas/current/root/.ssh/

Get MAC Addresses of the Master VMs

Retrieve the MAC addresses of the Master VMs:

MaaS Console

# for i in $(seq 1 3); do virsh -c qemu+ssh://ubuntu@<hypervisor_MGMT_IP>/system dumpxml master$i | grep 'mac address'; done

Example output:

MaaS Console

<mac address='52:54:00:a9:9c:ef'/>

<mac address='52:54:00:19:6b:4d'/>

<mac address='52:54:00:68:39:7f'/>
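The MAC addresses above feed directly into the `maas admin machines create` commands in the next step. A bash sketch that builds those commands from the dumpxml output, assuming each master VM has exactly one NIC so that the N-th MAC line belongs to masterN; gen_machine_cmds and the HYPERVISOR variable are hypothetical, and the function only prints commands.

```shell
#!/usr/bin/env bash
# Hypothetical generator (not a MaaS tool): reads "<mac address='...'/>" lines
# on stdin and prints one "maas admin machines create" command per MAC.
# Assumption: one NIC per VM, lines arrive in master1, master2, ... order.
HYPERVISOR=${HYPERVISOR:-"<hypervisor_MGMT_IP>"}  # placeholder; set to your hypervisor IP

gen_machine_cmds() {
  local line mac i=0
  local re="mac address='([0-9a-f:]{17})'"
  while IFS= read -r line; do
    # Skip anything that is not a MAC address line.
    [[ $line =~ $re ]] || continue
    mac=${BASH_REMATCH[1]}
    i=$((i + 1))
    printf "maas admin machines create hostname=master%d architecture=amd64/generic mac_addresses='%s' power_type=virsh power_parameters_power_address=qemu+ssh://ubuntu@%s/system power_parameters_power_id=master%d skip_bmc_config=1 testing_scripts=none\n" \
      "$i" "$mac" "$HYPERVISOR" "$i"
  done
}
```

For example, pipe the output of the dumpxml loop above into `HYPERVISOR=<hypervisor_MGMT_IP> gen_machine_cmds`, review the printed commands, and only then run them.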

Add Master VMs to MaaS

Add the Master VMs to MaaS:

Info: Once added, MaaS automatically starts commissioning (discovery and introspection) the newly added VMs.

MaaS Console

# maas admin machines create hostname=master1 architecture=amd64/generic mac_addresses='52:54:00:a9:9c:ef' power_type=virsh power_parameters_power_address=qemu+ssh://ubuntu@<hypervisor_MGMT_IP>/system power_parameters_power_id=master1 skip_bmc_config=1 testing_scripts=none
Success.
Machine-readable output follows:
{
    "description": "",
    "status_name": "Commissioning",
    ...
    "status": 1,
    ...
    "system_id": "c3seyq",
    ...
    "fqdn": "master1.dpf.rdg.local.domain",
    "power_type": "virsh",
    ...
    "status_message": "Commissioning",
    "resource_uri": "/MAAS/api/2.0/machines/c3seyq/"
}
# maas admin machines create hostname=master2 architecture=amd64/generic mac_addresses='52:54:00:19:6b:4d' power_type=virsh power_parameters_power_address=qemu+ssh://ubuntu@<hypervisor_MGMT_IP>/system power_parameters_power_id=master2 skip_bmc_config=1 testing_scripts=none
# maas admin machines create hostname=master3 architecture=amd64/generic mac_addresses='52:54:00:68:39:7f' power_type=virsh power_parameters_power_address=qemu+ssh://ubuntu@<hypervisor_MGMT_IP>/system power_parameters_power_id=master3 skip_bmc_config=1 testing_scripts=none

- The commands for master2 and master3 differ only in hostname, MAC address, and power ID. Verify commissioning by waiting for the status of all three VMs to change to "Ready" in MaaS.

After commissioning, the next phase is deployment (OS provisioning).

Configure Master VMs Network

To ensure persistence across reboots, assign a static IP address to the management interface of the master nodes.

For each Master VM:

Navigate to Network and click "actions" near the management interface (a small arrowhead pointing down), then select "Edit Physical".

Configure as follows:

- Subnet: 10.0.110.0/24

- IP Mode: Static Assign

- Address: 10.0.110.1 for master1, 10.0.110.2 for master2, and 10.0.110.3 for master3

Save the interface settings for each VM.

Deploy Master VMs Using Cloud-Init

Use the following cloud-init script to install the necessary software and ensure persistence:

Master nodes cloud-init

#cloud-config
system_info:
  default_user:
    name: depuser
    passwd: "$6$jOKPZPHD9XbG72lJ$evCabLvy1GEZ5OR1Rrece3NhWpZ2CnS0E3fu5P1VcZgcRO37e4es9gmriyh14b8Jx8gmGwHAJxs3ZEjB0s0kn/"
    lock_passwd: false
    groups: [adm, audio, cdrom, dialout, dip, floppy, lxd, netdev, plugdev, sudo, video]
    sudo: ["ALL=(ALL) NOPASSWD:ALL"]
    shell: /bin/bash
ssh_pwauth: True
package_upgrade: true
runcmd:
  - apt-get update
  - apt-get -y install nfs-common

Deploy the master VMs:

- Select all three Master VMs → Actions → Deploy.

- Toggle Cloud-init user-data and paste the cloud-init script.

Start the deployment and wait for the status to change to "Ubuntu 24.04 LTS".

Verify Deployment

SSH into the Master VMs from the Jump node:

Jump Node Console

depuser@jump:~$ ssh master1
depuser@master1:~$

Run sudo without a password:

Master1 Console

depuser@master1:~$ sudo -i
root@master1:~#

Verify installed packages:

Master1 Console

root@master1:~# apt list --installed | grep nfs-common
nfs-common/noble,now 1:2.6.4-3ubuntu5 amd64 [installed]

Reboot the Master VMs to complete the provisioning.

Master1 Console

root@master1:~# reboot

Repeat the verification commands for master2 and master3.

K8s Cluster Deployment and Configuration

Kubespray Deployment and Configuration

In this solution, the Kubernetes (K8s) cluster is deployed using a modified Kubespray (based on release v2.28.1) with a non-root depuser account from the Jump Node. The modifications in Kubespray are designed to meet the DPF prerequisites as described in the User Manual and facilitate cluster deployment and scaling.

- Download the modified Kubespray archive: modified_kubespray_v2.28.1.tar.gz.

Extract the contents and navigate to the extracted directory:

Jump Node Console

$ tar -xzf /home/depuser/modified_kubespray_v2.28.1.tar.gz
$ cd kubespray/
depuser@jump:~/kubespray$

Set the K8s API VIP address and DNS record. Replace them with your own IP address and DNS record if they differ:

Jump Node Console

depuser@jump:~/kubespray$ sed -i '/# kube_vip_address:/s/.*/kube_vip_address: 10.0.110.10/' inventory/mycluster/group_vars/k8s_cluster/addons.yml
depuser@jump:~/kubespray$ sed -i '/apiserver_loadbalancer_domain_name:/s/.*/apiserver_loadbalancer_domain_name: "kube-vip.dpf.rdg.local.domain"/' roles/kubespray_defaults/defaults/main/main.yml

Install the necessary dependencies and set up the Python virtual environment:

Jump Node Console

depuser@jump:~/kubespray$ sudo apt -y install python3-pip jq python3.12-venv
depuser@jump:~/kubespray$ python3 -m venv .venv
depuser@jump:~/kubespray$ source .venv/bin/activate
(.venv) depuser@jump:~/kubespray$ python3 -m pip install --upgrade pip
(.venv) depuser@jump:~/kubespray$ pip install -U -r requirements.txt
(.venv) depuser@jump:~/kubespray$ pip install ruamel-yaml

Verify that kube_network_plugin is set to flannel and that kube_proxy_remove is set to false in the inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml file.

inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml

depuser@jump:~/kubespray$ vim inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml
.....
# Choose network plugin (cilium, calico, kube-ovn, weave or flannel. Use cni for generic cni plugin)
# Can also be set to 'cloud', which lets the cloud provider setup appropriate routing
kube_network_plugin: flannel
....
# Kube-proxy proxyMode configuration.
# Can be ipvs, iptables
kube_proxy_remove: false
kube_proxy_mode: ipvs
.....
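Rather than checking these values by eye, you can verify them (and the kube_vip_address written by the earlier sed command) from the kubespray directory. A bash sketch; check_setting is a hypothetical helper, not part of Kubespray. It is not a YAML parser: it reads the first uncommented line whose first field is `key:`, strips double quotes from the value, and ignores nesting.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a "key: value" line in a YAML vars file has
# the expected value. Lines starting with "#" never match because their first
# field is "#". Indentation is ignored, so nested keys with the same name match too.
# Usage: check_setting <file> <key> <expected>
check_setting() {
  local file=$1 key=$2 expected=$3 actual
  actual=$(awk -v k="$key:" '$1 == k { gsub(/"/, "", $2); print $2; exit }' "$file")
  if [[ "$actual" == "$expected" ]]; then
    echo "OK   $key = $actual"
  else
    echo "FAIL $key = ${actual:-<unset>} (expected $expected)" >&2
    return 1
  fi
}

# Example, run from ~/kubespray:
# check_setting inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml kube_network_plugin flannel
# check_setting inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml kube_proxy_remove false
# check_setting inventory/mycluster/group_vars/k8s_cluster/addons.yml kube_vip_address 10.0.110.10
```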

Review and edit the inventory/mycluster/hosts.yaml file to define the cluster nodes. The following is the configuration for this deployment:

inventory/mycluster/hosts.yaml

all:
  hosts:
    master1:
      ansible_host: 10.0.110.1
      ip: 10.0.110.1
      access_ip: 10.0.110.1
      node_labels:
        "k8s.ovn.org/zone-name": "master1"
    master2:
      ansible_host: 10.0.110.2
      ip: 10.0.110.2
      access_ip: 10.0.110.2
      node_labels:
        "k8s.ovn.org/zone-name": "master2"
    master3:
      ansible_host: 10.0.110.3
      ip: 10.0.110.3
      access_ip: 10.0.110.3
      node_labels:
        "k8s.ovn.org/zone-name": "master3"
  children:
    kube_control_plane:
      hosts:
        master1:
        master2:
        master3:
    kube_node:
      hosts:
    etcd:
      hosts:
        master1:
        master2:
        master3:
    k8s_cluster:
      children:
        kube_control_plane:
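All three host entries have the same shape. When you add nodes later (for example, during scale-out), it can help to generate each entry rather than type it. Below is a minimal sketch with a hypothetical gen_host_entry helper. The indentation assumes the entry sits under all: hosts:, exactly as in the file above.

```shell
# POSIX sh. Hypothetical helper: print one hosts.yaml entry in the same shape
# as master1..master3, indented for placement under "all: hosts:".
gen_host_entry() (
  name=$1
  ip=$2
  printf '    %s:\n' "$name"
  printf '      ansible_host: %s\n' "$ip"
  printf '      ip: %s\n' "$ip"
  printf '      access_ip: %s\n' "$ip"
  printf '      node_labels:\n'
  printf '        "k8s.ovn.org/zone-name": "%s"\n' "$name"
)

# Regenerate the three control-plane entries used in this guide.
for i in 1 2 3; do
  gen_host_entry "master$i" "10.0.110.$i"
done
```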

Deploying Cluster Using Kubespray Ansible Playbook

Run the following command from the Jump Node to initiate the deployment process:

Note: Ensure you are in the Python virtual environment (.venv) when running the command.

Jump Node Console

(.venv) depuser@jump:~/kubespray$ ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml

It takes a while for this deployment to complete. Make sure there are no errors. Successful result example:

Tip: It is recommended to keep open the shell from which Kubespray was run. It will be useful later, when scaling out the cluster to add the worker nodes.
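Ansible ends every run with a PLAY RECAP section that lists per-host counters such as unreachable= and failed=. If you saved the playbook output with tee, the following sketch flags any host with a non-zero failure count. The log file name in the usage comment is hypothetical.

```shell
# POSIX sh. Check an ansible-playbook log for PLAY RECAP lines with failures.
# Usage (log name is hypothetical):
#   ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml | tee kubespray-deploy.log
#   recap_ok kubespray-deploy.log
recap_ok() (
  log=$1
  # Recap lines look like "master1 : ok=700 changed=150 unreachable=0 failed=0 ..."
  recap=$(grep -E '^[^ ]+[[:space:]]+:[[:space:]]+ok=' "$log" || true)
  if [ -z "$recap" ]; then
    echo "no PLAY RECAP found in $log (interrupted run?)" >&2
    exit 1
  fi
  # Any counter starting with a non-zero digit means at least one failure.
  bad=$(printf '%s\n' "$recap" | grep -E '(unreachable|failed)=[1-9]' || true)
  if [ -n "$bad" ]; then
    printf 'hosts with failures:\n%s\n' "$bad" >&2
    exit 1
  fi
  echo "all hosts: unreachable=0 failed=0"
)
```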

K8s Deployment Verification

To simplify managing the K8s cluster from the Jump Host, set up kubectl with bash auto-completion.

Copy kubectl and the kubeconfig file from master1 to the Jump Host:

Jump Node Console

## Connect to master1
depuser@jump:~$ ssh master1
depuser@master1:~$ cp /usr/local/bin/kubectl /tmp/
depuser@master1:~$ sudo cp /root/.kube/config /tmp/kube-config
depuser@master1:~$ sudo chmod 644 /tmp/kube-config

In another terminal tab, copy the files to the Jump Host:

Jump Node Console

depuser@jump:~$ scp master1:/tmp/kubectl /tmp/
depuser@jump:~$ sudo chown root:root /tmp/kubectl
depuser@jump:~$ sudo mv /tmp/kubectl /usr/local/bin/
depuser@jump:~$ mkdir -p ~/.kube
depuser@jump:~$ scp master1:/tmp/kube-config ~/.kube/config
depuser@jump:~$ chmod 600 ~/.kube/config

Enable bash auto-completion for kubectl:

Check whether bash-completion is installed:

Jump Node Console

depuser@jump:~$ type _init_completion

If installed, the output includes:

Jump Node Console

_init_completion is a function

If not installed, install it:

Jump Node Console

depuser@jump:~$ sudo apt install -y bash-completion

Set up the kubectl completion script:

Jump Node Console

depuser@jump:~$ kubectl completion bash | sudo tee /etc/bash_completion.d/kubectl > /dev/null
depuser@jump:~$ bash

Check the status of the nodes in the cluster:

Jump Node Console

depuser@jump:~$ kubectl get nodes

Expected output:

Jump Node Console

NAME      STATUS   ROLES           AGE   VERSION
master1   Ready    control-plane   6m    v1.31.12
master2   Ready    control-plane   6m    v1.31.12
master3   Ready    control-plane   7m    v1.31.12

Check the pods in all namespaces:

Jump Node Console

depuser@jump:~$ kubectl get pods -A

Expected output:

Jump Node Console

depuser@jump:~$ kubectl get pods -A
NAMESPACE     NAME                             READY   STATUS    RESTARTS   AGE
kube-system   coredns-d665d669-l4xpx           1/1     Running   0          12m
kube-system   coredns-d665d669-vzb8s           1/1     Running   0          12m
kube-system   dns-autoscaler-79f85f486f-4m97k  1/1     Running   0          12m
kube-system   kube-apiserver-master1           1/1     Running   0          13m
kube-system   kube-apiserver-master2           1/1     Running   0          13m
kube-system   kube-apiserver-master3           1/1     Running   0          13m
kube-system   kube-controller-manager-master1  1/1     Running   1          13m
kube-system   kube-controller-manager-master2  1/1     Running   1          13m
kube-system   kube-controller-manager-master3  1/1     Running   1          13m
kube-system   kube-flannel-87xxp               1/1     Running   0          12m
kube-system   kube-flannel-f9cdj               1/1     Running   0          12m
kube-system   kube-flannel-w5qdx               1/1     Running   0          12m
kube-system   kube-proxy-jjfpc                 1/1     Running   0          13m
kube-system   kube-proxy-kqxdz                 1/1     Running   0          13m
kube-system   kube-proxy-t7hmt                 1/1     Running   0          13m
kube-system   kube-scheduler-master1           1/1     Running   1          13m
kube-system   kube-scheduler-master2           1/1     Running   1          13m
kube-system   kube-scheduler-master3           1/1     Running   1          13m
kube-system   kube-vip-master1                 1/1     Running   0          13m
kube-system   kube-vip-master2                 1/1     Running   0          13m
kube-system   kube-vip-master3                 1/1     Running   0          13m
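On larger clusters, checking the pod list by eye is error-prone. The following sketch prints pods that are not fully ready. It assumes the default column layout of kubectl get pods -A --no-headers. Completed Job pods are skipped because they report 0/N by design. The helper name is hypothetical.

```shell
# POSIX sh. Read "kubectl get pods -A --no-headers" on stdin and print pods
# that are not Running with all containers ready; exit 1 if any are found.
# Usage: kubectl get pods -A --no-headers | pods_not_ready
pods_not_ready() {
  awk '
    # Columns with -A: NAMESPACE NAME READY STATUS RESTARTS AGE
    $4 == "Completed" { next }   # finished Job pods legitimately show 0/N
    {
      split($3, r, "/")
      if ($4 != "Running" || r[1] != r[2]) {
        print $1 "/" $2 " " $3 " " $4
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```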

DPF Installation

Software Prerequisites and Required Variables

Start by installing the remaining software prerequisites.

Jump Node Console

## Connect to master1 to copy the helm client utility that was installed during the Kubespray deployment
depuser@jump:~$ ssh master1
depuser@master1:~$ cp /usr/local/bin/helm /tmp/
## In another tab
depuser@jump:~$ scp master1:/tmp/helm /tmp/
depuser@jump:~$ sudo chown root:root /tmp/helm
depuser@jump:~$ sudo mv /tmp/helm /usr/local/bin/
## Verify that the envsubst utility is installed
depuser@jump:~$ which envsubst
/usr/bin/envsubst

Proceed to clone the doca-platform Git repository:

Jump Node Console

$ git clone https://github.com/NVIDIA/doca-platform.git

Change directory to doca-platform and check out tag v25.7.0:

Jump Node Console

$ cd doca-platform/
$ git checkout v25.7.0

Change to the directory that contains the HBN use-case readme.md. All subsequent commands are run from this directory:

Jump Node Console

$ cd docs/public/user-guides/zero-trust/use-cases/hbn/

Modify the variables in manifests/00-env-vars/envvars.env to fit your environment, then source the file:

Warning: Replace the values of the variables in the following file with values that fit your setup. Pay particular attention to DPUCLUSTER_INTERFACE and BMC_ROOT_PASSWORD.

manifests/00-env-vars/envvars.env

## IP Address for the Kubernetes API server of the target cluster on which DPF is installed.
## This should never include a scheme or a port.
## e.g. 10.10.10.10
export TARGETCLUSTER_API_SERVER_HOST=10.0.110.10

## Virtual IP used by the load balancer for the DPU Cluster. Must be a reserved IP from the management subnet and not
## allocated by DHCP.
export DPUCLUSTER_VIP=10.0.110.200

## Interface on which the DPUCluster load balancer will listen. Should be the management interface of the control plane node.
export DPUCLUSTER_INTERFACE=eno1

## IP address to the NFS server used as storage for the BFB.
export NFS_SERVER_IP=10.0.110.253

## The repository URL for the NVIDIA Helm chart registry.
## Usually this is the NVIDIA Helm NGC registry. For development purposes, this can be set to a different repository.
export HELM_REGISTRY_REPO_URL=https://helm.ngc.nvidia.com/nvidia/doca

## The repository URL for the HBN container image.
## Usually this is the NVIDIA NGC registry. For development purposes, this can be set to a different repository.
export HBN_NGC_IMAGE_URL=nvcr.io/nvidia/doca/doca_hbn

## The DPF REGISTRY is the Helm repository URL where the DPF Operator Chart resides.
## Usually this is the NVIDIA Helm NGC registry. For development purposes, this can be set to a different repository.
export REGISTRY=https://helm.ngc.nvidia.com/nvidia/doca

## The DPF TAG is the version of the DPF components which will be deployed in this guide.
export TAG=v25.7.0

## URL to the BFB used in the `bfb.yaml` and linked by the DPUSet.
export BFB_URL="https://content.mellanox.com/BlueField/BFBs/Ubuntu22.04/bf-bundle-3.1.0-76_25.07_ubuntu-22.04_prod.bfb"

## IP_RANGE_START and IP_RANGE_END
## These define the IP range for DPU discovery via Redfish/BMC interfaces
## Example: If your DPUs have BMC IPs in range 10.0.110.201-204
## export IP_RANGE_START=10.0.110.201
## export IP_RANGE_END=10.0.110.204

## Start of DPUDiscovery IpRange
export IP_RANGE_START=10.0.110.201
## End of DPUDiscovery IpRange
export IP_RANGE_END=10.0.110.204

# The password used for DPU BMC root login, must be the same for all DPUs
# For more information on how to set the BMC root password refer to BlueField DPU Administrator Quick Start Guide.
export BMC_ROOT_PASSWORD=<set your BMC_ROOT_PASSWORD>

## Serial number of DPUs. If you have more than 2 DPUs, you will need to parameterize the system accordingly and expose
## additional variables.
## All serial numbers must be in lowercase.

## Serial number of DPU1
export DPU1_SERIAL=mt2402xz0f7x
## Serial number of DPU2
export DPU2_SERIAL=mt2402xz0f80
## Serial number of DPU3
export DPU3_SERIAL=mt2402xz0f8g
## Serial number of DPU4
export DPU4_SERIAL=mt2402xz0f9n

Export the environment variables for the installation:

Jump Node Console

$ source manifests/00-env-vars/envvars.env
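The manifests are rendered with envsubst, so an unset variable becomes an empty string instead of causing an error. The following minimal sketch reports empty or unset variables after the file has been sourced. The helper name is hypothetical. TARGETCLUSTER_API_SERVER_PORT is included in the usage list because a later step passes it to the node-feature-discovery-worker DaemonSet, although the envvars.env listing above does not define it.

```shell
# POSIX sh. Report variables that are empty or unset in the current shell.
missing_vars() (
  missing=""
  for var in "$@"; do
    # Portable indirect expansion: read the value of the variable named by $var.
    eval "val=\${$var:-}"
    [ -n "$val" ] || missing="$missing $var"
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing" >&2
    exit 1
  fi
)

# Usage after "source manifests/00-env-vars/envvars.env":
# missing_vars TARGETCLUSTER_API_SERVER_HOST TARGETCLUSTER_API_SERVER_PORT \
#   DPUCLUSTER_VIP DPUCLUSTER_INTERFACE NFS_SERVER_IP REGISTRY TAG BFB_URL \
#   IP_RANGE_START IP_RANGE_END BMC_ROOT_PASSWORD
```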

DPF Operator Installation

Create Storage Required by the DPF Operator

Create the namespace for the operator:

Jump Node Console

$ kubectl create ns dpf-operator-system

The following YAML file defines storage (for the BFB image) that is required by the DPF operator.

manifests/01-dpf-operator-installation/nfs-storage-for-bfb-dpf-ga.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: bfb-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  nfs:
    path: /mnt/dpf_share/bfb
    server: $NFS_SERVER_IP
  persistentVolumeReclaimPolicy: Delete
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: bfb-pvc
  namespace: dpf-operator-system
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  volumeMode: Filesystem
  storageClassName: ""

Run the following command to substitute the environment variables using envsubst and apply the YAML file:

Jump Node Console

$ cat manifests/01-dpf-operator-installation/*.yaml | envsubst | kubectl apply -f -
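envsubst replaces references to unset variables with empty strings. A typo or a missing export therefore produces a manifest with blank fields rather than a failure. The following sketch is a pre-flight check that assumes the manifests use only $NAME or ${NAME} references. The helper name is hypothetical. The check queries the environment with printenv rather than the shell, because envsubst sees only exported variables.

```shell
# POSIX sh. List $NAME / ${NAME} references in the given manifests that are not
# exported, since envsubst would silently replace them with empty strings.
# Usage: unset_manifest_vars manifests/01-dpf-operator-installation/*.yaml
unset_manifest_vars() (
  rc=0
  # Extract referenced variable names, strip "$" and "{", de-duplicate.
  for var in $(cat "$@" | grep -oE '\$\{?[A-Za-z_][A-Za-z0-9_]*' | tr -d '${' | sort -u); do
    if ! printenv "$var" >/dev/null; then
      echo "unset: $var" >&2
      rc=1
    fi
  done
  exit "$rc"
)
```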

Create DPU BMC shared password secret

In Zero Trust mode, provisioning DPUs requires authentication with Redfish. To enable this, you must set the same BMC root password on all DPUs that DPF will manage.

For more information on how to set the BMC root password, refer to the BlueField DPU Administrator Quick Start Guide.

Connect to the first DPU BMC over SSH to change the BMC root password:

Jump Node Console

$ ssh root@10.0.110.201
root@10.0.110.201's password: <BMC root password. The default credentials are root/0penBmc. On first login you must change the password to the value of $BMC_ROOT_PASSWORD in the manifests/00-env-vars/envvars.env file>

Verify that the rshim service is running, and start it if it is not:

Jump Node Console

root@dpu-bmc:~# systemctl status rshim
* rshim.service - rshim driver for BlueField SoC
     Loaded: loaded (/usr/lib/systemd/system/rshim.service; enabled; preset: disabled)
     Active: active (running) since Mon 2025-07-14 13:21:34 UTC; 18h ago
       Docs: man:rshim(8)
    Process: 866 ExecStart=/usr/sbin/rshim $OPTIONS (code=exited, status=0/SUCCESS)
   Main PID: 874 (rshim)
        CPU: 2h 43min 44.730s
     CGroup: /system.slice/rshim.service
             `-874 /usr/sbin/rshim

Jul 14 13:21:34 dpu-bmc (rshim)[866]: rshim.service: Referenced but unset environment variable evaluates to an empty string: OPTIONS
Jul 14 13:21:34 dpu-bmc rshim[874]: Created PID file: /var/run/rshim.pid
Jul 14 13:21:34 dpu-bmc rshim[874]: USB device detected
Jul 14 13:21:38 dpu-bmc rshim[874]: Probing usb-2.1
Jul 14 13:21:38 dpu-bmc rshim[874]: create rshim usb-2.1
Jul 14 13:21:39 dpu-bmc rshim[874]: rshim0 attached
root@dpu-bmc:~# ls /dev/rshim0
boot  console  misc  rshim

# To start rshim if it is not running:
root@dpu-bmc:~# systemctl enable rshim
root@dpu-bmc:~# systemctl start rshim
root@dpu-bmc:~# systemctl status rshim
root@dpu-bmc:~# ls /dev/rshim0
root@dpu-bmc:~# exit

- Repeat the SSH login, password change, and rshim check above on all your DPUs.
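The BMC addresses to visit are the same range that DPUDiscovery scans. The following minimal sketch expands IP_RANGE_START..IP_RANGE_END into individual addresses. The helper name is hypothetical. The ssh loop in the comments assumes root access to each BMC by password or key.

```shell
# POSIX sh. Print every IPv4 address from START to END (inclusive).
ip_range() (
  # Dotted quad -> integer.
  to_int() { echo "$1" | awk -F. '{ print (($1 * 256 + $2) * 256 + $3) * 256 + $4 }'; }
  start=$(to_int "$1")
  end=$(to_int "$2")
  i=$start
  while [ "$i" -le "$end" ]; do
    # Integer -> dotted quad.
    echo "$i" | awk '{ n = $1; printf "%d.%d.%d.%d\n", int(n / 16777216), int(n / 65536) % 256, int(n / 256) % 256, n % 256 }'
    i=$((i + 1))
  done
)

# Usage (after sourcing envvars.env):
# for ip in $(ip_range "$IP_RANGE_START" "$IP_RANGE_END"); do
#   echo "== $ip"; ssh root@"$ip" 'systemctl is-active rshim; ls /dev/rshim0'
# done
```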

The password is provided to DPF by creating the following secret:

It is necessary to set several environment variables before running this command.

$ source manifests/00-env-vars/envvars.env

Jump Node Console

$ kubectl create secret generic -n dpf-operator-system bmc-shared-password --from-literal=password="$BMC_ROOT_PASSWORD"
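To confirm that the Secret holds the intended password without printing it, decode the stored value and compare it with $BMC_ROOT_PASSWORD. The following sketch does this with a hypothetical helper. The jsonpath in the usage comment reads the password key created by the command above.

```shell
# POSIX sh. Compare the base64-encoded Secret value with $BMC_ROOT_PASSWORD
# without echoing either value.
# Usage:
#   verify_bmc_secret "$(kubectl get secret -n dpf-operator-system bmc-shared-password \
#     -o jsonpath='{.data.password}')"
verify_bmc_secret() (
  decoded=$(printf '%s' "$1" | base64 -d)
  if [ "$decoded" = "$BMC_ROOT_PASSWORD" ]; then
    echo "bmc-shared-password matches BMC_ROOT_PASSWORD"
  else
    echo "bmc-shared-password does NOT match BMC_ROOT_PASSWORD" >&2
    exit 1
  fi
)
```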

Additional Dependencies

The DPF Operator requires several prerequisite components to function properly in a Kubernetes environment. Starting with DPF v25.7, all Helm dependencies have been removed from the DPF chart, so they must be installed manually before the DPF chart itself. The following commands describe an opinionated approach to installing those dependencies (for more information, see Helm Prerequisites in the NVIDIA documentation).

Install the helmfile binary:

Jump Node Console

$ wget https://github.com/helmfile/helmfile/releases/download/v1.1.2/helmfile_1.1.2_linux_amd64.tar.gz
$ tar -xvf helmfile_1.1.2_linux_amd64.tar.gz
$ sudo mv ./helmfile /usr/local/bin/

Change directory to doca-platform:

Tip: Use a different shell from the one in which you run the other DPF installation commands.

Jump Node Console

$ cd /home/depuser/doca-platform

Change directory to deploy/helmfiles/ from where the prerequisites will be installed:

Jump Node Console

$ cd deploy/helmfiles/

Install local-path-provisioner manually using the following commands:

Jump Node Console

$ curl https://codeload.github.com/rancher/local-path-provisioner/tar.gz/v0.0.31 | tar -xz --strip=3 local-path-provisioner-0.0.31/deploy/chart/local-path-provisioner/
$ kubectl create ns local-path-provisioner
$ helm upgrade --install -n local-path-provisioner local-path-provisioner ./local-path-provisioner --version 0.0.31 \
  --set 'tolerations[0].key=node-role.kubernetes.io/control-plane' \
  --set 'tolerations[0].operator=Exists' \
  --set 'tolerations[0].effect=NoSchedule' \
  --set 'tolerations[1].key=node-role.kubernetes.io/master' \
  --set 'tolerations[1].operator=Exists' \
  --set 'tolerations[1].effect=NoSchedule'

Ensure that the pod in the local-path-provisioner namespace is in the Ready state:

Jump Node Console

$ kubectl wait --for=condition=ready --namespace local-path-provisioner pods --all
pod/local-path-provisioner-59d776bf47-7qbql condition met

Install Helm dependencies using the following commands:

Jump Node Console

$ helmfile init --force
$ helmfile apply -f prereqs.yaml --color --suppress-diff --skip-diff-on-install --concurrency 0 --hide-notes
.....
UPDATED RELEASES:
NAME                     NAMESPACE             CHART                                               VERSION   DURATION
node-feature-discovery   dpf-operator-system   node-feature-discovery/node-feature-discovery       0.17.1    24s
cert-manager             cert-manager          jetstack/cert-manager                               v1.18.1   38s
argo-cd                  dpf-operator-system   argoproj/argo-cd                                    7.8.2     1m42s
maintenance-operator     dpf-operator-system   oci://ghcr.io/mellanox/maintenance-operator-chart   0.2.0     26s
kamaji                   dpf-operator-system   oci://ghcr.io/nvidia/charts/kamaji                  1.1.0     2m48s

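Ensure that the KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT environment variables are set in the node-feature-discovery-worker DaemonSet:

Note: Run this command from the previous shell, where the environment variables were exported. TARGETCLUSTER_API_SERVER_PORT is not defined in the envvars.env listing shown earlier. If it is not set in your shell, export it first (6443 is the default Kubernetes API server port).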

Jump Node Console

$ kubectl -n dpf-operator-system set env daemonset/node-feature-discovery-worker \
  KUBERNETES_SERVICE_HOST=$TARGETCLUSTER_API_SERVER_HOST \
  KUBERNETES_SERVICE_PORT=$TARGETCLUSTER_API_SERVER_PORT
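If TARGETCLUSTER_API_SERVER_PORT is empty, the command above still succeeds but sets an empty KUBERNETES_SERVICE_PORT. kubectl set env --list prints the container variables as NAME=value lines, so you can use the following sketch to confirm both values. The helper name is hypothetical, and the sketch relies only on the NAME=value lines of the --list output.

```shell
# POSIX sh. Succeed only if stdin contains the exact line VAR=VALUE.
# Usage:
#   kubectl -n dpf-operator-system set env daemonset/node-feature-discovery-worker --list |
#     env_list_has KUBERNETES_SERVICE_PORT "$TARGETCLUSTER_API_SERVER_PORT"
env_list_has() {
  # -F: literal match (dots in IP addresses are not wildcards);
  # -x: whole-line match, so 10.0.110.1 does not match 10.0.110.10.
  grep -Fqx "$1=$2"
}
```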

DPF Operator Deployment

Run the following commands to substitute the environment variables and install the DPF Operator:

Jump Node Console

$ helm repo add --force-update dpf-repository ${REGISTRY}
$ helm repo update
$ helm upgrade --install -n dpf-operator-system dpf-operator dpf-repository/dpf-operator --version=$TAG
Release "dpf-operator" does not exist. Installing it now.
I0908 15:14:00.596313 78290 warnings.go:110] "Warning: spec.template.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[0].matchExpressions[0].key: node-role.kubernetes.io/master is use \"node-role.kubernetes.io/control-plane\" instead"
I0908 15:14:00.630482 78290 warnings.go:110] "Warning: spec.jobTemplate.spec.template.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[0].matchExpressions[0].key: node-role.kubernetes.io/master is use \"node-role.kubernetes.io/control-plane\" instead"
NAME: dpf-operator
LAST DEPLOYED: Mon Sep 8 15:13:58 2025
NAMESPACE: dpf-operator-system
STATUS: deployed
REVISION: 1
TEST SUITE: None

Verify the DPF Operator installation by ensuring the deployment is available and all the pods are ready:

Note: The following verification commands may need to be run multiple times before the conditions are met.

Jump Node Console

## Ensure the DPF Operator deployment is available.
$ kubectl rollout status deployment --namespace dpf-operator-system dpf-operator-controller-manager
deployment "dpf-operator-controller-manager" successfully rolled out
## Ensure all pods in the DPF Operator system are ready.
$ kubectl wait --for=condition=ready --namespace dpf-operator-system pods --all
pod/argo-cd-argocd-application-controller-0 condition met
pod/argo-cd-argocd-redis-6c6b84f6fb-ljb27 condition met
pod/argo-cd-argocd-repo-server-65cfb96746-hnsxv condition met
pod/argo-cd-argocd-server-5bbdb4b6b9-dtp4x condition met
pod/dpf-operator-controller-manager-5dd7555c6d-469kx condition met
pod/kamaji-95587fbc7-bfkww condition met
pod/kamaji-etcd-0 condition met
pod/kamaji-etcd-1 condition met
pod/kamaji-etcd-2 condition met
pod/maintenance-operator-74bd5774b7-c4d6f condition met
pod/node-feature-discovery-gc-6b48f49cc4-hfgmw condition met
pod/node-feature-discovery-master-747d789485-ct9hx condition met

DPF System Installation

This section involves creating the DPF system components and some basic infrastructure required for a functioning DPF-enabled cluster.

Change directory back to the HBN use-case directory:

Jump Node Console

$ cd /home/depuser/doca-platform/docs/public/user-guides/zero-trust/use-cases/hbn/

The following YAML files define the DPFOperatorConfig to install the DPF System components, the DPUCluster to serve as the Kubernetes control plane for DPU nodes, and DPUDiscovery to discover DPUDevices and DPUNodes.

Note: To achieve high performance, adjust operatorconfig.yaml to support MTU 9000 (highSpeedMTU). Because this is a bare-metal Zero Trust deployment, you must also add the dpuDetector disable parameter.

manifests/02-dpf-system-installation/operatorconfig.yaml

---
apiVersion: operator.dpu.nvidia.com/v1alpha1
kind: DPFOperatorConfig
metadata:
  name: dpfoperatorconfig
  namespace: dpf-operator-system
spec:
  dpuDetector:
    disable: true
  provisioningController:
    bfbPVCName: "bfb-pvc"
    dmsTimeout: 900
    installInterface:
      installViaRedfish:
        # Set this to the IP of one of your control plane nodes + 8080 port
        bfbRegistryAddress: "$TARGETCLUSTER_API_SERVER_HOST:8080"
  kamajiClusterManager:
    disable: false
  networking:
    highSpeedMTU: 9000

manifests/02-dpf-system-installation/dpucluster.yaml

---
apiVersion: provisioning.dpu.nvidia.com/v1alpha1
kind: DPUCluster
metadata:
  name: dpu-cplane-tenant1
  namespace: dpu-cplane-tenant1
spec:
  type: kamaji
  maxNodes: 10
  clusterEndpoint:
    # deploy keepalived instances on the nodes that match the given nodeSelector.
    keepalived:
      # interface on which keepalived will listen. Should be the oob interface of the control plane node.
      interface: $DPUCLUSTER_INTERFACE
      # Virtual IP reserved for the DPU Cluster load balancer. Must not be allocatable by DHCP.
      vip: $DPUCLUSTER_VIP
      # virtualRouterID must be in range [1,255], make sure the given virtualRouterID does not duplicate with any existing keepalived process running on the host
      virtualRouterID: 126
      nodeSelector:
        node-role.kubernetes.io/control-plane: ""

manifests/02-dpf-system-installation/dpudiscovery.yaml

---
apiVersion: provisioning.dpu.nvidia.com/v1alpha1
kind: DPUDiscovery
metadata:
  name: dpu-discovery
  namespace: dpf-operator-system
spec:
  # Define the IP range to scan
  ipRangeSpec:
    ipRange:
      startIP: $IP_RANGE_START
      endIP: $IP_RANGE_END
  # Optional: Set scan interval
  scanInterval: "60m"

Create the namespace for the Kubernetes control plane of the DPU nodes:

Jump Node Console

$ kubectl create ns dpu-cplane-tenant1

Apply the previous YAML files:

Jump Node Console

$ cat manifests/02-dpf-system-installation/*.yaml | envsubst | kubectl apply -f -

Verify the DPF system by ensuring that the provisioning and DPUService controller manager deployments are available, that all other deployments in the DPF Operator system are available, and that the DPUCluster is ready for nodes to join.

Jump Node Console

## Ensure the provisioning and DPUService controller manager deployments are available.
$ kubectl rollout status deployment --namespace dpf-operator-system dpf-provisioning-controller-manager dpuservice-controller-manager
deployment "dpf-provisioning-controller-manager" successfully rolled out
deployment "dpuservice-controller-manager" successfully rolled out
## Ensure all other deployments in the DPF Operator system are Available.
$ kubectl rollout status deployment --namespace dpf-operator-system
deployment "argo-cd-argocd-applicationset-controller" successfully rolled out
deployment "argo-cd-argocd-redis" successfully rolled out
deployment "argo-cd-argocd-repo-server" successfully rolled out
deployment "argo-cd-argocd-server" successfully rolled out
deployment "dpf-operator-controller-manager" successfully rolled out
deployment "dpf-provisioning-controller-manager" successfully rolled out
deployment "dpuservice-controller-manager" successfully rolled out
deployment "kamaji" successfully rolled out
deployment "kamaji-cm-controller-manager" successfully rolled out
deployment "maintenance-operator" successfully rolled out
deployment "node-feature-discovery-gc" successfully rolled out
deployment "node-feature-discovery-master" successfully rolled out
deployment "servicechainset-controller-manager" successfully rolled out
## Ensure the DPUCluster is ready for nodes to join.
$ kubectl wait --for=condition=ready --namespace dpu-cplane-tenant1 dpucluster --all
dpucluster.provisioning.dpu.nvidia.com/dpu-cplane-tenant1 condition met

Verify the DPF system by ensuring that the DPUDevices exist:

Jump Node Console

$ kubectl get dpudevices -n dpf-operator-system
NAME           AGE
mt2402xz0f7x   2m13s
mt2402xz0f80   2m12s
mt2402xz0f8g   2m10s
mt2402xz0f9n   2m11s

Verify the DPF system by ensuring that the DPUNodes exist:

Jump Node Console

$ kubectl get dpunodes -n dpf-operator-system
NAME                    AGE
dpu-node-mt2402xz0f7x   2m23s
dpu-node-mt2402xz0f80   2m22s
dpu-node-mt2402xz0f8g   2m20s
dpu-node-mt2402xz0f9n   2m21s
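With four DPUs the lists are easy to read. A scripted comparison against the serial numbers in envvars.env scales better, and it also enforces the lowercase requirement stated there. The following sketch uses a hypothetical helper that reads DPUDevice names on stdin.

```shell
# POSIX sh. Compare expected DPU serial numbers (arguments) with DPUDevice names
# read on stdin, one per line. Reports missing devices and non-lowercase serials.
# Usage:
#   kubectl get dpudevices -n dpf-operator-system -o name | sed 's|.*/||' |
#     missing_dpudevices "$DPU1_SERIAL" "$DPU2_SERIAL" "$DPU3_SERIAL" "$DPU4_SERIAL"
missing_dpudevices() (
  found=$(cat)
  rc=0
  for serial in "$@"; do
    # Serial numbers must be lowercase; report any that are not.
    lower=$(printf '%s' "$serial" | tr 'A-Z' 'a-z')
    if [ "$lower" != "$serial" ]; then
      echo "not lowercase: $serial" >&2
      rc=1
    fi
    if ! printf '%s\n' "$found" | grep -Fqx "$serial"; then
      echo "missing: $serial" >&2
      rc=1
    fi
  done
  exit "$rc"
)
```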

Congratulations, the DPF system has been successfully installed!

Verification

Use the following YAML to define a BFB resource that downloads the BlueField Bootstream to a shared volume:

manifests/03.1-dpudeployment-installation-pf/bfb.yaml

---
apiVersion: provisioning.dpu.nvidia.com/v1alpha1
kind: BFB
metadata:
  name: bf-bundle
  namespace: dpf-operator-system
spec:
  url: $BFB_URL

Run the following command to create the BFB:

Jump Node Console

$ cat manifests/03.1-dpudeployment-installation-pf/bfb.yaml | envsubst | kubectl apply -f -

Create the following YAML to define a DPUSet:

manifests/03.1-dpudeployment-installation-pf/create-dpu-set.yaml

---
apiVersion: provisioning.dpu.nvidia.com/v1alpha1
kind: DPUSet
metadata:
  name: dpuset
  namespace: dpf-operator-system
spec:
  dpuNodeSelector:
    matchLabels:
      feature.node.kubernetes.io/dpu-enabled: "true"
  strategy:
    rollingUpdate:
      maxUnavailable: "10%"
    type: RollingUpdate
  dpuTemplate:
    spec:
      dpuFlavor: dpf-provisioning-hbn-ovn
      bfb:
        name: bf-bundle
      nodeEffect:
        noEffect: true

Run the following command to create the DPUSet:

Jump Node Console

$ kubectl apply -f manifests/03.1-dpudeployment-installation-pf/create-dpu-set.yaml

To follow the progress of DPU provisioning, run the following command to check its current phase:

Jump Node Console

$ watch -n10 "kubectl describe dpu -n dpf-operator-system | grep 'Node Name\|Type\|Last\|Phase'"

Every 10.0s: kubectl describe dpu -n dpf-operator-system | grep 'Node Name\|Type\|Last\|Phase'

Dpu Node Name: dpu-node-mt2402xz0f7x
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: Initialized
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: BFBReady
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: NodeEffectReady
  Last Transition Time: 2025-09-08T13:25:44Z
  Type: InterfaceInitialized
  Last Transition Time: 2025-09-08T13:25:49Z
  Type: FWConfigured
  Last Transition Time: 2025-09-08T13:25:51Z
  Type: BFBPrepared
  Last Transition Time: 2025-09-08T13:36:29Z
  Type: OSInstalled
  Last Transition Time: 2025-09-08T13:39:33Z
  Type: Rebooted
  Phase: Rebooting
Dpu Node Name: dpu-node-mt2402xz0f80
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: Initialized
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: BFBReady
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: NodeEffectReady
  Last Transition Time: 2025-09-08T13:25:47Z
  Type: InterfaceInitialized
  Last Transition Time: 2025-09-08T13:25:50Z
  Type: FWConfigured
  Last Transition Time: 2025-09-08T13:25:51Z
  Type: BFBPrepared
  Last Transition Time: 2025-09-08T13:37:01Z
  Type: OSInstalled
  Last Transition Time: 2025-09-08T13:40:02Z
  Type: Rebooted
.....

Wait for the Rebooted stage, then manually power cycle the bare-metal host.

After the DPU is up, run the following command for each DPU worker:

Jump Node Console

$ kubectl annotate dpunodes -n dpf-operator-system dpu-node-mt2402xz0f7x provisioning.dpu.nvidia.com/dpunode-external-reboot-required-
dpunode.provisioning.dpu.nvidia.com/dpu-node-mt2402xz0f7x annotated
$ kubectl annotate dpunodes -n dpf-operator-system dpu-node-mt2402xz0f80 provisioning.dpu.nvidia.com/dpunode-external-reboot-required-
dpunode.provisioning.dpu.nvidia.com/dpu-node-mt2402xz0f80 annotated
$ kubectl annotate dpunodes -n dpf-operator-system dpu-node-mt2402xz0f8g provisioning.dpu.nvidia.com/dpunode-external-reboot-required-
dpunode.provisioning.dpu.nvidia.com/dpu-node-mt2402xz0f8g annotated
$ kubectl annotate dpunodes -n dpf-operator-system dpu-node-mt2402xz0f9n provisioning.dpu.nvidia.com/dpunode-external-reboot-required-
dpunode.provisioning.dpu.nvidia.com/dpu-node-mt2402xz0f9n annotated
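The four annotate commands differ only in the serial number, so you can drive them from the DPUn_SERIAL variables in envvars.env. The following minimal sketch assumes the dpu-node-<serial> naming shown above. The helper name and the KUBECTL override are hypothetical additions; the override is useful for a dry run with KUBECTL=echo. The trailing "-" on the annotation key tells kubectl annotate to remove that annotation.

```shell
# POSIX sh. Remove the external-reboot-required annotation from the DPUNode of
# each given serial number. KUBECTL may be overridden (e.g. KUBECTL=echo) to preview.
clear_reboot_annotations() (
  kubectl_cmd=${KUBECTL:-kubectl}
  for serial in "$@"; do
    $kubectl_cmd annotate dpunodes -n dpf-operator-system "dpu-node-${serial}" \
      provisioning.dpu.nvidia.com/dpunode-external-reboot-required-
  done
)

# Usage (after sourcing envvars.env):
# clear_reboot_annotations "$DPU1_SERIAL" "$DPU2_SERIAL" "$DPU3_SERIAL" "$DPU4_SERIAL"
```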

At this point, the DPU workers should be added to the cluster. As they join the cluster, the DPUs complete provisioning.

Jump Node Console

$ watch -n10 "kubectl describe dpu -n dpf-operator-system | grep 'Node Name\|Type\|Last\|Phase'"

Every 10.0s: kubectl describe dpu -n dpf-operator-system | grep 'Node Name\|Type\|Last\|Phase'

Dpu Node Name: dpu-node-mt2402xz0f7x
  Type: InternalIP
  Type: Hostname
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: Initialized
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: BFBReady
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: NodeEffectReady
  Last Transition Time: 2025-09-08T13:25:44Z
  Type: InterfaceInitialized
  Last Transition Time: 2025-09-08T13:25:49Z
  Type: FWConfigured
  Last Transition Time: 2025-09-08T13:25:51Z
  Type: BFBPrepared
  Last Transition Time: 2025-09-08T13:36:29Z
  Type: OSInstalled
  Last Transition Time: 2025-09-08T14:09:51Z
  Type: Rebooted
  Last Transition Time: 2025-09-08T14:09:51Z
  Type: DPUClusterReady
  Last Transition Time: 2025-09-08T14:09:52Z
  Type: Ready
  Phase: Ready
Dpu Node Name: dpu-node-mt2402xz0f80
  Type: InternalIP
  Type: Hostname
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: Initialized
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: BFBReady
  Last Transition Time: 2025-09-08T13:25:38Z
  Type: NodeEffectReady
  Last Transition Time: 2025-09-08T13:25:47Z
  Type: InterfaceInitialized
  Last Transition Time: 2025-09-08T13:25:50Z
  Type: FWConfigured
  Last Transition Time: 2025-09-08T13:25:51Z
  Type: BFBPrepared
  Last Transition Time: 2025-09-08T13:37:01Z
  Type: OSInstalled
  Last Transition Time: 2025-09-08T14:10:04Z
  Type: Rebooted
  Last Transition Time: 2025-09-08T14:10:04Z
  Type: DPUClusterReady
  Last Transition Time: 2025-09-08T14:10:04Z
  Type: Ready
.....

Finally, validate that all the different DPU-related objects are now in the Ready state:

Jump Node Console

$ kubectl get secrets -n dpu-cplane-tenant1 dpu-cplane-tenant1-admin-kubeconfig -o json | jq -r '.data["admin.conf"]' | base64 --decode > /home/depuser/dpu-cluster.config
$ echo "alias ki='KUBECONFIG=/home/depuser/dpu-cluster.config kubectl'" >> ~/.bashrc
$ echo 'alias dpfctl="kubectl -n dpf-operator-system exec deploy/dpf-operator-controller-manager -- /dpfctl "' >> ~/.bashrc
$ source ~/.bashrc
$ dpfctl describe dpudeployments
NAME                                  NAMESPACE             STATUS       REASON    SINCE   MESSAGE
DPFOperatorConfig/dpfoperatorconfig   dpf-operator-system   Ready: True  Success   21m
$ ki get node -A
NAME                                 STATUS   ROLES    AGE   VERSION
dpu-node-mt2402xz0f7x-mt2402xz0f7x   Ready    <none>   25m   v1.33.2
dpu-node-mt2402xz0f80-mt2402xz0f80   Ready    <none>   25m   v1.33.2
dpu-node-mt2402xz0f8g-mt2402xz0f8g   Ready    <none>   25m   v1.33.2
dpu-node-mt2402xz0f9n-mt2402xz0f9n   Ready    <none>   25m   v1.33.2
$ kubectl get dpu -A
NAMESPACE             NAME                                 READY   PHASE   AGE
dpf-operator-system   dpu-node-mt2402xz0f7x-mt2402xz0f7x   True    Ready   47m
dpf-operator-system   dpu-node-mt2402xz0f80-mt2402xz0f80   True    Ready   47m
dpf-operator-system   dpu-node-mt2402xz0f8g-mt2402xz0f8g   True    Ready   47m
dpf-operator-system   dpu-node-mt2402xz0f9n-mt2402xz0f9n   True    Ready   47m
$ kubectl wait --for=condition=ready --namespace dpf-operator-system dpu --all
dpu.provisioning.dpu.nvidia.com/dpu-node-mt2402xz0f7x-mt2402xz0f7x condition met
dpu.provisioning.dpu.nvidia.com/dpu-node-mt2402xz0f80-mt2402xz0f80 condition met
dpu.provisioning.dpu.nvidia.com/dpu-node-mt2402xz0f8g-mt2402xz0f8g condition met
dpu.provisioning.dpu.nvidia.com/dpu-node-mt2402xz0f9n-mt2402xz0f9n condition met

Run the following commands to delete the DPUSet and the BFB:

Jump Node Console

$ kubectl delete -f manifests/03.1-dpudeployment-installation-pf/create-dpu-set.yaml
dpuset.provisioning.dpu.nvidia.com "dpuset" deleted
$ cat manifests/03.1-dpudeployment-installation-pf/bfb.yaml | envsubst | kubectl delete -f -
bfb.provisioning.dpu.nvidia.com "bf-bundle" deleted

Optional

To deploy DPF Zero Trust (DPF-ZT) with:

- VPC OVN DPU Service

- HBN DPU Service

- Argus DPU Service

- VPC OVN and Argus DPU Services

- HBN and Argus DPU Services

Authors

Boris Kovalev

Boris Kovalev has worked for the past several years as a Solutions Architect, focusing on NVIDIA Networking/Mellanox technology, and is responsible for complex machine learning, Big Data and advanced VMware-based cloud research and design. Boris previously spent more than 20 years as a senior consultant and solutions architect at multiple companies, most recently at VMware. He has written multiple reference designs covering VMware, machine learning, Kubernetes, and container solutions which are available at the NVIDIA Documents website.

NVIDIA, the NVIDIA logo, and BlueField are trademarks and/or registered trademarks of NVIDIA Corporation in the U.S. and other countries. Other company and product names may be trademarks of the respective companies with which they are associated.

© 2025 NVIDIA Corporation. All rights reserved.

This document is provided for information purposes only and shall not be regarded as a warranty of a certain functionality, condition, or quality of a product. NVIDIA Corporation (“NVIDIA”) makes no representations or warranties, expressed or implied, as to the accuracy or completeness of the information contained in this document and assumes no responsibility for any errors contained herein. NVIDIA shall have no liability for the consequences or use of such information or for any infringement of patents or other rights of third parties that may result from its use. This document is not a commitment to develop, release, or deliver any Material (defined below), code, or functionality. NVIDIA reserves the right to make corrections, modifications, enhancements, improvements, and any other changes to this document, at any time without notice. Customer should obtain the latest relevant information before placing orders and should verify that such information is current and complete. NVIDIA products are sold subject to the NVIDIA standard terms and conditions of sale supplied at the time of order acknowledgement, unless otherwise agreed in an individual sales agreement signed by authorized representatives of NVIDIA and customer (“Terms of Sale”). NVIDIA hereby expressly objects to applying any customer general terms and conditions with regards to the purchase of the NVIDIA product referenced in this document. No contractual obligations are formed either directly or indirectly by this document.