AR SDK Programming Guide


The AR SDK programming guide provides information about how to use the AR SDK in your applications and provides a list of the API references.

This section provides information about the NVIDIA® AR SDK API architecture.

1.1. Using the NVIDIA AR SDK in Applications

Use the AR SDK to enable an application to use the face tracking, facial landmark tracking, 3D face mesh tracking, and 3D Body Pose tracking features of the SDK.

1.2. Creating an Instance of a Feature Type

The feature type is a predefined structure that is used to access the SDK features. Each feature requires an instantiation of the feature type.

Creating an instance of a feature type provides access to configuration parameters that are used when loading an instance of the feature type and the input and output parameters that are provided at runtime when instances of the feature type are run.

- Allocate memory for an NvAR_FeatureHandle structure.

  NvAR_FeatureHandle faceDetectHandle{};

- Call the NvAR_Create() function. In the call to the function, pass the following information:

  - A value of the NvAR_FeatureID enumeration to identify the feature type.
  - A pointer to the variable that you declared to allocate memory for an NvAR_FeatureHandle structure.

- To create an instance of the face detection feature type, run the following example:

  NvAR_Create(NvAR_Feature_FaceDetection, &faceDetectHandle);

This function creates a handle to the feature instance, which is required in function calls to get and set the properties of the instance and to load, run, or destroy the instance.

1.3. Getting and Setting Properties for a Feature Type

To prepare to load and run an instance of a feature type, you need to set the properties that the instance requires.

Here are some of the properties:

- The configuration properties that are required to load the feature type.

- The input and output properties that are provided at runtime when instances of the feature type are run.

Refer to Key Values in the Properties of a Feature Type for a complete list of properties.

To set properties, NVIDIA AR SDK provides type-safe set accessor functions. If you need the value of a property that has been set by a set accessor function, use the corresponding get accessor function. Refer to Summary of NVIDIA AR SDK Accessor Functions for a complete list of get and set functions.

1.3.1. Setting Up the CUDA Stream

Some SDK features require a CUDA stream in which to run. Refer to the NVIDIA CUDA Toolkit Documentation for more information.

- Initialize a CUDA stream by calling one of the following functions:

  - The CUDA Runtime API function cudaStreamCreate()
  - NvAR_CudaStreamCreate()

  You can use the second function to avoid linking with the NVIDIA CUDA Toolkit libraries.

- Call the NvAR_SetCudaStream() function and provide the following information as parameters:

  - The created feature handle. Refer to Creating an Instance of a Feature Type for more information.
  - The key value NvAR_Parameter_Config(CUDAStream). Refer to Key Values in the Properties of a Feature Type for more information.
  - The CUDA stream that you created in the previous step.

This example sets up a CUDA stream that was created by calling the NvAR_CudaStreamCreate() function:

CUstream stream;
nvErr = NvAR_CudaStreamCreate(&stream);
nvErr = NvAR_SetCudaStream(featureHandle, NvAR_Parameter_Config(CUDAStream), stream);

1.3.2. Summary of NVIDIA AR SDK Accessor Functions

The following table provides the details about the SDK accessor functions.

| Property Type | Data Type | Set and Get Accessor Functions |
|---|---|---|
| 32-bit unsigned integer | unsigned int | NvAR_SetU32(), NvAR_GetU32() |
| 32-bit signed integer | int | NvAR_SetS32(), NvAR_GetS32() |
| Single-precision (32-bit) floating-point number | float | NvAR_SetF32(), NvAR_GetF32() |
| Double-precision (64-bit) floating-point number | double | NvAR_SetF64(), NvAR_GetF64() |
| 64-bit unsigned integer | unsigned long long | NvAR_SetU64(), NvAR_GetU64() |
| Floating-point array | float* | NvAR_SetFloatArray(), NvAR_GetFloatArray() |
| Object | void* | NvAR_SetObject(), NvAR_GetObject() |
| Character string | const char* | NvAR_SetString(), NvAR_GetString() |
| CUDA stream | CUstream | NvAR_SetCudaStream(), NvAR_GetCudaStream() |

1.3.3. Key Values in the Properties of a Feature Type

The key values in the properties of a feature type identify the properties that can be used with each feature type. Each key has a string equivalent and is defined by a macro that indicates the category of the property and takes a name as an input to the macro.

Here are the macros that indicate the category of a property:

- NvAR_Parameter_Config indicates a configuration property. Refer to Configuration Properties for more information.
- NvAR_Parameter_Input indicates an input property. Refer to Input Properties for more information.
- NvAR_Parameter_Output indicates an output property. Refer to Output Properties for more information.

The names are fixed keywords and are listed in nvAR_defs.h. The keywords might be reused with different macros, depending on whether a property is an input, an output, or a configuration property.

The property type denotes the accessor functions to set and get the property as listed in the Summary of NVIDIA AR SDK Accessor Functions table.
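To illustrate how a macro can turn a property name into its string equivalent, here is a minimal sketch that uses the preprocessor stringizing operator. The `EXAMPLE_Parameter_*` macros are hypothetical stand-ins written for this illustration, not the definitions in nvAR_defs.h; they only reproduce the naming pattern described above (category prefix, underscore, property name).

```cpp
#include <cassert>
#include <cstring>

// Hypothetical macros that mimic the documented naming pattern: the category
// prefix and the stringized name are joined by string-literal concatenation.
#define EXAMPLE_Parameter_Config(Name) "NvAR_Parameter_Config_" #Name
#define EXAMPLE_Parameter_Input(Name)  "NvAR_Parameter_Input_" #Name
#define EXAMPLE_Parameter_Output(Name) "NvAR_Parameter_Output_" #Name

// Compares two keys by content. The same keyword used with different
// categories, such as Input(BoundingBoxes) and Output(BoundingBoxes),
// produces two distinct keys.
inline bool sameKey(const char* a, const char* b) {
    return std::strcmp(a, b) == 0;
}
```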

1.3.3.1. Configuration Properties

Here are the configuration properties in the AR SDK:

- NvAR_Parameter_Config(FeatureDescription)

  A description of the feature type.

  String equivalent: NvAR_Parameter_Config_FeatureDescription

  Property type: character string (const char*)

- NvAR_Parameter_Config(CUDAStream)

  The CUDA stream in which to run the feature.

  String equivalent: NvAR_Parameter_Config_CUDAStream

  Property type: CUDA stream (CUstream)

- NvAR_Parameter_Config(ModelDir)

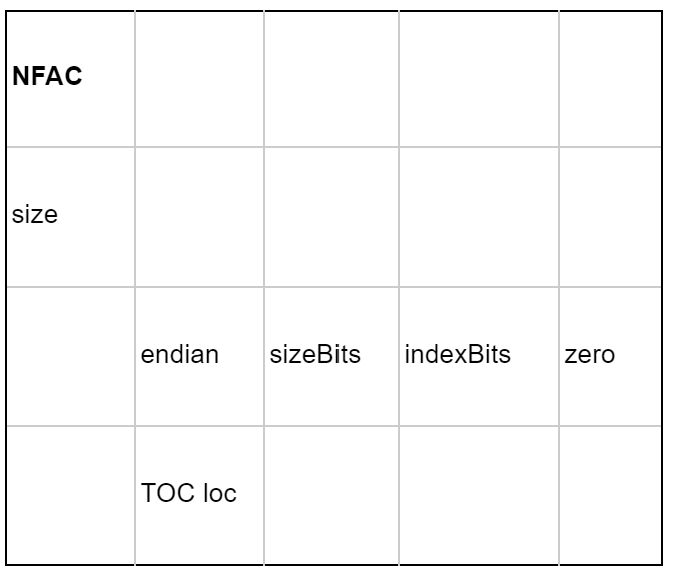

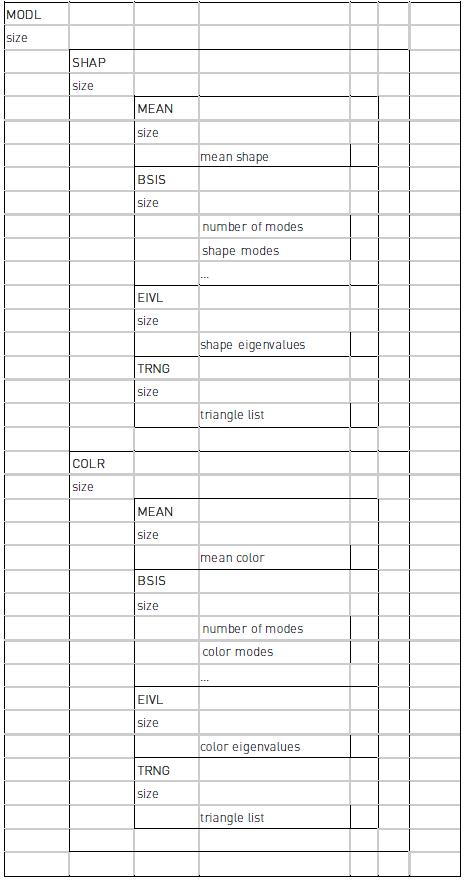

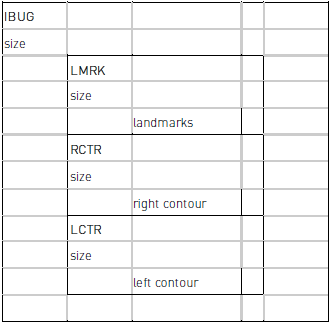

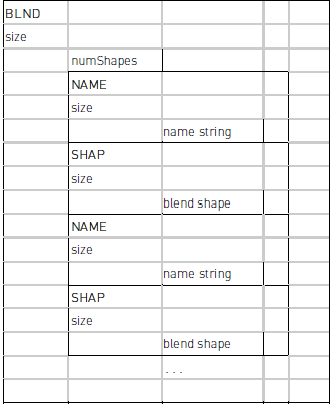

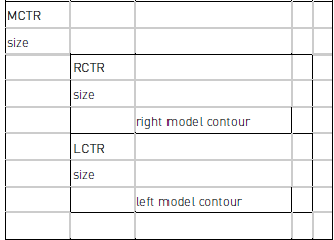

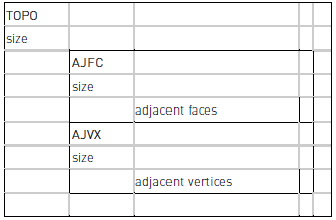

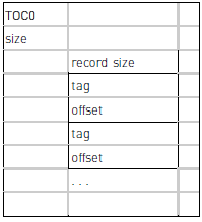

  The path to the directory that contains the TensorRT model files that will be used to run inference for face detection or landmark detection, and the .nvf file that contains the 3D face model, excluding the model file name. For details about the format of the .nvf file, refer to NVIDIA 3DMM File Format.

  String equivalent: NvAR_Parameter_Config_ModelDir

  Property type: character string (const char*)

- NvAR_Parameter_Config(BatchSize)

  The number of inferences to be run at one time on the GPU.

  String equivalent: NvAR_Parameter_Config_BatchSize

  Property type: unsigned integer

- NvAR_Parameter_Config(Landmarks_Size)

  The length of the output buffer that contains the X and Y coordinates, in pixels, of the detected landmarks. This property applies only to the landmark detection feature.

  String equivalent: NvAR_Parameter_Config_Landmarks_Size

  Property type: unsigned integer

- NvAR_Parameter_Config(LandmarksConfidence_Size)

  The length of the output buffer that contains the confidence values of the detected landmarks. This property applies only to the landmark detection feature.

  String equivalent: NvAR_Parameter_Config_LandmarksConfidence_Size

  Property type: unsigned integer

- NvAR_Parameter_Config(Temporal)

  Flag to enable optimization for temporal input frames. Enable the flag when the input is a video.

  String equivalent: NvAR_Parameter_Config_Temporal

  Property type: unsigned integer

- NvAR_Parameter_Config(ShapeEigenValueCount)

  The number of eigenvalues used to describe shape. The supplied face_model2.nvf has 100 shape (also known as identity) eigenvalues, but query ShapeEigenValueCount when you allocate an array to receive the eigenvalues.

  String equivalent: NvAR_Parameter_Config_ShapeEigenValueCount

  Property type: unsigned integer

- NvAR_Parameter_Config(ExpressionCount)

  The number of coefficients used to represent expression. The supplied face_model2.nvf has 53 expression blendshape coefficients, but query ExpressionCount when you allocate an array to receive the coefficients.

  String equivalent: NvAR_Parameter_Config_ExpressionCount

  Property type: unsigned integer

- NvAR_Parameter_Config(UseCudaGraph)

  Flag to enable CUDA Graph optimization. A CUDA graph reduces the overhead of submitting GPU operations for 3D Body Pose tracking.

  String equivalent: NvAR_Parameter_Config_UseCudaGraph

  Property type: bool

- NvAR_Parameter_Config(Mode)

  Mode to select High Performance or High Quality for 3D Body Pose or Facial Landmark Detection.

  String equivalent: NvAR_Parameter_Config_Mode

  Property type: unsigned integer

- NvAR_Parameter_Config(ReferencePose)

  CPU buffer of type NvAR_Point3f to hold the reference pose for joint rotations for 3D Body Pose.

  String equivalent: NvAR_Parameter_Config_ReferencePose

  Property type: object (void*)

- NvAR_Parameter_Config(TrackPeople) (Windows only)

  Flag to select Multi-Person Tracking for 3D Body Pose Tracking.

  String equivalent: NvAR_Parameter_Config_TrackPeople

  Property type: unsigned integer

- NvAR_Parameter_Config(ShadowTrackingAge) (Windows only)

  The age after which the multi-person tracker no longer tracks the object in shadow mode. This property is measured in the number of frames.

  String equivalent: NvAR_Parameter_Config_ShadowTrackingAge

  Property type: unsigned integer

- NvAR_Parameter_Config(ProbationAge) (Windows only)

  The age after which the multi-person tracker marks the object valid and assigns an ID for tracking. This property is measured in the number of frames.

  String equivalent: NvAR_Parameter_Config_ProbationAge

  Property type: unsigned integer

- NvAR_Parameter_Config(MaxTargetsTracked) (Windows only)

  The maximum number of targets to be tracked by the multi-person tracker. After this limit is reached, any new targets are discarded.

  String equivalent: NvAR_Parameter_Config_MaxTargetsTracked

  Property type: unsigned integer
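The three Windows-only multi-person tracking parameters are all frame counters. The following toy model is written only to make the semantics of ProbationAge, ShadowTrackingAge, and MaxTargetsTracked concrete; it is not the SDK's tracking algorithm. The `ToyTracker` class is hypothetical, and it simplifies association by receiving detection IDs directly instead of matching detections by position.

```cpp
#include <cassert>
#include <map>
#include <set>

// Hypothetical configuration mirroring the three documented properties.
struct ToyTrackerConfig {
    unsigned probationAge;       // frames before a target is marked valid
    unsigned shadowTrackingAge;  // frames a target may go unseen before it is dropped
    unsigned maxTargetsTracked;  // upper bound on simultaneously tracked targets
};

class ToyTracker {
public:
    explicit ToyTracker(ToyTrackerConfig cfg) : cfg_(cfg) {}

    // Feeds the IDs observed in one frame (a simplification of real association).
    void update(const std::set<int>& seen) {
        for (auto it = targets_.begin(); it != targets_.end();) {
            State& s = it->second;
            if (seen.count(it->first)) {
                ++s.framesSeen;
                s.framesUnseen = 0;
            } else if (++s.framesUnseen > cfg_.shadowTrackingAge) {
                it = targets_.erase(it);  // shadow period expired: stop tracking
                continue;
            }
            ++it;
        }
        // Admit new targets while capacity remains; the rest are discarded.
        for (int id : seen) {
            if (targets_.count(id) == 0 && targets_.size() < cfg_.maxTargetsTracked) {
                targets_[id] = State{1, 0};
            }
        }
    }

    bool isTracked(int id) const { return targets_.count(id) != 0; }

    // Valid (would be assigned an ID) after probationAge frames of observation.
    bool isValid(int id) const {
        auto it = targets_.find(id);
        return it != targets_.end() && it->second.framesSeen >= cfg_.probationAge;
    }

private:
    struct State { unsigned framesSeen; unsigned framesUnseen; };
    ToyTrackerConfig cfg_;
    std::map<int, State> targets_;
};
```

With a probation age of 3, a target becomes valid on its third consecutive observation. With a shadow age of 2, a target survives two frames without detection and is dropped on the third.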

1.3.3.2. Input Properties

Here are the input properties in the AR SDK:

- NvAR_Parameter_Input(Image)

  GPU input image buffer of type NvCVImage.

  String equivalent: NvAR_Parameter_Input_Image

  Property type: object (void*)

- NvAR_Parameter_Input(Width)

  The width of the input image buffer in pixels.

  String equivalent: NvAR_Parameter_Input_Width

  Property type: integer

- NvAR_Parameter_Input(Height)

  The height of the input image buffer in pixels.

  String equivalent: NvAR_Parameter_Input_Height

  Property type: integer

- NvAR_Parameter_Input(Landmarks)

  CPU input array of type NvAR_Point2f that contains the facial landmark points.

  String equivalent: NvAR_Parameter_Input_Landmarks

  Property type: object (void*)

- NvAR_Parameter_Input(BoundingBoxes)

  Bounding boxes of type NvAR_BBoxes that determine the region of interest (ROI) of an input image that contains a face.

  String equivalent: NvAR_Parameter_Input_BoundingBoxes

  Property type: object (void*)

- NvAR_Parameter_Input(FocalLength)

  The focal length of the camera used for 3D Body Pose.

  String equivalent: NvAR_Parameter_Input_FocalLength

  Property type: single-precision (32-bit) floating-point number (float)

1.3.3.3. Output Properties

Here are the output properties in the AR SDK:

- NvAR_Parameter_Output(BoundingBoxes)

  CPU output bounding boxes of type NvAR_BBoxes.

  String equivalent: NvAR_Parameter_Output_BoundingBoxes

  Property type: object (void*)

- NvAR_Parameter_Output(TrackingBoundingBoxes) (Windows only)

  CPU output tracking bounding boxes of type NvAR_TrackingBBoxes.

  String equivalent: NvAR_Parameter_Output_TrackingBBoxes

  Property type: object (void*)

- NvAR_Parameter_Output(BoundingBoxesConfidence)

  Float array of confidence values for each returned bounding box.

  String equivalent: NvAR_Parameter_Output_BoundingBoxesConfidence

  Property type: floating-point array

- NvAR_Parameter_Output(Landmarks)

  CPU output buffer of type NvAR_Point2f to hold the output detected landmark key points. Refer to Facial point annotations for more information. The order of the points in the CPU buffer follows the order in MultiPIE 68-point markups, and the 126 points cover more points along the cheeks, the eyes, and the laugh lines.

  String equivalent: NvAR_Parameter_Output_Landmarks

  Property type: object (void*)

- NvAR_Parameter_Output(LandmarksConfidence)

  Float array of confidence values for each detected landmark point.

  String equivalent: NvAR_Parameter_Output_LandmarksConfidence

  Property type: floating-point array

- NvAR_Parameter_Output(Pose)

  CPU array of type NvAR_Quaternion to hold the output-detected pose as an XYZW quaternion.

  String equivalent: NvAR_Parameter_Output_Pose

  Property type: object (void*)

- NvAR_Parameter_Output(FaceMesh)

  CPU 3D face mesh of type NvAR_FaceMesh.

  String equivalent: NvAR_Parameter_Output_FaceMesh

  Property type: object (void*)

- NvAR_Parameter_Output(RenderingParams)

  CPU output structure of type NvAR_RenderingParams that contains the rendering parameters that might be used to render the 3D face mesh.

  String equivalent: NvAR_Parameter_Output_RenderingParams

  Property type: object (void*)

- NvAR_Parameter_Output(ShapeEigenValues)

  Float array of shape eigenvalues. Get NvAR_Parameter_Config(ShapeEigenValueCount) to determine how many eigenvalues there are.

  String equivalent: NvAR_Parameter_Output_ShapeEigenValues

  Property type: const floating-point array

- NvAR_Parameter_Output(ExpressionCoefficients)

  Float array of expression coefficients. Get NvAR_Parameter_Config(ExpressionCount) to determine how many coefficients there are.

  String equivalent: NvAR_Parameter_Output_ExpressionCoefficients

  Property type: const floating-point array

- NvAR_Parameter_Output(KeyPoints)

  CPU output buffer of type NvAR_Point2f to hold the output detected 2D keypoints for Body Pose. Refer to 3D Body Pose Keypoint Format for information about the keypoint names and the order of keypoint output.

  String equivalent: NvAR_Parameter_Output_KeyPoints

  Property type: object (void*)

- NvAR_Parameter_Output(KeyPoints3D)

  CPU output buffer of type NvAR_Point3f to hold the output detected 3D keypoints for Body Pose. Refer to 3D Body Pose Keypoint Format for information about the keypoint names and the order of keypoint output.

  String equivalent: NvAR_Parameter_Output_KeyPoints3D

  Property type: object (void*)

- NvAR_Parameter_Output(JointAngles)

  CPU output buffer of type NvAR_Point3f to hold the joint angles in axis-angle format for the keypoints for Body Pose.

  String equivalent: NvAR_Parameter_Output_JointAngles

  Property type: object (void*)

- NvAR_Parameter_Output(KeyPointsConfidence)

  Float array of confidence values for each detected keypoint.

  String equivalent: NvAR_Parameter_Output_KeyPointsConfidence

  Property type: floating-point array

- NvAR_Parameter_Output(OutputHeadTranslation)

  Float array of three values that represent the x, y, and z values of head translation with respect to the camera for Eye Contact.

  String equivalent: NvAR_Parameter_Output_OutputHeadTranslation

  Property type: floating-point array

- NvAR_Parameter_Output(OutputGazeVector)

  Float array of two values that represent the yaw and pitch angles of the estimated gaze for Eye Contact.

  String equivalent: NvAR_Parameter_Output_OutputGazeVector

  Property type: floating-point array

- NvAR_Parameter_Output(HeadPose)

  CPU array of type NvAR_Quaternion to hold the output-detected head pose as an XYZW quaternion in Eye Contact. This is an alternative to the head pose that was obtained from the facial landmarks feature. This head pose is obtained using the PnP algorithm over the landmarks.

  String equivalent: NvAR_Parameter_Output_HeadPose

  Property type: object (void*)

- NvAR_Parameter_Output(GazeDirection)

  Float array of two values that represent the yaw and pitch angles of the estimated gaze for Eye Contact.

  String equivalent: NvAR_Parameter_Output_GazeDirection

  Property type: floating-point array
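The Pose and HeadPose outputs are XYZW quaternions: the vector part (x, y, z) comes first and the scalar part w comes last. The following sketch shows how an application might consume such a value by rotating a 3D point with a unit quaternion stored in that order. The `QuatXYZW` and `Vec3` structs are hypothetical stand-ins that only assume the XYZW ordering described above; in an application the values come from the NvAR_Quaternion buffer.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-ins; only the XYZW ordering comes from the guide.
struct QuatXYZW { float x, y, z, w; };
struct Vec3 { float x, y, z; };

// Builds a unit quaternion for a rotation of `angle` radians about a unit axis.
inline QuatXYZW fromAxisAngle(Vec3 axis, float angle) {
    float s = std::sin(angle * 0.5f);
    return {axis.x * s, axis.y * s, axis.z * s, std::cos(angle * 0.5f)};
}

inline Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Rotates v by unit quaternion q with v' = v + w*t + u x t, where
// u = (x, y, z) is the vector part of q and t = 2 (u x v).
inline Vec3 rotate(QuatXYZW q, Vec3 v) {
    Vec3 u{q.x, q.y, q.z};
    Vec3 t = cross(u, v);
    t = {2.f * t.x, 2.f * t.y, 2.f * t.z};
    Vec3 ut = cross(u, t);
    return {v.x + q.w * t.x + ut.x, v.y + q.w * t.y + ut.y, v.z + q.w * t.z + ut.z};
}
```

For example, a 90-degree rotation about the z axis maps the point (1, 0, 0) to approximately (0, 1, 0). If the quaternion is read with the wrong component order (WXYZ instead of XYZW), the results are silently wrong, so the field order is worth checking against nvAR_defs.h.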

1.3.4. Getting the Value of a Property of a Feature

To get the value of a property of a feature, call the get accessor function that is appropriate for the data type of the property.

In the call to the function, pass the following information:

- The feature handle to the feature instance.

- The key value that identifies the property that you are getting.

- The location in memory where you want the value of the property to be written.

This example determines the length of the NvAR_Point2f output buffer that was returned by the landmark detection feature:

unsigned int OUTPUT_SIZE_KPTS;

NvAR_GetU32(landmarkDetectHandle, NvAR_Parameter_Config(Landmarks_Size), &OUTPUT_SIZE_KPTS);
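After the landmark count is known, the application sizes its output buffers. The following sketch assumes that the buffers hold one set of Landmarks_Size points and LandmarksConfidence_Size confidence values for each image in the batch. `Point2f` is a hypothetical stand-in for NvAR_Point2f, and the `LandmarkBuffers` helper is invented for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for NvAR_Point2f (an X, Y pixel coordinate).
struct Point2f { float x, y; };

// Output storage sized from values queried with NvAR_GetU32()
// (Landmarks_Size, LandmarksConfidence_Size) and the configured batch size.
struct LandmarkBuffers {
    std::vector<Point2f> landmarks;  // batchSize * landmarksSize points
    std::vector<float> confidence;   // batchSize * confidenceSize values

    LandmarkBuffers(unsigned batchSize, unsigned landmarksSize, unsigned confidenceSize)
        : landmarks(static_cast<std::size_t>(batchSize) * landmarksSize, Point2f{0.f, 0.f}),
          confidence(static_cast<std::size_t>(batchSize) * confidenceSize, 0.f) {}

    // Start of the points that belong to image `i` of the batch.
    const Point2f* pointsFor(unsigned i, unsigned landmarksSize) const {
        return landmarks.data() + static_cast<std::size_t>(i) * landmarksSize;
    }
};
```

With 126 landmarks and the maximum batch size of 8, each buffer holds 1,008 entries.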

1.3.5. Setting a Property for a Feature

The following steps explain how to set a property for a feature.

- Allocate memory for all inputs and outputs that are required by the feature and any other properties that might be required.

- Call the set accessor function that is appropriate for the data type of the property. In the call to the function, pass the following information:

  - The feature handle to the feature instance.
  - The key value that identifies the property that you are setting.
  - The value to which you want to set the property or, for object and array properties, a pointer to the data.

This example sets the path to the directory that contains the model files:

const char *modelPath = "file/path/to/model";
NvAR_SetString(landmarkDetectHandle, NvAR_Parameter_Config(ModelDir), modelPath);

This example sets up the input image buffer in GPU memory, which is required by the face detection feature.

Note: It sets up an 8-bit chunky/interleaved BGR array.

NvCVImage inputImageBuffer;
NvCVImage_Alloc(&inputImageBuffer, input_image_width, input_image_height, NVCV_BGR, NVCV_U8, NVCV_CHUNKY, NVCV_GPU, 1);
NvAR_SetObject(faceDetectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));

Refer to List of Properties for the AR SDK Features for more information about the properties and the input and output requirements for each feature.

Note: The listed property name is the input to the macro that defines the key value for the property.

1.3.6. Loading a Feature Instance

You can load the feature after setting the configuration properties that are required to load an instance of a feature type.

To load a feature instance, call the NvAR_Load() function and specify the handle that was created for the feature instance when the instance was created. Refer to Creating an Instance of a Feature Type for more information.

This example loads an instance of the face detection feature type:

NvAR_Load(faceDetectHandle);

1.3.7. Running a Feature Instance

Before you can run the feature instance, load an instance of a feature type and set the user-allocated input and output memory buffers that are required when the feature instance is run.

To run a feature instance, call the NvAR_Run() function and specify the handle that was created for the feature instance when the instance was created. Refer to Creating an Instance of a Feature Type for more information.

This example shows how to run a face detection feature instance:

NvAR_Run(faceDetectHandle);

1.3.8. Destroying a Feature Instance

When a feature instance is no longer required, you need to destroy it to free the resources and memory that the feature instance allocated internally.

Memory buffers that you provide as input to a feature, or to hold its output, are not freed with the feature instance and must be deallocated separately.

To destroy a feature instance, call the NvAR_Destroy() function and specify the handle that was created for the feature instance when the instance was created. Refer to Creating an Instance of a Feature Type for more information.

NvAR_Destroy(faceDetectHandle);
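Because every successful NvAR_Create() call must eventually be matched by NvAR_Destroy(), C++ applications often tie the handle's lifetime to a scope. The following is a minimal RAII sketch. So that it compiles without the SDK, the handle type and the destroy callback are template parameters; in an application you would instantiate it with NvAR_FeatureHandle and a function that calls NvAR_Destroy(). The `ScopedFeature` name is hypothetical, and the sketch assumes that a default-constructed (null) handle means "no instance".

```cpp
#include <cassert>
#include <utility>

// Owns a feature handle and releases it exactly once when the scope ends.
// Handle: the handle type (NvAR_FeatureHandle in an application).
// DestroyFn: a callable invoked with the handle, for example one that calls NvAR_Destroy().
template <typename Handle, typename DestroyFn>
class ScopedFeature {
public:
    ScopedFeature(Handle h, DestroyFn destroy) : handle_(h), destroy_(std::move(destroy)) {}
    ~ScopedFeature() { reset(); }

    // Non-copyable: two owners would destroy the same instance twice.
    ScopedFeature(const ScopedFeature&) = delete;
    ScopedFeature& operator=(const ScopedFeature&) = delete;

    // Moving transfers ownership and leaves the source holding a null handle.
    ScopedFeature(ScopedFeature&& other) noexcept
        : handle_(std::exchange(other.handle_, Handle{})), destroy_(std::move(other.destroy_)) {}

    Handle get() const { return handle_; }

    // Destroys the instance now; calling it again has no effect.
    void reset() {
        if (handle_ != Handle{}) {
            destroy_(handle_);
            handle_ = Handle{};
        }
    }

private:
    Handle handle_;
    DestroyFn destroy_;
};
```

Calling get() passes the raw handle to NvAR_SetU32(), NvAR_Load(), NvAR_Run(), and the other functions, while the destructor guarantees cleanup on every exit path, including early returns on error.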

1.4. Working with Image Frames on GPU or CPU Buffers

AR SDK features accept image buffers as NvCVImage objects. An NvCVImage object can describe a CPU or a GPU buffer, but for performance reasons, the features require GPU buffers. The AR SDK provides functions for converting an image representation to NvCVImage and transferring images between CPU and GPU buffers.

For more information about NvCVImage, refer to the NvCVImage API Guide. This section provides a synopsis of the most frequently used functions with the AR SDK.

1.4.1. Converting Image Representations to NvCVImage Objects

The AR SDK provides functions for converting OpenCV images and other image representations to NvCVImage objects. Each function places a wrapper around an existing buffer. The wrapper prevents the buffer from being freed when the destructor of the wrapper is called.

1.4.1.1. Converting OpenCV Images to NvCVImage Objects

You can use the wrapper functions that the AR SDK provides specifically for RGB OpenCV images.

The AR SDK provides wrapper functions only for RGB images. No wrapper functions are provided for YUV images.

- To create an NvCVImage object wrapper for an OpenCV image, use the NVWrapperForCVMat() function.

  // Allocate source and destination OpenCV images
  cv::Mat srcCVImg(...);
  cv::Mat dstCVImg(...);
  // Declare source and destination NvCVImage objects
  NvCVImage srcCPUImg;
  NvCVImage dstCPUImg;
  NVWrapperForCVMat(&srcCVImg, &srcCPUImg);
  NVWrapperForCVMat(&dstCVImg, &dstCPUImg);

- To create an OpenCV image wrapper for an NvCVImage object, use the CVWrapperForNvCVImage() function.

  // Allocate source and destination NvCVImage objects
  NvCVImage srcCPUImg(...);
  NvCVImage dstCPUImg(...);
  // Declare source and destination OpenCV images
  cv::Mat srcCVImg;
  cv::Mat dstCVImg;
  CVWrapperForNvCVImage(&srcCPUImg, &srcCVImg);
  CVWrapperForNvCVImage(&dstCPUImg, &dstCVImg);

1.4.1.2. Converting Other Image Representations to NvCVImage Objects

To convert other image representations, call the NvCVImage_Init() function to place a wrapper around an existing buffer (srcPixelBuffer).

NvCVImage src_gpu;

vfxErr = NvCVImage_Init(&src_gpu, 640, 480, 1920, srcPixelBuffer, NVCV_BGR, NVCV_U8, NVCV_INTERLEAVED, NVCV_GPU);

NvCVImage src_cpu;

vfxErr = NvCVImage_Init(&src_cpu, 640, 480, 1920, srcPixelBuffer, NVCV_BGR, NVCV_U8, NVCV_INTERLEAVED, NVCV_CPU);
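In the NvCVImage_Init() calls above, 1920 is the pitch: the number of bytes from the start of one scanline to the start of the next. For a tightly packed interleaved image, the pitch is width × channels × bytes per component, so 640 × 3 × 1 = 1920 for 8-bit BGR. The following sketch computes these values; the helper names are hypothetical and are not part of the NvCVImage API.

```cpp
#include <cassert>
#include <cstddef>

// Bytes per scanline for a tightly packed (unpadded) interleaved image.
inline int packedPitch(int width, int channels, int bytesPerComponent) {
    assert(width > 0 && channels > 0 && bytesPerComponent > 0);
    return width * channels * bytesPerComponent;
}

// Total bytes that an interleaved buffer spans: one pitch per scanline.
inline std::size_t bufferBytes(int pitch, int height) {
    return static_cast<std::size_t>(pitch) * static_cast<std::size_t>(height);
}
```

Because NvCVImage_Init() wraps an existing buffer rather than allocating one, srcPixelBuffer in the example must hold at least 1920 × 480 = 921,600 bytes.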

1.4.1.3. Converting Decoded Frames from the NvDecoder to NvCVImage Objects

To convert decoded frames from the NvDecoder to NvCVImage objects, call the NvCVImage_Transfer() function. It converts the decoded frame that the NvDecoder provides from the decoded pixel format to the format that an AR SDK feature requires.

The following sample shows a decoded frame that was converted from the NV12 to the BGRA pixel format.

NvCVImage decoded_frame, BGRA_frame, stagingBuffer;

NvDecoder dec;

//Initialize decoder...

//Assuming dec.GetOutputFormat() == cudaVideoSurfaceFormat_NV12

//Initialize memory for decoded frame

NvCVImage_Init(&decoded_frame, dec.GetWidth(), dec.GetHeight(), dec.GetDeviceFramePitch(), NULL, NVCV_YUV420, NVCV_U8, NVCV_NV12, NVCV_GPU, 1);

decoded_frame.colorSpace = NVCV_709 | NVCV_VIDEO_RANGE | NVCV_CHROMA_COSITED;

//Allocate memory for BGRA frame, and set alpha opaque

NvCVImage_Alloc(&BGRA_frame, dec.GetWidth(), dec.GetHeight(), NVCV_BGRA, NVCV_U8, NVCV_CHUNKY, NVCV_GPU, 1);

cudaMemset(BGRA_frame.pixels, -1, BGRA_frame.pitch * BGRA_frame.height);

decoded_frame.pixels = (void*)dec.GetFrame();

//Convert from decoded frame format(NV12) to desired format(BGRA)

NvCVImage_Transfer(&decoded_frame, &BGRA_frame, 1.f, stream, &stagingBuffer);

The sample above assumes the typical colorspace specification for HD content. SD typically uses NVCV_601. There are eight possible combinations, and you should use the one that matches your video as described in the video header or proceed by trial and error.

Here is some additional information:

- If the colors are incorrect, swap 709<->601.

- If they are washed out or blown out, swap VIDEO<->FULL.

- If the colors are shifted horizontally, swap INTSTITIAL<->COSITED.
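The eight combinations come from three independent binary choices: the color matrix (709 or 601), the range (VIDEO or FULL), and the chroma siting (COSITED or INTSTITIAL). The following sketch enumerates them as readable strings in a systematic trial order, starting with the sample's HD default. It only builds names; the spellings NVCV_FULL_RANGE and NVCV_CHROMA_INTSTITIAL are assumed by analogy with NVCV_VIDEO_RANGE and NVCV_CHROMA_COSITED in the sample and should be checked against nvCVImage.h.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Lists every colorSpace combination as a string such as
// "NVCV_709 | NVCV_VIDEO_RANGE | NVCV_CHROMA_COSITED".
inline std::vector<std::string> colorSpaceCandidates() {
    const char* matrices[] = {"NVCV_709", "NVCV_601"};
    const char* ranges[] = {"NVCV_VIDEO_RANGE", "NVCV_FULL_RANGE"};
    const char* sitings[] = {"NVCV_CHROMA_COSITED", "NVCV_CHROMA_INTSTITIAL"};
    std::vector<std::string> out;
    for (const char* m : matrices)
        for (const char* r : ranges)
            for (const char* s : sitings)
                out.push_back(std::string(m) + " | " + r + " | " + s);
    return out;  // 2 x 2 x 2 = 8 entries
}
```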

1.4.1.4. Converting an NvCVImage Object to a Buffer that can be Encoded by NvEncoder

If necessary, call the NvCVImage_Transfer() function to convert the NvCVImage object to the pixel format that NvEncoder uses for encoding.

The following sample shows a frame that is encoded in the BGRA pixel format.


//BGRA frame is 4-channel, u8 buffer residing on the GPU

NvCVImage BGRA_frame;

NvCVImage_Alloc(&BGRA_frame, dec.GetWidth(), dec.GetHeight(), NVCV_BGRA, NVCV_U8, NVCV_CHUNKY, NVCV_GPU, 1);

//Initialize encoder with a BGRA output pixel format

using NvEncCudaPtr = std::unique_ptr<NvEncoderCuda, std::function<void(NvEncoderCuda*)>>;

NvEncCudaPtr pEnc(new NvEncoderCuda(cuContext, dec.GetWidth(), dec.GetHeight(), NV_ENC_BUFFER_FORMAT_ARGB));

pEnc->CreateEncoder(&initializeParams);

//...

std::vector<std::vector<uint8_t>> vPacket;

//Get the address of the next input frame from the encoder

const NvEncInputFrame* encoderInputFrame = pEnc->GetNextInputFrame();

//Copy the pixel data from BGRA_frame into the input frame address obtained above

NvEncoderCuda::CopyToDeviceFrame(cuContext,

BGRA_frame.pixels,

BGRA_frame.pitch,

(CUdeviceptr)encoderInputFrame->inputPtr,

encoderInputFrame->pitch,

pEnc->GetEncodeWidth(),

pEnc->GetEncodeHeight(),

CU_MEMORYTYPE_DEVICE,

encoderInputFrame->bufferFormat,

encoderInputFrame->chromaOffsets,

encoderInputFrame->numChromaPlanes);

pEnc->EncodeFrame(vPacket);
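The sample copies a BGRA buffer into an encoder created with NV_ENC_BUFFER_FORMAT_ARGB without reordering channels. This works because NVENC describes that format as a 32-bit word with B in the lowest 8 bits and A in the highest 8 bits. On a little-endian host, the bytes of that word lie in memory as B, G, R, A, which is the NVCV_BGRA chunky layout. The following sketch demonstrates the byte order; the helper names are hypothetical, and the check is meaningful only on little-endian hosts such as x86-64.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <cstring>

// Packs one pixel as an A8R8G8B8 word: A in bits 24-31, R in 16-23,
// G in 8-15, and B in 0-7.
inline std::uint32_t packArgbWord(std::uint8_t a, std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    return (std::uint32_t{a} << 24) | (std::uint32_t{r} << 16) | (std::uint32_t{g} << 8) | b;
}

// Returns the word's bytes in the order that they occupy memory on this host.
inline std::array<std::uint8_t, 4> bytesInMemory(std::uint32_t word) {
    std::array<std::uint8_t, 4> bytes{};
    std::memcpy(bytes.data(), &word, sizeof word);
    return bytes;
}

// True when the host stores the least significant byte first.
inline bool hostIsLittleEndian() {
    return bytesInMemory(1u)[0] == 1;
}
```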

1.4.2. Allocating an NvCVImage Object Buffer

You can allocate the buffer for an NvCVImage object by using the NvCVImage allocation constructor or image functions. With either option, the destructor automatically frees the buffer when the image goes out of scope.

1.4.2.1. Using the NvCVImage Allocation Constructor to Allocate a Buffer

The NvCVImage allocation constructor creates an object to which memory has been allocated and that has been initialized. Refer to Allocation Constructor for more information.

The final three optional parameters of the allocation constructor determine the properties of the resulting NvCVImage object:

- The pixel organization determines whether blue, green, and red are in separate planes or interleaved.

- The memory type determines whether the buffer resides on the GPU or the CPU.

- The byte alignment determines the gap between consecutive scanlines.

The following examples show how to use the final three optional parameters of the allocation constructor to determine the properties of the NvCVImage object.

- This example creates an object without setting the final three optional parameters of the allocation constructor. In this object, the blue, green, and red components are interleaved in each pixel, the buffer resides on the CPU, and the byte alignment is the default alignment.

NvCVImage cpuSrc( srcWidth, srcHeight, NVCV_BGR, NVCV_U8 );

- This example creates an object with identical pixel organization, memory type, and byte alignment to the previous example by setting the final three optional parameters explicitly. As in the previous example, the blue, green, and red components are interleaved in each pixel, the buffer resides on the CPU, and the byte alignment is the default, that is, optimized for maximum performance.

NvCVImage src( srcWidth, srcHeight, NVCV_BGR, NVCV_U8, NVCV_INTERLEAVED, NVCV_CPU, 0 );

- This example creates an object in which the blue, green, and red components are in separate planes, the buffer resides on the GPU, and the byte alignment ensures that no gap exists between one scanline and the next scanline.

NvCVImage gpuSrc( srcWidth, srcHeight, NVCV_BGR, NVCV_U8, NVCV_PLANAR, NVCV_GPU, 1 );
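The pixel organization and byte alignment determine where each component lives in the buffer. The following sketch computes the byte offset of one 8-bit component of a BGR image in the interleaved and planar cases. It assumes, for illustration only, that an alignment greater than 1 rounds each scanline up to a multiple of that many bytes and that the planes of a planar image are stored one after another with the same pitch. The helper names are hypothetical; in an application, read the actual pitch from the NvCVImage object instead of computing it.

```cpp
#include <cassert>
#include <cstddef>

// Rounds a scanline length up to a multiple of `alignment` bytes (1 = no padding).
inline std::size_t alignedPitch(std::size_t rowBytes, std::size_t alignment) {
    assert(alignment >= 1);
    return (rowBytes + alignment - 1) / alignment * alignment;
}

// Offset of component c (0 = B, 1 = G, 2 = R) of pixel (x, y) in an
// interleaved 8-bit BGR image: the three components sit side by side.
inline std::size_t interleavedOffset(std::size_t x, std::size_t y, std::size_t c,
                                     std::size_t pitch) {
    return y * pitch + x * 3 + c;
}

// The same component in a planar image: one full plane per component,
// each made of `height` scanlines of `pitch` bytes.
inline std::size_t planarOffset(std::size_t x, std::size_t y, std::size_t c,
                                std::size_t pitch, std::size_t height) {
    return c * pitch * height + y * pitch + x;
}
```

For a 641-pixel-wide BGR row (1,923 bytes), an alignment of 1 leaves no gap, while a 32-byte alignment would pad each scanline to 1,952 bytes under the stated assumption.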

1.4.2.2. Using Image Functions to Allocate a Buffer

By declaring an empty image, you can defer buffer allocation.

- Declare an empty NvCVImage object.

  NvCVImage xfr;

- Allocate or reallocate the buffer for the image.

  - To allocate the buffer, call the NvCVImage_Alloc() function. Allocate a buffer this way when the image is part of a state structure, where you will not know the size of the image until later.
  - To reallocate a buffer, call NvCVImage_Realloc(). This function checks for an allocated buffer. If the buffer is big enough, the function reshapes it; otherwise, the function frees the buffer and calls NvCVImage_Alloc().
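The reallocation policy described above reuses storage when it is already large enough and allocates only when it is not. This is a common pattern for per-frame buffers. The following CPU-only sketch models that policy with a hypothetical `ReusableBuffer` class to show why, under that policy, repeated requests no larger than the biggest one seen so far do not allocate. It is not the NvCVImage implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>

// Keeps one heap block and reuses it whenever the requested size fits.
class ReusableBuffer {
public:
    // Returns true if a new block had to be allocated.
    bool reshape(std::size_t bytes) {
        if (bytes <= capacity_) {
            size_ = bytes;  // big enough: reshape in place, no allocation
            return false;
        }
        data_.reset();                          // too small: free the old block
        data_.reset(new unsigned char[bytes]);  // then allocate a larger one
        capacity_ = bytes;
        size_ = bytes;
        return true;
    }

    std::size_t size() const { return size_; }
    std::size_t capacity() const { return capacity_; }

private:
    std::unique_ptr<unsigned char[]> data_;
    std::size_t size_ = 0;
    std::size_t capacity_ = 0;
};
```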

1.4.3. Transferring Images Between CPU and GPU Buffers

If the memory types of the input and output image buffers are different, an application can transfer images between CPU and GPU buffers.

1.4.3.1. Transferring Input Images from a CPU Buffer to a GPU Buffer

Here are the steps to transfer input images from a CPU buffer to a GPU buffer.

- Create an NvCVImage object to use as the destination GPU buffer. It has the same dimensions as the source CPU buffer and the format that the feature requires as input.

  NvCVImage srcGpuPlanar(inWidth, inHeight, NVCV_BGR, NVCV_F32, NVCV_PLANAR, NVCV_GPU, 1);

- Create a staging buffer in one of the following ways:

  - To avoid allocating memory in a video pipeline, create a GPU buffer that has the same dimensions and format as the source CPU buffer.

    NvCVImage srcGpuStaging(inWidth, inHeight, srcCPUImg.pixelFormat, srcCPUImg.componentType, srcCPUImg.planar, NVCV_GPU);

  - To simplify your application program code, declare an empty staging buffer.

    NvCVImage srcGpuStaging;

    An appropriate buffer will be allocated or reallocated as needed.

- Call the NvCVImage_Transfer() function to copy the source CPU buffer contents into the final GPU buffer via the staging GPU buffer.

  // Read the image into srcCPUImg
  NvCVImage_Transfer(&srcCPUImg, &srcGpuPlanar, 1.0f, stream, &srcGpuStaging);
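Conceptually, the transfer in the last step performs two conversions at once. It changes the pixel organization from interleaved to planar, and it changes the component type from 8-bit unsigned integers to 32-bit floats, multiplying by the scale factor passed as the third argument (1.0f above keeps values in the 0 to 255 range). The following CPU-only sketch performs the same reorganization on plain arrays to show what the planar float result contains. It is an illustration, not how NvCVImage_Transfer() is implemented, and it ignores the CPU-to-GPU copy and the staging buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts a tightly packed interleaved 8-bit BGR image into a planar
// float image (all B values, then all G values, then all R values),
// multiplying each component by `scale`.
inline std::vector<float> chunkyU8ToPlanarF32(const std::vector<std::uint8_t>& bgr,
                                              std::size_t width, std::size_t height,
                                              float scale) {
    assert(bgr.size() == width * height * 3);
    const std::size_t plane = width * height;
    std::vector<float> out(plane * 3);
    for (std::size_t i = 0; i < plane; ++i) {    // i: pixel index in scanline order
        for (std::size_t c = 0; c < 3; ++c) {    // c: 0 = B, 1 = G, 2 = R
            out[c * plane + i] = bgr[i * 3 + c] * scale;
        }
    }
    return out;
}
```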

1.4.3.2. Transferring Output Images from a GPU Buffer to a CPU Buffer

Here are the steps to transfer output images from a GPU buffer to a CPU buffer.

- Create an NvCVImage object to use as the GPU buffer that holds the feature output. It has the same dimensions as the destination CPU buffer.

  NvCVImage dstGpuPlanar(outWidth, outHeight, NVCV_BGR, NVCV_F32, NVCV_PLANAR, NVCV_GPU, 1);

  For more information about NvCVImage, refer to the NvCVImage API Guide.

- Create a staging buffer in one of the following ways:

  - To avoid allocating memory in a video pipeline, create a GPU buffer that has the same dimensions and format as the destination CPU buffer.

    NvCVImage dstGpuStaging(outWidth, outHeight, dstCPUImg.pixelFormat, dstCPUImg.componentType, dstCPUImg.planar, NVCV_GPU);

  - To simplify your application program code, declare an empty staging buffer:

    NvCVImage dstGpuStaging;

    An appropriately sized buffer will be allocated as needed.

- Call the NvCVImage_Transfer() function to copy the GPU buffer contents into the destination CPU buffer via the staging GPU buffer.

  // Retrieve the image from the GPU to the CPU, perhaps with conversion
  NvCVImage_Transfer(&dstGpuPlanar, &dstCPUImg, 1.0f, stream, &dstGpuStaging);

1.5. List of Properties for the AR SDK Features

This section provides the properties and their values for the features in the AR SDK.

1.5.1. Face Detection and Tracking Property Values

The following tables list the values for the configuration, input, and output properties for Face Detection and Tracking.

| Property Name | Value |

|---|---|

FeatureDescription |

String is free-form text that describes the feature. The string is set by the SDK and cannot be modified by the user. |

CUDAStream |

The CUDA stream, which is set by the user. |

ModelDir |

String that contains the path to the folder that contains the TensorRT package files. Set by the user. |

Temporal |

Unsigned integer, 1/0 to enable/disable the temporal optimization of face detection. If enabled, only one face is returned. Refer to Face Detection and Tracking for more information. Set by the user. |

| Property Name | Value |
|---|---|
| BoundingBoxes | To be allocated by the user. |
| BoundingBoxesConfidence | An array of single-precision (32-bit) floating-point numbers that contain the confidence values for each detected face box. To be allocated by the user. |

1.5.2. Landmark Tracking Property Values

The following tables list the values for the configuration, input, and output properties for landmark tracking.

| Property Name | Value |
|---|---|
| FeatureDescription | String that describes the feature. |
| CUDAStream | The CUDA stream. Set by the user. |
| ModelDir | String that contains the path to the folder that contains the TensorRT package files. Set by the user. |
| BatchSize | The number of inferences to be run at one time on the GPU. The maximum value is 8. Temporal optimization of landmark detection is only supported for |
| Landmarks_Size | Unsigned integer, 68 or 126. Specifies the number of landmark points (X and Y values) to be returned. Set by the user. |
| LandmarksConfidence_Size | Unsigned integer, 68 or 126. Specifies the number of landmark confidence values for the detected keypoints to be returned. Set by the user. |
| Temporal | Unsigned integer, 1/0 to enable/disable the temporal optimization of landmark detection. If enabled, only one input bounding box is supported as the input. Refer to Landmark Detection and Tracking for more information. Set by the user. |
| Mode | (Optional) Unsigned integer. Set 0 to enable Performance mode (default) or 1 to enable Quality mode for Landmark detection. Set by the user. |

| Property Name | Value |
|---|---|
| Image | Interleaved (or chunky) 8-bit BGR input image in a CUDA buffer of type To be allocated and set by the user. |
| BoundingBoxes | If not specified as an input property, face detection is automatically run on the input image. Refer to Landmark Detection and Tracking for more information. To be allocated by the user. |

| Property Name | Value |

|---|---|

Landmarks |

To be allocated by the user. |

Pose |

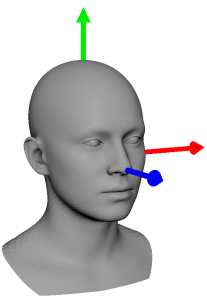

The coordinate convention is followed as per OpenGL standards. For example, when seen from the camera, X is right, Y is up , and Z is back/towards the camera. To be allocated by the user. |

LandmarksConfidence |

An array of single-precision (32-bit) floating-point numbers, which must be large enough to hold the number of confidence values given by the product of the following:

To be allocated by the user. |

BoundingBoxes |

To be allocated by the user. |
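The Pose output uses the OpenGL convention described in the table. Suppose the pose is delivered as a unit quaternion, as the HeadPose output of the Eye Contact feature is. The following sketch then shows how an application might rotate a direction vector by it.

The `Quat` and `Vec3` types are stand-ins. The (x, y, z, w) component order is an assumption about `NvAR_Quaternion`; this section does not confirm it.

```cpp
#include <cassert>
#include <cmath>

// Stand-in types. The (x, y, z, w) component order is an assumption about
// NvAR_Quaternion, not something confirmed by this guide.
struct Quat { float x, y, z, w; };
struct Vec3 { float x, y, z; };

// Rotates v by the quaternion q (normalized first), using the expansion of
// q * v * conj(q):  v' = v + w * t + u x t,  where u = (x, y, z) and t = 2 * (u x v).
Vec3 rotate(const Quat& q, const Vec3& v) {
  const float norm = std::sqrt(q.x * q.x + q.y * q.y + q.z * q.z + q.w * q.w);
  assert(norm > 0.0f);
  const float x = q.x / norm, y = q.y / norm, z = q.z / norm, w = q.w / norm;
  // t = 2 * (u x v)
  const float tx = 2.0f * (y * v.z - z * v.y);
  const float ty = 2.0f * (z * v.x - x * v.z);
  const float tz = 2.0f * (x * v.y - y * v.x);
  // v' = v + w * t + u x t
  return {v.x + w * tx + (y * tz - z * ty),
          v.y + w * ty + (z * tx - x * tz),
          v.z + w * tz + (x * ty - y * tx)};
}
```

Rotating (0, 0, 1), the axis that points toward the camera, gives a facing direction. This holds only if you also assume that the identity pose corresponds to a face looking straight at the camera.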

1.5.3. Face 3D Mesh Tracking Property Values

The following tables list the values for the configuration, input, and output properties for Face 3D Mesh tracking.

| Property Name | Value |
|---|---|
| FeatureDescription | String that describes the feature. This property is read-only. |
| ModelDir | String that contains the path to the face model, and the TensorRT package files. Refer to Alternative Usage of the Face 3D Mesh Feature for more information. Set by the user. |
| CUDAStream | (Optional) The CUDA stream. Refer to Alternative Usage of the Face 3D Mesh Feature for more information. Set by the user. |
| Temporal | (Optional) Unsigned integer, 1/0 to enable/disable the temporal optimization of face and landmark detection. Refer to Alternative Usage of the Face 3D Mesh Feature for more information. Set by the user. |
| Mode | (Optional) Unsigned integer. Set 0 to enable Performance mode (default) or 1 to enable Quality mode for Landmark detection. Set by the user. |
| Landmarks_Size | Unsigned integer, 68 or 126. If landmark detection is run internally, the confidence values for the detected key points are returned. Refer to Alternative Usage of the Face 3D Mesh Feature for more information. |
| ShapeEigenValueCount | The number of eigenvalues that describe the identity shape. Query this to determine how big the eigenvalue array should be, if that is a desired output. This property is read-only. |
| ExpressionCount | The number of expressions available in the chosen model. Query this to determine how big the expression coefficient array should be, if that is the desired output. This property is read-only. |
| VertexCount | The number of vertices in the chosen model. Query this property to determine how big the vertex array should be, where This property is read-only. |
| TriangleCount | The number of triangles in the chosen model. Query this property to determine how big the triangle array should be, where This property is read-only. |
| GazeMode | Flag to toggle gaze mode. The default value is 0. If the value is 1, gaze estimation will be explicit. |

| Property Name | Value |
|---|---|
| Width | The width of the input image buffer that contains the face to which the face model will be fitted. Set by the user. |
| Height | The height of the input image buffer that contains the face to which the face model will be fitted. Set by the user. |
| Landmarks | An NvAR_Point2f array that contains the landmark points of size If landmarks are not provided to this feature, an input image must be provided. Refer to Alternative Usage of the Face 3D Mesh Feature for more information. To be allocated by the user. |
| Image | An interleaved (or chunky) 8-bit BGR input image in a CUDA buffer of type If an input image is not provided as input, the landmark points must be provided to this feature as input. Refer to Alternative Usage of the Face 3D Mesh Feature for more information. To be allocated by the user. |

| Property Name | Value |

|---|---|

FaceMesh |

To be allocated by the user. Query |

RenderingParams |

To be allocated by the user. |

Landmarks |

An Refer to Alternative Usage of the Face 3D Mesh Feature for more information. To be allocated by the user. |

Pose |

The coordinate convention is followed as per OpenGL standards. For example, when seen from the camera, X is right, Y is up , and Z is back/towards the camera. To be allocated by the user. |

LandmarksConfidence |

An array of single-precision (32-bit) floating-point numbers, which must be large enough to hold the number of confidence values of size To be allocated by the user. |

BoundingBoxes |

To be allocated by the user. |

BoundingBoxesConfidence |

An array of single-precision (32-bit) floating-point numbers that contain the confidence values for each detected face box. Refer to Alternative Usage of the Face 3D Mesh Feature for more information. To be allocated by the user. |

ShapeEigenValues |

Optional: The array into which the shape eigenvalues will be placed, if desired. Query To be allocated by the user. |

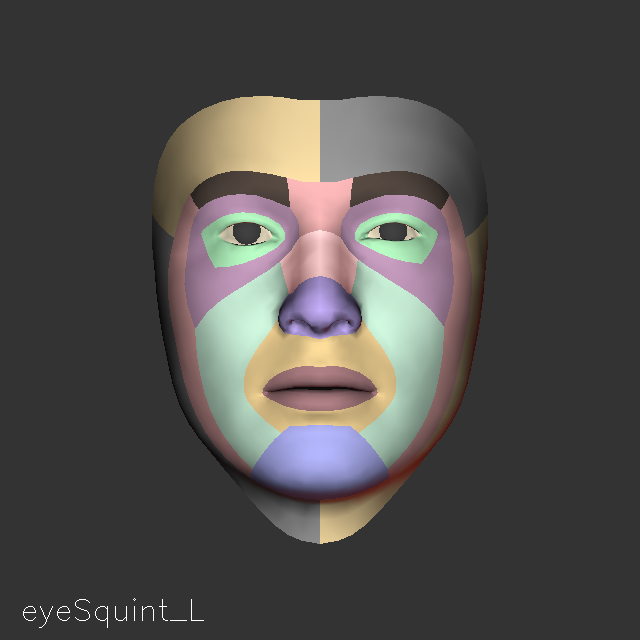

ExpressionCoefficients |

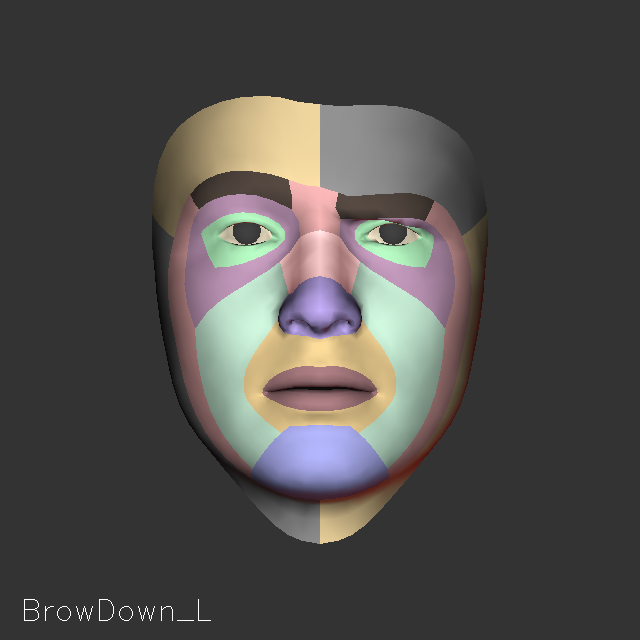

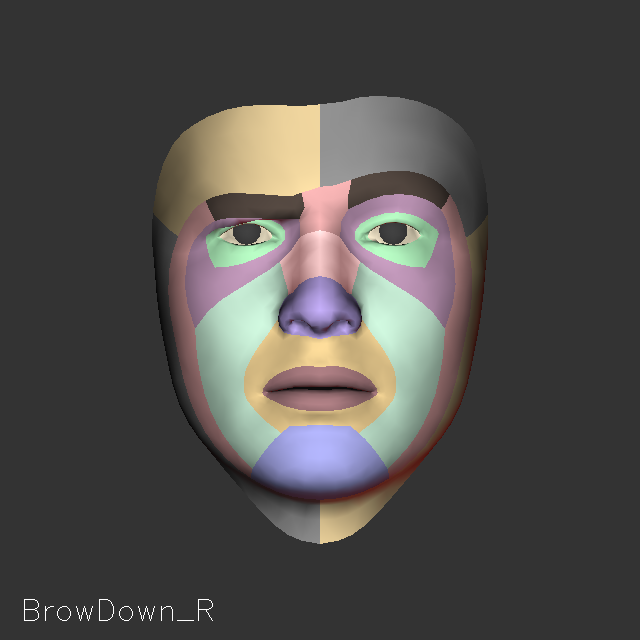

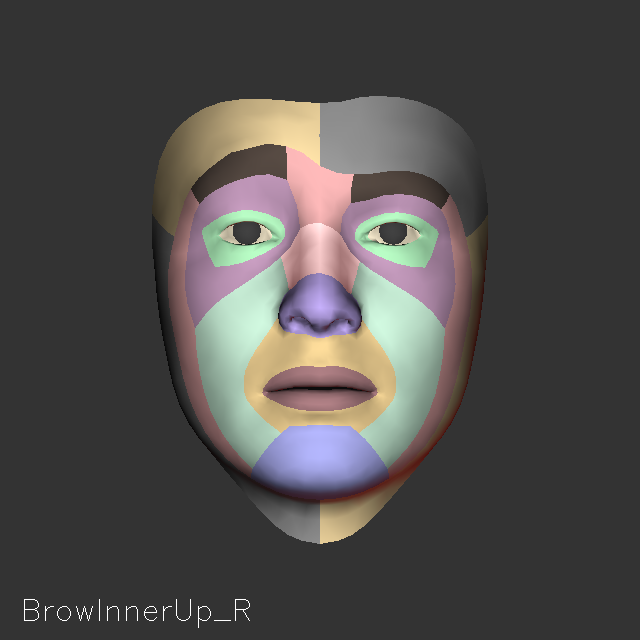

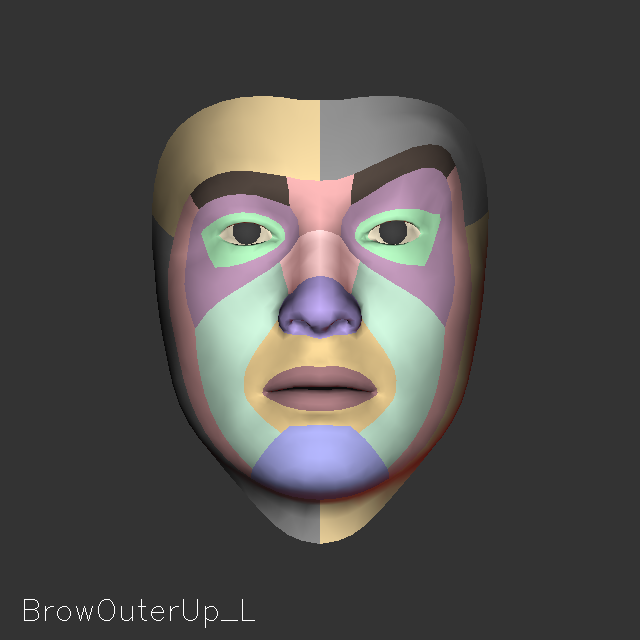

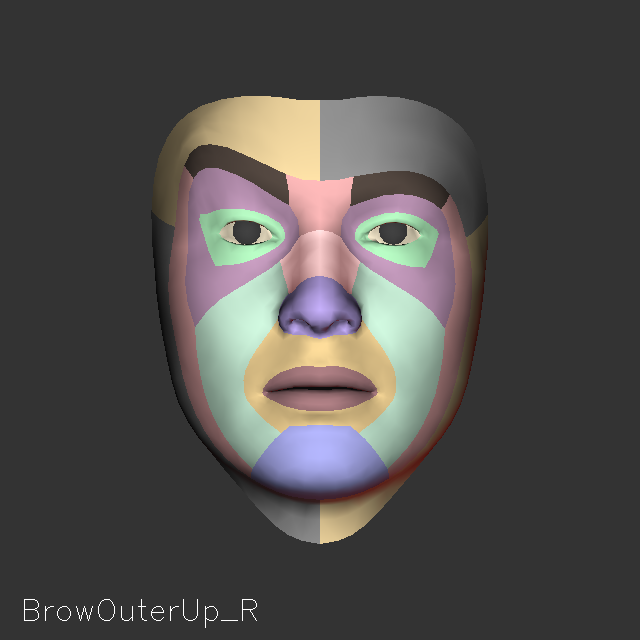

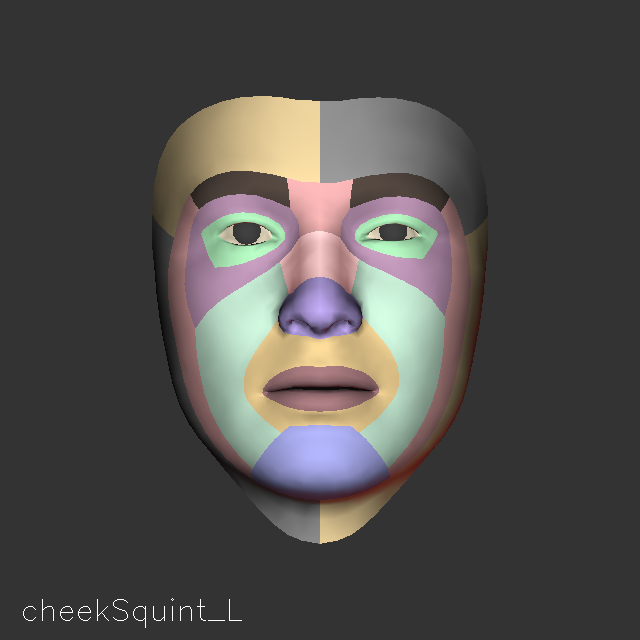

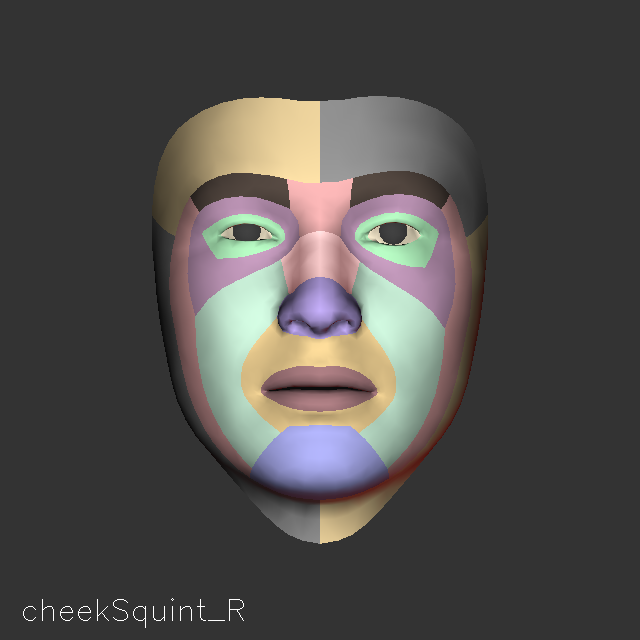

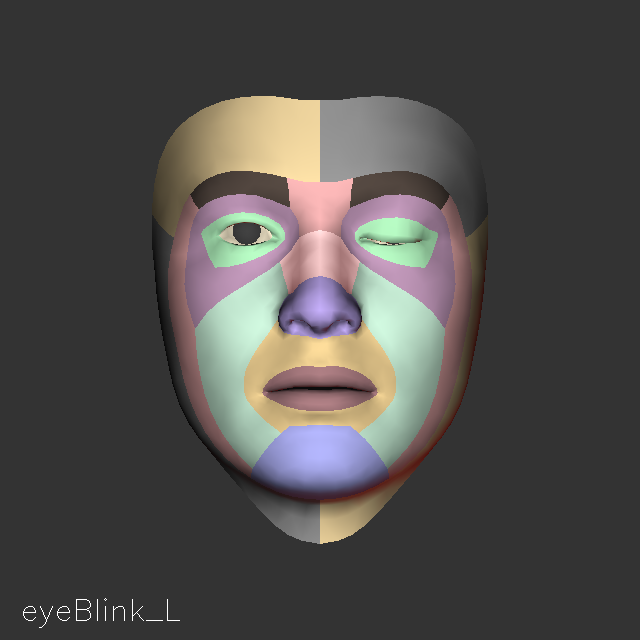

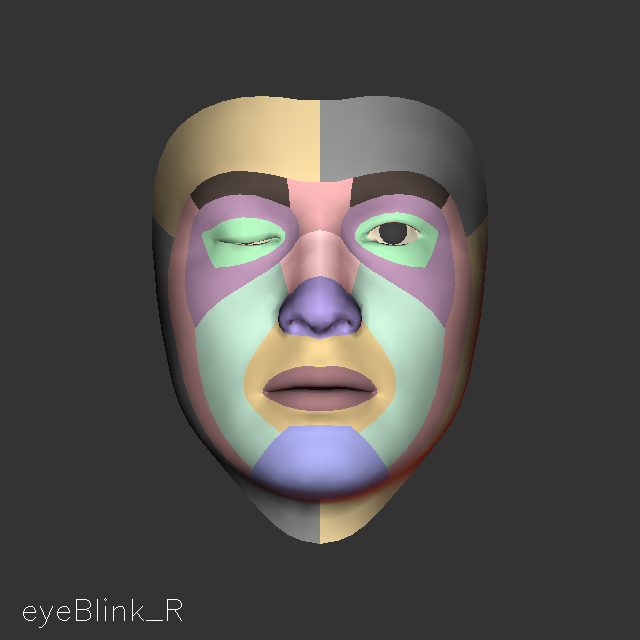

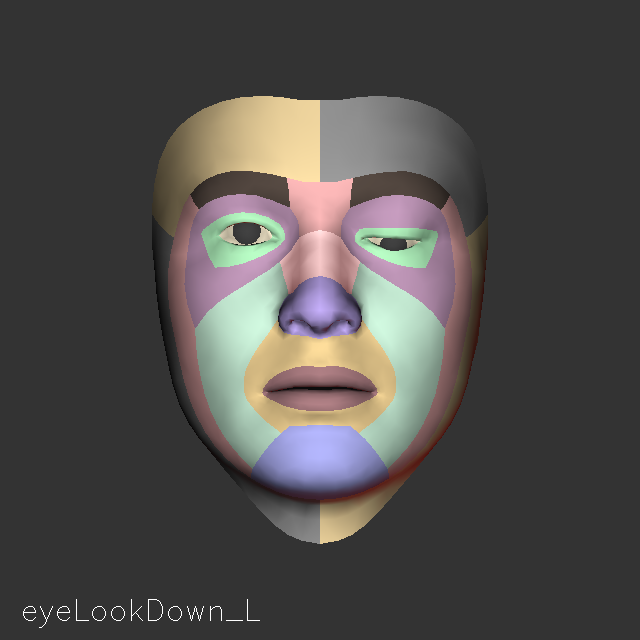

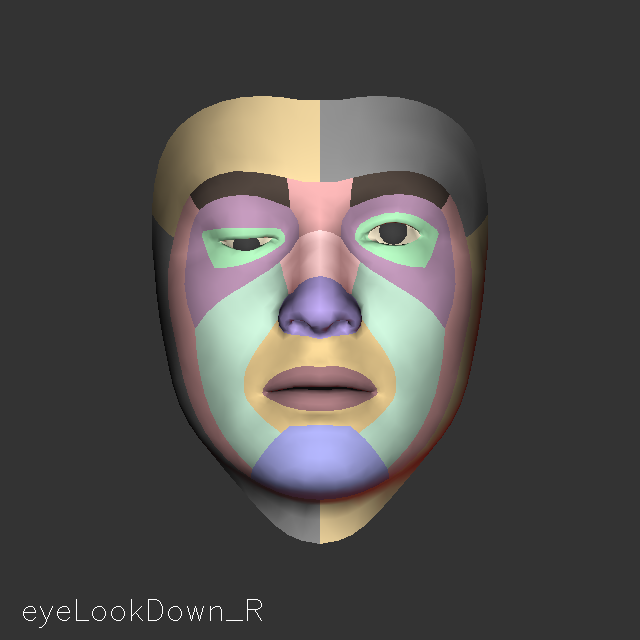

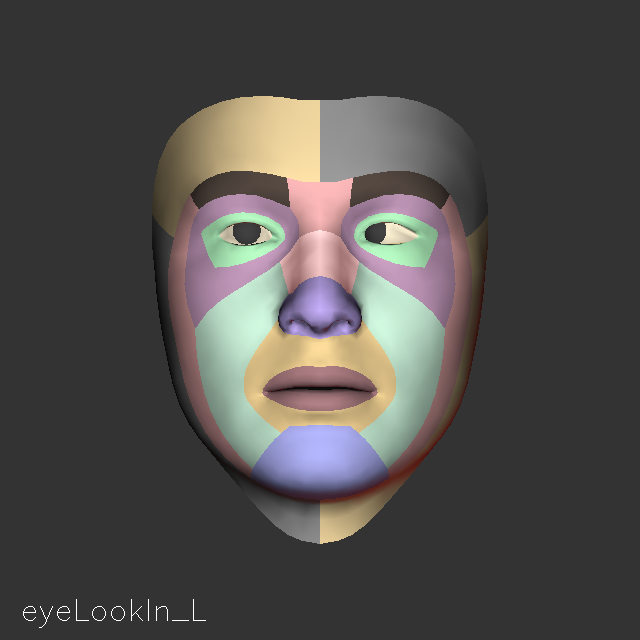

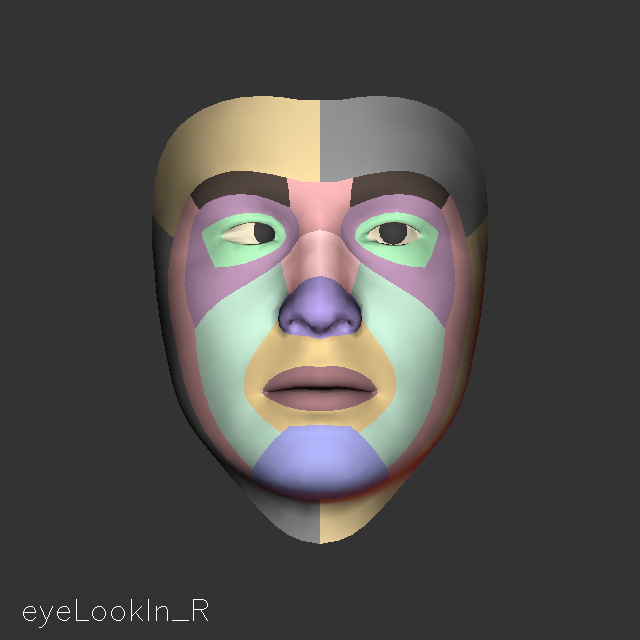

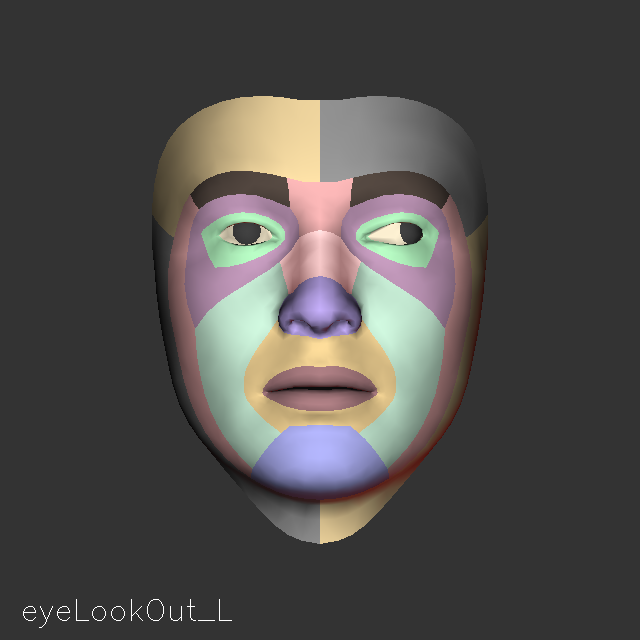

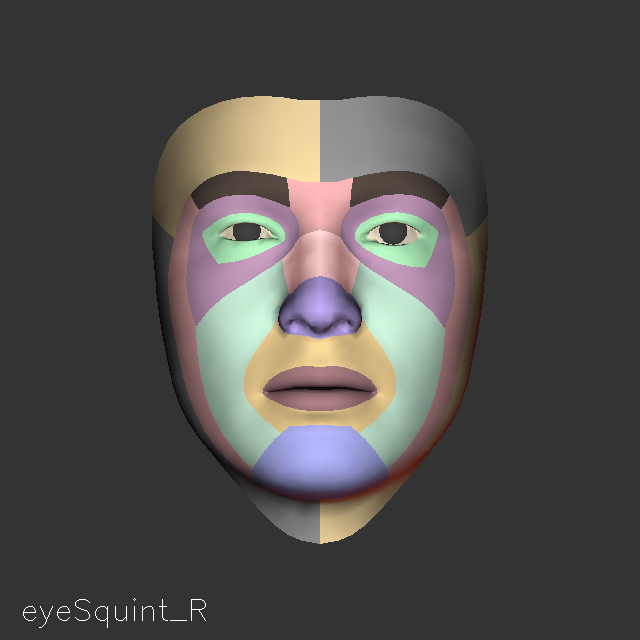

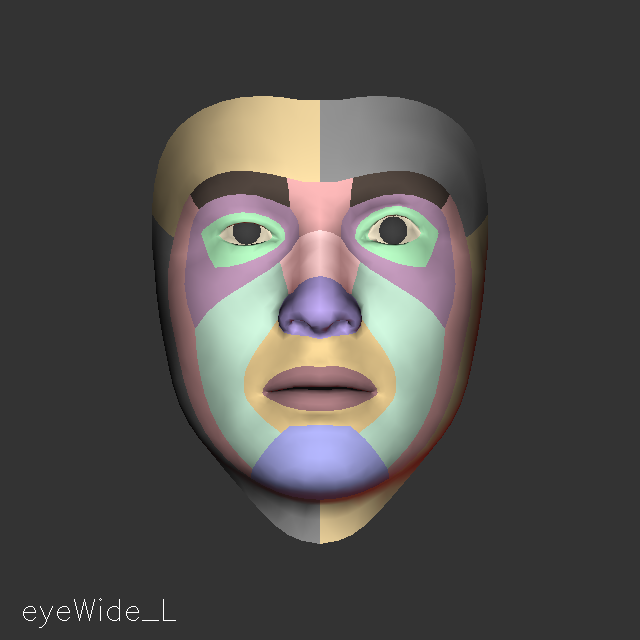

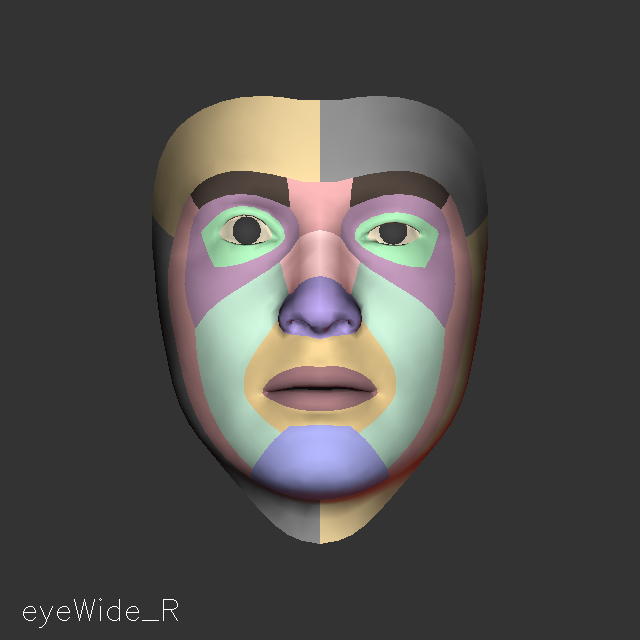

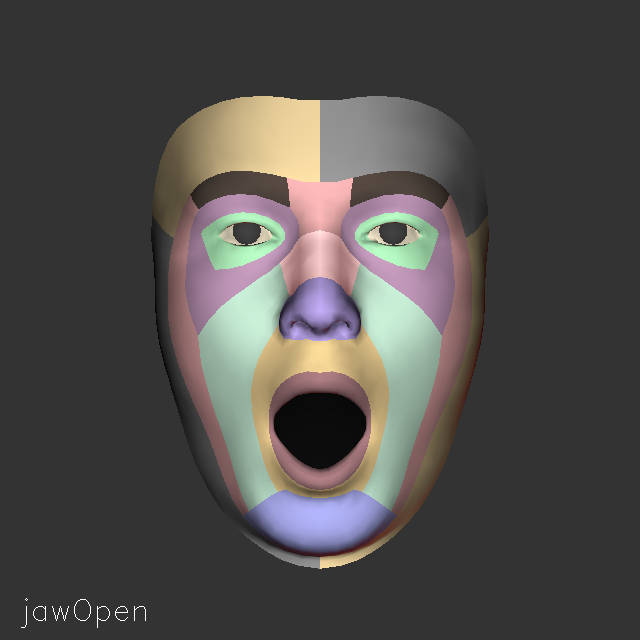

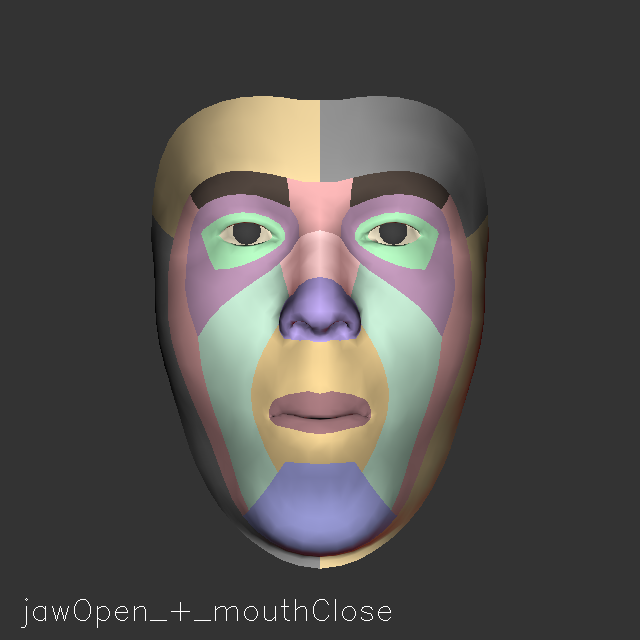

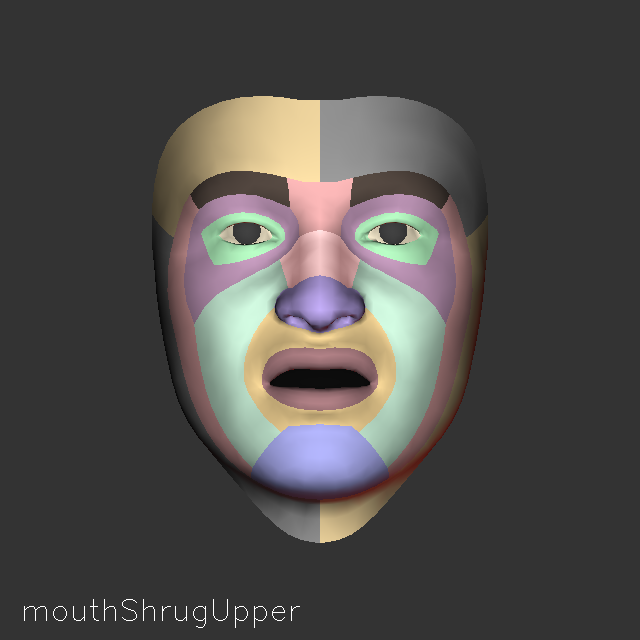

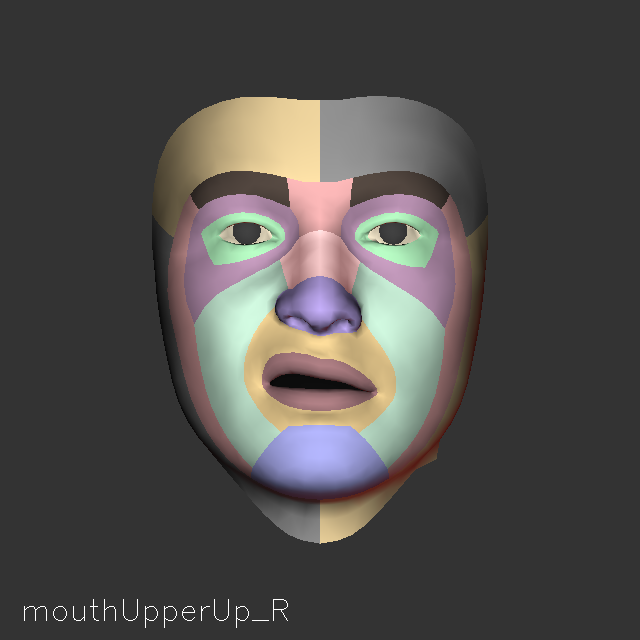

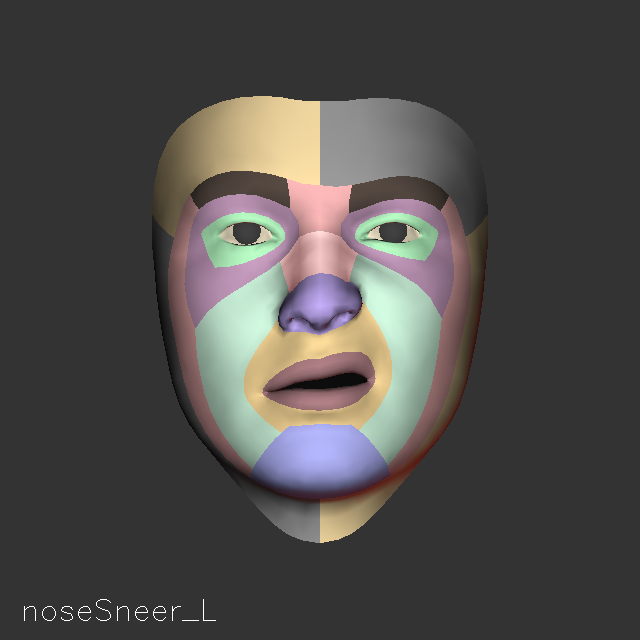

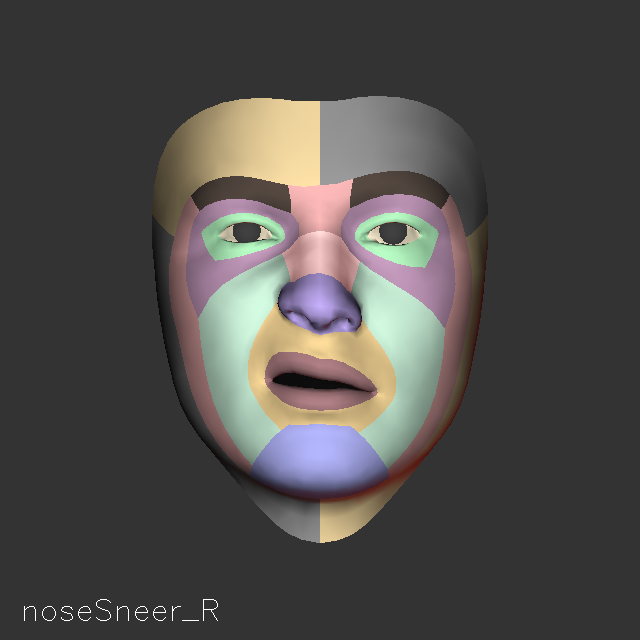

Optional: The array into which the expression coefficients will be placed, if desired. Query To be allocated by the user. The corresponding expression shapes for face_model2.nvf are in the following order:

|

1.5.4. Eye Contact Property Values

The following tables list the values for the configuration, input, and output properties for Eye Contact (gaze estimation and redirection).

| Property Name | Value |
|---|---|
| FeatureDescription | String that describes the feature. |
| CUDAStream | The CUDA stream. Set by the user. |
| ModelDir | String that contains the path to the folder that contains the TensorRT package files. Set by the user. |
| BatchSize | The number of inferences to be run at one time on the GPU. The maximum value is 1. |
| Landmarks_Size | Unsigned integer, 68 or 126. Specifies the number of landmark points (X and Y values) to be returned. Set by the user. |
| LandmarksConfidence_Size | Unsigned integer, 68 or 126. Specifies the number of landmark confidence values for the detected keypoints to be returned. Set by the user. |
| GazeRedirect | Flag to enable or disable gaze redirection. When enabled, the gaze is estimated, and the redirected image is set as the output. When disabled, the gaze is estimated, but redirection does not occur. |
| Temporal | Unsigned integer, 1/0 to enable/disable the temporal optimization of landmark detection. Set by the user. |
| DetectClosure | Flag to toggle detection of eye closure and occlusion on/off. Default: ON. |
| EyeSizeSensitivity | Unsigned integer in the range 2-5 that controls the sensitivity of the algorithm to the redirected eye size. 2 uses a smaller eye region, and 5 uses a larger eye region. |
| UseCudaGraph | Bool, True or False. Default is False. Flag to use CUDA Graphs for optimization. Set by the user. |

| Property Name | Value |
|---|---|
| Image | Interleaved (or chunky) 8-bit BGR input image in a CUDA buffer of type To be allocated and set by the user. |
| Width | The width of the input image buffer that contains the face to which the face model will be fitted. Set by the user. |
| Height | The height of the input image buffer that contains the face to which the face model will be fitted. Set by the user. |
| Landmarks | Optional: An NvAR_Point2f array that contains the landmark points of size If landmarks are not provided to this feature, an input image must be provided. See Alternative Usage of the Face 3D Mesh Feature for more information. To be allocated by the user. |

| Property Name | Value |

|---|---|

Landmarks |

NvAR_Point2f array, which must be large enough to hold the number of points given by the product of the following:

To be allocated by the user. |

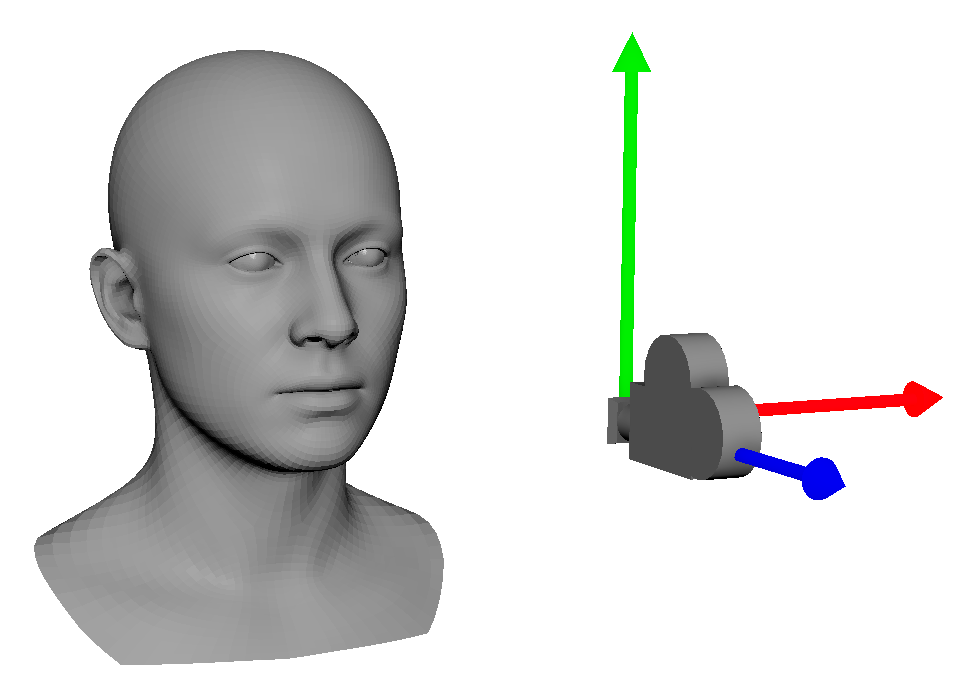

HeadPose |

(Optional) NvAR_Quaternion array, which must be large enough to hold the number of quaternions equal to NvAR_Parameter_Config(BatchSize). The OpenGL standards coordinate convention is used, which is when you look up from a camera, the coordinates are camera X - right, Y - up , and Z - back/towards the camera. To be allocated by the user. |

LandmarksConfidence |

Optional: An array of single-precision (32-bit) floating-point numbers, which must be large enough to hold the number of confidence values given by the product of the following:

To be allocated by the user. |

BoundingBoxes |

Optional: NvAR_BBoxes structure that contains the detected face through face detection performed by the landmark detection feature. Refer to Landmark Detection and Tracking for more information. To be allocated by the user. |

OutputGazeVector |

Float array, which must be large enough to hold two values (pitch and yaw) for the gaze angle in radians per image. For batch sizes larger than 1, it should hold To be allocated by the user. |

OutputHeadTranslation |

Optional: Float array, which must be large enough to hold the (x,y,z) head translations per image. For batch sizes larger than 1 it should hold To be allocated by the user. |

GazeDirection |

Each element contains two To be allocated by the user. |

1.5.5. Body Detection Property Values

The following tables list the values for the configuration, input, and output properties for Body Detection.

| Property Name | Value |
|---|---|
| FeatureDescription | Free-form text string that describes the feature. The string is set by the SDK and cannot be modified by the user. |
| CUDAStream | The CUDA stream, which is set by the user. |
| ModelDir | String that contains the path to the folder that contains the TensorRT package files. Set by the user. |
| Temporal | Unsigned integer, 1/0 to enable/disable the temporal optimization of Body Pose Tracking. Set by the user. |

| Property Name | Value |
|---|---|
| BoundingBoxes | To be allocated by the user. |
| BoundingBoxesConfidence | An array of single-precision (32-bit) floating-point numbers that contain the confidence values for each detected body box. To be allocated by the user. |

1.5.6. 3D Body Pose Keypoint Tracking Property Values

The following tables list the values for the configuration, input, and output properties for 3D Body Pose Keypoint Tracking.

| Property Name | Value |
|---|---|
| FeatureDescription | String that describes the feature. |
| CUDAStream | The CUDA stream. Set by the user. |
| ModelDir | String that contains the path to the folder that contains the TensorRT package files. Set by the user. |
| BatchSize | The number of inferences to be run at one time on the GPU. The maximum value is 1. |
| Mode | Unsigned integer, 0 or 1. Default is 1. Selects the High Performance (1) mode or High Quality (0) mode. Set by the user. |
| UseCudaGraph | Bool, True or False. Default is True. Flag to use CUDA Graphs for optimization. Set by the user. |
| Temporal | Unsigned integer, 1/0 to enable/disable the temporal optimization of Body Pose tracking. Set by the user. |
| NumKeyPoints | Unsigned integer. Specifies the number of keypoints available, which is currently 34. |
| ReferencePose | Specifies the Reference Pose used to compute the joint angles. |
| TrackPeople | Unsigned integer, 1/0 to enable/disable multi-person tracking in Body Pose. Set by the user. Available only on Windows. |
| ShadowTrackingAge | Unsigned integer. Specifies the period after which the multi-person tracker stops tracking the object in shadow mode. This value is measured in the number of frames. Set by the user, and the default value is 90. Available only on Windows. |
| ProbationAge | Unsigned integer. Specifies the period after which the multi-person tracker marks the object valid and assigns an ID for tracking. This value is measured in the number of frames. Set by the user, and the default value is 10. Available only on Windows. |
| MaxTargetsTracked | Unsigned integer. Specifies the maximum number of targets to be tracked by the multi-person tracker. After the tracking is complete, the new targets are discarded. Set by the user, and the default value is 30. Available only on Windows. |

| Property Name | Value |
|---|---|
| Image | Interleaved (or chunky) 8-bit BGR input image in a CUDA buffer of type To be allocated and set by the user. |
| FocalLength | Float. Default is 800.79041. Specifies the focal length of the camera to be used for 3D Body Pose. Set by the user. |
| BoundingBoxes | If not specified as an input property, body detection is automatically run on the input image. To be allocated by the user. |
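The ProbationAge, ShadowTrackingAge, and MaxTargetsTracked properties in the configuration table describe a track lifecycle. The following conceptual model shows one way those three values can interact. It is not the SDK's tracker.

- A new track stays on probation until it has been matched for ProbationAge frames, and then receives an ID.
- An unmatched valid track moves into shadow mode. It is dropped after ShadowTrackingAge unmatched frames.
- New targets are admitted only while fewer than MaxTargetsTracked tracks are alive.

All type and function names are hypothetical. Removing a track that misses a frame while on probation is also an assumption.

```cpp
#include <cassert>
#include <vector>

// Conceptual model only (hypothetical names): one way ProbationAge,
// ShadowTrackingAge, and MaxTargetsTracked could govern a track's lifecycle.
enum class TrackState { Probation, Active, Shadow, Removed };

struct Track {
  TrackState state = TrackState::Probation;
  unsigned matchedFrames = 0;  // frames matched while on probation
  unsigned missedFrames = 0;   // consecutive unmatched frames while in shadow mode
  int id = -1;                 // assigned once the track becomes valid
};

struct TrackerConfig {
  unsigned probationAge = 10;       // defaults taken from the property table
  unsigned shadowTrackingAge = 90;
  unsigned maxTargetsTracked = 30;
};

// Advances one track by one frame, given whether a detection matched it.
void step(Track& t, bool matched, const TrackerConfig& cfg, int& nextId) {
  switch (t.state) {
    case TrackState::Probation:
      if (!matched) { t.state = TrackState::Removed; return; }  // assumption
      if (++t.matchedFrames >= cfg.probationAge) {
        t.state = TrackState::Active;  // marked valid: assign an ID
        t.id = nextId++;
      }
      return;
    case TrackState::Active:
      if (!matched) { t.state = TrackState::Shadow; t.missedFrames = 1; }
      return;
    case TrackState::Shadow:
      if (matched) { t.state = TrackState::Active; t.missedFrames = 0; }
      else if (++t.missedFrames >= cfg.shadowTrackingAge) t.state = TrackState::Removed;
      return;
    case TrackState::Removed:
      return;
  }
}

// New targets are admitted only while fewer than maxTargetsTracked tracks are alive.
bool canAdmit(const std::vector<Track>& tracks, const TrackerConfig& cfg) {
  unsigned alive = 0;
  for (const Track& t : tracks)
    if (t.state != TrackState::Removed) ++alive;
  return alive < cfg.maxTargetsTracked;
}
```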

1.5.7. Facial Expression Estimation Property Values

The following tables list the values for the configuration, input, and output properties for Facial Expression Estimation.

| Property Name | Value |
|---|---|
| FeatureDescription | String that describes the feature. This property is read-only. |
| ModelDir | String that contains the path to the folder that contains the TensorRT package files. Set by the user. |
| CUDAStream | (Optional) The CUDA stream. Set by the user. |
| Temporal | (Optional) Bitfield to control temporal filtering. Set by the user. |
| Landmarks_Size | Unsigned integer, 68 or 126. Required array size of detected facial landmark points. To accommodate the {x,y} location of each of the detected points, the length of the array must be 126. |
| ExpressionCount | Unsigned integer. The number of expressions in the face model. |
| PoseMode | Determines how to compute pose. 0=3DOF implicit (default), 1=6DOF explicit. 6DOF (6 degrees of freedom) is required for 3D translation output. |
| Mode | Flag to toggle landmark mode. Set 0 to enable the Performance model for landmark detection. Set 1 to enable the Quality model for landmark detection for higher accuracy. Default: 1. |
| EnableCheekPuff | (Experimental) Enables cheek puff blendshapes. |

| Property Name | Value |
|---|---|
| Landmarks | (Optional) An If landmarks are not provided to this feature, an input image must be provided. To be allocated by the user. |
| Image | (Optional) An interleaved (or chunky) 8-bit BGR input image in a CUDA buffer of type NvCVImage. If an input image is not provided as input, the landmark points must be provided to this feature as input. To be allocated by the user. |
| CameraIntrinsicParams | Optional: Camera intrinsic parameters. Three element float array with elements corresponding to focal length, cx, cy intrinsics respectively, of an ideal perspective camera. Any barrel or fisheye distortion should be removed or considered negligible. Only used if PoseMode = 1. |
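CameraIntrinsicParams describes an ideal pinhole camera by its focal length and principal point (cx, cy). The following sketch shows the standard pinhole projection that those three values define. It can help you check that a 3D result lines up with the image.

It assumes the common computer-vision convention: the camera looks down +Z, and the image v coordinate grows downward. Points in the OpenGL convention used by the Pose outputs are converted first by negating Y and Z. The types and function names are hypothetical.

```cpp
#include <cassert>
#include <optional>

struct Intrinsics { float f, cx, cy; };  // same order as the three-element array
struct Point3 { float x, y, z; };
struct Pixel { float u, v; };

// Ideal pinhole projection (no lens distortion), computer-vision convention:
// the camera looks down +Z, u grows to the right, and v grows downward.
std::optional<Pixel> project(const Intrinsics& k, const Point3& p) {
  if (p.z <= 0.0f) return std::nullopt;  // on or behind the camera plane
  return Pixel{k.f * p.x / p.z + k.cx, k.f * p.y / p.z + k.cy};
}

// Converts a point from the OpenGL convention (X right, Y up, Z toward the viewer)
// to the convention used by project() by rotating 180 degrees about the X axis.
Point3 fromOpenGL(const Point3& p) { return {p.x, -p.y, -p.z}; }
```

A point in front of the camera in OpenGL coordinates has negative Z, so it is projected as `project(k, fromOpenGL(p))`.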

| Property Name | Value |
|---|---|
| Landmarks | (Optional) An NvAR_Point2f array, which must be large enough to hold the number of points of size NvAR_Parameter_Config(Landmarks_Size). |
| Pose | (Optional) To be allocated by the user. |
| PoseTranslation | Optional: To be allocated by the user. |
| LandmarksConfidence | (Optional) An array of single-precision (32-bit) floating-point numbers, which must be large enough to hold the number of confidence values of size To be allocated by the user. |
| BoundingBoxes | (Optional) To be allocated by the user. |
| BoundingBoxesConfidence | (Optional) An array of single-precision (32-bit) floating-point numbers that contain the confidence values for each detected face box. To be allocated by the user. |
| ExpressionCoefficients | The array into which the expression coefficients will be placed, if desired. Query To be allocated by the user. The corresponding expression shapes are in the following order: |
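ExpressionCoefficients holds one value per expression, ExpressionCount values in total. An application can rank the coefficients to see which expressions dominate a frame. The following hypothetical helper returns the indices of the k largest values. Translating those indices into expression names depends on the expression order documented for this output.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: indices of the k largest expression coefficients, largest first.
std::vector<std::size_t> topExpressions(const std::vector<float>& coeffs, std::size_t k) {
  std::vector<std::size_t> idx(coeffs.size());
  std::iota(idx.begin(), idx.end(), std::size_t{0});  // 0, 1, 2, ...
  k = std::min(k, idx.size());
  const auto mid = idx.begin() + static_cast<std::ptrdiff_t>(k);
  // Sort only the first k positions, by descending coefficient value.
  std::partial_sort(idx.begin(), mid, idx.end(),
                    [&coeffs](std::size_t a, std::size_t b) { return coeffs[a] > coeffs[b]; });
  idx.resize(k);
  return idx;
}
```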

1.6. Using the AR Features

This section provides information about how to use the AR features.

1.6.1. Face Detection and Tracking

This section provides information about how to use the Face Detection and Tracking feature.

1.6.1.1. Face Detection for Static Frames (Images)

To obtain detected bounding boxes, you can explicitly instantiate and run the Face Detection feature, passing an image buffer as input.

This example runs the Face Detection AR feature with an input image buffer and output memory to hold bounding boxes:

//Set input image buffer

NvAR_SetObject(faceDetectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));

//Set output memory for bounding boxes

NvAR_BBoxes output_bboxes{};

output_bboxes.boxes = new NvAR_Rect[25];

output_bboxes.max_boxes = 25;

NvAR_SetObject(faceDetectHandle, NvAR_Parameter_Output(BoundingBoxes), &output_bboxes, sizeof(NvAR_BBoxes));

//OPTIONAL – Set memory for bounding box confidence values if desired

NvAR_Run(faceDetectHandle);

1.6.1.2. Face Tracking for Temporal Frames (Videos)

If Temporal is enabled, for example when you process video frames instead of a single image, only one face is returned. The largest face in the first frame is selected, and this face is then tracked over the following frames.
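When you run face detection on a static image, the BoundingBoxes output can contain several faces. To get behavior similar to temporal mode, which keeps the largest face, you can pick the largest box whose confidence passes a threshold. The following minimal sketch does that.

`Rect` is a stand-in assumed to mirror the x, y, width, and height fields of `NvAR_Rect`. The function name and the threshold are illustrative. The confidences would come from the optional BoundingBoxesConfidence output.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for NvAR_Rect (x, y, width, height in pixels); the layout is assumed.
struct Rect { float x, y, width, height; };

// Returns the index of the largest-area box whose confidence is at least minConf,
// or -1 if no box qualifies. If confidences is empty, every box qualifies.
int largestBox(const std::vector<Rect>& boxes, const std::vector<float>& confidences,
               float minConf) {
  assert(confidences.empty() || confidences.size() == boxes.size());
  int best = -1;
  float bestArea = -1.0f;
  for (std::size_t i = 0; i < boxes.size(); ++i) {
    if (!confidences.empty() && confidences[i] < minConf) continue;
    const float area = boxes[i].width * boxes[i].height;
    if (area > bestArea) { bestArea = area; best = static_cast<int>(i); }
  }
  return best;
}
```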

However, explicitly calling the face detection feature is not the only way to obtain a bounding box that denotes detected faces. Refer to Landmark Detection and Tracking and Face 3D Mesh and Tracking for more information about how to use the Landmark Detection or Face3D Reconstruction AR features and return a face bounding box.

1.6.2. Landmark Detection and Tracking

This section provides information about how to use the Landmark Detection and Tracking feature.

1.6.2.1. Landmark Detection for Static Frames (Images)

Typically, the input to the landmark detection feature is an input image and a batch (up to 8) of bounding boxes. Currently, the maximum value is 1. These boxes denote the regions of the image that contain the faces on which you want to run landmark detection.

This example runs the Landmark Detection AR feature after obtaining bounding boxes from Face Detection:

//Set input image buffer

NvAR_SetObject(landmarkDetectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));

//Pass output bounding boxes from face detection as the input on which landmark detection is run

NvAR_SetObject(landmarkDetectHandle, NvAR_Parameter_Input(BoundingBoxes), &output_bboxes, sizeof(NvAR_BBoxes));

//Set landmark detection mode: Performance[0] (Default) or Quality[1]

unsigned int mode = 0; // Choose performance mode

NvAR_SetU32(landmarkDetectHandle, NvAR_Parameter_Config(Mode), mode);

//Set output buffer to hold detected facial keypoints

std::vector<NvAR_Point2f> facial_landmarks;

facial_landmarks.assign(batchSize * OUTPUT_SIZE_KPTS, {0.f, 0.f});

NvAR_SetObject(landmarkDetectHandle, NvAR_Parameter_Output(Landmarks), facial_landmarks.data(), sizeof(NvAR_Point2f));

NvAR_Run(landmarkDetectHandle);
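The landmark output buffer must hold one point per landmark for every face in the batch. The following hypothetical helper computes that element count from the BatchSize and Landmarks_Size values in the Landmark Tracking property table (68 or 126 points, at most 8 faces). It rejects values outside those ranges.

You can reuse it for a LandmarksConfidence buffer by passing LandmarksConfidence_Size instead. The function name and the choice to throw on invalid input are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>

// Hypothetical helper: number of elements (NvAR_Point2f for Landmarks, float for
// LandmarksConfidence) to allocate for a batch, based on the documented limits.
std::size_t landmarkBufferLength(unsigned batchSize, unsigned landmarksSize) {
  if (landmarksSize != 68 && landmarksSize != 126)
    throw std::invalid_argument("Landmarks_Size must be 68 or 126");
  if (batchSize == 0 || batchSize > 8)
    throw std::invalid_argument("BatchSize must be between 1 and 8");
  return static_cast<std::size_t>(batchSize) * landmarksSize;
}
```

For example: `facial_landmarks.assign(landmarkBufferLength(batchSize, 126), {0.f, 0.f});`.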

1.6.2.2. Alternative Usage of Landmark Detection

As described in the Configuration Properties for Landmark Tracking table in Landmark Tracking Property Values, the Landmark Detection AR feature supports some optional parameters that determine how the feature can be run.

If bounding boxes are not provided to the Landmark Detection AR feature as inputs, face detection is automatically run on the input image, and the largest face bounding box is selected on which to run landmark detection.

If BoundingBoxes is set as an output property, it is populated with the selected bounding box that contains the face on which landmark detection was run. Landmarks is not an optional property: to run this feature, you must set it to a user-provided output buffer.

1.6.2.3. Landmark Tracking for Temporal Frames (Videos)

If Temporal is enabled (for example, when you process a video stream) and face detection is run explicitly, only one bounding box is supported as an input for landmark detection.

When face detection is not explicitly run, by providing an input image instead of a bounding box, the largest detected face is automatically selected. The detected face and landmarks are then tracked as an optimization across temporally related frames.

The internally determined bounding box can be queried from this feature but is not required for the feature to run.

This example uses the Landmark Detection AR feature to obtain landmarks directly from the image, without first explicitly running Face Detection:

//Set input image buffer

NvAR_SetObject(landmarkDetectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));

//Set output memory for landmarks

std::vector<NvAR_Point2f> facial_landmarks;

facial_landmarks.assign(batchSize * OUTPUT_SIZE_KPTS, {0.f, 0.f});

NvAR_SetObject(landmarkDetectHandle, NvAR_Parameter_Output(Landmarks), facial_landmarks.data(),sizeof(NvAR_Point2f));

//Set landmark detection mode: Performance[0] (Default) or Quality[1]

unsigned int mode = 0; // Choose performance mode

NvAR_SetU32(landmarkDetectHandle, NvAR_Parameter_Config(Mode), mode);

//OPTIONAL – Set output memory for bounding box if desired

NvAR_BBoxes output_bboxes{};

output_bboxes.boxes = new NvAR_Rect[25];

output_bboxes.max_boxes = 25;

NvAR_SetObject(landmarkDetectHandle, NvAR_Parameter_Output(BoundingBoxes), &output_bboxes, sizeof(NvAR_BBoxes));

//OPTIONAL – Set output memory for pose, landmark confidence, or even bounding box confidence if desired

NvAR_Run(landmarkDetectHandle);

1.6.3. Face 3D Mesh and Tracking

This section provides information about how to use the Face 3D Mesh and Tracking feature.

1.6.3.1. Face 3D Mesh for Static Frames (Images)

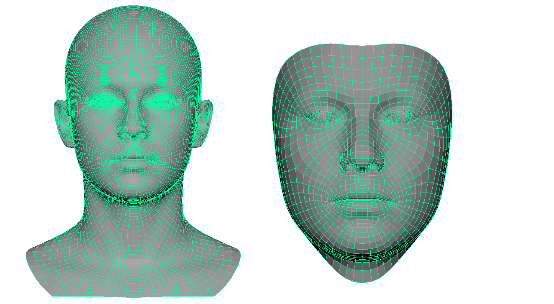

Typically, the input to the Face 3D Mesh feature is an input image and a set of detected landmark points corresponding to the face on which you want to run 3D reconstruction.

Here is the typical usage of this feature, where the detected facial keypoints from the Landmark Detection feature are passed as input to this feature:

//Set facial keypoints from Landmark Detection as an input

NvAR_SetObject(faceFitHandle, NvAR_Parameter_Input(Landmarks), facial_landmarks.data(),sizeof(NvAR_Point2f));

//Set output memory for face mesh

NvAR_FaceMesh *face_mesh = new NvAR_FaceMesh();

face_mesh->vertices = new NvAR_Vector3f[FACE_MODEL_NUM_VERTICES];

face_mesh->tvi = new NvAR_Vector3u16[FACE_MODEL_NUM_INDICES];

NvAR_SetObject(faceFitHandle, NvAR_Parameter_Output(FaceMesh), face_mesh, sizeof(NvAR_FaceMesh));

//Set output memory for rendering parameters

NvAR_RenderingParams *rendering_params = new NvAR_RenderingParams();

NvAR_SetObject(faceFitHandle, NvAR_Parameter_Output(RenderingParams), rendering_params, sizeof(NvAR_RenderingParams));

NvAR_Run(faceFitHandle);
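A FaceMesh output is a vertex array plus a triangle-vertex-index (tvi) array with three vertex indices per triangle. One way to consume it, for instance to shade the mesh for rendering, is to compute per-vertex normals. The following sketch computes area-weighted normals.

`Vec3f` and `Tri` are stand-ins for `NvAR_Vector3f` and `NvAR_Vector3u16`. Counterclockwise winding, with normals pointing out of the front face, is an assumption. Flip the result if the model uses the opposite winding.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <initializer_list>
#include <vector>

// Stand-ins for the mesh vertex and triangle index types (illustrative names).
struct Vec3f { float x, y, z; };
struct Tri { std::uint16_t a, b, c; };

// Area-weighted per-vertex normals: each triangle adds its unnormalized face
// normal (the cross product of two edges) to its three vertices, then each sum
// is normalized. Assumes counterclockwise winding.
std::vector<Vec3f> vertexNormals(const std::vector<Vec3f>& verts, const std::vector<Tri>& tris) {
  std::vector<Vec3f> n(verts.size(), Vec3f{0.0f, 0.0f, 0.0f});
  for (const Tri& t : tris) {
    assert(static_cast<std::size_t>(t.a) < verts.size() &&
           static_cast<std::size_t>(t.b) < verts.size() &&
           static_cast<std::size_t>(t.c) < verts.size());
    const Vec3f& p0 = verts[t.a];
    const Vec3f& p1 = verts[t.b];
    const Vec3f& p2 = verts[t.c];
    const Vec3f e1{p1.x - p0.x, p1.y - p0.y, p1.z - p0.z};
    const Vec3f e2{p2.x - p0.x, p2.y - p0.y, p2.z - p0.z};
    const Vec3f fn{e1.y * e2.z - e1.z * e2.y,
                   e1.z * e2.x - e1.x * e2.z,
                   e1.x * e2.y - e1.y * e2.x};
    for (std::uint16_t i : {t.a, t.b, t.c}) {
      n[i].x += fn.x; n[i].y += fn.y; n[i].z += fn.z;
    }
  }
  for (Vec3f& v : n) {
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    if (len > 0.0f) { v.x /= len; v.y /= len; v.z /= len; }
  }
  return n;
}
```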

1.6.3.2. Alternative Usage of the Face 3D Mesh Feature

Similar to the alternative usage of the Landmark detection feature, the Face 3D Mesh AR feature can be used to determine the detected face bounding box, the facial keypoints, and a 3D face mesh and its rendering parameters.

Instead of the facial keypoints of a face, if an input image is provided, the face and the facial keypoints are automatically detected and used to run the face mesh fitting. When run this way, if BoundingBoxes and/or Landmarks are set as optional output properties for this feature, these properties will be populated with the bounding box that contains the face and the detected facial keypoints respectively.

FaceMesh and RenderingParams are not optional properties for this feature, and to run the feature, these properties must be set with user-provided output buffers.

Additionally, if this feature is run without providing facial keypoints as an input, the path pointed to by the ModelDir config parameter must also contain the face and landmark detection TRT package files. Optionally, the CUDAStream and the Temporal flag can be set for those features.

1.6.3.3. Face 3D Mesh Tracking for Temporal Frames (Videos)

If the Temporal flag is set and face and landmark detection are run internally, these features are optimized for temporally related frames.

This means that face and facial keypoints will be tracked across frames, and only one bounding box will be returned, if requested, as an output. The Temporal flag is not supported by the Face 3D Mesh feature if Landmark Detection and/or Face Detection features are called explicitly. In that case, you will have to provide the flag directly to those features.

The facial keypoints and/or the face bounding box that were determined internally can be queried from this feature but are not required for the feature to run.

This example uses the Mesh Tracking AR feature to obtain the face mesh directly from the image, without explicitly running Landmark Detection or Face Detection:

//Set input image buffer instead of providing facial keypoints

NvAR_SetObject(faceFitHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));

//Set output memory for face mesh

NvAR_FaceMesh *face_mesh = new NvAR_FaceMesh();

unsigned int n;

NvCV_Status err;

err = NvAR_GetU32(faceFitHandle, NvAR_Parameter_Config(VertexCount), &n);

face_mesh->num_vertices = n;

err = NvAR_GetU32(faceFitHandle, NvAR_Parameter_Config(TriangleCount), &n);

face_mesh->num_triangles = n;

face_mesh->vertices = new NvAR_Vector3f[face_mesh->num_vertices];

face_mesh->tvi = new NvAR_Vector3u16[face_mesh->num_triangles];

NvAR_SetObject(faceFitHandle, NvAR_Parameter_Output(FaceMesh), face_mesh, sizeof(NvAR_FaceMesh));

//Set output memory for rendering parameters

NvAR_RenderingParams *rendering_params = new NvAR_RenderingParams();

NvAR_SetObject(faceFitHandle, NvAR_Parameter_Output(RenderingParams), rendering_params, sizeof(NvAR_RenderingParams));

//OPTIONAL - Set facial keypoints as an output

NvAR_SetObject(faceFitHandle, NvAR_Parameter_Output(Landmarks), facial_landmarks.data(),sizeof(NvAR_Point2f));

//OPTIONAL – Set output memory for bounding boxes, or other parameters, such as pose, bounding box/landmarks confidence, etc.

NvAR_Run(faceFitHandle);

1.6.4. Eye Contact

This feature estimates the gaze of a person from an eye patch that was extracted using landmarks and redirects the eyes to make the person look at the camera in a permissible range of eye and head angles.

The feature also supports a mode in which the gaze is estimated without redirection. The Eye Contact feature is invoked by using the GazeRedirection feature ID and has the following options:

- Gaze Estimation

- Gaze Redirection

In this release, gaze estimation and redirection of only one face in the frame is supported.

1.6.4.1. Gaze Estimation

The estimation of gaze requires face detection and landmarks as input. The inputs to the gaze estimator are an input image buffer and buffers to hold facial landmarks and confidence scores. The output of gaze estimation is the gaze vector (pitch, yaw) values in radians. A float array needs to be set as the output buffer to hold estimated gaze. The GazeRedirect parameter must be set to false.

This example runs the Gaze Estimation with an input image buffer and output memory to hold the estimated gaze vector:

bool bGazeRedirect = false;

NvAR_SetU32(gazeRedirectHandle, NvAR_Parameter_Config(GazeRedirect), bGazeRedirect);

//Set input image buffer

NvAR_SetObject(gazeRedirectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));

//Set output memory for gaze vector

float gaze_angles_vector[2];

NvAR_SetF32Array(gazeRedirectHandle, NvAR_Parameter_Output(OutputGazeVector), gaze_angles_vector, batchSize * 2);

//OPTIONAL – Set output memory for landmarks, head pose, head translation and gaze direction if desired

std::vector<NvAR_Point2f> facial_landmarks;

facial_landmarks.assign(batchSize * OUTPUT_SIZE_KPTS, {0.f, 0.f});

NvAR_SetObject(gazeRedirectHandle, NvAR_Parameter_Output(Landmarks), facial_landmarks.data(),sizeof(NvAR_Point2f));

NvAR_Quaternion head_pose;

NvAR_SetObject(gazeRedirectHandle, NvAR_Parameter_Output(HeadPose), &head_pose, sizeof(NvAR_Quaternion));

float head_translation[3] = {0.f};

NvAR_SetF32Array(gazeRedirectHandle, NvAR_Parameter_Output(OutputHeadTranslation), head_translation, batchSize * 3);

NvAR_Run(gazeRedirectHandle);
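OutputGazeVector holds a pitch and a yaw angle in radians for each image. The following sketch converts them to degrees for display and to a unit 3D direction.

This section does not define the sign convention of the angles, so the mapping in `gazeDirection` is an assumption:

- Zero pitch and zero yaw point straight at the camera, which is +Z in the OpenGL convention used by the pose outputs.
- Positive yaw turns toward +X.
- Positive pitch turns toward +Y.

Check the mapping against known gaze directions before relying on it.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

constexpr float kPi = 3.14159265358979f;

// Converts an angle from radians to degrees.
float radToDeg(float r) { return r * 180.0f / kPi; }

// Converts (pitch, yaw) in radians to a unit gaze direction under an ASSUMED
// convention: (0, 0) looks at the camera (+Z), positive yaw turns toward +X,
// and positive pitch turns toward +Y.
Vec3 gazeDirection(float pitch, float yaw) {
  return {std::cos(pitch) * std::sin(yaw),
          std::sin(pitch),
          std::cos(pitch) * std::cos(yaw)};
}
```

With the example above, the call is `gazeDirection(gaze_angles_vector[0], gaze_angles_vector[1])`. This assumes index 0 is pitch and index 1 is yaw, as the order "(pitch and yaw)" in the property table suggests.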

1.6.4.2. Gaze Redirection

Gaze redirection takes the same inputs as gaze estimation. In addition to the outputs of gaze estimation, an output image buffer of the same size as the input image buffer must be set to store the gaze-redirected image.

The gaze will be redirected to look at the camera within a certain range of gaze angles and head poses. Outside this range, the feature disengages. Head pose, head translation, and gaze direction can be optionally set as outputs. The GazeRedirect parameter must be set to true.

bool bGazeRedirect=true;

NvAR_SetU32(gazeRedirectHandle, NvAR_Parameter_Config(GazeRedirect), bGazeRedirect);

//Set input image buffer

NvAR_SetObject(gazeRedirectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));

//Set output memory for gaze vector

float gaze_angles_vector[2];

NvAR_SetF32Array(gazeRedirectHandle, NvAR_Parameter_Output(OutputGazeVector), gaze_angles_vector, batchSize * 2);

//Set output image buffer

NvAR_SetObject(gazeRedirectHandle, NvAR_Parameter_Output(Image), &outputImageBuffer, sizeof(NvCVImage));

NvAR_Run(gazeRedirectHandle);

1.6.5. 3D Body Pose Tracking

This feature relies on temporal information to track the person in the scene, where the keypoints information from the previous frame is used to estimate the keypoints of the next frame.

3D Body Pose Tracking consists of the following parts:

- Body Detection

- 3D Keypoint Detection

In this release, only one person in the frame is supported, and the full body (head to toe) should be visible. The feature still works if part of the body, such as an arm or a foot, is occluded or truncated.

1.6.5.1. 3D Body Pose Tracking for Static Frames (Images)

You can obtain the bounding boxes that encapsulate the people in the scene. To obtain detected bounding boxes, you can explicitly instantiate and run body detection as shown in the example below and pass the image buffer as input.

- This example runs Body Detection with an input image buffer and output memory to hold the bounding boxes:

//Set input image buffer
NvAR_SetObject(bodyDetectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));
//Set output memory for up to 25 bounding boxes (release it later with delete[] output_bboxes.boxes)
NvAR_BBoxes output_bboxes{};
output_bboxes.boxes = new NvAR_Rect[25];
output_bboxes.max_boxes = 25;
NvAR_SetObject(bodyDetectHandle, NvAR_Parameter_Output(BoundingBoxes), &output_bboxes, sizeof(NvAR_BBoxes));
//OPTIONAL – Set memory for bounding box confidence values if desired
NvAR_Run(bodyDetectHandle);

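- The input to 3D Body Keypoint Detection is an image. In the example below, the bounding boxes from Body Detection are also passed as input. The feature outputs the 2D keypoints, 3D keypoints, keypoint confidence scores, joint angles, and the bounding box that encapsulates the person.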

This example runs the 3D Body Pose Detection AR feature:

//Set input image buffer
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));
//Pass the output bounding boxes from body detection as an input on which keypoint detection is to be run
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Input(BoundingBoxes), &output_bboxes, sizeof(NvAR_BBoxes));
//Get the number of keypoints
unsigned int numKeyPoints;
NvAR_GetU32(keyPointDetectHandle, NvAR_Parameter_Config(NumKeyPoints), &numKeyPoints);
//Set output buffers to hold the detected keypoints, each sized for batchSize * numKeyPoints entries
std::vector<NvAR_Point2f> keypoints;
std::vector<NvAR_Point3f> keypoints3D;
std::vector<NvAR_Point3f> jointAngles;
std::vector<float> keypoints_confidence;
keypoints.assign(batchSize * numKeyPoints, {0.f, 0.f});
keypoints3D.assign(batchSize * numKeyPoints, {0.f, 0.f, 0.f});
jointAngles.assign(batchSize * numKeyPoints, {0.f, 0.f, 0.f});
keypoints_confidence.assign(batchSize * numKeyPoints, 0.f);
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(KeyPoints), keypoints.data(), sizeof(NvAR_Point2f));
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(KeyPoints3D), keypoints3D.data(), sizeof(NvAR_Point3f));
NvAR_SetF32Array(keyPointDetectHandle, NvAR_Parameter_Output(KeyPointsConfidence), keypoints_confidence.data(), batchSize * numKeyPoints);
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(JointAngles), jointAngles.data(), sizeof(NvAR_Point3f));
//Set output memory for the person's bounding box (separate from the input boxes above)
NvAR_BBoxes keypoint_bboxes{};
keypoint_bboxes.boxes = new NvAR_Rect[25];
keypoint_bboxes.max_boxes = 25;
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(BoundingBoxes), &keypoint_bboxes, sizeof(NvAR_BBoxes));
NvAR_Run(keyPointDetectHandle);
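The keypoint outputs in the example above are flat arrays with batchSize * numKeyPoints entries. The following sketch reads them back. It assumes, without confirmation, that the entries are stored image by image, so keypoint k of image b is at index b * numKeyPoints + k. Point3f mirrors the x, y, z layout of NvAR_Point3f so the sketch builds without the SDK. keypointIndex() and confidentKeypoints3D() are hypothetical helpers. The confidence threshold is an application choice, not an SDK value.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Mirrors NvAR_Point3f (x, y, z) so the sketch compiles without the SDK.
struct Point3f { float x, y, z; };

// Index of keypoint k for image b in the flat output arrays, ASSUMING the
// buffers are laid out image by image (batchSize * numKeyPoints entries).
std::size_t keypointIndex(std::size_t b, std::size_t k, std::size_t numKeyPoints) {
    assert(k < numKeyPoints);
    return b * numKeyPoints + k;
}

// Returns the 3D keypoints of image b whose confidence is at least threshold.
// Low-confidence keypoints are skipped rather than zeroed.
std::vector<Point3f> confidentKeypoints3D(const std::vector<Point3f>& keypoints3D,
                                          const std::vector<float>& confidence,
                                          std::size_t b, std::size_t numKeyPoints,
                                          float threshold) {
    assert(keypoints3D.size() == confidence.size());
    std::vector<Point3f> result;
    for (std::size_t k = 0; k < numKeyPoints; ++k) {
        const std::size_t i = keypointIndex(b, k, numKeyPoints);
        assert(i < keypoints3D.size());
        if (confidence[i] >= threshold) result.push_back(keypoints3D[i]);
    }
    return result;
}
```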

1.6.5.2. 3D Body Pose Tracking for Temporal Frames (Videos)

The feature relies on temporal information to track the person in the scene. The keypoint information from the previous frame is used to estimate the keypoints of the next frame.

This example uses the 3D Body Pose Tracking AR feature to obtain 3D Body Pose Keypoints directly from the image:

//Set input image buffer
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));
//OPTIONAL – Pass the output bounding boxes from body detection as an input on which keypoint detection is to be run
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Input(BoundingBoxes), &output_bboxes, sizeof(NvAR_BBoxes));
//Get the number of keypoints
unsigned int numKeyPoints;
NvAR_GetU32(keyPointDetectHandle, NvAR_Parameter_Config(NumKeyPoints), &numKeyPoints);
//Set output buffers to hold the detected keypoints, each sized for batchSize * numKeyPoints entries
std::vector<NvAR_Point2f> keypoints;
std::vector<NvAR_Point3f> keypoints3D;
std::vector<NvAR_Point3f> jointAngles;
std::vector<float> keypoints_confidence;
keypoints.assign(batchSize * numKeyPoints, {0.f, 0.f});
keypoints3D.assign(batchSize * numKeyPoints, {0.f, 0.f, 0.f});
jointAngles.assign(batchSize * numKeyPoints, {0.f, 0.f, 0.f});
keypoints_confidence.assign(batchSize * numKeyPoints, 0.f);
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(KeyPoints), keypoints.data(), sizeof(NvAR_Point2f));
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(KeyPoints3D), keypoints3D.data(), sizeof(NvAR_Point3f));
NvAR_SetF32Array(keyPointDetectHandle, NvAR_Parameter_Output(KeyPointsConfidence), keypoints_confidence.data(), batchSize * numKeyPoints);
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(JointAngles), jointAngles.data(), sizeof(NvAR_Point3f));
//Set output memory for the person's bounding box (separate from the input boxes above)
NvAR_BBoxes keypoint_bboxes{};
keypoint_bboxes.boxes = new NvAR_Rect[25];
keypoint_bboxes.max_boxes = 25;
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(BoundingBoxes), &keypoint_bboxes, sizeof(NvAR_BBoxes));
NvAR_Run(keyPointDetectHandle);
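One way to picture how the previous frame informs the next is a region of interest: the confident keypoints from one frame bound where the person is likely to be in the following frame. The following sketch illustrates that idea only. It is not the SDK's internal tracking algorithm, which this guide does not describe. Point2f mirrors NvAR_Point2f. Region, regionFromPreviousFrame(), and the margin and confidence parameters are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Mirrors the layout of NvAR_Point2f (x, y) so the sketch builds without the SDK.
struct Point2f { float x, y; };

// Hypothetical axis-aligned region in pixels.
struct Region { float x, y, width, height; };

// Computes a search region for the next frame from the previous frame's 2D
// keypoints: the bounding box of every keypoint whose confidence is at least
// minConfidence, padded on each side by `margin` times the box size and clamped
// to the image. Returns false when no keypoint is confident enough.
bool regionFromPreviousFrame(const std::vector<Point2f>& keypoints,
                             const std::vector<float>& confidence,
                             float minConfidence, float margin,
                             float imageWidth, float imageHeight, Region& out) {
    assert(keypoints.size() == confidence.size());
    bool found = false;
    float minX = 0.f, minY = 0.f, maxX = 0.f, maxY = 0.f;
    for (std::size_t i = 0; i < keypoints.size(); ++i) {
        if (confidence[i] < minConfidence) continue;  // ignore unreliable keypoints
        const Point2f& p = keypoints[i];
        if (!found) {
            minX = maxX = p.x;
            minY = maxY = p.y;
            found = true;
        } else {
            minX = std::min(minX, p.x);
            maxX = std::max(maxX, p.x);
            minY = std::min(minY, p.y);
            maxY = std::max(maxY, p.y);
        }
    }
    if (!found) return false;
    const float padX = (maxX - minX) * margin;
    const float padY = (maxY - minY) * margin;
    const float x0 = std::max(0.f, minX - padX);
    const float y0 = std::max(0.f, minY - padY);
    const float x1 = std::min(imageWidth, maxX + padX);
    const float y1 = std::min(imageHeight, maxY + padY);
    out = Region{x0, y0, x1 - x0, y1 - y0};
    return true;
}
```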

1.6.5.3. Multi-Person Tracking for 3D Body Pose Tracking (Windows Only)

The feature relies on temporal information to track people in the scene. The keypoint information from the previous frame is used to estimate the keypoints of the next frame.

The feature provides the ability to track multiple people in the following ways:

- In the scene across different frames.

- When they leave the scene and enter the scene again.

- When they are completely occluded by an object or another person and reappear (controlled using Shadow Tracking Age).

The following configuration options control how targets are tracked:

- Shadow Tracking Age

This option is the number of frames for which a target is still tracked in the background when it is not associated with a detected object. Each target has an internal shadowTrackingAge counter:

- For each frame in which the target is not associated with a detected object, the counter is incremented.
- When the target is associated with a detected object again, the counter is reset to zero.
- When the counter reaches the configured Shadow Tracking Age, the target is discarded and is no longer tracked.

The value is measured in frames, and the default is 90.

- Probation Age

This option is the length of the probationary period. After a target reaches this age, it is considered valid and is assigned an ID. This reduces false positives, where spurious objects are detected for only a few frames. The value is measured in frames, and the default is 10.

- Maximum Targets Tracked

This option is the maximum number of targets to be tracked. The count includes both the targets that are active in the frame and the targets in shadow tracking mode, so account for both when you choose this value. The minimum is 1, and the default is 30.
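The interaction of Shadow Tracking Age, Probation Age, and Maximum Targets Tracked can be modeled with a small per-target state machine. The following sketch illustrates that model under assumptions; it is not the SDK's tracker. Target, TrackerConfig, updateTarget(), and canAddTarget() are hypothetical. The defaults are the documented ones (90, 10, and 30). Two details are assumptions: counting a target's age from its first frame, and applying the shadow rule during probation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical configuration using the documented defaults.
struct TrackerConfig {
    unsigned shadowTrackingAge = 90;     // frames a target may go unmatched
    unsigned probationAge = 10;          // frames before a target gets an ID
    std::size_t maxTargetsTracked = 30;  // active + shadow-tracked targets
};

// Hypothetical per-target bookkeeping (NOT the SDK's implementation).
struct Target {
    unsigned age = 0;                // frames since the target was created
    unsigned shadowTrackingAge = 0;  // consecutive frames without a detection
    int id = -1;                     // -1 until the probationary period ends
};

// Advances one target by one frame. Returns false if the target should be
// discarded. nextId is the counter used to hand out tracking IDs.
bool updateTarget(Target& t, bool associated, const TrackerConfig& cfg, int& nextId) {
    ++t.age;
    if (associated) {
        t.shadowTrackingAge = 0;  // reset when matched to a detected object
    } else {
        ++t.shadowTrackingAge;    // keep tracking in the background
        if (t.shadowTrackingAge >= cfg.shadowTrackingAge) return false;
    }
    if (t.id < 0 && t.age >= cfg.probationAge) t.id = nextId++;  // probation over
    return true;
}

// Active and shadow-tracked targets both count toward the limit.
bool canAddTarget(const std::vector<Target>& targets, const TrackerConfig& cfg) {
    assert(cfg.maxTargetsTracked >= 1);  // documented minimum
    return targets.size() < cfg.maxTargetsTracked;
}
```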

Currently, at most eight people are actively tracked in any given frame. More than eight people can appear over the course of a video. Temporal mode is not supported for Multi-Person Tracking, and the batch size should be 8 when Multi-Person Tracking is enabled. This feature is currently Windows only.

This example uses the 3D Body Pose Tracking AR feature to enable multi-person tracking and obtain the tracking ID for each person:

//Configuration properties are set before the feature is loaded with NvAR_Load()
//Enable Multi-Person Tracking
bool bEnablePeopleTracking = true;
NvAR_SetU32(keyPointDetectHandle, NvAR_Parameter_Config(TrackPeople), bEnablePeopleTracking);
//Set Shadow Tracking Age (in frames; default 90)
unsigned int shadowTrackingAge = 90;
NvAR_SetU32(keyPointDetectHandle, NvAR_Parameter_Config(ShadowTrackingAge), shadowTrackingAge);
//Set Probation Age (in frames; default 10)
unsigned int probationAge = 10;
NvAR_SetU32(keyPointDetectHandle, NvAR_Parameter_Config(ProbationAge), probationAge);
//Set the maximum number of targets to be tracked (minimum 1; default 30)
unsigned int maxTargetsTracked = 30;
NvAR_SetU32(keyPointDetectHandle, NvAR_Parameter_Config(MaxTargetsTracked), maxTargetsTracked);
//Set input image buffer
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Input(Image), &inputImageBuffer, sizeof(NvCVImage));
//Get the number of keypoints
unsigned int numKeyPoints;
NvAR_GetU32(keyPointDetectHandle, NvAR_Parameter_Config(NumKeyPoints), &numKeyPoints);
//Set output buffers to hold the detected keypoints (batchSize should be 8 for Multi-Person Tracking)
std::vector<NvAR_Point2f> keypoints;
std::vector<NvAR_Point3f> keypoints3D;
std::vector<NvAR_Point3f> jointAngles;
std::vector<float> keypoints_confidence;
keypoints.assign(batchSize * numKeyPoints, {0.f, 0.f});
keypoints3D.assign(batchSize * numKeyPoints, {0.f, 0.f, 0.f});
jointAngles.assign(batchSize * numKeyPoints, {0.f, 0.f, 0.f});
keypoints_confidence.assign(batchSize * numKeyPoints, 0.f);
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(KeyPoints), keypoints.data(), sizeof(NvAR_Point2f));
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(KeyPoints3D), keypoints3D.data(), sizeof(NvAR_Point3f));
NvAR_SetF32Array(keyPointDetectHandle, NvAR_Parameter_Output(KeyPointsConfidence), keypoints_confidence.data(), batchSize * numKeyPoints);
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(JointAngles), jointAngles.data(), sizeof(NvAR_Point3f));
//Set output memory for tracking bounding boxes, one entry per target that can be tracked
NvAR_TrackingBBoxes output_tracking_bboxes{};
std::vector<NvAR_TrackingBBox> output_tracking_bbox_data;
output_tracking_bbox_data.assign(maxTargetsTracked, { 0.f, 0.f, 0.f, 0.f, 0 });
output_tracking_bboxes.boxes = output_tracking_bbox_data.data();
output_tracking_bboxes.max_boxes = (uint8_t)output_tracking_bbox_data.size();
NvAR_SetObject(keyPointDetectHandle, NvAR_Parameter_Output(TrackingBoundingBoxes), &output_tracking_bboxes, sizeof(NvAR_TrackingBBoxes));
NvAR_Run(keyPointDetectHandle);
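A tracked person keeps the same tracking ID across frames. This holds after the person leaves and re-enters the scene, and after an occlusion shorter than the Shadow Tracking Age. An application can therefore key its own per-person state by that ID. The following sketch counts the frames in which each ID is reported.

TrackingBox is a hypothetical stand-in for NvAR_TrackingBBox (a box plus a tracking ID), and its field names are assumptions. The sketch also assumes the application knows how many entries in the output buffer are valid for the current frame. This guide's example does not show how NvAR_TrackingBBoxes reports that count. TrackHistory and recordFrame() are also hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical stand-in for one tracked box: position, size, and tracking ID.
struct TrackingBox {
    float x, y, width, height;
    std::uint16_t trackingId;
};

// Per-ID history: how many frames each tracking ID has been reported in.
using TrackHistory = std::map<std::uint16_t, std::size_t>;

// Records one frame of output. Only the first numBoxes entries of the
// buffer are treated as valid for the current frame.
void recordFrame(TrackHistory& history, const std::vector<TrackingBox>& boxes,
                 std::size_t numBoxes) {
    assert(numBoxes <= boxes.size());
    for (std::size_t i = 0; i < numBoxes; ++i) {
        ++history[boxes[i].trackingId];
    }
}
```

Keying by ID rather than by position in the buffer keeps per-person state stable even if the order of the boxes changes from frame to frame.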

1.6.6. Facial Expression Estimation

This section provides information about how to use the Facial Expression Estimation feature.

1.6.6.1. Facial Expression Estimation for Static Frames (Images)

Typically, the input to the Facial Expression Estimation feature is an input image and a set of detected landmark points corresponding to the face on which we want to estimate face expression coefficients.

Here is the typical usage of this feature, where the detected facial keypoints from the Landmark Detection feature are passed as input to this feature:

//Set facial keypoints from Landmark Detection as an input
err = NvAR_SetObject(faceExpressionHandle, NvAR_Parameter_Input(Landmarks), facial_landmarks.data(), sizeof(NvAR_Point2f));
//Set output memory for expression coefficients (release it later with delete[])
unsigned int expressionCount;
err = NvAR_GetU32(faceExpressionHandle, NvAR_Parameter_Config(ExpressionCount), &expressionCount);
float* expressionCoeffs = new float[expressionCount];
err = NvAR_SetF32Array(faceExpressionHandle, NvAR_Parameter_Output(ExpressionCoefficients), expressionCoeffs, expressionCount);
//Set output memory for pose rotation quaternion (release it later with delete)
NvAR_Quaternion* pose = new NvAR_Quaternion();
err = NvAR_SetObject(faceExpressionHandle, NvAR_Parameter_Output(Pose), pose, sizeof(NvAR_Quaternion));
//OPTIONAL – Set output memory for bounding boxes and their confidences, if desired
err = NvAR_Run(faceExpressionHandle);
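The Pose output is a rotation quaternion (NvAR_Quaternion). An application that needs angles instead, for example to display head orientation, can convert a unit quaternion to Euler angles with the standard Z-Y-X (yaw, pitch, roll) formulas. In the following sketch, Quaternion is a stand-in assumed to store x, y, z, w in that order. EulerAngles and toEuler() are hypothetical names. Which physical head motion each angle corresponds to depends on the SDK's coordinate system, and this sketch makes no assumption about it.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Stand-in for NvAR_Quaternion, ASSUMED to store x, y, z, w in that order.
struct Quaternion { float x, y, z, w; };

// Euler angles in radians, Z-Y-X (yaw, pitch, roll) convention.
struct EulerAngles { float roll, pitch, yaw; };

// Converts a quaternion (normalized here first) to Z-Y-X Euler angles.
EulerAngles toEuler(const Quaternion& q) {
    const float n = std::sqrt(q.x * q.x + q.y * q.y + q.z * q.z + q.w * q.w);
    assert(n > 0.0f);
    const float x = q.x / n, y = q.y / n, z = q.z / n, w = q.w / n;

    EulerAngles e{};
    // Rotation about the x axis.
    e.roll = std::atan2(2.0f * (w * x + y * z), 1.0f - 2.0f * (x * x + y * y));
    // Rotation about the y axis; clamp so rounding near +/-90 degrees cannot yield NaN.
    const float s = std::max(-1.0f, std::min(1.0f, 2.0f * (w * y - z * x)));
    e.pitch = std::asin(s);
    // Rotation about the z axis.
    e.yaw = std::atan2(2.0f * (w * z + x * y), 1.0f - 2.0f * (y * y + z * z));
    return e;
}
```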

1.6.6.2. Alternative Usage of the Facial Expression Estimation Feature

Similar to the alternative usage of the Landmark Detection feature and the Face 3D Mesh feature, the Facial Expression Estimation feature can be used to determine the detected face bounding box and the facial keypoints, in addition to the expression coefficients and head pose.

If an input image is provided instead of facial keypoints, the face and its facial keypoints are detected automatically and used to run expression estimation. In this mode, you can set BoundingBoxes and/or Landmarks as optional output properties. They are then populated with the bounding box that contains the face and with the detected facial keypoints, respectively.

ExpressionCoefficients and Pose are required output properties. To run the feature, you must set them to user-provided output buffers.

Additionally, if this feature is run without providing facial keypoints as an input, the path pointed to by the ModelDir config parameter must also contain the face and landmark detection TRT package files. Optionally, the CUDAStream and the Temporal flag can be set for those features.

The expression coefficients can be used to drive the expressions of an avatar.
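One common way to drive an avatar with expression coefficients is a linear blendshape model. Each vertex is the neutral position plus a weighted sum of per-expression offsets. The following sketch shows that generic technique; it is not part of the SDK. Vec3 and applyExpressions() are hypothetical. The avatar's offsets must be ordered to match the SDK's coefficient order, and clamping each coefficient to [0, 1] is an assumption about their range.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Generic linear blendshape model: each avatar vertex is the neutral position
// plus a weighted sum of per-expression offsets.
// deltas[i][v] is the offset of vertex v for expression i.
std::vector<Vec3> applyExpressions(const std::vector<Vec3>& neutral,
                                   const std::vector<std::vector<Vec3>>& deltas,
                                   const std::vector<float>& coeffs) {
    assert(deltas.size() == coeffs.size());
    std::vector<Vec3> out = neutral;
    for (std::size_t i = 0; i < coeffs.size(); ++i) {
        assert(deltas[i].size() == neutral.size());
        // ASSUMPTION: coefficients are treated as weights in [0, 1].
        const float w = std::max(0.0f, std::min(1.0f, coeffs[i]));
        for (std::size_t v = 0; v < out.size(); ++v) {
            out[v].x += w * deltas[i][v].x;
            out[v].y += w * deltas[i][v].y;
            out[v].z += w * deltas[i][v].z;
        }
    }
    return out;
}
```

Mapping each coefficient index to a named shape on a particular avatar is avatar-specific and is outside the scope of this sketch.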

The facial keypoints and/or the face bounding box that were determined internally can be queried from this feature but are not required for the feature to run.

This example uses the Facial Expression Estimation feature to obtain the face expression coefficients directly from the image, without explicitly running Landmark Detection or Face Detection:

//Set input image buffer instead of providing facial keypoints