Sizing Methodology

Before deploying NVIDIA virtual GPU (vGPU) technology, conducting a proof of concept (POC) is highly recommended. This initial phase lets you understand user workflows, assess GPU resource requirements, and gather feedback to tune configuration settings for performance and scalability. The benchmarking examples in subsequent sections of this guide provide reference points for sizing deployments.

User behavior varies significantly and plays a pivotal role in determining the appropriate GPU and vGPU profile sizes. Typically, recommendations are categorized into three user types: light, medium, and heavy, based on their workflow demands and data/model sizes. For instance, heavy users handle advanced graphics and larger datasets, while light and medium users require less intensive graphics and work with smaller models.

The following sections delve into methodologies and considerations for sizing deployments, ensuring alignment with user requirements and performance expectations.

NVIDIA vGPU software enables the partitioning or fractionalization of an NVIDIA data center GPU. These virtual GPU resources are allocated to virtual machines (VMs) via vGPU profiles in the hypervisor management console.

vGPU profiles determine the allocation of GPU frame buffer to VMs, significantly impacting total cost of ownership, scalability, stability, and performance in VDI environments.

Each vGPU profile has a specific frame buffer size, a supported number of display heads, and a maximum resolution. Profiles are grouped into series, each optimized for a different class of workload. A profile is a combination of a vGPU type (such as A, B, or Q) and a vGPU size (the amount of GPU memory in gigabytes). The vGPU types and their optimal workloads are listed in the table below.

| vGPU Type | Optimal Workload |
|---|---|
| Q-profile | Virtual workstations for creative and technical professionals who require the performance and features of Quadro technology |
| B-profile | Virtual desktops for business professionals and knowledge workers |
| A-profile | App streaming or session-based solutions for virtual applications users |

Avoid using 1A, 2A, and 4A vGPU profiles for vApps, as they are not suitable and may lead to misconfigurations.

For more information regarding vGPU types, please refer to the vGPU software user guide.

Choosing the appropriate vGPU profile for deployment is crucial as it dictates the number of vGPU-backed VMs that can be deployed.

Two types of deployment configurations are supported:

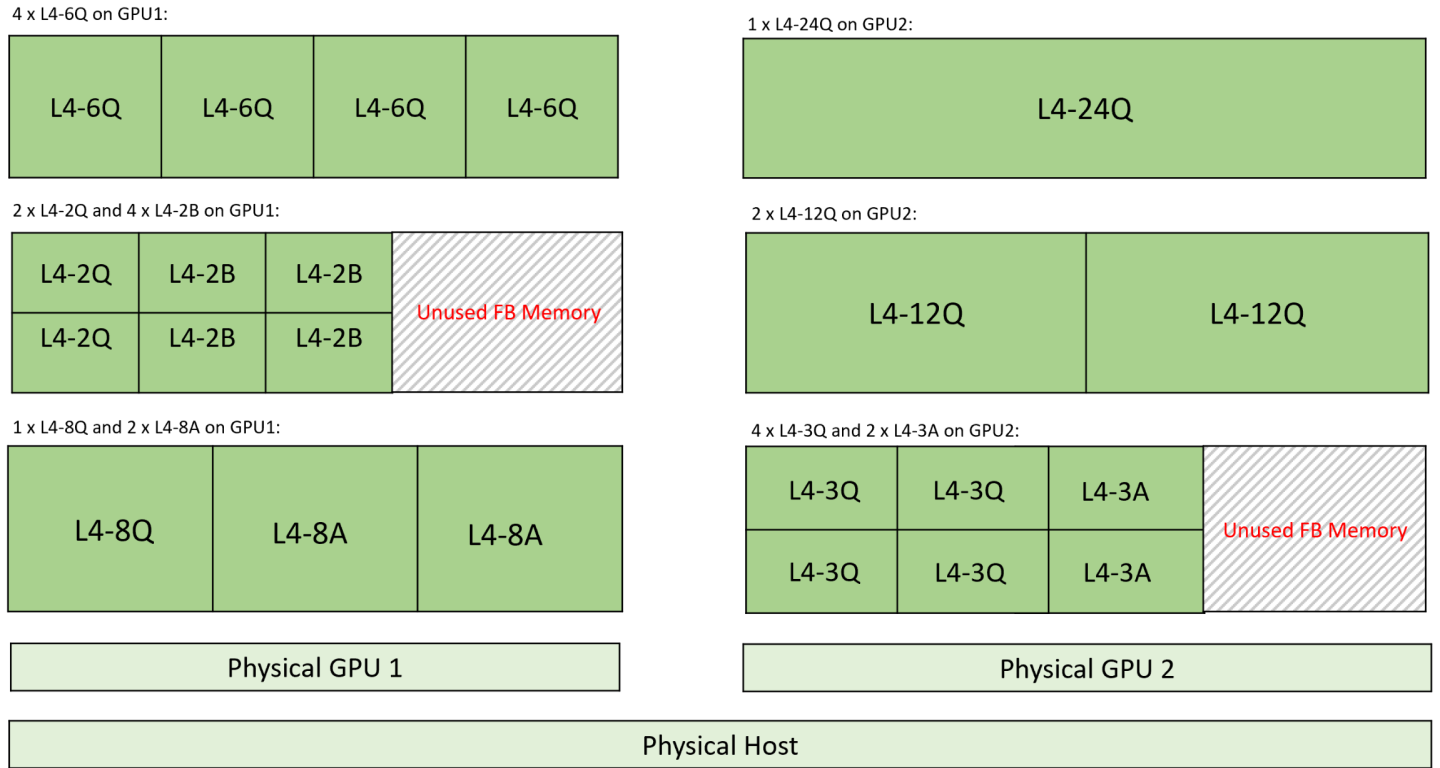

Equal-Size Mode: A configuration where a physical GPU is fractionalized into vGPUs that all have the same amount of frame buffer. All vGPUs hosted on a physical GPU must have the same profile size (same frame buffer size), but they may have different vGPU types (for example, 2Q and 2B can be hosted on the same physical GPU). Figure 7 illustrates some valid configurations for equal-size mode on an L4 GPU.

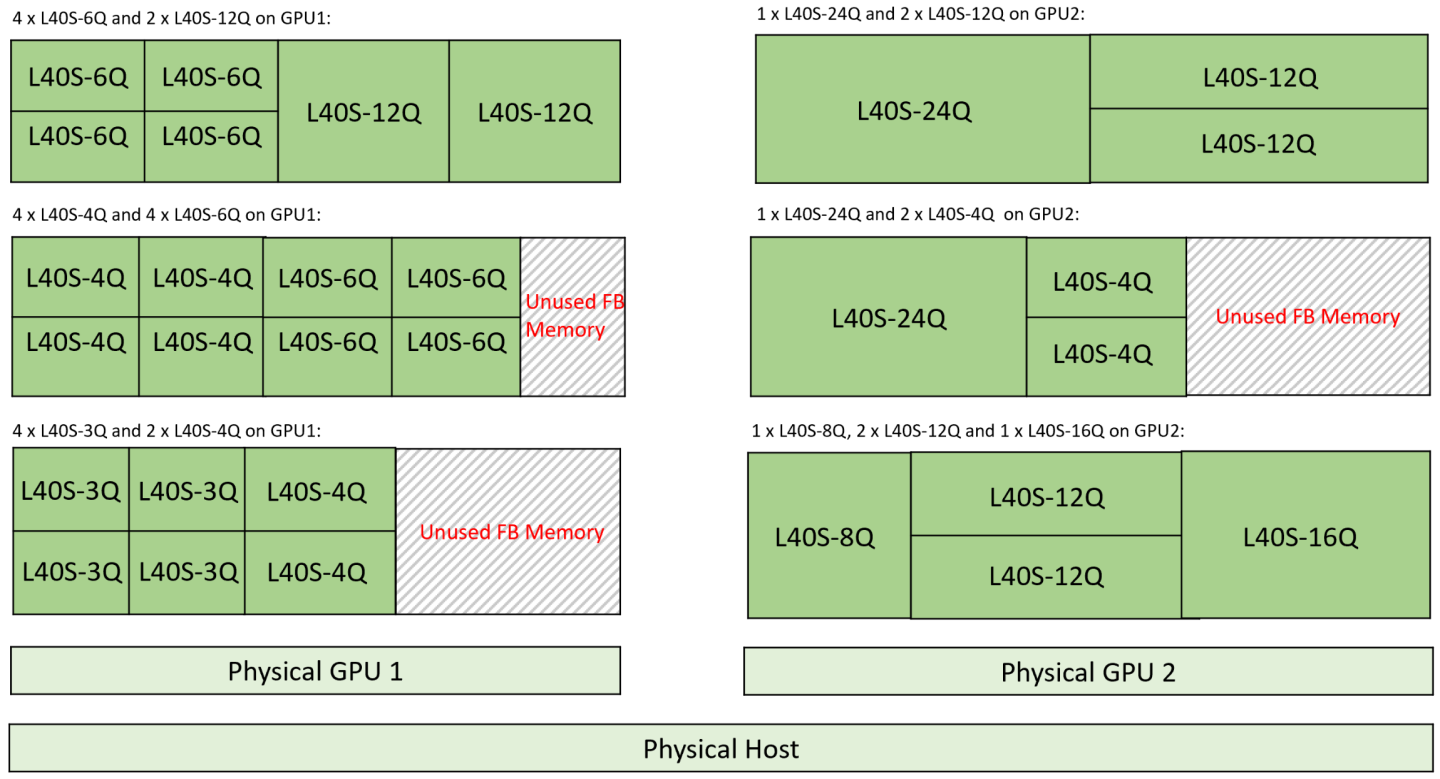

Mixed-Size Mode: A configuration that allows a physical GPU to host vGPUs with different profile sizes (different amounts of frame buffer) simultaneously. This allows more flexible and efficient use of GPU resources, because different VMs can have different GPU requirements. Figure 8 illustrates some valid configurations for mixed-size mode on an L40S GPU. This feature was introduced in vGPU 17.0 and is supported on KVM and vSphere 8U3.

Figure 7 Example Equal-Size Mode Configurations for NVIDIA L4

Figure 8 Example Mixed-Size Mode Configurations for NVIDIA L40S
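The equal-size rule described above (one frame buffer size per physical GPU, any mix of vGPU types) can be sketched as a small check. This is an illustrative sketch only: the helper names `parse_profile` and `is_valid_equal_size` are hypothetical, the profile naming pattern `<GPU>-<size><type>` is assumed from the examples in this guide, and the check ignores any other limits the vGPU software may enforce.

```python
import re

# Assumed naming pattern, taken from examples such as "L4-2Q" and "L40S-8Q":
# "<GPU model>-<frame buffer size in GB><vGPU type letter>".
PROFILE_RE = re.compile(r"^(?P<gpu>[A-Za-z0-9]+)-(?P<size_gb>\d+)(?P<series>[ABQ])$")


def parse_profile(name):
    """Split a profile name into (gpu, size_gb, series)."""
    match = PROFILE_RE.match(name)
    if match is None:
        raise ValueError(f"unrecognized vGPU profile name: {name!r}")
    return match["gpu"], int(match["size_gb"]), match["series"]


def is_valid_equal_size(profiles, gpu_memory_gb):
    """Return True if all profiles share one size and fit in GPU memory."""
    if not profiles:
        return True
    parsed = [parse_profile(p) for p in profiles]
    sizes = {size_gb for _, size_gb, _ in parsed}
    if len(sizes) != 1:
        # Equal-size mode requires a single frame buffer size per physical GPU.
        return False
    total_gb = sum(size_gb for _, size_gb, _ in parsed)
    return total_gb <= gpu_memory_gb
```

For example, assuming a GPU with 24 GB of frame buffer, `is_valid_equal_size(["L4-2Q", "L4-2B"], 24)` accepts the mixed-type pair mentioned above, while `is_valid_equal_size(["L4-8Q", "L4-2B"], 24)` rejects it because the sizes differ.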

Mixed-size mode supports different vGPU types (A, B, and Q series) as well as different vGPU sizes on the same physical GPU. For example, an L4 GPU in mixed-size mode can host both L4-8Q and L4-2B vGPU instances. However, the maximum number of vGPU instances of a given size that can be supported is the closest power-of-2 to the number of instances in equal-size mode.

In the example below, an L40S GPU with 48 GB of GPU memory can support:

- 6 instances of the L40S-8Q profile in equal-size mode
- 4 instances of the L40S-8Q profile in mixed-size mode

| Virtual GPU Type | Frame Buffer (MB) | Maximum vGPUs per GPU in Equal-Size Mode | Maximum vGPUs per GPU in Mixed-Size Mode |
|---|---|---|---|
| L40S-8Q | 8192 | 6 | 4 |

For more information, refer to Valid Time-Sliced Virtual GPU Configurations on a Single GPU.
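The equal-size figure in the table is consistent with dividing the GPU's total frame buffer by the profile's frame buffer. The sketch below reproduces that arithmetic for the L40S-8Q row. It is a first estimate only: the function name `equal_size_estimate` is hypothetical, the division is an assumption that happens to match this row, and the mixed-size limit is copied from the table rather than computed. The referenced documentation remains the authoritative source for both limits.

```python
def equal_size_estimate(gpu_memory_mb, profile_frame_buffer_mb):
    """Estimate how many same-size vGPUs fit by simple integer division."""
    if profile_frame_buffer_mb <= 0:
        raise ValueError("profile frame buffer must be positive")
    return gpu_memory_mb // profile_frame_buffer_mb


# Values from the example above: an L40S with 48 GB and the L40S-8Q profile.
l40s_memory_mb = 48 * 1024
l40s_8q_frame_buffer_mb = 8192

equal_size_count = equal_size_estimate(l40s_memory_mb, l40s_8q_frame_buffer_mb)

# Mixed-size maximum is taken directly from the table, not derived here.
documented_mixed_size_max = {"L40S-8Q": 4}

print("equal-size estimate:", equal_size_count)
print("mixed-size maximum (from table):", documented_mixed_size_max["L40S-8Q"])
```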

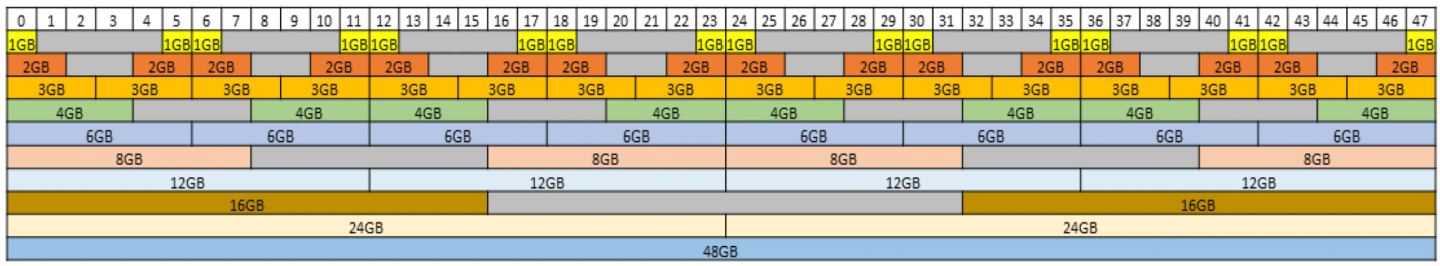

The following diagram shows the supported placements for each size of vGPU on a GPU based on the Ada Lovelace GPU architecture with a total of 48 GB of frame buffer in mixed-size mode:

Figure 9 vGPU Placements for Ada Lovelace GPUs with 48 GB Frame Buffer

For more details, refer to vGPU Placements for GPUs in Mixed-Size Mode.

Multi-session desktops require careful consideration of GPU memory. We suggest selecting a large vGPU profile size based on the results of POC testing. Conducting POCs is crucial for identifying the appropriate vGPU profile size, addressing potential bottlenecks, and ensuring that the deployed solution meets the desired performance criteria.

Many modern server CPUs and hypervisor CPU schedulers support features such as Intel Hyper-Threading or AMD Simultaneous Multithreading, which allow CPU resources to be over-committed (oversubscribed). This capability enables the total number of virtualized CPUs (vCPUs) to exceed the number of physical CPU cores in a server. The oversubscription ratio significantly affects the performance and scalability of your NVIDIA RTX vWS implementation. A 2:1 CPU oversubscription ratio is a recommended starting point, meaning that two vCPUs are allocated for every physical CPU core. Actual ratios should be tailored to specific application requirements and workflows.
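The oversubscription arithmetic can be sketched as follows. The function names and the example host (64 physical cores, 8 vCPUs per VM) are hypothetical values chosen for illustration, not recommendations from this guide. Only the 2:1 starting ratio comes from the text above.

```python
def vcpu_budget(physical_cores, oversubscription_ratio=2.0):
    """Total vCPUs available at a given oversubscription ratio."""
    if physical_cores < 0 or oversubscription_ratio <= 0:
        raise ValueError("cores must be non-negative and ratio positive")
    return int(physical_cores * oversubscription_ratio)


def max_vms_by_cpu(physical_cores, vcpus_per_vm, oversubscription_ratio=2.0):
    """How many VMs the vCPU budget can cover, ignoring GPU and memory limits."""
    if vcpus_per_vm <= 0:
        raise ValueError("vcpus_per_vm must be positive")
    return vcpu_budget(physical_cores, oversubscription_ratio) // vcpus_per_vm


# Hypothetical host: 64 physical cores, VMs sized at 8 vCPUs each.
print(vcpu_budget(64))         # 128 vCPUs at the 2:1 starting ratio
print(max_vms_by_cpu(64, 8))   # 16 VMs by CPU alone
```

The CPU-derived VM count is only one bound. The number of vGPU-backed VMs a host can actually run is also limited by the chosen vGPU profile and by system memory, so the smaller of these limits applies.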

vGPU oversubscription is currently not available.

The Q-profile requires an NVIDIA RTX vWS license.