Overview

The NVIDIA virtual GPU (vGPU) solution provides a flexible way to accelerate workloads in virtualized environments. This solution leverages NVIDIA graphics processing units (GPUs) for virtualization and NVIDIA software to virtualize these GPUs, serving a wide range of business needs and workloads.

Choosing the proper GPU hardware and virtual GPU software enables customers to benefit from innovative features delivered regularly via software updates, eliminating the need for frequent hardware purchases. This flexibility also allows IT departments to architect an optimal solution tailored to specific user needs and workloads.

A common question is, “How do I select the optimal combination of NVIDIA GPUs and virtualization software to best meet the needs of my workloads?” This document helps answer that question by considering factors such as raw performance, performance per dollar (defined in the note at the end of this section), and overall cost-effectiveness.

This guide also offers best practices on how to deploy NVIDIA® RTX™ Virtual Workstation (RTX vWS) software for creative and technical professionals. It addresses key questions:

- Which NVIDIA GPU is best for my business?
- How do I choose the right NVIDIA virtual GPU (vGPU) profile(s) for my users?
- How do I properly size my Virtual Workstation environment?

To determine the NVIDIA virtual GPU solution that best meets your needs, testing with real-world workloads is essential. Successful deployments typically begin with a proof of concept (POC), followed by continuous monitoring so that changes in user behavior or application requirements are caught and addressed. For example, a light graphics user may become a heavy graphics user as tasks evolve or new applications are introduced.

Management and monitoring tools are critical for maintaining an optimized deployment. This document outlines these tools and key resource usage metrics to track during the POC and throughout the lifecycle of your deployment. Additionally, it covers important considerations such as selecting the right NVIDIA vGPU-certified OEM server, understanding supported NVIDIA GPUs, and accounting for power and cooling constraints.
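As a rough illustration of tracking resource usage metrics, the sketch below reads GPU utilization and memory use through the `nvidia-smi` command-line tool. It assumes `nvidia-smi` is available on the system where the script runs. The function names and the choice of fields are hypothetical and are not taken from this guide, and values reported as not available would need extra handling.

```python
import subprocess

# Fields requested from nvidia-smi; this selection is an assumption for illustration.
QUERY_FIELDS = ("utilization.gpu", "memory.used", "memory.total")


def parse_gpu_metrics(csv_text: str) -> list[dict[str, float]]:
    """Parse 'csv,noheader,nounits' output: one line per GPU, one column per field."""
    rows = []
    for line in csv_text.strip().splitlines():
        values = [float(value) for value in line.split(",")]
        rows.append(dict(zip(QUERY_FIELDS, values)))
    return rows


def read_gpu_metrics() -> list[dict[str, float]]:
    """Run nvidia-smi and return the parsed metrics (requires an NVIDIA driver)."""
    command = [
        "nvidia-smi",
        f"--query-gpu={','.join(QUERY_FIELDS)}",
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return parse_gpu_metrics(result.stdout)
```

Sampling `read_gpu_metrics()` at a fixed interval during the POC gives a simple baseline to compare against later in the deployment.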

Table 1 summarizes the NVIDIA vGPU solutions for virtualized workloads.

| Workloads | GPU Virtualization Software | Best Raw Performance GPU | Most Cost-Effective GPU |
|---|---|---|---|
| Knowledge worker VDI | NVIDIA vPC | NVIDIA L4 | NVIDIA A16 |
| Professional graphics | NVIDIA RTX vWS | NVIDIA L40S | NVIDIA L4 |

Cloud service providers (CSPs) offer sizing recommendations for NVIDIA GPUs to optimize performance for professional visualization workloads. These recommendations include details on GPU instance configurations, use cases, and deployment guidelines. Refer to your CSP's documentation for more information.

NVIDIA RTX™ Virtual Workstation software enables powerful virtual workstations from the data center, allowing professionals to work from anywhere on any device with access to familiar tools. Supported by all major public cloud vendors, RTX vWS is the industry standard for virtualized enterprises.

NVIDIA RTX vWS supports virtualized professional visualization applications by providing NVIDIA RTX Enterprise drivers, ISV certifications, NVIDIA CUDA® and OpenCL support, higher-resolution displays, and larger vGPU profile sizes.

NVIDIA RTX vWS software is built on NVIDIA virtual GPU (vGPU) technology and integrates the NVIDIA RTX Enterprise driver, essential for graphics-intensive applications. RTX vWS uses NVIDIA vGPU guest drivers, ensuring high-performance graphics, application compatibility, cost-effectiveness, and scalability. This flexibility allows VMs to be tailored to specific tasks that vary in GPU compute or memory requirements.

NVIDIA RTX technology represents a significant leap forward in computer graphics, revolutionizing applications that simulate the physical world with unprecedented speed. With advancements in AI, ray tracing, and simulation, RTX technology facilitates rapid creation of stunning 3D designs, photorealistic simulations, and breathtaking visual effects. This technology accelerates the rendering of real-time cinematic-quality environments, achieving precise shadows, reflections, and refractions to empower artists and creators to produce high-fidelity content faster than ever before.

NVIDIA RTX Virtual Workstations leverage the advancements of NVIDIA RTX technology. Using NVIDIA RTX vWS grants access to powerful GPUs in a virtualized environment, alongside vGPU software features such as:

- Management and monitoring: Simplify data center management with hypervisor-based tools.
- Live migration: Seamlessly migrate GPU-accelerated VMs without disruption, easing maintenance and upgrades.
- Security: Extend server virtualization benefits to GPU workloads, enhancing data protection.
- Multi-tenancy: Isolate workloads securely and support multiple users simultaneously.

During a proof of concept (POC), considerations include selecting an NVIDIA vGPU-certified OEM server, verifying NVIDIA GPU compatibility, and assessing power and cooling constraints within the data center.

NVIDIA vGPU is a licensed product on all supported GPU boards. A software license is required to enable all vGPU features within the guest VM. The type of license required depends on the vGPU type.

- Q-series vGPU types require a vWS license.
- B-series vGPU types require a vPC license but can also be used with a vWS license.
- A-series vGPU types require a vApps license.

The End User License Agreement (EULA) requires a separate license for each individual user or session. Compliance with this requirement is mandatory to ensure proper usage and adherence to the licensing terms.
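The series-to-license rules above can be sketched as a small lookup. This is a minimal illustration, not an NVIDIA tool: the function name is hypothetical, and it assumes the series letter is the last character of the vGPU type name (for example, a name shaped like `L4-2Q`).

```python
# Mapping taken from the licensing rules in this section.
LICENSES_BY_SERIES = {
    "Q": ("vWS",),
    "B": ("vPC", "vWS"),  # vPC is required; a vWS license is also accepted
    "A": ("vApps",),
}


def accepted_licenses(vgpu_type: str) -> tuple[str, ...]:
    """Return the licenses accepted for a vGPU type name.

    Assumption: the series letter is the final character of the name.
    """
    series = vgpu_type.strip()[-1:].upper()
    if series not in LICENSES_BY_SERIES:
        raise ValueError(f"unrecognized vGPU series in {vgpu_type!r}")
    return LICENSES_BY_SERIES[series]


# Example type names are illustrative.
for name in ("L4-2Q", "A16-2B", "L40S-8A"):
    print(name, "->", " or ".join(accepted_licenses(name)))
```

A check like this only identifies the license type. The per-user or per-session count required by the EULA still has to be tracked separately.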

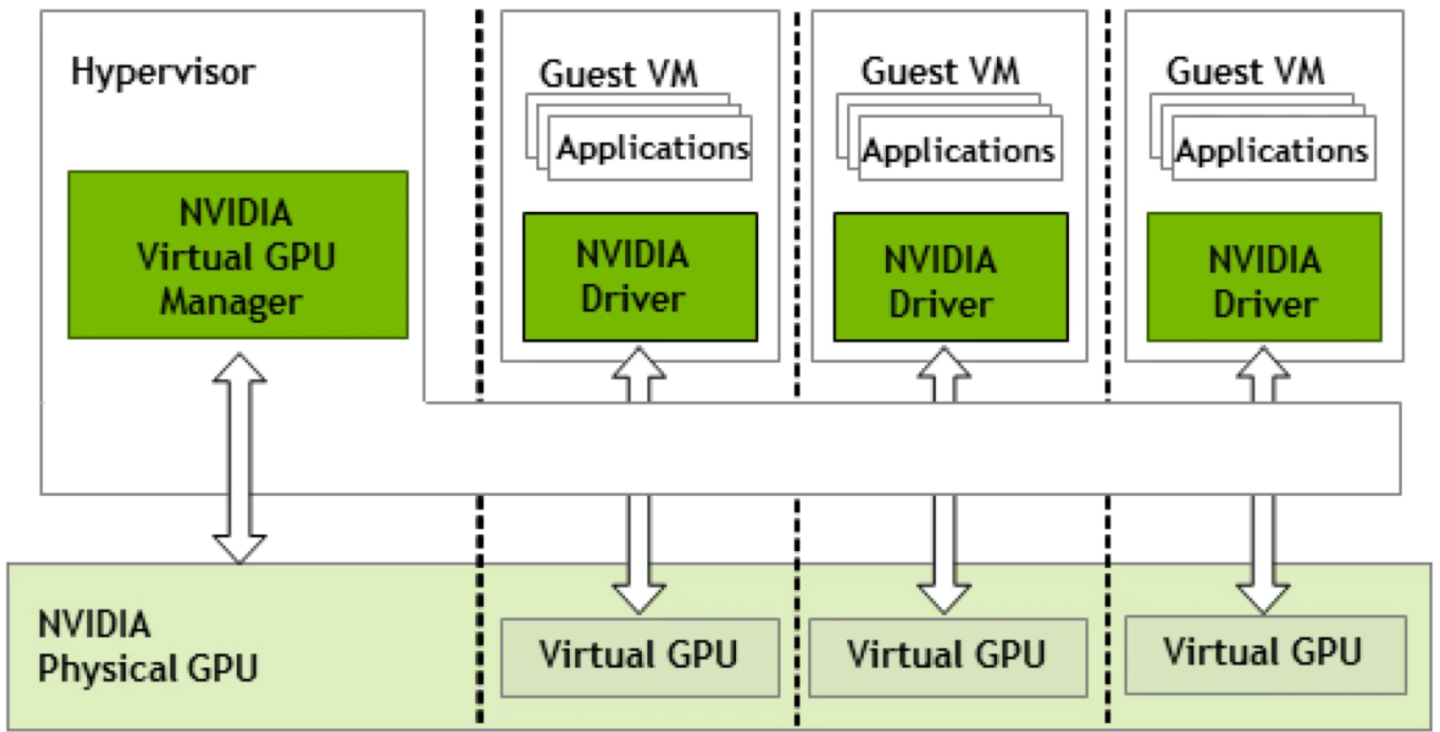

Figure 1 illustrates the high-level architecture of an NVIDIA virtual GPU. NVIDIA GPUs are installed within the server, accompanied by the NVIDIA vGPU manager software installed on the host server. This software facilitates the sharing of a single GPU among multiple VMs. Alternatively, vGPU technology allows a single VM to utilize multiple vGPUs from one or more physical GPUs.

Physical NVIDIA GPUs can support multiple virtual GPUs (vGPUs), which are allocated directly to guest VMs under the control of NVIDIA’s Virtual GPU Manager running in the hypervisor. Guest VMs interact with NVIDIA vGPUs similarly to how they would with a directly passed-through physical GPU managed by the hypervisor.

Figure 1 NVIDIA vGPU System Architecture

In NVIDIA vGPU deployments, the appropriate vGPU license is determined by the vGPU profile assigned to each VM. Each NVIDIA vGPU behaves like a conventional GPU, with a fixed amount of GPU memory and one or more virtual display outputs, or heads. Multiple heads can drive multiple displays. The NVIDIA Virtual GPU Manager in the hypervisor allocates each vGPU's memory from the physical GPU frame buffer when the vGPU is created, and the vGPU retains exclusive use of that memory until it is destroyed.
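Because each vGPU holds a fixed, exclusive slice of frame buffer, memory alone puts an upper bound on how many vGPUs a physical GPU can host. The sketch below computes that bound. It assumes every vGPU on the GPU uses the same profile size, and the function name and sizes are illustrative. The supported maximum for a given GPU and profile is defined by NVIDIA's product documentation and may be lower.

```python
def max_vgpus_by_memory(physical_gpu_memory_gb: int, vgpu_profile_memory_gb: int) -> int:
    """Upper bound on vGPUs per physical GPU, considering frame buffer only."""
    if physical_gpu_memory_gb <= 0 or vgpu_profile_memory_gb <= 0:
        raise ValueError("memory sizes must be positive")
    # Each vGPU keeps exclusive use of its memory, so partial slices do not count.
    return physical_gpu_memory_gb // vgpu_profile_memory_gb


# Illustrative sizes: a 24 GB physical GPU split into different profile sizes.
for profile_gb in (2, 4, 8):
    print(f"{profile_gb} GB profile -> up to {max_vgpus_by_memory(24, profile_gb)} vGPUs")
```

Larger profiles give each user more memory but reduce user density per GPU, which is the trade-off that profile selection has to balance.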

All vGPUs sharing a physical GPU have access to its engines, including the graphics (3D), video decode, and video encode engines. For performance-critical paths, a VM’s guest OS accesses the GPU directly, while non-critical management operations use a paravirtualized interface to the NVIDIA Virtual GPU Manager.

Note: Performance per dollar measures how much performance you get for the cost. It is calculated by dividing a system’s performance by its total cost, including hardware and software, and it helps evaluate the cost-effectiveness of different vGPU solutions.
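As a minimal sketch of this calculation, the snippet below divides a performance score by total cost for two options. The function name, option names, scores, and costs are hypothetical placeholders, not benchmark results or NVIDIA pricing.

```python
def performance_per_dollar(performance: float, hardware_cost: float, software_cost: float) -> float:
    """Return performance divided by total cost (hardware plus software)."""
    total_cost = hardware_cost + software_cost
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return performance / total_cost


# Hypothetical numbers for illustration only: not benchmark results or real prices.
candidates = {
    "option_a": {"performance": 1200.0, "hardware_cost": 8000.0, "software_cost": 2000.0},
    "option_b": {"performance": 900.0, "hardware_cost": 4000.0, "software_cost": 2000.0},
}

scores = {name: performance_per_dollar(**values) for name, values in candidates.items()}
best = max(scores, key=scores.get)
print(best, scores)
```

In this made-up comparison, the option with lower raw performance has the higher performance per dollar, which is why raw performance and cost-effectiveness are worth evaluating separately.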