RDMA over Converged Ethernet (RoCE)

RoCE v2 is currently supported in FreeBSD 11.0 and above.

Remote Direct Memory Access (RDMA) is the remote memory management capability that allows server-to-server data movement directly between application memory without any CPU involvement. RDMA over Converged Ethernet (RoCE) is a mechanism that provides this efficient data transfer with very low latencies on lossless Ethernet networks. With advances in data center convergence over reliable Ethernet, the ConnectX® Ethernet adapter card family with RoCE uses the proven and efficient RDMA transport to provide a platform for deploying RDMA technology in mainstream data center applications at 10GigE, 25GigE, 40GigE, 50GigE, and 100GigE link speeds. With its hardware offload support, the ConnectX® Ethernet adapter card family takes advantage of these efficient RDMA transport (InfiniBand) services over Ethernet to deliver ultra-low latency for performance-critical and transaction-intensive applications such as financial, database, storage, and content delivery networks.

When working with RDMA applications over an Ethernet link layer, the following points should be noted:

The presence of a Subnet Manager (SM) is not required in the fabric. Thus, operations that require communication with the SM are managed in a different way in RoCE. This does not affect the API itself, only the actions, such as joining a multicast group, that need to be taken when using the API.

Since LID is a layer 2 attribute of the InfiniBand protocol stack, it is not set for a port and is displayed as zero when querying the port.

With RoCE, the alternate path is not set for RC QPs. Therefore, Automatic Path Migration (APM, another type of High Availability and part of the InfiniBand protocol) is not supported.

Since the SM is not present, querying a path is impossible. Therefore, the path record structure must be filled with the relevant values before establishing a connection. It is recommended to use RDMA-CM to establish a connection, as it takes care of filling the path record structure.

VLAN-tagged Ethernet frames carry a 3-bit priority field. The value of this field is derived from the IB SL field by taking the 3 least significant bits of the SL field.

RoCE traffic is not shown in the associated Ethernet device's counters since it is offloaded by the hardware and does not go through the Ethernet network driver. RoCE traffic is counted in the same place where InfiniBand traffic is counted:

sysctl sys.class.infiniband.<device>.ports.<port number>.counters
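The VLAN priority derivation noted in the list above (the 3 least significant bits of the SL) can be illustrated with a minimal Python sketch. The function name `sl_to_vlan_priority` is hypothetical and is not part of the driver or any library; the only assumption beyond the text is that the IB SL is a 4-bit value.

```python
def sl_to_vlan_priority(sl: int) -> int:
    """Return the 3-bit VLAN priority derived from an IB Service Level.

    Hypothetical helper for illustration only; the adapter performs
    this mapping in hardware/driver code.
    """
    if not 0 <= sl <= 15:
        raise ValueError("SL is a 4-bit field (0-15)")
    return sl & 0b111  # keep only the 3 least significant bits


if __name__ == "__main__":
    for sl in (0, 5, 8, 13):
        print(f"SL {sl:2d} -> VLAN priority {sl_to_vlan_priority(sl)}")
```

Because only three bits are kept, SL values 8 through 15 share priorities with SL values 0 through 7.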

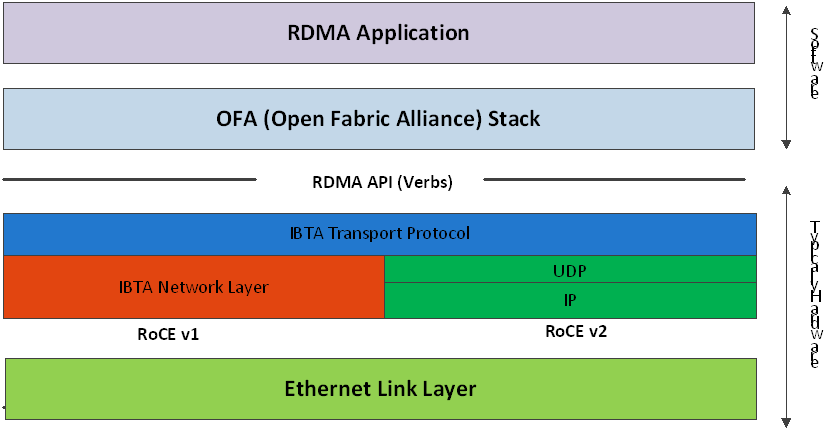

RoCE encapsulates the IB transport in one of the following Ethernet packet formats:

RoCE v1 - dedicated ether type (0x8915)

RoCE v2 - UDP and dedicated UDP port (4791)

RoCE v1

The RoCE v1 protocol is defined as RDMA over an Ethernet header (as shown in the figure above). It uses ethertype 0x8915 and can be used with or without the VLAN tag. The regular Ethernet MTU applies to the RoCE frame.

RoCE v2

A straightforward extension of the RoCE protocol enables traffic to operate in IP layer 3 environments. This capability is obtained via a simple modification of the RoCE packet format. Instead of the GRH used in RoCE, IP-routable RoCE packets carry an IP header, which allows traversal of IP L3 routers, and a UDP header (RoCE v2 only) that serves as a stateless encapsulation layer for the RDMA Transport Protocol Packets over IP.

The proposed RoCE v2 packets use a well-known UDP destination port value that unequivocally distinguishes the datagram. Similar to other protocols that use UDP encapsulation, the UDP source port field is used to carry an opaque flow-identifier that allows network devices to implement packet forwarding optimizations (e.g. ECMP) while staying agnostic to the specifics of the protocol header format.

Furthermore, since this change exclusively affects the packet format on the wire, and because with RDMA semantics packets are generated and consumed below the API, applications can seamlessly operate over any form of RDMA service in a completely transparent way.
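The two encapsulations described above can be told apart from the frame headers alone: ethertype 0x8915 for RoCE v1 (with or without a VLAN tag) and UDP destination port 4791 for RoCE v2. The following Python sketch shows one way to do that on a raw Ethernet frame. It is an illustration under stated assumptions, not the adapter's logic: the function name `classify_roce` is hypothetical, only a single 802.1Q tag is skipped, and IPv6 extension headers are not handled.

```python
import struct

ETHERTYPE_VLAN = 0x8100      # 802.1Q tag
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD
ETHERTYPE_ROCE_V1 = 0x8915   # dedicated RoCE v1 ethertype
ROCE_V2_UDP_PORT = 4791      # well-known RoCE v2 UDP destination port
IPPROTO_UDP = 17


def classify_roce(frame: bytes) -> str:
    """Return "RoCE v1", "RoCE v2", or "other" for a raw Ethernet frame."""
    if len(frame) < 14:
        return "other"
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    offset = 14
    if ethertype == ETHERTYPE_VLAN:  # skip one VLAN tag if present
        if len(frame) < 18:
            return "other"
        (ethertype,) = struct.unpack_from("!H", frame, 16)
        offset = 18

    if ethertype == ETHERTYPE_ROCE_V1:
        return "RoCE v1"

    if ethertype == ETHERTYPE_IPV4 and len(frame) >= offset + 20:
        ihl = (frame[offset] & 0x0F) * 4  # IPv4 header length in bytes
        if ihl < 20:
            return "other"
        proto = frame[offset + 9]
        udp = offset + ihl
    elif ethertype == ETHERTYPE_IPV6 and len(frame) >= offset + 40:
        proto = frame[offset + 6]         # Next Header field
        udp = offset + 40
    else:
        return "other"

    if proto == IPPROTO_UDP and len(frame) >= udp + 4:
        (dst_port,) = struct.unpack_from("!H", frame, udp + 2)
        if dst_port == ROCE_V2_UDP_PORT:
            return "RoCE v2"
    return "other"
```

Note that the UDP source port is deliberately ignored here, since the text describes it as an opaque flow identifier rather than a protocol discriminator.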

RoCE Modes Parameters

The ConnectX-4 and later adapter card families support both RoCE v1 and RoCE v2. By default, the driver associates all GID indexes with both RoCE v1 and RoCE v2, so there is a single entry for each RoCE version.

GID table entries are created whenever an IP address is configured on one of the Ethernet devices of the NIC's ports. Each entry in the GID table for RoCE ports has the following fields:

GID value

GID type

Network device

For each address, the GID table is occupied with two GID entries, both with the same GID value but with different types. The network device in an entry is the Ethernet device with the IP address that the GID is associated with. The GID format can be of two types, IPv4 and IPv6. An IPv4 GID is an IPv4-mapped IPv6 address, while an IPv6 GID is the IPv6 address itself. For RoCE v2, the layer 3 header of packets associated with IPv4 GIDs is IPv4. It is IPv6/GRH for packets associated with IPv6 GIDs, and for packets associated with IPv4 GIDs when RoCE v1 is used.
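The IPv4-mapped form described above can be reproduced with Python's standard `ipaddress` module. This is a minimal sketch for checking GID values by hand; the helper names `ipv4_to_gid` and `gid_ipv4` are hypothetical and do not correspond to any driver interface.

```python
import ipaddress


def ipv4_to_gid(ipv4: str) -> str:
    """Return the IPv4-mapped IPv6 GID (::ffff:a.b.c.d) in fully expanded form."""
    v4 = ipaddress.IPv4Address(ipv4)
    mapped = ipaddress.IPv6Address((0xFFFF << 32) | int(v4))
    return mapped.exploded


def gid_ipv4(gid: str):
    """Return the IPv4 address embedded in a GID, or None for a native IPv6 GID."""
    mapped = ipaddress.IPv6Address(gid).ipv4_mapped
    return str(mapped) if mapped is not None else None


if __name__ == "__main__":
    print(ipv4_to_gid("192.168.1.70"))
    print(gid_ipv4("0000:0000:0000:0000:0000:ffff:c0a8:0146"))
    print(gid_ipv4("1234:0000:0000:0000:0000:0000:0000:0070"))
```

The addresses used here are the ones that appear in the GID table example later in this section.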

The number of entries in the GID table is equal to N(K+1) (see the relevant notes below), where N is the number of IP addresses that are assigned to all network devices associated with the port, including VLAN devices and alias devices, but excluding bonding masters, since RoCE LAG is not supported in FreeBSD. Link-local IPv6 addresses are excluded from this count since the GIDs for them (the default GIDs) are always set in advance at the beginning of each table. K is the number of supported RoCE types. Since the number of entries in the hardware is limited to 128 for each port, it is important to understand the limitations on N. MLNX_OFED provides a script called show_gids to view the GID table conveniently.

When the mode of the device is RoCE v1/RoCE v2, each entry in the GID table occupies 2 entries in the hardware. In other modes, each entry in the GID table occupies a single entry in the hardware.

In a multifunction configuration, the PF gets 16 entries in the hardware while each VF gets 112/F entries, where F is the number of virtual functions on the port. If 112/F is not an integer, some functions will have one fewer entry than others. Note that when F is larger than 56, some VFs will get only one entry in the GID table.
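The VF split described above can be worked through with a short Python sketch. It assumes a plain integer division of the 112 VF entries with the remainder handed out one entry at a time; which particular VFs receive the extra entry is an assumption of this sketch and is not stated in the text. The function name `vf_gid_entries` is hypothetical.

```python
PF_ENTRIES = 16   # hardware GID entries reserved for the PF (from the text)
VF_POOL = 112     # hardware GID entries shared by all VFs on the port


def vf_gid_entries(num_vfs: int) -> list[int]:
    """Return the number of hardware GID entries each VF would get.

    Assumption: the remainder of 112/F is spread over the first VFs,
    so some VFs end up with one entry fewer than others.
    """
    if num_vfs < 1:
        raise ValueError("at least one VF is required")
    base, extra = divmod(VF_POOL, num_vfs)
    return [base + 1] * extra + [base] * (num_vfs - extra)


if __name__ == "__main__":
    for f in (4, 3, 57):
        shares = vf_gid_entries(f)
        print(f"F={f}: max {max(shares)}, min {min(shares)} entries per VF")
```

For F=57 the sketch yields a minimum of one entry per VF, which matches the note that some VFs get only one entry once F exceeds 56.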

GID Table in sysctl Tree

The GID table is exposed to user space via the sysctl tree.

GID values can be read from:

sysctl sys.class.infiniband.{device}.ports.{port}.gids.{index}

GID type can be read from:

sysctl sys.class.infiniband.{device}.ports.{port}.gid_attrs.types.{index}

GID net_device can be read from:

sysctl sys.class.infiniband.{device}.ports.{port}.gid_attrs.ndevs.{index}
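When scripting against these nodes, it can help to build the three sysctl names for a given GID table entry in one place. The following Python sketch only assembles the names from the patterns listed above; it does not invoke sysctl, and the helper name `gid_sysctl_oids` is hypothetical.

```python
def gid_sysctl_oids(device: str, port: int, index: int) -> dict[str, str]:
    """Return the sysctl names for the value, type, and net device of one GID entry."""
    base = f"sys.class.infiniband.{device}.ports.{port}"
    return {
        "gid": f"{base}.gids.{index}",
        "type": f"{base}.gid_attrs.types.{index}",
        "ndev": f"{base}.gid_attrs.ndevs.{index}",
    }


if __name__ == "__main__":
    # Print ready-to-run commands for GID index 2 on port 1 of mlx5_0.
    for name in gid_sysctl_oids("mlx5_0", 1, 2).values():
        print(f"sysctl {name}")
```

On a FreeBSD host with the driver loaded, the printed commands could then be run as-is or passed to a subprocess call.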

GID Table Example

The following is an example of the GID table.

| DEV | PORT | INDEX | GID | IPv4 | VER | NETDEV |
|---|---|---|---|---|---|---|
| mlx5_0 | 1 | 0 | fe80:0000:0000:0000:0202:c9ff:feb6:7c70 | | V2 | mce1 |
| mlx5_0 | 1 | 1 | fe80:0000:0000:0000:0202:c9ff:feb6:7c70 | | V1 | mce1 |
| mlx5_0 | 1 | 2 | 0000:0000:0000:0000:0000:ffff:c0a8:0146 | 192.168.1.70 | V2 | mce1 |
| mlx5_0 | 1 | 3 | 0000:0000:0000:0000:0000:ffff:c0a8:0146 | 192.168.1.70 | V1 | mce1 |
| mlx5_0 | 1 | 4 | 0000:0000:0000:0000:0000:ffff:c1a8:0146 | 193.168.1.70 | V2 | mce1.100 |
| mlx5_0 | 1 | 5 | 0000:0000:0000:0000:0000:ffff:c1a8:0146 | 193.168.1.70 | V1 | mce1.100 |
| mlx5_0 | 1 | 6 | 1234:0000:0000:0000:0000:0000:0000:0070 | | V2 | mce1 |
| mlx5_0 | 1 | 7 | 1234:0000:0000:0000:0000:0000:0000:0070 | | V1 | mce1 |
| mlx5_0 | 2 | 0 | fe80:0000:0000:0000:0202:c9ff:feb6:7c71 | | V2 | mce1 |
| mlx5_0 | 2 | 1 | fe80:0000:0000:0000:0202:c9ff:feb6:7c71 | | V1 | mce1 |

where:

Entries on port 1 index 0/1 are the default GIDs, one for each supported RoCE type.

Entries on port 1 index 2/3 belong to IP address 192.168.1.70 on mce1.

Entries on port 1 index 4/5 belong to IP address 193.168.1.70 on mce1.100. Packets from a QP that is associated with these GID indexes will have a VLAN header (VID=100).

Entries on port 1 index 6/7 are IPv6 GIDs. Packets from a QP that is associated with these GID indexes will have an IPv6 header.

RoCE packets can be sniffed with the tcpdump tool by running tcpdump -i <RDMA device>.

Example:

# tcpdump -i mlx5_0

Perftest is a collection of tests for RDMA micro-benchmarking that can be installed via ports: benchmarks/perftest.