GPUDirect RDMA

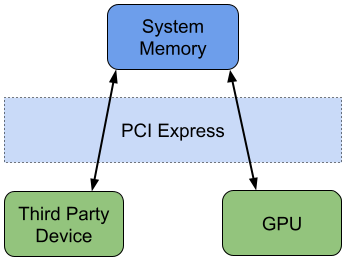

Copying data between a third-party PCIe device and a GPU traditionally requires two DMA operations: first the data is copied from the PCIe device to system memory, and then it is copied from system memory to the GPU.

Fig. 30 Data Transfer Between PCIe Device and GPU Without GPUDirect RDMA

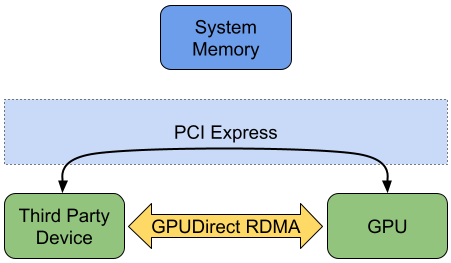

For data that will be processed exclusively by the GPU, the intermediate copy in system memory serves no other purpose and wastes both time and system resources. GPUDirect RDMA optimizes this use case by enabling third-party PCIe devices to DMA directly to or from GPU memory, removing the need to copy the data through system memory first.

Fig. 31 Data Transfer Between PCIe Device and GPU With GPUDirect RDMA
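The difference between the two data paths can be illustrated with a small toy model. The sketch below is not part of the SDK and calls no GPUDirect or CUDA API. The names `Hop`, `transfer_path`, and `system_memory_traffic` are hypothetical, as is the frame size used at the end. It only counts DMA operations and the bytes that pass through system memory, assuming each DMA moves the full payload once.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One DMA operation from a source to a destination (hypothetical model)."""
    src: str
    dst: str


def transfer_path(use_gpudirect_rdma: bool) -> list[Hop]:
    """Return the DMA operations needed to move data from the device to the GPU."""
    if use_gpudirect_rdma:
        # The PCIe device writes directly into GPU memory.
        return [Hop("pcie_device", "gpu_memory")]
    # Traditional path: stage the data in system memory first.
    return [
        Hop("pcie_device", "system_memory"),
        Hop("system_memory", "gpu_memory"),
    ]


def system_memory_traffic(path: list[Hop], payload_bytes: int) -> int:
    """Bytes written to plus bytes read from system memory for one payload."""
    touches = sum(
        1
        for hop in path
        for endpoint in (hop.src, hop.dst)
        if endpoint == "system_memory"
    )
    return touches * payload_bytes


if __name__ == "__main__":
    # Assumed payload: one 1920x1080 frame at 4 bytes per pixel.
    frame_bytes = 1920 * 1080 * 4
    for rdma in (False, True):
        path = transfer_path(rdma)
        traffic = system_memory_traffic(path, frame_bytes)
        print(f"GPUDirect RDMA={rdma}: {len(path)} DMA op(s), "
              f"{traffic} bytes through system memory")
```

In this model the staged path writes the payload to system memory once and reads it back once, while the direct path never touches system memory. Real-world gains depend on the PCIe topology and the devices involved, which this sketch does not attempt to capture.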

NVIDIA takes advantage of RDMA in many of its SDKs, including Rivermax for GPUDirect support with ConnectX network adapters, and GPUDirect Storage for transfers between a GPU and a storage device. NVIDIA is also committed to helping hardware vendors enable RDMA within their own drivers; one example is provided by AJA Video Systems as part of a partnership with NVIDIA for the Clara Holoscan SDK. The AJASource extension is an example of how the SDK can leverage RDMA.

For more information about GPUDirect RDMA, see the following:

Minimal GPUDirect RDMA Demonstration source code, which provides a real hardware example of using RDMA and includes both kernel drivers and userspace applications for the RHS Research PicoEVB and HiTech Global HTG-K800 FPGA boards.