DOCA DPA


This chapter provides an overview and configuration instructions for the DOCA DPA API.

The DOCA DPA libraries offer a programming model for offloading communication-centric user code to run on the DPA processor of the NVIDIA® BlueField®-3 networking platform. DOCA DPA provides a high-level programming interface to the DPA processor.

DOCA DPA offers:

Full control over DPA threads –

The user can control the thread function (kernel) that runs on DPA and its placement on DPA EUs

The user can associate a DPA thread with a DPA Completion Context. When the completion context receives a notification, the DPA thread is scheduled.

Abstraction to allow a DPA thread to issue asynchronous operations

Abstraction for a blocking, one-time call from the host application that executes a kernel on the DPA (RPC)

Abstraction for memory services

Abstraction for remote communication primitives (integrated with remote event signaling)

Full control over execution ordering and notification/synchronization between the DPA and the host/Target BlueField

A set of debugging APIs that allow diagnosing and troubleshooting any issue on the device, as well as accessing real-time information from the running application

C API for application developers

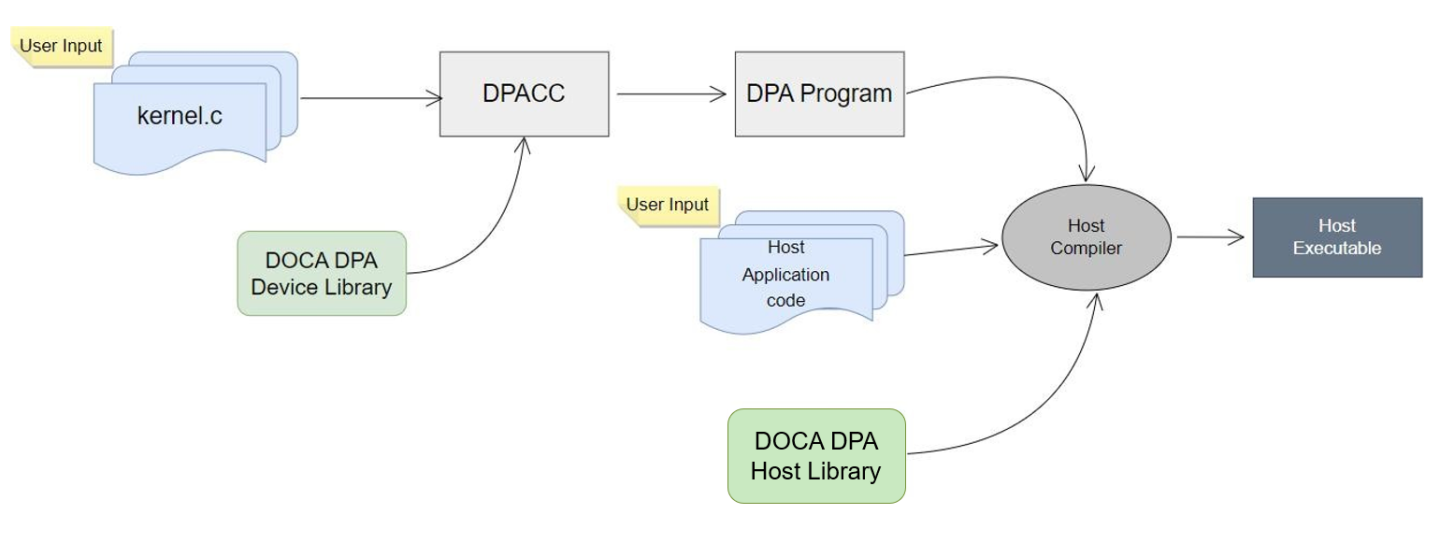

DPACC is used to compile and link kernels with DOCA DPA device libraries to get DPA applications that can be loaded from the host program to execute on the DPA (similar to CUDA usage with NVCC). For more information on DPACC, refer to the DOCA DPACC Compiler.

DOCA DPA applications can run either on the host or on the Target BlueField. Running on the host machine requires EU pre-configuration using the dpaeumgmt tool.

For more information, please refer to NVIDIA DOCA DPA EU Management Tool.

Changes in 2.9.0

The following subsection(s) detail the doca_dpa library updates in version 2.9.0.

Added

doca_dpa_dev.h:

Added enum doca_dpa_dev_submit_flag, to be used in the new parameter uint32_t flags in the DPA RDMA device and DPA memcpy APIs

Removed

doca_dpa_dev_rdma.h:

void doca_dpa_dev_rdma_synchronize(doca_dpa_dev_rdma_t rdma);

void doca_dpa_dev_rdma_srq_post_receive(doca_dpa_dev_rdma_srq_t rdma_srq, uint32_t next_index);

void doca_dpa_dev_rdma_srq_receive_ack(doca_dpa_dev_rdma_srq_t rdma_srq, uint32_t num_acked);

Changed

doca_dpa_dev_rdma.h:

Added uint32_t connection_id parameter to all DPA RDMA device APIs

Changed uint32_t completion_requested parameter to uint32_t flags in all DPA RDMA APIs

doca_dpa_dev_buf.h:

Changed uint32_t completion_requested parameter to uint32_t flags in all DPA memcpy APIs

DOCA enables developers to program the DPA processor using both DOCA DPA libraries and a suite of other tools (mainly DPACC).

The following are the main steps to start DPA offload programming:

Write DPA device code, or kernels (.c files).

Use DPACC to build a DPA program (i.e., a host library which contains an embedded device executable). Inputs for DPACC are:

Kernels from the previous step

DOCA DPA device libraries

Build host executable using a host compiler. Inputs for the host compiler are:

DPA program from the previous step

User host application source files

DOCA DPA host library

DPACC is provided by the DOCA SDK installation. For more information, please refer to the DOCA DPACC Compiler.

DPA Queries

Before invoking the DPA API, make sure that DPA is indeed supported on the relevant device. The API which checks whether a device supports DPA is:

doca_error_t doca_devinfo_get_is_dpa_supported(const struct doca_devinfo *devinfo)

Info: Only if this call returns DOCA_SUCCESS can the user invoke the DOCA DPA API on the device.

To use a valid EU ID for the DPA EU affinity of a DPA thread, use the following APIs to query the valid EU ID and core values:

doca_error_t doca_dpa_get_core_num(struct doca_dpa *dpa, unsigned int *num_cores)

doca_error_t doca_dpa_get_num_eus_per_core(struct doca_dpa *dpa, unsigned int *eus_per_core)

doca_error_t doca_dpa_get_total_num_eus_available(struct doca_dpa *dpa, unsigned int *total_num_eus)

There is a limit on the maximum number of DPA threads that can run a single kernel. This can be retrieved by calling the host API:

doca_error_t doca_dpa_get_max_threads_per_kernel(struct doca_dpa *dpa, unsigned int *value)

Each kernel launched into the DPA has a maximum runtime limit. This can be retrieved by calling the host API:

doca_error_t doca_dpa_get_kernel_max_run_time(struct doca_dpa *dpa, unsigned long long *value)

Note: If the kernel execution time on the DPA exceeds this maximum runtime limit, the kernel may be terminated and cause a fatal error. To recover, the application must destroy the DPA context and create a new one.

Initialization

The DPA context encapsulates the DPA device and a DPA process (program). Within this context, the application creates various DPA SDK objects and controls them. After verifying DPA is supported for the chosen device, the DPA context is created.

Use the following host-side APIs to create and configure the DPA context; this is expected to be the first programming step:

To create/destroy DPA context:

doca_error_t doca_dpa_create(struct doca_dev *dev, struct doca_dpa **dpa)

doca_error_t doca_dpa_destroy(struct doca_dpa *dpa)

Note: The dev used to create the "base" DPA context must be a PF DOCA device.

To start/stop the DPA context:

doca_error_t doca_dpa_start(struct doca_dpa *dpa)

doca_error_t doca_dpa_stop(struct doca_dpa *dpa)

Interface to DPACC

DPA Application

To associate a DPA program (app) with a DPA context, use the following host-side APIs:

doca_error_t doca_dpa_set_app(struct doca_dpa *dpa, struct doca_dpa_app *app)

doca_error_t doca_dpa_get_app(struct doca_dpa *dpa, struct doca_dpa_app **app)

doca_error_t doca_dpa_app_get_name(struct doca_dpa_app *app, char *app_name, size_t *app_name_len)

The app variable name used in the doca_dpa_set_app() API must be the token passed to the DPACC --app-name parameter.

Example (Pseudo Code)

For example, when using the following dpacc command line:

dpacc \
kernels.c \
-o dpa_program.a \
-hostcc=gcc \
-hostcc-options="..." \
--devicecc-options="..." \
-device-libs="-L/opt/mellanox/doca/include -ldoca_dpa_dev -ldoca_dpa_dev_comm" \
--app-name="dpa_example_app"

The user must use the following calls to set the app of a DPA context:

extern struct doca_dpa_app *dpa_example_app;
doca_dpa_create(dev, &dpa); // dev must be a PF DOCA device
doca_dpa_set_app(dpa, dpa_example_app);
doca_dpa_start(dpa);

Overview of DOCA DPA Software Objects

| Term | Definition |
| --- | --- |
| DPA context | Software construct for the host process that encapsulates the state associated with a DPA process (on a specific device). A DPA context must be associated with a PF device. |
| Extended DPA context | A DPA context associated with a VF/SF device, used for RDMA utilities. It allows DPA resources such as RDMA, DPA completion, and DPA Async Ops contexts to be created on the VF/SF device. |
| DPA Application | Interface with the DPACC compiler that produces a DPA program (app), which the DPA context obtains to begin working on the DPA. |
| Kernel | User function (and its arguments) to be executed on the DPA. A kernel may be executed by one or more DPA threads. |
| DPA EU Affinity | An object used to control which EU a DPA thread uses. |
| DPA Thread | DOCA DPA provides APIs to create and manage a DPA thread, which runs a given kernel. |
| DPA Completion Context | An object used to receive and handle completion notifications. The user can associate a DPA thread with a completion context. When the completion context receives a notification, the DPA thread is scheduled. |
| DPA Thread Notification | A mechanism for one DPA thread to notify another DPA thread. |
| DPA Async Ops | An object that allows a DPA thread to issue asynchronous operations, such as memcpy or post_wait operations. |
| DPA RPC | A blocking one-time call from the host application to execute a kernel on the DPA. RPC is mainly used for the control path. The RPC's return value is reported back to the host application. |
| DPA Memory | DOCA DPA provides an API to allocate and manage DPA memory, as well as to handle host/Target BlueField memory that has been exported to the DPA. |
| Sync Event | Data structure in CPU, Target BlueField, GPU, or DPA heap memory. An event contains a counter that can be updated and waited on. |
| RDMA | Abstraction around a network transport object. Allows executing various RDMA operations. |
| DPA Hash Table | DOCA DPA provides an API to create a hash table on the DPA. This data structure is managed on the DPA using the relevant device APIs. |
| DPA Logger/Tracer | DOCA DPA provides a set of debugging APIs that allow the user to diagnose and troubleshoot any issue on the device, as well as to access real-time information from the running application. |

Deployment View

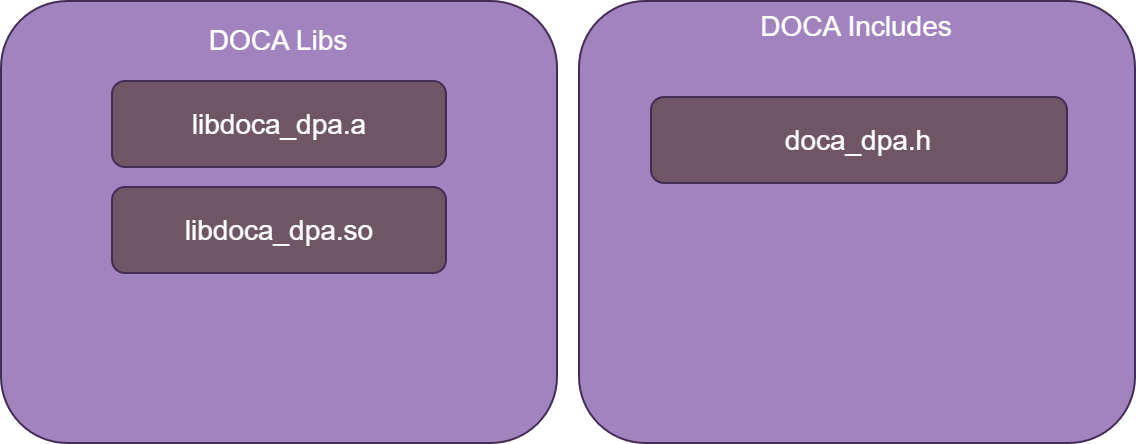

DOCA DPA comprises two main components which are part of the DOCA SDK installation package:

The DOCA DPA SDK does not use any multi-thread synchronization primitives. All DOCA DPA objects are non-thread-safe. Developers should make sure the user program and kernels are written to avoid race conditions.

DOCA DPA Host Library

Host library and header files:

The host library offers an interface for managing the following components:

Affinity

Users can control which EU is assigned to a DPA thread by using a DPA EU affinity object.

A DPA EU affinity object can be configured to target a single EU ID at a time.

Use the following host-side APIs to manage it:

To create/destroy DPA EU affinity object:

doca_error_t doca_dpa_eu_affinity_create(struct doca_dpa *dpa, struct doca_dpa_eu_affinity **affinity)

doca_error_t doca_dpa_eu_affinity_destroy(struct doca_dpa_eu_affinity *affinity)

To set/clear EU ID in DPA EU affinity object:

doca_error_t doca_dpa_eu_affinity_set(struct doca_dpa_eu_affinity *affinity, unsigned int eu_id)

doca_error_t doca_dpa_eu_affinity_clear(struct doca_dpa_eu_affinity *affinity)

To get the EU ID of a DPA EU affinity object:

doca_error_t doca_dpa_eu_affinity_get(struct doca_dpa_eu_affinity *affinity, unsigned int *eu_id)
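Because a DPA EU affinity object targets a single EU ID at a time, spreading several threads over several EUs means choosing one EU ID per thread (for example, with one affinity object per thread). The following is a minimal plain-C sketch of a hypothetical round-robin policy; `assign_eus_round_robin` is not a DOCA API, and the list of usable EU IDs is assumed to come from the queries above or from the EUs configured with the dpaeumgmt tool.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical policy: thread i gets eu_ids[i % num_eus]. Each resulting ID
 * would then be passed to doca_dpa_eu_affinity_set() on the affinity object
 * attached to that thread.
 */
static void assign_eus_round_robin(const unsigned int *eu_ids, size_t num_eus,
                                   unsigned int *out, size_t num_threads)
{
    assert(eu_ids != NULL && num_eus > 0);
    for (size_t i = 0; i < num_threads; i++)
        out[i] = eu_ids[i % num_eus];
}
```

When there are more threads than EUs, several threads share an EU; whether that is acceptable depends on the workload.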

DPA Thread

A DOCA DPA thread is used to run a user function (a “DPA kernel”) on the DPA.

The user can control which EU runs the DPA kernel by attaching a DPA EU affinity object to the thread.

The thread can be triggered on DPA using two methods:

DPA Thread Notification - Notifying one DPA thread from another DPA thread.

DPA Completion Context - A completion arrives at a DPA completion context that is attached to the thread.

DPA thread operations:

To create/destroy DPA thread:

doca_error_t doca_dpa_thread_create(struct doca_dpa *dpa, struct doca_dpa_thread **dpa_thread)

doca_error_t doca_dpa_thread_destroy(struct doca_dpa_thread *dpa_thread)

To set/get thread user function and its argument:

doca_error_t doca_dpa_thread_set_func_arg(struct doca_dpa_thread *thread, doca_dpa_func_t *func, uint64_t arg)

doca_error_t doca_dpa_thread_get_func_arg(struct doca_dpa_thread *dpa_thread, doca_dpa_func_t **func, uint64_t *arg)

To set/get DPA EU Affinity:

doca_error_t doca_dpa_thread_set_affinity(struct doca_dpa_thread *thread, struct doca_dpa_eu_affinity *eu_affinity)

doca_error_t doca_dpa_thread_get_affinity(struct doca_dpa_thread *dpa_thread, const struct doca_dpa_eu_affinity **affinity)

Thread Local Storage (TLS)

The host-side application can store an opaque value for a DPA thread using the following APIs:

doca_error_t doca_dpa_thread_set_local_storage(struct doca_dpa_thread *dpa_thread, doca_dpa_dev_uintptr_t dev_ptr)

doca_error_t doca_dpa_thread_get_local_storage(struct doca_dpa_thread *dpa_thread, doca_dpa_dev_uintptr_t *dev_ptr)

dev_ptr is pre-allocated DPA memory. In the kernel, the user can retrieve the stored opaque value using the relevant device API (see the device API below).

This opaque is stored/retrieved using the Thread Local Storage (TLS) mechanism.

To start/stop DPA thread:

doca_error_t doca_dpa_thread_start(struct doca_dpa_thread *thread)

doca_error_t doca_dpa_thread_stop(struct doca_dpa_thread *dpa_thread)

To run DPA thread:

doca_error_t doca_dpa_thread_run(struct doca_dpa_thread *dpa_thread)

This API sets the thread to the run state.

This function must be called only after the DPA thread has been:

Created, set, and started.

If the DPA thread is attached to a DPA Completion Context, the completion context must also be started before this call.

Example (host-side pseudo code):

extern doca_dpa_func_t hello_kernel;

// create DPA thread
doca_dpa_thread_create(dpa, &dpa_thread);
// set thread kernel
doca_dpa_thread_set_func_arg(dpa_thread, &hello_kernel, func_arg);
// set thread affinity
doca_dpa_eu_affinity_create(dpa, &eu_affinity);
doca_dpa_eu_affinity_set(eu_affinity, 10 /* EU ID */);
doca_dpa_thread_set_affinity(dpa_thread, eu_affinity);
// set thread local storage (tls_size is the size of the per-thread data)
doca_dpa_mem_alloc(dpa, tls_size, &tls_dev_ptr);
doca_dpa_thread_set_local_storage(dpa_thread, tls_dev_ptr);
// start thread
doca_dpa_thread_start(dpa_thread);
// create and initialize DPA Completion Context
doca_dpa_completion_create(dpa, queue_size, &dpa_comp);
doca_dpa_completion_set_thread(dpa_comp, dpa_thread);
doca_dpa_completion_start(dpa_comp);
// run thread only after both the thread and the attached completion context are started
doca_dpa_thread_run(dpa_thread);

Completion Context

DOCA DPA provides the DPA Completion Context to tie the user application closely to the DPA's native model of event-driven scheduling and computation.

The user associates a DPA thread with a completion context. When the completion context receives a notification, the DPA thread is triggered.

Alternatively, the user can choose not to associate the completion context with a DPA thread and poll it manually instead.

The user has the option to continue receiving new notifications or to ignore them.

DOCA DPA provides a generic completion context that can be shared by Message Queues, RDMA, Ethernet, and DPA Async Ops.

To create/destroy DPA Completion Context:

doca_error_t doca_dpa_completion_create(struct doca_dpa *dpa, unsigned int queue_size, struct doca_dpa_completion **dpa_comp)

doca_error_t doca_dpa_completion_destroy(struct doca_dpa_completion *dpa_comp)

To get the queue size:

doca_error_t doca_dpa_completion_get_queue_size(struct doca_dpa_completion *dpa_comp, unsigned int *size)

To attach to a DPA thread:

doca_error_t doca_dpa_completion_set_thread(struct doca_dpa_completion *dpa_comp, struct doca_dpa_thread *thread)

doca_error_t doca_dpa_completion_get_thread(struct doca_dpa_completion *dpa_comp, struct doca_dpa_thread **thread)

Attaching to a thread is required only if the user wants the thread to be triggered when a completion arrives at the completion context.

To start/stop DPA Completion Context:

doca_error_t doca_dpa_completion_start(struct doca_dpa_completion *dpa_comp)

doca_error_t doca_dpa_completion_stop(struct doca_dpa_completion *dpa_comp)

To get DPA handle:

doca_error_t doca_dpa_completion_get_dpa_handle(struct doca_dpa_completion *dpa_comp, doca_dpa_dev_completion_t *handle)

Use the output parameter handle with the device APIs below, which can be used in the thread kernel.

Thread Notification

Thread Activation is a mechanism for one DPA thread to trigger another DPA Thread.

Thread activation is done without receiving a completion on the attached thread. Therefore, a user of this activation method is expected to pass the message by other means, such as shared memory.

Thread activation can be achieved using a DPA Notification Completion object.

To create/destroy DPA Notification Completion:

doca_error_t doca_dpa_notification_completion_create(struct doca_dpa *dpa, struct doca_dpa_thread *dpa_thread, struct doca_dpa_notification_completion **notify_comp)

doca_error_t doca_dpa_notification_completion_destroy(struct doca_dpa_notification_completion *notify_comp)

A DPA Notification Completion is attached to a DPA thread through the dpa_thread parameter given at creation.

To get the attached DPA thread:

doca_error_t doca_dpa_notification_completion_get_thread(struct doca_dpa_notification_completion *notify_comp, struct doca_dpa_thread **dpa_thread)

To start/stop DPA Notification Completion:

doca_error_t doca_dpa_notification_completion_start(struct doca_dpa_notification_completion *notify_comp)

doca_error_t doca_dpa_notification_completion_stop(struct doca_dpa_notification_completion *notify_comp)

To get DPA handle:

doca_error_t doca_dpa_notification_completion_get_dpa_handle(struct doca_dpa_notification_completion *notify_comp, doca_dpa_dev_notification_completion_t *comp_handle)

Use the output parameter comp_handle with the device API below, which can be used in the thread kernel.

Example (host-side pseudo code):

extern doca_dpa_func_t hello_kernel;

// create DPA thread
doca_dpa_thread_create(dpa, &dpa_thread);
// set thread kernel
doca_dpa_thread_set_func_arg(dpa_thread, &hello_kernel, func_arg);
// start thread
doca_dpa_thread_start(dpa_thread);
// create and start DPA notification completion
doca_dpa_notification_completion_create(dpa, dpa_thread, &notify_comp);
doca_dpa_notification_completion_start(notify_comp);
// get its DPA handle
doca_dpa_notification_completion_get_dpa_handle(notify_comp, &notify_comp_handle);
// run thread only after both the thread and the attached notification completion are started
doca_dpa_thread_run(dpa_thread);

Asynchronous Ops

DPA Async Ops allows a DPA thread to issue asynchronous operations, such as memcpy or post_wait.

This feature requires the user to create an “asynchronous ops” context and attach it to a completion context.

The user is expected to adhere to the `queue_size` limit on the device when posting operations.

The completion context can activate a DPA thread if the context is attached to that thread.

The user can also choose to progress the completion context by polling it manually.

The user can provide DPA Async Ops `user_data` and retrieve this metadata on the device using the relevant device API.
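One way to respect the `queue_size` limit is to count operations that have been posted but whose completions have not yet been consumed, and to stop posting when that count reaches `queue_size`. This plain-C sketch is hypothetical (`struct async_credits` and its helpers are not DOCA APIs) and assumes every posted operation eventually yields exactly one consumed completion, which may not hold when completions are requested only for some operations through the flags parameter.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical bookkeeping for one DPA Async Ops object. */
struct async_credits {
    unsigned int queue_size;  /* value passed to doca_dpa_async_ops_create() */
    unsigned int outstanding; /* posted but not yet completed */
};

/* Call before posting an operation; refuses when the queue would overflow. */
static bool credits_try_post(struct async_credits *c)
{
    if (c->outstanding >= c->queue_size)
        return false;
    c->outstanding++;
    return true;
}

/* Call after consuming n completions for this Async Ops object. */
static void credits_complete(struct async_credits *c, unsigned int n)
{
    assert(n <= c->outstanding);
    c->outstanding -= n;
}
```

When `credits_try_post` refuses, a kernel would typically poll the completion context to free credits before retrying.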

To create/destroy DPA Async Ops:

doca_error_t doca_dpa_async_ops_create(struct doca_dpa *dpa, unsigned int queue_size, uint64_t user_data, struct doca_dpa_async_ops **async_ops)

doca_error_t doca_dpa_async_ops_destroy(struct doca_dpa_async_ops *async_ops)

Please use the following define for valid user_data values:

#define DOCA_DPA_COMPLETION_LOG_MAX_USER_DATA (24)

To get the queue size/user_data:

doca_error_t doca_dpa_async_ops_get_queue_size(struct doca_dpa_async_ops *async_ops, unsigned int *queue_size)

doca_error_t doca_dpa_async_ops_get_user_data(struct doca_dpa_async_ops *async_ops, uint64_t *user_data)

To attach to a DPA Completion Context:

doca_error_t doca_dpa_async_ops_attach(struct doca_dpa_async_ops *async_ops, struct doca_dpa_completion *dpa_comp)
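Going by the name of DOCA_DPA_COMPLETION_LOG_MAX_USER_DATA, a valid user_data appears to be a value that fits in 24 bits, i.e. below 2^24. The check below is a sketch under that assumption, not a documented rule; `async_ops_user_data_is_valid` is a hypothetical helper, and the define is repeated only so the snippet compiles without the DOCA headers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Same value as documented above; defined here only if the DOCA header is absent. */
#ifndef DOCA_DPA_COMPLETION_LOG_MAX_USER_DATA
#define DOCA_DPA_COMPLETION_LOG_MAX_USER_DATA (24)
#endif

static_assert(DOCA_DPA_COMPLETION_LOG_MAX_USER_DATA < 64, "shift must stay within uint64_t");

/* Assumption: valid user_data values are 0 .. 2^LOG_MAX - 1. */
static bool async_ops_user_data_is_valid(uint64_t user_data)
{
    return user_data < (UINT64_C(1) << DOCA_DPA_COMPLETION_LOG_MAX_USER_DATA);
}
```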

To start/stop DPA Async Ops:

doca_error_t doca_dpa_async_ops_start(struct doca_dpa_async_ops *async_ops)

doca_error_t doca_dpa_async_ops_stop(struct doca_dpa_async_ops *async_ops)

To get DPA handle:

doca_error_t doca_dpa_async_ops_get_dpa_handle(struct doca_dpa_async_ops *async_ops, doca_dpa_dev_async_ops_t *handle)

Use the output parameter handle with the device API below, which can be used in the thread kernel.

Example (host-side pseudo code):

doca_dpa_thread_create(dpa, &dpa_thread);
doca_dpa_thread_set_func_arg(dpa_thread, &kernel, func_arg);
doca_dpa_thread_start(dpa_thread);
doca_dpa_completion_create(dpa, queue_size, &dpa_comp);
doca_dpa_completion_set_thread(dpa_comp, dpa_thread);
doca_dpa_completion_start(dpa_comp);
doca_dpa_thread_run(dpa_thread);
doca_dpa_async_ops_create(dpa, queue_size, user_data, &async_ops);
doca_dpa_async_ops_attach(async_ops, dpa_comp);
doca_dpa_async_ops_start(async_ops);
doca_dpa_async_ops_get_dpa_handle(async_ops, &handle); // use this handle in relevant Async Ops device APIs

Thread Group

A thread group is used to aggregate individual DPA threads into a single group.

To create/destroy DPA Thread Group:

doca_error_t doca_dpa_thread_group_create(struct doca_dpa *dpa, unsigned int num_threads, struct doca_dpa_tg **tg)

doca_error_t doca_dpa_thread_group_destroy(struct doca_dpa_tg *tg)

To get the number of threads:

doca_error_t doca_dpa_thread_group_get_num_threads(struct doca_dpa_tg *tg, unsigned int *num_threads)

To set the DPA thread at 'rank' in the DPA thread group:

doca_error_t doca_dpa_thread_group_set_thread(struct doca_dpa_tg *tg, struct doca_dpa_thread *thread, unsigned int rank)

Thread rank is the index of the thread (between 0 and num_threads - 1) within the group.

To start/stop DPA Thread Group:

doca_error_t doca_dpa_thread_group_start(struct doca_dpa_tg *tg)

doca_error_t doca_dpa_thread_group_stop(struct doca_dpa_tg *tg)

Memory Subsystem

The user can allocate (from the host API) and access (from both the host and device API) several memory locations using the relevant DOCA DPA API.

DOCA DPA supports access from the host/Target BlueField to DPA heap memory and also enables device access to host memory (e.g., kernel writes to host memory).

The normal memory usage flow would be to:

Allocate memory (Host/Target BlueField/DPA).

Register the memory.

Get a DPA handle for the registered memory so it can be accessed by DPA kernels.

Access/use the memory from the kernel (see relevant device-side APIs).

To allocate DPA heap memory:

doca_dpa_mem_alloc(doca_dpa_t dpa, size_t size, doca_dpa_dev_uintptr_t *dev_ptr)

To free previously allocated DPA memory:

doca_dpa_mem_free(doca_dpa_dev_uintptr_t dev_ptr)

To copy previously allocated memory from a host pointer to a DPA heap device pointer:

doca_dpa_h2d_memcpy(doca_dpa_t dpa, doca_dpa_dev_uintptr_t dst_ptr, void *src_ptr, size_t size)

To copy previously allocated memory from a DOCA Buffer to a DPA heap device pointer:

doca_error_t doca_dpa_h2d_buf_memcpy(struct doca_dpa *dpa, doca_dpa_dev_uintptr_t dst_ptr, struct doca_buf *buf, size_t size)

To copy previously allocated memory from a DPA heap device pointer to a host pointer:

doca_dpa_d2h_memcpy(doca_dpa_t dpa, void *dst_ptr, doca_dpa_dev_uintptr_t src_ptr, size_t size)

To copy previously allocated memory from a DPA heap device pointer to a DOCA Buffer:

doca_error_t doca_dpa_d2h_buf_memcpy(struct doca_dpa *dpa, struct doca_buf *buf, doca_dpa_dev_uintptr_t src_ptr, size_t size)

To set memory:

doca_dpa_memset(doca_dpa_t dpa, doca_dpa_dev_uintptr_t dev_ptr, int value, size_t size)

To get a DPA handle for use in kernels, the user must use a DOCA Core Memory Inventory object as follows (refer to "DOCA Memory Subsystem"):

When the user wants to use device APIs with DOCA Buffer, use the following pseudo code:

doca_buf_arr_create(&buf_arr);
doca_buf_arr_set_target_dpa(buf_arr, doca_dpa);
doca_buf_arr_start(buf_arr);
doca_buf_arr_get_dpa_handle(buf_arr, &handle);

Use the output parameter handle in the relevant device APIs in the thread kernel.

When the user wants to use device APIs with a DOCA Mmap, use the following pseudo code:

doca_mmap_create(&mmap);
doca_mmap_set_dpa_memrange(mmap, doca_dpa, dev_ptr, dev_ptr_len); // dev_ptr is pre-allocated DPA memory
doca_mmap_start(mmap);
doca_mmap_dev_get_dpa_handle(mmap, doca_dev, &handle);

Use the output parameter handle in the relevant device APIs in the thread kernel.

Extended DOCA DPA Context

A base DOCA DPA context is a context created on a PF DOCA device using:

doca_dpa_create(pf_doca_dev, &base_dpa_ctx);

To enable creating DPA resources (e.g., RDMA/DPA completion/DPA Async ops contexts) on VF/SF DOCA device, an extended DOCA DPA context is required.

DOCA DPA provides the following host API to extend a base DOCA DPA context (created on a PF DOCA device) to an SF/VF DOCA device:

doca_error_t doca_dpa_device_extend(struct doca_dpa *dpa, struct doca_dev *other_dev, struct doca_dpa **extended_dpa)

The extended DPA context can later be used to create DPA resources (e.g., RDMA, DPA completion, and DPA Async Ops contexts) on the other DOCA device (SF/VF).

Note that:

The extended DPA context is already started.

The extended DPA context can be used for all DOCA DPA APIs (e.g., creating DPA memory or a DPA completion context) or in the kernel launch flow.

When running from the DPU, DOCA RDMA context (on DPA datapath) must be created on SF DOCA device. Therefore, it must be created using an extended DOCA DPA context (created on the same SF DOCA device).

To obtain a DPA handle for a DOCA DPA context (either base or extended):

doca_error_t doca_dpa_get_dpa_handle(struct doca_dpa *dpa, doca_dpa_dev_t *handle)

Use output parameter handle in the relevant device's APIs in the thread kernel.

When a DOCA RDMA context is created with an extended DOCA DPA context and that RDMA context is attached to a DPA completion context and a DPA thread, the DOCA RDMA context, the DPA completion context, and the DPA thread must all be created on the same extended DOCA DPA context.

Example (host-side pseudo code):

// create a base DPA context

doca_dpa_create(pf_doca_dev, &base_dpa_ctx);

// call base_dpa_ctx setters

doca_dpa_start(base_dpa_ctx);

// create an extended DPA context

doca_dpa_device_extend(base_dpa_ctx, sf_doca_dev, &extended_dpa_ctx);

doca_dpa_get_dpa_handle(extended_dpa_ctx, &extended_dpa_ctx_handle);

// create DPA thread on extended DPA context

doca_dpa_thread_create(extended_dpa_ctx, &dpa_thread);

// call dpa_thread setters

doca_dpa_thread_start(dpa_thread);

// create DPA completion context on extended DPA context

doca_dpa_completion_create(extended_dpa_ctx, queue_size, &dpa_completion);

doca_dpa_completion_set_thread(dpa_completion, dpa_thread);

doca_dpa_completion_start(dpa_completion);

// create DOCA RDMA context on SF DOCA device and extended DPA context

doca_rdma_create(sf_doca_dev, &rdma);

rdma_as_ctx = doca_rdma_as_ctx(rdma);

doca_ctx_set_datapath_on_dpa(rdma_as_ctx, extended_dpa_ctx);

doca_rdma_dpa_completion_attach(rdma, dpa_completion);

// other rdma setters

doca_ctx_start(rdma_as_ctx);

doca_rdma_get_dpa_handle(rdma, &rdma_dpa_handle);

Data Structures

Hash Table

DOCA DPA provides an API to create a hash table on DPA. This data structure is managed on DPA using relevant device APIs.

To create a hash table on DPA:

doca_error_t doca_dpa_hash_table_create(struct doca_dpa *dpa, unsigned int num_entries, struct doca_dpa_hash_table **ht)

To destroy a hash table:

doca_error_t doca_dpa_hash_table_destroy(struct doca_dpa_hash_table *ht)

To obtain a DPA handle:

doca_error_t doca_dpa_hash_table_get_dpa_handle(struct doca_dpa_hash_table *ht, doca_dpa_dev_hash_table_t *handle)

Use the output parameter handle in the relevant device APIs in the thread kernel.

RPC

A blocking one-time call from the host application to execute a kernel on DPA.

RPC is mainly used for control path.

The RPC's return value is reported back to the host application.

doca_error_t doca_dpa_rpc(struct doca_dpa *dpa, doca_dpa_func_t *func, uint64_t *retval, ... /* func arguments */)

Example:

Device-side (DPA device): func must be annotated with the __dpa_rpc__ annotation, such as:

__dpa_rpc__ uint64_t hello_rpc(int arg) { ... }

Host-side:

extern doca_dpa_func_t hello_rpc;
uint64_t retval;
doca_dpa_rpc(dpa, &hello_rpc, &retval, 10);

Kernel Launch

DOCA DPA provides an API which enables full control for launching and monitoring kernels.

Since DOCA DPA libraries are not thread-safe, it is up to the programmer to make sure the kernel is written to allow it to run in a multi-threaded environment. For example, to program a kernel that uses RDMAs with 16 concurrent threads, the user should pass an array of 16 RDMAs to the kernel so that each thread can access its RDMA using its rank (doca_dpa_dev_thread_rank()) as an index to the array.

doca_dpa_kernel_launch_update_<add|set>(struct doca_dpa *dpa, struct doca_sync_event *wait_event, uint64_t wait_threshold, struct doca_sync_event *comp_event, uint64_t comp_count, unsigned int num_threads, doca_dpa_func_t *func, ... /* args */)

This function asks DOCA DPA to run func on the DPA with num_threads threads and to pass it the supplied (variadic) list of arguments. The function is asynchronous: when it returns, func has not necessarily started or finished its execution.

To add control over flow and ordering to these asynchronous kernels, two optional parameters for launching kernels are available:

wait_event: the kernel does not start its execution until the event is signaled (if NULL, the kernel starts once DOCA DPA has an available EU to run it). In other words, DOCA DPA does not run the kernel until the event's counter is greater than wait_threshold.

Note: The valid values for wait_threshold and the wait_event counter are [0-254]. Values outside this range might cause anomalous behavior.

comp_event: once the last thread running the kernel is done, DOCA DPA updates this event (either sets or adds to its current counter value with comp_count).
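Because out-of-range values might cause anomalous behavior, a host application may want to reject them before calling doca_dpa_kernel_launch_update_add() or doca_dpa_kernel_launch_update_set(). A minimal plain-C guard follows; the helper names are hypothetical, and also checking the largest counter value the application plans to set or accumulate on the wait_event is a suggestion derived from the note, not a DOCA requirement.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Upper bound of the documented valid range [0-254] for wait_threshold
 * and for the wait_event counter. */
#define KERNEL_WAIT_VALUE_MAX 254u

/* uint64_t cannot be negative, so only the upper bound needs checking. */
static bool kernel_wait_value_is_valid(uint64_t v)
{
    return v <= KERNEL_WAIT_VALUE_MAX;
}

/* Checks the launch threshold and the largest counter value planned for the event. */
static bool kernel_wait_args_are_valid(uint64_t wait_threshold, uint64_t max_planned_counter)
{
    return kernel_wait_value_is_valid(wait_threshold) &&
           kernel_wait_value_is_valid(max_planned_counter);
}
```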

DOCA DPA takes care of packing (on host/Target BlueField) and unpacking (in DPA) the kernel parameters.

func must be prefixed with the __dpa_global__ macro for DPACC to compile it as a kernel (and add it to the DPA executable binary) rather than as part of the host application binary. The programmer must also declare func in their application by adding the line: extern doca_dpa_func_t func;

The following APIs are relevant only for a kernel used in the kernel_launch APIs. They are not relevant for a doca_dpa_thread kernel.

The following API retrieves the running thread's rank for a given kernel on the DPA. If, for example, a kernel is launched to run with 16 threads, each thread running this kernel is assigned a rank ranging from 0 to 15 within this kernel. This helps make sure each thread in the kernel only accesses data relevant to its own execution, avoiding data races:

unsigned int doca_dpa_dev_thread_rank()

To return the number of threads running the current kernel:

unsigned int doca_dpa_dev_num_threads()

To yield the thread that runs the kernel:

void doca_dpa_dev_yield(void)
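A common use of the rank is to give each thread a disjoint slice of the data. The plain-C sketch below computes a contiguous block partition; on the DPA, `rank` and `num_threads` would come from doca_dpa_dev_thread_rank() and doca_dpa_dev_num_threads(), while here they are ordinary parameters so the logic can be checked on any host. `rank_range` is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Contiguous block partition of num_items among num_threads threads.
 * The first (num_items % num_threads) ranks get one extra item, so the
 * ranges are disjoint and together cover [0, num_items).
 */
static void rank_range(size_t num_items, unsigned int num_threads, unsigned int rank,
                       size_t *begin, size_t *end)
{
    assert(num_threads > 0 && rank < num_threads);
    size_t base = num_items / num_threads;
    size_t extra = num_items % num_threads;
    *begin = rank * base + (rank < extra ? rank : extra);
    *end = *begin + base + (rank < extra ? 1 : 0);
}
```

For example, 10 items over 4 threads gives the ranges [0,3), [3,6), [6,8), and [8,10); a thread whose range is empty simply does no work.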

Examples

Linear Execution Example

Device-side pseudo code:

#include "doca_dpa_dev.h"

#include "doca_dpa_dev_sync_event.h"

__dpa_global__ void

linear_kernel(doca_dpa_dev_sync_event_t wait_ev, doca_dpa_dev_sync_event_t comp_ev)

{

if (wait_ev)
    doca_dpa_dev_sync_event_wait_gt(wait_ev, 0); /* wait until the counter is greater than 0 */
doca_dpa_dev_sync_event_update_add(comp_ev, 1); /* signal the next kernel in the chain */

}

Host-side pseudo code:

#include <doca_dev.h>

#include <doca_error.h>

#include <doca_sync_event.h>

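#include <doca_dpa.h>

/* DPA app (the token passed to DPACC --app-name) and the kernel defined in the device code */
extern struct doca_dpa_app *dpa_linear_app;
extern doca_dpa_func_t linear_kernel;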

int main(int argc, char **argv)

{

/*

A

|

B

|

C

*/

/* Open DOCA device */

open_doca_dev(&doca_dev);

/* Create DOCA DPA context */
doca_dpa_create(doca_dev, &dpa_ctx);
doca_dpa_set_app(dpa_ctx, dpa_linear_app);
doca_dpa_start(dpa_ctx);

/* Create event A - subscriber is DPA and publisher is CPU */

doca_sync_event_create(&ev_a);

doca_sync_event_add_publisher_location_cpu(ev_a, doca_dev);

doca_sync_event_add_subscriber_location_dpa(ev_a, dpa_ctx);

doca_sync_event_start(ev_a);

/* Create event B - subscriber and publisher are DPA */

doca_sync_event_create(&ev_b);

doca_sync_event_add_publisher_location_dpa(ev_b, dpa_ctx);

doca_sync_event_add_subscriber_location_dpa(ev_b, dpa_ctx);

doca_sync_event_start(ev_b);

/* Create event C - subscriber and publisher are DPA */

doca_sync_event_create(&ev_c);

doca_sync_event_add_publisher_location_dpa(ev_c, dpa_ctx);

doca_sync_event_add_subscriber_location_dpa(ev_c, dpa_ctx);

doca_sync_event_start(ev_c);

/* Create completion event for last kernel - subscriber is CPU and publisher is DPA */

doca_sync_event_create(&comp_ev);

doca_sync_event_add_publisher_location_dpa(comp_ev, dpa_ctx);

doca_sync_event_add_subscriber_location_cpu(comp_ev, doca_dev);

doca_sync_event_start(comp_ev);

/* Export kernel events and acquire their handles */

doca_sync_event_get_dpa_handle(ev_b, dpa_ctx, &ev_b_handle);

doca_sync_event_get_dpa_handle(ev_c, dpa_ctx, &ev_c_handle);

doca_sync_event_get_dpa_handle(comp_ev, dpa_ctx, &comp_ev_handle);

/* Launch kernels: (dpa, wait_event, wait_threshold, comp_event, comp_count, num_threads, func, kernel wait_ev, kernel comp_ev) */
doca_dpa_kernel_launch_update_add(dpa_ctx, ev_a, 1, NULL, 0, 1, &linear_kernel, (doca_dpa_dev_sync_event_t)0, ev_b_handle);
doca_dpa_kernel_launch_update_add(dpa_ctx, NULL, 0, NULL, 0, 1, &linear_kernel, ev_b_handle, ev_c_handle);
doca_dpa_kernel_launch_update_add(dpa_ctx, NULL, 0, NULL, 0, 1, &linear_kernel, ev_c_handle, comp_ev_handle);

/* Update host event to trigger kernels to start executing in a linear manner */

doca_sync_event_update_set(ev_a, 1);

/* Wait for completion of last kernel */

doca_sync_event_wait_gt(comp_ev, 0, 0xFFFFFFFFFFFFFFFF);

/* Tear Down... */

teardown_resources();

}

Diamond Execution Example

Device-side pseudo code:

#include "doca_dpa_dev.h"

#include "doca_dpa_dev_sync_event.h"

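__dpa_global__ void
diamond_kernel(doca_dpa_dev_sync_event_t wait_ev, uint64_t wait_th, doca_dpa_dev_sync_event_t comp_ev1, doca_dpa_dev_sync_event_t comp_ev2)
{
	/* wait_th is the number of parent nodes and each parent adds 1 to wait_ev,
	 * so wait until the event value is greater than wait_th - 1 (i.e., reaches wait_th) */
	if (wait_ev)
		doca_dpa_dev_sync_event_wait_gt(wait_ev, wait_th - 1, 0xFFFFFFFFFFFFFFFF);

	doca_dpa_dev_sync_event_update_add(comp_ev1, 1 /* comp_count */);

	if (comp_ev2) // can be 0 (NULL)
		doca_dpa_dev_sync_event_update_add(comp_ev2, 1 /* comp_count */);
}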

Host-side pseudo code:

#include <doca_dev.h>

#include <doca_error.h>

#include <doca_sync_event.h>

#include <doca_dpa.h>

int main(int argc, char **argv)

{

/*
        A
       / \
      C   B
     /   /
    D   /
     \ /
      E
*/

/* Open DOCA device */

open_doca_dev(&doca_dev);

/* Create DOCA DPA context */

doca_dpa_create(doca_dev, &dpa_ctx);

doca_dpa_set_app(dpa_ctx, dpa_diamond_app);

doca_dpa_start(dpa_ctx);

/* Create root event A that will signal from the host the rest to start */

doca_sync_event_create(&ev_a);

// set publisher to CPU, subscriber to DPA and start event

/* Create events B,C,D,E */

doca_sync_event_create(&ev_b);

doca_sync_event_create(&ev_c);

doca_sync_event_create(&ev_d);

doca_sync_event_create(&ev_e);

// for events B,C,D,E, set publisher & subscriber to DPA and start event

/* Create completion event for last kernel */

doca_sync_event_create(&comp_ev);

// set publisher to DPA, subscriber to CPU and start event

/* Export kernel events and acquire their handles */

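doca_sync_event_get_dpa_handle(ev_b, dpa_ctx, &ev_b_handle);

doca_sync_event_get_dpa_handle(ev_c, dpa_ctx, &ev_c_handle);

doca_sync_event_get_dpa_handle(ev_d, dpa_ctx, &ev_d_handle);

doca_sync_event_get_dpa_handle(ev_e, dpa_ctx, &ev_e_handle);

doca_sync_event_get_dpa_handle(comp_ev, dpa_ctx, &comp_ev_handle);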

/* wait threshold for each kernel is the number of parent nodes */

const uint64_t wait_threshold_one_parent = 1;

const uint64_t wait_threshold_two_parent = 2;

/* launch diamond kernels */

doca_dpa_kernel_launch_update_set(wait_ev = ev_a, wait_threshold = 1, num_threads = 1, &diamond_kernel, kernel_args: NULL, 0, ev_b_handle, ev_c_handle);

doca_dpa_kernel_launch_update_set(wait_ev = NULL, num_threads = 1, &diamond_kernel, kernel_args: ev_b_handle, wait_threshold_one_parent, ev_e_handle, NULL);

doca_dpa_kernel_launch_update_set(wait_ev = NULL, num_threads = 1, &diamond_kernel, kernel_args: ev_c_handle, wait_threshold_one_parent, ev_d_handle, NULL);

doca_dpa_kernel_launch_update_set(wait_ev = NULL, num_threads = 1, &diamond_kernel, kernel_args: ev_d_handle, wait_threshold_one_parent, ev_e_handle, NULL);

doca_dpa_kernel_launch_update_set(wait_ev = NULL, num_threads = 1, &diamond_kernel, kernel_args: ev_e_handle, wait_threshold_two_parent, comp_ev_handle, NULL);

/* Update host event to trigger kernels to start executing in a diamond manner */

doca_sync_event_update_set(ev_a, 1);

/* Wait for completion of last kernel */

doca_sync_event_wait_gt(comp_ev, 0, 0xFFFFFFFFFFFFFFFF);

/* Tear Down... */

teardown_resources();

}
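The rule stated in the comment above, that each kernel's wait threshold equals its number of parent nodes, and the requirement that a kernel is launched only after the kernels it depends on, can both be derived mechanically from the dependency graph. The following is a minimal plain-C sketch with no DOCA calls: the `deps` adjacency matrix, the node enum, and both helper names are hypothetical and only encode the diamond drawn above. Root node A has no parent kernel; in the host code it is released by the host updating ev_a instead.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of the diamond DAG above: deps[p][c] is true if p signals c. */
enum { NODE_A, NODE_B, NODE_C, NODE_D, NODE_E, NUM_NODES };

static const bool deps[NUM_NODES][NUM_NODES] = {
	[NODE_A] = {[NODE_B] = true, [NODE_C] = true},
	[NODE_B] = {[NODE_E] = true},
	[NODE_C] = {[NODE_D] = true},
	[NODE_D] = {[NODE_E] = true},
};

/* Wait threshold of a node = number of parents, since each parent adds 1 to its event. */
static uint64_t wait_threshold(int node)
{
	uint64_t parents = 0;

	for (int p = 0; p < NUM_NODES; p++)
		if (deps[p][node])
			parents++;
	return parents;
}

/* Returns true if every parent is launched before its child.
 * launch_order must be a permutation of all NUM_NODES nodes. */
static bool launch_order_is_valid(const int *launch_order, int n)
{
	int position[NUM_NODES];

	assert(n == NUM_NODES);
	for (int i = 0; i < n; i++)
		position[launch_order[i]] = i;
	for (int p = 0; p < NUM_NODES; p++)
		for (int c = 0; c < NUM_NODES; c++)
			if (deps[p][c] && position[p] > position[c])
				return false;
	return true;
}
```

With the launch sequence used in the host code (A, B, C, D, E), launch_order_is_valid() returns true; launching E before D would break the ordering rule described under Limitations.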

Performance Optimizations

The time interval between a kernel launch call from the host and the start of its execution on the DPA is significantly optimized when the host application calls doca_dpa_kernel_launch_update_<add|set>() repeatedly with the same number of DPA threads. So, if the application calls doca_dpa_kernel_launch_update_<add|set>(..., num_threads = x), the next call with num_threads = x has a shorter latency (as low as ~5-7 microseconds) until the start of the kernel's execution.

Applications calling for kernel launch with a wait event (i.e., the completion event of a previous kernel) also have significantly lower latency between the host launching the kernel and the start of the kernel's execution on the DPA. So, if the application calls doca_dpa_kernel_launch_update_<add|set>(..., completion event = m_ev, ...) and then doca_dpa_kernel_launch_update_<add|set>(wait event = m_ev, ...), the latter kernel launch call has a shorter latency (as low as ~3 microseconds) until the start of the kernel's execution.

Limitations

The order in which kernels are launched is important. If an application launches K1 and then K2, K1 must not depend on K2's completion (e.g., K1 must not wait on an event that only K2 updates).

Not following this guideline leads to unpredictable runtime behavior and might require restarting the DOCA DPA context (i.e., destroying, reinitializing, and rerunning the workload).

DPA threads are an actual hardware resource and are, therefore, limited to 256 (including internal allocations and allocations explicitly requested by the user as part of the kernel launch API).

DOCA DPA does not check these limits. It is up to the application to adhere to this number and track thread allocation across different DPA contexts.

Each doca_dpa_dev_rdma_t consumes one thread.

The DPA has an internal watchdog timer to make sure threads do not block indefinitely. Kernel execution time must be finite and must not exceed the time returned by doca_dpa_get_kernel_max_run_time.

The num_threads parameter in the kernel launch call (doca_dpa_kernel_launch_update_<add|set>()) cannot exceed the maximum number of threads allowed to run a kernel, as returned by doca_dpa_get_max_threads_per_kernel.
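Because DOCA DPA does not enforce the 256-thread limit, an application that creates several DPA contexts needs its own accounting. A minimal plain-C sketch of such bookkeeping follows. The struct and function names are hypothetical; the 256 limit and the rule that each doca_dpa_dev_rdma_t consumes one thread come from the list above. The library's internal allocations are not visible to this code, so `reserved` is an assumed allowance the application has to estimate.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define DPA_MAX_THREADS 256u /* hardware limit stated in this section */

/* Hypothetical application-side tracker shared by all DPA contexts of the process. */
struct dpa_thread_budget {
	uint32_t reserved; /* assumed allowance for internal allocations */
	uint32_t in_use;   /* threads requested by the application so far */
};

/* Try to reserve `count` threads; returns false if the budget would be exceeded. */
static bool dpa_budget_acquire(struct dpa_thread_budget *b, uint32_t count)
{
	assert(b->reserved + b->in_use <= DPA_MAX_THREADS);
	if (count > DPA_MAX_THREADS - b->reserved - b->in_use)
		return false;
	b->in_use += count;
	return true;
}

/* Return `count` previously acquired threads to the budget. */
static void dpa_budget_release(struct dpa_thread_budget *b, uint32_t count)
{
	assert(count <= b->in_use);
	b->in_use -= count;
}
```

A caller would acquire num_threads before each kernel launch (and one per doca_dpa_dev_rdma_t), release them on teardown, and still check num_threads against doca_dpa_get_max_threads_per_kernel(), which this sketch does not model.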

Logging and Tracing

DOCA DPA provides a set of debugging APIs to allow diagnosing and troubleshooting any issues on the device, as well as accessing real-time information from the running application.

Logging in the data path has a significant impact on an application's performance, whereas the tracer provided by the library is high-frequency and designed to avoid a significant impact on the application's performance.

Therefore, it is recommended to use:

Logging in the control path

Tracing in the data path

The user can control the log/trace file path and device log verbosity.

To set/get the trace file path:

doca_error_t doca_dpa_trace_file_set_path(struct doca_dpa *dpa, const char *file_path)
doca_error_t doca_dpa_trace_file_get_path(struct doca_dpa *dpa, char *file_path, uint32_t *file_path_len)

To set/get the log file path:

doca_error_t doca_dpa_log_file_set_path(struct doca_dpa *dpa, const char *file_path)
doca_error_t doca_dpa_log_file_get_path(struct doca_dpa *dpa, char *file_path, uint32_t *file_path_len)

To set/get device log verbosity:

doca_error_t doca_dpa_set_log_level(struct doca_dpa *dpa, doca_dpa_dev_log_level_t log_level)
doca_error_t doca_dpa_get_log_level(struct doca_dpa *dpa, doca_dpa_dev_log_level_t *log_level)

Error Handling

A DPA context can enter an error state caused by the device flow. The application can check for this error state by calling the following host API:

doca_error_t doca_dpa_peek_at_last_error(const struct doca_dpa *dpa)

This call does not reset the error state. If an error occurred, the DPA context enters a fatal state and must be destroyed by the user.

If a fatal error occurs, core dump and crash data are written to the file path /tmp/doca_dpa_fatal, or to the file path set by the API doca_dpa_log_file_set_path(), with the suffixes .PID.core and .PID.crash respectively, where PID is the process ID. The data written to the file includes a memory snapshot at the time of the crash, which contains information instrumental in pinpointing the cause of the crash (e.g., the program's state, variable values, and the call stack).

Core dump files are created only after the DPA application has crashed.
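The file naming rule above can be expressed as a small helper. This plain-C sketch is only an illustration of the stated rule: the function name is hypothetical, and `base` stands for /tmp/doca_dpa_fatal or the path passed to doca_dpa_log_file_set_path().

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>

/* Hypothetical helper: writes "<base>.<pid>.core" (is_core != 0) or "<base>.<pid>.crash"
 * into out, following the naming rule described above. Returns snprintf's result. */
static int dpa_fatal_file_path(char *out, size_t out_len, const char *base, pid_t pid, int is_core)
{
	assert(base != NULL && strlen(base) > 0);
	return snprintf(out, out_len, "%s.%ld.%s", base, (long)pid, is_core ? "core" : "crash");
}
```

For example, dpa_fatal_file_path(buf, sizeof(buf), "/tmp/doca_dpa_fatal", getpid(), 1) produces /tmp/doca_dpa_fatal.<pid>.core for the current process (getpid() is declared in <unistd.h>).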

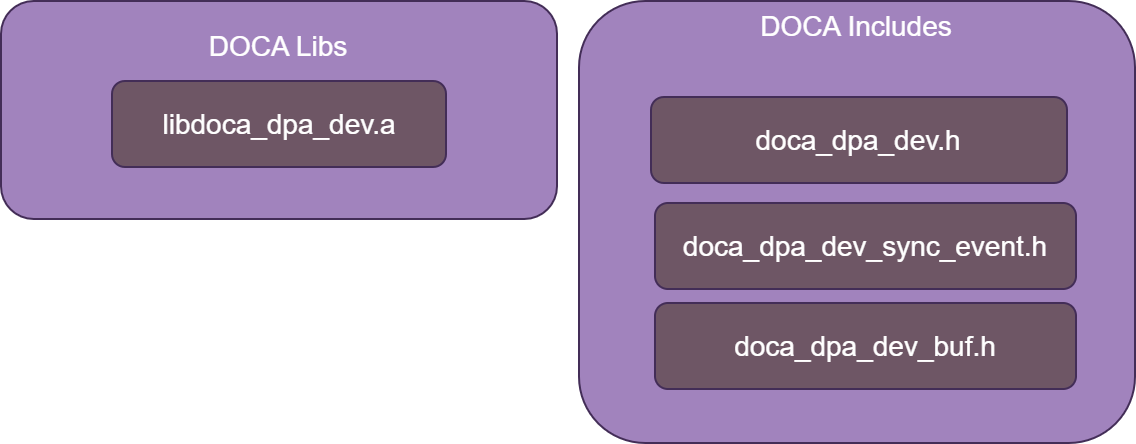

DOCA DPA Device Library

DOCA DPA device library offers an interface for common utilities such as:

Managing DPA thread

Managing DPA completion context

Managing DPA hash table

Log and trace

Managing DPA sync event

Managing DPA DOCA buf and mmap

DPA device library and header files:

DPA Thread

Thread restart APIs

A DPA thread can end its run using one of the following device APIs:

Reschedule API:

void doca_dpa_dev_thread_reschedule(void)

DPA thread is still active

DPA thread resources are returned to the RTOS

DPA thread can be triggered again

Finish API:

void doca_dpa_dev_thread_finish(void)

DPA thread is marked as finished

DPA thread resources are returned to the RTOS

DPA thread cannot be triggered again

To get the thread local storage (TLS):

doca_dpa_dev_uintptr_t doca_dpa_dev_thread_get_local_storage(void)

This function returns the DPA thread local storage previously set using the host API doca_dpa_thread_set_local_storage().

DPA thread device APIs cannot be used in user-written kernels launched through the DOCA DPA kernel launch API.
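The difference between the two restart APIs can be modeled as a small state machine. The sketch below is plain C with hypothetical names and no DOCA calls; it only encodes the rules listed above: a rescheduled thread returns its resources to the RTOS and can be triggered again, while a finished thread cannot. As a simplification, a trigger that arrives while the model thread is running is simply rejected.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of a DPA thread's lifecycle as described above. */
enum thread_state { THREAD_IDLE, THREAD_RUNNING, THREAD_FINISHED };

struct thread_model {
	enum thread_state state;
	unsigned int runs; /* number of times the thread function was entered */
};

/* A trigger (e.g., a completion or notification) starts the thread only when it is idle. */
static bool thread_trigger(struct thread_model *t)
{
	if (t->state != THREAD_IDLE)
		return false;
	t->state = THREAD_RUNNING;
	t->runs++;
	return true;
}

/* Models doca_dpa_dev_thread_reschedule(): thread stays active and can run again. */
static void thread_reschedule(struct thread_model *t)
{
	assert(t->state == THREAD_RUNNING);
	t->state = THREAD_IDLE;
}

/* Models doca_dpa_dev_thread_finish(): thread is marked finished for good. */
static void thread_finish(struct thread_model *t)
{
	assert(t->state == THREAD_RUNNING);
	t->state = THREAD_FINISHED;
}
```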

Completion Context

Kernels get a doca_dpa_dev_completion_t handle and invoke the following APIs:

To get a completion element:

int doca_dpa_dev_get_completion(doca_dpa_dev_completion_t dpa_comp_handle, doca_dpa_dev_completion_element_t *comp_element)

Use the returned comp_element to retrieve completion info using the APIs below.

To get the completion element type:

typedef enum {
	DOCA_DPA_DEV_COMP_SEND = 0x0,                /**< Send completion */
	DOCA_DPA_DEV_COMP_RECV_RDMA_WRITE_IMM = 0x1, /**< Receive RDMA Write with Immediate completion */
	DOCA_DPA_DEV_COMP_RECV_SEND = 0x2,           /**< Receive Send completion */
	DOCA_DPA_DEV_COMP_RECV_SEND_IMM = 0x3,       /**< Receive Send with Immediate completion */
	DOCA_DPA_DEV_COMP_SEND_ERR = 0xD,            /**< Send Error completion */
	DOCA_DPA_DEV_COMP_RECV_ERR = 0xE             /**< Receive Error completion */
} doca_dpa_dev_completion_type_t;

doca_dpa_dev_completion_type_t doca_dpa_dev_get_completion_type(doca_dpa_dev_completion_element_t comp_element)

To get the completion element user data:

uint32_t doca_dpa_dev_get_completion_user_data(doca_dpa_dev_completion_element_t comp_element)

This API returns user data which is:

Set previously in the host API doca_dpa_async_ops_create(..., user_data, ...), when the DPA completion context is attached to DPA async ops.

Equivalent to connection_id in doca_error_t doca_rdma_connection_get_id(const struct doca_rdma_connection *rdma_connection, uint32_t *connection_id), when the DPA completion context is attached to a DOCA RDMA context.

To get completion element immediate data:

uint32_t doca_dpa_dev_get_completion_immediate(doca_dpa_dev_completion_element_t comp_element)

This API returns immediate data for a completion element of type:

DOCA_DPA_DEV_COMP_RECV_RDMA_WRITE_IMM

DOCA_DPA_DEV_COMP_RECV_SEND_IMM

To acknowledge that the completions have been read on the DPA completion context:

void doca_dpa_dev_completion_ack(doca_dpa_dev_completion_t dpa_comp_handle, uint64_t num_comp)

This API releases the resources of the acknowledged completion elements in the completion context. This acknowledgment enables receiving num_comp new completions.

To request notification on the DPA completion context:

void doca_dpa_dev_completion_request_notification(doca_dpa_dev_completion_t dpa_comp_handle)

This API requests new notifications on the DPA completion context. If this function is not called, the DPA completion context is not notified of newly arrived completion elements and, therefore, new completions are not populated in the DPA completion context.
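When processing a completion, a kernel typically branches on its type. The following plain-C sketch copies the enum values listed above into a local enum (prefixed `MODEL_` to make clear it is not the DOCA header) and classifies a type as an error or a receive, and whether doca_dpa_dev_get_completion_immediate() is meaningful for it. The helper names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Local mirror of the doca_dpa_dev_completion_type_t values shown above (not the DOCA header). */
enum model_comp_type {
	MODEL_COMP_SEND = 0x0,
	MODEL_COMP_RECV_RDMA_WRITE_IMM = 0x1,
	MODEL_COMP_RECV_SEND = 0x2,
	MODEL_COMP_RECV_SEND_IMM = 0x3,
	MODEL_COMP_SEND_ERR = 0xD,
	MODEL_COMP_RECV_ERR = 0xE,
};

static bool comp_is_error(enum model_comp_type t)
{
	return t == MODEL_COMP_SEND_ERR || t == MODEL_COMP_RECV_ERR;
}

/* Immediate data is returned only for these two receive types. */
static bool comp_has_immediate(enum model_comp_type t)
{
	return t == MODEL_COMP_RECV_RDMA_WRITE_IMM || t == MODEL_COMP_RECV_SEND_IMM;
}

static bool comp_is_receive(enum model_comp_type t)
{
	return t == MODEL_COMP_RECV_RDMA_WRITE_IMM || t == MODEL_COMP_RECV_SEND ||
	       t == MODEL_COMP_RECV_SEND_IMM || t == MODEL_COMP_RECV_ERR;
}
```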

Example (device-side pseudo code):

#include "doca_dpa_dev.h"

__dpa_global__ void hello_kernel(uint64_t arg)
{
	// The user is expected to pass the attached completion context handle to the kernel in some way,
	// e.g., as the thread argument (assumed here) or through shared memory.
	doca_dpa_dev_completion_t dpa_comp_handle = (doca_dpa_dev_completion_t)arg;
	doca_dpa_dev_completion_element_t comp_element;
	doca_dpa_dev_completion_type_t comp_type;
	int found;

	DOCA_DPA_DEV_LOG_INFO("Hello from kernel\n");

	found = doca_dpa_dev_get_completion(dpa_comp_handle, &comp_element);
	if (found) {
		comp_type = doca_dpa_dev_get_completion_type(comp_element);
		// process the completion according to comp_type...
		(void)comp_type;

		// ack one completion
		doca_dpa_dev_completion_ack(dpa_comp_handle, 1);

		// enable getting more completions and triggering the thread
		doca_dpa_dev_completion_request_notification(dpa_comp_handle);
	}

	// reschedule thread
	doca_dpa_dev_thread_reschedule();
}

Thread Notification

Kernels get doca_dpa_dev_notification_completion_t handle and invoke the following API:

void doca_dpa_dev_thread_notify(doca_dpa_dev_notification_completion_t comp_handle)

Calling this API triggers the attached DPA Thread (the one that is specified in dpa_thread parameter in host-side API doca_dpa_notification_completion_create()).

Asynchronous Ops

Kernels get the doca_dpa_dev_async_ops_t handle and invoke the APIs listed below.

Users may control work request submit configuration using the following enum:

/**

* @brief DPA submit flag type

*/

enum doca_dpa_dev_submit_flag {

DOCA_DPA_DEV_SUBMIT_FLAG_NONE = 0U,

DOCA_DPA_DEV_SUBMIT_FLAG_FLUSH = (1U << 0), /**

* Use flag to inform related DPA context

* (such as RDMA or DPA Async ops) to flush related

* operation and previous operations to HW,

* otherwise the context may aggregate the operation

* and not flush it immediately

*/

DOCA_DPA_DEV_SUBMIT_FLAG_OPTIMIZE_REPORTS = (1U << 1), /**

* Use flag to inform related DPA context that it may

* defer completion of the operation to a later time. If

* flag is not provided then a completion will be raised

* as soon as the operation is finished, and any

* preceding completions that were deferred will also be

* raised. Use this flag to optimize the amount of

* completion notifications it receives from HW when

* submitting a batch of operations, by receiving a

* single completion notification on the entire batch.

*/

};

This enum can be used in the flags parameter in the following device APIs:

To post a memcpy operation using doca_buf:

void doca_dpa_dev_post_buf_memcpy(doca_dpa_dev_async_ops_t async_ops_handle, doca_dpa_dev_buf_t dst_buf_handle, doca_dpa_dev_buf_t src_buf_handle, uint32_t flags)

This API copies data between two DOCA buffers. The destination buffer, specified by dst_buf_handle, contains the copied data after the memory copy is complete. This is a non-blocking routine.

To post a memcpy operation using doca_mmap and an explicit address:

void doca_dpa_dev_post_memcpy(doca_dpa_dev_async_ops_t async_ops_handle, doca_dpa_dev_mmap_t dst_mmap_handle, uint64_t dst_addr, doca_dpa_dev_mmap_t src_mmap_handle, uint64_t src_addr, size_t length, uint32_t flags)

This API copies data between two DOCA Mmaps. After the memory copy is complete, the destination address dst_addr in the DOCA Mmap specified by dst_mmap_handle contains the data of the source region specified by src_mmap_handle, src_addr, and length. This is a non-blocking routine.

Info: For better performance, use this API for memcpy instead of the doca_buf memcpy API.

To post a wait greater operation on a DOCA Sync Event:

void doca_dpa_dev_sync_event_post_wait_gt(doca_dpa_dev_async_ops_t async_ops_handle, doca_dpa_dev_sync_event_t wait_se_handle, uint64_t value)

This function posts a wait operation on the DOCA Sync Event using DPA async ops to obtain DPA thread activation. The attached thread is activated when the value of the DOCA Sync Event is greater than the given value. This is a non-blocking routine.

Note: Valid values must be in the range [0, 254], and this API can be called only for an event whose value is in the range [0, 254]. Invalid values lead to anomalous behavior.

To post a wait not equal operation on a DOCA Sync Event:

void doca_dpa_dev_sync_event_post_wait_ne(doca_dpa_dev_async_ops_t async_ops_handle, doca_dpa_dev_sync_event_t wait_se_handle, uint64_t value)

This function posts a wait operation on the DOCA Sync Event using DPA async ops to obtain DPA thread activation. The attached thread is activated when the value of the DOCA Sync Event is not equal to the given value. This is a non-blocking routine.
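The two submit flags are bit values and can be combined. One plausible way to use them when posting a batch, sketched below in plain C with a local copy of the enum values shown above, is to let the context aggregate and defer reports for every operation except the last, and to flush the last one without OPTIMIZE_REPORTS so that its completion also raises the deferred ones. This policy is an assumption for illustration, not a documented requirement, and the helper name is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Local copy of the doca_dpa_dev_submit_flag values shown above (not the DOCA header). */
enum {
	SUBMIT_FLAG_NONE = 0U,
	SUBMIT_FLAG_FLUSH = (1U << 0),
	SUBMIT_FLAG_OPTIMIZE_REPORTS = (1U << 1),
};

/* Hypothetical helper: flags for operation i of a batch of n operations.
 * All but the last operation may be aggregated and have their completions deferred;
 * the last one is flushed to HW and reports a completion for the whole batch. */
static uint32_t batch_submit_flags(uint32_t i, uint32_t n)
{
	assert(n > 0 && i < n);
	if (i + 1 == n)
		return SUBMIT_FLAG_FLUSH;
	return SUBMIT_FLAG_OPTIMIZE_REPORTS;
}
```

Inside a device loop over i, the returned value would be passed as the flags argument of doca_dpa_dev_post_memcpy() or doca_dpa_dev_post_buf_memcpy(); the loop and its variables are hypothetical.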

Memory Subsystem

Memory APIs supplied by the DOCA DPA SDK are all asynchronous (i.e., non-blocking).

The user can acquire either:

Pre-configured DOCA Buffers (previously configured with doca_buf_arr_set_params).

Non-configured DOCA Buffers, which are then configured using the device setters below.

Device-side API operations:

To obtain a single buffer handle from the buf array handle:

doca_dpa_dev_buf_t doca_dpa_dev_buf_array_get_buf(doca_dpa_dev_buf_arr_t buf_arr, const uint64_t buf_idx)

To set/get the address pointed to by the buffer handle:

void doca_dpa_dev_buf_set_addr(doca_dpa_dev_buf_t buf, uintptr_t addr)
uintptr_t doca_dpa_dev_buf_get_addr(doca_dpa_dev_buf_t buf)

To set/get the length of the buffer:

void doca_dpa_dev_buf_set_len(doca_dpa_dev_buf_t buf, size_t len)
uint64_t doca_dpa_dev_buf_get_len(doca_dpa_dev_buf_t buf)

To set/get the DOCA Mmap associated with the buffer:

void doca_dpa_dev_buf_set_mmap(doca_dpa_dev_buf_t buf, doca_dpa_dev_mmap_t mmap)
doca_dpa_dev_mmap_t doca_dpa_dev_buf_get_mmap(doca_dpa_dev_buf_t buf)

To get a pointer to external memory registered on the host using DOCA Buffer:

doca_dpa_dev_uintptr_t doca_dpa_dev_buf_get_external_ptr(doca_dpa_dev_buf_t buf)

Info: After calling this API, users can read/write the memory indicated by the returned pointer from the DPA kernel.

To get a pointer to external memory registered on the host using an explicit address and DOCA Mmap:

doca_dpa_dev_uintptr_t doca_dpa_dev_mmap_get_external_ptr(doca_dpa_dev_mmap_t mmap_handle, uint64_t addr)

Info: After calling this API, users can read/write the memory indicated by the returned pointer from the DPA kernel.

Sync Events

Sync events fulfill the following roles:

DOCA DPA execution model is asynchronous and sync events are used to control various threads running in the system (allowing order and dependency)

DOCA DPA supports remote sync events, so the programmer is capable of invoking remote nodes by means of DOCA sync events

For host-side APIs, refer to "DOCA Sync Event".

To get the current event value:

doca_dpa_dev_sync_event_get(doca_dpa_dev_sync_event_t event, uint64_t *value)

To add to or set the current event value:

doca_dpa_dev_sync_event_update_<add|set>(doca_dpa_dev_sync_event_t event, uint64_t value)

To wait until event is greater than threshold:

doca_dpa_dev_sync_event_wait_gt(doca_dpa_dev_sync_event_t event, uint64_t value, uint64_t mask)

Use mask to apply bitwise AND on the DOCA Sync Event value for comparison with the wait threshold.
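Read literally, the mask description means the comparison is performed on `value & mask`. A plain-C model of that predicate follows; it is a sketch of the stated semantics with a hypothetical function name, not DOCA code. Passing an all-ones mask, as the host example in this chapter does with 0xFFFFFFFFFFFFFFFF, compares the full value.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Models the condition doca_dpa_dev_sync_event_wait_gt(event, value, mask) waits for,
 * per the description above: the masked event value must exceed the threshold. */
static bool sync_event_wait_gt_satisfied(uint64_t event_value, uint64_t threshold, uint64_t mask)
{
	return (event_value & mask) > threshold;
}
```

Note that "greater than" is strict: to wait until two producers have each added 1 to an event that starts at 0, the threshold is 1, not 2.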

Extended DOCA DPA Context

To work with a DPA resource created on an extended DOCA DPA context, DOCA DPA offers the following device API:

void doca_dpa_dev_device_set(doca_dpa_dev_t dpa_handle)

This function must be called before calling any related device API of a DPA resource (e.g., DPA RDMA) created on an extended DOCA DPA context.

Note:

When creating only a DPA base context without an extended DPA context in this application, there is no need to call this API

When creating DPA resources on both base and extended DPA contexts, the proper DPA device (and DPA context) must be set before calling the relevant DPA resource API

Example (device-side pseudo code):

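/* Hypothetical argument layout, prepared by the host and passed as the thread argument */
struct thread_args {
	doca_dpa_dev_t extended_dpa_ctx_handle; /* handle of the extended DOCA DPA context */
	doca_dpa_dev_rdma_t rdma_dpa_handle;    /* DPA RDMA handle created on the extended context */
};

__dpa_global__ void kernel(uint64_t thread_arg)
{
	struct thread_args *args = (struct thread_args *)thread_arg;

	// parse completion...

	// set extended device before executing RDMA operation
	doca_dpa_dev_device_set(args->extended_dpa_ctx_handle);
	doca_dpa_dev_rdma_post_send(args->rdma_dpa_handle, ...);
	...
}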

Data Structures

Hash Table

This data structure is managed on DPA using the following device APIs:

To add a new entry to the hash table:

void doca_dpa_dev_hash_table_add(doca_dpa_dev_hash_table_t ht_handle, uint32_t key, uint64_t value)

Note: Adding a new key when the hash table is full causes anomalous behavior.

To remove an entry from the hash table:

void doca_dpa_dev_hash_table_remove(doca_dpa_dev_hash_table_t ht_handle, uint32_t key)

To return the value to which the specified key is mapped in the hash table:

int doca_dpa_dev_hash_table_find(doca_dpa_dev_hash_table_t ht_handle, uint32_t key, uint64_t *value)
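Because adding to a full table causes anomalous behavior, a caller may want to track occupancy itself. The tiny fixed-capacity model below illustrates that caller-side guard in plain C. It is not how the DPA hash table is implemented: the capacity, the linear-scan layout, and all names are assumptions, and find is assumed to return 1 (setting *value) when the key exists and 0 otherwise.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define HT_MODEL_CAPACITY 8u /* assumed capacity, for illustration only */

/* Hypothetical stand-in for a DPA hash table: a linear-scan array, not the real layout. */
struct ht_model {
	bool used[HT_MODEL_CAPACITY];
	uint32_t keys[HT_MODEL_CAPACITY];
	uint64_t values[HT_MODEL_CAPACITY];
	uint32_t count;
};

/* Returns 1 and sets *value if key exists, else 0 (assumed find contract). */
static int ht_model_find(const struct ht_model *ht, uint32_t key, uint64_t *value)
{
	for (uint32_t i = 0; i < HT_MODEL_CAPACITY; i++) {
		if (ht->used[i] && ht->keys[i] == key) {
			*value = ht->values[i];
			return 1;
		}
	}
	return 0;
}

/* Guarded add: refuses when full instead of triggering the anomalous behavior noted above. */
static bool ht_model_add(struct ht_model *ht, uint32_t key, uint64_t value)
{
	uint64_t existing;

	assert(!ht_model_find(ht, key, &existing)); /* duplicate keys are not modeled */
	if (ht->count == HT_MODEL_CAPACITY)
		return false;
	for (uint32_t i = 0; i < HT_MODEL_CAPACITY; i++) {
		if (!ht->used[i]) {
			ht->used[i] = true;
			ht->keys[i] = key;
			ht->values[i] = value;
			ht->count++;
			return true;
		}
	}
	return false;
}

static void ht_model_remove(struct ht_model *ht, uint32_t key)
{
	for (uint32_t i = 0; i < HT_MODEL_CAPACITY; i++) {
		if (ht->used[i] && ht->keys[i] == key) {
			ht->used[i] = false;
			ht->count--;
			return;
		}
	}
}
```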

Logging and Tracing

Log to host:

typedef enum doca_dpa_dev_log_level {
	DOCA_DPA_DEV_LOG_LEVEL_DISABLE = 10, /**< Disable log messages */
	DOCA_DPA_DEV_LOG_LEVEL_CRIT = 20,    /**< Critical log level */
	DOCA_DPA_DEV_LOG_LEVEL_ERROR = 30,   /**< Error log level */
	DOCA_DPA_DEV_LOG_LEVEL_WARNING = 40, /**< Warning log level */
	DOCA_DPA_DEV_LOG_LEVEL_INFO = 50,    /**< Info log level */
	DOCA_DPA_DEV_LOG_LEVEL_DEBUG = 60,   /**< Debug log level */
} doca_dpa_dev_log_level_t;

void doca_dpa_dev_log(doca_dpa_dev_log_level_t log_level, const char *format, ...)

Log macros:

DOCA_DPA_DEV_LOG_CRIT(...)
DOCA_DPA_DEV_LOG_ERR(...)
DOCA_DPA_DEV_LOG_WARN(...)
DOCA_DPA_DEV_LOG_INFO(...)
DOCA_DPA_DEV_LOG_DBG(...)

To create a trace message entry with arguments:

void doca_dpa_dev_trace(uint64_t arg1, uint64_t arg2, uint64_t arg3, uint64_t arg4, uint64_t arg5)

To flush the trace message buffer to host:

voiddoca_dpa_dev_trace_flush(void)
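The numeric log levels listed above are ordered from least to most verbose. Assuming the usual DOCA convention that a message is emitted when its level is less than or equal to the configured verbosity (set on the host with doca_dpa_set_log_level()), and that nothing is emitted at DISABLE, the filtering decision can be modeled in plain C. The enum is a local copy of the listed values and the function is hypothetical; it does not describe the library's internal implementation.

```c
#include <assert.h>
#include <stdbool.h>

/* Local copy of the doca_dpa_dev_log_level_t values listed above (not the DOCA header). */
enum model_log_level {
	MODEL_LOG_DISABLE = 10,
	MODEL_LOG_CRIT = 20,
	MODEL_LOG_ERROR = 30,
	MODEL_LOG_WARNING = 40,
	MODEL_LOG_INFO = 50,
	MODEL_LOG_DEBUG = 60,
};

/* Assumed rule: emit a message if it is at least as severe as the configured level. */
static bool log_should_emit(enum model_log_level configured, enum model_log_level msg)
{
	if (configured == MODEL_LOG_DISABLE)
		return false;
	return msg <= configured;
}
```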

The provided example code demonstrates the basic functionality and flow of the DPA but is not intended to be executed as-is due to missing initializations. For complete and runnable DPA examples, refer to the full samples in the Samples section.

Procedure Outline

1. Write DPA device code (i.e., kernels or .c files).

2. Use DPACC to build a DPA program (i.e., a host library which contains an embedded device executable). Input for DPACC:

Kernels from step 1.

DOCA DPA device library.

3. Build the host executable using a host compiler. Input for the host compiler:

DPA program.

User host application .c/.cpp files.

4. Run the host executable.

Procedure Steps

The following code snippets show a basic DPA code that eventually prints "Hello World" to stdout.

This is achieved using:

A DPA Thread which prints the string and signals a DOCA Sync Event to indicate completion to host application

A DPA RPC to notify DPA Thread

The steps are elaborated in the following subsections.

Prepare Kernels Code

#include "doca_dpa_dev.h"

#include "doca_dpa_dev_sync_event.h"

__dpa_global__ void hello_world_thread_kernel(uint64_t arg)

{

DOCA_DPA_DEV_LOG_INFO("Hello World From DPA Thread!\n");

doca_dpa_dev_sync_event_update_set(arg, 1);

doca_dpa_dev_thread_finish();

}

__dpa_rpc__ uint64_t hello_world_thread_notify_rpc(doca_dpa_dev_notification_completion_t comp_handle)

{

DOCA_DPA_DEV_LOG_INFO("Notifying DPA Thread From RPC\n");

doca_dpa_dev_thread_notify(comp_handle);

return 0;

}

Prepare Host Application Code

#include <stdio.h>

#include <unistd.h>

#include <doca_dev.h>

#include <doca_error.h>

#include <doca_sync_event.h>

#include <doca_dpa.h>

/**

* A struct that includes all needed info on registered kernels and is initialized during linkage by DPACC.

* Variable name should be the token passed to DPACC with --app-name parameter.

*/

extern struct doca_dpa_app *dpa_hello_world_app;

/**

* Kernel declaration that the user must provide for each kernel; DPACC is responsible for initializing it.

* Only then can the user pass this variable to the relevant host APIs.

*/

doca_dpa_func_t hello_world_thread_kernel;

doca_dpa_func_t hello_world_thread_notify_rpc;

int main(int argc, char **argv)

{

struct doca_dev *doca_dev = NULL;

struct doca_dpa *dpa_ctx = NULL;

struct doca_sync_event *cpu_se = NULL;

doca_dpa_dev_sync_event_t cpu_se_handle = 0;

struct doca_dpa_thread *dpa_thread = NULL;

struct doca_dpa_notification_completion *notify_comp = NULL;

doca_dpa_dev_notification_completion_t notify_comp_handle = 0;

uint64_t retval = 0;

printf("\n----> Open DOCA Device\n");

/* Open appropriate DOCA device doca_dev */

printf("\n----> Initialize DOCA DPA Context\n");

doca_dpa_create(doca_dev, &dpa_ctx);

doca_dpa_set_app(dpa_ctx, dpa_hello_world_app);

doca_dpa_start(dpa_ctx);

printf("\n----> Initialize DOCA Sync Event\n");

doca_sync_event_create(&cpu_se);

doca_sync_event_add_publisher_location_dpa(cpu_se, dpa_ctx);

doca_sync_event_add_subscriber_location_cpu(cpu_se, doca_dev);

doca_sync_event_start(cpu_se);

doca_sync_event_get_dpa_handle(cpu_se, dpa_ctx, &cpu_se_handle);

printf("\n----> Initialize DOCA DPA Thread\n");

doca_dpa_thread_create(dpa_ctx, &dpa_thread);

doca_dpa_thread_set_func_arg(dpa_thread, &hello_world_thread_kernel, cpu_se_handle);

doca_dpa_thread_start(dpa_thread);

printf("\n----> Initialize DOCA DPA Notification Completion\n");

doca_dpa_notification_completion_create(dpa_ctx, dpa_thread, &notify_comp);

doca_dpa_notification_completion_start(notify_comp);

doca_dpa_notification_completion_get_dpa_handle(notify_comp, &notify_comp_handle);

printf("\n----> Run DOCA DPA Thread\n");

doca_dpa_thread_run(dpa_thread);

printf("\n----> Trigger DPA RPC\n");

doca_dpa_rpc(dpa_ctx, &hello_world_thread_notify_rpc, &retval, notify_comp_handle);

printf("\n----> Waiting For hello_world_thread_kernel To Finish\n");

doca_sync_event_wait_gt(cpu_se, 0, 0xFFFFFFFFFFFFFFFF);

printf("\n----> Destroy DOCA DPA Notification Completion\n");

doca_dpa_notification_completion_destroy(notify_comp);

printf("\n----> Destroy DOCA DPA Thread\n");

doca_dpa_thread_destroy(dpa_thread);

printf("\n----> Destroy DOCA DPA event\n");

doca_sync_event_destroy(cpu_se);

printf("\n----> Destroy DOCA DPA context\n");

doca_dpa_destroy(dpa_ctx);

printf("\n----> Destroy DOCA device\n");

doca_dev_close(doca_dev);

printf("\n----> DONE!\n");

return 0;

}

Build DPA Program

/opt/mellanox/doca/tools/dpacc \

kernel.c \

-o dpa_program.a \

-hostcc=gcc \

-hostcc-options="-Wno-deprecated-declarations -Werror -Wall -Wextra -W" \

--devicecc-options="-D__linux__ -Wno-deprecated-declarations -Werror -Wall -Wextra -W" \

--app-name="dpa_hello_world_app" \

--mcpu=nv-dpa-bf3 \

--device-libs="-L/opt/mellanox/doca/lib/x86_64-linux-gnu/ -ldoca_dpa_dev" \

-I/opt/mellanox/doca/include/

Build Host Application

gcc hello_world.c -o hello_world \

dpa_program.a \

-I/opt/mellanox/doca/include/ \

-DDOCA_ALLOW_EXPERIMENTAL_API \

-L/opt/mellanox/doca/lib/x86_64-linux-gnu/ -ldoca_dpa -ldoca_common \

-L/opt/mellanox/flexio/lib/ -lflexio \

-lstdc++ -libverbs -lmlx5

Execution

$ ./hello_world

----> Open DOCA Device

----> Initialize DOCA DPA Context

----> Initialize DOCA Sync Event

----> Initialize DOCA DPA Thread

----> Initialize DOCA DPA Notification Completion

----> Run DOCA DPA Thread

----> Trigger DPA RPC

/ 10/[DOCA][DPA DEVICE][INF] Notifying DPA Thread From RPC

/ 8/[DOCA][DPA DEVICE][INF] Hello World From DPA Thread!

----> Waiting For hello_world_thread_kernel To Finish

----> Destroy DOCA DPA Notification Completion

----> Destroy DOCA DPA Thread

----> Destroy DOCA DPA event

----> Destroy DOCA DPA context

----> Destroy DOCA device

----> DONE!

This section describes sample DPA implementations on the BlueField-3 networking platform.

All DOCA samples in this section are provided under the BSD-3 software license and are also available on GitHub.

DPA samples can be executed from either the host or the DPU.

When running from the DPU and using RDMA utilities, the RDMA DOCA device (SF device) must be provided as a parameter. If not specified, a random RDMA device is selected.

In this case:

The DOCA DPA context is created on the PF device.

An extended DPA context is created on the SF device.

The DOCA RDMA context is created on the SF device.

All DPA resources (e.g., DPA threads, DPA completion contexts) associated with the SF RDMA instance must be created using the extended DPA context.

To run DPA samples:

Refer to the following documents:

DOCA Installation Guide for Linux for details on how to install BlueField-related software.

NVIDIA BlueField Platform Software Troubleshooting Guide for any issue you may encounter with the installation, compilation, or execution of DOCA samples.

To build a given sample, run the following command. If you downloaded the sample from GitHub, update the path in the first line to reflect the location of the sample file:

cd /opt/mellanox/doca/samples/doca_dpa/<sample_name>
meson /tmp/build
ninja -C /tmp/build

Info: The binary doca_<sample_name> is created under /tmp/build/.

Sample (e.g., dpa_initiator_target) usage:

Usage: doca_dpa_initiator_target [DOCA Flags] [Program Flags]

DOCA Flags:
  -h, --help                                        Print a help synopsis
  -v, --version                                     Print program version information
  -l, --log-level                                   Set the (numeric) log level for the program <10=DISABLE,20=CRITICAL,30=ERROR,40=WARNING,50=INFO,60=DEBUG,70=TRACE>
  --sdk-log-level                                   Set the SDK (numeric) log level for the program <10=DISABLE,20=CRITICAL,30=ERROR,40=WARNING,50=INFO,60=DEBUG,70=TRACE>
  -j, --json <path>                                 Parse all command flags from an input json file

Program Flags:
  -pf_dev, --pf-device <PF DOCA device name>        PF device name that supports DPA (optional). If not provided then a random device will be chosen
  -rdma_dev, --rdma-device <RDMA DOCA device name>  device name that supports RDMA (optional). If not provided then a random device will be chosen
  --dpa-resources <DPA resources file>              Path to a DPA resources .yaml file
  --dpa-app-key <DPA application key>               Application key in specified DPA resources .yaml file
  -g, --gid-index                                   GID index for DOCA RDMA (optional)

For additional information per sample, use the -h option:

/tmp/build/doca_<sample_name> -h

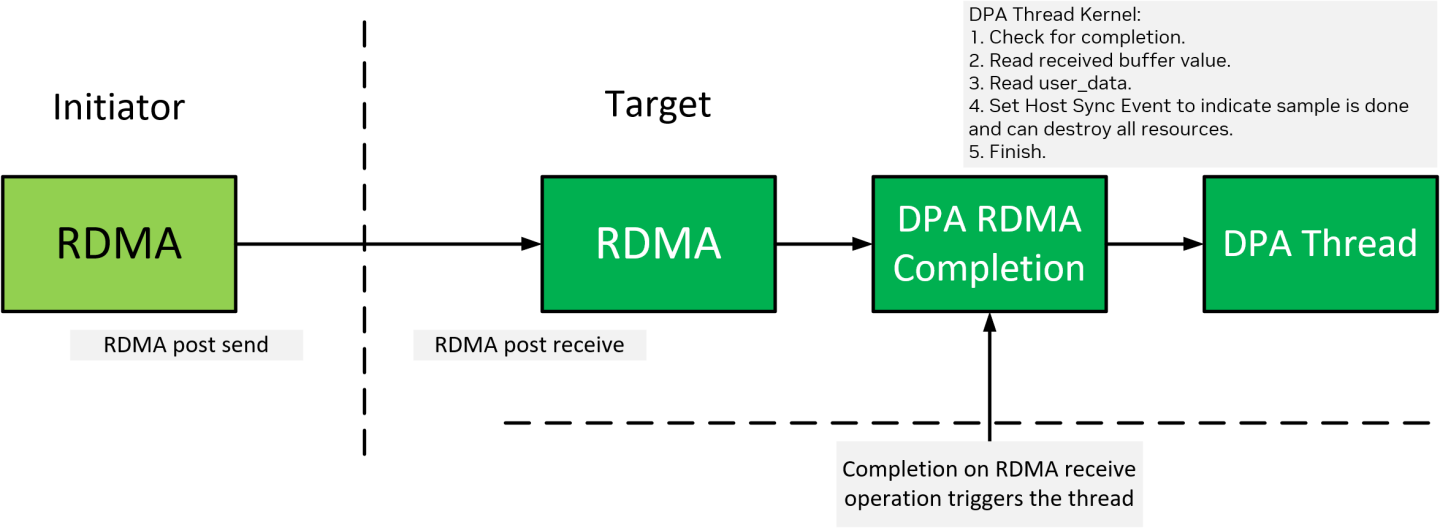

Basic Initiator Target

This sample illustrates how to trigger a DPA thread using a DPA Completion Context attached to a DOCA RDMA context.

This sample consists of initiator and target endpoints.

In the initiator endpoint, a DOCA RDMA context executes an RDMA post send operation using DPA RPC.

In the target endpoint, a DOCA RDMA context, attached to a DPA Completion Context, executes an RDMA post receive operation using DPA RPC.

Completion of the post receive operation triggers a DPA thread, which prints completion info and sets a DOCA Sync Event to release the host application, which waits on that event before destroying all resources and finishing.

The sample logic includes:

Allocating DOCA DPA and DOCA RDMA resources for both initiator and target endpoints.

Target – Attaching DOCA RDMA to DPA Completion Context which is attached to DPA Thread.

Running DPA thread.

Target – DPA RPC to execute RDMA post receive operation.

Initiator – DPA RPC to execute RDMA post send operation.

The completion on the post receive operation triggers DPA Thread.

Waiting on completion event to be set from DPA Thread.

Destroying all resources.

Reference:

/opt/mellanox/doca/samples/doca_dpa/dpa_basic_initiator_target/dpa_basic_initiator_target_main.c
/opt/mellanox/doca/samples/doca_dpa/dpa_basic_initiator_target/host/dpa_basic_initiator_target_sample.c
/opt/mellanox/doca/samples/doca_dpa/dpa_basic_initiator_target/device/dpa_basic_initiator_target_kernels_dev.c
/opt/mellanox/doca/samples/doca_dpa/dpa_basic_initiator_target/meson.build
/opt/mellanox/doca/samples/doca_dpa/dpa_common.h
/opt/mellanox/doca/samples/doca_dpa/dpa_common.c
/opt/mellanox/doca/samples/doca_dpa/build_dpacc_samples.sh

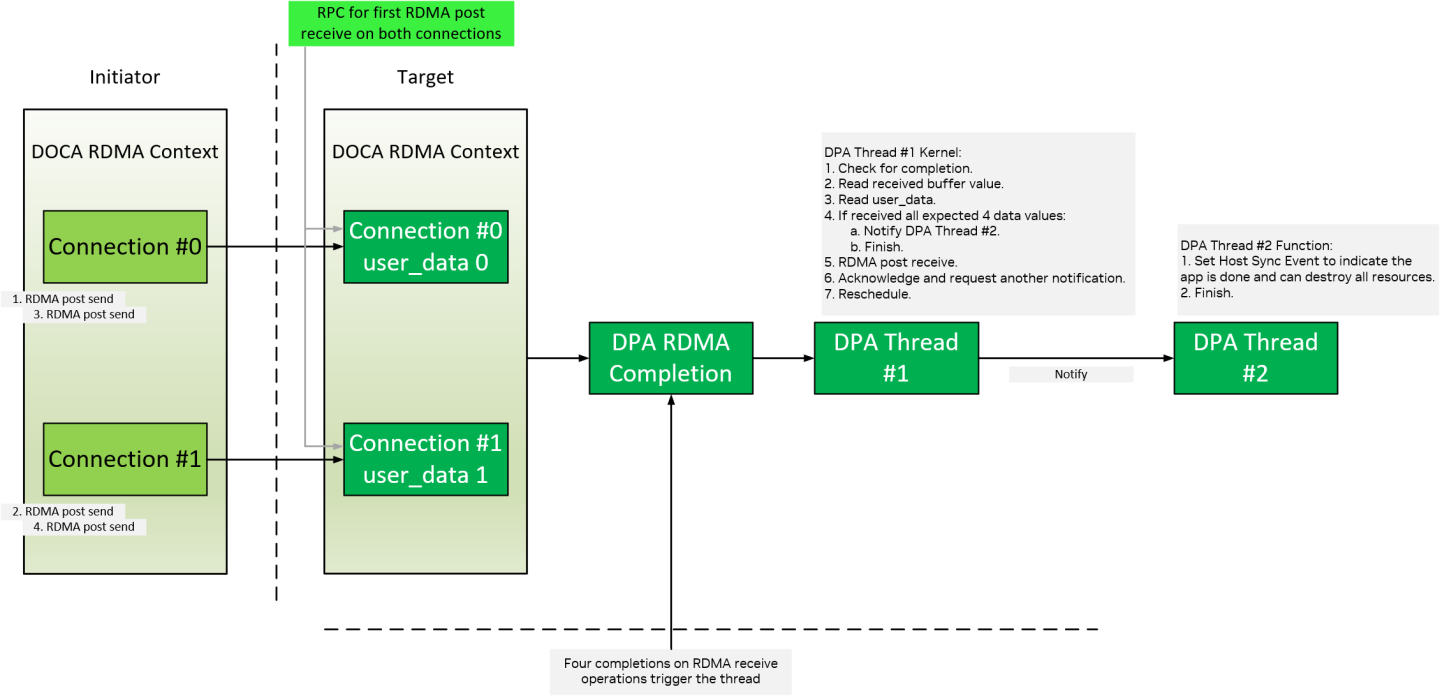

Advanced Initiator Target

This sample illustrates how to trigger DPA threads using both DPA Notification Completion and DPA Completion Context which is attached to a DOCA RDMA context with 2 connections.

This sample consists of initiator and target endpoints.

In the initiator endpoint, two DOCA RDMA connections execute an RDMA post send operation using DPA RPC in the following order:

Connection #0 executes the RDMA post send operation on buffer with value 1.

Connection #1 executes the RDMA post send operation on buffer with value 2.

Connection #0 executes the RDMA post send operation on buffer with value 3.

Connection #1 executes the RDMA post send operation on buffer with value 4.

In the target endpoint, DOCA RDMA context with two connections is attached to a single DPA Completion Context which is attached to DPA Thread #1.

The target RDMA context executes the initial RDMA post receive operation using DPA RPC.

Completions on the post receive operations trigger DPA Thread #1 which:

Prints completion info, including user data.

Updates a local database with the receive buffer value.

Reposts the RDMA receive operation.

Acknowledges the completion, requests notification, and reschedules.

Once target DPA Thread #1 receives all expected values "1, 2, 3, 4", it notifies DPA Thread #2 and finishes.

Once DPA Thread #2 is triggered, it sets DOCA Sync Event to release the host application that waits on that event before destroying all resources and finishing.

The sample logic includes:

Allocating DOCA DPA and DOCA RDMA resources for both initiator and target endpoints.

Target: Attaching DOCA RDMA to DPA Completion Context which is attached to DPA Thread #1.

Target: Attaching DPA Notification Completion to DPA Thread #2.

Running DPA threads.

Target: DPA RPC to execute the initial RDMA post receive operation.

Initiator: DPA RPC to execute all RDMA post send operations.

Completions on the post receive operations (4 completions) trigger DPA Thread #1.

Once all expected values are received, DPA Thread #1 notifies DPA Thread #2 and finishes.

Waiting for the completion event to be set by DPA Thread #2.

Destroying all resources.

Reference:

/opt/mellanox/doca/samples/doca_dpa/dpa_initiator_target/dpa_initiator_target_main.c
/opt/mellanox/doca/samples/doca_dpa/dpa_initiator_target/host/dpa_initiator_target_sample.c
/opt/mellanox/doca/samples/doca_dpa/dpa_initiator_target/device/dpa_initiator_target_kernels_dev.c
/opt/mellanox/doca/samples/doca_dpa/dpa_initiator_target/meson.build
/opt/mellanox/doca/samples/doca_dpa/dpa_common.h
/opt/mellanox/doca/samples/doca_dpa/dpa_common.c
/opt/mellanox/doca/samples/doca_dpa/build_dpacc_samples.sh

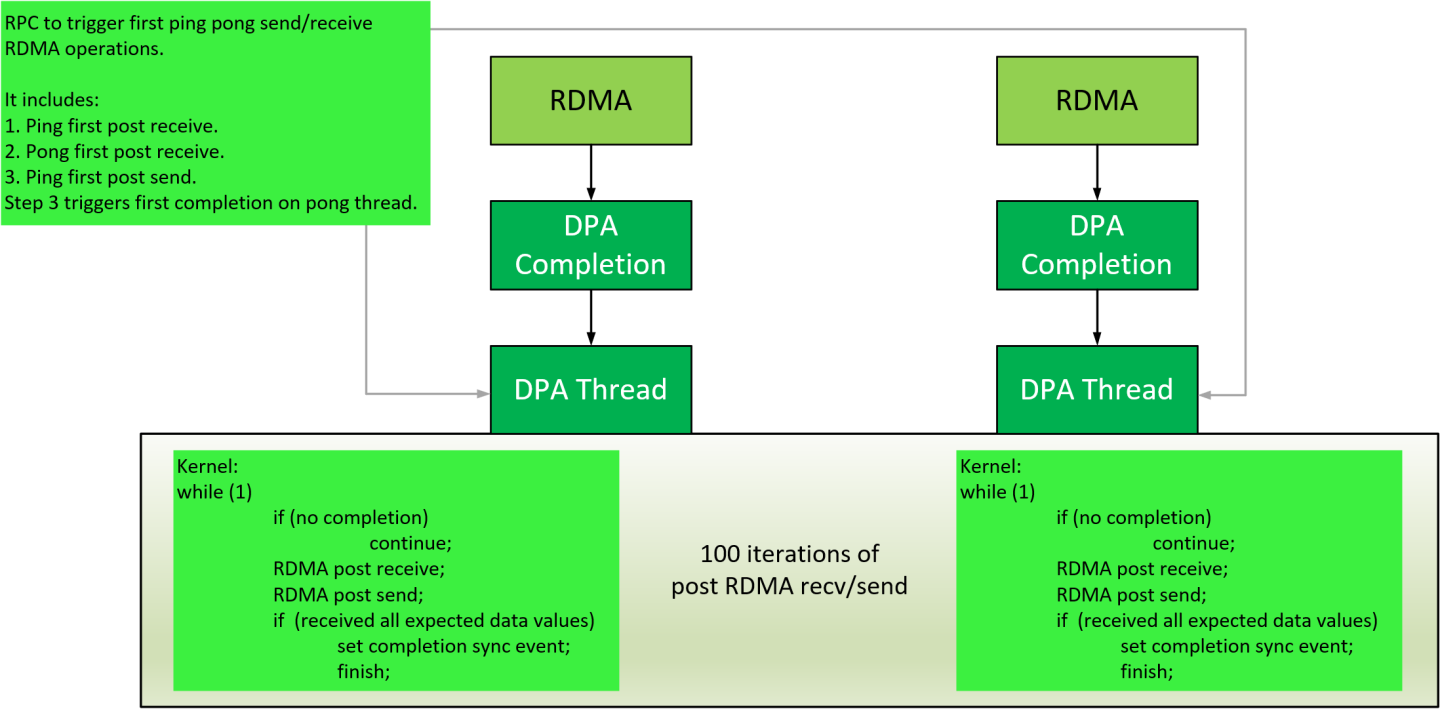

Ping Pong

This sample illustrates the functionality of the following DPA objects:

DPA Thread

DPA Completion Context

DOCA RDMA

This sample consists of ping and pong endpoints that run for 100 iterations. On each iteration, the DPA threads (ping and pong) post RDMA receive and send operations for data buffers with values [0-99].

Once a DPA thread has received all expected values, it sets a DOCA Sync Event and finishes. The host application waits on that event before destroying all resources.

To trigger DPA threads, the sample uses a DPA RPC.

The sample logic includes:

Allocating DOCA DPA and DOCA RDMA resources.

Attaching DOCA RDMA to a DPA Completion Context, which is attached to a DPA thread.

Running DPA threads.

Using DPA RPC to trigger DPA threads.

100 ping pong iterations of RDMA post receive and send operations.

Waiting for the completion events to be set by the DPA threads.

Destroying all resources.
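The receive-side bookkeeping of the ping pong exchange can be sketched as a single-threaded C model. It is an assumption-laden illustration, not the sample's code: the names endpoint, endpoint_receive, and run_ping_pong are hypothetical, no DOCA call is made, and the ordering (ping sends value i, pong echoes it back) is assumed because the description does not say which side sends first. Each endpoint checks that values arrive in order and "sets its event" once all of 0 to 99 have been received.

```c
#include <stdbool.h>
#include <stdint.h>

#define NUM_ITERATIONS 100u /* values 0..99 */

/* Hypothetical per-thread state. */
struct endpoint {
	uint32_t expected; /* next value this endpoint expects to receive */
	bool event_set;    /* stands in for the DOCA Sync Event */
};

/* Handle one received value; returns false on an out-of-order value. */
static bool endpoint_receive(struct endpoint *ep, uint32_t value)
{
	if (value != ep->expected)
		return false;
	ep->expected++;
	if (ep->expected == NUM_ITERATIONS)
		ep->event_set = true; /* all of 0..99 received: release the host */
	return true;
}

/* Run all iterations: ping -> pong, then pong -> ping, for each value. */
static bool run_ping_pong(struct endpoint *ping, struct endpoint *pong)
{
	for (uint32_t i = 0; i < NUM_ITERATIONS; i++) {
		if (!endpoint_receive(pong, i)) /* pong receives ping's send */
			return false;
		if (!endpoint_receive(ping, i)) /* ping receives pong's reply */
			return false;
	}
	return ping->event_set && pong->event_set;
}
```

Checking the expected value on every receive is what lets each side decide, on its own, that the exchange is complete before signaling the host.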

Reference:

/opt/mellanox/doca/samples/doca_dpa/dpa_ping_pong/dpa_ping_pong_main.c
/opt/mellanox/doca/samples/doca_dpa/dpa_ping_pong/host/dpa_ping_pong_sample.c
/opt/mellanox/doca/samples/doca_dpa/dpa_ping_pong/device/dpa_ping_pong_kernels_dev.c
/opt/mellanox/doca/samples/doca_dpa/dpa_ping_pong/meson.build
/opt/mellanox/doca/samples/doca_dpa/dpa_common.h
/opt/mellanox/doca/samples/doca_dpa/dpa_common.c
/opt/mellanox/doca/samples/doca_dpa/build_dpacc_samples.sh

Kernel Launch

This sample illustrates how to launch a DOCA DPA kernel with wait and completion DOCA sync events.

The sample logic includes:

Allocating DOCA DPA resources.

Initializing wait and completion DOCA sync events for the DOCA DPA kernel.

Running the hello_world DOCA DPA kernel, which waits on the wait event.

Running a separate thread that triggers the wait event.

The hello_world DOCA DPA kernel prints "Hello from kernel".

Waiting for the completion event of the kernel.

Destroying the events and resources.
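The wait/completion event handshake can be illustrated on the host with POSIX threads. This is a minimal sketch under stated assumptions: sim_event_* and hello_world_kernel are hypothetical stand-ins (a mutex, a condition variable, and a 64-bit value), not DOCA sync event or DPA kernel launch APIs, and the "wait until the value reaches a threshold" semantics is assumed for illustration. The ordering matches the steps above: the kernel blocks on the wait event, a separate thread triggers it, the kernel prints, and the host waits for the completion event.

```c
#include <pthread.h>
#include <stdint.h>
#include <stdio.h>

/* Hypothetical host-side stand-in for a sync event. */
struct sim_event {
	pthread_mutex_t lock;
	pthread_cond_t cond;
	uint64_t value;
};

static void sim_event_init(struct sim_event *ev)
{
	pthread_mutex_init(&ev->lock, NULL);
	pthread_cond_init(&ev->cond, NULL);
	ev->value = 0;
}

static void sim_event_destroy(struct sim_event *ev)
{
	pthread_cond_destroy(&ev->cond);
	pthread_mutex_destroy(&ev->lock);
}

static void sim_event_set(struct sim_event *ev, uint64_t value)
{
	pthread_mutex_lock(&ev->lock);
	ev->value = value;
	pthread_cond_broadcast(&ev->cond); /* wake every waiter */
	pthread_mutex_unlock(&ev->lock);
}

/* Block until the event value is at least threshold. */
static void sim_event_wait_ge(struct sim_event *ev, uint64_t threshold)
{
	pthread_mutex_lock(&ev->lock);
	while (ev->value < threshold)
		pthread_cond_wait(&ev->cond, &ev->lock);
	pthread_mutex_unlock(&ev->lock);
}

struct kernel_args {
	struct sim_event *wait_ev;
	struct sim_event *comp_ev;
	char *out;
	size_t out_len;
};

/* Stands in for the hello_world kernel. */
static void *hello_world_kernel(void *arg)
{
	struct kernel_args *a = arg;

	sim_event_wait_ge(a->wait_ev, 1); /* wait until the wait event is triggered */
	snprintf(a->out, a->out_len, "Hello from kernel");
	puts(a->out);
	sim_event_set(a->comp_ev, 1);     /* signal completion to the host */
	return NULL;
}

static void *trigger_thread(void *arg)
{
	sim_event_set(arg, 1); /* trigger the kernel's wait event */
	return NULL;
}

/* Returns 0 on success, -1 if the kernel thread could not be started. */
static int run_kernel_launch_model(char *out, size_t out_len)
{
	struct sim_event wait_ev, comp_ev;
	struct kernel_args args = {&wait_ev, &comp_ev, out, out_len};
	pthread_t kernel, trigger;
	int have_trigger;

	sim_event_init(&wait_ev);
	sim_event_init(&comp_ev);
	if (pthread_create(&kernel, NULL, hello_world_kernel, &args) != 0) {
		sim_event_destroy(&wait_ev);
		sim_event_destroy(&comp_ev);
		return -1;
	}
	have_trigger = pthread_create(&trigger, NULL, trigger_thread, &wait_ev) == 0;
	if (!have_trigger)
		sim_event_set(&wait_ev, 1); /* fall back to triggering from this thread */

	sim_event_wait_ge(&comp_ev, 1); /* host waits for the kernel's completion event */
	if (have_trigger)
		pthread_join(trigger, NULL);
	pthread_join(kernel, NULL);
	sim_event_destroy(&wait_ev);
	sim_event_destroy(&comp_ev);
	return 0;
}
```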

Reference:

/opt/mellanox/doca/samples/doca_dpa/dpa_wait_kernel_launch/dpa_wait_kernel_launch_main.c
/opt/mellanox/doca/samples/doca_dpa/dpa_wait_kernel_launch/host/dpa_wait_kernel_launch_sample.c
/opt/mellanox/doca/samples/doca_dpa/dpa_wait_kernel_launch/device/dpa_wait_kernel_launch_kernels_dev.c
/opt/mellanox/doca/samples/doca_dpa/dpa_wait_kernel_launch/meson.build
/opt/mellanox/doca/samples/doca_dpa/dpa_common.h
/opt/mellanox/doca/samples/doca_dpa/dpa_common.c
/opt/mellanox/doca/samples/doca_dpa/build_dpacc_samples.sh

NVQual

This sample implements a stress test for DOCA DPA that runs multiple DPA threads simultaneously on all available EUs. The kernels copy multiple buffers to the DPA heap and measure the total DPA run time.

The sample logic includes:

Allocating DOCA DPA resources, including:

Initializing DOCA sync event to synchronize host and DPA between iterations.

Initializing and configuring DPA threads for each available EU.

Initializing a notification completion for each thread to schedule the thread on an available EU in every iteration.

Running the following loop, while sufficient time remains:

Notify DPA threads using DPA RPC.

Threads copy buffers to and from the DPA heap.

Wait for the completion events of all threads.

Calculate the average latency of copying a single byte to/from the DPA heap.

Printing a summary of the sample data.

Destroying all resources.
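The "average latency of copying a single byte" step can be expressed as total run time divided by total bytes copied. This is a sketch under an assumption: the description does not give the sample's exact formula, and the iteration_stats structure and avg_latency_per_byte_ns function are hypothetical. The sketch sums time and bytes over all iterations before dividing (a ratio of sums), so iterations that moved more data weigh proportionally more than they would in a mean of per-iteration ratios.

```c
#include <stdint.h>

/* Hypothetical per-iteration record; field names are illustrative. */
struct iteration_stats {
	uint64_t run_time_ns;  /* total DPA run time measured for the iteration */
	uint64_t bytes_copied; /* bytes copied to and from the DPA heap by all threads */
};

/* Average latency, in nanoseconds, of copying a single byte across all
 * iterations. Returns 0.0 if nothing was copied. */
static double avg_latency_per_byte_ns(const struct iteration_stats *stats, unsigned int num_iterations)
{
	uint64_t total_ns = 0;
	uint64_t total_bytes = 0;

	for (unsigned int i = 0; i < num_iterations; i++) {
		total_ns += stats[i].run_time_ns;
		total_bytes += stats[i].bytes_copied;
	}
	return total_bytes ? (double)total_ns / (double)total_bytes : 0.0;
}
```

For example, two iterations of 1000 ns for 500 bytes and 3000 ns for 1500 bytes give 4000 ns / 2000 bytes = 2.0 ns per byte.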

Sample usage:

Usage: dpa_nvqual [DOCA Flags] [Program Flags]

DOCA Flags:

-h, --help Print a help synopsis

-v, --version Print program version information

-l, --log-level Set the (numeric) log level for the program <10=DISABLE, 20=CRITICAL, 30=ERROR, 40=WARNING, 50=INFO, 60=DEBUG, 70=TRACE>

--sdk-log-level Set the SDK (numeric) log level for the program <10=DISABLE, 20=CRITICAL, 30=ERROR, 40=WARNING, 50=INFO, 60=DEBUG, 70=TRACE>

-j, --json <path> Parse all command flags from an input json file

Program Flags:

-pf_dev, --pf-device <PF DOCA device name> PF device name that supports DPA (mandatory).

-test_dur, --test-duration-sec <test duration sec> Test duration in seconds (mandatory).

-user_fac, --user-factor [user factor] User factor of type float (optional). If not provided, 0.75f is used.

-ex_eu, --excluded-eus [excluded eus] List of excluded EUs, separated by ',' with no spaces (optional). If not provided, no EU is excluded.
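The --excluded-eus value is a comma-separated list with no spaces. A strict parser for that format can be sketched as follows; parse_excluded_eus is a hypothetical helper, not the sample's own argument handler, and the valid EU ID range is device-specific and not checked here. Spaces, empty entries, trailing commas, and lists longer than the output array are rejected, and an empty string means no EU is excluded.

```c
#include <ctype.h>
#include <limits.h>
#include <stddef.h>

/* Parse a list such as "3,7,12" into eus[].
 * Returns the number of IDs stored, or -1 if the string is malformed
 * or holds more than max_eus entries. */
static int parse_excluded_eus(const char *list, unsigned int *eus, size_t max_eus)
{
	const char *p = list;
	size_t count = 0;

	if (*p == '\0')
		return 0; /* nothing excluded */

	for (;;) {
		unsigned long long id = 0;

		if (!isdigit((unsigned char)*p))
			return -1; /* empty entry, space, or other junk */
		while (isdigit((unsigned char)*p)) {
			id = id * 10 + (unsigned long long)(*p - '0');
			if (id > UINT_MAX)
				return -1; /* does not fit an unsigned int */
			p++;
		}
		if (count == max_eus)
			return -1; /* too many entries */
		eus[count++] = (unsigned int)id;

		if (*p == '\0')
			break;
		if (*p != ',')
			return -1; /* only ',' may separate entries */
		p++;
	}
	return (int)count;
}
```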

Reference:

/opt/mellanox/doca/samples/doca_dpa/dpa_nvqual/dpa_nvqual_main.c
/opt/mellanox/doca/samples/doca_dpa/dpa_nvqual/host/dpa_nvqual_sample.c
/opt/mellanox/doca/samples/doca_dpa/dpa_nvqual/device/dpa_nvqual_kernels_dev.c
/opt/mellanox/doca/samples/doca_dpa/dpa_nvqual/meson.build
/opt/mellanox/doca/samples/doca_dpa/dpa_common.h
/opt/mellanox/doca/samples/doca_dpa/dpa_common.c
/opt/mellanox/doca/samples/doca_dpa/build_dpacc_samples.sh

Verbs Initiator Target

This sample illustrates how to perform RDMA operations using the DOCA Verbs API.

Sample logic:

Allocating DOCA DPA resources:

Initializing and configuring DPA threads and kernels.

Initializing a buffer on the DPA heap for Verbs operations and attaching an mmap to it.

Initializing a DPA completion context to replace the CQ.

Initializing a DOCA sync event to synchronize the host and DPA when the data path is finished.

Allocating Verbs resources:

Verbs context

Verbs QP attached to the DPA completion context

Verbs PD

Verbs AH

Establishing a connection between the initiator and target using a TCP socket.

Exchanging RDMA parameters:

Local buffer addresses

MKEYs

QP numbers

GID addresses

Configuring QP attributes and modifying the QP state from Reset to Ready to Send (RTS).

Data path:

Initiator:

Waits for completion

Polls the local buffer value to sync with the target's Write operation

Increments the buffer value

Posts a Send work request

Acknowledges completion

Target:

Waits for completions

Posts a Receive work request

Increments the buffer value

Posts a Write work request

Acknowledges 2 completions

Verifying the final buffer value (initiator).

Updating the sync event to the threshold value (both initiator and target).

Printing a summary of the sample data.

Destroying all resources.
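Moving a QP "from Reset to Ready to Send" passes through two intermediate states. In the InfiniBand QP state machine (libibverbs names the states IBV_QPS_RESET, IBV_QPS_INIT, IBV_QPS_RTR, and IBV_QPS_RTS), a connected QP goes Reset, then Init, then Ready to Receive (RTR), then RTS, one modify call per step. The sketch below models only that ordering; the qp_state enum and both functions are hypothetical and call neither DOCA Verbs nor libibverbs. The comment notes where the exchanged parameters (QP numbers, GIDs) are consumed.

```c
#include <stdbool.h>

/* Hypothetical states mirroring the InfiniBand QP state machine. */
enum qp_state { QP_STATE_RESET, QP_STATE_INIT, QP_STATE_RTR, QP_STATE_RTS };

/* Only the forward bring-up transitions are modeled here; error and
 * reset transitions that real QPs also support are omitted. */
static bool qp_transition_allowed(enum qp_state from, enum qp_state to)
{
	return (from == QP_STATE_RESET && to == QP_STATE_INIT) ||
	       (from == QP_STATE_INIT && to == QP_STATE_RTR) ||
	       (from == QP_STATE_RTR && to == QP_STATE_RTS);
}

/* Drive a QP from RESET to RTS, recording each state visited in path[].
 * Returns the number of states recorded (4 on success) or -1. */
static int qp_bring_up(enum qp_state path[4])
{
	enum qp_state cur = QP_STATE_RESET;
	int n = 0;

	path[n++] = cur;
	while (cur != QP_STATE_RTS) {
		enum qp_state next = (enum qp_state)(cur + 1);

		if (!qp_transition_allowed(cur, next))
			return -1;
		/* In real code each step is a modify-QP call with its own attributes:
		 * INIT: port and access flags;
		 * RTR:  remote QP number, remote GID/address handle, path MTU, receive PSN;
		 * RTS:  timeout, retry counts, send PSN. */
		cur = next;
		path[n++] = cur;
	}
	return n;
}
```

The RTR step is the one that needs the peer's QP number and GID, which is why those values are exchanged over the TCP socket before the QP is modified.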

Sample usage:

Usage: dpa_verbs_initiator_target [DOCA Flags] [Program Flags]

DOCA Flags:

-h, --help Print a help synopsis

-v, --version Print program version information

-l, --log-level Set the (numeric) log level for the program <10=DISABLE, 20=CRITICAL, 30=ERROR, 40=WARNING, 50=INFO, 60=DEBUG, 70=TRACE>

--sdk-log-level Set the SDK (numeric) log level for the program <10=DISABLE, 20=CRITICAL, 30=ERROR, 40=WARNING, 50=INFO, 60=DEBUG, 70=TRACE>

Program Flags:

-i, --initiator <Sample is initiator, requires target OOB IP> Sample is initiator, requires target OOB IP

-d, --device <PF device name> PF device name

-sf_dev, --sf-device <SF device name> SF device name that supports RDMA.

-gid, --gid-index GID index for DOCA RDMA (optional)

Reference:

/opt/mellanox/doca/samples/doca_dpa/dpa_verbs_initiator_target/dpa_verbs_initiator_target_main.c
/opt/mellanox/doca/samples/doca_dpa/dpa_verbs_initiator_target/host/dpa_verbs_initiator_target_sample.c
/opt/mellanox/doca/samples/doca_dpa/dpa_verbs_initiator_target/device/dpa_verbs_initiator_target_kernels_dev.c
/opt/mellanox/doca/samples/doca_dpa/dpa_verbs_initiator_target/common/dpa_verbs_initiator_target_common_defs.h
/opt/mellanox/doca/samples/doca_dpa/dpa_verbs_initiator_target/meson.build
/opt/mellanox/doca/samples/doca_dpa/dpa_common.h
/opt/mellanox/doca/samples/doca_dpa/dpa_common.c
/opt/mellanox/doca/samples/doca_dpa/build_dpacc_samples.sh