Inference Module

The Holoscan Inference component in the Holoscan SDK is a framework that facilitates designing and executing inference and processing applications through its APIs. All parameters required by the Holoscan Inference component are passed through a parameter set in the configuration file of an application. Detailed features and their corresponding parameter sets are explained in the section below.

Required parameters and related features available with the Holoscan Inference component are listed below, along with the limitations in the current release.

Data Buffer Parameters: Parameters are provided in the inference settings to enable data buffer locations at several stages of the inference. As shown in the figure below, three parameters

input_on_cuda,output_on_cudaandtransmit_on_cudacan be set by the user.input_on_cudarefers to the location of the data going into the inference.If value is

true, it means the input data is on the deviceIf value is

false, it means the input data is on the host

output_on_cudarefers to the data location of the inferred data.If value is

true, it means the inferred data is on the deviceIf value is

false, it means the inferred data is on the host

transmit_on_cudarefers to the data transmission as a GXF message.If value is

true, it means the data transmission from the inference extension will be from Device to DeviceIf value is

false, it means the data transmission from the inference extension will be from Host to Host

Inference Parameters

backendparameter is set to eithertrtfor TensorRT, oronnxrtfor Onnx runtime.TensorRT:

CUDA-based inference supported both on x86 and aarch64

End-to-end CUDA-based data buffer parameters supported.

input_on_cuda,output_on_cudaandtransmit_on_cudawill all be true for end-to-end CUDA-based data movement.input_on_cuda,output_on_cudaandtransmit_on_cudacan be eithertrueorfalse.

Onnx runtime:

Data flow via host only.

input_on_cuda,output_on_cudaandtransmit_on_cudamust befalse.CUDA or CPU based inference on x86, only CPU based inference on aarch64

infer_on_cpuparameter is set totrueif CPU based inference is desired.The tables below demonstrate the supported features related to the data buffer and the inference with

trtandonnxrtbased backend, on x86 and aarch64 system respectively.x86

input_on_cudaoutput_on_cudatransmit_on_cudainfer_on_cpuSupported values for

trttrueorfalsetrueorfalsetrueorfalsefalseSupported values for

onnxrtfalsefalsefalsetrueorfalseAarch64

input_on_cudaoutput_on_cudatransmit_on_cudainfer_on_cpuSupported values for

trttrueorfalsetrueorfalsetrueorfalsefalseSupported values for

onnxrtfalsefalsefalsetruemodel_path_map: User can design single or multi AI inference pipeline by populatingmodel_path_mapin the config file.With a single entry it is single inference and with more than one entry, multi AI inference is enabled.

Each entry in

model_path_maphas a unique keyword as key (used as an identifier by the Holoscan Inference component), and the path to the model as value.All model entries must have the models either in onnx or tensorrt engine file format.

pre_processor_map: input tensor to the respective model is specified inpre_processor_mapin the config file.The Holoscan Inference component supports same input for multiple models or unique input per model.

Each entry in

pre_processor_maphas a unique keyword representing the model (same as used inmodel_path_map), and the tensor name as the value.The Holoscan Inference component supports one input tensor per model.

inference_map: output tensor per model after inference is specified ininference_mapin the config file.Each entry in

inference_maphas a unique keyword representing the model (same as used inmodel_path_mapandpre_processor_map), and the tensor name as the value.

parallel_inference: Parallel or Sequential execution of inferences.If multiple models are input, then user can execute models in parallel.

Parameter

parallel_inferencecan be eithertrueorfalse.Inferences are launched in parallel without any check of the available GPU resources, user must make sure that there is enough memory and compute available to run all the inferences in parallel.

enable_fp16: Generation of the TensorRT engine files with FP16 optionIf

backendis set totrt, and if the input models are in onnx format, then users can generate the engine file with fp16 option to accelerate inferencing.It takes few mintues to generate the engine files for the first time.

is_engine_path: if the input models are specified in trt engine format inmodel_path_map, this flag must be set totrue.in_tensor_names: Input tensor names to be used bypre_processor_map.out_tensor_names: Output tensor names to be used byinference_map.

Other features: Table below illustrates other features and supported values in the current release.

Feature

Supported values

Data type

float32Inference Backend

trtoronnxrtInputs per model

1

GPU(s) supported

1

Inferred data size format

NHWC, NC (classification)

Model Type

All onnx or All trt engine type

Multi Receiver and Single Transmitter support for GXF based messages

The Holoscan Inference component provides an API to extract the data from multiple receivers.

The Holoscan Inference component provides an API to transmit multiple tensors via a single transmitter.

Following are the steps to be followed in sequence for creating an inference application using the Holoscan Inference component in the Holoscan SDK.

Parameter Specification

All required inference parameters of the inference application must be specified. Specification are provided in the application configuration file in C++ API based application in the Holoscan SDK. Inference parameter set from the sample multi AI application using C++ APIs in the Holoscan SDK is shown below.

multiai_inference:

backend: "trt"

model_path_map:

"plax_chamber": "../data/multiai_ultrasound/models/plax_chamber.onnx"

"aortic_stenosis": "../data/multiai_ultrasound/models/aortic_stenosis.onnx"

"bmode_perspective": "../data/multiai_ultrasound/models/bmode_perspective.onnx"

pre_processor_map:

"plax_chamber": ["plax_cham_pre_proc"]

"aortic_stenosis": ["aortic_pre_proc"]

"bmode_perspective": ["bmode_pre_proc"]

inference_map:

"plax_chamber": "plax_cham_infer"

"aortic_stenosis": "aortic_infer"

"bmode_perspective": "bmode_infer"

in_tensor_names: ["plax_cham_pre_proc", "aortic_pre_proc", "bmode_pre_proc"]

out_tensor_names: ["plax_cham_infer", "aortic_infer", "bmode_infer"]

parallel_inference: true

infer_on_cpu: false

enable_fp16: false

input_on_cuda: true

output_on_cuda: true

transmit_on_cuda: true

is_engine_path: false

Alternatively, if a GXF-based pipeline is used, specifications can be provided through an entity in the GXF pipeline.

If the user is creating a standalone application using the Holoscan Inference component APIs, the user must pass all required parameters for the application.

Inference workflow

Inference workflow is the core inference unit in the inference application. An inference workflow can be in the form of a GXF entity in a GXF pipeline, a Holoscan Operator wrapping a GXF extension, or APIs from the Holoscan Inference component can be used to create a standalone workflow. This section provides steps to be followed to create an inference workflow.

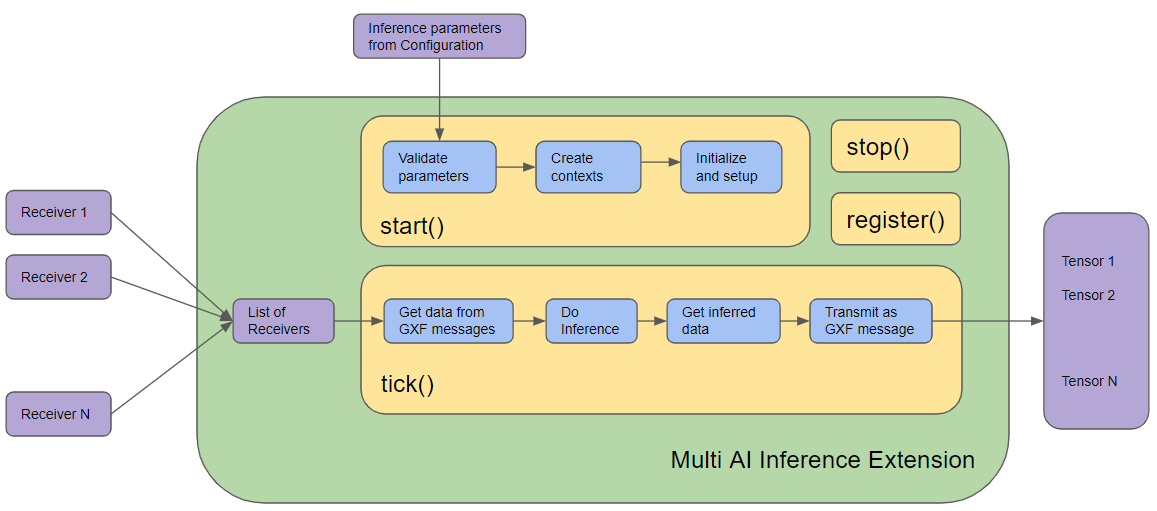

In Holoscan SDK, Multi AI Inference extension is designed using the Holoscan Inference component APIs. A Multi AI inference operator is then created by wrapping the Multi AI Inference extension as described here.

Arguments in the code sections below are referred to as …, the Holoscan Inference component APIs or the GXF extension Multi AI Inference can be referred to for details.

Parameter Validity Check: Input inference parameters via the configuration (from step 1) are verified for correctness.

auto status = HoloInfer::multiai_inference_validity_check(...);

Multi AI specification creation: For a single AI, only one entry is passed into the required entries in the parameter set. There is no change in the API calls below. Single AI or multi AI is enabled based on the number of entries in the parameter specifications from the configuration (in step 1).

// Declaration of multi AI inference specifications std::shared_ptr<HoloInfer::MultiAISpecs> multiai_specs_; // Creation of multi AI specification structure multiai_specs_ = std::make_shared<HoloInfer::MultiAISpecs>(...);

Inference context creation.

// Pointer to inference context. std::unique_ptr<HoloInfer::InferContext> holoscan_infer_context_; // Create holoscan inference context holoscan_infer_context_ = std::make_unique<HoloInfer::InferContext>();

Parameter setup with inference context: All required parameters of the Holoscan Inference component are transferred in this step, and relevant memory allocations are initiated in the multi AI specification.

// Set and transfer inference specification to inference context auto status = holoscan_infer_context_->set_inference_params(multiai_specs_);

Data extraction and allocation: If data is coming from a GXF message as in the Holoscan embedded SDK, the following API is used from the Holoscan Inference component to extract and allocate data for the specified tensor. If data is coming from other sources, the user must populate data in

multiai_specs_->data_per_tensor_and proceed to the next step.// Extract relevant data from input GXF Receivers, and update multi AI specifications gxf_result_t stat = HoloInfer::multiai_get_data_per_model(...);

Map data from per tensor to per model: This step is required in this release. This step maps data per tensor to data per model. As mentioned above, current release supports only one input tensor per model.

auto status = HoloInfer::map_data_to_model_from_tensor(...);

Inference execution

// Execute inference and populate output buffer in multiai specifications auto status = holoscan_infer_context_->execute_inference(multiai_specs_->data_per_model_, multiai_specs_->output_per_model_);

Transmit inferred data: With GXF, output buffer is transmitted as follows.

// Transmit output buffers via a single GXF transmitter auto status = HoloInfer::multiai_transmit_data_per_model(...);

If user has created a standalone application, the inferred data can be accessed from

multiai_specs_->output_per_model_

Figure below demonstrates Multi AI Inference extension in the Holoscan SDK. All blocks with blue color are the API calls from the the Holoscan Inference component.

Application creation

After creation of an inference workflow, an application creation is required to connect input data, pre-processors, inference workflow, post-processors and visualizers for end-to-end application creation. The application can be in form of a GXF based pipeline, a C++ API based application, a Python API based application or a standalone user created application. A sample multi AI pipeline from iCardio.ai’s Multi AI application are part of Holoscan SDK. This same application is provided in GXF, C++ API and Python API variants.

Application Execution

After a Holoscan SDK application has been successfully created, built and installed, execution is performed as described here for a sample Multi AI application