Holoscan by Example

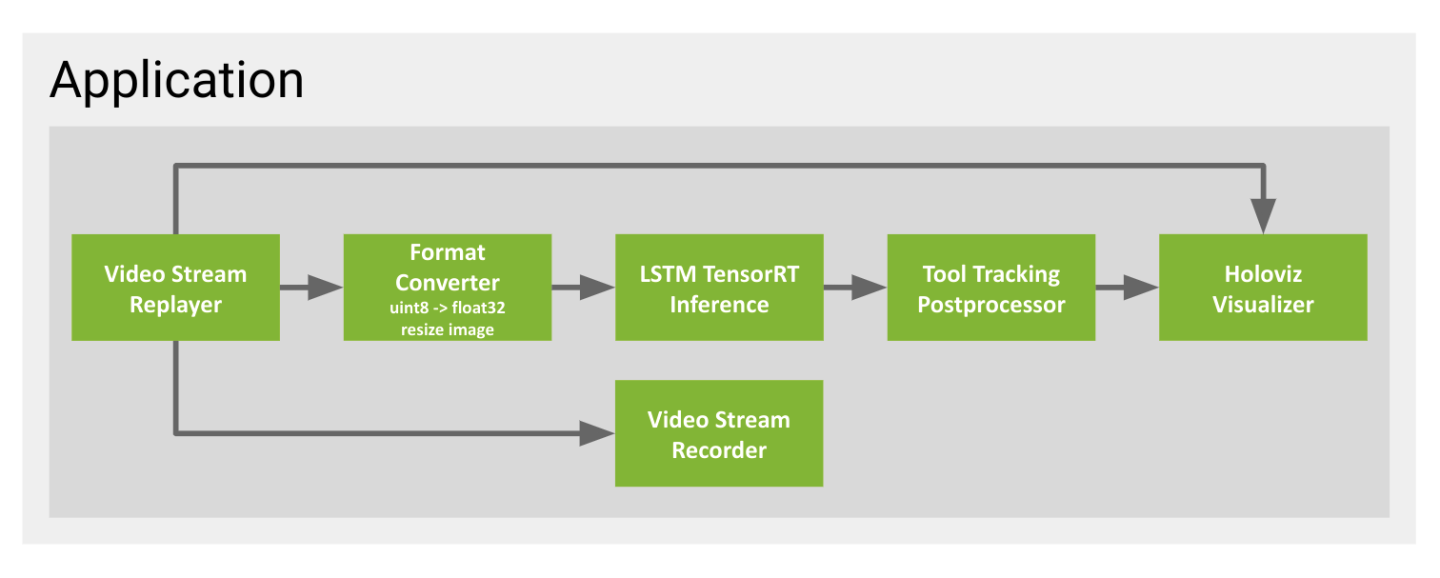

The following figure shows the workflow graph of the simple endoscopy tool tracking application, which consists of a single fragment.

Fig. 5 Simple Endoscopy Workflow

The fragment consists of six GXF operators that wrap existing GXF Codelets (see the full list of C++/Python operators):

Video Stream Replayer: This operator replays a video stream from a file. It is a GXF Operator (VideoStreamReplayerOp) that wraps a GXF Codelet.

Format Converter: This operator converts the data type of the image from uint8 to float32 and resizes the image so it can be fed into the tool tracking model. It is a GXF Operator (FormatConverterOp) that wraps a GXF Codelet.

LSTM TensorRT Inference: This operator performs the inference of the tool tracking model. It is a GXF Operator (LSTMTensorRTInferenceOp) that wraps a GXF Codelet.

Tool Tracking Postprocessor: This operator converts the inference results from LSTM TensorRT Inference into the form needed for the overlay layer in the Holoviz Visualizer. It is a GXF Operator (ToolTrackingPostprocessorOp) that wraps a GXF Codelet.

Holoviz Visualizer: This operator visualizes the tool tracking results. It is an Operator (HolovizOp) that wraps a GXF Codelet.

Video Stream Recorder: This operator records the video stream to a file. It is a GXF Operator (VideoStreamRecorderOp) that wraps a GXF Codelet.

Let’s see how we can create the application by composing the operators.

The following code snippet shows how to create the application with Holoscan SDK’s C++ API.

Code Snippet: examples/basic_workflow/cpp/basic_workflow.cpp

Listing 1 examples/basic_workflow/cpp/basic_workflow.cpp

    #include <holoscan/holoscan.hpp>
    #include <holoscan/std_ops.hpp>

    class App : public holoscan::Application {
     public:
      void compose() override {
        using namespace holoscan;

        auto replayer = make_operator<ops::VideoStreamReplayerOp>("replayer", from_config("replayer"));

        auto recorder = make_operator<ops::VideoStreamRecorderOp>("recorder", from_config("recorder"));

        auto format_converter = make_operator<ops::FormatConverterOp>(
            "format_converter",
            from_config("format_converter_replayer"),
            Arg("pool") = make_resource<BlockMemoryPool>("pool", 1, 854 * 480 * 3 * 4, 2));

        auto lstm_inferer = make_operator<ops::LSTMTensorRTInferenceOp>(
            "lstm_inferer",
            from_config("lstm_inference"),
            Arg("pool") = make_resource<UnboundedAllocator>("pool"),
            Arg("cuda_stream_pool") = make_resource<CudaStreamPool>("cuda_stream", 0, 0, 0, 1, 5));

        auto tool_tracking_postprocessor = make_operator<ops::ToolTrackingPostprocessorOp>(
            "tool_tracking_postprocessor",
            from_config("tool_tracking_postprocessor"),
            Arg("device_allocator") =
                make_resource<BlockMemoryPool>("device_allocator", 1, 107 * 60 * 7 * 4, 2),
            Arg("host_allocator") = make_resource<UnboundedAllocator>("host_allocator"));

        auto visualizer = make_operator<ops::HolovizOp>("holoviz", from_config("holoviz"));

        // Flow definition
        add_flow(replayer, visualizer, {{"output", "receivers"}});

        add_flow(replayer, format_converter);
        add_flow(format_converter, lstm_inferer);
        add_flow(lstm_inferer, tool_tracking_postprocessor, {{"tensor", "in"}});
        add_flow(tool_tracking_postprocessor, visualizer, {{"out", "receivers"}});

        add_flow(replayer, recorder);
      }
    };

    int main() {
      App app;
      app.config("./apps/endoscopy_tool_tracking/cpp/app_config.yaml");
      app.run();

      return 0;
    }

In the main() function, we create an instance of the App class, which inherits from holoscan::Application.

The App class overrides the compose() function to define the application’s flow graph.

The compose() function is called by the run() function of the holoscan::Application class.

Before we call run(), we need to set the application configuration by calling the config() function.

The configuration file is a YAML file that contains the configuration of the operators and the application.

The path to the configuration file is passed to the config() function as a string.

The configuration file for the simple application is located at apps/endoscopy_tool_tracking/cpp/app_config.yaml. Let’s take a look at the configuration file.

Code Snippet: apps/endoscopy_tool_tracking/cpp/app_config.yaml

Listing 2 apps/endoscopy_tool_tracking/cpp/app_config.yaml

    ...
    replayer:
      directory: "../data/endoscopy/video"
      basename: "surgical_video"
      frame_rate: 0  # as specified in timestamps
      repeat: true  # default: false
      realtime: true  # default: true
      count: 0  # default: 0 (no frame count restriction)
    ...

In compose(), we create the operators and add them to the application flow graph.

The operators are created using the make_operator() function.

The make_operator() function takes the operator name and the operator configuration as arguments.

The operator name is used to identify the operator in the flow graph.

The operator configuration is given as one or more holoscan::ArgList objects, each holding a set of parameter values, or as individual holoscan::Arg objects.

A holoscan::ArgList object is created using the from_config() function, whose string argument names a key in the configuration file.

For example, from_config("replayer") creates a holoscan::ArgList object that contains the arguments of the replayer operator (the values of the ‘directory’, ‘basename’, ‘frame_rate’, ‘repeat’, ‘realtime’, and ‘count’ parameters).
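The lookup described above can be pictured with a small Python toy model. This is only a sketch, not Holoscan's implementation: the CONFIG dictionary stands in for the parsed YAML file (values copied from Listing 2), the from_config function here is a hypothetical stand-in that returns plain (name, value) pairs rather than a holoscan::ArgList, and raising an error on a missing key is a choice made for this sketch.

```python
# Toy stand-in for the parsed YAML configuration (values copied from Listing 2).
CONFIG = {
    "replayer": {
        "directory": "../data/endoscopy/video",
        "basename": "surgical_video",
        "frame_rate": 0,
        "repeat": True,
        "realtime": True,
        "count": 0,
    },
}


def from_config(key):
    """Return the (name, value) pairs stored under `key` (hypothetical helper)."""
    section = CONFIG.get(key)
    if section is None:
        # Assumption of this sketch: an unknown key is treated as an error.
        raise KeyError(f"no section named {key!r} in the configuration")
    return list(section.items())


replayer_args = from_config("replayer")
for name, value in replayer_args:
    print(f"{name} = {value!r}")
```

Each pair plays the role of one argument: the name selects the operator parameter and the value comes straight from the configuration file.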

Operator parameters that are not defined in the configuration file can be passed to the make_operator() function as holoscan::Arg objects.

For example, the format_converter operator has a pool parameter that is not defined in the configuration file.

We pass the pool parameter as a holoscan::Arg object to the make_operator() function, using the make_resource() function to create the resource assigned to it.

This section shows the available resources that can be used to create an operator resource.
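The block size passed to the format converter's BlockMemoryPool, 854 * 480 * 3 * 4, can be unpacked with a little arithmetic. The sketch below assumes the reading suggested by the Python listing later in this section (width 854, height 480, 3 channels, 4 bytes per channel for float32); block_size_bytes is a hypothetical helper introduced only for illustration.

```python
def block_size_bytes(width, height, channels, bytes_per_element):
    """Size in bytes of one densely packed image buffer (hypothetical helper)."""
    return width * height * channels * bytes_per_element


FLOAT32_BYTES = 4  # the format converter outputs float32 data

# One block holds a single 854 x 480 RGB frame stored as float32.
source_block = block_size_bytes(854, 480, 3, FLOAT32_BYTES)

# The pool in the listing is created with 2 such blocks.
num_blocks = 2
pool_total = source_block * num_blocks

print(f"block size: {source_block} bytes")
print(f"pool total: {pool_total} bytes")
```

Under these assumptions each block is 4,919,040 bytes, so the two-block pool reserves a little under 10 MB.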

After creating the operators, we add the operators to the application flow graph using the add_flow() function.

The add_flow() function takes the source operator, the destination operator, and the optional port name pairs.

The port name pair is used to connect the output port of the source operator to the input port of the destination operator.

The first element of the pair is the output port name of the upstream operator and the second element is the input port name of the downstream operator.

An empty port name (“”) can be used in place of a port name if the operator has only one input or output port.

If there is only one output port in the upstream operator and only one input port in the downstream operator, the port pairs can be omitted.

The visualizer operator has a special “receivers” parameter to which any number of input connections can be made. The actual ports for these inputs will be generated dynamically at application run time. Here we can see that outputs from both the replayer and tool_tracking_postprocessor are connected to the “receivers” port on the visualizer.

The following code snippet creates edges between the operators in the flow graph as shown in Fig. 5.

    add_flow(replayer, visualizer, {{"output", "receivers"}});

    add_flow(replayer, format_converter);
    add_flow(format_converter, lstm_inferer);
    add_flow(lstm_inferer, tool_tracking_postprocessor, {{"tensor", "in"}});
    add_flow(tool_tracking_postprocessor, visualizer, {{"out", "receivers"}});

    add_flow(replayer, recorder);
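The port-pair rules above can be sketched with a toy graph in plain Python. This is not the Holoscan API: ToyOp and ToyGraph are hypothetical classes, and the port names marked as placeholders are invented because the text does not name them. The sketch only illustrates two rules from the text: port names may be omitted when each side has exactly one port, and several edges may end at the visualizer's "receivers" input.

```python
class ToyOp:
    """Minimal operator stand-in: a name plus named input and output ports."""

    def __init__(self, name, inputs=(), outputs=()):
        self.name = name
        self.inputs = tuple(inputs)
        self.outputs = tuple(outputs)


class ToyGraph:
    def __init__(self):
        self.edges = []  # (source, output port, destination, input port)

    def add_flow(self, src, dst, port_pairs=None):
        if not port_pairs:
            # Names may be omitted only when each side has a single port.
            if len(src.outputs) != 1 or len(dst.inputs) != 1:
                raise ValueError(f"{src.name} -> {dst.name}: port names are required")
            port_pairs = {(src.outputs[0], dst.inputs[0])}
        for out_port, in_port in port_pairs:
            if out_port not in src.outputs or in_port not in dst.inputs:
                raise ValueError(f"unknown port in pair ({out_port!r}, {in_port!r})")
            self.edges.append((src.name, out_port, dst.name, in_port))


# Port names marked "placeholder" are invented for this sketch.
replayer = ToyOp("replayer", outputs=["output"])
recorder = ToyOp("recorder", inputs=["in"])  # placeholder
format_converter = ToyOp("format_converter", inputs=["in"], outputs=["out"])  # placeholders
lstm_inferer = ToyOp("lstm_inferer", inputs=["in"], outputs=["tensor"])  # "in" is a placeholder
postprocessor = ToyOp("tool_tracking_postprocessor", inputs=["in"], outputs=["out"])
visualizer = ToyOp("holoviz", inputs=["receivers"])

graph = ToyGraph()
graph.add_flow(replayer, visualizer, {("output", "receivers")})
graph.add_flow(replayer, format_converter)
graph.add_flow(format_converter, lstm_inferer)
graph.add_flow(lstm_inferer, postprocessor, {("tensor", "in")})
graph.add_flow(postprocessor, visualizer, {("out", "receivers")})
graph.add_flow(replayer, recorder)

# Edges that end at the visualizer's "receivers" input.
fan_in = [e for e in graph.edges if e[2] == "holoviz" and e[3] == "receivers"]
print(len(graph.edges), "edges,", len(fan_in), "into receivers")
```

In this toy model the replayer fans out to three downstream operators, and the visualizer receives one edge from the replayer and one from the postprocessor.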

Let’s see how we can create the same application as was done for the C++ API, but using the Python API instead. The implementation is very similar.

The following code snippet shows how to create the application with Holoscan SDK’s Python API.

Code Snippet: examples/basic_workflow/python/basic_workflow.py

Listing 3 examples/basic_workflow/python/basic_workflow.py

    import os

    from holoscan.core import Application, load_env_log_level
    from holoscan.operators import (
        FormatConverterOp,
        HolovizOp,
        LSTMTensorRTInferenceOp,
        ToolTrackingPostprocessorOp,
        VideoStreamRecorderOp,
        VideoStreamReplayerOp,
    )
    from holoscan.resources import (
        BlockMemoryPool,
        CudaStreamPool,
        MemoryStorageType,
        UnboundedAllocator,
    )

    sample_data_path = os.environ.get("HOLOSCAN_SAMPLE_DATA_PATH", "../../../data")


    class EndoscopyApp(Application):
        def compose(self):
            width = 854
            height = 480
            video_dir = os.path.join(sample_data_path, "endoscopy", "video")
            if not os.path.exists(video_dir):
                raise ValueError(f"Could not find video data:{video_dir=}")

            replayer = VideoStreamReplayerOp(
                self,
                name="replayer",
                directory=video_dir,
                **self.kwargs("replayer"),
            )

            # 4 bytes/channel, 3 channels
            source_pool_kwargs = dict(
                storage_type=MemoryStorageType.DEVICE,
                block_size=width * height * 3 * 4,
                num_blocks=2,
            )

            recorder = VideoStreamRecorderOp(
                name="recorder", fragment=self, **self.kwargs("recorder")
            )

            format_converter = FormatConverterOp(
                self,
                name="format_converter_replayer",
                pool=BlockMemoryPool(self, name="pool", **source_pool_kwargs),
                **self.kwargs("format_converter_replayer"),
            )

            lstm_cuda_stream_pool = CudaStreamPool(
                self,
                name="cuda_stream",
                dev_id=0,
                stream_flags=0,
                stream_priority=0,
                reserved_size=1,
                max_size=5,
            )

            model_file_path = os.path.join(
                sample_data_path, "endoscopy", "model", "tool_loc_convlstm.onnx"
            )
            engine_cache_dir = os.path.join(
                sample_data_path, "endoscopy", "model", "tool_loc_convlstm_engines"
            )

            lstm_inferer = LSTMTensorRTInferenceOp(
                self,
                name="lstm_inferer",
                pool=UnboundedAllocator(self, name="pool"),
                cuda_stream_pool=lstm_cuda_stream_pool,
                model_file_path=model_file_path,
                engine_cache_dir=engine_cache_dir,
                **self.kwargs("lstm_inference"),
            )

            tool_tracking_postprocessor_block_size = 107 * 60 * 7 * 4
            tool_tracking_postprocessor_num_blocks = 2
            tool_tracking_postprocessor = ToolTrackingPostprocessorOp(
                self,
                name="tool_tracking_postprocessor",
                device_allocator=BlockMemoryPool(
                    self,
                    name="device_allocator",
                    storage_type=MemoryStorageType.DEVICE,
                    block_size=tool_tracking_postprocessor_block_size,
                    num_blocks=tool_tracking_postprocessor_num_blocks,
                ),
                host_allocator=UnboundedAllocator(self, name="host_allocator"),
            )

            visualizer = HolovizOp(
                self, name="holoviz", width=width, height=height, **self.kwargs("holoviz"),
            )

            # Flow definition
            self.add_flow(replayer, visualizer, {("output", "receivers")})

            self.add_flow(replayer, format_converter)
            self.add_flow(format_converter, lstm_inferer)
            self.add_flow(lstm_inferer, tool_tracking_postprocessor, {("tensor", "in")})
            self.add_flow(tool_tracking_postprocessor, visualizer, {("out", "receivers")})

            self.add_flow(replayer, recorder)


    if __name__ == "__main__":
        load_env_log_level()

        config_file = os.path.join(os.path.dirname(__file__), "simple_endoscopy.yaml")

        app = EndoscopyApp()
        app.config(config_file)
        app.run()

In the if __name__ == "__main__" block, we create an instance of the EndoscopyApp class, which inherits from holoscan.core.Application.

The EndoscopyApp class overrides the compose method to define the application’s flow graph.

The compose() method is called by the run method of the holoscan.core.Application class.

Before we call run, we need to set the application configuration by calling the config method.

The configuration file is a YAML file that contains the configuration of the operators and the application.

The path to the configuration file is passed to the config method as a string.
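The call order described above (config first, then run, which in turn calls compose) can be sketched with a toy base class. ToyApplication and ToyEndoscopyApp are hypothetical stand-ins written for this illustration, and the file name "app.yaml" is made up; the real holoscan.core.Application does far more than record calls.

```python
class ToyApplication:
    """Toy base class that mimics the config -> run -> compose call order."""

    def __init__(self):
        self.config_path = None
        self.calls = []

    def config(self, path):
        # Remember where the configuration lives; nothing is parsed here.
        self.config_path = path
        self.calls.append("config")

    def compose(self):
        raise NotImplementedError("subclasses define the flow graph here")

    def run(self):
        self.calls.append("run")
        # The base class, not the user, invokes compose().
        self.compose()


class ToyEndoscopyApp(ToyApplication):
    def compose(self):
        # A real application would create operators and call add_flow here.
        self.calls.append("compose")


app = ToyEndoscopyApp()
app.config("app.yaml")  # hypothetical file name
app.run()
print(app.calls)
```

The subclass never calls compose directly. It only overrides the method and leaves the invocation to run, which is the pattern the listing above follows.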

The configuration file for the simple application is located in the same folder as the application.

Code Snippet: examples/basic_workflow/python/basic_workflow.yaml

Listing 4 examples/basic_workflow/python/basic_workflow.yaml

    ...
    replayer:
      # we pass directory as a kwarg in the Python application instead
      # directory: "../data/endoscopy/video"
      basename: "surgical_video"
      frame_rate: 0  # as specified in timestamps
      repeat: true  # default: false
      realtime: true  # default: true
      count: 0  # default: 0 (no frame count restriction)
    ...

In compose, we create the operators and add them to the application flow graph.

The operators are created using pre-defined operators imported from the holoscan.operators module.

The first argument to an Operator is the Fragment it will belong to, which is why self is passed: it assigns the generated operators to the current application. The remaining arguments can be provided either via standard Python keyword arguments or via a call to kwargs() with a key name corresponding to a field in the application’s YAML configuration file.

For the Operator parameters that are not defined in the configuration file, we can pass them as Python objects via standard Python keyword arguments. For example, the format_converter operator has a pool parameter that is not defined in the configuration file.

We pass the pool parameter as a BlockMemoryPool object.

This section shows the available resources that can be used to create an operator resource.
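Mixing explicit keyword arguments with values unpacked from the configuration, as described above, follows ordinary Python call semantics. The sketch below uses a hypothetical make_replayer function in place of an operator constructor and a plain dictionary in place of the value returned by kwargs(). It shows that a parameter supplied both ways is rejected by Python itself, which may be why Listing 4 comments out directory.

```python
def make_replayer(fragment, name, directory, basename, **params):
    """Toy constructor standing in for an operator; it returns its arguments."""
    return {"name": name, "directory": directory, "basename": basename, **params}


# Stand-in for the mapping unpacked from the "replayer" YAML section.
yaml_section = {"basename": "surgical_video", "frame_rate": 0, "repeat": True}

# Explicit keyword arguments and unpacked configuration values combine cleanly.
op = make_replayer(None, name="replayer", directory="/data/endoscopy/video", **yaml_section)
print(op["directory"], op["frame_rate"])

# If the YAML section also carried `directory`, the call fails before it starts.
conflicting = {**yaml_section, "directory": "../data/endoscopy/video"}
try:
    make_replayer(None, name="replayer", directory="/data/endoscopy/video", **conflicting)
except TypeError as err:
    collision = str(err)
    print("rejected:", collision)
```

Keeping each parameter in exactly one place, either the YAML file or the constructor call, avoids this collision.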

After creating the operators, we add the operators to the application flow graph using the add_flow() method.

The add_flow() method takes the source operator, the destination operator, and the optional port name pairs.

The port name pair is used to connect the output port of the source operator to the input port of the destination operator.

The first element of the pair is the output port name of the upstream operator and the second element is the input port name of the downstream operator.

An empty port name (“”) can be used in place of a port name if the operator has only one input or output port.

If there is only one output port in the upstream operator and only one input port in the downstream operator, the port pairs can be omitted.

The visualizer operator has a special “receivers” parameter to which any number of input connections can be made. The actual ports for these inputs will be generated dynamically at application run time. Here we can see that outputs from both the replayer and tool_tracking_postprocessor are connected to the “receivers” port on the visualizer.

The following code snippet creates edges between the operators in the flow graph as shown in Fig. 5.

    self.add_flow(replayer, visualizer, {("output", "receivers")})

    self.add_flow(replayer, format_converter)
    self.add_flow(format_converter, lstm_inferer)
    self.add_flow(lstm_inferer, tool_tracking_postprocessor, {("tensor", "in")})
    self.add_flow(tool_tracking_postprocessor, visualizer, {("out", "receivers")})

    self.add_flow(replayer, recorder)

You can run the application in one of two ways:

follow the instructions for the NGC container here: prerequisites / run container / run example

follow the instructions to build from source here: prerequisites / run container / run example

When the application is first launched, it will create a TensorRT engine file (.engine) under the data/endoscopy/model/tool_loc_convlstm_engines/ directory. If you are not using a Holoscan Developer Kit, it will take some time to create an engine file that is compatible with your system.

After the engine file is created, the application will start running.
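The first-launch delay follows a generic build-once, reuse-afterwards caching pattern, which can be sketched in plain Python. This is not TensorRT or Holoscan code: get_engine, the file name "toy.engine", and the byte string standing in for a built engine are all hypothetical, and the real operator's cache naming and validation rules are not shown in this section.

```python
import os
import tempfile


def get_engine(cache_dir, engine_name, build):
    """Return (engine_bytes, was_built), building only if no cached file exists."""
    path = os.path.join(cache_dir, engine_name)
    if os.path.exists(path):
        # Cache hit: reuse the file written by an earlier launch.
        with open(path, "rb") as f:
            return f.read(), False
    # Cache miss: run the (potentially slow) build and store the result.
    os.makedirs(cache_dir, exist_ok=True)
    data = build()
    with open(path, "wb") as f:
        f.write(data)
    return data, True


with tempfile.TemporaryDirectory() as tmp:
    cache_dir = os.path.join(tmp, "tool_loc_convlstm_engines")
    # First launch: nothing is cached, so the build function runs.
    _, built_first = get_engine(cache_dir, "toy.engine", lambda: b"engine-bytes")
    # Second launch: the cached file is found and the build is skipped.
    _, built_second = get_engine(cache_dir, "toy.engine", lambda: b"engine-bytes")
    print(built_first, built_second)
```

Only the first call pays the build cost, which matches the behavior described above: a slow first launch followed by fast subsequent ones.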

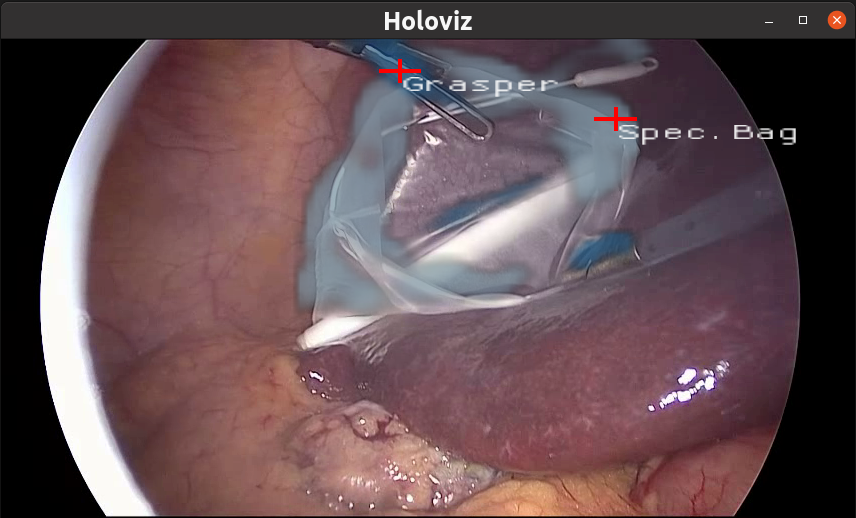

Fig. 6 Endoscopy application with tool tracking

Congratulations! You have successfully built and run the simple endoscopy application.