RDMA over Converged Ethernet (RoCE)

Remote Direct Memory Access (RDMA) is the remote memory management capability that allows server-to-server data movement directly between application memory without any CPU involvement. RDMA over Converged Ethernet (RoCE) is a mechanism that provides this efficient data transfer with very low latencies on lossless Ethernet networks. With advances in data center convergence over reliable Ethernet, ConnectX® EN with RoCE uses the proven and efficient RDMA transport to provide a platform for deploying RDMA technology in mainstream data center applications at 10GigE, 40GigE and 56GigE link speeds. With its hardware offload support, ConnectX® EN takes advantage of these efficient RDMA (InfiniBand) transport services over Ethernet to deliver ultra-low latency for performance-critical and transaction-intensive applications such as financial, database, storage, and content delivery networks.

RoCE encapsulates the IB transport and GRH headers in Ethernet packets bearing a dedicated ether type. While the use of GRH is optional within InfiniBand subnets, it is mandatory when using RoCE. Applications written over IB verbs should work seamlessly, but they must provide GRH information when creating address vectors. The library and driver are modified to provide the mapping from GID to MAC addresses required by the hardware.

To function reliably, RoCE requires a form of flow control. While it is possible to use global flow control, this is normally undesirable for performance reasons.

The normal and optimal way to use RoCE is with Priority Flow Control (PFC). To use PFC, it must be enabled on all endpoints and switches in the flow path.

The following section presents instructions for configuring PFC on Mellanox ConnectX® cards. Multiple configuration steps are required, all of which may be performed via PowerShell. Therefore, although each step is presented individually, you may ultimately choose to write a PowerShell script that performs them all in one step. Note that administrator privileges are required for these steps.

For further information about RoCE configuration, please refer to: enterprise-support.nvidia.com/s/

System Requirements

The following are the driver’s prerequisites in order to set or configure RoCE:

RoCE: ConnectX®-3 and ConnectX®-3 Pro firmware version 2.30.3000 or higher

RoCEv2: ConnectX®-3 Pro firmware version 2.31.5050 or higher

All applications that run over InfiniBand verbs should work on RoCE links, provided they use GRH headers

Operating Systems: Windows Server 2012, Windows Server 2012 R2, Windows 7 Client, Windows 8.1 Client and Windows Server 2016

Set HCA to use Ethernet protocol:

Display the Device Manager and expand “System Devices”. Please see Port Protocol Configuration.

Configuring Windows Host

Since PFC applies flow control at the granularity of traffic priority, it is necessary to assign different priorities to different types of network traffic.

In a RoCE configuration, all ND/NDK traffic is assigned to one or more chosen priorities, and PFC is enabled on those priorities.

Configuring the Windows host requires configuring QoS. To configure QoS, please follow the procedure described in Configuring Quality of Service (QoS).
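As a rough illustration of how the priority assignment described above translates to PowerShell, the sketch below tags NetDirect traffic with a single priority and enables PFC on that priority only. The priority value 3, the NetDirect port 445 (SMB Direct), the policy name "ND" and the adapter name "Ethernet 4" are assumptions chosen for illustration, not values prescribed by this section. The sketch also assumes that the Windows Data Center Bridging feature, which provides the NetQos cmdlets, is installed. The QoS procedure referenced above remains the authoritative one.

```powershell
# Sketch only: run from an elevated (administrator) PowerShell prompt.
# Assumed values, not taken from this section:
#   priority 3, NetDirect port 445, adapter name "Ethernet 4".
$priority = 3
$adapter  = "Ethernet 4"

# Do not accept DCB settings advertised by the switch through DCBX.
Set-NetQosDcbxSetting -Willing $false

# Tag ND traffic on the assumed port with the chosen 802.1p priority.
New-NetQosPolicy -Name "ND" -NetDirectPortMatchCondition 445 -PriorityValue8021Action $priority

# Enable PFC on the chosen priority and disable it on all others.
Enable-NetQosFlowControl -Priority $priority
Disable-NetQosFlowControl -Priority 0,1,2,4,5,6,7

# Apply the QoS settings on the adapter.
Enable-NetAdapterQos -Name $adapter
```

The same priority must also have PFC enabled on every switch port in the flow path, as noted earlier.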

Global Pause (Flow Control)

To use Global Pause (Flow Control) mode, disable QoS and priority flow control:

PS $ Disable-NetQosFlowControl

PS $ Disable-NetAdapterQos <interface name>

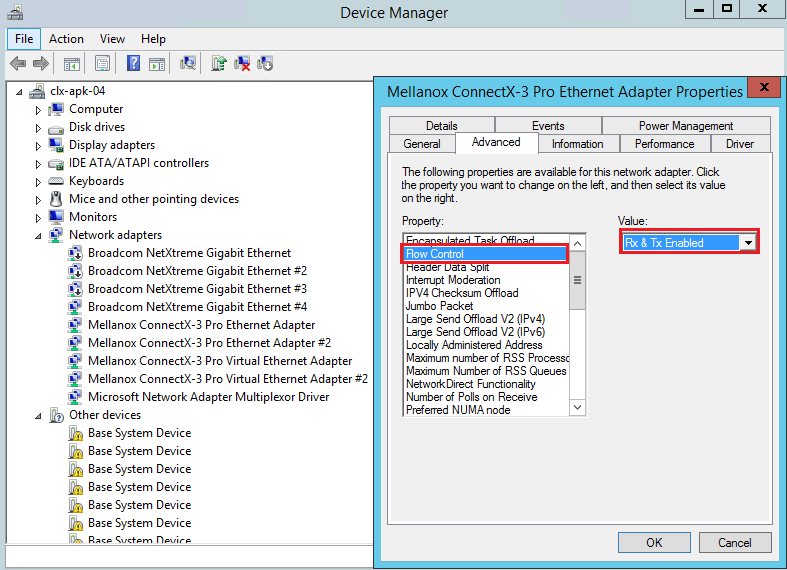
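One way to check the result from PowerShell is to query the QoS state after running the commands above. This is a minimal sketch; the adapter name "Ethernet 4" is a placeholder assumption and should be replaced with your own interface name.

```powershell
# Assumed adapter name; substitute your own interface name.
$adapter = "Ethernet 4"

# Lists priorities 0-7; for Global Pause mode each should show Enabled = False.
Get-NetQosFlowControl

# Shows the adapter's QoS state; Enabled should be False after Disable-NetAdapterQos.
Get-NetAdapterQos -Name $adapter
```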

To confirm that Flow Control is enabled in the adapter parameters, open:

Device Manager → Network adapters → Mellanox ConnectX-3 Ethernet Adapter → Properties → Advanced tab.
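The same setting can usually be read from PowerShell instead of the Advanced tab. The sketch below assumes the driver exposes the standardized NDIS `*FlowControl` keyword and that the adapter is named "Ethernet 4"; both are assumptions to adjust for your system.

```powershell
# Assumed adapter name; substitute your own interface name.
$adapter = "Ethernet 4"

# "*FlowControl" is the standardized NDIS keyword for this setting:
#   0 = disabled, 1 = Tx enabled, 2 = Rx enabled, 3 = Rx and Tx enabled.
Get-NetAdapterAdvancedProperty -Name $adapter -RegistryKeyword "*FlowControl"
```

If the keyword is not present, listing all properties with `Get-NetAdapterAdvancedProperty -Name $adapter` shows the display names that the driver actually provides.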