NVIDIA Optimized Frameworks

Deep learning (DL) frameworks offer building blocks for designing, training, and validating deep neural networks through a high-level programming interface. Widely used DL frameworks, such as PyTorch, TensorFlow, PyTorch Geometric, and DGL, rely on GPU-accelerated libraries, such as cuDNN, NCCL, and DALI, to deliver high-performance, multi-GPU-accelerated training.

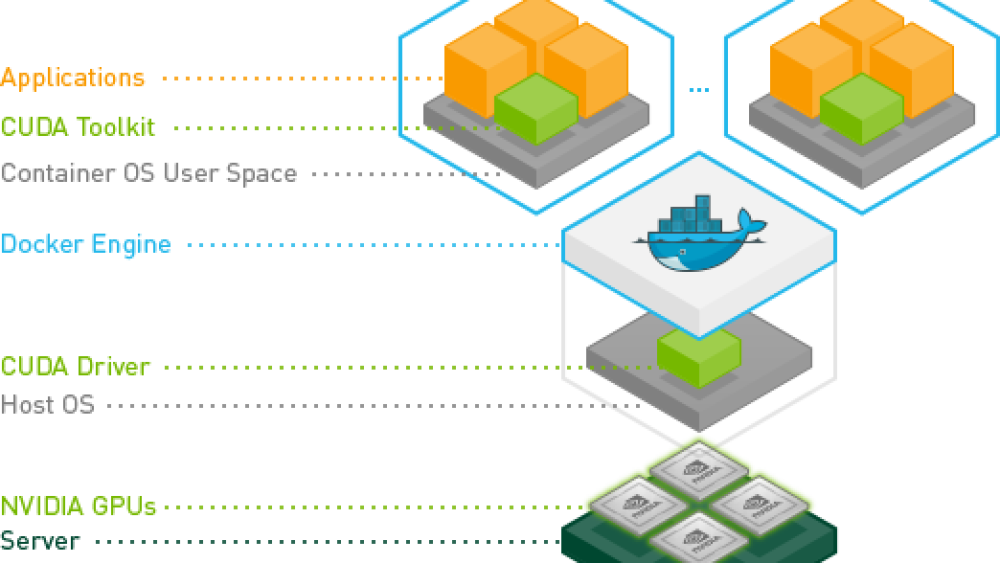

Developers, researchers, and data scientists can access NVIDIA AI-optimized DL framework containers, along with DL examples, that are performance-tuned and tested for NVIDIA GPUs. This eliminates the need to manage packages and dependencies or build DL frameworks from source. Because each containerized framework includes all of its dependencies, it provides a convenient starting point for developing common applications, such as conversational AI, natural language understanding (NLU), recommenders, and computer vision. Visit the NVIDIA NGC™ catalog to learn more.
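
As a rough illustration of what starting from a containerized framework can look like, the sketch below assembles a `docker run` command for a framework image. It assumes Docker with the NVIDIA Container Toolkit is installed and that images are pulled from the `nvcr.io/nvidia` registry. The helper name `build_run_command` is hypothetical, and the tag in the usage line is only an example, so check the NGC catalog for the tags that are currently published.

```python
import shlex

# Assumption: NGC framework images are published under this registry path.
NGC_REGISTRY = "nvcr.io/nvidia"


def build_run_command(framework, tag, gpus="all"):
    """Return a docker argv list that starts an interactive framework container.

    `framework` and `tag` are supplied by the caller (for example "pytorch"
    and a release tag taken from the NGC catalog). Nothing is executed here;
    the function only builds the command.
    """
    if not framework or not tag:
        raise ValueError("both framework and tag are required")
    image = f"{NGC_REGISTRY}/{framework}:{tag}"
    # --gpus exposes the host GPUs to the container; --rm removes it on exit.
    return ["docker", "run", "--gpus", gpus, "--rm", "-it", image]


if __name__ == "__main__":
    # Illustrative tag only; replace it with one listed in the catalog.
    print(shlex.join(build_run_command("pytorch", "24.01-py3")))
```

Returning an argument list rather than a single string keeps the command safe to pass to `subprocess.run` without shell quoting concerns.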