System Requirements

This page describes software and hardware requirements for the cuBB SDK.

The cuBB SDK includes the following components:

cuPHY: The GPU-accelerated 5G PHY software library and example code. It requires one server machine and one GPU accelerator card.

cuPHY-CP: The cuPHY Control-Plane software, which provides the control-plane interface between the Layer 1 cuPHY and the upper-layer stack.

The cuBB SDK requires the following software:

Ubuntu 20.04 Server with a low-latency kernel

Docker 19.03

NVIDIA® CUDA® Toolkit 11.7

Mellanox MOFED kernel driver: https://network.nvidia.com/products/infiniband-drivers/linux/mlnx_ofed/

nvidia-peermem kernel driver (included in the CUDA driver)

Mellanox Firmware Tools
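A minimal Python sketch for checking the operating-system items in this list on a host. It assumes the standard `/etc/os-release` file and the kernel release string returned by `platform.release()` (the same value `uname -r` prints); Ubuntu's low-latency kernels carry a `-lowlatency` suffix, for example `5.4.0-65-lowlatency`. It deliberately does not check the Docker, CUDA, or MOFED versions, since this page does not say whether newer releases are also acceptable.

```python
import platform


def parse_os_release(text: str) -> dict:
    """Parse KEY=value lines in /etc/os-release format into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"')
    return fields


def is_ubuntu_2004(os_release_text: str) -> bool:
    """True if the os-release data identifies Ubuntu 20.04."""
    fields = parse_os_release(os_release_text)
    return fields.get("ID") == "ubuntu" and fields.get("VERSION_ID") == "20.04"


def is_lowlatency_kernel(kernel_release: str) -> bool:
    """Ubuntu low-latency kernels end with the '-lowlatency' flavour suffix."""
    return kernel_release.endswith("-lowlatency")


if __name__ == "__main__":
    try:
        with open("/etc/os-release") as f:
            os_text = f.read()
    except OSError:
        os_text = ""  # Not a Linux host, or the file is missing.
    kernel = platform.release()
    print("Ubuntu 20.04:", "OK" if is_ubuntu_2004(os_text) else "NOT FOUND")
    print(f"Low-latency kernel ({kernel}):",
          "OK" if is_lowlatency_kernel(kernel) else "NOT FOUND")
```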

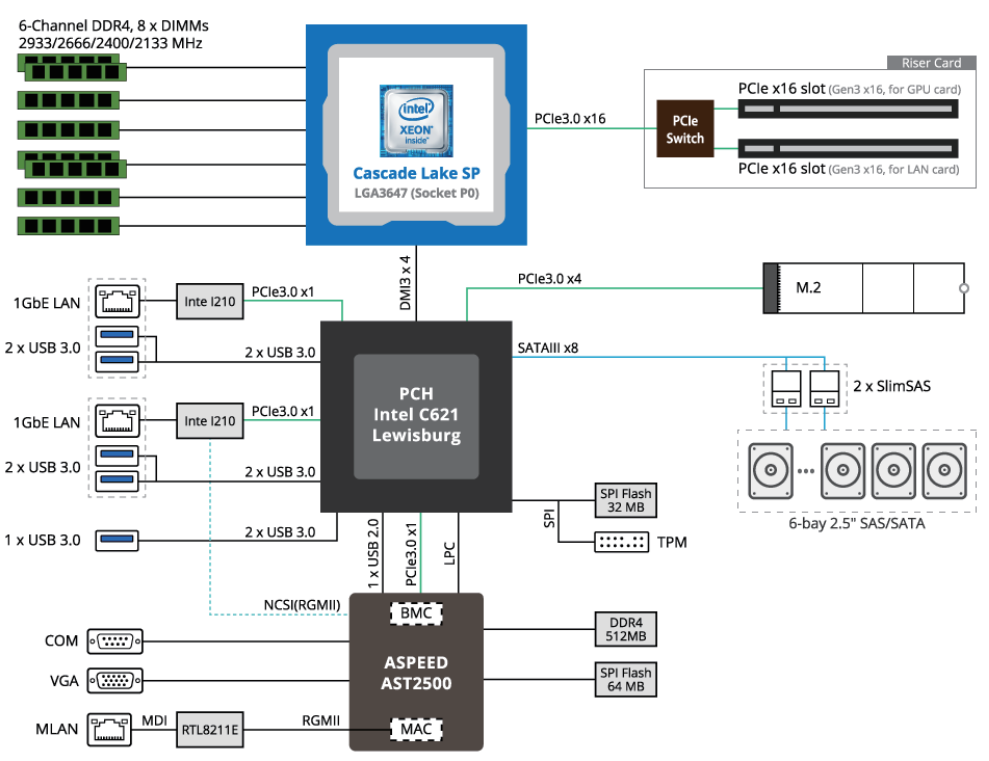

cuBB Server PC (gNB server)

The cuBB server PC is the primary machine for running the cuBB SDK and its cuPHY examples. We recommend using the Aerial DevKit.

If you build your own cuBB server PC, we recommend the following configuration:

An NVIDIA GPU accelerator from one of the following NVIDIA® families:

A100 or A100X

Mellanox ConnectX6-DX 100 Gbps NIC (Part number: MCX623106AE-CDAT) (also referred to as “CX6-DX” in this document)

An Intel Xeon Gold CPU with at least 24 cores, which is required to run the L2+ upper-layer stack.

The PCIe switch on the server should be Gen3 or newer with two x16 slots, such as the Broadcom PLX Technology 9797. The switch should host both the GPU and the CX6-DX network card; refer to the PCIe Topology Requirements section for more details.

RAM: 64 GB or more
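The CPU and memory items above can be sanity-checked with a short Python sketch that parses Linux's `/proc/cpuinfo` and `/proc/meminfo`. Assumptions: physical cores are counted as distinct `(physical id, core id)` pairs so hyper-threads are not double-counted, the Xeon Gold test is a plain substring match on the `model name` field, and "64GB" is read as 64 × 10^9 bytes, which leaves headroom for the memory the kernel reserves (and excludes from `MemTotal`) on a 64 GiB machine. The GPU, NIC, and PCIe switch items are not covered.

```python
MIN_PHYSICAL_CORES = 24        # from the requirement list above
MIN_RAM_BYTES = 64 * 10**9     # "64GB" read as decimal gigabytes (assumption)


def count_physical_cores(cpuinfo_text: str) -> int:
    """Count distinct (physical id, core id) pairs, so SMT siblings count once."""
    cores = set()
    physical_id = core_id = None
    # The trailing "" guarantees the last record is closed.
    for line in cpuinfo_text.splitlines() + [""]:
        if not line.strip():
            # A blank line ends one logical-CPU record.
            if core_id is not None:
                cores.add((physical_id, core_id))
            physical_id = core_id = None
            continue
        key, _, value = line.partition(":")
        key = key.strip()
        if key == "physical id":
            physical_id = value.strip()
        elif key == "core id":
            core_id = value.strip()
    return len(cores)


def cpu_model(cpuinfo_text: str) -> str:
    """Return the first 'model name' value, or an empty string."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "model name":
            return value.strip()
    return ""


def mem_total_bytes(meminfo_text: str) -> int:
    """Read MemTotal (reported in kB) from /proc/meminfo text."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1]) * 1024
    raise ValueError("MemTotal not found")


def check_server(cpuinfo_text: str, meminfo_text: str) -> dict:
    """Return pass/fail flags for the CPU model, core count, and RAM size."""
    model = cpu_model(cpuinfo_text)
    return {
        "xeon_gold": "Xeon" in model and "Gold" in model,
        "cores": count_physical_cores(cpuinfo_text) >= MIN_PHYSICAL_CORES,
        "ram": mem_total_bytes(meminfo_text) >= MIN_RAM_BYTES,
    }


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f, open("/proc/meminfo") as g:
            print(check_server(f.read(), g.read()))
    except OSError as exc:
        print("Could not read /proc:", exc)
```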

Traffic Generator Server PC (RU Emulator)

In addition to the cuBB server PC that runs the cuPHY examples, a second machine can act as an RU emulator that generates RU traffic for the cuBB server PC. We recommend the following configuration:

A Mellanox ConnectX6-DX 100 Gbps NIC (Part number: MCX623106AE-CDAT)

A network cable for the Mellanox NIC (Part number: MCP1600-C001)

The PCIe switch on the server should be Gen3 or newer with two x16 slots, such as the Broadcom PLX Technology 9797.

The traffic generator server should connect to the gNB server through a direct point-to-point link using a 100GbE cable.

The cuBB Installation Guide instructions assume that the 100GbE cable connects to CX6-DX port 0 on both the gNB server and the traffic generator server.

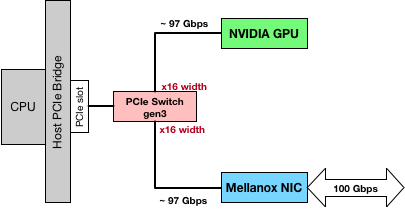
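To confirm that a GPU or NIC actually negotiated a Gen3-or-newer x16 link, one option is to parse the `LnkSta` line that `lspci -vv` prints for the device; reading the capability list generally requires root. The Python sketch below is a hedged example, not part of the cuBB tooling: its table maps per-lane rates (2.5, 5, 8, 16, 32, 64 GT/s) to PCIe Gen1 through Gen6, and the script name and bus address in the usage comment are hypothetical.

```python
import re
import subprocess
import sys

# PCIe generation keyed by per-lane transfer rate in GT/s.
GT_PER_S_TO_GEN = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5, 64.0: 6}

# Matches e.g. "LnkSta:\tSpeed 8GT/s (ok), Width x16 (ok)"; LnkCap and LnkSta2 lines do not match.
LNKSTA_RE = re.compile(r"LnkSta:\s*Speed\s+([\d.]+)GT/s.*?Width\s+x(\d+)")


def parse_link_status(lspci_vv_text: str):
    """Return (generation, lane width) from the first LnkSta line, or None."""
    m = LNKSTA_RE.search(lspci_vv_text)
    if m is None:
        return None
    return GT_PER_S_TO_GEN.get(float(m.group(1))), int(m.group(2))


def meets_link_requirement(gen, width, min_gen=3, min_width=16) -> bool:
    """Gen3 or newer and x16, per the requirement text above."""
    return gen is not None and gen >= min_gen and width >= min_width


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical): python pcie_link.py 0000:3b:00.0
    out = subprocess.run(["lspci", "-vv", "-s", sys.argv[1]],
                         capture_output=True, text=True, check=True).stdout
    status = parse_link_status(out)
    print(status, "OK" if status and meets_link_requirement(*status) else "CHECK")
```

Reading `LnkSta` rather than `LnkCap` reports what the link actually trained to, so a card seated in a slot that only provides x8 lanes shows up as a failure even if the card itself is x16-capable.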

PCIe Topology Requirements

For the best network throughput, the GPU and NIC should be located on a common PCIe switch.

Efficient internal system topology:

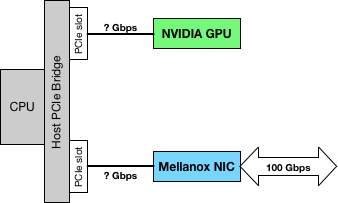

Poor internal system topology:

For example, the Aerial DevKit uses the efficient topology, with an external PCIe switch connecting both the GPU and the NIC.
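One way to check whether the GPU and NIC share a PCIe switch is to compare their device paths in sysfs: `/sys/bus/pci/devices/<address>` is a symlink into `/sys/devices/pciDDDD:BB/...`, where each directory level is a bridge on the way down to the device. The Python sketch below treats the two devices as sharing a switch when their resolved paths have a common PCI bridge (root port or switch port) above both devices; for two separate devices that implies a switch between them and the CPU. This is an illustrative heuristic under those assumptions; `nvidia-smi topo -m` reports a comparable GPU-to-NIC relationship (for example `PIX` or `PXB` versus `PHB`, `NODE`, or `SYS`).

```python
import os
import re
import sys

# Host bridge directory ("pci0000:00") and device address ("0000:03:00.0") patterns.
HOST_RE = re.compile(r"^pci[0-9a-f]{4}:[0-9a-f]{2}$")
BDF_RE = re.compile(r"^[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]$")


def pci_chain(sysfs_path: str):
    """Split a resolved sysfs path into (host bridge, [bridge, ..., device])."""
    parts = [p for p in sysfs_path.split("/") if p]
    host = next((p for p in parts if HOST_RE.match(p)), None)
    chain = [p for p in parts if BDF_RE.match(p)]
    return host, chain


def share_pcie_switch(gpu_path: str, nic_path: str) -> bool:
    """True if both devices sit below a common PCI bridge under the same host bridge."""
    gpu_host, gpu_chain = pci_chain(gpu_path)
    nic_host, nic_chain = pci_chain(nic_path)
    if gpu_host is None or gpu_host != nic_host:
        return False
    shared = 0
    # Compare ancestors only; the last element of each chain is the device itself.
    for a, b in zip(gpu_chain[:-1], nic_chain[:-1]):
        if a != b:
            break
        shared += 1
    return shared >= 1


def sysfs_path_for(bdf: str) -> str:
    """Resolve the /sys/bus/pci/devices/<bdf> symlink into /sys/devices/..."""
    return os.path.realpath(os.path.join("/sys/bus/pci/devices", bdf))


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (hypothetical script name): python topo.py <gpu_bdf> <nic_bdf>
    gpu, nic = (sysfs_path_for(b) for b in sys.argv[1:3])
    print("common PCIe switch" if share_pcie_switch(gpu, nic) else "no common switch")
```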