Architecture

NVIDIA Aerial is a set of SDKs that enable GPU-accelerated, software-defined 5G wireless RANs. It offers a single software programming model that spans deployments from pico cells to CRAN to DRAN. Today, NVIDIA Aerial provides two critical SDKs: cuVNF and cuBB.

The NVIDIA cuVNF SDK provides optimized input/output (I/O) and memory allocation for network data flow and packet processing use cases. cuVNF improves signal processing performance by using the multi-core compute capability of NVIDIA GPUs. It provides line-rate I/O for packet flows, and developers can build other VNFs on top of it, such as deep packet inspection and firewalls. As part of cuVNF, GPU-enabled DPDK (Data Plane Development Kit) supports high-speed data transfer from devices connected to the GPU. cuVNF also supports NVIDIA CUDA-X signal processing libraries such as cuFFT and NPP. These libraries offer drop-in APIs and over 5,000 primitives for image and signal processing. cuVNF includes the following examples:

- https://github.com/NVIDIA/l2fwd-nv is an example of how to combine a DPDK network application with the NVIDIA GPUDirect RDMA technology

- https://github.com/NVIDIA/5t5g shows how to combine a DPDK network application with Mellanox 5T5G features such as Accurate Send Scheduling and eCPRI flow steering rules

The NVIDIA cuBB SDK provides a fully offloaded 5G PHY layer processing pipeline (5G L1) that delivers high throughput and efficiency by keeping all processing within the GPU’s high-performance memory. With its 5G NR based uplink and downlink channel implementation, the cuBB SDK delivers low latency and high bandwidth utilization. As part of cuBB, the NVIDIA cuPHY SDK provides layer 1 (L1) beamforming, LDPC encode/decode, and other functions for the PHY pipeline, while the NVIDIA cuPHY-CP SDK orchestrates layer 1 and provides the control capability for interfacing with layer 2 (L2), the O-RAN fronthaul, and the Evolved Packet Core (EPC).

Aerial SDK is Kubernetes based and provides container orchestration for ease of deployment and management.

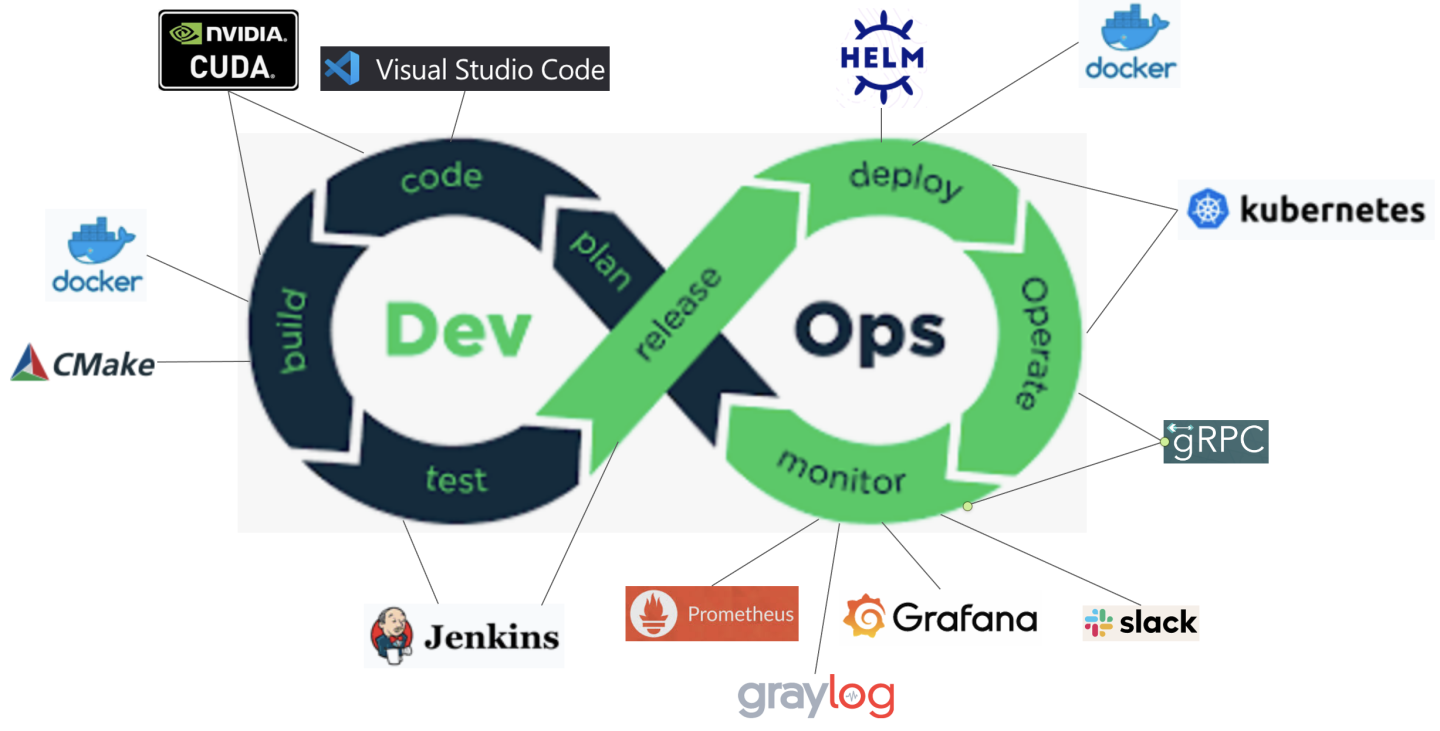

Aerial is based on cloud-native principles and supports a DevOps workflow using industry-standard tools such as Kubernetes, gRPC, and Prometheus.

Aerial SDK runs on COTS hardware as well as on cloud-native platforms such as EGX. Cross-platform support with the NVIDIA EGX stack provides a wide range of services for running edge applications. NVIDIA EGX is an enterprise-grade accelerated computing platform that enables companies to run real-time, low-latency applications at the edge. To achieve the highest level of reliability, Aerial SDKs and libraries undergo extensive testing against the EGX software stack, with an emphasis on performance and long-term stability.

The Aerial framework includes three primary applications for an end-to-end L1 implementation, as well as several test and example applications. This section describes the three primary applications.

The full L1 stack application is called cuphycontroller. This application implements the adaptation layer from L2 to the cuPHY API, orchestrates the cuPHY API scheduling, and sends and receives O-RAN-compliant fronthaul traffic over the NIC. Several independently configurable adaptation layers from L2 to the cuPHY API are available.

For integration testing, the test_mac application implements a mock L2 which is capable of interfacing with cuphycontroller over the L2/L1 API.

For integration testing, the ru-emulator application implements a mock O-RU and UE that can interface with cuphycontroller over the O-RAN-compliant fronthaul interface.

Every Aerial application supports the following:

- Configuration at startup through the use of YAML-format configuration files

- Support for optionally-configured cloud-based logging and metrics backends

- Support for optionally-deployed OAM clients for run-time configuration and status queries

When deployed as a Kubernetes pod:

- Support for application monitoring and configuration auto-discovery through the Kubernetes API

- Configuration YAML files can optionally be mounted as a Kubernetes ConfigMap, separating the container image from the configuration

- Configuration YAML files can optionally be templatized using the Kubernetes kustomization.yaml format
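
As a rough illustration of the ConfigMap option, the following Python sketch wraps the text of a YAML configuration file in a Kubernetes ConfigMap manifest. The object name `aerial-l1-config`, the file name `l1_config.yaml`, and the YAML keys are hypothetical placeholders and are not taken from the Aerial SDK. Kubernetes accepts manifests in JSON as well as YAML, so the sketch relies only on the Python standard library.

```python
import json


def build_configmap(name, files, namespace="default"):
    """Return a Kubernetes ConfigMap manifest (as a dict) holding config files.

    `files` maps a file name to the file's text content.
    """
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        # When the ConfigMap is mounted as a volume, each key under "data"
        # appears as a file with that name inside the container.
        "data": dict(files),
    }


# Hypothetical configuration content; the real Aerial YAML schema is not shown here.
EXAMPLE_YAML = "log_level: info\ncell_count: 1\n"

manifest = build_configmap("aerial-l1-config", {"l1_config.yaml": EXAMPLE_YAML})
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`. Because the configuration lives in the ConfigMap rather than in the image, the same container image can be reused with different settings.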

Functional Testing

For real-time functional correctness testing, test cases are generated offline in HDF5 binary file format, then played back in real-time through the testMAC and RU Emulator applications. The Aerial cuPHY-CP + cuPHY components under test run in real-time to exercise GPU and Fronthaul Network interfaces. Test case sequencing is enabled through configurable launch pattern files read by testMAC and RU Emulator. The diagram below shows an example of downlink functional testing.

The diagram below shows an example of uplink functional testing.

Roadmap Feature: For more comprehensive non-real-time functional correctness testing, including constrained random testing of L2/L1 parameters, a 5G Matlab Model “in-the-loop” is supported.
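
The test case sequencing described in this section can be pictured with a small Python sketch. It is only a conceptual model: the mapping format, the test-vector file names, and the assumption that the pattern repeats cyclically are all hypothetical and do not reflect the actual launch pattern file format read by testMAC and RU Emulator.

```python
from itertools import islice

# Hypothetical launch pattern: slot index within the pattern -> test-vector file.
# Slots that are not listed carry no test case. File names are placeholders.
LAUNCH_PATTERN = {
    0: "tv_downlink_a.h5",
    1: "tv_downlink_b.h5",
    3: "tv_uplink_a.h5",
}
PATTERN_LENGTH = 4  # number of slots before the pattern repeats (assumed)


def playback(pattern, pattern_length):
    """Yield (absolute_slot, test_vector_or_None) forever, repeating the pattern."""
    slot = 0
    while True:
        yield slot, pattern.get(slot % pattern_length)
        slot += 1


# Take the first six slots of the endless playback sequence.
schedule = list(islice(playback(LAUNCH_PATTERN, PATTERN_LENGTH), 6))
for slot, test_vector in schedule:
    print(slot, test_vector or "(idle)")
```

In a real-time run, both sides of the system under test would follow the same pattern so that the traffic injected in each slot matches the expected reference data for that slot.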

End to End Testing

A variety of end-to-end testing scenarios are possible. Shown below is one example using an Aerial Devkit implementing the CU+DU, an O-RAN-compliant RU connected to the DU via the O-RAN fronthaul interface, and UE test equipment from Keysight.

Another example, the all-digital eCPRI topology, is shown below. Here an Aerial Devkit implements the CU+DU, and the Keysight test equipment implements the O-RU and UE functions.