Features

The binary name for the combined cuPHY-CP and cuPHY application is cuphycontroller. When cuphycontroller starts, it reads static configuration from YAML configuration files. This section describes the fields in those files.

l2adapter_filename

Feature Status: MFL

This field contains the filename of the YAML-format configuration file for the L2 adapter

cuphydriver_config

Feature Status: MFL

This container holds configuration for cuphydriver

standalone

Feature Status: MFL

0 - run cuphydriver integrated with other cuPHY-CP components

1 - run cuphydriver in standalone mode (without l2adapter or other cuPHY-CP components)

validation

Feature Status: MFL

Enables additional validation checks at run-time

0 - Disabled

1 - Enabled

num_slots

Feature Status: MFL

This parameter is undocumented.

log_level

Feature Status: MFL

This parameter is undocumented.

profiler_sec

Feature Status: MFL

This parameter is undocumented.

dpdk_thread

Feature Status: MFL

Sets the CPU core used by the primary DPDK thread

dpdk_verbose_logs

Feature Status: MFL

Enable maximum log level in DPDK

0 - Disable

1 - Enable

accu_tx_sched_res_ns

Feature Status: MFL

Sets the accuracy of the accurate transmit scheduling, in units of nanoseconds.

accu_tx_sched_disable

Feature Status: MFL

Disable accurate TX scheduling.

0 - packets are sent according to the TX timestamp

1 - packets are sent whenever it is convenient

pdump_client_thread

Feature Status: MFL

CPU core to use for pdump client. Set to -1 to disable fronthaul RX traffic PCAP capture.

See:

https://doc.dpdk.org/guides/howto/packet_capture_framework.html

aerial-fh README.md

nics

Feature Status: MFL

Container for NIC configuration parameters

nic

Feature Status: MFL

PCIe bus address of the NIC port

mtu

Feature Status: MFL

Maximum transmission unit (MTU) size, in bytes, supported by the fronthaul U-plane and C-plane

cpu_mbufs

Feature Status: MFL

Number of preallocated DPDK memory buffers (mbufs) used for Ethernet packets.

uplane_tx_handles

Feature Status: MFL

The number of pre-allocated transmit handles which link the U-plane prepare() and transmit() functions.

txq_count

Feature Status: MFL

NIC transmit queue count.

Must be large enough to handle all cells attached to this NIC port.

Each cell uses one TXQ for C-plane and txq_count_uplane TXQs for U-plane.

rxq_count

Feature Status: MFL

NIC receive queue count.

This value must be large enough to handle all cells attached to this NIC port.

Each cell uses one RXQ to receive all uplink traffic.
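The txq_count and rxq_count sizing rules can be combined into a quick pre-flight check. The sketch below is illustrative only: the `Cell` class and `required_queue_counts` helper are hypothetical, the PCIe addresses are placeholders, and it assumes C-plane, U-plane, and uplink receive traffic are the only per-cell queue consumers.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    name: str
    nic: str               # must match a 'nic' entry in the 'nics' list
    txq_count_uplane: int  # U-plane TXQs used by this cell


def required_queue_counts(cells, nic):
    """Return the minimum (txq_count, rxq_count) for one NIC port."""
    attached = [c for c in cells if c.nic == nic]
    # Each cell needs one C-plane TXQ plus txq_count_uplane U-plane TXQs.
    txq = sum(1 + c.txq_count_uplane for c in attached)
    # Each cell needs one RXQ for all of its uplink traffic.
    rxq = len(attached)
    return txq, rxq


cells = [
    Cell("cell0", "0000:b5:00.0", txq_count_uplane=4),
    Cell("cell1", "0000:b5:00.0", txq_count_uplane=4),
    Cell("cell2", "0000:b5:00.1", txq_count_uplane=2),
]
print(required_queue_counts(cells, "0000:b5:00.0"))  # (10, 2)
```

If the configured txq_count or rxq_count for a port is below these minimums, that port cannot serve every cell attached to it.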

txq_size

Feature Status: MFL

Number of packets which can fit in each transmit queue

rxq_size

Feature Status: MFL

Number of packets which can be buffered in each receive queue

gpu

Feature Status: MFL

CUDA device to receive uplink packets from this NIC port.

gpus

Feature Status: MFL

List of GPU device IDs. To use GPUDirect, the GPU must be on the same PCIe root complex as the NIC. To maximize performance, the GPU should be on the same PCIe switch as the NIC. Currently, only the first entry in the list is used.

workers_ul

Feature Status: MFL

List of pinned CPU cores used for uplink worker threads.

workers_dl

Feature Status: MFL

List of pinned CPU cores used for downlink worker threads.

workers_dl_slot_t1

Feature Status: MFL

This parameter is undocumented. Do not modify.

workers_dl_slot_t2

Feature Status: MFL

This parameter is undocumented. Do not modify.

workers_ul_slot_t1

Feature Status: MFL

This parameter is undocumented. Do not modify.

workers_ul_slot_t2

Feature Status: MFL

This parameter is undocumented. Do not modify.

workers_ul_slot_t3

Feature Status: MFL

This parameter is undocumented. Do not modify.

prometheus_thread

Feature Status: MFL

Pinned CPU core for updating NIC metrics once per second

section_id_pusch

Feature Status: MFL

ORAN CUS section ID for the PUSCH channel.

section_id_prach

Feature Status: MFL

ORAN CUS section ID for the PRACH channel.

section_3_time_offset

Feature Status: MFL

Time offset, in units of nanoseconds, for the PRACH channel.

ul_order_timeout_cpu_ns

Feature Status: MFL

Timeout, in units of nanoseconds, for the uplink order kernel to receive any U-plane packets for this slot.

ul_order_timeout_gpu_ns

Feature Status: MFL

Timeout, in units of nanoseconds, for the order kernel to complete execution on the GPU

pusch_sinr

Feature Status: MFL

Enable PUSCH SINR calculation (0 by default)

pusch_rssi

Feature Status: MFL

Enable PUSCH RSSI calculation (0 by default)

pusch_tdi

Feature Status: MFL

Enable PUSCH TDI processing (0 by default)

pusch_cfo

Feature Status: MFL

Enable PUSCH CFO calculations (0 by default)

cplane_disable

Feature Status: MFL

Disable C-plane for all cells

0 - Enable C-plane

1 - Disable C-plane

cells

Feature Status: MFL

List of containers of cell parameters

name

Feature Status: MFL

Name of the cell

cell_id

Feature Status: MFL

ID of the cell.

src_mac_addr

Feature Status: MFL

Source MAC address for U-plane and C-plane packets. Set to 00:00:00:00:00:00 to use the MAC address of the NIC port in use.

dst_mac_addr

Feature Status: MFL

Destination MAC address for U-plane and C-plane packets

nic

Feature Status: MFL

gNB NIC port to which the cell is attached

Must match the ‘nic’ key value in one of the elements in the ‘nics’ list

vlan

Feature Status: MFL

VLAN ID used for C-plane and U-plane packets

pcp

Feature Status: MFL

QoS priority codepoint used for C-plane and U-plane Ethernet packets

txq_count_uplane

Feature Status: MFL

Number of transmit queues used for U-plane

eAxC_id_ssb_pbch

Feature Status: MFL

List of eAxC IDs to use for SSB/PBCH

eAxC_id_pdcch

Feature Status: MFL

List of eAxC IDs to use for PDCCH

eAxC_id_pdsch

Feature Status: MFL

List of eAxC IDs to use for PDSCH

eAxC_id_csirs

Feature Status: MFL

List of eAxC IDs to use for CSI-RS

eAxC_id_pusch

Feature Status: MFL

List of eAxC IDs to use for PUSCH

eAxC_id_pucch

Feature Status: MFL

List of eAxC IDs to use for PUCCH

eAxC_id_srs

Feature Status: MFL

List of eAxC IDs to use for SRS

eAxC_id_prach

Feature Status: MFL

List of eAxC IDs to use for PRACH

compression_bits

Feature Status: MFL

Number of bits used for each RE on DL U-plane channels.

decompression_bits

Feature Status: MFL

Number of bits used per RE on uplink U-plane channels.

fs_offset_dl

Feature Status: MFL

Downlink U-plane scaling per ORAN CUS 6.1.3

exponent_dl

Feature Status: MFL

Downlink U-plane scaling per ORAN CUS 6.1.3

ref_dl

Feature Status: MFL

Downlink U-plane scaling per ORAN CUS 6.1.3

fs_offset_ul

Feature Status: MFL

Uplink U-plane scaling per ORAN CUS 6.1.3

exponent_ul

Feature Status: MFL

Uplink U-plane scaling per ORAN CUS 6.1.3

max_amp_ul

Feature Status: MFL

Maximum full scale amplitude used in uplink U-plane scaling per ORAN CUS 6.1.3

mu

Feature Status: MFL

3GPP numerology index ‘mu’, which selects the subcarrier spacing:

0 - 15 kHz

1 - 30 kHz

2 - 60 kHz

3 - 120 kHz

4 - 240 kHz
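The values above follow the 3GPP NR relation Δf = 15 kHz × 2^mu. With normal cyclic prefix, a 1 ms subframe holds 2^mu slots, so the slot duration is 1 ms / 2^mu; this is presumably also what ties mu_highest to the TTI tick rate. A minimal sketch (the function name is illustrative):

```python
def numerology(mu: int):
    """Return (subcarrier spacing in kHz, slots per 1 ms subframe, slot duration in us)."""
    if not 0 <= mu <= 4:
        raise ValueError("mu must be in the range 0..4")
    scs_khz = 15 * 2 ** mu        # subcarrier spacing doubles with each mu step
    slots_per_subframe = 2 ** mu  # 14-symbol slots with normal cyclic prefix
    slot_us = 1000 / slots_per_subframe
    return scs_khz, slots_per_subframe, slot_us


for mu in range(5):
    print(mu, numerology(mu))
```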

T1a_max_up_ns

Feature Status: MFL

Scheduled timing advance before time-zero for downlink U-plane egress from DU, per ORAN CUS.

T1a_max_cp_ul_ns

Feature Status: MFL

Scheduled timing advance before time-zero for uplink C-plane egress from DU, per ORAN CUS.

Ta4_min_ns

Feature Status: MFL

Start of DU reception window after time-zero, per ORAN CUS

Ta4_max_ns

Feature Status: MFL

End of DU reception window after time-zero, per ORAN CUS
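Ta4_min_ns and Ta4_max_ns bound a reception window measured from time-zero. Assuming both bounds are inclusive and all timestamps are nanoseconds on a common clock, classifying a received uplink packet reduces to a range check. The function and the numeric values below are hypothetical, not recommended settings.

```python
def in_ta4_window(arrival_ns: int, time_zero_ns: int,
                  ta4_min_ns: int, ta4_max_ns: int) -> bool:
    """True if a packet arrived within [time-zero + Ta4_min, time-zero + Ta4_max]."""
    offset_ns = arrival_ns - time_zero_ns
    return ta4_min_ns <= offset_ns <= ta4_max_ns


# Hypothetical values for illustration only.
TA4_MIN_NS = 50_000
TA4_MAX_NS = 331_000
time_zero = 1_000_000_000

print(in_ta4_window(time_zero + 100_000, time_zero, TA4_MIN_NS, TA4_MAX_NS))  # True (on time)
print(in_ta4_window(time_zero + 20_000, time_zero, TA4_MIN_NS, TA4_MAX_NS))   # False (early)
print(in_ta4_window(time_zero + 400_000, time_zero, TA4_MIN_NS, TA4_MAX_NS))  # False (late)
```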

Tcp_adv_dl_ns

Feature Status: MFL

Downlink C-plane timing advance ahead of U-plane, in units of nanoseconds, per ORAN CUS.

nRxAnt

Feature Status: MFL

Number of receive streams for this cell.

nTxAnt

Feature Status: MFL

Number of downlink transmit streams for this cell.

nPrbUlBwp

Feature Status: MFL

Number of uplink PRBs in the BWP for this cell.

nPrbDlBwp

Feature Status: MFL

Number of downlink PRBs in the BWP for this cell.

pusch_prb_stride

Feature Status: MFL

Memory stride, in units of PRBs, for the PUSCH channel. Affects GPU memory layout.

prach_prb_stride

Feature Status: MFL

Memory stride, in units of PRBs, for the PRACH channel. Affects GPU memory layout.

tv_pusch

Feature Status: MFL

HDF5 file containing static configuration (filter coefficients, etc) for the PUSCH channel.

tv_prach

Feature Status: MFL

HDF5 file containing static configuration (filter coefficients, etc) for the PRACH channel.

pusch_ldpc_n_iterations

Feature Status: MFL

PUSCH LDPC channel coding iteration count

pusch_ldpc_early_termination

Feature Status: MFL

PUSCH LDPC channel coding early termination

0 - Disable

1 - Enable

workers_sched_priority

Feature Status: MFL

Scheduling priority of the cuPHYDriver worker threads

dpdk_file_prefix

Feature Status: MFL

Shared data file prefix to use for the underlying DPDK process

wfreq

Feature Status: MFL

Filename containing the coefficients for channel estimation filters, in HDF5 (.h5) format

cell_group

Feature Status: MFL

Enable cuPHY cell groups

0 - disable

1 - enable

cell_group_num

Feature Status: Unassigned

Number of cells to be configured in L1 for the test

fix_beta_dl

Feature Status: Unassigned

Fixes beta_dl for local testing with the RU Emulator so that the output values byte-match the test vector (TV).

msg_type

Feature Status: MFL

Defines the L2/L1 interface API. Supported options are:

scf_fapi_gnb - Use the Small Cell Forum (SCF) FAPI

phy_class

Feature Status: MFL

Same as msg_type.

tick_generator_mode

Feature Status: MFL

The SLOT.indication interval generator mode:

0 - poll + sleep. During each tick, the thread sleeps for part of the interval to release the CPU core and avoid hanging the system, then polls the system time.

1 - sleep. Sleep to absolute timestamp, no polling.

2 - timer_fd. Start a timer and call epoll_wait() on the timer_fd.

allowed_fapi_latency

Feature Status: MFL

Maximum allowed latency of SLOT FAPI messages sent from L2 to L1. A message that arrives later than this is ignored and dropped.

Unit: slot.

Default is 0, which means the L2 message must be received in the current slot.
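A minimal sketch of the acceptance rule, assuming latency is the number of slots between the (SFN, slot) a SLOT message refers to and the slot in which L1 receives it. The helper names are hypothetical and cuphycontroller's actual check may differ; in particular, this sketch treats a message for a future slot as very late, which a real implementation would need to handle separately.

```python
SFN_RANGE = 1024  # NR system frame numbers run from 0 to 1023


def slots_per_frame(mu: int) -> int:
    return 10 * 2 ** mu  # ten 1 ms subframes per frame, 2^mu slots each


def fapi_latency_slots(msg_sfn_slot, now_sfn_slot, mu: int) -> int:
    """Slots elapsed between the message's (SFN, slot) and the current (SFN, slot)."""
    spf = slots_per_frame(mu)
    msg = msg_sfn_slot[0] * spf + msg_sfn_slot[1]
    now = now_sfn_slot[0] * spf + now_sfn_slot[1]
    # The modulo handles SFN wraparound from 1023 back to 0.
    return (now - msg) % (SFN_RANGE * spf)


def accept_fapi_message(msg_sfn_slot, now_sfn_slot, mu: int,
                        allowed_fapi_latency: int = 0) -> bool:
    return fapi_latency_slots(msg_sfn_slot, now_sfn_slot, mu) <= allowed_fapi_latency


print(accept_fapi_message((10, 5), (10, 5), mu=1))  # True: same slot
print(accept_fapi_message((10, 5), (10, 6), mu=1))  # False: one slot late
print(accept_fapi_message((1023, 19), (0, 0), mu=1, allowed_fapi_latency=1))  # True across the wrap
```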

allowed_tick_error

Feature Status: MFL

Allowed tick interval error. Unit: µs

Tick interval errors are reported as statistics. If an observed tick error exceeds the allowed value, the log is printed at Error level.
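Assuming the tick error is the absolute difference between each measured tick interval and the nominal slot interval, the Error-level condition can be sketched as follows. Both helpers are hypothetical and only illustrate the comparison against allowed_tick_error.

```python
def tick_errors_us(tick_timestamps_ns, nominal_interval_ns):
    """Absolute deviation of each observed tick interval from nominal, in microseconds."""
    return [abs((later - earlier) - nominal_interval_ns) / 1000
            for earlier, later in zip(tick_timestamps_ns, tick_timestamps_ns[1:])]


def ticks_over_limit(tick_timestamps_ns, nominal_interval_ns, allowed_tick_error_us):
    """Tick errors that would be logged at Error level."""
    return [err for err in tick_errors_us(tick_timestamps_ns, nominal_interval_ns)
            if err > allowed_tick_error_us]


# 500 us slots (mu = 1); the third interval is 20 us late.
ticks = [0, 500_000, 1_000_000, 1_520_000]
print(tick_errors_us(ticks, 500_000))        # [0.0, 0.0, 20.0]
print(ticks_over_limit(ticks, 500_000, 10))  # [20.0]
```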

timer_thread_config

Feature Status: MFL

Configuration for the timer thread

name

Feature Status: MFL

Name of the thread

cpu_affinity

Feature Status: MFL

ID of the pinned CPU core used for the timer thread.

sched_priority

Feature Status: MFL

Scheduling priority of timer thread.

message_thread_config

Feature Status: MFL

Configuration container for the L2/L1 message processing thread.

name

Feature Status: MFL

Name of the thread

cpu_affinity

Feature Status: MFL

ID of the pinned CPU core used for the message thread.

sched_priority

Feature Status: MFL

Scheduling priority of message thread.

ptp

Feature Status: MFL

PTP configuration for GPS_ALPHA and GPS_BETA

gps_alpha

Feature Status: MFL

GPS Alpha value for ORAN WG4 CUS section 9.7.2.

Default value = 0 if undefined.

gps_beta

Feature Status: MFL

GPS Beta value for ORAN WG4 CUS section 9.7.2.

Default value = 0 if undefined.

mu_highest

Feature Status: MFL

Highest supported mu, used for scheduling TTI tick rate

slot_advance

Feature Status: MFL

Timing advance ahead of time-zero, in units of slots, for L1 to notify L2 of a slot request.

ul_configured_gain

Feature Status: MFL

UL Configured Gain used to convert dBFS to dBm. (default if unspecified: 48.68)

staticPuschSlotNum

Feature Status: MFL

Debugging parameter for testing against the RU Emulator. Sets a static PUSCH slot number.

staticPdschSlotNum

Feature Status: MFL

Debugging parameter for testing against the RU Emulator. Sets a static PDSCH slot number.

staticPdcchSlotNum

Feature Status: MFL

Debugging parameter for testing against the RU Emulator. Sets a static PDCCH slot number.

staticSsbPcid

Feature Status: MFL

Debugging parameter for testing against the RU Emulator. Sets a static SSB physical cell ID (phycellId).

staticSsbSFN

Feature Status: MFL

Debugging parameter for testing against the RU Emulator. Sets a static SSB SFN.

instances

Feature Status: MFL

Container for cell instances

name

Feature Status: MFL

Name of the instance

layerMap

Feature Status: MFL

List of mappings to layers.

transport

Feature Status: MFL

Configuration container for L2/L1 message transport parameters

type

Feature Status: MFL

Transport type. One of shm, dpdk or udp

udp_config

Feature Status: MFL

Configuration container for the udp transport type.

local_port

Feature Status: MFL

UDP port used by L1

remote_port

Feature Status: MFL

UDP port used by L2.

shm_config

Feature Status: MFL

Configuration container for the shared memory transport type.

primary

Feature Status: MFL

Indicates whether the process is primary for shared memory access.

prefix

Feature Status: MFL

Prefix used in creating shared memory filename.

cuda_device_id

Feature Status: MFL

Set this parameter to a valid GPU device ID to enable CPU data memory pool allocation in host pinned memory. Set to -1 to disable this feature.

ring_len

Feature Status: MFL

Length, in bytes, of the ring used for shared memory transport.

mempool_size

Feature Status: MFL

Configuration container for the memory pools used in shared memory transport.

cpu_msg

Feature Status: MFL

Configuration container for the shared memory transport for CPU messages (i.e. L2/L1 FAPI messages)

buf_size

Feature Status: MFL

Buffer size in bytes

pool_len

Feature Status: MFL

Pool length in buffers.

cpu_data

Feature Status: MFL

Configuration container for the shared memory transport for CPU data elements (i.e. downlink and uplink transport blocks)

buf_size

Feature Status: MFL

Buffer size in bytes

pool_len

Feature Status: MFL

Pool length in buffers.

cuda_data

Feature Status: MFL

Configuration container for the shared memory transport for GPU data elements

buf_size

Feature Status: MFL

Buffer size in bytes

pool_len

Feature Status: MFL

Pool length in buffers.
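Because buf_size is in bytes and pool_len is a count of buffers, the payload memory reserved by each pool is their product. The sketch below estimates it for a hypothetical mempool_size container; the numbers are made up, and it ignores any per-buffer or ring overhead the transport may add.

```python
# Mirrors the shape of shm_config.mempool_size; the numbers are hypothetical.
mempool_size = {
    "cpu_msg":   {"buf_size": 8192,   "pool_len": 4096},
    "cpu_data":  {"buf_size": 576000, "pool_len": 1024},
    "cuda_data": {"buf_size": 307200, "pool_len": 1024},
}


def pool_footprint_bytes(pools: dict) -> dict:
    """Payload bytes per pool: buf_size (bytes) x pool_len (buffers)."""
    return {name: cfg["buf_size"] * cfg["pool_len"] for name, cfg in pools.items()}


sizes = pool_footprint_bytes(mempool_size)
for name, size in sizes.items():
    print(f"{name}: {size / 2**20:.1f} MiB")
print(f"total: {sum(sizes.values()) / 2**20:.1f} MiB")
```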

dpdk_config

Feature Status: MFL

Configurations for the DPDK over NIC transport type.

primary

Feature Status: MFL

Indicates whether the process is primary for shared memory access.

prefix

Feature Status: MFL

The name used in creating shared memory files and searching DPDK memory pools

local_nic_pci

Feature Status: MFL

The NIC address or name used in IPC.

peer_nic_mac

Feature Status: MFL

The peer NIC MAC address. Only needs to be set in the secondary process (L2/MAC).

cuda_device_id

Feature Status: MFL

Set this parameter to a valid GPU device ID to enable CPU data memory pool allocation in host pinned memory. Set to -1 to disable this feature.

need_eal_init

Feature Status: MFL

Whether nvipc needs to call rte_eal_init() to initialize the DPDK context. 1 - initialized by nvipc; 0 - initialized by another module in the same process.

lcore_id

Feature Status: MFL

The logical core number for the nvipc_nic_poll thread.

mempool_size

Feature Status: MFL

Configuration container for the memory pools used in shared memory transport.

cpu_msg

Feature Status: MFL

Configuration container for the shared memory transport for CPU messages (i.e. L2/L1 FAPI messages)

buf_size

Feature Status: MFL

Buffer size in bytes

pool_len

Feature Status: MFL

Pool length in buffers.

cpu_data

Feature Status: MFL

Configuration container for the shared memory transport for CPU data elements (i.e. downlink and uplink transport blocks)

buf_size

Feature Status: MFL

Buffer size in bytes

pool_len

Feature Status: MFL

Pool length in buffers.

cuda_data

Feature Status: MFL

Configuration container for the shared memory transport for GPU data elements

buf_size

Feature Status: MFL

Buffer size in bytes

pool_len

Feature Status: MFL

Pool length in buffers.

app_config

Feature Status: MFL

Configurations for all transport types, mostly used for debug.

grpc_forward

Feature Status: MFL

Whether to enable forwarding of nvipc messages, and how many messages to forward automatically starting at initialization. A count of 0 means every message is forwarded indefinitely.

0: disabled;

1: enabled, but forwarding does not start at initialization;

-1: enabled, and forwarding starts at initialization with count = 0;

Other positive number: enabled, and forwarding starts at initialization with count = grpc_forward.
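The grpc_forward values listed above can be decoded into three independent properties. This is a hypothetical helper that mirrors the documented values; it treats negative values other than -1 as errors, which is an assumption since they are not described.

```python
from typing import NamedTuple, Optional


class ForwardMode(NamedTuple):
    enabled: bool
    start_at_init: bool
    count: Optional[int]  # messages to forward; 0 means every message forever


def decode_grpc_forward(value: int) -> ForwardMode:
    """Interpret a grpc_forward setting according to the documented values."""
    if value == 0:
        return ForwardMode(enabled=False, start_at_init=False, count=None)
    if value == 1:
        return ForwardMode(enabled=True, start_at_init=False, count=None)
    if value == -1:
        return ForwardMode(enabled=True, start_at_init=True, count=0)
    if value > 1:
        return ForwardMode(enabled=True, start_at_init=True, count=value)
    # Negative values other than -1 are undocumented; reject them.
    raise ValueError(f"unsupported grpc_forward value: {value}")


print(decode_grpc_forward(-1))  # ForwardMode(enabled=True, start_at_init=True, count=0)
```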

debug_timing

Feature Status: MFL

For debug only.

Whether to record timestamps of allocating, sending, receiving, and releasing all nvipc messages.

pcap_enable

Feature Status: MFL

For debug only.

Whether to capture nvipc messages to pcap file.

aerial_metrics_backend_address

Feature Status: MFL

Aerial Prometheus metrics backend address

pucch_dtx_thresholds

Feature Status: MFL

Array of PUCCH DTX thresholds. Units are dB.

Default value if not present is -100 dB.

Example:

pucch_dtx_thresholds: [-100.0, -100.0, -100.0, -100.0, -100.0]

enable_precoding

Feature Status: MFL

Enables or disables parsing of precoding PDUs in the L2 Adapter.

Default value is 0

enable_precoding: 0/1

enable_beam_forming

Feature Status: MFL

Enables or disables parsing of beam IDs in the L2 Adapter.

Default value is 0

enable_beam_forming: 1

pusch_cfo (deprecated)

Feature Status: MFL

This field is deprecated in Aerial SDK 22.1.

[STRIKEOUT:Enable/Disable CFO correction for cuPHY’s PUSCH processing]

[STRIKEOUT:Default value is 0 ]

[STRIKEOUT:pusch_cfo: 1]

pusch_tdi (deprecated)

Feature Status: MFL

This field is deprecated in Aerial SDK 22.1.

[STRIKEOUT:Enable Time domain interpolation for cuPHY’s PUSCH]

[STRIKEOUT:Default value is 0]

[STRIKEOUT:pusch_tdi: 1]

dl_tb_loc

Feature Status: MFL

Transport block location inside the nvipc buffer

Default value is 1.

dl_tb_loc: 0 # TB is located inline in nvipc’s message buffer

dl_tb_loc: 1 # TB is located in nvipc’s CPU data buffer

dl_tb_loc: 2 # TB is located in nvipc’s GPU buffer

lower_guard_bw

Feature Status: MFL

Lower guard bandwidth, in kHz. Used to derive freqOffset for each RACH occasion.

Default is 845:

lower_guard_bw: 845

staticSsbSlotNum

Feature Status: MFL

Overrides the incoming slot number with the slot number configured in YAML for SS/PBCH.

Example:

staticSsbSlotNum: 10

The application binary name for the combined O-RU + UE emulator is ru-emulator. When ru-emulator starts, it reads static configuration from a configuration yaml file. This section describes the fields in the yaml file.

core_list

Feature Status: MFL

List of CPU cores that the RU Emulator can use

nic_interface

Feature Status: MFL

PCIe address of the NIC to use, e.g. b5:00.1

peerethaddr

Feature Status: MFL

MAC address of cuPHYController port

nvlog_name

Feature Status: Unassigned

The nvlog instance name for ru-emulator. Detailed nvlog configurations are in nvlog_config.yaml

cell_configs

Cell configurations agreed upon with the DU

name

Feature Status: MFL

Cell string name (largely unused)

eth

Feature Status: MFL

Cell MAC address

compression_bits

Feature Status: MFL

Compression method for DL throughput packets

decompression_bits

Feature Status: MFL

Compression method for sending UL packets

flow_list

Feature Status: MFL

eAxC list

eAxC_prach_list

Feature Status: MFL

eAxC prach list

vlan

Feature Status: MFL

VLAN to use for RX and TX

nic

Feature Status: Unassigned

Index of the nic to use in the nics list

tti

Feature Status: MFL

Slot indication interval

Deprecated: dl_compress_bits

Feature Status: MFL

compression bits for DL

Deprecated: ul_compress_bits

Feature Status: MFL

compression bits for UL

validate_dl_timing

Validate DL timing (requires PTP synchronization)

Deprecated: dl_timing_delay_us

Feature Status: MFL

t1a_max_up from ORAN

timing_histogram

Feature Status: MFL

generate histogram

timing_histogram_bin_size

Feature Status: MFL

histogram bin size

Deprecated: dl_symbol_window_size

Feature Status: MFL

symbol accepted window size

oran_timing_info

dl_c_plane_timing_delay

Feature Status: Unassigned

t1a_max_up from ORAN

dl_c_plane_window_size

Feature Status: Unassigned

DL C-plane RX on-time window size

ul_c_plane_timing_delay

Feature Status: Unassigned

T1a_max_cp_ul from ORAN

ul_c_plane_window_size

Feature Status: Unassigned

UL C-plane RX on-time window size

dl_u_plane_timing_delay

Feature Status: Unassigned

T2a_max_up from ORAN

dl_u_plane_window_size

Feature Status: Unassigned

DL U-plane RX on-time window size

ul_u_plane_tx_offset

Feature Status: Unassigned

Ta4_min_up from ORAN

During run-time, Aerial components can be re-configured or queried for status via gRPC remote procedure calls (RPCs). The RPCs are defined in “protocol buffers” syntax, allowing support for clients written in any of the languages supported by gRPC and protocol buffers.

More information about gRPC may be found at: https://grpc.io/docs/what-is-grpc/core-concepts/

More information about protocol buffers may be found at: https://developers.google.com/protocol-buffers

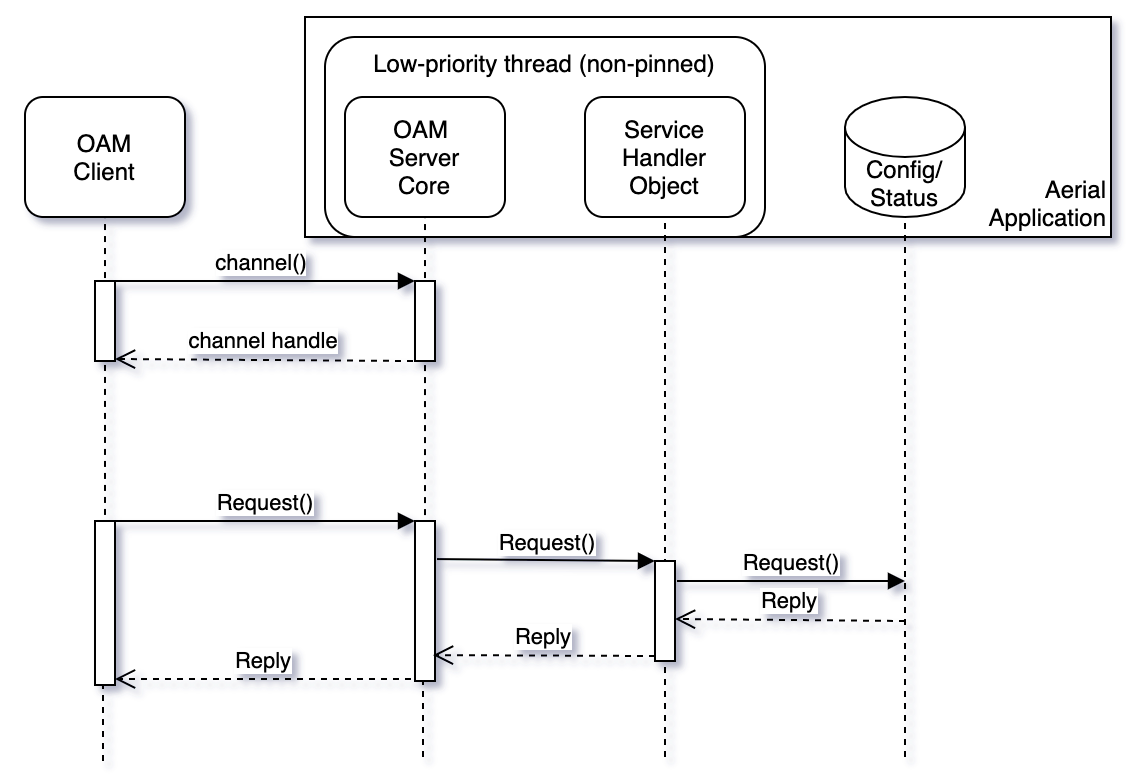

Simple Request/Reply Flow

Feature Status: MFL

Aerial applications support a request/reply flow using the gRPC framework with protobufs messages. At run-time, certain configuration items may be updated and certain status information may be queried. An external OAM client interfaces with the Aerial application acting as the gRPC server.

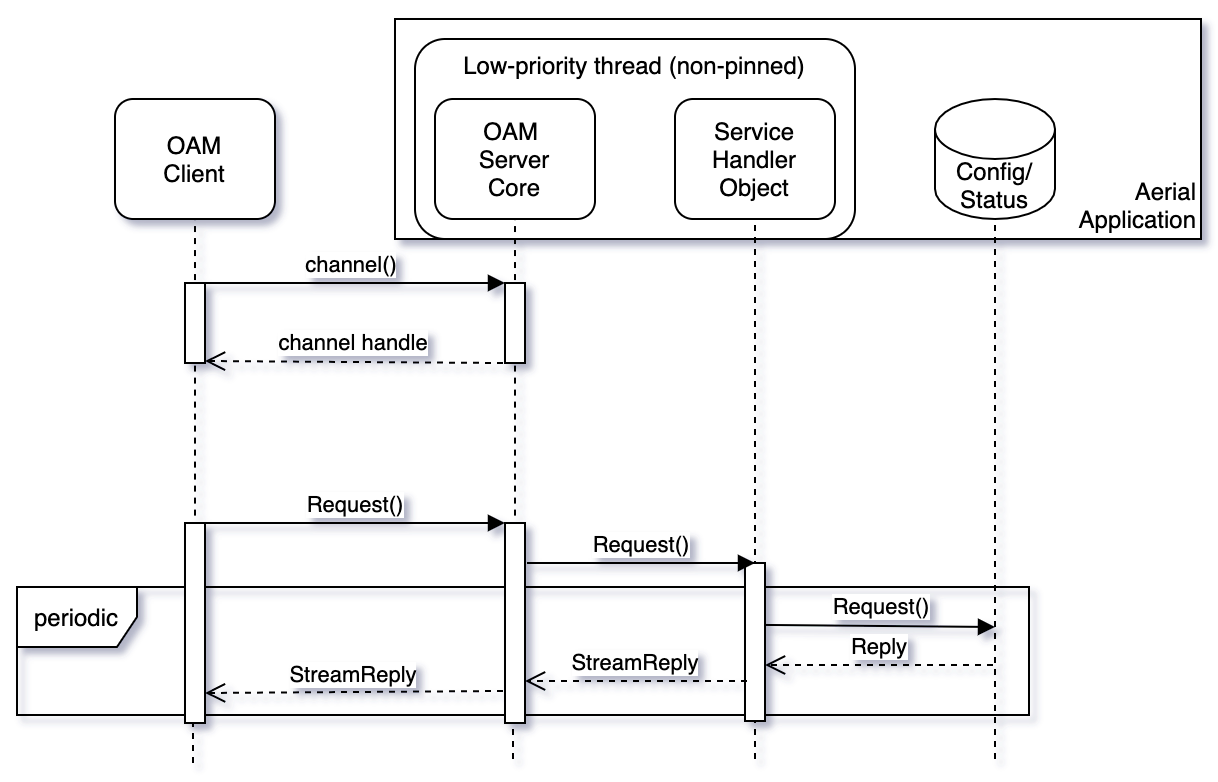

Streaming Request/Replies

Feature Status: MFL

Aerial applications support the gRPC streaming feature for sending (a)periodic status between client and server.

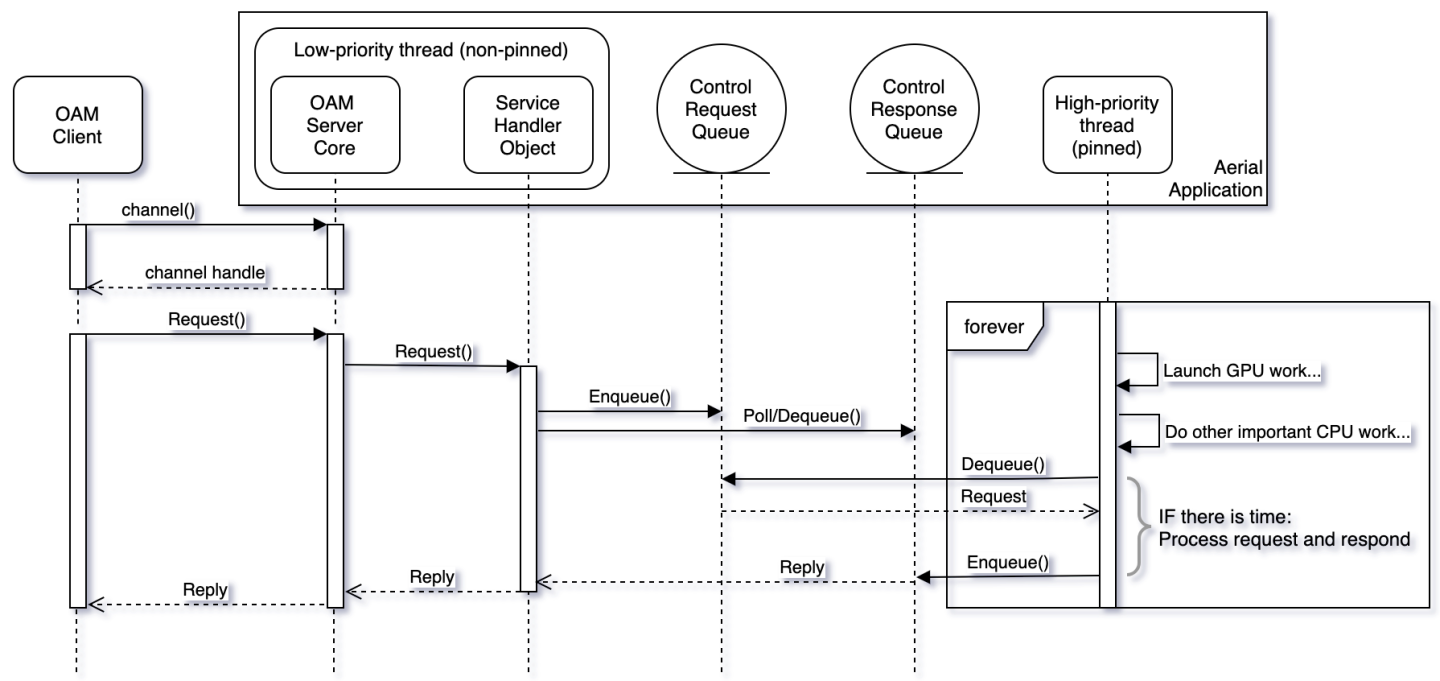

Asynchronous Interthread Communication

Feature Status: MFL

Certain request/reply scenarios require interaction with the high-priority CPU-pinned threads orchestrating GPU work. These interactions occur through Aerial-internal asynchronous queues, and requests are processed on a best effort basis which prioritizes the orchestration of GPU kernel launches and other L1 tasks.

Aerial Common Service definition

```protobuf
/*
 * Copyright (c) 2021, NVIDIA CORPORATION. All rights reserved.
 *
 * NVIDIA CORPORATION and its licensors retain all intellectual property
 * and proprietary rights in and to this software, related documentation
 * and any modifications thereto. Any use, reproduction, disclosure or
 * distribution of this software and related documentation without an express
 * license agreement from NVIDIA CORPORATION is strictly prohibited.
 */

syntax = "proto3";

package aerial;

service Common {
  rpc GetSFN (GenericRequest) returns (SFNReply) {}
  rpc GetCpuUtilization (GenericRequest) returns (CpuUtilizationReply) {}
  rpc SetPuschH5DumpNextCrc (GenericRequest) returns (DummyReply) {}
  rpc GetFAPIStream (FAPIStreamRequest) returns (stream FAPIStreamReply) {}
}

message GenericRequest {
  string name = 1;
}

message SFNReply {
  int32 sfn = 1;
  int32 slot = 2;
}

message DummyReply {
}

message CpuUtilizationPerCore {
  int32 core_id = 1;
  int32 utilization_x1000 = 2;
}

message CpuUtilizationReply {
  repeated CpuUtilizationPerCore core = 1;
}

message FAPIStreamRequest {
  int32 client_id = 1;
  int32 total_msgs_requested = 2;
}

message FAPIStreamReply {
  int32 client_id = 1;
  bytes msg_buf = 2;
  bytes data_buf = 3;
}
```

rpc GetCpuUtilization

Feature Status: MFL

GetCpuUtilization returns a variable-length array of CPU utilization values, one per high-priority core.

CPU utilization is also available through the Prometheus node exporter; however, the design approach used by Aerial high-priority threads makes each of their cores appear 100% utilized there. This RPC retrieves the actual CPU utilization of the high-priority threads, each of which is pinned to a specific CPU core.

rpc GetFAPIStream

Feature Status: MFL

This RPC requests snooping of one or more SCF FAPI messages (the count can be unlimited). The snooped messages are delivered from the Aerial gRPC server to a third-party client. See cuPHY-CP/cuphyoam/examples/aerial_get_l2msgs.py for an example client.

rpc TerminateCuphycontroller

Feature Status: MFL

This RPC terminates cuPHYController immediately.

rpc CellParamUpdateRequest

Feature Status: MFL

This RPC message updates cell configuration without stopping the cell. Message specification:

```protobuf
message CellParamUpdateRequest {
  int32 cell_id = 1;
  string dst_mac_addr = 2;
  int32 vlan_tci = 3;
}
```

dst_mac_addr must be in ‘XX:XX:XX:XX:XX:XX’ format.

vlan_tci must contain the full 16-bit TCI value of the 802.1Q tag (PCP, DEI, and VLAN ID), not only the VLAN ID.
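Because vlan_tci carries the whole 802.1Q tag control field, a client has to pack the priority code point (PCP, 3 bits), the drop-eligible indicator (DEI, 1 bit), and the 12-bit VLAN ID itself. The sketch below shows that packing and the MAC format check. It builds a plain dict instead of calling generated gRPC stubs, and the helper names are hypothetical.

```python
import re

MAC_RE = re.compile(r"[0-9A-Fa-f]{2}(?::[0-9A-Fa-f]{2}){5}")


def make_vlan_tci(vlan_id: int, pcp: int = 0, dei: int = 0) -> int:
    """Pack an IEEE 802.1Q TCI: PCP (3 bits) | DEI (1 bit) | VLAN ID (12 bits)."""
    if not 0 <= vlan_id <= 0xFFF:
        raise ValueError("vlan_id must fit in 12 bits")
    if not 0 <= pcp <= 7:
        raise ValueError("pcp must fit in 3 bits")
    if dei not in (0, 1):
        raise ValueError("dei must be 0 or 1")
    return (pcp << 13) | (dei << 12) | vlan_id


def cell_param_update_fields(cell_id: int, dst_mac_addr: str, vlan_id: int, pcp: int) -> dict:
    """Field values for a CellParamUpdateRequest, validated before sending."""
    if not MAC_RE.fullmatch(dst_mac_addr):
        raise ValueError("dst_mac_addr must be in XX:XX:XX:XX:XX:XX format")
    return {"cell_id": cell_id, "dst_mac_addr": dst_mac_addr,
            "vlan_tci": make_vlan_tci(vlan_id, pcp)}


print(hex(make_vlan_tci(vlan_id=2, pcp=7)))  # 0xe002
```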

Log Levels

Feature Status: MFL

Nvlog supports the following log levels: Fatal, Error, Console, Warning, Info, Debug, and Verbose

A Fatal log message results in process termination; for all other log levels, the process continues execution. A typical deployment sends the Fatal, Error, and Console levels to stdout. The Console level is for messages that are neither warnings nor errors but should still be printed to stdout.

nvlog

Feature Status: MFL

This YAML container holds the nvlog configuration parameters; see nvlog_config.yaml.

name

Feature Status: MFL

Used to create the shared memory log file. The shared memory handle is /dev/shm/${name}.log and the temporary log file is named /tmp/${name}.log.

primary

Feature Status: MFL

For all processes logging to the same file, set the first process to start as primary and the others as secondary.

shm_log_level

Feature Status: MFL

Sets the log level threshold for the high performance shared memory logger. Log messages with a level at or below this threshold will be sent to the shared memory logger.

Log levels: 0 - NONE, 1 - FATAL, 2 - ERROR, 3 - CONSOLE, 4 - WARNING, 5 - INFO, 6 - DEBUG, 7 - VERBOSE

Setting the log level to LOG_NONE means no logs will be sent to the shared memory logger.

console_log_level

Feature Status: MFL

Sets the log level threshold for printing to the console. Log messages with a level at or below this threshold will be printed to stdout.
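Using the numeric levels listed for shm_log_level, the two thresholds act independently: a message reaches each sink whose threshold is at or above its level. The sketch assumes the same numbering applies to console_log_level and that every message carries a level from FATAL to VERBOSE; the enum and function are illustrative, not nvlog's API.

```python
from enum import IntEnum


class LogLevel(IntEnum):
    NONE = 0
    FATAL = 1
    ERROR = 2
    CONSOLE = 3
    WARNING = 4
    INFO = 5
    DEBUG = 6
    VERBOSE = 7


def sinks_for(level: LogLevel, shm_log_level: LogLevel, console_log_level: LogLevel) -> list:
    """Return the sinks that receive a message of the given level."""
    sinks = []
    if level <= shm_log_level:      # NONE (0) as a threshold admits no messages
        sinks.append("shm")
    if level <= console_log_level:
        sinks.append("console")
    return sinks


print(sinks_for(LogLevel.ERROR, LogLevel.INFO, LogLevel.CONSOLE))    # ['shm', 'console']
print(sinks_for(LogLevel.WARNING, LogLevel.INFO, LogLevel.CONSOLE))  # ['shm']
print(sinks_for(LogLevel.DEBUG, LogLevel.INFO, LogLevel.CONSOLE))    # []
```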

max_file_size_bits

Feature Status: MFL

Defines the size of the rotating log file /var/log/aerial/${name}.log. Size = 2 ^ bits.

shm_cache_size_bits

Feature Status: MFL

Defines the size of the SHM cache file /dev/shm/${name}.log. Size = 2 ^ bits.
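Both *_size_bits settings are exponents, so each increment doubles the size. Assuming the size is in bytes, the mapping from a bits value to a file size is a single shift; the helper and the example values are hypothetical.

```python
def size_from_bits(bits: int) -> int:
    """Size = 2^bits (assumed to be bytes)."""
    if bits < 0:
        raise ValueError("bits must be non-negative")
    return 1 << bits


for bits in (20, 25, 30):
    size = size_from_bits(bits)
    print(bits, size, f"{size / 2**20:g} MiB")
```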

log_buf_size

Feature Status: MFL

Maximum log string length for a single call to the nvlog API

max_threads

Feature Status: MFL

The maximum total number of threads that can use nvlog

save_to_file

Feature Status: MFL

Whether to copy and save the SHM cache log to a rotating log file under /var/log/aerial/ folder

cpu_core_id

Feature Status: MFL

CPU core ID for the background log saving thread. -1 means the core is not pinned.

prefix_opts

Feature Status: MFL

Bitmask selecting the fields prepended to each log message:

bit5 - thread_id

bit4 - sequence number

bit3 - log level

bit2 - module type

bit1 - date

bit0 - time stamp

Refer to nvlog.h for more details.
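Since prefix_opts is a bitmask, a value is formed by OR-ing the bits of the desired fields. A minimal sketch; the dictionary keys are descriptive labels chosen here rather than nvlog identifiers, and nvlog.h remains the authoritative reference.

```python
# Bit positions as listed for prefix_opts.
PREFIX_BITS = {
    "time_stamp": 0,
    "date": 1,
    "module_type": 2,
    "log_level": 3,
    "sequence_number": 4,
    "thread_id": 5,
}


def encode_prefix_opts(*fields: str) -> int:
    """OR together the bits for the requested prefix fields."""
    value = 0
    for field in fields:
        value |= 1 << PREFIX_BITS[field]
    return value


def decode_prefix_opts(value: int) -> list:
    """List the enabled prefix fields, highest bit first."""
    return [name for name, bit in sorted(PREFIX_BITS.items(), key=lambda kv: -kv[1])
            if (value >> bit) & 1]


opts = encode_prefix_opts("time_stamp", "log_level", "thread_id")
print(opts, bin(opts))           # 41 0b101001
print(decode_prefix_opts(opts))  # ['thread_id', 'log_level', 'time_stamp']
```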

nvlog_observer

Feature Status: Unassigned

Whether to run nvlog_observer as the primary process.

0: Do not run nvlog_observer, or run it as a secondary process.

1: Run nvlog_observer as the primary process.

The OAM Metrics API is used internally by cuPHY-CP components to report metrics (counters, gauges, and histograms). The metrics are exposed via a Prometheus Aerial exporter.

Host Metrics

Feature Status: MFL

Host metrics are provided via the Prometheus node exporter. The node exporter provides many thousands of metrics about the host hardware and OS, including, but not limited to:

CPU statistics

Disk statistics

Filesystem statistics

Memory statistics

Network statistics

Please refer to https://github.com/prometheus/node_exporter and https://prometheus.io/docs/guides/node-exporter/ for detailed documentation on the node exporter.

GPU Metrics

Feature Status: MFL

GPU hardware metrics are provided through the GPU Operator via the Prometheus dcgm exporter. The dcgm exporter provides many thousands of metrics about the GPU and PCIe bus connection, including, but not limited to:

GPU hardware clock rates

GPU hardware temperatures

GPU hardware power consumption

GPU memory utilization

GPU hardware errors including ECC

PCIe throughput

Please refer to https://github.com/NVIDIA/gpu-operator for details on the GPU operator.

Please refer to https://github.com/NVIDIA/gpu-monitoring-tools for detailed documentation on the dcgm exporter.

An example Grafana dashboard is available at https://grafana.com/grafana/dashboards/12239

Aerial Metric Naming Conventions

Feature Status: MFL

In addition to metrics available through the node exporter and dcgm exporter, Aerial exposes several application metrics.

Metric names are per https://prometheus.io/docs/practices/naming/ and will follow the format aerial_<component>_<sub-component>_<metric description>_<units>

Metric types are per https://prometheus.io/docs/concepts/metric_types/
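The naming format can be applied mechanically. This hypothetical helper joins the parts and checks the result against the character set Prometheus allows for metric names; the final part is either a unit (such as ns) or the total suffix Prometheus recommends for counters. The example calls reproduce metric names listed later in this section.

```python
import re

# Character set allowed for Prometheus metric names.
METRIC_NAME_RE = re.compile(r"[a-zA-Z_:][a-zA-Z0-9_:]*")


def aerial_metric_name(component: str, sub_component: str, description: str,
                       suffix: str = "") -> str:
    """Join parts as aerial_<component>_<sub-component>_<description>_<suffix>.

    Empty sub_component or suffix parts are skipped.
    """
    parts = ["aerial", component, sub_component, description, suffix]
    name = "_".join(p for p in parts if p)
    if not METRIC_NAME_RE.fullmatch(name):
        raise ValueError(f"invalid Prometheus metric name: {name!r}")
    return name


print(aerial_metric_name("cuphycp", "uplane", "rx_bytes", "total"))
print(aerial_metric_name("cuphycp", "net", "tx_accu_sched_clock_queue_jitter", "ns"))
```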

The component and sub-component definitions are in the table below:

| Component | Sub-Component | Description |
|---|---|---|
| cuphycp | | cuPHY Control Plane application |
| | fapi | L2/L1 interface metrics |
| | cplane | Fronthaul C-plane metrics |
| | uplane | Fronthaul U-plane metrics |
| | net | Generic network interface metrics |
| cuphy | | cuPHY L1 library |
| | pbch | Physical Broadcast Channel metrics |
| | pdsch | Physical Downlink Shared Channel metrics |
| | pdcch | Physical Downlink Control Channel metrics |
| | pusch | Physical Uplink Shared Channel metrics |
| | pucch | Physical Uplink Control Channel metrics |
| | prach | Physical Random Access Channel metrics |

For each metric, the description, metric type and metric tags are provided. Tags are a way of providing granularity to metrics without creating new metrics.

Metrics exporter port

Feature Status: MFL

Aerial metrics are exported on port TBD

L2/L1 Interface Metrics

aerial_cuphycp_slots_total

Feature Status: MFL

Description: Counts the total number of processed slots

Metric type: counter

Metric tags:

type: “UL” or “DL”

cell: “cell number”

aerial_cuphycp_fapi_rx_packets

Feature Status: MFL

Description: Counts the total number of messages L1 receives from L2

Metric type: counter

Metric tags:

msg_type: “type of PDU”

cell: “cell number”

aerial_cuphycp_fapi_tx_packets

Feature Status: MFL

Description: Counts the total number of messages L1 transmits to L2

Metric type: counter

Metric tags:

msg_type: “type of PDU”

cell: “cell number”

Fronthaul Interface Metrics

aerial_cuphycp_cplane_tx_packets_total

Feature Status: MFL

Description: Counts the total number of C-plane packets transmitted by L1 over the ORAN fronthaul interface

Metric type: counter

Metric tags:

cell: “cell number”

aerial_cuphycp_cplane_tx_bytes_total

Feature Status: MFL

Description: Counts the total number of C-plane bytes transmitted by L1 over the ORAN fronthaul interface

Metric type: counter

Metric tags:

cell: “cell number”

aerial_cuphycp_uplane_rx_packets_total

Feature Status: MFL

Description: Counts the total number of U-plane packets received by L1 over the ORAN fronthaul interface

Metric type: counter

Metric tags:

cell: “cell number”

aerial_cuphycp_uplane_rx_bytes_total

Feature Status: MFL

Description: Counts the total number of U-plane bytes received by L1 over the ORAN fronthaul interface

Metric type: counter

Metric tags:

cell: “cell number”

aerial_cuphycp_uplane_tx_packets_total

Feature Status: MFL

Description: Counts the total number of U-plane packets transmitted by L1 over the ORAN fronthaul interface

Metric type: counter

Metric tags:

cell: “cell number”

aerial_cuphycp_uplane_tx_bytes_total

Feature Status: MFL

Description: Counts the total number of U-plane bytes transmitted by L1 over the ORAN fronthaul interface

Metric type: counter

Metric tags:

cell: “cell number”

aerial_cuphycp_uplane_lost_prbs_total

Feature Status: MFL

Description: Counts the total number of PRBs expected but not received by L1 over the ORAN fronthaul interface

Metric type: counter

Metric tags:

cell: “cell number”

channel: One of “prach” or “pusch”
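The fronthaul counters only increase, so throughput is derived from the difference between two samples. In practice PromQL's rate() does this (and also extrapolates to the edges of the query window, which this sketch omits); the function below is a simplified, hypothetical illustration that treats a decrease as a counter reset in the same basic way.

```python
def counter_rate(prev_value: float, prev_ts_s: float, value: float, ts_s: float) -> float:
    """Per-second increase of a Prometheus counter between two samples."""
    if ts_s <= prev_ts_s:
        raise ValueError("samples must be in increasing time order")
    delta = value - prev_value
    if delta < 0:
        # Counters only go up, so a decrease means a reset (e.g. a restart);
        # count only the increase since the reset.
        delta = value
    return delta / (ts_s - prev_ts_s)


# Two hypothetical scrapes of aerial_cuphycp_uplane_rx_bytes_total, 10 s apart.
bytes_per_s = counter_rate(1_000_000_000, 0.0, 1_250_000_000, 10.0)
print(f"{bytes_per_s * 8 / 1e6:.0f} Mbit/s")  # 200 Mbit/s
```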

NIC Metrics

aerial_cuphycp_net_rx_failed_packets_total

Feature Status: MFL

Description: Counts the total number of erroneous packets received

Metric type: counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_rx_nombuf_packets_total

Feature Status: MFL

Description: Counts the total number of received packets dropped because no free mbufs were available

Metric type: Counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_rx_dropped_packets_total

Feature Status: MFL

Description: Counts the total number of incoming packets dropped by the NIC hardware

Metric type: Counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_tx_failed_packets_total

Feature Status: MFL

Description: Counts the total number of times a packet failed to be transmitted

Metric type: Counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_tx_accu_sched_missed_interrupt_errors_total

Feature Status: MFL

Description: Counts the total number of times accurate send scheduling missed an interrupt

Metric type: Counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_tx_accu_sched_rearm_queue_errors_total

Feature Status: MFL

Description: Counts the total number of accurate send scheduling rearm queue errors

Metric type: Counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_tx_accu_sched_clock_queue_errors_total

Feature Status: MFL

Description: Counts the total number of accurate send scheduling clock queue errors

Metric type: Counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_tx_accu_sched_timestamp_past_errors_total

Feature Status: MFL

Description: Counts the total number of accurate send scheduling errors caused by a TX timestamp that is in the past

Metric type: Counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_tx_accu_sched_timestamp_future_errors_total

Feature Status: MFL

Description: Counts the total number of accurate send scheduling errors caused by a TX timestamp that is too far in the future

Metric type: Counter

Metric tags:

nic: “nic port BDF address”

aerial_cuphycp_net_tx_accu_sched_clock_queue_jitter_ns

Feature Status: MFL

Description: Current measurement of accurate send scheduling clock queue jitter, in units of nanoseconds

Metric type: Gauge

Metric tags:

nic: “nic port BDF address”

Details:

This gauge shows the TX scheduling timestamp jitter, i.e. how far each individual Clock Queue (CQ) completion is from UTC time.

If the CQ completion frequency is set to 2 MHz (tx_pp=500), the following completions might be observed:

cqe 0 at 0 ns

cqe 1 at 505 ns

cqe 2 at 996 ns

cqe 3 at 1514 ns

…

tx_pp_jitter is the time difference between two consecutive CQ completions
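Using the completion times listed above, the intervals between consecutive completions, their deviation from the nominal 500 ns period, and each completion's offset from its ideal position on a 500 ns grid can be computed as follows. This is only an illustrative sketch of the arithmetic; it does not reproduce how the driver or the NIC actually aggregates these values into the gauge.

```python
NOMINAL_PERIOD_NS = 500  # tx_pp=500: one Clock Queue completion every 500 ns (2 MHz)

# Completion timestamps from the example above, in nanoseconds
completions_ns = [0, 505, 996, 1514]

# Interval between each pair of consecutive completions
intervals_ns = [b - a for a, b in zip(completions_ns, completions_ns[1:])]

# How far each interval deviates from the nominal period
interval_error_ns = [i - NOMINAL_PERIOD_NS for i in intervals_ns]

# How far each completion is from its ideal position k * 500 ns on the time grid
grid_error_ns = [t - k * NOMINAL_PERIOD_NS for k, t in enumerate(completions_ns)]

print(intervals_ns)       # [505, 491, 518]
print(interval_error_ns)  # [5, -9, 18]
print(grid_error_ns)      # [0, 5, -4, 14]
```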

aerial_cuphycp_net_tx_accu_sched_clock_queue_wander_ns

Feature Status: MFL

Description: Current measurement of the divergence of Clock Queue (CQ) completions from UTC time over a longer time period (~8s)

Metric type: Gauge

Metric tags:

nic: “nic port BDF address”

Application Performance Metrics

aerial_cuphycp_slot_processing_duration_us

Feature Status: MFL

Description: Counts the total number of slots with GPU processing duration in each 250us-wide histogram bin

Metric type: Histogram

Metric tags:

cell: “cell number”

channel: one of “pbch”, “pdcch”, “pdsch”, “prach”, or “pusch”

le: upper bound (less than or equal to) of each 250us-wide histogram bin: 250, 500, …, 2000, +inf
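The le tag follows the Prometheus histogram naming convention. If these histograms also follow the standard Prometheus semantics, each bucket is cumulative: the bucket with le="500" counts every slot that took 500 us or less, including the slots already counted in le="250". A minimal sketch, assuming those semantics, of how raw durations map to cumulative bucket counts and how the count in each individual 250us-wide bin is recovered by differencing adjacent buckets; the durations are made up.

```python
import math

# Upper bounds of the le buckets: 250, 500, ..., 2000 us, then +inf
BUCKET_BOUNDS_US = [250 * k for k in range(1, 9)] + [math.inf]


def cumulative_buckets(durations_us):
    """Prometheus-style cumulative counts: bucket le=b counts observations <= b."""
    return [sum(1 for d in durations_us if d <= b) for b in BUCKET_BOUNDS_US]


def per_bin_counts(cumulative):
    """Recover the count in each 250 us-wide bin by differencing adjacent buckets."""
    return [c - p for p, c in zip([0] + cumulative[:-1], cumulative)]


# Hypothetical GPU processing durations for six slots, in microseconds
durations = [180, 240, 260, 610, 1990, 2300]
cum = cumulative_buckets(durations)
print(cum)                  # [2, 3, 4, 4, 4, 4, 4, 5, 6]
print(per_bin_counts(cum))  # [2, 1, 1, 0, 0, 0, 0, 1, 1]
```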

aerial_cuphycp_slot_processing_duration_us

Feature Status: Roadmap

Description: Counts the total number of slots with GPU processing duration in each 250us-wide histogram bin

Metric type: Histogram

Metric tags:

cell: “cell number”

channel: “pucch”

le: upper bound (less than or equal to) of each 250us-wide histogram bin: 250, 500, …, 2000, +inf

PUSCH Metrics

aerial_cuphycp_slot_pusch_processing_duration_us

Feature Status: MFL

Description: Counts the total number of PUSCH slots with GPU processing duration in each 250us-wide histogram bin

Metric type: Histogram

Metric tags:

cell: “cell number”

le: upper bound (less than or equal to) of each 250us-wide histogram bin, covering 0 to 2000us

aerial_cuphycp_pusch_rx_tb_bytes_total

Feature Status: MFL

Description: Counts the total number of transport block bytes received in the PUSCH channel

Metric type: Counter

Metric tags:

cell: “cell number”

aerial_cuphycp_pusch_rx_tb_total

Feature Status: MFL

Description: Counts the total number of transport blocks received in the PUSCH channel

Metric type: Counter

Metric tags:

cell: “cell number”

aerial_cuphycp_pusch_rx_tb_crc_error_total

Feature Status: MFL

Description: Counts the total number of transport blocks received with CRC errors in the PUSCH channel

Metric type: Counter

Metric tags:

cell: “cell number”
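Combined with aerial_cuphycp_pusch_rx_tb_total, this counter gives the fraction of PUSCH transport blocks that failed CRC over an interval, which is a rough indicator of the uplink block error rate. A minimal sketch that works on the deltas of the two counters between two samples; the function name and sample values are hypothetical.

```python
def crc_error_ratio(tb_prev, tb_curr, err_prev, err_curr):
    """Fraction of PUSCH transport blocks that failed CRC between two samples.

    tb_*  : samples of aerial_cuphycp_pusch_rx_tb_total
    err_* : samples of aerial_cuphycp_pusch_rx_tb_crc_error_total
    Returns None when no transport blocks were received in the interval.
    """
    tb_delta = tb_curr - tb_prev
    err_delta = err_curr - err_prev
    if tb_delta < 0 or err_delta < 0:
        raise ValueError("counter went backwards; was cuphycontroller restarted?")
    if tb_delta == 0:
        return None
    return err_delta / tb_delta


# Hypothetical samples for cell="1"
ratio = crc_error_ratio(tb_prev=10_000, tb_curr=30_000, err_prev=120, err_curr=320)
print(f"{ratio:.2%}")  # 1.00%
```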

aerial_cuphycp_pusch_nrofuesperslot

Feature Status: MFL

Description: Histogram of the number of UEs processed per slot in the PUSCH channel

Metric type: Histogram

Metric tags:

cell: “cell number”

le: upper bound (less than or equal to) of each histogram bin: 2, 4, …, 24, +inf
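From cumulative buckets like these, an approximate percentile (for example the median number of UEs per slot) can be estimated by linear interpolation inside the bucket that contains the target rank, which is the approach PromQL's histogram_quantile() takes. A simplified sketch of that estimate, assuming cumulative Prometheus-style buckets whose finite bounds step by 2 up to 24; the function is a re-implementation for illustration and the bucket counts are hypothetical.

```python
import math


def histogram_quantile(q, bounds, cumulative):
    """Estimate the q-quantile (0 <= q <= 1) from cumulative histogram buckets.

    bounds     : ascending bucket upper bounds, the last one math.inf
    cumulative : cumulative count for each bound (same length as bounds)
    Interpolates linearly inside the bucket holding the target rank, with the
    lowest bucket assumed to start at 0. If the rank lands in the +inf bucket,
    the largest finite bound is returned, since nothing more precise is known.
    """
    total = cumulative[-1]
    if total == 0:
        return math.nan
    rank = q * total
    for i, (upper, count) in enumerate(zip(bounds, cumulative)):
        if count >= rank:
            if math.isinf(upper):
                return bounds[i - 1]
            lower = 0.0 if i == 0 else bounds[i - 1]
            prev_count = 0 if i == 0 else cumulative[i - 1]
            in_bucket = count - prev_count
            if in_bucket == 0:
                return upper
            return lower + (upper - lower) * (rank - prev_count) / in_bucket
    return bounds[-2]


bounds = list(range(2, 25, 2)) + [math.inf]   # 2, 4, ..., 24, +inf
cum = [100, 400, 800, 950] + [1000] * 9       # hypothetical counts for 1000 slots
print(histogram_quantile(0.5, bounds, cum))            # 4.5
print(f"{histogram_quantile(0.9, bounds, cum):.2f}")   # 7.33
```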

PRACH Metrics

aerial_cuphy_prach_rx_preambles_total

Feature Status: MFL

Description: Counts the total number of preambles detected in the PRACH channel

Metric type: Counter

Metric tags:

cell: “cell number”

PDSCH Metrics

aerial_cuphycp_slot_pdsch_processing_duration_us

Feature Status: MFL

Description: Counts the total number of PDSCH slots with GPU processing duration in each 250us-wide histogram bin

Metric type: Histogram

Metric tags:

cell: “cell number”

le: upper bound (less than or equal to) of each 250us-wide histogram bin, covering 0 to 2000us

aerial_cuphy_pdsch_tx_tb_bytes_total

Feature Status: MFL

Description: Counts the total number of transport block bytes transmitted in the PDSCH channel

Metric type: Counter

Metric tags:

cell: “cell number”

aerial_cuphy_pdsch_tx_tb_total

Feature Status: MFL

Description: Counts the total number of transport blocks transmitted in the PDSCH channel

Metric type: Counter

Metric tags:

cell: “cell number”

aerial_cuphycp_pdsch_nrofuesperslot

Feature Status: MFL

Description: Histogram of the number of UEs processed per slot in the PDSCH channel

Metric type: Histogram

Metric tags:

cell: “cell number”

le: upper bound (less than or equal to) of each histogram bin: 2, 4, …, 24, +inf

Example cuphycontroller config

# Copyright (c) 2017-2021, NVIDIA CORPORATION. All rights reserved.
#
# Redistribution and use in source and binary forms, with or without modification, are permitted
# provided that the following conditions are met:
#     * Redistributions of source code must retain the above copyright notice, this list of
#       conditions and the following disclaimer.
#     * Redistributions in binary form must reproduce the above copyright notice, this list of
#       conditions and the following disclaimer in the documentation and/or other materials
#       provided with the distribution.
#     * Neither the name of the NVIDIA CORPORATION nor the names of its contributors may be used
#       to endorse or promote products derived from this software without specific prior written
#       permission.
#
# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR
# IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND
# FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL NVIDIA CORPORATION BE LIABLE
# FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,
# BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS;
# OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT,
# STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE
# OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

---
l2adapter_filename: l2_adapter_config_nrSim_SCF.yaml
aerial_metrics_backend_address: 127.0.0.1:8081
cuphydriver_config:
  standalone: 0
  validation: 0
  num_slots: 8
  log_level: DBG
  profiler_sec: 0
  dpdk_thread: 1
  dpdk_verbose_logs: 0
  accu_tx_sched_res_ns: 500
  accu_tx_sched_disable: 0
  pdump_client_thread: -1
  mps_uplink_sm: 0
  mps_downlink_sm: 0
  pdsch_fallback: 0
  dpdk_file_prefix: cuphycontroller
  nics:
    - address: 0000:b5:00.1
      mtu: 1514
      cpu_mbufs: 65536
      uplane_tx_handles: 16
      txq_count: 8
      rxq_count: 4
      txq_size: 8192
      rxq_size: 2048
      gpu: 0
  gpus:
    - 0
  # Set GPUID to the GPU that shares a PCIe switch with the NIC
  # run nvidia-smi topo -m to find out which GPU
  workers_ul:
    - 2
    - 3
  workers_dl:
    - 4
    - 5
  workers_sched_priority: 95
  workers_dl_slot_t1: 1
  workers_dl_slot_t2: 1
  workers_ul_slot_t1: 1
  workers_ul_slot_t2: 1
  workers_ul_slot_t3: 1
  prometheus_thread: -1
  section_id_pusch: 1
  section_id_prach: 3
  section_3_time_offset: 484
  section_3_freq_offset: -3276
  enable_cuphy_graphs: 0
  ul_order_timeout_cpu_ns: 8000000
  ul_order_timeout_gpu_ns: 8000000
  cplane_disable: 0
  cells:
    - name: O-RU 0
      cell_id: 1
      # set to 00:00:00:00:00:00 to use the MAC address of the NIC port
      src_mac_addr: 00:00:00:00:00:00
      dst_mac_addr: 20:04:9B:9E:27:A3
      vlan: 2
      pcp: 0
      txq_count_uplane: 1
      eAxC_id_ssb_pbch: [8,0,1,2]
      eAxC_id_pdcch: [8,0,1,2]
      eAxC_id_pdsch: [8,0,1,2]
      eAxC_id_csirs: [8,0,1,2]
      eAxC_id_pusch_pucch: [8,0,1,2]
      eAxC_id_prach: [15,7]
      compression_bits: 16
      decompression_bits: 16
      fs_offset_dl: 0
      exponent_dl: 4
      ref_dl: 0
      fs_offset_ul: 0
      exponent_ul: 4
      max_amp_ul: 65504
      mu: 1
      T1a_max_up_ns: 0
      T1a_max_cp_ul_ns: 500000
      Ta4_min_ns: 0
      Ta4_max_ns: 100000
      Tcp_adv_dl_ns: 500000
      nRxAnt: 4
      nTxAnt: 4
      nPrbUlBwp: 273
      nPrbDlBwp: 273
      pusch_prb_stride: 273
      prach_prb_stride: 12
      pusch_ldpc_n_iterations: 10
      pusch_ldpc_early_termination: 0
      tv_pusch: TVnr_7202_PUSCH_gNB_CUPHY_s0p0.h5
      tv_pdsch: TVnr_CP_F08_DS_01.1_PDSCH_gNB_CUPHY_s5p0.h5
      tv_pdcch_dl: testMac_DL_ctrl-TC2002_pdcch.h5
      tv_pdcch_ul: testMac_DL_ctrl-TC2006_pdcch.h5
      tv_pbch: TVnr_1902_SSB_gNB_CUPHY_s0p0.h5
      tv_prach: TVnr_5901_PRACH_gNB_CUPHY_s1p0.h5
    - name: O-RU 1
      cell_id: 2
      # set to 00:00:00:00:00:00 to use the MAC address of the NIC port
      src_mac_addr: 00:00:00:00:00:00
      dst_mac_addr: 26:04:9D:9E:29:B3
      vlan: 2
      pcp: 0
      txq_count_uplane: 1
      eAxC_id_ssb_pbch: [1,2,4,9]
      eAxC_id_pdcch: [1,2,4,9]
      eAxC_id_pdsch: [1,2,4,9]
      eAxC_id_csirs: [1,2,4,9]
      eAxC_id_pusch_pucch: [1,2,4,9]
      eAxC_id_prach: [5,6,7,10]
      compression_bits: 16
      decompression_bits: 16
      fs_offset_dl: 0
      exponent_dl: 4
      ref_dl: 0
      fs_offset_ul: 0
      exponent_ul: 4
      max_amp_ul: 65504
      mu: 1
      T1a_max_up_ns: 0
      T1a_max_cp_ul_ns: 500000
      Ta4_min_ns: 0
      Ta4_max_ns: 100000
      Tcp_adv_dl_ns: 500000
      nRxAnt: 4
      nTxAnt: 4
      nPrbUlBwp: 273
      nPrbDlBwp: 273
      pusch_prb_stride: 273
      prach_prb_stride: 12
      pusch_ldpc_n_iterations: 10
      pusch_ldpc_early_termination: 0
      tv_pusch: TVnr_CP_F08_US_01.1_PUSCH_gNB_CUPHY_s5p0.h5
      tv_pdsch: TVnr_CP_F08_DS_01.1_PDSCH_gNB_CUPHY_s5p0.h5
      tv_pdcch_dl: testMac_DL_ctrl-TC2002_pdcch.h5
      tv_pdcch_ul: testMac_DL_ctrl-TC2006_pdcch.h5
      tv_pbch: TVnr_1902_SSB_gNB_CUPHY_s0p0.h5
      tv_prach: TVnr_5901_PRACH_gNB_CUPHY_s1p0.h5
    - name: O-RU 2
      cell_id: 3
      # set to 00:00:00:00:00:00 to use the MAC address of the NIC port
      src_mac_addr: 00:00:00:00:00:00
      dst_mac_addr: 20:34:9A:9E:29:B3
      vlan: 2
      pcp: 0
      txq_count_uplane: 1
      eAxC_id_ssb_pbch: [1,2,4,9]
      eAxC_id_pdcch: [1,2,4,9]
      eAxC_id_pdsch: [1,2,4,9]
      eAxC_id_csirs: [1,2,4,9]
      eAxC_id_pusch_pucch: [1,2,4,9]
      eAxC_id_prach: [5,6,7,10]
      compression_bits: 16
      decompression_bits: 16
      fs_offset_dl: 0
      exponent_dl: 4
      ref_dl: 0
      fs_offset_ul: 0
      exponent_ul: 4
      max_amp_ul: 65504
      mu: 1
      T1a_max_up_ns: 0
      T1a_max_cp_ul_ns: 500000
      Ta4_min_ns: 0
      Ta4_max_ns: 100000
      Tcp_adv_dl_ns: 500000
      nRxAnt: 4
      nTxAnt: 4
      nPrbUlBwp: 273
      nPrbDlBwp: 273
      pusch_prb_stride: 273
      prach_prb_stride: 12
      pusch_ldpc_n_iterations: 10
      pusch_ldpc_early_termination: 0
      tv_pusch: TVnr_CP_F08_US_01.1_PUSCH_gNB_CUPHY_s5p0.h5
      tv_pdsch: TVnr_CP_F08_DS_01.1_PDSCH_gNB_CUPHY_s5p0.h5
      tv_pdcch_dl: testMac_DL_ctrl-TC2002_pdcch.h5
      tv_pdcch_ul: testMac_DL_ctrl-TC2006_pdcch.h5
      tv_pbch: TVnr_1902_SSB_gNB_CUPHY_s0p0.h5
      tv_prach: TVnr_5901_PRACH_gNB_CUPHY_s1p0.h5
    - name: O-RU 3
      cell_id: 4
      # set to 00:00:00:00:00:00 to use the MAC address of the NIC port
      src_mac_addr: 00:00:00:00:00:00
      dst_mac_addr: 22:34:9C:9E:29:A3
      vlan: 2
      pcp: 0
      txq_count_uplane: 1
      eAxC_id_ssb_pbch: [0,1,2,3]
      eAxC_id_pdcch: [0,1,2,3]
      eAxC_id_pdsch: [0,1,2,3]
      eAxC_id_csirs: [0,1,2,3]
      eAxC_id_pusch_pucch: [0,1,2,3]
      eAxC_id_prach: [5,6,7,10]
      compression_bits: 16
      decompression_bits: 16
      fs_offset_dl: 0
      exponent_dl: 4
      ref_dl: 0
      fs_offset_ul: 0
      exponent_ul: 4
      max_amp_ul: 65504
      mu: 1
      T1a_max_up_ns: 0
      T1a_max_cp_ul_ns: 500000
      Ta4_min_ns: 0
      Ta4_max_ns: 100000
      Tcp_adv_dl_ns: 500000
      nRxAnt: 4
      nTxAnt: 4
      nPrbUlBwp: 273
      nPrbDlBwp: 273
      pusch_prb_stride: 273
      prach_prb_stride: 12
      pusch_ldpc_n_iterations: 10
      pusch_ldpc_early_termination: 0
      tv_pusch: TVnr_CP_F08_US_01.1_PUSCH_gNB_CUPHY_s5p0.h5
      tv_pdsch: TVnr_CP_F08_DS_01.1_PDSCH_gNB_CUPHY_s5p0.h5
      tv_pdcch_dl: testMac_DL_ctrl-TC2002_pdcch.h5
      tv_pdcch_ul: testMac_DL_ctrl-TC2006_pdcch.h5
      tv_pbch: TVnr_1902_SSB_gNB_CUPHY_s0p0.h5
      tv_prach: TVnr_5901_PRACH_gNB_CUPHY_s1p0.h5
  wfreq: WFreq.h5

...

Example l2_adapter_config yaml file

# Copyright (c) 2017-2020, NVIDIA CORPORATION. All rights reserved.
#
# Redistribution and use in source and binary forms, with or without modification, are permitted
# provided that the following conditions are met:
#     * Redistributions of source code must retain the above copyright notice, this list of
#       conditions and the following disclaimer.
#     * Redistributions in binary form must reproduce the above copyright notice, this list of
#       conditions and the following disclaimer in the documentation and/or other materials
#       provided with the distribution.
#     * Neither the name of the NVIDIA CORPORATION nor the names of its contributors may be used
#       to endorse or promote products derived from this software without specific prior written
#       permission.
#
# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR
# IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND
# FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL NVIDIA CORPORATION BE LIABLE
# FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,
# BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS;
# OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT,
# STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE
# OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

---
#gnb_module
msg_type: scf_5g_fapi
phy_class: scf_5g_fapi
slot_advance: 3
# tick_generator_mode: 0 - poll + sleep; 1 - sleep; 2 - timer_fd
tick_generator_mode: 1
# Allowed maximum latency of SLOT FAPI messages sent from L2 to L1. Unit: slot
allowed_fapi_latency: 0
# Allowed tick interval error. Unit: us
allowed_tick_error: 10
timer_thread_config:
  name: timer_thread
  cpu_affinity: 9
  sched_priority: 99
message_thread_config:
  name: msg_processing
  # core assignment
  cpu_affinity: 9
  # thread priority
  sched_priority: 95
# Lowest TTI for Ticking
mu_highest: 1
slot_advance: 3
instances:
  # PHY 0
  - name: scf_gnb_configure_module_0_instance_0
  - name: scf_gnb_configure_module_0_instance_1
  - name: scf_gnb_configure_module_0_instance_2
  - name: scf_gnb_configure_module_0_instance_3
# Transport settings for nvIPC
transport:
  type: shm
  udp_config:
    local_port: 38556
    remort_port: 38555
  shm_config:
    primary: 1
    prefix: nvipc
    cuda_device_id: 0
    ring_len: 8192
    mempool_size:
      cpu_msg:
        buf_size: 8192
        pool_len: 4096
      cpu_data:
        buf_size: 576000
        pool_len: 1024
      cuda_data:
        buf_size: 307200
        pool_len: 0
  dpdk_config:
    primary: 1
    prefix: nvipc
    local_nic_pci: 0000:b5:00.0
    peer_nic_mac: 00:00:00:00:00:00
    cuda_device_id: 0
    need_eal_init: 0
    lcore_id: 11
    mempool_size:
      cpu_msg:
        buf_size: 8192
        pool_len: 4096
      cpu_data:
        buf_size: 576000
        pool_len: 1024
      cuda_data:
        buf_size: 307200
        pool_len: 0
  app_config:
    grpc_forward: 0
    debug_timing: 0
    pcap_enable: 0
dl_tb_loc: 1
enable_precoding: 0
lower_guard_bw: 845
pucch_dtx_thresholds: [-100.0, -100.0, -100.0, -100.0, -100.0]

...
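To get a rough idea of how much memory the nvIPC mempools in the shm_config section above reserve, multiply each pool's buf_size by its pool_len. A back-of-the-envelope sketch, assuming each pool preallocates exactly pool_len buffers of buf_size bytes, and ignoring the message ring (ring_len) and any per-buffer or allocator overhead; the actual nvIPC allocation may differ. The cuda_data pool contributes nothing here because its pool_len is 0.

```python
# mempool_size values from the shm_config section of the example above
MEMPOOLS = {
    "cpu_msg": {"buf_size": 8192, "pool_len": 4096},
    "cpu_data": {"buf_size": 576000, "pool_len": 1024},
    "cuda_data": {"buf_size": 307200, "pool_len": 0},
}

MIB = 1024 * 1024


def pool_bytes(pool):
    """Bytes reserved by one pool, assuming pool_len buffers of buf_size bytes each."""
    return pool["buf_size"] * pool["pool_len"]


for name, pool in MEMPOOLS.items():
    print(f"{name:10s} {pool_bytes(pool) / MIB:8.1f} MiB")

total = sum(pool_bytes(p) for p in MEMPOOLS.values())
print(f"{'total':10s} {total / MIB:8.1f} MiB")  # 594.5 MiB
```

Most of the footprint comes from cpu_data (562.5 MiB), so its pool_len is the value to revisit if host memory is tight.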

Example ru-emulator configuration file

---
ru_emulator:
  core_list: 1-19
  # PCI Address of NIC interface used
  nic_interface: b5:00.1
  # MAC address of cuPHYController port in use
  peerethaddr: 0c:42:a1:d1:d0:a1
  # VLAN agreed upon with DU
  vlan: 2
  port: 0
  # DPDK Configs
  dpdk_burst: 32
  dpdk_mbufs: 65536
  dpdk_cache: 512
  dpdk_payload_rx: 1536
  dpdk_payload_tx: 1488
  cell_configs:
    - name: "Cell1"
      eth: "20:04:9B:9E:27:A3"
      flow_list: [8, 0, 1, 2, 3, 4, 5, 6, 7, 9, 10, 11, 12, 13, 14, 15]
      eAxC_prach_list: [15, 7]
    - name: "Cell2"
      eth: "26:04:9D:9E:29:B3"
      flow_list: [1, 2, 4, 9, 0, 3, 5, 6, 7, 8, 10, 11, 12, 13, 14, 15]
    - name: "Cell3"
      eth: "20:34:9A:9E:29:B3"
      flow_list: [1, 2, 4, 9, 0, 3, 5, 6, 7, 8, 10, 11, 12, 13, 14, 15]
    - name: "Cell4"
      eth: "22:34:9C:9E:29:A3"
      flow_list: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15]
  # Specify the number of slots to run
  # num_slots_ul: 1
  # num_slots_dl: 1
  # TTI
  tti: 500
  # Interval in UL between C-plane RX and U-plane TX
  c_interval: 0
  # Expect C-plane per symbol for PUSCH (1 for yes, 0 for no)
  c_plane_per_symbol: 1
  # Expect C-plane per symbol for PRACH (1 for yes, 0 for no)
  prach_c_plane_per_symbol: 0
  # Send entire slot vs send symbol by symbol
  # send_slot: 0
  # 1 - time UL slot TX, 2 - time UL symbol TX
  timer_level: 0
  ul_enabled: 1
  dl_enabled: 1
  dl_compress_bits: 16
  ul_compress_bits: 16
  validate_dl_timing: 0
  dl_warmup_slots: 0
  dl_timing_delay_us: 345
  timing_histogram: 0
  timing_histogram_bin_size: 1000 # in nanoseconds
  dl_symbol_window_size: 261 # in microseconds
  forever: 1
  #define LOG_NONE -1
  #define LOG_ERROR 0
  #define LOG_CONSOLE 1
  #define LOG_WARN 2
  #define LOG_INFO 3
  #define LOG_DEBUG 4
  #define LOG_VERBOSE 5
  log_level_file: 3
  log_level_console: 1
  log_cpu_core: 20
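In these examples, the dst_mac_addr of each cuphycontroller cell matches the eth of one RU emulator cell_configs entry, and every eAxC ID that cuphycontroller uses for that cell appears in the entry's flow_list. A minimal consistency-check sketch, assuming this correspondence is what must hold for the two configurations to work together. The values are copied by hand into Python dictionaries rather than parsed from the YAML files, and check_cells is a hypothetical helper.

```python
# dst_mac_addr and the union of all eAxC_id_* lists per cell, from the cuphycontroller example
CUPHY_CELLS = {
    "O-RU 0": {"dst_mac": "20:04:9B:9E:27:A3", "eaxc": {8, 0, 1, 2, 15, 7}},
    "O-RU 1": {"dst_mac": "26:04:9D:9E:29:B3", "eaxc": {1, 2, 4, 9, 5, 6, 7, 10}},
    "O-RU 2": {"dst_mac": "20:34:9A:9E:29:B3", "eaxc": {1, 2, 4, 9, 5, 6, 7, 10}},
    "O-RU 3": {"dst_mac": "22:34:9C:9E:29:A3", "eaxc": {0, 1, 2, 3, 5, 6, 7, 10}},
}

# eth and flow_list per cell, from the RU emulator example (every cell lists flows 0-15)
RU_EMU_CELLS = {
    "Cell1": {"eth": "20:04:9B:9E:27:A3", "flows": set(range(16))},
    "Cell2": {"eth": "26:04:9D:9E:29:B3", "flows": set(range(16))},
    "Cell3": {"eth": "20:34:9A:9E:29:B3", "flows": set(range(16))},
    "Cell4": {"eth": "22:34:9C:9E:29:A3", "flows": set(range(16))},
}


def check_cells(cuphy, ru_emu):
    """Return a list of human-readable problems; an empty list means the configs agree."""
    by_mac = {c["eth"].lower(): name for name, c in ru_emu.items()}
    problems = []
    for name, cell in cuphy.items():
        ru_name = by_mac.get(cell["dst_mac"].lower())
        if ru_name is None:
            problems.append(f"{name}: no RU emulator cell with eth {cell['dst_mac']}")
            continue
        missing = cell["eaxc"] - ru_emu[ru_name]["flows"]
        if missing:
            problems.append(f"{name} -> {ru_name}: eAxC IDs {sorted(missing)} not in flow_list")
    return problems


print(check_cells(CUPHY_CELLS, RU_EMU_CELLS))  # []
```

MAC addresses are compared case-insensitively, since the same address may be written in upper or lower case in different files.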

Example test_mac configuration file

# MAC configuration file
---
name: fapi_gnb_configure_mac
class: test_mac
# nvlog config
# name: the log is written to /dev/shm/${name}.log and /tmp/${name}.log
# primary: when several processes log to the same file, set the first process to start as primary and the others as secondary.
# Log levels: -1 - LOG_NONE, 0 - LOG_ERROR, 1 - LOG_CONSOLE, 2 - LOG_WARN, 3 - LOG_INFO, 4 - LOG_DEBUG, 5 - LOG_VERBOSE
nvlog:
  name: phy
  primary: 0
  shm_log_level: 3
  console_log_level: 1
recv_thread_config:
  name: mac_recv
  cpu_affinity: 10
  sched_priority: 95
# Total slot number in test
test_slots: 0
# MAC-PHY SHM IPC sync mode: 0 - sync per TTI, 1 - sync per message
ipc_sync_mode: 0
ipc_sync_first_slot: 0
transport:
  type: shm
  udp_config:
    local_port: 38556
    remort_port: 38555
  shm_config:
    primary: 0
    prefix: nvipc
    cuda_device_id: -1
    ring_len: 8192
    mempool_size:
      cpu_msg:
        buf_size: 8192
        pool_len: 4096
      cpu_data:
        buf_size: 576000
        pool_len: 1024
      cuda_data:
        buf_size: 307200
        pool_len: 0
message: cell_config_request
data:
  nCarrierIdx: 0
  # DMRS Type A position
  # Range: pos2 = 2, pos3 = 3
  nDMRSTypeAPos: 2
  # Number of TX antennas
  nNrOfTxAnt: 8
  # Number of RX antennas
  nNrOfRxAnt: 4
  # Cell-wide common subcarrier spacing
  # Range for carrier frequency < 6 GHz: 0: 15 kHz, 1: 30 kHz
  # Range for carrier frequency > 6 GHz: 2: 60 kHz, 3: 120 kHz
  nSubcCommon: 1
  # Number of TDD patterns configured
  numTDDPattern: 1
  # dl-UL-TransmissionPeriodicity for each TDD pattern
  # 0: ms0p5
  # 1: ms0p625
  # 2: ms1
  # 3: ms1p25
  # 4: ms2
  # 5: ms2p5
  # 6: ms5
  # 7: ms10
  TDDPeriod:
    - 3
    - 0 # not read, because numTDDPattern is 1
  # Absolute frequency of the SSB in kHz
  nSSBAbsFre: 3313920
  # Absolute frequency of DL point A in kHz
  nDLAbsFrePointA: 3301680
  # Absolute frequency of UL point A in kHz
  nULAbsFrePointA: 3301680
  # Carrier bandwidth of DL in number of PRBs
  nDLBandwidth: 272
  # Carrier bandwidth of UL in number of PRBs
  nULBandwidth: 272
  # L1 parameter K0 for DL (refer to 3GPP TS 38.211, section 5.3.1)
  # Range 0: -6 1: 0 2: 6
  nDLK0: 1
  # L1 parameter K0 for UL (refer to 3GPP TS 38.211, section 5.3.1)
  # Range 0: -6 1: 0 2: 6
  nULK0: 1
  # IFFT/FFT size in DL
  nDLFftSize: 4096
  # IFFT/FFT size in UL
  nULFftSize: 4096
  # Range 0->15
  nCarrierAggregationLevel: 0 # should this be 1
  # Frame duplex type
  # 0: FDD 1: TDD
  nFrameDuplexType: 1
  # Physical cell ID
  nPhyCellId: 41
  # SSB block power
  # 0.001 dB step, -60 to 50 dBm
  nSSBPwr: 1
  # SS/PBCH block subcarrier spacing
  # 0: Case A 15kHz 1: Case B 30kHz 2: Case C 30kHz 3: Case D 120kHz 4: Case E 240kHz
  nSSBSubcSpacing: 2
  # SSB periodicity in msec
  # 0: ms5 1: ms10 2: ms20 3: ms40 4: ms80 5: ms160
  # PBCH once every 20 slots
  nSSBPeriod: 2
  # SSB subcarrier offset
  # Range 0->23
  nSSBSubcOffset: 0
  # Bitmap for transmitted SSB.
  # 0: not transmitted 1: transmitted. MSB->LSB of first 32-bit number corresponds to SSB 0 to SSB 31
  # MSB->LSB of second 32-bit number corresponds to SSB 32 to SSB 63
  nSSBMask:
    - 0 # Need to be changed for proper PBCH
    - 0 # Need to be changed for proper PBCH
  # MSB->LSB of first byte corresponds to bit0 to bit7
  # The same bit order applies to the other two bytes.
  # Needs to be changed for PBCH
  nMIB:
    - 1
    - 13
    - 4
  # PRACH configuration index
  # Range 0->255
  nPrachConfIdx: 69
  # PRACH subcarrier spacing
  # Range 0->3
  nPrachSubcSpacing: 1
  # PRACH root sequence index
  # Range 0->837
  nPrachRootSeqIdx: 137
  # PRACH zeroCorrelationZone config
  # Range 0->15
  nPrachZeroCorrConf: 1
  # PRACH restrictedSetConfig
  # 0: unrestricted
  # 1: restrictedToTypeA
  # 2: restrictedToTypeB
  nPrachRestrictSet: 0
  # PRACH FDM
  # Range 1, 2, 4, 8
  nPrachFdm: 1
  # Offset of lowest PRACH transmission occasion in frequency domain
  # Range 0->272
  nPrachFreqStart: 3
  # SSB-per-RACH-occasion
  # 0: 1/8 1: 1/4 2: 1/2 3: 1 4: 2 5: 4 6: 8 7: 16
  nPrachSsbRach: 3

...
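Several of the cell_config_request values can be sanity-checked with simple arithmetic. A sketch, assuming the numerology selected by nSubcCommon: 1 (subcarrier spacing = 15 kHz × 2^μ, so 30 kHz) and 12 subcarriers per PRB; it only derives quantities from the numbers in the example and does not model how test_mac uses them. The SSB offset is the raw frequency distance between nSSBAbsFre and nDLAbsFrePointA, with no assumption about which resource element nSSBAbsFre refers to.

```python
SUBCARRIERS_PER_PRB = 12

# Values copied from the cell_config_request example above
mu = 1                       # nSubcCommon
dl_bandwidth_prb = 272       # nDLBandwidth
dl_fft_size = 4096           # nDLFftSize
ssb_abs_freq_khz = 3313920   # nSSBAbsFre
dl_point_a_khz = 3301680     # nDLAbsFrePointA

scs_khz = 15 * 2 ** mu                                    # subcarrier spacing: 30 kHz
occupied_bw_mhz = dl_bandwidth_prb * SUBCARRIERS_PER_PRB * scs_khz / 1000
sample_rate_msps = dl_fft_size * scs_khz / 1000           # FFT size x subcarrier spacing
slot_duration_us = 1000 / 2 ** mu                         # a 1 ms subframe holds 2**mu slots
ssb_offset_khz = ssb_abs_freq_khz - dl_point_a_khz        # raw distance only
ssb_offset_prb = ssb_offset_khz / (SUBCARRIERS_PER_PRB * scs_khz)

print(f"SCS {scs_khz} kHz, occupied BW {occupied_bw_mhz:.2f} MHz")
print(f"sample rate {sample_rate_msps:.2f} Msps, slot {slot_duration_us:.0f} us")
print(f"SSB - point A = {ssb_offset_khz} kHz = {ssb_offset_prb:.1f} PRBs")
```

The 500 us slot duration is consistent with tti: 500 in the RU emulator example and with mu: 1 in the cuphycontroller cells.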

Example launch pattern file

####
# YAML file for launch policy
####
---
# Test case specific configs
# fapi_type: 0 - Altran FAPI; 1 - SCF FAPI.
fapi_type: 1
# data_buf_opt:
# -- 0: FAPI message and TB both in CPU_DATA buffer
# -- 1: FAPI message in CPU_MSG buffer, TB in CPU_DATA buffer
# -- 2: FAPI message in CPU_MSG buffer, TB in GPU_DATA buffer
data_buf_opt: 1
# Cell Configs
Cells: testMac_config_params_F08.h5
Num_Cells: 1
# RNTI
RNTI:
  - rnti_index: 0 ## refers to cell_index in the cell_config.hdf5
    rnti: 50
  - rnti_index: 1 ## refers to cell_index in the cell_config.hdf5
    rnti: 51
TV:
  PUSCH:
    - name: TV1
      path: TVnr_CP_F08_US_01.1_gNB_FAPI_s5.h5
  PDSCH:
    - name: TV1
      path: TVnr_CP_F08_DS_01.1_gNB_FAPI_s5.h5
    - name: TV2
      path: TVnr_CP_F08_DS_01.2_gNB_FAPI_s5.h5
    - name: TV3
      path: TVnr_CP_F08_DS_01.1_126_gNB_FAPI_s5.h5
  PBCH:
    - name: TV1
      path: TVnr_1902_gNB_FAPI_s0.h5
  PDCCH_UL:
    - name: TV1
      path: demo_msg4_4_ant_pdcch_ul_gNB_FAPI_s7.h5
  PDCCH_DL:
    - name: TV1
      path: demo_msg2_4_ant_pdcch_dl_gNB_FAPI_s7.h5

SCHED:
  ### Array of slots
  - slot: 0
    config:
      ### Array of cell configs
      - cell_index: 0 # index into the cell list; refers to cell_index in the cell_config.hdf5
        channels:
          - type: PBCH
            tv: TV1
          - type: PDSCH
            tv: TV3
            rnti_list: [0] # rnti_index in the RNTI dict
  - slot: 1
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 2
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 3
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV2
            rnti_list: [0]
  - slot: 4
    config:
      - cell_index: 0
        channels:
          - type: PUSCH
            tv: TV1
            rnti_list: [0]
  - slot: 5
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 6
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 7
    config:
      - cell_index: 0
        channels:
          - type: PDCCH_DL
            tv: TV1
          - type: PDCCH_UL
            tv: TV1
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 8
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV2
            rnti_list: [0]
  - slot: 9
    config:
      - cell_index: 0
        channels:
          - type: PUSCH
            tv: TV1
            rnti_list: [0]
  - slot: 10
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 11
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 12
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 13
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV2
            rnti_list: [0]
  - slot: 14
    config:
      - cell_index: 0
        channels:
          - type: PUSCH
            tv: TV1
            rnti_list: [0]
  - slot: 15
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 16
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 17
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV1
            rnti_list: [0]
  - slot: 18
    config:
      - cell_index: 0
        channels:
          - type: PDSCH
            tv: TV2
            rnti_list: [0]
  - slot: 19
    config:
      - cell_index: 0
        channels:
          - type: PUSCH
            tv: TV1
            rnti_list: [0]

...
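The SCHED list in this launch pattern places PUSCH in every fifth slot (4, 9, 14 and 19) and downlink channels in all other slots, with PBCH only in slot 0 and the PDCCH test vectors only in slot 7. A small sketch that encodes the schedule as a Python dictionary (transcribed by hand from the example, not parsed from the file) and lists the slots carrying a given channel type; slots_with and slot_direction are hypothetical helpers. PDCCH_UL is treated as downlink here, on the assumption that it is a downlink PDCCH carrying uplink grants, so only PUSCH counts as uplink.

```python
# Channel types per slot for cell_index 0, transcribed from the SCHED section above
SCHED = {
    0: ["PBCH", "PDSCH"], 1: ["PDSCH"], 2: ["PDSCH"], 3: ["PDSCH"], 4: ["PUSCH"],
    5: ["PDSCH"], 6: ["PDSCH"], 7: ["PDCCH_DL", "PDCCH_UL", "PDSCH"], 8: ["PDSCH"], 9: ["PUSCH"],
    10: ["PDSCH"], 11: ["PDSCH"], 12: ["PDSCH"], 13: ["PDSCH"], 14: ["PUSCH"],
    15: ["PDSCH"], 16: ["PDSCH"], 17: ["PDSCH"], 18: ["PDSCH"], 19: ["PUSCH"],
}
UPLINK = {"PUSCH"}  # assumption: PDCCH_UL is a downlink transmission


def slots_with(channel, sched=SCHED):
    """Slots (in ascending order) whose channel list contains `channel`."""
    return sorted(slot for slot, channels in sched.items() if channel in channels)


def slot_direction(channels):
    """Classify a slot as UL, DL, or MIXED from the channel types scheduled in it."""
    ul = any(c in UPLINK for c in channels)
    dl = any(c not in UPLINK for c in channels)
    return "MIXED" if ul and dl else ("UL" if ul else "DL")


print(slots_with("PUSCH"))  # [4, 9, 14, 19]
print(slots_with("PBCH"))   # [0]
print("".join(slot_direction(SCHED[s])[0] for s in sorted(SCHED)))  # DDDDUDDDDUDDDDUDDDDU
```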