DOCA Storage Initiator ComCh Application Guide

The doca_storage_initiator_comch application functions as a client to the DOCA storage service. It benchmarks the performance of data transfers over the ComCh interface, providing detailed measurements of throughput, bandwidth, and latency.

The doca_storage_initiator_comch application performs the following key tasks:

Initiates a sequence of I/O requests to exercise the DOCA storage service.

Measures and reports storage performance, including:

Millions of I/O operations per second (IOPS)

Effective data bandwidth

I/O operation latency:

Minimum latency

Maximum latency

Mean latency
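The reported metrics can be derived from per-operation completion data. The following is a minimal sketch. It assumes that the test records each operation's latency in microseconds, together with the total elapsed time and the I/O block size. The `run_stats` struct and the `compute_stats` function are hypothetical names and are not part of the application's source.

```cpp
#include <algorithm>
#include <cstdint>
#include <numeric>
#include <vector>

// Hypothetical summary of one benchmark run (not the application's own type).
struct run_stats {
    double million_iops = 0.0;    // millions of I/O operations per second
    double bandwidth_mib_s = 0.0; // effective data bandwidth in MiB/s
    uint64_t min_latency_us = 0;  // fastest single operation
    uint64_t max_latency_us = 0;  // slowest single operation
    double mean_latency_us = 0.0; // arithmetic mean over all operations
};

// latencies_us: latency of every completed operation, in microseconds.
// elapsed_s:    wall-clock duration of the data path phase, in seconds.
// block_size:   bytes transferred by each operation.
run_stats compute_stats(std::vector<uint64_t> const &latencies_us, double elapsed_s, uint64_t block_size)
{
    run_stats stats{};
    if (latencies_us.empty() || elapsed_s <= 0.0)
        return stats; // nothing meaningful to report

    double const op_count = static_cast<double>(latencies_us.size());
    double const ops_per_s = op_count / elapsed_s;

    stats.million_iops = ops_per_s / 1e6;
    stats.bandwidth_mib_s = ops_per_s * static_cast<double>(block_size) / (1024.0 * 1024.0);

    auto const [min_it, max_it] = std::minmax_element(latencies_us.begin(), latencies_us.end());
    stats.min_latency_us = *min_it;
    stats.max_latency_us = *max_it;

    // Sum in 64-bit integers, then divide once, to avoid accumulating rounding error.
    uint64_t const total = std::accumulate(latencies_us.begin(), latencies_us.end(), uint64_t{0});
    stats.mean_latency_us = static_cast<double>(total) / op_count;
    return stats;
}
```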

The application establishes a connection to the storage service running on an NVIDIA® BlueField® platform using the doca_comch_client interface.

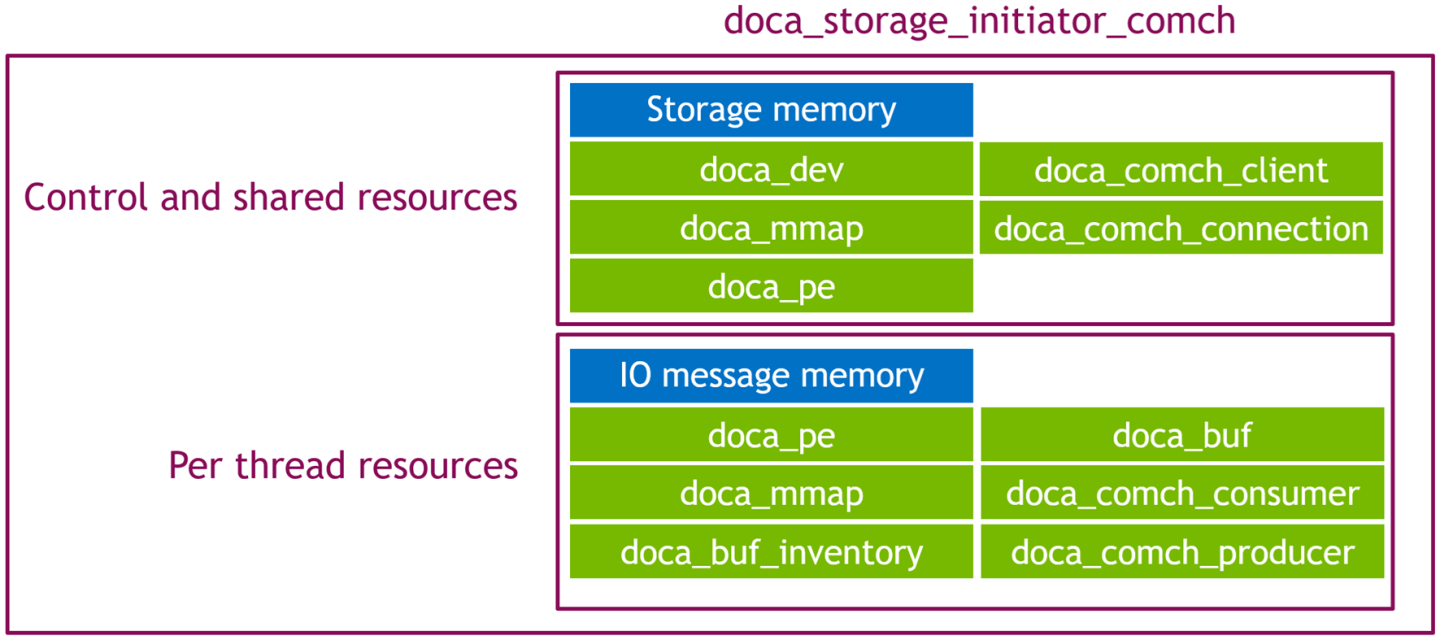

The application is logically divided into two functional areas:

Control-time and shared resources

Per-thread data path resources

The execution consists of two main phases:

Control Phase

Data Path Phase

Control Phase

The control phase is responsible for establishing and managing the storage session lifecycle. It performs the following steps:

Query storage – Retrieve storage service metadata.

Init storage – Initialize the storage window and prepare for data transfers.

Start storage – Begin the data path phase and launch data threads.

Once the data path phase is initiated, the main thread waits for the test to complete. It then issues the final cleanup commands:

Stop storage – Stop data movement.

Shutdown – Tear down the session and release resources.
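The ordering of the control commands can be modeled as a small state machine. The following is a hypothetical sketch. The `session_state` and `control_command` enums and the `apply_command` function are illustrative names, not the application's API. The sketch encodes only the sequence described above: query, init, start, stop, shutdown. It also assumes that shutdown is accepted from any state, so that resources are still released after a failed step.

```cpp
#include <optional>

// Hypothetical model of the storage session lifecycle described above.
enum class session_state { idle, queried, initialized, started, stopped, shut_down };
enum class control_command { query_storage, init_storage, start_storage, stop_storage, shutdown };

// Returns the next state, or std::nullopt if the command is out of order.
std::optional<session_state> apply_command(session_state current, control_command cmd)
{
    switch (cmd) {
    case control_command::query_storage:
        if (current == session_state::idle)
            return session_state::queried;
        break;
    case control_command::init_storage:
        if (current == session_state::queried)
            return session_state::initialized;
        break;
    case control_command::start_storage:
        if (current == session_state::initialized)
            return session_state::started;
        break;
    case control_command::stop_storage:
        if (current == session_state::started)
            return session_state::stopped;
        break;
    case control_command::shutdown:
        // Assumption: shutdown is valid from any state that is not already shut down.
        if (current != session_state::shut_down)
            return session_state::shut_down;
        break;
    }
    return std::nullopt;
}
```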

Data Path Phase

The data path is executed by worker threads and operates in one of three modes:

Throughput Test (Read or Write)

Tasks are submitted once at the start.

A tight polling loop monitors progress until the target operation count is met.

Each task is assigned a storage window address, which is advanced after each submission.

If the end of the storage range is reached, the address wraps around to the beginning, ensuring round-robin access.
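Advancing the address with wraparound is a modular increment over the storage window. The following is a minimal sketch. It assumes that the window is addressed as a byte offset from its start and that the window size is a whole multiple of the I/O block size. `next_io_offset` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: returns the storage-window offset for the next task.
// Assumes window_size is a non-zero multiple of block_size.
uint64_t next_io_offset(uint64_t current_offset, uint64_t block_size, uint64_t window_size)
{
    assert(block_size != 0 && window_size % block_size == 0);
    uint64_t next = current_offset + block_size;
    // Wrap back to the beginning once the end of the storage range is reached,
    // so successive tasks visit the blocks in round-robin order.
    if (next >= window_size)
        next = 0;
    return next;
}
```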

Read-Only Data Validity Test

A reference copy of expected storage contents is held in memory.

Tasks are submitted similarly to the throughput test.

After each segment is read, the application compares the contents with the expected data to verify correctness.

Once the entire range has been read and verified, the test concludes.
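Validating a segment compares the bytes that were just read with the matching slice of the reference copy. The following is a minimal sketch. It assumes that both buffers are byte arrays indexed by the same storage offset. `validate_segment` is a hypothetical helper. It returns the offset of the first mismatch so that the offset can be logged.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>

// Hypothetical helper: compares one read segment against the reference copy.
// read_data points at the segment's bytes; reference covers the whole storage range.
// Returns the absolute storage offset of the first differing byte, or
// std::nullopt if the whole segment matches.
std::optional<size_t> validate_segment(uint8_t const *read_data,
                                       uint8_t const *reference,
                                       size_t segment_offset,
                                       size_t segment_size)
{
    for (size_t i = 0; i != segment_size; ++i) {
        if (read_data[i] != reference[segment_offset + i])
            return segment_offset + i;
    }
    return std::nullopt;
}
```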

Write-Then-Read Data Validity Test

The application first writes a known memory pattern across the entire storage range.

Then, it performs the read-only validity test, but compares the read data against the previously written pattern (instead of a file-based reference).

This verifies both write and read correctness in a full-cycle validation.
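In the code walkthrough, the known pattern sets each byte to the low 8 bits of its offset (`static_cast<uint8_t>(ii)`). The following is a minimal sketch of generating and checking such a pattern. `make_pattern` and `matches_pattern` are hypothetical names. Because this pattern repeats every 256 bytes, it detects corrupted bytes. However, it cannot distinguish two blocks whose offsets differ by a multiple of 256 bytes.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: builds a write pattern where byte ii holds the low 8 bits of ii.
std::vector<uint8_t> make_pattern(size_t size)
{
    std::vector<uint8_t> data(size);
    for (size_t ii = 0; ii != size; ++ii)
        data[ii] = static_cast<uint8_t>(ii);
    return data;
}

// Hypothetical helper: checks that a buffer read back from storage still holds the pattern.
bool matches_pattern(std::vector<uint8_t> const &data)
{
    for (size_t ii = 0; ii != data.size(); ++ii) {
        if (data[ii] != static_cast<uint8_t>(ii))
            return false;
    }
    return true;
}
```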

This application leverages the following DOCA libraries:

This application is compiled as part of the set of storage applications. For compilation instructions, refer to the DOCA Storage Applications page.

Application Execution

This application can only run from the host.

DOCA Storage Initiator Comch is provided in source form. Therefore, compilation is required before the application can be executed.

Application usage instructions:

    Usage: doca_storage_initiator_comch [DOCA Flags] [Program Flags]

    DOCA Flags:
      -h, --help                     Print a help synopsis
      -v, --version                  Print program version information
      -l, --log-level                Set the (numeric) log level for the program <10=DISABLE, 20=CRITICAL, 30=ERROR, 40=WARNING, 50=INFO, 60=DEBUG, 70=TRACE>
      --sdk-log-level                Set the SDK (numeric) log level for the program <10=DISABLE, 20=CRITICAL, 30=ERROR, 40=WARNING, 50=INFO, 60=DEBUG, 70=TRACE>

    Program Flags:
      -d, --device                   Device identifier
      --cpu                          CPU core to which the process affinity can be set
      --storage-plain-content        File containing the plain data that is represented by the storage
      --execution-strategy           Define what to run. One of: read_throughput_test | write_throughput_test | read_write_data_validity_test | read_only_data_validity_test
      --run-limit-operation-count    Run N operations (per thread) then stop. Default: 1000000
      --task-count                   Number of concurrent tasks (per thread) to use. Default: 64
      --command-channel-name         Name of the channel used by the doca_comch_client. Default: "doca_storage_comch"
      --control-timeout              Time (in seconds) to wait while performing control operations. Default: 5
      --batch-size                   Batch size: Default: 4

Info: This usage printout can be printed to the command line using the -h (or --help) option:

./doca_storage_initiator_comch -h

For additional information, refer to section "Command-line Flags".

CLI example for running the application on the host:

./doca_storage_initiator_comch -d 3b:00.0 --execution-strategy read_throughput_test --run-limit-operation-count 1000000 --cpu 0

Note: The DOCA Comch device PCIe address (3b:00.0) should match the address of the desired PCIe device.

Command-line Flags

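| Flag Type | Short Flag | Long Flag | Description |
|---|---|---|---|
| General flags | -h | --help | Print a help synopsis |
| | -v | --version | Print program version information |
| | -l | --log-level | Set the log level for the application: DISABLE=10, CRITICAL=20, ERROR=30, WARNING=40, INFO=50, DEBUG=60, TRACE=70 |
| | N/A | --sdk-log-level | Set the SDK log level for the program: DISABLE=10, CRITICAL=20, ERROR=30, WARNING=40, INFO=50, DEBUG=60, TRACE=70 |
| Program flags | -d | --device | DOCA device identifier (e.g., the PCIe address 3b:00.0). Note: This flag is mandatory. |
| | N/A | --execution-strategy | The data path routine to run. One of: read_throughput_test, write_throughput_test, read_write_data_validity_test, read_only_data_validity_test. Note: This flag is mandatory. |
| | N/A | --cpu | Index of the CPU to use. One data path thread is spawned per CPU, and indexes start at 0. Note: The user can specify this argument multiple times to create more threads. Note: This flag is mandatory. |
| | N/A | --storage-plain-content | File containing the plain content that is expected to be read from storage during a read-only data validity test |
| | N/A | --run-limit-operation-count | Number of I/O operations (per thread) to perform in a throughput test. Default: 1000000 |
| | N/A | --task-count | Number of parallel tasks per thread to use. Default: 64 |
| | N/A | --command-channel-name | Name of the channel used by the doca_comch_client. Allows customizing the server name used for this application instance if multiple Comch servers exist on the same device. Default: "doca_storage_comch" |
| | N/A | --control-timeout | Time, in seconds, to wait while performing control operations. Default: 5 |
| | N/A | --batch-size | Number of tasks to submit before triggering the hardware to start processing them. Default: 4 |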
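The `--batch-size` flag controls how many tasks are queued before the hardware is triggered. The following sketch models that policy with a hypothetical `batched_submitter` class. The class counts hardware triggers and does not call any DOCA API. It makes two assumptions: a final partial batch is flushed explicitly, and a batch size of 0 is treated as 1.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical model of batched submission: tasks are queued locally and the
// hardware is triggered once every batch_size tasks (not a DOCA API).
class batched_submitter {
public:
    // Assumption: a batch size of 0 is treated as 1.
    explicit batched_submitter(uint32_t batch_size) : m_batch_size{batch_size == 0 ? 1 : batch_size} {}

    void submit(uint32_t task_id)
    {
        m_pending.push_back(task_id);
        if (m_pending.size() == m_batch_size)
            flush();
    }

    // Assumption: a final partial batch is flushed explicitly so that no task is left waiting.
    void flush()
    {
        if (m_pending.empty())
            return;
        ++m_trigger_count; // one hardware trigger covers every pending task
        m_submitted += m_pending.size();
        m_pending.clear();
    }

    uint64_t trigger_count() const { return m_trigger_count; }
    uint64_t submitted() const { return m_submitted; }

private:
    uint32_t m_batch_size;
    std::vector<uint32_t> m_pending;
    uint64_t m_trigger_count = 0;
    uint64_t m_submitted = 0;
};
```

Under this model, with the defaults (`--task-count 64`, `--batch-size 4`), the initial submission of 64 tasks results in 16 hardware triggers.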

Troubleshooting

Refer to the NVIDIA BlueField Platform Software Troubleshooting Guide for any issue encountered with the installation or execution of the DOCA applications.

Control Phase

Parse CLI arguments and initialize the application:

initiator_comch_app app{parse_cli_args(argc, argv)};

Connect to the storage service:

app.connect_to_storage_service();

Query storage capabilities:

app.query_storage();

Retrieves storage size, block size, and other metadata

Initialize storage session:

app.init_storage();

Allocates memory resources.

Sends the init_storage control message.

Creates per-thread resources, including comch_producer and comch_consumer.

Prepare worker threads and allocate tasks:

app.prepare_threads();

Start storage session and data threads:

app.start_storage();

Sends the start_storage control message.

Waits for the response.

Launches the data path threads

Run the test:

app.run();

Starts all worker threads

Waits for thread completion

Collects and processes statistics
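Each `--cpu` index spawns its own data path thread, so the final report must merge per-thread results. The following is a minimal sketch. It assumes that each thread keeps an operation count and the minimum, maximum, and sum of its latencies. The `thread_stats` type and the `merge_stats` function are hypothetical. The sketch computes the mean from the summed latencies instead of averaging the per-thread means, because averaging the means would over-weight threads that completed fewer operations.

```cpp
#include <algorithm>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical per-thread statistics gathered by a data path thread.
struct thread_stats {
    uint64_t op_count = 0;
    uint64_t latency_min_us = std::numeric_limits<uint64_t>::max();
    uint64_t latency_max_us = 0;
    uint64_t latency_sum_us = 0;
};

// Combines the statistics of all worker threads into one summary.
thread_stats merge_stats(std::vector<thread_stats> const &per_thread)
{
    thread_stats total;
    for (auto const &t : per_thread) {
        if (t.op_count == 0)
            continue; // an idle thread has no meaningful min/max
        total.op_count += t.op_count;
        total.latency_min_us = std::min(total.latency_min_us, t.latency_min_us);
        total.latency_max_us = std::max(total.latency_max_us, t.latency_max_us);
        total.latency_sum_us += t.latency_sum_us;
    }
    return total;
}

// Mean latency weighted by operation count across all threads.
double mean_latency_us(thread_stats const &s)
{
    return s.op_count == 0 ? 0.0 : static_cast<double>(s.latency_sum_us) / static_cast<double>(s.op_count);
}
```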

Join worker threads:

app.join_threads();

Stop storage session:

app.stop_storage();

Display results or failure banner:

    if (run_success) {
        app.display_stats();
    } else {
        exit_value = EXIT_FAILURE;
        fprintf(stderr, "+================================================+\n");
        fprintf(stderr, "| Test failed!!\n");
        fprintf(stderr, "+================================================+\n");
    }

Shut down the session and release resources:

app.shutdown();

Data Path Phase

Read/Write Throughput Test

Wait for run_flag to be set:

    while (!hot_data.run_flag) {
        std::this_thread::yield();
        if (hot_data.error_flag)
            return;
    }

Submit the initial batch of transactions:

    auto const initial_task_count = std::min(hot_data.transactions_size, hot_data.remaining_tx_ops);
    for (uint32_t ii = 0; ii != initial_task_count; ++ii)
        hot_data.start_transaction(hot_data.transactions[ii], std::chrono::steady_clock::now());

Poll the progress engine in a tight loop until the test completes:

    while (hot_data.run_flag) {
        doca_pe_progress(hot_data.pe) ? ++hot_data.pe_hit_count : ++hot_data.pe_miss_count;
    }
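The start-up handshake and the polling loop can be reproduced with standard C++ primitives. In this sketch, `run_flag` and `error_flag` are modeled as `std::atomic<bool>`, which is an assumption about their type. A hypothetical `poll_once` callback replaces `doca_pe_progress` so that the example runs without DOCA. Each poll that reports work counts as a hit, and each empty poll counts as a miss.

```cpp
#include <atomic>
#include <cstdint>
#include <functional>
#include <thread>

// Hypothetical stand-in for the per-thread hot data used by the data path.
struct worker_state {
    std::atomic<bool> run_flag{false};
    std::atomic<bool> error_flag{false};
    uint64_t pe_hit_count = 0;
    uint64_t pe_miss_count = 0;
};

// Waits for the control thread to set run_flag, then polls until run_flag is cleared.
// poll_once stands in for doca_pe_progress: it returns true when it processed work.
void worker_loop(worker_state &state, std::function<bool()> const &poll_once)
{
    while (!state.run_flag.load()) {
        std::this_thread::yield();
        if (state.error_flag.load())
            return; // the control path failed before the test started
    }

    while (state.run_flag.load()) {
        if (poll_once())
            ++state.pe_hit_count;
        else
            ++state.pe_miss_count;
    }
}
```

In this model, clearing `run_flag` from inside a completion (here, inside `poll_once`) ends the loop. This corresponds to the target operation count being met.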

Read-Only Data Validity Test

Wait for run_flag to be set:

    while (!hot_data.run_flag) {
        std::this_thread::yield();
        if (hot_data.error_flag)
            return;
    }

Load expected content into memory:

read_and_validate_storage_memory(hot_data, hot_data.storage_plain_content);

Set expected operation count:

hot_data.remaining_tx_ops = hot_data.remaining_rx_ops = io_region_size / hot_data.io_block_size;

Submit read transactions:

    auto const initial_task_count = std::min(hot_data.transactions_size, hot_data.remaining_tx_ops);
    for (uint32_t ii = 0; ii != initial_task_count; ++ii) {
        char *io_request;
        // Mark each request as a read before submitting it
        doca_buf_get_data(doca_comch_producer_task_send_get_buf(hot_data.transactions[ii].request), (void **)&io_request);
        storage::io_message_view::set_type(storage::io_message_type::read, io_request);
        hot_data.start_transaction(hot_data.transactions[ii], std::chrono::steady_clock::now());
    }

Poll until all reads are completed:

    while (hot_data.remaining_rx_ops != 0) {
        doca_pe_progress(hot_data.pe) ? ++(hot_data.pe_hit_count) : ++(hot_data.pe_miss_count);
    }

Validate memory content:

    for (size_t offset = 0; offset != io_region_size; ++offset) {
        if (hot_data.io_region_begin[offset] != expected_memory_content[offset]) {
            DOCA_LOG_ERR("Data mismatch @ position %zu: %02x != %02x", offset, hot_data.io_region_begin[offset], expected_memory_content[offset]);
            hot_data.error_flag = true;
            break;
        }
    }
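The read-only validity test relies on two counters. `remaining_tx_ops` tracks how many requests remain to be submitted, and `remaining_rx_ops` tracks how many responses are still expected. This is why the loop above polls until `remaining_rx_ops` reaches zero. The following sketch simulates that accounting without DOCA. It assumes, hypothetically, that each completion immediately resubmits its task while submissions remain, and that tasks complete in FIFO order. `simulate_transactions` is an illustrative name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <deque>

// Hypothetical simulation of the tx/rx accounting used by the data path.
// Returns the number of completions observed before remaining_rx_ops reached zero.
uint64_t simulate_transactions(uint64_t io_region_size, uint64_t io_block_size, uint32_t task_count)
{
    assert(io_block_size != 0 && task_count != 0);
    uint64_t remaining_tx_ops = io_region_size / io_block_size; // one operation per block
    uint64_t remaining_rx_ops = remaining_tx_ops;
    uint64_t completions = 0;
    std::deque<uint32_t> in_flight;

    // Initial submission: never more tasks than there are operations to perform.
    uint64_t const initial = std::min<uint64_t>(task_count, remaining_tx_ops);
    for (uint32_t ii = 0; ii != initial; ++ii) {
        in_flight.push_back(ii);
        --remaining_tx_ops;
    }

    // Stand-in for the doca_pe_progress polling loop: one completion per iteration.
    while (remaining_rx_ops != 0) {
        uint32_t const task = in_flight.front();
        in_flight.pop_front();
        --remaining_rx_ops;
        ++completions;
        if (remaining_tx_ops != 0) {
            in_flight.push_back(task); // reuse the completed task for the next block
            --remaining_tx_ops;
        }
    }
    return completions;
}
```

For example, a 1 MiB I/O region with 4096-byte blocks yields 256 operations, regardless of how many tasks are in flight.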

Write-Then-Read Data Validity Test

Wait for run_flag to be set:

    while (!hot_data.run_flag) {
        std::this_thread::yield();
        if (hot_data.error_flag)
            return;
    }

Prepare the memory content to write:

    size_t const io_region_size = hot_data.io_region_end - hot_data.io_region_begin;
    std::vector<uint8_t> write_data;
    write_data.resize(io_region_size);
    for (size_t ii = 0; ii != io_region_size; ++ii) {
        write_data[ii] = static_cast<uint8_t>(ii);
    }

Write memory to storage:

write_storage_memory(hot_data, write_data.data());

Set expected operation count:

hot_data.remaining_tx_ops = hot_data.remaining_rx_ops = io_region_size / hot_data.io_block_size;

Reset the I/O address and copy the write pattern into the local I/O region so that it can be written to storage:

    hot_data.io_addr = hot_data.io_region_begin;
    std::copy(expected_memory_content, expected_memory_content + io_region_size, hot_data.io_region_begin);

Submit write transactions:

    auto const initial_task_count = std::min(hot_data.transactions_size, hot_data.remaining_tx_ops);
    for (uint32_t ii = 0; ii != initial_task_count; ++ii) {
        char *io_request;
        // Mark each request as a write before submitting it
        doca_buf_get_data(doca_comch_producer_task_send_get_buf(hot_data.transactions[ii].request), (void **)&io_request);
        storage::io_message_view::set_type(storage::io_message_type::write, io_request);
        hot_data.start_transaction(hot_data.transactions[ii], std::chrono::steady_clock::now());
    }

Poll until all writes are completed:

    while (hot_data.remaining_rx_ops != 0) {
        doca_pe_progress(hot_data.pe) ? ++(hot_data.pe_hit_count) : ++(hot_data.pe_miss_count);
    }

Read back and validate storage:

read_and_validate_storage_memory(hot_data, write_data.data());

Repeat steps to submit read transactions and validate memory as in the read-only test.

The application source code can be found under /opt/mellanox/doca/applications/storage/.