DeepStream and Endoscopy Sample Apps

The DeepStream and Endoscopy sample applications provide examples of how to use the DeepStream SDK from a native C++ application in order to perform inference on a live video stream from a camera or video capture card. Both of these applications use a sample inference model that is able to detect the stomach and intestine regions of a Learning Resources Anatomy Model.

If the Learning Resources Anatomy Model is not available, the inference functionality of these sample applications can be tested using a subset of the original training images that are installed alongside the sample applications in the following directory:

/opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/model/organs/images

The inference model included with the DeepStream and Endoscopy sample applications was trained using the NVIDIA TAO Toolkit. This toolkit uses transfer learning to shorten development time: developers fine-tune high-quality NVIDIA pre-trained models using only a fraction of the data that training from scratch would require. For more information, see the NVIDIA TAO Toolkit webpage.

The DeepStream and Endoscopy sample apps work in either iGPU or dGPU mode on the Clara AGX Developer Kit; however, the TensorRT engine files that are included with the sample apps are only compatible with the dGPU. Running the apps with the iGPU requires new TensorRT engine files to be generated. To do so, follow the instructions in Rebuilding TensorRT Engines.

Camera modules with CSI connectors are not supported in dGPU mode on Clara AGX Developer Kit.

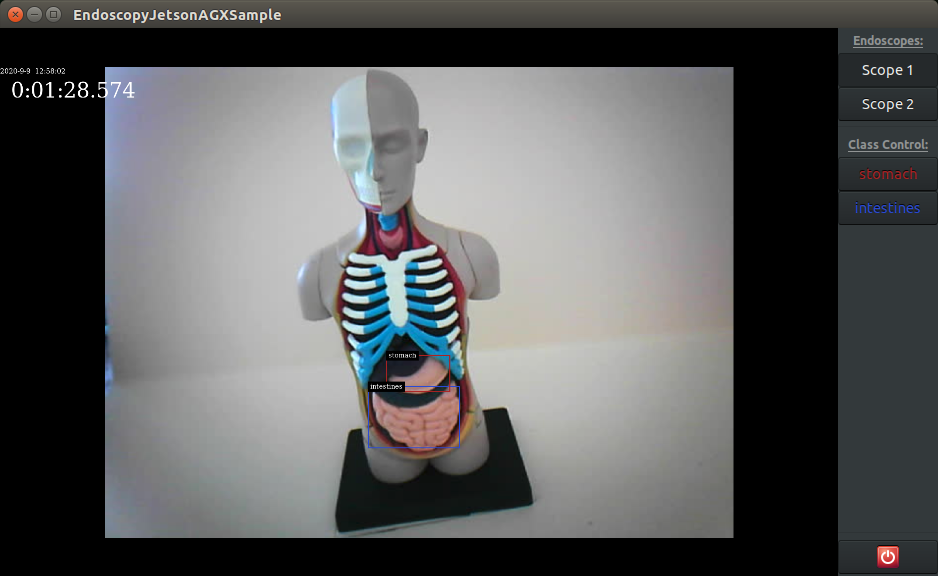

Endoscopy Sample Application

Follow these steps to run the Endoscopy Sample Application:

Navigate to the installation directory:

$ cd /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/EndoscopyJetsonAGXSample

Run the application:

$ ./EndoscopyJetsonAGXSample

The application window should appear.

All connected cameras should be visible on the top right.

Select a source by clicking one of the buttons named "Scope <N>", where <N> corresponds to the video source.

The main window area should show “Loading…” on the bottom while the camera is being initialized. When ready, the main window area will start displaying the live video stream from the camera.

Click any of the "Class Control" buttons to dynamically enable or disable the bounding boxes and labels of detected objects.

Click the button on the bottom right to quit the application.

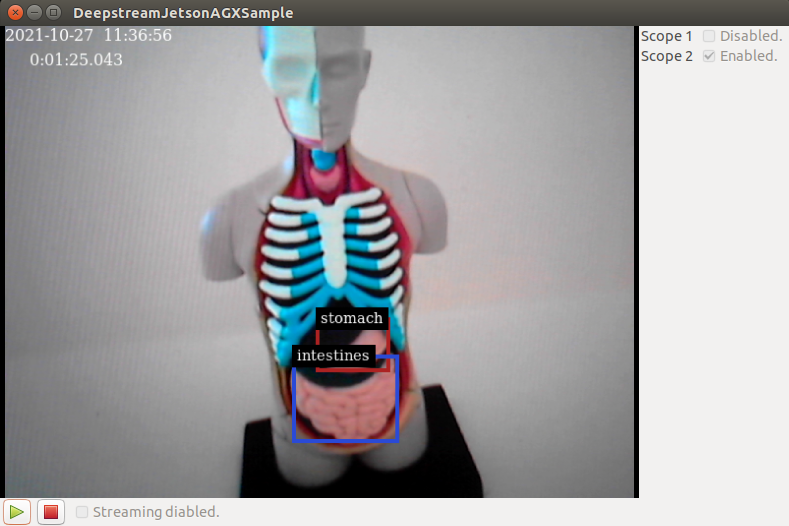

DeepStream Sample Application

Follow these steps to run the DeepStream Sample Application:

Navigate to the installation directory:

$ cd /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamJetsonAGXSample

Run the application:

$ ./DeepstreamJetsonAGXSample

The application window should appear.

All connected cameras should be visible on the right.

Use Enable to select which camera streams to process. This only works before you play the stream the first time after starting the application.

If desired, check the Enable Streaming box on the bottom to stream the processed output as a raw H.264 UDP stream. Once this box is checked, you can define the Host Port and Host IP address of the streaming destination (see step 4, below).

Click the green arrow to start video capture and object detection.

Important: If streaming video from multiple camera sensors at the same time, ensure that they have compatible capture modes. To check capture modes, use:

$ v4l2-ctl -d /dev/video<N> --list-formats-ext

where N corresponds to the video source.

If streaming was enabled above, the following command can be run on the destination host in order to receive the video stream output by the application:

$ gst-launch-1.0 udpsrc port=5000 ! application/x-rtp,encoding-name=H264, payload=96 ! rtph264depay ! queue ! avdec_h264 ! autovideosink sync=false
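The capture-mode check described in the Important note above can be partly automated. The following is a minimal sketch, not part of the sample applications: it parses the text printed by `v4l2-ctl --list-formats-ext` for each camera and intersects the results. The helper names (`parse_capture_modes`, `common_capture_modes`, `read_listing`) are hypothetical, and the regular expressions assume the usual output layout (a quoted pixel format, `Size: Discrete WxH` lines, and intervals ending in `(N fps)`), which may differ between v4l-utils versions and drivers.

```python
import re
import subprocess

# Patterns for the usual `v4l2-ctl --list-formats-ext` layout. This layout is
# an assumption; the exact text can vary between v4l-utils versions.
FORMAT_RE = re.compile(r"\[\d+\]:\s*'(\w+)'")
SIZE_RE = re.compile(r"Size:\s*Discrete\s+(\d+)x(\d+)")
FPS_RE = re.compile(r"\(([\d.]+)\s*fps\)")


def parse_capture_modes(listing):
    """Return a set of (pixel_format, width, height, fps) tuples."""
    modes = set()
    pixel_format = None
    size = None
    for line in listing.splitlines():
        fmt = FORMAT_RE.search(line)
        if fmt:
            # A new pixel format starts; forget the previous frame size.
            pixel_format, size = fmt.group(1), None
            continue
        dim = SIZE_RE.search(line)
        if dim:
            size = (int(dim.group(1)), int(dim.group(2)))
            continue
        fps = FPS_RE.search(line)
        if fps and pixel_format and size:
            modes.add((pixel_format, size[0], size[1], float(fps.group(1))))
    return modes


def common_capture_modes(listings):
    """Intersect the capture modes found in several v4l2-ctl listings."""
    mode_sets = [parse_capture_modes(text) for text in listings]
    return set.intersection(*mode_sets) if mode_sets else set()


def read_listing(device):
    """Run the v4l2-ctl command shown above for one device node."""
    result = subprocess.run(
        ["v4l2-ctl", "-d", device, "--list-formats-ext"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

For example, `common_capture_modes([read_listing("/dev/video0"), read_listing("/dev/video1")])` returns the modes both cameras report. An empty result suggests the cameras have no capture mode in common.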

The Holoscan DeepStream sample application can also be run on a Linux x86 host using one or more identical USB cameras. To avoid bandwidth issues, ensure that each USB camera is connected to its own USB controller. Depending on the motherboard in use, it is typically sufficient to connect one camera to the front and one to the back of the PC.

Unlike with Tegra, it is difficult to predict the exact GPU and software versions running on your PC, so the engine files will likely need to be rebuilt for your machine. If errors are encountered when the application starts streaming, see Rebuilding TensorRT Engines.

Navigate to the installation directory:

$ cd /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamLinuxHostSample

Run the application:

$ ./DeepstreamLinuxHostSample

The application window should appear. See Step 3 of the DeepStream Sample Application section above for more details about the application.

The DeepStream sample application includes a sample model and prebuilt engine files that are compatible with the Clara AGX dGPU. These engine files are specific to the platform, GPU, and TensorRT version that is being used, and so these engine files must be rebuilt if any of these change. The following shows an example error message that may be seen if the engine files are not compatible with your current platform.

ERROR: [TRT]: coreReadArchive.cpp (38) - Serialization Error in verifyHeader: 0 (Version tag does not match)

ERROR: [TRT]: INVALID_STATE: std::exception

ERROR: [TRT]: INVALID_CONFIG: Deserialize the cuda engine failed.

ERROR: Deserialize engine failed from file: /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamJetsonAGXSample/model/resnet18_detector_fp16.trt

0:00:30.955545959 10922 0x559324d190 WARN nvinfer gstnvinfer.cpp:616:gst_nvinfer_logger:<primary-nvinference-engine> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::deserializeEngineAndBackend() <nvdsinfer_context_impl.cpp:1793> [UID = 1]: deserialize engine from file :/opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamJetsonAGXSample/model/resnet18_detector_fp16.trt failed

0:00:30.955619243 10922 0x559324d190 WARN nvinfer gstnvinfer.cpp:616:gst_nvinfer_logger:<primary-nvinference-engine> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::generateBackendContext() <nvdsinfer_context_impl.cpp:1900> [UID = 1]: deserialize backend context from engine from file :/opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamJetsonAGXSample/model/resnet18_detector_fp16.trt failed, try rebuild

0:00:30.955662861 10922 0x559324d190 INFO nvinfer gstnvinfer.cpp:619:gst_nvinfer_logger:<primary-nvinference-engine> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::buildModel() <nvdsinfer_context_impl.cpp:1818> [UID = 1]: Trying to create engine from model files

ERROR: failed to build network since there is no model file matched.

ERROR: failed to build network.

0:00:30.956124613 10922 0x559324d190 ERROR nvinfer gstnvinfer.cpp:613:gst_nvinfer_logger:<primary-nvinference-engine> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::buildModel() <nvdsinfer_context_impl.cpp:1838> [UID = 1]: build engine file failed

0:00:30.956157063 10922 0x559324d190 ERROR nvinfer gstnvinfer.cpp:613:gst_nvinfer_logger:<primary-nvinference-engine> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::generateBackendContext() <nvdsinfer_context_impl.cpp:1924> [UID = 1]: build backend context failed

0:00:30.956181128 10922 0x559324d190 ERROR nvinfer gstnvinfer.cpp:613:gst_nvinfer_logger:<primary-nvinference-engine> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::initialize() <nvdsinfer_context_impl.cpp:1243> [UID = 1]: generate backend failed, check config file settings

0:00:30.956245196 10922 0x559324d190 WARN nvinfer gstnvinfer.cpp:816:gst_nvinfer_start:<primary-nvinference-engine> error: Failed to create NvDsInferContext instance

0:00:30.956268813 10922 0x559324d190 WARN nvinfer gstnvinfer.cpp:816:gst_nvinfer_start:<primary-nvinference-engine> error: Config file path: dsjas_nvinfer_config.txt, NvDsInfer Error: NVDSINFER_CONFIG_FAILED

Playing...

ERROR from element primary-nvinference-engine: Failed to create NvDsInferContext instance

Error details: /dvs/git/dirty/git-master_linux/deepstream/sdk/src/gst-plugins/gst-nvinfer/gstnvinfer.cpp(816): gst_nvinfer_start (): /GstPipeline:camera-player/GstBin:Inference Bin/GstNvInfer:primary-nvinference-engine:

Config file path: dsjas_nvinfer_config.txt, NvDsInfer Error: NVDSINFER_CONFIG_FAILED

Returned, stopping playback
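When the application output is captured to a file, the mismatch can be spotted without reading the whole log. The sketch below is illustrative only: the marker strings are copied from the example log above, and other TensorRT or DeepStream versions may word these messages differently. The function names are hypothetical.

```python
# Substrings copied from the example log above. Treat them as assumptions
# for any other TensorRT or DeepStream version.
ENGINE_MISMATCH_MARKERS = (
    "Version tag does not match",
    "Deserialize the cuda engine failed",
    "Deserialize engine failed from file",
)


def find_engine_errors(log_text):
    """Return the log lines that point to an incompatible engine file."""
    return [
        line.strip()
        for line in log_text.splitlines()
        if any(marker in line for marker in ENGINE_MISMATCH_MARKERS)
    ]


def needs_engine_rebuild(log_text):
    """True if the log contains any of the known mismatch markers."""
    return bool(find_engine_errors(log_text))
```

A positive result from `needs_engine_rebuild` corresponds to the incompatible-engine situation shown in the log above.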

If this is the case, follow these steps in order to rebuild the engine files on the device that will be running the DeepStream application.

Download the TensorRT tlt-converter binary for your platform:

Jetson and Clara AGX with iGPU or dGPU (TensorRT 7.2): https://developer.nvidia.com/tlt-converter-1

Linux x86 (TensorRT 7.2): https://developer.nvidia.com/cuda111-cudnn80-trt72

Make the binary executable and move it to a location accessible through $PATH (e.g. /usr/bin):

$ chmod a+x tlt-converter

$ sudo mv tlt-converter /usr/bin

Navigate to the model directory of the DeepStream sample:

$ cd /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/model/organs

Run the detectnet_convert.sh script to generate the engine files:

$ sudo ./detectnet_convert.sh

The previous step creates the TensorRT engine files (*.trt) and outputs them alongside the model files in the current directory. To use these new engines, copy them to the DeepStream application’s model directory, e.g.:

$ sudo cp resnet18_detector_*.trt /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamLinuxHostSample/model
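If the copy step is repeated often (for example, after each TensorRT upgrade), it can be scripted. The following is a minimal sketch under stated assumptions: `copy_engines` is a hypothetical helper, the default pattern mirrors the `resnet18_detector_*.trt` glob used in the command above, and the script needs write permission on the destination directory.

```python
import shutil
from pathlib import Path


def copy_engines(source_dir, app_model_dir, pattern="resnet18_detector_*.trt"):
    """Copy rebuilt engine files into an application's model directory.

    Returns the destination paths, sorted by file name. Raises
    FileNotFoundError when nothing matches, which usually means the
    conversion script has not been run in source_dir.
    """
    source = Path(source_dir)
    destination = Path(app_model_dir)
    engines = sorted(source.glob(pattern))
    if not engines:
        raise FileNotFoundError(f"no files matching {pattern} in {source}")
    destination.mkdir(parents=True, exist_ok=True)
    # copy2 keeps file timestamps, which helps when checking that the
    # engines in the application directory are the freshly built ones.
    return [Path(shutil.copy2(engine, destination / engine.name)) for engine in engines]
```

With the paths used in this section, the call would be `copy_engines("/opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/model/organs", "/opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamLinuxHostSample/model")`.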

Running DeepStream applications using Docker is currently only supported when using the iGPU. The following section only applies to an iGPU configuration.

Docker provides lightweight reusable containers for deploying and sharing your applications.

Due to the way containers are used with Jetson, with host-side libraries mounted into the container in order to force library compatibility with the host drivers, there are two ways to build a container image containing the Holoscan samples:

1. Build the sample applications on the Jetson, outside of a container, then copy the executables into an image for use in a container.

2. Build the sample applications within a container.

These instructions document the process for #2, since it should be relatively straightforward to adapt the instructions to use method #1.

Configure the Container Runtime

The DeepStream libraries are not mounted into containers by default, and so an additional configuration file must be added to tell the container runtime to mount these components into containers.

Add the following to a new file, /etc/nvidia-container-runtime/host-files-for-container.d/deepstream.csv:

dir, /opt/nvidia/deepstream/deepstream-6.0

sym, /opt/nvidia/deepstream/deepstream

Configure Docker to default to use nvidia-docker with "default-runtime": "nvidia" in /etc/docker/daemon.json:

{
    "runtimes": {
        "nvidia": {
            "path": "nvidia-container-runtime",
            "runtimeArgs": []
        }
    },
    "default-runtime": "nvidia"
}

Restart the Docker daemon:

$ sudo systemctl daemon-reload

$ sudo systemctl restart docker
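An existing /etc/docker/daemon.json may already contain other settings that should not be overwritten. The sketch below shows one way to merge the runtime settings from the step above into existing content. It is illustrative only: `with_nvidia_default_runtime` is a hypothetical helper, and it works on the file's text so the result can be reviewed before it is written back with root privileges.

```python
import json


def with_nvidia_default_runtime(daemon_json_text):
    """Return daemon.json text with the nvidia runtime set as the default.

    Existing keys are preserved. An empty or whitespace-only input is
    treated as an empty configuration.
    """
    config = json.loads(daemon_json_text) if daemon_json_text.strip() else {}
    runtimes = config.setdefault("runtimes", {})
    # Only register the runtime if it is not already defined, so any
    # customized runtimeArgs are left untouched.
    runtimes.setdefault(
        "nvidia", {"path": "nvidia-container-runtime", "runtimeArgs": []}
    )
    config["default-runtime"] = "nvidia"
    return json.dumps(config, indent=4) + "\n"
```

After writing the merged text back to /etc/docker/daemon.json, the Docker daemon still needs to be restarted as described above.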

Build the Container Image

Follow steps 1 through 4 in Rebuilding TensorRT Engines to build the engine files for the iGPU, then copy these engines to the model/organs/JetsonAGX directory:

$ sudo cp /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/model/organs/*.trt \
    /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/model/organs/JetsonAGX

Build the clara-holoscan-samples Docker image:

$ cd /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample

$ sudo docker build -t clara-holoscan-samples -f Dockerfile.igpu .

Running the Samples in a Container

Since the samples are graphical applications and need permissions to access the X server, the DISPLAY environment variable must be set to the ID of the X11 display you are using and root (the user running the container) must have permissions to access the X server:

$ export DISPLAY=:0

$ xhost +si:localuser:root

Then, using the clara-holoscan-samples image that was previously built, the samples can be run using the following:

$ sudo docker run --rm \
    --device /dev/video0:/dev/video0 \
    --env DISPLAY=$DISPLAY --volume /tmp/.X11-unix/:/tmp/.X11-unix \
    --network host --volume /tmp:/tmp \
    --workdir /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamJetsonAGXSample \
    clara-holoscan-samples ./DeepstreamJetsonAGXSample

If you have no /dev/video0 device, this means you do not have a camera attached. Please see AGX Camera Setup.

The various arguments to docker run do the following:

Give the container access to the camera device /dev/video0:

--device /dev/video0:/dev/video0

Give the container access to the X desktop:

--env DISPLAY=$DISPLAY --volume /tmp/.X11-unix/:/tmp/.X11-unix

Give the container access to the Argus socket used by the Camera API:

--network host --volume /tmp:/tmp

Run the DeepstreamJetsonAGXSample from the clara-holoscan-samples image:

--workdir /opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build/DeepstreamJetsonAGXSample clara-holoscan-samples ./DeepstreamJetsonAGXSample
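The argument groups described above can be assembled programmatically, which is convenient when the camera is not on /dev/video0. The following is a minimal sketch: `docker_run_command` is a hypothetical helper that reproduces the `docker run` invocation from this section with the device, display, and sample name as parameters. Passing a sample name other than DeepstreamJetsonAGXSample assumes that sample was also built into the image under the same build directory.

```python
import shlex

# Build directory inside the image, taken from the --workdir argument above.
SAMPLE_ROOT = "/opt/nvidia/clara-holoscan-sdk/clara-holoscan-deepstream-sample/build"


def docker_run_command(sample="DeepstreamJetsonAGXSample", device="/dev/video0",
                       display=":0", image="clara-holoscan-samples"):
    """Build the argument list for the docker run invocation shown above."""
    return [
        "sudo", "docker", "run", "--rm",
        # Camera device node, mapped to the same path in the container.
        "--device", f"{device}:{device}",
        # X desktop access.
        "--env", f"DISPLAY={display}",
        "--volume", "/tmp/.X11-unix/:/tmp/.X11-unix",
        # Argus socket used by the Camera API.
        "--network", "host",
        "--volume", "/tmp:/tmp",
        # Sample to run from the image.
        "--workdir", f"{SAMPLE_ROOT}/{sample}",
        image, f"./{sample}",
    ]


# Print a shell-quoted command line for a camera on /dev/video1.
print(shlex.join(docker_run_command(device="/dev/video1")))
```

The returned list can be passed directly to `subprocess.run`, which avoids shell-quoting problems. `shlex.join` requires Python 3.8 or later.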