Overview

TAO Toolkit supports image classification, seven object detection architectures (YOLOv3, YOLOv4, FasterRCNN, SSD, DSSD, RetinaNet, and DetectNet_v2), one instance segmentation architecture (MaskRCNN), and one semantic segmentation architecture (UNet). In addition, TAO Toolkit supports 16 classification backbones. For a complete list of the supported network and backbone combinations, see the matrix below:

| | Image Classification | Object Detection | | | | | | | Instance Segmentation | Semantic Segmentation |
|---|---|---|---|---|---|---|---|---|---|---|
| Backbone | | DetectNet_V2 | FasterRCNN | SSD | YOLOv3 | RetinaNet | DSSD | YOLOv4 | MaskRCNN | UNet |
| ResNet10/18/34/50/101 | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| VGG 16/19 | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | | Yes |
| GoogLeNet | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | | |
| MobileNet V1/V2 | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | | |
| SqueezeNet | Yes | Yes | | Yes | Yes | Yes | Yes | Yes | | |
| DarkNet 19/53 | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | | |
| CSPDarkNet 19/53 | Yes | | | | | | | Yes | | |
| EfficientNet B0 | Yes | | Yes | Yes | Yes | Yes | Yes | Yes | | |
| EfficientNet B1 | Yes | | Yes | | | | | | | |
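If you want to check combinations programmatically (for example, in a script that generates experiment specs), the support matrix can be encoded as a lookup table. The following is a minimal Python sketch, not part of TAO Toolkit: the names `TASKS`, `SUPPORT`, `is_supported`, and `backbones_for` are hypothetical. It groups the ten networks by task as described in the overview and fills in only the ResNet row, which every network supports; the remaining rows would be copied from the matrix.

```python
from typing import Dict, FrozenSet, List, Tuple

# Networks grouped by task, as listed in the overview (hypothetical helper data).
TASKS: Dict[str, Tuple[str, ...]] = {
    "image_classification": ("ImageClassification",),
    "object_detection": ("DetectNet_V2", "FasterRCNN", "SSD", "YOLOv3",
                         "RetinaNet", "DSSD", "YOLOv4"),
    "instance_segmentation": ("MaskRCNN",),
    "semantic_segmentation": ("UNet",),
}

# Flat set of every network name that appears in the matrix columns.
ALL_NETWORKS: FrozenSet[str] = frozenset(
    net for nets in TASKS.values() for net in nets
)

# Backbone -> set of networks that accept it.
# Only the ResNet row (supported by every network) is filled in here;
# extend this dict with the other rows of the support matrix.
SUPPORT: Dict[str, FrozenSet[str]] = {
    "ResNet10/18/34/50/101": ALL_NETWORKS,
}


def is_supported(backbone: str, network: str) -> bool:
    """Return True if the (backbone, network) pair is marked as supported."""
    if network not in ALL_NETWORKS:
        raise ValueError(f"unknown network: {network}")
    # Backbones missing from SUPPORT are treated as unsupported.
    return network in SUPPORT.get(backbone, frozenset())


def backbones_for(network: str) -> List[str]:
    """List the backbones recorded in SUPPORT for the given network."""
    return sorted(b for b, nets in SUPPORT.items() if network in nets)


if __name__ == "__main__":
    print(is_supported("ResNet10/18/34/50/101", "MaskRCNN"))
    print(backbones_for("YOLOv4"))
```

For example, `is_supported("ResNet10/18/34/50/101", "MaskRCNN")` returns `True`. Raising on an unknown network name, rather than returning `False`, catches typos in network names early.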

The TAO Toolkit container includes Jupyter notebooks and the spec files needed to train any supported network combination. Pre-trained weights for each backbone are provided on NGC; these models are trained on the Open Images dataset. The pre-trained weights provide a good starting point for applying transfer learning to your own dataset.

To get started, first choose the type of model that you want to train, then go to the appropriate model card on NGC and choose one of the supported backbones.

| Model to train | NGC model card |
|---|---|
| YOLOv3 | |
| YOLOv4 | |
| SSD | |
| FasterRCNN | |
| RetinaNet | |
| DSSD | |
| DetectNet_v2 | |
| MaskRCNN | |
| Image Classification | |
| UNet | |

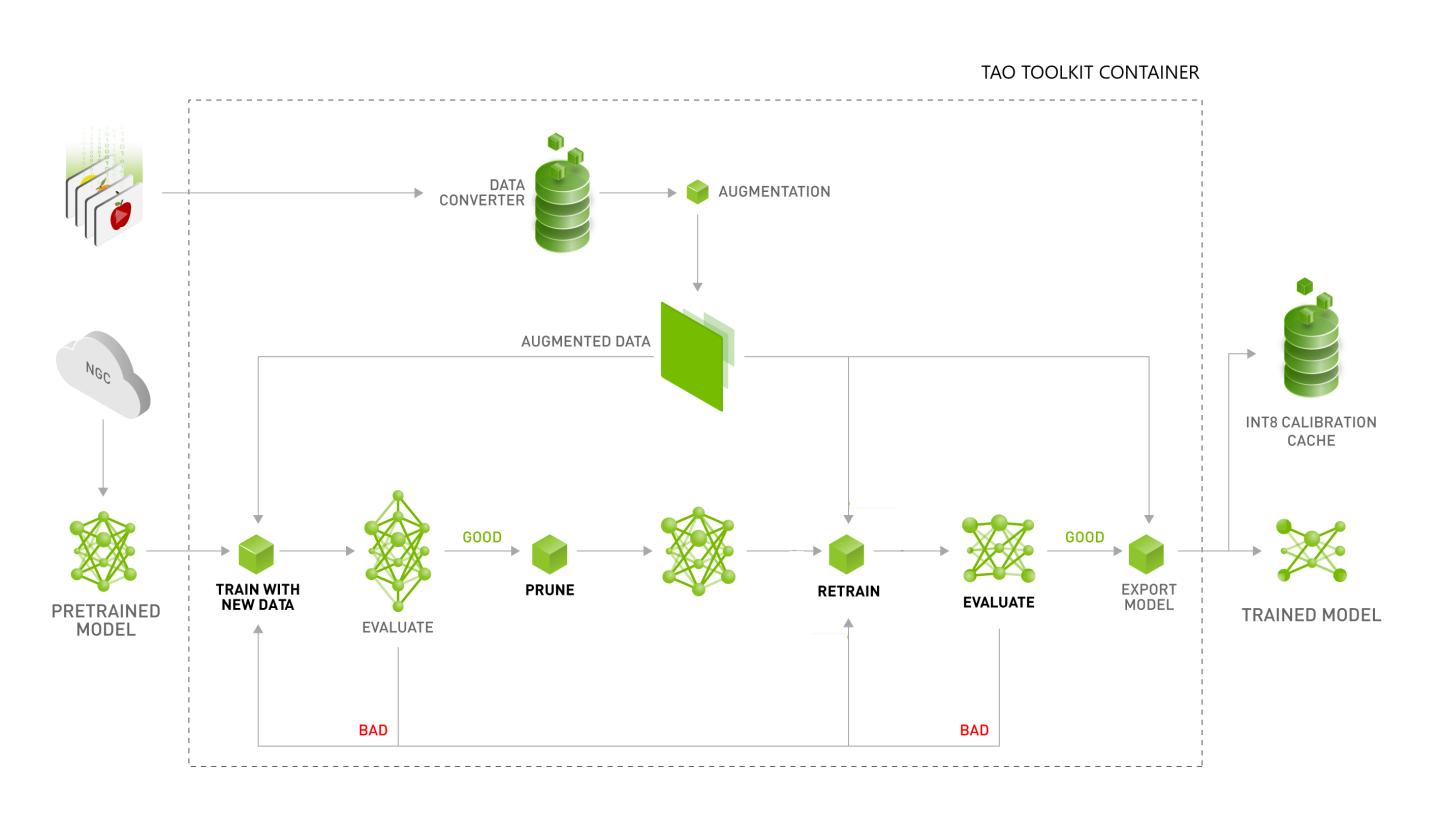

Once you pick the appropriate pre-trained model, follow the TAO workflow to fine-tune it on your dataset and export a model adapted to your use case. The TAO Workflow sections walk you through all the training steps.
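As a rough illustration of the train-then-export flow, the Python sketch below only builds `tao` launcher command lines and prints them; it does not run anything. The `train_cmd` and `export_cmd` helpers, the spec and results paths, and the `YOUR_KEY` placeholder are hypothetical. The flag layout (`-e` spec file, `-r` results directory, and `-k` key for training; `-m` model, `-o` output, and `-k` key for export) is an assumption based on the DetectNet_v2 launcher and may differ for other networks or releases, so treat the TAO Workflow sections as the authority on exact commands. Intermediate steps such as pruning and retraining are omitted.

```python
import shlex
from typing import List


# Hypothetical helper: build (but do not run) a training command.
# Assumed flags: -e <spec file>, -r <results dir>, -k <encryption key>.
def train_cmd(network: str, spec: str, results_dir: str, key: str) -> List[str]:
    return ["tao", network, "train", "-e", spec, "-r", results_dir, "-k", key]


# Hypothetical helper: build (but do not run) an export command.
# Assumed flags: -m <trained model>, -o <exported model>, -k <encryption key>.
def export_cmd(network: str, model: str, output: str, key: str) -> List[str]:
    return ["tao", network, "export", "-m", model, "-o", output, "-k", key]


if __name__ == "__main__":
    # Placeholder paths and key; replace with your own experiment layout.
    steps = [
        train_cmd("detectnet_v2", "specs/train.txt", "results/unpruned", "YOUR_KEY"),
        export_cmd("detectnet_v2", "results/unpruned/weights/model.tlt",
                   "results/export/model.etlt", "YOUR_KEY"),
    ]
    for argv in steps:
        # shlex.join quotes arguments only when needed, so the output can be
        # pasted into a shell.
        print(shlex.join(argv))
```

Keeping the commands as argument lists rather than strings makes them easy to pass to `subprocess.run` later without shell-quoting issues.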

You can deploy most trained models on edge devices using DeepStream and TensorRT. See the Integrating TAO models into DeepStream chapter for deployment instructions.

Some of the models are currently not supported in DeepStream, so we have provided a separate inference application. See the Integrating TAO Toolkit CV models with the inference pipeline chapter for deployment instructions.

We also have a reference application for deployment with Triton. See the Integrating TAO CV models with Triton Inference server chapter for Triton instructions.