Gesture Recognition

GestureNet is an NVIDIA-developed gesture classification model that is included in the TAO Toolkit. GestureNet supports the following tasks:

dataset_convert

train

evaluate

inference

export

These tasks can be invoked from the TAO Toolkit Launcher using the following convention on the command line:

tao gesturenet <sub_task> <args_per_subtask>

where args_per_subtask are the command-line arguments required for a given subtask. Each subtask is explained in detail below.

The GestureNet app requires the images and labels to be in a specific format. Once the data is in that format, use the dataset_convert task included in the TAO Toolkit to prepare it for model training.

See the Data Annotation Format page for more information about the data format for gesture recognition.

Dataset Extraction Config

The dataset_config spec specifies the parameters needed to crop the hand bounding boxes and prepare the dataset.

Here’s a sample spec:

{
    "org_dataset": "data",
    "mount_dir_path": "/workspace/tao-experiments/gesturenet/",
    "org_data_dir": "original",
    "post_data_dir": "extracted",
    "kpi_users": ["uid_1", "uid_2"],
    "sampling_rate": 1,
    "convert_bbox_square": true,
    "bbox_enlarge_ratio": 1.1,
    "annotation_config": {
        "annotation_path": "annotation"
    }
}

The following table describes the parameters:

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| org_dataset | Name of dataset. | String | |
| mount_dir_path | Path to the root directory relative to which the data is stored. | String | |
| org_data_dir | Path to original images directory relative to | String | |
| post_data_dir | Path to directory relative to | String | |
| kpi_users | List of user IDs set aside for Test set. | List of String | |
| sampling_rate | Rate at which to select frames for labeling. If data is not video please set to 1. | Integer | 1 if dataset is not from video |
| convert_bbox_square | Boolean variable to indicate if the labelled bounding box should be converted to a square. | Boolean | |
| bbox_enlarge_ratio | Scale factor used to enlarge bounding box. | Float | [1.0,1.2] |
| annotation_config | The nested annotation dictionary that contains path to folder with labels (relative to | Dictionary | |
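To make the effect of convert_bbox_square and bbox_enlarge_ratio concrete, here is a minimal Python sketch of one plausible way to square and enlarge a hand bounding box. It is an illustration, not the converter's actual code: the function name, the (x, y, w, h) box layout, and the choice to grow the shorter side about a fixed center are all assumptions, and the sketch does not clip the result to the image bounds.

```python
def square_and_enlarge(x, y, w, h, enlarge_ratio=1.1, to_square=True):
    """Return an (x, y, w, h) box, optionally squared, then scaled about its center."""
    cx, cy = x + w / 2.0, y + h / 2.0  # the center is kept fixed throughout
    if to_square:
        w = h = max(w, h)  # assumption: the shorter side grows to match the longer one
    w, h = w * enlarge_ratio, h * enlarge_ratio
    return (cx - w / 2.0, cy - h / 2.0, w, h)


# A 40x20 box centered at (30, 30), squared and enlarged by 1.5 for easy arithmetic.
box = square_and_enlarge(10, 20, 40, 20, enlarge_ratio=1.5, to_square=True)
print(box)  # (0.0, 0.0, 60.0, 60.0)
```

With a ratio in the recommended [1.0,1.2] range the crop stays close to the labelled box while leaving a small margin around the hand.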

Dataset Experiment Config

The dataset_experiment_config spec specifies the parameters needed to combine different datasets. It allows you to provide the user IDs that are set aside for the validation or test set. It also supports different sampling strategies based on metadata and class counts.

Here’s a sample spec:

{
    "mount_dir_path": "/workspace/tao-experiments/gesturenet",
    "org_data_dir": "original",
    "post_data_dir": "extracted",
    "set_list": {
        "train_val": [
            "data"
        ],
        "kpi": [
            "data"
        ]
    },
    "uid_list": {
        "uid_name": "user_id",
        "predefined_val_users": false,
        "val_fraction": 0.25,
        "validation": [
        ],
        "kpi": [
            "uid_1"
        ]
    },
    "image_feature_filter": {
        "train_val": {
            "*": [
                {
                    "location": "outdoor"
                }
            ]
        },
        "kpi": {
        }
    },
    "sampling": {
        "sampling_mode": "average",
        "use_class_weights": false,
        "class_weights": {
            "thumbs_up": 0.5,
            "v": 0.5
        }
    }
}

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| mount_dir_path | Path to the root directory relative to which the data is stored. | String | |
| org_data_dir | Path to original images directory relative to | String | |
| post_data_dir | Path to directory relative to | String | |
| set_list | Nested configuration for parameters related to datasets. | Dictionary | |
| uid_list | Nested configuration for parameters related to user IDs. | Dictionary | |
| image_feature_filter | Nested configuration for parameters related to filtering images based on metadata. | Dictionary | |
| sampling | Nested configuration for parameters related to class weights and sampling strategy. | Dictionary | |

The following table describes the set_list parameters:

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| train_val | List of datasets from which to select users for training and validation. | List of String | |
| kpi | List of datasets from which to select users for test set. | List of String | |

The following table describes the uid_list parameters:

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| uid_name | Name of field that represents unique identifier of each subject. | String | |
| predefined_val_users | Flag to indicate if the train-validation split is specified by the config. | Boolean | |
| val_fraction | Fraction of non-kpi users used for validation set. Only used if | Float | |
| validation | List of uid used in validation set. Only used if | List of String | |
| kpi | List of uid assigned to test set. | List of String | |
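The interaction between the kpi, validation, and val_fraction fields can be sketched in Python as below. This is an assumption-laden illustration rather than the converter's logic: it assumes that val_fraction applies when no predefined validation users are given and that the validation list applies otherwise (the table text is cut off at that point), and the function name, the seed, and the random selection are hypothetical.

```python
import random


def split_users(all_users, kpi_users, val_fraction=0.25, predefined_val=None, seed=108):
    """Split user IDs into (train, validation, kpi) lists with no overlap."""
    kpi = set(kpi_users)
    candidates = sorted(set(all_users) - kpi)  # non-kpi users only
    if predefined_val is not None:
        # Assumed behaviour when the validation users are listed explicitly.
        val_set = set(predefined_val) & set(candidates)
    else:
        # Assumed behaviour otherwise: hold out a fraction of the non-kpi users.
        n_val = round(len(candidates) * val_fraction)
        val_set = set(random.Random(seed).sample(candidates, n_val))
    train = [u for u in candidates if u not in val_set]
    val = [u for u in candidates if u in val_set]
    return train, val, sorted(kpi)


users = [f"uid_{i}" for i in range(1, 10)]
train, val, kpi = split_users(users, kpi_users=["uid_1"], val_fraction=0.25)
print(len(train), len(val), kpi)  # 6 2 ['uid_1']
```

Splitting by user rather than by image keeps every subject in exactly one of the three sets.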

The following table describes the image_feature_filter parameters:

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| train_val | Metadata fields that are used to discard images in the training and validation sets. | Dictionary | |
| kpi | Metadata fields that are used to discard images in the test set. | Dictionary | |

The following table describes the sampling parameters:

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| sampling_mode | Sampling methodology when using class_weights | String | “average” |
| use_class_weights | Boolean variable to indicate if sampling should be based on class weights. | Boolean | True / False |
| class_weights | Dictionary mapping gesture classes of interest and their class weight. | Dictionary | |

Sample Usage of the Dataset Converter Tool

TAO Toolkit provides a built-in command to prepare the dataset for a GestureNet model, shown below.

tao gesturenet dataset_convert --dataset_spec <dataset_spec_path>
                               --experiment_spec <experiment_spec_path>
                               --k_folds <num_folds>
                               --output_filename <output_filename>
                               --experiment_name <experiment_name>

Required Arguments

--dataset_spec: The path to the dataset spec.
--experiment_spec: The path to the dataset experiment spec.
--k_folds: The number of folds.
--output_filename: The output JSON file that is ingested by the GestureNet training pipeline.
--experiment_name: The name of the experiment.

Sample Usage

Here is an example using a GestureNet model.

tao gesturenet dataset_convert --dataset_spec $SPECS_DIR/dataset_config.json \
                               --k_folds 0 \
                               --experiment_spec $SPECS_DIR/dataset_experiment_config.json \
                               --output_filename $USER_EXPERIMENT_DIR/data.json \
                               --experiment_name v1
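If the conversion is driven from a script or notebook, the same command line can be assembled programmatically. The Python sketch below only builds the argument list from the flags documented above; the helper name and the file paths are hypothetical, and running the command still requires the TAO Toolkit Launcher to be installed.

```python
import shlex


def dataset_convert_cmd(dataset_spec, experiment_spec, output_filename,
                        experiment_name, k_folds=0):
    """Build the argv list for `tao gesturenet dataset_convert`."""
    return [
        "tao", "gesturenet", "dataset_convert",
        "--dataset_spec", str(dataset_spec),
        "--k_folds", str(k_folds),
        "--experiment_spec", str(experiment_spec),
        "--output_filename", str(output_filename),
        "--experiment_name", str(experiment_name),
    ]


cmd = dataset_convert_cmd(
    "specs/dataset_config.json",             # hypothetical paths
    "specs/dataset_experiment_config.json",
    "results/data.json",
    "v1",
)
print(shlex.join(cmd))
# To execute it: subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems when paths contain spaces.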

To do training, evaluation, and inference for GestureNet, several components need to be configured, each with their own parameters. The gesturenet train, gesturenet evaluate, and gesturenet inference commands for a GestureNet experiment share the same configuration file.

The main components of the configuration file are listed below.

Trainer

Model

Evaluator

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| random_seed | The random seed for the experiment. | Unsigned Int | 108 |
| batch_size | Batch size used for experiment. | Unsigned Int | 64 |
| output_experiments_fld | Directory where experiments will be saved. | String | |
| save_weights_path | Folder in output_experiments_fld that the model will be saved to. | String | |
| trainer | Trainer configuration. | | |
| model | Model configuration. | | |
| evaluator | Evaluator configuration. | | |

Trainer Config

The trainer configuration allows you to configure how you want to train your model. The two main components are top_training and finetuning. num_workers specifies how many workers to use to train your model. The top_training configuration is explained in detail in the next section.

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| top_training | Top Training Configuration | | |
| finetuning | Fine Tuning Configuration | | |
| num_workers | Number of workers to train model. | Unsigned Int | 1 |

Top Training Config

The top training configuration allows you to customize how your model trains. The top_training configuration has five main components:

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| stage_order | 1 | | |
| loss_fn | Loss function to use for top training the model. Currently only supports categorical cross entropy. | String | categorical_crossentropy |
| train_epochs | The number of epochs to perform top training. | Unsigned Int | 5 |
| num_layers_unfreeze | The number of layers whose weights are updated during training. For example, if 3 layers are unfrozen then the model will freeze all the layers starting from the inputs until the last 3 layers in the model are left unfrozen. | Unsigned Int | 3 |
| optimizer | The optimizer to use for top training. Currently support | String | rmsprop |
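The num_layers_unfreeze rule (freeze everything from the inputs up to the last N layers) can be pictured with a short Python sketch. It works on plain layer names rather than on a real model, and both the function and the layer names are hypothetical.

```python
def trainable_flags(layer_names, num_layers_unfreeze):
    """Pair each layer name with True if its weights would be updated."""
    n = len(layer_names)
    k = max(0, min(num_layers_unfreeze, n))  # clamp to the number of layers
    return [(name, i >= n - k) for i, name in enumerate(layer_names)]


layers = ["conv1", "block1", "block2", "pool", "dense"]  # hypothetical layer names
flags = trainable_flags(layers, 3)
print(flags)
# [('conv1', False), ('block1', False), ('block2', True), ('pool', True), ('dense', True)]
```

A value larger than the number of layers in the model simply leaves every layer trainable under this reading.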

Fine Tuning Config

The fine tuning configuration has nine options, listed below.

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| stage_order | | | |
| train_epochs | The number of epochs to perform fine tuning. The fine tuning option will allow you to obtain the best results when switching datasets. Usually more layers are frozen and a lower learning rate is used to achieve the best results. | Unsigned Int | 50 |
| loss_fn | The loss function to use for fine tuning. Currently only supports categorical crossentropy. | String | categorical_crossentropy |
| initial_lrate | The initial learning rate to be used for fine tuning. Fine tuning uses a step learning rate annealing schedule according to the progress of the current experiment. The training progress is defined as the ratio of the current iteration to the maximum iterations. The scheduler adjusts the learning rate of the experiment in steps at regular intervals. | Float | 3e-04 |
| decay_step_size | Decay step size for learning rate. Fine tuning uses a step learning rate annealing schedule according to the progress of the current experiment. The training progress is defined as the ratio of the current iteration to the maximum iterations. The scheduler adjusts the learning rate of the experiment in steps at regular intervals. | Float | 33 |
| lr_drop_rate | Drop rate for learning rate. Fine tuning uses a step learning rate annealing schedule according to the progress of the current experiment. The training progress is defined as the ratio of the current iteration to the maximum iterations. The scheduler adjusts the learning rate of the experiment in steps at regular intervals. | Float | 0.5 |
| enable_checkpointing | Flag to determine whether to enable checkpoints. | Bool | True |
| num_layers_unfreeze | The number of layers unfrozen (whose weights are updated) during training. It is advised to unfreeze most layers for the fine tuning step. | Unsigned Int | 100 |
| optimizer | Optimizer to use for fine tuning. “sgd”, “adam” and “rmsprop” are supported. | String | sgd |
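The step schedule described for initial_lrate, decay_step_size, and lr_drop_rate can be sketched as follows. This is an illustration, not the TAO scheduler: it assumes that decay_step_size is counted in epochs and that the learning rate is multiplied by lr_drop_rate once per completed step, which the table does not state explicitly.

```python
import math


def step_lr(epoch, initial_lrate=3e-4, decay_step_size=33, lr_drop_rate=0.5):
    """Learning rate at a given epoch under an assumed step-decay rule."""
    completed_steps = math.floor(epoch / decay_step_size)
    return initial_lrate * lr_drop_rate ** completed_steps


# With the typical values, a 50-epoch run sees a single drop at epoch 33.
for epoch in (0, 32, 33, 49):
    print(epoch, step_lr(epoch))
```

Under these assumptions the rate stays at 3e-04 for epochs 0 through 32 and halves to 1.5e-04 from epoch 33 onward.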

Model Config

The model configuration file allows you to customize the architecture you want to use and the hyperparameters. The key options available are given below.

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| base_model | The base model to use. The public version uses a vanilla resnet but the release version uses an optimized model that obtains better results. | String | resnet_vanilla |
| num_layers | Number of layers to use in the model. The currently supported values are 6, 10, 12, 18, 26, 34, 50, 101, 152. | Unsigned Int | 18 |
| weights_init | The path to the saved weights that the model loads. | String | |
| gray_scale_input | Image input type. It is best to use RGB images but grayscale inputs work as well. If the images are RGB then set this flag to be false. | Bool | False |
| data_format | The image format to use. This must align with the model provided. The current options are either channels_first (NCHW) or channels_last (NHWC). At the moment, NCHW is the preferred format to use. | String | channels_first |
| image_height | Image height of the model input. | Unsigned Int | 160 |
| image_width | Image width of the model input. | Unsigned Int | 160 |
| use_batch_norm | Flag that determines whether to use batch normalization. Set to True to use batch normalization. | Bool | False |
| kernel_regularizer_type | The regularization to use for the convolutional layers. If you want to prune the model it is recommended to use l1 / lasso regularization. This helps to generate sparse weights that can later be pruned with min weight pruning. The current options are either l1 or l2 regularization. | String | l2 |
| kernel_regularizer_factor | The value to use for the regularization. | Float | 0.001 |

Evaluator Config

The evaluator configuration contains the options for evaluating your GestureNet model.

| Field | Description | Data Type and Constraints | Recommended/Typical Value |
| --- | --- | --- | --- |
| evaluation_exp_name | Name of experiment. | String | |
| data_path | Path to evaluation json file. | String | |
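As a rough picture of how the fields in the tables above fit together, the Python sketch below assembles them into one dictionary and serializes it as JSON. It is not an official sample spec. The nesting of top_training, finetuning, and num_workers under trainer follows the tables, but the placement of the remaining fields at the top level, the value 2 for the fine tuning stage_order, the lowercase spelling of kernel_regularizer_factor, and every path and name marked hypothetical are assumptions.

```python
import json

spec = {
    "random_seed": 108,
    "batch_size": 64,
    "output_experiments_fld": "/workspace/tao-experiments/gesturenet",  # hypothetical path
    "save_weights_path": "model",                                       # hypothetical folder
    "trainer": {
        "top_training": {
            "stage_order": 1,
            "loss_fn": "categorical_crossentropy",
            "train_epochs": 5,
            "num_layers_unfreeze": 3,
            "optimizer": "rmsprop",
        },
        "finetuning": {
            "stage_order": 2,  # assumption: the table gives no value
            "train_epochs": 50,
            "loss_fn": "categorical_crossentropy",
            "initial_lrate": 3e-04,
            "decay_step_size": 33,
            "lr_drop_rate": 0.5,
            "enable_checkpointing": True,
            "num_layers_unfreeze": 100,
            "optimizer": "sgd",
        },
        "num_workers": 1,
    },
    "model": {
        "base_model": "resnet_vanilla",
        "num_layers": 18,
        "weights_init": "/path/to/pretrained_weights",  # hypothetical path
        "gray_scale_input": False,
        "data_format": "channels_first",
        "image_height": 160,
        "image_width": 160,
        "use_batch_norm": False,
        "kernel_regularizer_type": "l2",
        "kernel_regularizer_factor": 0.001,  # key casing assumed lowercase
    },
    "evaluator": {
        "evaluation_exp_name": "v1",  # hypothetical name
        "data_path": "/workspace/tao-experiments/gesturenet/data.json",  # hypothetical path
    },
}

spec_json = json.dumps(spec, indent=4)
print(spec_json)
```

Check the generated file against the sample spec shipped with the GestureNet notebook before using it for training.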

TAO Toolkit provides a built-in command to train a GestureNet model, shown below.

tao gesturenet train -e <spec_file>
                     -k <key>

Required Arguments

-e, --experiment_spec_filename: The path to the spec file.
-k, --key: The user-specific encoding key to save or load a .tlt model.

Sample Usage

Here’s an example of using the train command on GestureNet:

tao gesturenet train -e $SPECS_DIR/train_spec.json \
                     -k $KEY

TAO Toolkit provides a built-in command to evaluate a GestureNet model, shown below.

tao gesturenet evaluate -e <spec_file>
                        -m <model_file>
                        -k <key>

Required Arguments

-e, --experiment_spec_filename: The experiment spec file to set up the evaluation experiment. This should be the same as the training spec file.
-m, --model: The path to the model file to use for evaluation.
-k, --key: The encryption key to decrypt the model. This argument is required only with a .tlt model file.

Sample Usage

Here’s an example of using the evaluation command on a GestureNet model.

tao gesturenet evaluate -e $USER_EXPERIMENT_DIR/model/train_spec.json \
                        -m $USER_EXPERIMENT_DIR/model/model.tlt \
                        -k $KEY

TAO Toolkit provides a built-in command to run inference on a GestureNet model, shown below.

tao gesturenet inference -e <spec_file>
                         -m <model_full_path>
                         -k <key>
                         --image_root_path <root_path>
                         --data_json <json_path>
                         --data_type <data_type>
                         --results_dir <results_dir>

Required Arguments

-e, --experiment_spec_filename: The experiment spec file to set up the experiment. The model parameters should be the same as in the training spec file.
-m, --model: The path to the model file to use for inference.
-k, --key: The encryption key to decrypt the model. This argument is required only with a .tlt model file.
--image_root_path: The root directory that the dataset is mounted at.
--data_json: The JSON spec with image paths and hand bounding boxes.
--data_type: The dataset type within data_json that inference is to be run on.
--results_dir: The directory where the results are saved.

Sample Usage

Here is an example of running inference using a GestureNet model.

tao gesturenet inference -e $USER_EXPERIMENT_DIR/model/train_spec.json \
                         -m $USER_EXPERIMENT_DIR/model/model.tlt \
                         -k $KEY \
                         --image_root_path /workspace/tao-experiments/gesturenet \
                         --data_json /workspace/tao-experiments/gesturenet/data.json \
                         --data_type kpi_set \
                         --results_dir $USER_EXPERIMENT_DIR/model

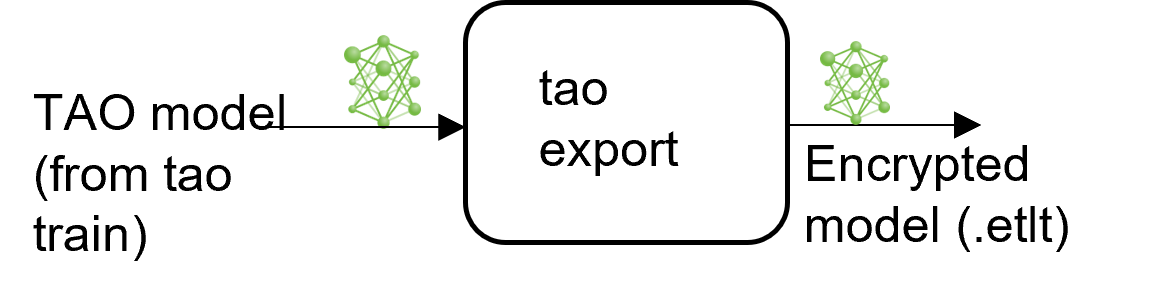

TAO Toolkit provides a utility for exporting a trained model to an encrypted ONNX format or a TensorRT deployable engine format.

The export sub-task optionally generates the calibration cache for TensorRT INT8 engine calibration.

Exporting the model decouples the training process from deployment and allows for conversion to TensorRT engines outside the TAO environment. TensorRT engines are specific to each hardware configuration and should be generated for each unique inference environment. A TensorRT engine file may be referred to interchangeably as a .trt or .engine file. The same exported TAO model may be used universally across training and deployment hardware. The exported model is referred to as the .etlt file, or encrypted TAO file. During model export, the TAO model is encrypted with a private key, which is required when you deploy this model for inference.

INT8 Mode Overview

TensorRT engines can be generated in INT8 mode to run with lower precision, thus improving performance. This process requires a cache file that contains scale factors for the tensors to help combat quantization errors, which may arise due to low-precision arithmetic. The calibration cache is generated using a calibration tensorfile when export is run with the --data_type flag set to int8. Pre-generating the calibration information and caching it removes the need for calibrating the model on the inference machine. Moving the calibration cache is usually much more convenient than moving the calibration tensorfile since it is a much smaller file and can be moved with the exported model. Using the calibration cache also speeds up engine creation, as building the cache can take several minutes, depending on the size of the tensorfile and the model itself.
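To give a feel for what a per-tensor scale factor does, here is a small Python sketch of symmetric INT8 quantization. It uses the simplest possible rule, scale = max |value| / 127, which is an assumption chosen for clarity; TensorRT's calibrators derive their scales from statistics over the calibration batches, and this sketch does not reproduce that procedure or the cache file format.

```python
def int8_scale(values):
    """Per-tensor scale so that the largest magnitude maps to 127."""
    max_abs = max(abs(v) for v in values)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values, scale):
    """Round to the nearest integer step and clamp to the signed 8-bit range used here."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(quantized, scale):
    """Map integer steps back to approximate floating-point values."""
    return [q * scale for q in quantized]


activations = [-2.54, 0.0, 1.0, 2.54]  # hypothetical tensor values
scale = int8_scale(activations)
q = quantize(activations, scale)
restored = dequantize(q, scale)
print(q)
print(restored)
```

The difference between activations and restored is the quantization error that a well-chosen scale factor keeps small.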

The export tool can generate an INT8 calibration cache by ingesting a sampled subset of training data. You need to create a sub-sampled directory of random images that best represent your test dataset. We recommend using at least 10-20% of the training data. The more data provided during calibration, the closer int8 inferences are to fp32 inferences. A helper script is provided with the sample notebook to select the subset data from the given training data.

Based on the evaluation results of the INT8 model, you might need to adjust the number of sampled images or the kind of images selected so that they better represent the test dataset. You can also use a portion of the test data for calibration to improve the results.
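The sub-sampling step can be sketched in Python as below. This is not the helper script shipped with the sample notebook; the function name, the default fraction, and the fixed seed are hypothetical, and the demo runs on a temporary directory of empty placeholder files.

```python
import random
import tempfile
from pathlib import Path

VALID_EXTS = {".jpg", ".jpeg", ".png"}


def pick_calibration_images(train_dir, fraction=0.1, seed=0):
    """Return a random subset of the image files found under train_dir."""
    images = sorted(p for p in Path(train_dir).rglob("*")
                    if p.is_file() and p.suffix.lower() in VALID_EXTS)
    if not images:
        return []
    n = min(len(images), max(1, int(len(images) * fraction)))
    return random.Random(seed).sample(images, n)


# Demo on a throwaway directory holding 20 placeholder images and one non-image file.
with tempfile.TemporaryDirectory() as tmp:
    for i in range(20):
        (Path(tmp) / f"frame_{i:03d}.png").touch()
    (Path(tmp) / "notes.txt").touch()
    subset = pick_calibration_images(tmp, fraction=0.2)
    print(len(subset))  # 4
```

The selected files could then be copied into the calibration image directory, for example with shutil.copy.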

FP16/FP32 Model

The calibration.bin is only required if you need to run inference at INT8 precision. For FP16/FP32 based inference, the export step is much simpler. All that is required is to pass a model from the train step to export, which converts it into an encrypted TAO model.

Sample Usage of the Export tool

tao gesturenet export -m <Trained TAO Model Path> -k <Encode Key> -o <Output file .etlt>

-m: The path to the trained model to be exported.
-k: The encryption key for model loading.
-o: The path to the output .etlt file (.etlt is appended to the model path otherwise).
-t: The target opset value for ONNX conversion. The default value is 10.
--cal_data_file: The path to the calibration data file (.tensorfile).
--cal_image_dir: The path to a directory with calibration image samples.
--cal_cache_file: The path to the calibration file (.bin).
--data_type: The data type for the TensorRT export. The options are fp32 and int8.
--batches: The number of batches to calibrate over. The default value is 1.
--max_batch_size: The maximum batch size for the TensorRT engine builder. The default value is 1.
--max_workspace_size: The maximum workspace size to be set for the TensorRT engine builder.
--batch_size: The number of images per batch. The default value is 1.
--engine_file: The path to the exported TRT engine. Generates an engine file if specified.
--input_dims: The input dims in channels first (CHW) or channels last (HWC) format as comma-separated integer values. The default value is 3,160,160.
--backend: The model type to export to.

INT8 Export Mode Required Arguments

--cal_image_dir: The directory of images that are preprocessed and used for calibration.
--cal_data_file: The tensorfile generated using images in cal_image_dir for calibrating the engine. If this file already exists, it is used directly to calibrate the engine. The INT8 tensorfile is a binary file that contains the preprocessed training samples.

The --cal_image_dir parameter applies the necessary preprocessing to generate a tensorfile at the path mentioned in the --cal_data_file parameter, which is in turn used for calibration. The number of generated batches in the tensorfile is obtained from the value set to the --batches parameter, and the batch_size is obtained from the value set to the --batch_size parameter. Ensure that the directory mentioned in --cal_image_dir has at least batch_size * batches number of images in it. The valid image extensions are .jpg, .jpeg, and .png.
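The batch_size * batches requirement is easy to verify before running export. The Python sketch below counts the files with a valid extension and compares the count with the requirement; the function name is hypothetical, and scanning only the top level of the directory is an assumption about how the images are laid out.

```python
import tempfile
from pathlib import Path

VALID_EXTS = {".jpg", ".jpeg", ".png"}


def check_calibration_dir(cal_image_dir, batch_size, batches):
    """Return (enough, found, required) for a calibration image directory."""
    required = batch_size * batches
    found = sum(1 for p in Path(cal_image_dir).iterdir()
                if p.is_file() and p.suffix.lower() in VALID_EXTS)
    return found >= required, found, required


# Demo on a throwaway directory holding 12 placeholder images.
with tempfile.TemporaryDirectory() as tmp:
    for i in range(12):
        (Path(tmp) / f"img_{i}.jpg").touch()
    ok_small = check_calibration_dir(tmp, batch_size=1, batches=10)
    ok_large = check_calibration_dir(tmp, batch_size=8, batches=10)
print(ok_small)  # (True, 12, 10)
print(ok_large)  # (False, 12, 80)
```

Running a check like this first avoids starting an export that cannot fill all of its calibration batches.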

INT8 Export Optional Arguments

--cal_cache_file: The path to save the calibration cache file to. The default value is ./cal.bin. If this file already exists, the calibration step is skipped.
--batches: The number of batches to use for calibration and inference testing. The default value is 10.
--batch_size: The batch size to use for calibration. The default value is 1.
--max_batch_size: The maximum batch size of the TensorRT engine. The default value is 1.
--max_workspace_size: The maximum workspace size of the TensorRT engine. The default value is 2 * (1 << 30).
--experiment_spec: The experiment_spec used for training. This argument is used to obtain the parameters to preprocess the data used for calibration.
--engine_file: The path to the serialized TensorRT engine file. Note that this file is hardware specific and cannot be generalized across GPUs. Use this argument to quickly test your model accuracy using TensorRT on the host. As the TensorRT engine file is hardware specific, you cannot use this engine file for deployment unless the deployment GPU is identical to the training GPU.

Deploying to DeepStream 6.0

The pretrained model for GestureNet provided through NGC is available by default with DeepStream 6.0.

For more details, refer to DeepStream TAO Integration for GestureNet.